Abstract

This pilot study takes a concept (Safety II) and tests whether its premise can be applied to a commercial flight operation, with the aim of enhancing flight safety and operational effectiveness. The tool used to facilitate this application is the Operational Learning Review (OLR), a prescribed interview technique which encourages the subject of a safety interview (review) to provide context and create a narrative based upon event recall. Twenty interviews were conducted using the OLR technique, resulting in rich narrative which could then be analysed to create an influence map of the event. The influence map highlights performance variability and performance shaping factors, which in turn highlight resilient behaviours. The study demonstrated that extant statutory investigation requirements are met using this tool; in addition, system learning is greatly enhanced, allowing a shift to Safety II principles: learning from positive work and behaviour.

1 Introduction

“Airlines are safe”: so safe, in fact, that our opportunity to learn how we might become even safer is now greatly diminished! In the absence of accidents and serious incidents, our current approach to learning from less significant events (those deemed to compromise safety) relies heavily on the continued reporting of near misses and on evidence from flight data systems. This reality is the rationale underpinning the concept of Safety I and Safety II (Hollnagel 2014).

The traditional view of safety, which Professor Hollnagel refers to as Safety I, is built on the premise that as few things as possible go wrong. Safety is assured when risk to the operation is maintained at a level deemed As Low As Reasonably Practicable (ALARP); the underlying duty to control risk “so far as is reasonably practicable” was established in UK law by the Health and Safety at Work etc. Act 1974. However, this approach leads us to concentrate on the absence of safety (when safety fails) rather than on the presence of what makes us safe. If we focus on events which demonstrate the absence of something (in this case, safety), and these events happen rarely (because we are safe), we must look elsewhere to learn how to improve our safety management going forward. Safety I is not to be dismissed, however! This traditional approach has brought us to where we are now: our dedication to leaving no stone unturned following accidents and serious incidents has helped shape the current Safety Management Systems (SMS), regulatory frameworks, international safety standards, and quality and compliance frameworks which govern commercial aviation organisations.

The issue with Safety I is that it is reactive! We wait for something to fail, or for an exceedance to manifest and be captured by our Flight Data Analysis Programme (FDAP) (ICAO 2008), and we then focus our resources and attention on this anomaly. We track trends in unstable approaches, runway incursions, flight level busts and flight path deviations as part of our quest to improve safety. These exceedances and events represent a tiny proportion of our daily activity in commercial aviation, possibly 1 or 2%, so what are we missing by focusing only on them? We are considered good and safe when these negative outcomes are not occurring, so why not also devote time, focus, energy and resources to understanding why they are not occurring? What does good look like? This is the Safety II approach.

Sidney Dekker describes a new approach, or new view, in Safety Differently (2014). Taking this view, we begin to understand how our opportunities for learning from traditional safety metrics have plateaued. This new view moves us on from the linear causal logic and reactive approach (which served us so well as our aviation safety system matured) to a more “systems thinking” approach (Hollnagel 1993; Perrow 2001; Rasmussen 2003; Reason 1990, 1997; Woods et al. 1994). This approach takes into account the socio-technically complex nature of a very different aviation system in 2020. The new view aligns with Safety II in many ways: it refocuses our safety effort on the presence of safety rather than its absence, and it recognises human influence and input as a source of strength and resilience in the system, not as the weak link often portrayed in the past. It also encourages us, rightly, to approach front-line workers (in this case pilots, engineers, cabin crew, etc.) as the experts in the field: an asset which can help us understand what really happens in the organisation day to day, and a rich source of learning for the system. Safety II shines a light on the vast majority of daily activity in our system, which has successful outcomes, often despite the intrusion of real-world positive or negative influencing factors (see Fig. 1).

Background to the Introduction of the Operational Learning Review (OLR)

If we take a new view of safety, we adopt the following safety thinking:

- Being safe relies on the presence of safety, not the absence of safety (Dekker 2014).
- We need to understand work-as-done, not only work-as-imagined (Hollnagel 2014).
- Front-line workers are the field experts (Systems Thinking for Safety, Principle 1, Eurocontrol 2014; Seddon 2005): we will learn what really happens day to day by engaging with them and giving them the respect they deserve.
- The professionals we recruit and train do not come to work to do a bad job or cause harm.
- Performance variability is a function of normal work; this is where the real learning lies in the new view of safety.
- With performance variability comes adaptability, a key component of resilience.
- We must avoid hindsight bias and approach any event from a local rationale perspective.
- Normal and non-normal situations are opportunities for learning; there should be no jeopardy for the worker who helps us learn and improve the system.
- Accountability works both ways: both the worker and the system are accountable for safety and safety learning.
- Curiosity, not judgement.

Safety is the presence of something, not the absence of something. This concept, referred to in the introduction, guides us to shift our focus and be cognisant of what is working well and providing safety in our operation.

Work-as-done v work-as-imagined. There is much literature addressing the difference between work-as-imagined and work-as-done. Some of the earliest references from a safety perspective centred on a distinction between the system task description (work-as-imagined) and the cognitive tasks (work-as-done) (Hollnagel and Woods 1983). The distinction is now broader: from an applied perspective, work-as-imagined refers to work as seen from a management or organisational perspective (the blunt end), that is, work in strict adherence to the rules, regulations, guidance and procedures which direct it. Work-as-done refers to the real work taking place at the sharp end. The individuals engaged in this activity are subject to a broad range of performance shaping influences: environmental, social and technical. The human operators have to adapt to these influences, shaping their performance to meet a range of sometimes competing goals. Trade-offs are always occurring; Hollnagel describes this as the “ETTO principle”, the Efficiency-Thoroughness Trade-Off we often see in action on the front line. Furthermore, safety itself is at risk of being traded for efficiency or thoroughness if those competing goals are considered to be of more value than the safety goal!

If we want to understand work-as-done, then we must engage with those who do the work. These are the people making the trade-offs; they find the workarounds when policy, procedure, guidance or regulation may not be fit for purpose or may be unworkable because other factors impose limits on the task. These workers vary their performance and often provide resilience and adaptability while ultimately getting the job done. Mostly this will be as safe as the system expects, and sometimes even safer; occasionally, though, we may be drifting toward failure (Dekker 2014).

To fully comprehend work-as-done, we must take a viewpoint which matches the local rationale of those engaged with the task. Rather than measuring performance only against the prescribed rules, regulations, guidance and procedures, we must consider why workers' actions and decisions made sense to them at the time. Dekker invites us to get inside the tunnel with these front-line workers and understand what they did or did not know when making decisions, and which competing goals they were managing along the way.

Hindsight bias. Passing judgement on performance once we already know the outcome will rarely prove valuable for understanding work-as-done. By steering us toward the obvious answer or solution, this approach bypasses the detail and context needed to understand why events happen, not simply how they happen or, more simplistically still, what happened.

When events or occurrences present an opportunity for learning, the worker involved should feel confident to engage and help improve the system. These individuals must be treated with respect and given every opportunity to provide the narrative that frames the event or occurrence. They provide context, and they describe the sense-making that was key in shaping the outcome. This context is key not only to extracting learning from the event but also to gaining insight into what the individual has learned from the experience, how they have reflected, and how their behaviour may change as a result. The Operational Learning Review explores this narrative and aims to capture the learning so that this knowledge and experience can be shared across the wider system. This may mean educating other workers, revising processes and procedures, or designing technical or software solutions to make the system safer without necessarily compromising efficiency or thoroughness (trade-offs which occur at all stages).

Trust is key to the Operational Learning Review; without it we lose the opportunity to understand the inner workings of the system. Trust works both ways, as does accountability. The system is accountable to the worker and must provide the training, tools, time and structure needed to complete the aviation-related tasks the worker undertakes; in turn, the worker should equip themselves for their role, making use of the training and equipment provided.

1.1 The Operational Learning Review (OLR)

Designing the Framework

This framework, based upon the Learning Review concept (Pupilidy 2015), was designed to support the current occurrence review processes employed at a major airline. The airline already has a comprehensive and effective framework, policy and philosophy for dealing with occurrences and exceedances which come to light and need further understanding. The purpose of the initial implementation of the OLR was therefore not to replace this extant process but to enhance it. The minimum acceptable output of the OLR was the output already evident from current practice; the desired output was the additional learning and understanding described in the introduction. This learning would then be channelled back into the airline's Safety Management System (SMS), with the goal of further enhancing both flight safety and efficient flight operations.

The occurrence investigation processes currently employed across most of the high-reliability transport sector are relatively person-centred; Dekker (2014) refers to this when describing the old view of safety. This approach indicates what happened following an unwanted outcome or event, and may even point toward how it happened, but it rarely gets to why it happened. This old view has been practised for many years across most organisations but does not necessarily fit the modern socio-technical environment that commercial airlines now represent. Under the old view, the trust and respect enjoyed by both the worker and the system can be compromised, and the worker may expect punitive action to follow any event or occurrence they are involved with. Flight crew may not fully trust that airline managers will always listen to or understand their rationale following an occurrence; therefore, only the bare minimum might be disclosed through Air Safety Reports (ASR) or communication with Flight Operations management.

1.2 Designing the OLR (Understanding Work as Done vs Work as Imagined)

The OLR is versatile and creative, and is specifically suited to uncovering sense-making in complex systems. Complex systems, unlike simple or complicated systems, require sense-making to be applied across the system components in order to learn and develop; this sense-making requires a different pathway, and the characteristics of the method employed will differ. We can classify system types as simple, complicated or complex (see Table 1). This classification helps us understand the origins of traditional thinking around safety investigation and highlights why specific approaches were historically taken.

1.3 Designing the OLR (The Front-Line Workers Are the Field Experts)

Commercial aviation is highly regulated and the operation is highly procedural, which means that, with respect to the expectations outlined in Table 2, the status of the workers is less clear. The qualified, current, competent pilot will be expected to comply with instructions (from Air Traffic Control, for example) and to know rules, regulations, policy and procedure (complying with Civil Aviation Authorities across the world); this brings an element of system control to the operation. Work-as-done will reflect this control; however, the OLR will need to capture the adaptations and performance variability demonstrated, particularly when the pilot is improvising to meet operational goals, using complex adaptive problem solving or critical thinking skills to achieve results, and using intuition (knowing when to amend or change the plan).

1.4 Designing the OLR (Performance Variability)

Pilots constantly vary their performance to meet changes and fluctuations in the dynamic environment. By the time they meet the rest of the crew at dispatch, they will already have been subject to factors which may shape their performance during their duty. New factors, for example environmental (weather or the operating environment), social (crew dynamic, personal relationships) and organisational (company or regulatory environment), all have the potential to influence the task. These influences are keenly felt at the sharp end, where operators make the necessary adaptations to cope with any disturbance to the planned operation. The blunt end (management or the regulator) will not normally be aware of these adaptations or performance variations unless an outcome falls close to, or outside, the parameters deemed safe for the operation: when the outcome is not as expected, an Air Safety Report (ASR) is filed or a Flight Data Analysis Programme (FDAP) exceedance is logged.

1.5 Research Questions

The resilient behaviours of the human (agent) operating in complex socio-technical systems are not clearly identified from a proactive safety perspective. Consequently, the relationship between the “human agents and the technical/environmental artefacts” (Stanton 2016) in maintaining system safety within acceptable bounds is not obvious. Therefore:

- Can the Operational Learning Review approach identify operational (human) safety performance variability?
- Is the adaptive context of a socio-technical aviation flight operations system adequately captured by the Operational Learning Review process?
- Are trust and openness improved by taking the Operational Learning Review approach?

2 Method

This pilot study considers data gathered by conducting 20 Operational Learning Reviews. It serves as a precursor to a much larger project planned for the organisation. A panel of experienced flight operations and flight safety specialists was tasked with developing the philosophy, framework and process for introducing the OLR. The panel consisted of:

- Human Factors Specialist (the author)
- Aviation Risk Manager, Flight Operations (current pilot)
- Aviation Risk Manager, Group Risk
- Two type-specific Risk Managers, line operations (current pilots)
- Senior Flight Operations Managers and Training Managers (current pilots)

The panel's first task was to agree and produce a philosophy document conveying the intent of the OLR to the wider flight operations community. This guidance outlined the following:

- OLR: background and rationale.
- Commitment to learning.
- Commitment to “Just Culture”.
- Human-centred approach.

A guidance document was also produced outlining how an OLR would be conducted, including how the person conducting the OLR would engage with the front-line worker who had agreed to a review following an event. The guidance directs the person conducting the OLR to put the subject of the review at ease and to ask open questions in order to understand the context of the event through a free-flowing narrative. The next step is to discuss more directed elements of the narrative arising from this opening engagement, exploring both the sense-making that was happening at the time of the event and that which has happened since, once the individual has had time to reflect on the occurrence. The same process was conducted with all individuals involved in the occurrences being reviewed, the aim being to create an influence map of the elements that had been shaping the performance of those involved.

2.1 Design and Procedure

Two designs were used in this pilot study: interviews to generate narrative, and classification of factors to generate an influence map.

Participants were invited to interview having come to the attention of the OLR panel through:

- Self-reported concerns following an event or occurrence (an Air Safety Report or informal communication to the flight operations team).
- An exceedance highlighted through the flight data programme.

Participants were welcomed to the review, thanked for their participation and given a thorough brief on the purpose of the Operational Learning Review. This brief included the following:

- The sole purpose of the OLR: learning for the system and for the individual involved in any event.
- No jeopardy for the individual engaged with the review, in line with extant Just Culture policy.
- The importance of their expert involvement in the process.
- An opportunity to take ownership of any personal development direction highlighted by the review, and also to help shape the learning for the wider system.

Each interview (review) was conducted by a minimum of two interviewers (reviewers), designated number 1 and number 2.

Number 1 interviewer. The participant was informed that the number 1 interviewer would lead the interview and, after building rapport, would begin by asking an open question, often structured using the mnemonic TED (Tell, Explain, Describe), e.g.:

- Tell me about the event…
- Explain your role…
- Describe the scene…

This line of open questioning encourages the participant to start recalling the event or occurrence and to provide a free-flowing narrative as they remember it. During this recall, the number 1 interviewer will not interrupt the participant but will encourage them to continue their account if they begin to close down their communication. This is the first opportunity for the number 1 interviewer to receive a download of data from the participant, and it is important that the participant recalls as much information as possible, even if they consider it unimportant or irrelevant. Once this initial download is complete, the number 1 interviewer goes back over the participant's account, using key phrases and notes taken during the recall. This is where the interviewer first begins to understand the participant's sense-making. The participant is asked to talk about what they were thinking and how they were feeling during the event, and also after the event once they had time to reflect upon it and their actions. The number 1 interviewer uses a simple grid system for note taking and recall (see Fig. 2); this avoids interrupting the participant and helps maintain eye contact and open body language, thus encouraging dialogue. Once their line of questioning is complete, the number 1 interviewer thanks the participant and hands over to number 2.

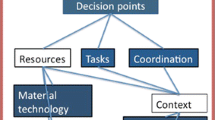

Number 2 interviewer. The initial role of the number 2 interviewer is to take detailed notes (working on the premise that participants may not consent to recording). The number 2 interviewer will not interrupt the participant or the number 1 interviewer but will wait until the interview is handed over before asking any specific questions about the event. This disciplined approach prevents participants from losing their flow or becoming confused during the interview. The detailed notes taken by the number 2 interviewer are crucial, as these notes are used for thematic or categorical analysis once all the data have been collected. An influence map can then be generated. “Simple and complicated systems are governed by cause and effect relationships. Cause and effect relationships are the cornerstone for traditional investigations. Complex systems are governed by influence rather than cause” (Pupilidy 2015) (see Fig. 3).
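The step from coded interview notes to an influence map can be illustrated with a small data-structure sketch. This is not the author's tool or the ARM; it assumes, hypothetically, that the number 2 interviewer's notes have already been coded into (category, factor, influenced element) triples using the factor categories named in this paper (environmental, technical, social and system). It groups one review's links by the element they influenced and counts which factors recur across reviews. All function names and example entries are illustrative assumptions, not data from the study.

```python
from collections import Counter, defaultdict

# Factor categories named in the paper; treating them as a fixed code set is an assumption.
CATEGORIES = {"environmental", "technical", "social", "system"}


def build_influence_map(links):
    """Group one review's coded links into a simple influence map.

    links: iterable of (category, factor, influenced_element) tuples (hypothetical coding).
    Returns {influenced_element: {category: [factor, ...]}}, with duplicate factors
    removed and the order in which factors were first recorded preserved.
    """
    influence_map = defaultdict(lambda: defaultdict(list))
    for category, factor, target in links:
        if category not in CATEGORIES:
            raise ValueError(f"unknown category: {category!r}")
        factors = influence_map[target][category]
        if factor not in factors:
            factors.append(factor)
    # Convert the nested defaultdicts to plain dicts for display.
    return {target: dict(cats) for target, cats in influence_map.items()}


def recurring_factors(reviews, min_reviews=2):
    """Return {(category, factor): n} for factors appearing in at least min_reviews reviews."""
    counts = Counter()
    for links in reviews:
        # A set ensures each factor is counted at most once per review.
        counts.update({(category, factor) for category, factor, _ in links})
    return {key: n for key, n in counts.items() if n >= min_reviews}


# Hypothetical coded notes from two reviews (illustrative only, not study data).
review_a = [
    ("environmental", "weather", "decision to continue the approach"),
    ("social", "crew dynamic", "decision to continue the approach"),
    ("system", "company or regulatory environment", "go-around decision"),
    ("environmental", "weather", "decision to continue the approach"),
]
review_b = [
    ("environmental", "weather", "taxi route choice"),
    ("social", "personal relationships", "taxi route choice"),
]

print(build_influence_map(review_a))
print(recurring_factors([review_a, review_b]))
```

Grouping by influenced element, rather than chaining factors into a single cause-and-effect sequence, reflects the point that complex systems are governed by influence rather than cause. Any cross-review counts would only flag candidate themes for the panel to examine, not causes.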

During the interview, the participant will be asked to recall specific phases of the occurrence, and the interviewer may encourage deeper thinking and recollection through subtle cognitive interview techniques (Geiselman et al. 1986) or by encouraging the participant to draw key flight patterns or approach paths, annotated with perceived (or remembered) speeds, heights, etc.

Once the participant has recalled all they can remember, they may then be invited to watch an animation of the event reconstructed from flight data. One of the interviewers' final tasks is to explore with the participant what they might now benefit from in terms of learning (how the system can help them learn and improve, and what they can now do to help the system learn and improve).

As the OLR process still operates alongside current statutory occurrence investigation requirements, the interview team will feel a responsibility for maintaining and ensuring system safety; this is to be encouraged and is in keeping with “just culture”. However, it is not the role of the interview (review) team to apportion blame or liability, but merely to understand why the participant's actions made sense to them at the time.

3 Findings

Findings are considered across three categories, which are also addressed in the discussion section:

1. Participant: level of trust and engagement with the process (perceived).
2. System: increased understanding of context around an event.
3. System: understanding risk and meeting current statutory responsibilities.

Participant - Level of Trust and Engagement with the Process

The pilot study relied on a perceived level of trust and engagement with the process, ascertained through direct communication with the participant on this topic. Participants attended the OLRs following a broad range of occurrences, some of which they may have felt were resolved by their actions and some of which they may have believed were exacerbated by their actions! The opening section of the OLR brief explains the purpose of the OLR, how it will be conducted and, importantly, the view that the participant is the expert (they were there at the event) and that the system is grateful for their agreement to help with system learning following the occurrence.

Across the OLRs conducted to inform this pilot study, the interview panel encountered a broad range of perceived levels of trust (engagement is discussed separately). Trust may have been an issue (positive or negative) for participants for many reasons:

- Rank or position in the organisation.
- Perceived level of accountability following a negative outcome.
- Fear of punitive action following a perceived negative outcome.
- Past experience of unfair treatment.
- Vicarious feelings of unfair treatment, based on others' experience in the organisation.
- Relationships with management unrelated to the occurrence or event.
- Cultural influences.

These factors must be considered during the opening section of the OLR, and it is vitally important that the trust gained at this stage is then supported by the organisation's wider safety learning philosophy and “just culture” policy. The OLR interviewers had to be prepared to answer difficult questions from participants in direct response to any of these factors; during the pilot study, however, these factors did not prove challenging, and the interviewers found that a thorough explanation of the process served to allay fears and calm participants ahead of the review.

System - Increased Understanding of the Context Around an Event

The OLRs conducted covered a wide range of performance (perceived as positive or negative) across varying flight operations scenarios; the following generic occurrences are examples of the topics which could be addressed for deeper learning:

- Taxiway incursion
- Runway incursion
- Unstable approach
- Go-around
- Crew Resource Management
- Emergency procedures

The review team explored these topics with the participants involved and was able to ascertain very quickly in each instance what had actually happened. This “what” is important to the OLR in setting the scene (it is also important from a statutory viewpoint, which is addressed in the discussion) and provides the starting point for gathering further information to build context and an understanding of the sense-making.

Once the “what” element has been established, the OLR goes on to explore the “how” and the “why”, but most importantly the local rationale: “why did it make sense at the time?” From these pilot-study OLR discussions, a clearer understanding of the performance shaping factors (environmental, technical, social and system) was gained. To understand the context around the event, participants were encouraged to speak freely and openly; they were not interrupted or questioned during the initial part of the review. This proved extremely important and was instrumental in setting the scene for a broader understanding of the trade-offs occurring throughout the period being discussed and the time preceding it.

Once the context had been established, the narrative was interrogated in detail. This serves several purposes:

- Broader understanding of the context shaping performance.
- Deeper analysis of the sense-making at the time of the event.
- Deeper analysis of the sense-making since the event (reflection and reflective practice).
- Understanding socio-technical shared and distributed Situational Awareness (SA) (Stanton 2016).

System - Understanding Risk and Meeting Current Statutory Responsibilities

At the end of each session, participants are asked what the system can do to help them learn following the occurrence, and what they can do to help the system learn. This is the whole point of conducting the OLR; it is therefore imperative to close the loop for the individual (providing assistance if required and accepting their assistance if offered), and to conduct deeper analysis of the individual accounts following an occurrence and across the other occurrences considered for review.

As previously mentioned, there is also a statutory requirement to ensure system safety. This element can be satisfied to the same level as was previously achieved through formal safety investigation methods, because the data and evidence gained through the OLR can be analysed using extant analysis methods. For this pilot study, a slightly amended version of the Accident Route Matrix (ARM) (see Fig. 4) was used to satisfy this statutory element.

Fig. 4. Version of the Accident Route Matrix model, derived from Harris (2011)

Although the ARM reflects cause-and-effect logic, it does allow factors with a less direct or obvious performance shaping effect to be linked; it therefore helps us develop the influence map (Fig. 3) we are setting out to create through the OLR process.

4 Discussion

This pilot study has involved the creation and implementation of a new philosophy and approach to learning from occurrences in a commercial aviation setting. Employing the OLR method in a dynamic, real-world flight operations environment has made it possible to apply a conceptual tool (the OLR interview methodology) and to test whether it provides additional learning while also meeting current statutory requirements following an occurrence. The pilot study has demonstrated that this methodology does encourage and provide for wider learning, and does equal or surpass current investigation methods in this environment.

The challenge throughout this pilot study has been to find ways to deploy the OLR methodology to understand normal work, adaptability and resilience not only when an unwanted outcome is reported but also when nothing unwanted has occurred; this normal work without a negative outcome represents more than 98% of the operational activity undertaken by the organisation.

From the OLRs conducted to date, we have already identified factors and themes which may not have been uncovered using traditional methods of investigation (referring here to the low-level incidents or occurrences addressed in this study to provide operational learning). A full safety investigation protocol, as followed after a serious incident or accident, might uncover these elements, but such protocols are not currently employed for occurrences of this kind. Notably, the traditional conceptual Threat and Error Management (TEM) approach may not have considered some of the factors uncovered in the OLR, so these elements would not necessarily have been brought to the crew's conscious awareness, even through exposure to the associated safety audit report! Through the OLR we may, for example, uncover a trend of crews being willing to continue to land from an unstable approach in VMC conditions, perhaps due to the (false) assurance provided by a visible runway. Taking this example, the OLR would encourage an understanding of the local rationale and also probe what we might learn operationally from this dangerous condition, to which crews may be unwittingly subjecting the system: how does the system protect itself from it, and how might it quickly resolve the dynamic issue that has been uncovered?

The Line Operations Safety Audit (LOSA), as described by Klinect et al. (2003), is a safety tool that gathers cockpit observations during normal flight operations. Its key benefits are that it:

Provides a proactive snapshot of system safety and flight crew performance.

Identifies the strategies employed by crews in order to prevent or deal with undesired aircraft states.

Provides a diagnosis of operational performance strengths and weaknesses (without an unwanted event having occurred).

Provides the airline with additional insight into the “work-as-done” in daily operations.

LOSA has proved to be a valuable safety tool and will continue to add value. Used in conjunction with LOSA, the OLR will widen the net for operational learning. LOSA provides insight through observable behaviours. The OLR adds insight by exploring the unobserved cognitive rationale and thinking that may drive those behaviours, including behaviours that crewmembers do not consciously notice in themselves or their team.

Going back to the opening statement:

“Airlines are safe” - so safe in fact that our opportunity for learning how we might become even more safe is now greatly diminished! In the absence of accidents and serious incidents our traditional approach to learning from less significant events deemed to compromise safety relies heavily on the continued reporting of near misses and the use of data systems (Flight Data Recorders). This reality is the rationale underpinning the Safety I and Safety II approach described by Hollnagel (2014).

From a Safety II perspective, we want to understand what good looks like. In particular, we want to identify the resilient behaviours that our crews demonstrate almost all of the time to make the operation work. We want to understand the trade-offs between efficiency and safety, and we need to determine what adaptive capacity looks like. Adaptive capacity and the ability to vary performance are essential to the operation. However, we must bear in mind that airlines have become safe through strict regulation and through strict processes and procedures developed to allow safe operation. Performance variation and adaptability must therefore remain within the bounds of expected and predictable behaviour.

5 Further Research

Based on this pilot study, the research will now be expanded to capture and analyse a much larger sample of flight operations data. The purpose is both to learn from the data and to create influence maps that highlight potential areas of interest to the operation. This analysis will be used in conjunction with the analysed LOSA data, and it is anticipated that together they will give an enhanced view of proactive safety in the operation.

References

Dekker, S.: Safety Differently (2014). https://doi.org/10.1201/b17126

EUROCONTROL: Systems Thinking for Safety: Ten Principles. A White Paper. Moving towards Safety-II (2014)

Geiselman, R.E., Fisher, R.P., MacKinnon, D.P., Holland, H.L.: Enhancement of eyewitness memory with the cognitive interview. Am. J. Psychol. (1986). https://doi.org/10.2307/1422492

Harris, S.: Human factors investigation methodology. In: 16th International Symposium on Aviation Psychology, pp. 517–522 (2011). https://corescholar.libraries.wright.edu/isap_2011/28

Hollnagel, E.: The phenotype of erroneous actions. Int. J. Man Mach. Stud. (1993). https://doi.org/10.1006/imms.1993.1051

Hollnagel, E.: Safety-I and safety-II: the past and future of safety management (2014). https://doi.org/10.1080/00140139.2015.1093290

International Civil Aviation Organization (ICAO): Annex 6, Operation of Aircraft, Part I: International Commercial Air Transport, Aeroplanes. Standards and Recommended Practices, 3.3.5 (2008)

Klinect, J., Murray, P., Merritt, A., Helmreich, R.: Line operation safety audits (LOSA): definition and operating characteristics. In: 12th International Symposium on Aviation Psychology (2003)

Perrow, C.: Accidents, Normal. In: International Encyclopedia of the Social & Behavioral Sciences (2001). https://doi.org/10.1016/b0-08-043076-7/04509-5

Pupilidy, I.: The Transformation of Accident Investigation: from finding cause to sensemaking (2015). https://pure.uvt.nl/ws/files/7737432/PupilidyTheTransformation01092015.pdf

Rasmussen, J.: The role of error in organizing behaviour (1990). Qual. Saf. Health Care 12(5) (2003). https://doi.org/10.1136/qhc.12.5.377

Reason, J.: Human Error. Cambridge University Press, Cambridge (1990, 1997)

Seddon, J.: Freedom from Command and Control, 2nd edn. Vanguard, Buckingham (2005)

Stanton, N.A.: Distributed situation awareness. Theor. Issues Ergon. Sci. 17(1), 1–7 (2016). https://doi.org/10.1080/1463922X.2015.1106615

Woods, D.D., Johannesen, L., Cook, R., Sarter, N.: Behind Human Error: Cognitive Systems, Computers and Hindsight. SOAR CSERIAC 94-01, Dayton, Ohio (1994)


© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

McCarthy, P. (2020). The Application of Safety II in Commercial Aviation – The Operational Learning Review (OLR). In: Harris, D., Li, WC. (eds) Engineering Psychology and Cognitive Ergonomics. Cognition and Design. HCII 2020. Lecture Notes in Computer Science, vol. 12187. Springer, Cham. https://doi.org/10.1007/978-3-030-49183-3_29


DOI: https://doi.org/10.1007/978-3-030-49183-3_29


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-49182-6

Online ISBN: 978-3-030-49183-3

eBook Packages: Computer Science, Computer Science (R0)