Abstract

The transformation of healthcare has required, and will continue to require, a meaningful integration of data into bedside care, population health models, and sophisticated strategies to translate analytics into better outcomes. However, the ability to readily access, properly analyze, and effectively use data to drive improvement is still a challenge for many healthcare organizations. Harnessing the power of data for quality improvement requires a cultural change within many institutions and practices. Healthcare systems benefit when health informatics is applied and data is converted to usable information with timely delivery; this transformation requires both technology and expertise. Organizations must support cross-functional teams that combine clinical, operational, and financial expertise, backed by a data governance structure that supports their functions. With the shift in healthcare payment models from fee-for-service to value-based payment (lower costs for better outcomes), there is an increasing need to measure both outcomes and costs with more accuracy and precision. This chapter explores key strategic elements needed to advance analytics in healthcare and how analytics can be applied to clinical decision support, population health strategies, and measuring the value of care.

Executive Summary

Today’s healthcare environment demands an expanded scope and sophistication of data collection from a variety of sources, ranging from electronic health records to mobile and wearable devices. But there remains untapped potential to maximize existing analytics systems to measure and improve healthcare quality at the individual and the population level. Organizations must support cross-functional teams that combine clinical, operational, and financial expertise, backed by a data governance structure that supports their functions. This demands more than strategy; it requires a cultural change. This transformation demands that macro system strategy and micro system implementation accept data and analytics as a tool for learning rather than a tool for punitive reform. We present several cases illustrating how data can be harnessed to improve healthcare quality, including the development of clinical decision support tools to improve sepsis outcomes and the use of registries to benchmark outcomes across institutions. We also explain how the timely delivery of high-quality data can be streamlined to enable clinicians to drive improvement. The challenges of measuring healthcare value with the current information systems in healthcare are also described.

Learning Objectives

Upon completion of this chapter, readers should be able to:

- Describe the current landscape of data analytics in healthcare
- Discuss the differences between the stages of analytic maturity
- Discuss the key strategic elements needed to advance analytics in healthcare
- Discuss how advanced analytics can be applied to clinical and population health settings as well as the measurement of healthcare value
- Describe the difference between accuracy and precision of data

Current Analytics Landscape in Healthcare

Strategies for measurement, including the collection and utilization of healthcare data, vary widely. Ideally, technology can enable improvement work by creating timely access to, and the transformation of, data. Unfortunately, fragmented and proprietary data collection mechanisms and policies that limit data sharing create barriers to the effective use of technology in healthcare. Fragmentation in data exists both at the patient level and at the system level. Electronic health records (EHRs) are linked to the site of care, so a single patient’s health data may be scattered across several prehospital systems, hospitals, practitioners, and alternative care delivery venues. Efforts to bridge some of these practice silos have included the use of administrative data sets, particularly billing and claims data; however, medical claims and billing information offer limited utility in the construction of robust clinical data models and decision support tools [1].

To accelerate the adoption of technology as a tool to drive healthcare improvement and stimulate the facile use of healthcare data, the federal government invested billions of dollars to fuel the implementation of EHRs (e.g., the American Recovery and Reinvestment Act of 2009) [2]. Unfortunately, despite a 2005 RAND report forecasting $80 billion in annual savings in healthcare expenditures from the adoption of health information technology (HIT), costs have continued to grow. Failures of such initiatives have been attributed to a number of factors including sluggish adoption of HIT systems, poor interoperability of systems with limited ease of use, and a failure of providers and infrastructures to reengineer their care processes to reap the full benefit of HIT [3]. Some systems have managed to integrate data across their systems to drive improvement work (e.g., Kaiser Permanente); however, this has been accomplished by functioning as both the provider and payer, which is not possible in many other health system arrangements.

Advancing Data Analytics Maturity

Health informatics is “the interdisciplinary study of the design, development, adoption and application of IT-based innovations in healthcare services delivery, management, and planning” [4]. Healthcare systems benefit when health informatics is applied and data is converted to usable information with timely delivery. This transformation requires technology and expertise as well as a strategy to coalesce both toward the aim of improving patient outcomes.

In the world of informatics, data use increases in sophistication from simple data gathering and reporting, as can be done from a patient EHR report at the bedside, to aggregating and analyzing data in populations for themes (data analytics), predicting events or patients at risk (predictive analytics), or linking health observation with health knowledge to influence clinical decisions (prescriptive analytics or clinical decision support). Incorporating clinical decision support capabilities into practice can improve workflow through ease of documentation, provide alert information at the point of care, and improve the cognitive understanding of the clinician [5, 6]. An organization’s progress in leveraging technology and analytics to improve outcomes follows a continuum of maturity. Typically, organizations begin with using static data in the form of reports and then move toward using simple analytic tools to manipulate data to gain insights (data analytics stage). Then, more advanced statistical algorithms are applied to data that allow organizations to predict outcomes and apply early interventions (predictive and prescriptive analytic stages) [7, 8, 9]. These stages of analytic maturity are illustrated in Fig. 6.1. The speed at which organizations move through the stages can vary, and often, in any given organization, there may be pockets of advanced maturity while the organization as a whole is less developed.

The stages of analytic maturity in the healthcare enterprise can be illustrated on a growth continuum. Reprinted from Clinical Pediatric Emergency Medicine, 18(2), C.G. Macias, J.N. Loveless, et al., “Delivering Value Through Evidence-based Practice”, p. 95, Copyright 2017, with permission from Elsevier

A multipronged approach must be in place to achieve value from advancing analytics. The first prong is having the right expertise such as informaticists and data analysts and tightly coupling the two so as to design efficient and defined data analytic tools as part of technology implementation. The second prong is to create strategies to manage significant organizational change that comes with increasing technological capability and desire for data. The third prong is an effective approach to data governance.

An institution’s or practice’s hardware, software, and data management processes are critical to its ability to advance along the continuum from data reporting to prescriptive analytics. Many EHRs are developing analytics platforms that embed some of these capabilities into their existing workflows; however, robust analytics must still overcome gaps in delivering transformed data to the provider. EHRs and other information systems are costly to implement and maintain. Furthermore, beyond the hardware and software to collect the data, there is a requirement for human investment. Data within the most spectacular system is worthless if not interpreted and applied appropriately. Thus, the evolution of informatics from data reporting to sophisticated analytics requires collaborative teams to drive improvement strategies. Our experience suggests that optimal team members include experts in evidence-based medicine, EHR and clinical data specialists, and data architects in addition to outcomes analysts, healthcare providers, and operational leaders. The size and level of expertise of such a team depend on the problem to be addressed, but all domains are necessary. Redesigning workflow is critical to maximize the investment in HIT, analytics, and decision support. This requires a cultural change driven by leaders who understand and participate in the transformation of healthcare that analytics can drive [5].

With the widespread use of EHRs in today’s healthcare environment, clinicians expect data for a single patient to be available at the bedside whenever technology can provide it. However, the vast growth of available data has given rise to the concept of big data. Big data represent large volumes of high-velocity, complex data that require advanced techniques and technologies to capture, store, distribute, manage, and analyze them [10]. The benefit of analyzing such data is not limited to the operational needs of healthcare systems. Outputs of analytics can be delivered to the bedside through visualization tools, thus benefitting prehospital providers, single-physician offices, hospitals, and hospital networks [10, 11].

As healthcare systems respond to the availability of massive amounts of data and progress through the stages of analytic maturity, the culture of an organization must embrace the value of data to drive improved outcomes of care. Overcoming a cultural resistance to the uses and truth of data is critical and requires a nonpunitive environment for demonstrating successes and failures based upon data and the analytics. Don Berwick has described the journey of data acceptance in four stages [12]:

- Stage One: “The data are wrong.” Questions about adjustments, hidden variables, sampling, poor input information, and other weaknesses in the validity and reliability of the transformed data will exist. Thus, there is a tendency to default to a belief that the data do not reflect reality. However, while no data set is ever perfect, in general, most are good enough to act upon.

- Stage Two: “The data are right, but it’s not a problem.” While people and teams may believe in the integrity of the data, they will point to natural variation as the cause and the justification for inaction. There is an acceptance that the status quo is sufficient.

- Stage Three: “The data are right; it’s a problem, but it’s not my problem.” At this stage, stakeholders recognize there is a problem but are not engaged in driving a solution, expecting that others or the system are responsible for taking action.

- Stage Four: “The data are right; it’s a problem, and it’s my problem.” At this stage, stakeholders accept the validity of the data, the importance of the problem, and their personal or team responsibility for correcting it. This is the stage where stakeholders engage in action because they regard the problem as their own burden.

Once the relationship to data transparency, accountability, and action is firmly established, the work of engaging in a culture focused on quality improvement (QI) can be accelerated. However, understanding that data delivery is not the same as analytics takes cultural change. It shifts stakeholders from a paradigm of asking for a solution (a data report) to asking what problem they are trying to solve, so that the needed analytics can be developed. Criteria have been developed to evaluate such a change in a health system’s analytic culture. Most criteria take into account characteristics as defined within six domains:

- Data sources: Scope and number of data sources feeding into an organization’s analytics.
- Data quality: Minimal to consistent accuracy and integrity of data.
- Data currency: Timeliness of data and frequency of its use.
- Analytic features: Sophistication of data from reporting to predictive modeling and workflow integration.
- User profiles: Nature and breadth of users from analysts to multiple skilled everyday users.
- Adoption profiles: Organizational sophistication from report users to a performance management culture [13].

In addition to the expertise and culture change required to advance a health system toward analytic maturity, a nimble and effective data governance strategy must be applied. Data governance is the foundation for any health system that strives to use data to improve care. It is critical to assuring the effective uses of data within an organization and between partner entities. At its most foundational, data governance ensures that data is of the highest quality and easily accessible. In its more advanced applications, it ensures that there is a strategy behind increasing data content for use. Data governance allows organizations to implement best practices, pool analytics, standardize metrics, provide clinical decision support, and optimize the EHR and data warehousing. Data governance must be iterative to accommodate new evidence discovery, growing amounts of data, evolving personal technologies, and a shifting payment landscape [14].

Ownership and privacy of healthcare data create additional hurdles to the sharing of data for quality improvement purposes. Most organizations are comfortable with the uses of their own data for quality improvement when all sources remain internal. However, the use of data across multiple entities (health plan, hospital, clinic, and primary care physician office) can create legal challenges to utilizing data for improvement purposes and add delays to achieving the optimal integration of systems. Collaboration with legal counsel is critical to ensuring that standards are in place to protect the privacy of data while allowing robust usage within the healthcare system.

Data Analytics to Support Population Health Strategies

Strategies that drive sophistication in analytics allow an organization to engage in accelerated practices to improve care across the health spectrum. In an era of evolving healthcare reform (payment reform and delivery system reform) population health has become critical, and big data and data analytics are essential to engaging in and measuring effective care delivery [15]. Population health is a term that reflects the health of patients across continuums of care with a goal of improving health outcomes. Delivering improved outcomes for populations of patients requires partnerships with disciplines outside of traditional care delivery teams, including professionals in public health, advocacy, policy, and research. The focus of population health improvement activities may span geography (e.g., a region), a condition (e.g., children with a chronic condition such as asthma), a payer (e.g., patients in an accountable care organization or within a health plan), or any characteristic that would link accountability for outcomes for that group of patients. Population management is a concept in which a common condition or other linking element may drive the practitioner to create and implement prevention or care strategies and promote health for groups of patients [15].

As achieving improved health outcomes extends beyond the simple delivery of healthcare to ameliorate an acute illness or injury, the definition of population health expands to become the art and science of preventing disease, prolonging life, and promoting health through recognized efforts and informed choices of society, organizations, public and private communities, and individuals. Engaging in population health means expanding the reach (and the obligation) of the clinician from bedside care alone to a goal of assuring the health of the patient, including helping the patient and family overcome barriers to accessing and coordinating care [15].

In order to improve outcomes of care, the clinician and system must identify and mitigate the effects of social determinants of health and engage the patient and family in their own health management and disease and injury prevention. Social determinants of health may include education level, economic stability, social and community contexts, neighborhood and physical environments, and other such factors that are not within the locus of control of the clinician unless the clinician actively works in concert with other aspects of the healthcare system. As examples, childhood obesity, asthma, and dental caries are not only prevalent among children in the United States but also have a reciprocal interaction with family dysfunction and school stress, necessitating that a provider address these social determinants in order to achieve improved outcomes of care [15].

Data analytics are necessary to quantify demographic information for populations and to understand opportunities for improvement. Robust analytics are also needed to create attribution models that identify the areas in which quality improvement interventions may have an impact. Bedside data reporting and simple EHR reports are insufficient to drive quality improvement across entire populations [14].

As payers and governments move toward value-based payment models (models that reward high-quality outcomes rather than provide fee-for-service), providers and healthcare systems are faced with external pressures to engage in population management to meet the demands of payers. The impact to the provider may be thought of in four domains of healthcare transformation within population health: business models (facilities and services as an infrastructure for a system), clinical integration (provision of care across a continuum), technology (EHRs and the systems of hardware and analytics that support care delivery), and payment models (innovative payment models that reward good outcomes or penalize poor outcomes). All of these components require data to identify opportunities for improvement and assess the impact of interventions [15].

Improving quality and satisfaction while reducing per capita costs through population health inherently requires a measurement system capable of rapidly collecting, storing, and transforming data so that analytics can drive advances in population health management. For example, sophisticated data analytics would allow users to derive prediction models to understand population health issues such as readmission rates for children with diabetes and subsequently intervene through dietary counseling or home visits. Data in that setting can be utilized to understand the nuances in the population in order to deliver the right care to the right patient at the right time, effectively and efficiently, and so optimize patient outcomes.

Use Cases for Data Analytics in Quality Improvement

Case Study

Using Advanced Data Analytics to Improve the Outcomes of Patients with Sepsis

Sepsis is a potentially life-threatening complication of an infection in the blood and occurs when chemicals released into the bloodstream to fight the infection trigger an inflammatory response throughout the body. Worldwide, pediatric sepsis, or septic shock, is a leading cause of death in children. Some best practices have been identified to provide more timely recognition and management of sepsis. These include timely intravenous fluid resuscitation and antibiotics [16].

In our experience, initial efforts at improving processes were unsuccessful because the data systems used to retrieve information on processes and outcomes were, at the time, disparate electronic systems and databases housed in the emergency department (ED), the pediatric intensive care unit (PICU), the EHR, and billing and coding data. Piecemeal data was challenging to link, and without outcomes data (mortality rates clearly attributable to septic shock), clinicians were not convinced that there was a significant problem to be solved. Eventually, these disparate data sources had to be manually linked to pull data that was reliable and valid. Once the integrity of the data could be assured, clinicians and administrators engaged in improvement efforts. Our mortality rates for cases of septic shock mirrored what has emerged as national rates (about 3%–12%, depending on the unit and underlying condition of the patient).

Once the analytics could produce visualizations of the scope of the problem, ED and PICU members united to create a quality improvement team that was focused on rapid cycle process improvement to drive quicker recognition (diagnosis) and more timely and efficient management with fluids, antibiotics, and when necessary, vasoactive drug therapies. Utilizing risk stratification approaches, the team identified a number of comorbidities and vital sign abnormalities that could define patients with lower and higher levels of risk. A predictive model to identify those patients at high risk for septic shock was created within a computerized triage system alarm (a form of clinical decision support). System changes were enacted that would allow recruitment of additional nursing, respiratory therapy, and pharmacy personnel and physician clinicians when a patient was identified by the trigger tool (see Fig. 6.2). The tool is the third iteration of a best practice alert generated from predictive analytics data utilizing 4 years of data on patients evaluated for sepsis or potential sepsis [17].

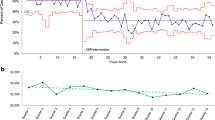

Fluids were administered via syringe (rather than pump) to improve time to fluid bolus. Standardized laboratory studies and antibiotics were prioritized within an evidence-based guideline to reduce unwanted variation in care. Frequent measurement and interventions were documented in a standardized graphical flowsheet to facilitate interpretation of physiologic responses to therapy (see Fig. 6.3). When compared to process measures before the intervention, time from triage to first bolus decreased, as did time to third bolus and time to first antibiotics.

Statistical process control charts of (a) time to first bolus for children identified at triage, (b) time to third bolus for children identified at triage, and (c) time to first antibiotic for children identified at triage. From A.T. Cruz, A.M. Perry, et al. “Implementation of goal-directed therapy for children with suspected sepsis in the emergency department.” Reproduced with permission from Pediatrics, Vol. 127(3), pages e758–66, Copyright 2011 by the AAP
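To make the idea of a statistical process control chart concrete, the following is a minimal sketch of how control limits are conventionally computed for an individuals (XmR) chart. The chart type used in the cited study is not stated here, so the XmR choice is an assumption, as are the function names `xmr_limits` and `out_of_control` and the decision to clamp the lower limit at zero for time measurements.

```python
from statistics import mean


def xmr_limits(values):
    """Return (center, lower, upper) limits for an individuals (XmR) chart.

    `values` is a time-ordered list of measurements, for example minutes
    from triage to first bolus. Illustrative sketch only.
    """
    if len(values) < 2:
        raise ValueError("need at least two observations")
    center = mean(values)
    # Moving range: absolute difference between consecutive observations.
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    # 2.66 is the conventional XmR constant (3 / d2, with d2 = 1.128).
    spread = 2.66 * mean(moving_ranges)
    # Assumption: times cannot be negative, so the lower limit is clamped at 0.
    return center, max(0.0, center - spread), center + spread


def out_of_control(values, limits):
    """Return the indexes of observations that fall outside the limits."""
    _, lower, upper = limits
    return [i for i, v in enumerate(values) if v < lower or v > upper]
```

In practice, limits would typically be computed from a baseline period and then applied to observations collected after an intervention, so that a sustained shift in a process measure can be distinguished from ordinary variation.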

Delivering Data for Local Quality Improvement Activities

One of the biggest barriers to clinicians and healthcare administrators engaging in quality improvement work is having access to high-quality data. High-quality data is defined not only as valid and reliable data but timely data as well. With the implementation of EHRs and data warehousing, unprecedented amounts of data are available in modern healthcare systems. However, the ability to readily access these data presents another challenge. Several dependencies must be met before high-quality data is made available. Data have to be complete, which means that the source systems must capture the data requested. Data need to be valid, which involves the cataloging of data fields and tables in such a manner that a source of truth can be identified for the various data types, and those delivering the data must have knowledge of the valid data fields and tables. Finally, the process of delivering data in a timely manner requires the development of an intuitive and nimble process for requestors as well as clearly delineated expectations of those completing the requests. The process must also take into account compliance and legal requirements as well as meeting best practices for cybersecurity. All of these dependencies should be considered in the context of a data maturation process to inform data governance strategies and help manage the expectations of data requestors. In other words, not every data point requested will be available initially. Expanding data content and assuring data validity occurs over time. Having high-quality data requires an institutional investment in resources as well as an institutional commitment to the development of an evolving data governance strategy [18].

In our experience, the initial installation of an enterprise data warehouse (EDW) included finding and accessing core data content from our clinical, financial, human resource, and patient satisfaction source systems. It also included an initial attempt at cataloging the content made available in the EDW. Having addressed two of the three dependencies to producing high-quality data, the next step was to improve the final dependency: timeliness of delivery. To address this, we developed a standardized data request process for the workforce. The previous request process was cumbersome and often resulted in a request that would seem to get lost in a black hole with the information services team.

The solution was to streamline the request process by asking fewer and more relevant questions initially, providing transparency to the approval steps required prior to delivering data, and ultimately linking these steps to the organization-wide ticketing system, which is necessary for tracking and following up on requests. Another key improvement to our process was the creation of a triage team to review incoming requests and assign them appropriately, while accounting for prior subject matter knowledge or knowledge of existing data repositories that can be used to fulfill requests. In addition to members from the information services team, the triage team includes members from the quality and finance teams to help review requests and provide additional subject matter expertise. This allowed a deeper understanding of the problem being solved and a better solution than the data report sought by the requestor, who may not have an in-depth understanding of data.

A critical step that was added to our new data request process was a data training module. This training is required as part of the approval process before a data request can be completed. This also represented the first step in a system-wide approach to improving data literacy, which is a growing responsibility of any organization providing data to its workforce. Although it is not new for a healthcare organization to be communicating about protecting privacy and following regulations that govern patient information, providing this information within the data request workflow provides context to the regulations and also creates a more direct connection point between the requestors and their responsibilities with data once it is delivered. The entire process was codified in our policy and procedure manual.

Measuring Healthcare Value

With the shift in healthcare payment models from fee-for-service to value-based payment (lower costs for better outcomes), there is an increasing need to measure both outcomes and costs with a more holistic view that encompasses the perspectives of the provider (both institutional and physician), payer, and ultimately the patient. With the exception of a few health systems, historically, quality improvement strategies primarily focused on measuring improved clinical processes and outcomes at the provider level—either institutional (e.g., hospital or clinic) or sole practitioner. Over time, it has become increasingly evident that measuring the financial impact of improvement activities is important and necessary to demonstrate positive contributions to the operating margin that quality improvement can make. Measuring value requires the ability to measure the outcomes of healthcare delivery, the costs of delivering that care, and the impact to the payer and patient. This requires increasing knowledge of the measurement and data systems for all parties tied to the value equation.

When attempting to measure value, a critical issue to address is the legal aspect of sharing data between payers and providers. This hurdle can be overcome with one-time data use agreements or more comprehensive legal arrangements like the organized healthcare arrangement described under rules that allow for the seamless exchange of data across different entities within the same health system for either operational or quality improvement purposes.

Our organization recently embarked on a 12-month value-based payment pilot between our hospital, a physician services organization, and a health plan (insurance company) to understand and learn the nuances of value-based payment contracts. The health plan was the administrator of the program. The goal of the program was to achieve value for health plan members diagnosed with appendicitis who had an appendectomy. Quality metrics and financial targets were agreed upon at the outset of the program. A patient experience component was also added in the form of a measurement-only goal to understand the feasibility of using the existing patient satisfaction metrics in a value-based payment program. The quality and financial targets were derived from studying a comprehensive data set created by joining data from the health plan and the hospital. Ultimately, pace toward the quality and financial goals was measured using the health plan claims data, and not the clinical data. Another distinguishing feature of the program was the definition of the appendectomy episode. The appendectomy care cycle started 7 days before diagnosis and ended 30 days after the appendectomy operation. This had important implications for measurement as the unit of measure was not the individual patient encounter but the entire episode of care.
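Because the unit of measure is the episode rather than the encounter, each claim has to be tested against the episode window before it is counted. The sketch below illustrates one way to do this; the 7-day and 30-day offsets come from the text, while the field names (`service_date`, `paid_amount`), the function names, and the choice of inclusive boundaries are assumptions made for illustration.

```python
from datetime import date, timedelta

PRE_DIAGNOSIS_DAYS = 7    # episode starts 7 days before diagnosis (from the text)
POST_OPERATION_DAYS = 30  # episode ends 30 days after the operation (from the text)


def episode_window(diagnosis_date, operation_date):
    """Return the (start, end) dates of the appendectomy care cycle."""
    start = diagnosis_date - timedelta(days=PRE_DIAGNOSIS_DAYS)
    end = operation_date + timedelta(days=POST_OPERATION_DAYS)
    return start, end


def claims_in_episode(claims, diagnosis_date, operation_date):
    """Keep claims whose service date falls inside the episode window.

    Assumption: both boundaries are inclusive. `claims` is a list of dicts
    with hypothetical keys 'service_date' and 'paid_amount'.
    """
    start, end = episode_window(diagnosis_date, operation_date)
    return [c for c in claims if start <= c["service_date"] <= end]


def episode_paid_total(claims, diagnosis_date, operation_date):
    """Sum paid amounts over the whole episode, not a single encounter."""
    in_window = claims_in_episode(claims, diagnosis_date, operation_date)
    return sum(c["paid_amount"] for c in in_window)
```

A follow-up visit three weeks after the operation would be rolled into the episode total under this definition, whereas encounter-level reporting would treat it as a separate event.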

Undertaking this pilot elucidated the practical challenges of measuring value in the contemporary healthcare setting using the current data systems available. It also brought to light the limitations of our current units of measurement in healthcare that have implications for analyzing value and understanding performance. For example, merging the claims and clinical data sets necessitated routine reconciliation of the member identification number and the patient’s medical record number. While a patient’s medical record number in the provider system stays the same, the same patient can have several member identification numbers because of changes in enrollment status. Another challenge was the protracted intervals between performance reports. This was due, in part, to delays in claims processing that resulted in 3-month lag times to receive claims data and, in part, to the time required to manually merge the claims and clinical data together. This type of lag time hindered the clinical team’s ability to refine intervention strategies efficiently, if needed, and also translated into the untimely reporting of performance toward goals, considering the program was only 12 months in duration.
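The identifier reconciliation described above amounts to maintaining a many-to-one crosswalk from member identification numbers to medical record numbers. The following is a minimal sketch of that idea, not the process used in the pilot; the field names (`member_id`, `mrn`) and function names are hypothetical.

```python
from collections import defaultdict


def build_crosswalk(enrollment_rows):
    """Map each member ID to the patient's single medical record number.

    `enrollment_rows` is a list of dicts with hypothetical keys
    'member_id' and 'mrn'. Several member IDs may share one MRN.
    """
    return {row["member_id"]: row["mrn"] for row in enrollment_rows}


def group_claims_by_mrn(claims, crosswalk):
    """Attach claims to MRNs; return (claims_by_mrn, unmatched_claims).

    Unmatched claims are returned separately so they can be reviewed
    rather than silently dropped from performance reports.
    """
    by_mrn = defaultdict(list)
    unmatched = []
    for claim in claims:
        mrn = crosswalk.get(claim["member_id"])
        if mrn is None:
            unmatched.append(claim)
        else:
            by_mrn[mrn].append(claim)
    return dict(by_mrn), unmatched
```

Keeping the unmatched claims visible is a deliberate choice in this sketch: a member ID that is missing from the crosswalk usually signals an enrollment change that still needs to be reconciled.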

Measurement at the hospital also presented challenges. Clinical process and outcome metrics were available in a relatively automated fashion, but measuring the cost of caring for a patient with appendicitis who subsequently had an appendectomy proved to be difficult. Generally speaking, costs incurred by a provider are measured by the business unit incurring the costs and not by the disease process that is necessitating the care delivery. In an attempt to understand the costs of the appendectomy episode, we employed a time-driven activity-based costing methodology. This allowed us to study the costs of care based on time spent and costs incurred at key milestones in the patient care continuum [18].
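As a rough illustration of time-driven activity-based costing, the cost of an episode is generally estimated by multiplying the time each resource spends on each step by that resource's capacity cost rate and summing across steps. The sketch below assumes hypothetical resource names, step names, and rates; none of the figures come from the pilot.

```python
def capacity_cost_rate(total_resource_cost, practical_capacity_minutes):
    """Cost per minute of a resource: cost of supplying it / minutes available."""
    if practical_capacity_minutes <= 0:
        raise ValueError("practical capacity must be positive")
    return total_resource_cost / practical_capacity_minutes


def tdabc_episode_cost(steps, rates_per_minute):
    """Sum time x capacity cost rate over every step in the care cycle.

    `steps` is a list of dicts with hypothetical keys 'name', 'resource',
    and 'minutes'; `rates_per_minute` maps a resource to its cost per minute.
    """
    total = 0.0
    for step in steps:
        total += step["minutes"] * rates_per_minute[step["resource"]]
    return total


def cost_by_step(steps, rates_per_minute):
    """Return the cost of each step so costly milestones can be identified."""
    return {
        step["name"]: step["minutes"] * rates_per_minute[step["resource"]]
        for step in steps
    }
```

Because the calculation follows the patient through the care continuum rather than a business unit's ledger, the result is organized by disease process, which is the view the pilot required.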

Measuring patient experience presented a similar challenge for a similar reason. Currently, patient experience is measured by location of care and, again, not by disease, so measuring the experience of our patients undergoing an appendectomy had to be done differently using billing codes. While feasible, the analysis of patient experience data for the appendectomy patients did not show much difference from all patients encountering our system (i.e., the dissatisfiers for the appendectomy population were similar to the dissatisfiers for the whole population).

These lessons illustrate the complexity of data analytics and the skills and technology that organizations must develop to enter into sophisticated value-based payment programs if those programs are to drive meaningful quality improvement.

Participation in Quality Improvement Activity Beyond the Local Institution

Local quality improvement work often extends beyond the confines of the local institution to leverage best practices of other facilities and to create robust data repositories for clinical research. This is achieved through participation in either multicenter patient registries or quality collaboratives. As is the case with all issues of data transfer as described earlier, regulatory requirements must be met prior to participation. Methods to address these regulations vary from institution to institution. Most often, participation is governed either through clinical research mechanisms and obtaining local institutional review board (IRB) approval or business associate and data use agreements. Some institutions require both.

A considerable obstacle to participating in these multicenter quality improvement efforts is the burden of data collection at the local level and subsequent submission to the outside hosting organization. This is most often a manual process of extracting data from the medical record (paper or electronic) and transferring those data into a centralized data repository maintained by the host institution. Most often, participants are given access to data entry portals where data are manually submitted. In such scenarios, local participants have limited access to their own raw data for analysis. Sometimes data are simply submitted via encrypted spreadsheet files, a method that gives pause to any cybersecurity enforcer. A few organizations have established systems whereby local participants load data at their own site, and the data are then harvested by the national organization through mechanisms programmed within local software. This allows local participants to access their raw data at any time.

Another potentially frustrating aspect of participating in multicenter QI efforts is the participant's limited control over the metrics that the multicenter group establishes for reporting. The data to be reported are dictated by an outside entity, which means that they are not necessarily consistent or aligned with metrics tracked at the local level. Or, as is most often the case, there are nuances in the definition of the populations, processes, and outcomes being measured. Typically, a committee establishes the data elements and metrics required for reporting, and influence is gained only by being a member of that committee. Even though the resources required to participate in QI activity are committed at the local institutional level, little consideration is given to the feasibility of data gathering when new measures are added to the existing compendium of measures. This culminates in an ever-increasing burden of reporting that rests with the provider participant [19, 20]. As the number of registries and QI collaboratives continues to grow, providers and their organizations will have to decide what they can support and weigh the potential and realized return on their local investment.

Both consumers and payers are demanding more information about performance of both hospitals and individual providers [21]. While most multicenter QI efforts are currently considered voluntary, there is an increasing expectation that hospitals participate, and their participation is accounted for through hospital ranking systems such as US News and World Report Best Hospital Rankings. While much of what is in the public domain currently for publicly reported data is based on Medicare claims information, some professional societies have worked with payers to establish clinical data registries as sources of truth for their outcomes reporting [22].

For all the reasons cited previously, the number of data fields being requested is increasing, while the demand for transparency of quality and outcomes data is also increasing. Therefore, it seems logical that, in the era of big data and with the onset of the EHR, there would be more seamless mechanisms to move data from one electronic system to another to decrease the manual burden of data collection and ensure the validity and reliability of the data being reported from each participating site. Although EHR vendors and professional societies have made some progress in creating mechanisms for data retrieval within the EHR, there have been no major breakthroughs. Locally, we have developed strategies to extract data from the EHR and push them to data registries that are locally maintained and subsequently harvested at a national level. A significant manual component remains in this process: mapping the fields in one data system to the appropriate fields in another.
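The field-mapping step can be pictured as a lookup table that someone has to build and maintain by hand. The sketch below is only a schematic: the EHR and registry field names are hypothetical, and it does not represent any vendor's export format or any registry's actual specification.

```python
# Hypothetical, manually maintained map from local EHR field names
# to the field names a registry expects.
FIELD_MAP = {
    "pat_mrn": "patient_id",
    "proc_dt": "procedure_date",
    "los_days": "length_of_stay",
}


def to_registry_record(ehr_record, field_map):
    """Translate one EHR record into registry fields.

    Returns the translated record, the EHR fields with no mapping, and the
    registry fields that could not be filled from this record.
    """
    registry_record = {}
    unmapped = []
    for field, value in ehr_record.items():
        target = field_map.get(field)
        if target is None:
            unmapped.append(field)  # needs a manual mapping decision
        else:
            registry_record[target] = value
    missing = [t for t in field_map.values() if t not in registry_record]
    return registry_record, unmapped, missing


# Hypothetical EHR extract for one patient.
ehr_record = {"pat_mrn": "MRN-1", "proc_dt": "2018-03-10", "attending": "Dr. A"}
record, unmapped, missing = to_registry_record(ehr_record, FIELD_MAP)
print(record, unmapped, missing)
```

Reporting both unmapped source fields and unfilled target fields is one way to surface the residual manual work each time either system changes its data elements.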

Accuracy and Precision of Data

When embarking on any endeavor to measure quality, costs, or value, one must consider the concepts of accuracy and precision. Statistically speaking, accuracy refers to how closely the data reflect the true value of what is being measured, while precision refers to how closely repeated measurements agree with one another. Consider the target in Fig. 6.4.

The center dot represents the true value of what is being measured. The first target represents a measurement that is precise, but not accurate. The repeated measurements, while near each other, are not near the true value. The second target shows a measurement that has high accuracy but low precision. The repeated measurements are not near each other, but they are near the true value. The third target shows a measurement that has high accuracy and high precision. The repeated measurements are both close to the true value and close to each other. For research endeavors, one requires both highly accurate and highly precise data. For rapid cycle quality improvement, data needs to be accurate, but not necessarily highly precise initially. As improvement cycles continue, the level of precision is refined. There are statistical methods that can be employed initially to ensure accuracy within defined margins of error when an improvement cycle begins.
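The three targets can be mimicked numerically. In the sketch below, accuracy is summarized as bias (the mean of the repeated measurements minus the true value) and precision as the sample standard deviation; these summary choices and all of the numbers are illustrative assumptions rather than anything taken from Fig. 6.4.

```python
from statistics import mean, stdev

TRUE_VALUE = 10.0  # the "center dot"; hypothetical

# Hypothetical repeated measurements, one list per target.
targets = {
    "precise, not accurate": [14.9, 15.0, 15.1, 15.0],
    "accurate, not precise": [6.0, 14.0, 8.0, 12.0],
    "accurate and precise": [9.9, 10.1, 10.0, 10.0],
}


def bias(measurements, true_value):
    """Accuracy summary: how far the average measurement sits from the truth."""
    return mean(measurements) - true_value


def spread(measurements):
    """Precision summary: how far repeated measurements sit from each other."""
    return stdev(measurements)


summary = {
    name: (bias(values, TRUE_VALUE), spread(values))
    for name, values in targets.items()
}

for name, (b, s) in summary.items():
    print(f"{name}: bias={b:.2f}, spread={s:.2f}")
```

A small bias with a large spread corresponds to the second target, which is the situation the text describes as acceptable at the start of a rapid improvement cycle.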

Future Trends

The transformation of healthcare has required and will continue to require a meaningful integration of data into bedside care, population health models, and sophisticated strategies to translate analytics into improvements in outcomes. At its core, quality improvement requires not only attention to data collection and processing but also the people and organizational structures that assure effective workflows for translating data into information that can drive better healthcare outcomes. To be meaningful, analytics for quality improvement must be sensitive to and incorporate clinical, operational, and financial perspectives. The near-term future of analytics will involve sophisticated technologies for predictive analytics and risk stratification, driving care through clinical decision support. Acceleration of those processes will harness technologies such as machine learning and artificial intelligence to create greater efficiencies than the otherwise manual strategies of analyzing population-based data to improve health.

References

Wills MJ (2014) Decisions through data: analytics in healthcare. J Healthc Manag 59:254–262

Department of Health and Human Services Centers for Medicare & Medicaid Services (2010) Medicare and Medicaid programs; electronic health record incentive program; final rule. Fed Regist 75(144):44313–44588

Abelson R, Creswell J (2013) In second look, few savings from digital health records. The New York Times. https://www.nytimes.com/2013/01/11/business/electronic-records-systems-have-not-reduced-health-costs-report-says.html. Accessed 28 July 2015

Procter R (2009) Health informatics topic scope. In: U.S. National Library of Medicine, Health Services Research Information Central. https://hsric.nlm.nih.gov/hsric_public/display_links/717

Macias CG, Bartley KA, Rodkey TL et al (2015) Creating a clinical systems integration strategy to drive improvement. Current Treat Options Peds 1:334–346. https://doi.org/10.1007/s40746-015-0031-7

Richardson JE, Ash JS, Sittig DF et al (2010) Multiple perspectives on the meaning of clinical decision support. AMIA Annu Symp Proc 2010:1427–1431

Strategy Institute (2013) Proceedings from the Second National Summit on Data Analytics for Healthcare, Toronto

Adams J, Garets D (2014) The healthcare analytics evolution: moving from descriptive to predictive to prescriptive. In: Gensinger R (ed) Analytics in healthcare: an introduction. HIMSS, Chicago, pp 13–20

Macias CG, Loveless JN, Jackson AJ et al (2017) Delivering value through evidence-based practice. Clin Ped Emerg Med 18(2):89–97

Raghupathi W, Raghupathi V (2014) Big data analytics in healthcare: promise and potential. Health Inf Sci Syst 2:3. https://doi.org/10.1186/2047-2501-2-3

Burghard C (2012) Big data and analytics key to accountable care success. IDC Health Insights

Institute for Healthcare Improvement (2016) Improvement tip: taking the journey to “Jiseki”. http://www.ihi.org/resources/Pages/ImprovementStories/ImprovementTipTaketheJourneytoJiseki.aspx. Accessed 7 December 2016

MedeAnalytics (2012) Healthcare Analytics Maturity Framework. https://www.scribd.com/document/330016789/MedeAnalytics-Healthcare-Analytics-Maturity-Framework-MA-HAMF-0212?doc_id=330016789&download=true&order=469192010

Sanders D (2013) 7 Essential practices for data governance in healthcare. Health Catalyst. https://www.healthcatalyst.com/wp-content/uploads/2013/09/Insights-7EssentialPracticesforDataGovernanceinHealthcare.pdf

Schwarzwald H, Macias CG (2018) Population health management in pediatrics. In: Kline MW (ed) Rudolph’s pediatrics, 23rd edn. McGraw-Hill Education, New York, pp 21–24

Paul R, Melendez E, Wathen B et al (2018) A quality improvement collaborative for pediatric sepsis: lessons learned. Pediatric Quality & Safety 3(1):e051. https://doi.org/10.1097/pq9.0000000000000051

Cruz AT, Perry AM, Williams EA et al (2011) Implementation of goal-directed therapy for children with suspected sepsis in the emergency department. Pediatrics 3:e758–e766. https://doi.org/10.1542/peds.2010-2895

Goldenberg JN (2016) The breadth and burden of data collection in clinical practice. Neurol Clin Pract 6(1):81–86. https://doi.org/10.1212/CPJ.0000000000000209

Disch J, Sinioris M (2012) The quality burden. Nurs Clin N Am 47(3):395–405. https://doi.org/10.1016/j.cnur.2012.05.010

Harder B, Comarow A (2015) Hospital quality reporting by US News and World Report. JAMA 313:19. https://doi.org/10.1001/jama.2015.4566

Ferris TG, Torchiana DF (2010) Public release of clinical outcomes data–online CABG report cards. NEJM 363(17):1593–1595. https://doi.org/10.1056/NEJMp1009423

Mack MJ, Herbert M, Prince S et al (2005) Does reporting of coronary artery bypass grafting from administrative databases accurately reflect actual clinical outcomes? J Thoracic Cardiovasc Surg 129(6):1309–1317. https://doi.org/10.1016/j.jtcvs.2004.10.036

Additional Resources-Further Reading

IOM (Institute of Medicine) (2015) Vital Signs: Core metrics for health and health care progress. The National Academies Press, Washington DC

Kaprielian VS, Silberberg M, McDonald MA et al (2013) Teaching population health: a competency map approach to education. Academic Med 88(5):626–637. https://doi.org/10.1097/ACM.0b013e31828acf27

Office of Disease Prevention and Health Promotion (2011) Social determinants of health. http://www.healthypeople.gov. Accessed 20 August 2016

Stiefel M, Nolan K, Institute for Healthcare Improvement (2012) A guide to measuring the triple aim: population health, experience of care and per capita cost. IHI Innovation Series white paper. http://www.ihi.org/resources/Pages/IHIWhitePapers/AGuidetoMeasuringTripleAim.aspx

Stoto MA (2013) Population health in the affordable health care act era. Academy Health, Washington D.C

Taveras EM, Gortmaker SL, Hohman KH et al (2011) Randomized controlled trial to improve primary care to prevent and manage childhood obesity: the High Five for Kids study. Arch Pediatr Adolesc Med 165(8):714–722. https://doi.org/10.1001/archpediatrics.2011.44

Yu YR, Abbas PI, Smith CM et al (2016) Time-driven activity-based costing to identify opportunities for cost reduction in pediatric appendectomy. J Pediatr Surg 51:1962–1966. https://doi.org/10.1016/j.jpedsurg.2016.09.019

© 2021 American College of Medical Quality (ACMQ)

About this chapter

Cite this chapter

Macias, C.G., Carberry, K.E. (2021). Data Analytics for the Improvement of Healthcare Quality. In: Giardino, A., Riesenberg, L., Varkey, P. (eds) Medical Quality Management. Springer, Cham. https://doi.org/10.1007/978-3-030-48080-6_6

DOI: https://doi.org/10.1007/978-3-030-48080-6_6

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-48079-0

Online ISBN: 978-3-030-48080-6

eBook Packages: Medicine, Medicine (R0)