Abstract

Earth science models often pose computational challenges: they require a large amount of computing resources, and serial computing on a single computer is not sufficient. Further, earth science datasets produced by observations and models are increasingly large and complex, exceeding the limits of most analysis and visualization tools as well as the capacity of a single computer. HPC-enabled modeling, analysis, and visualization solutions are needed to better understand the behaviors, dynamics, and interactions of the complex earth system and its sub-systems. However, there is a wide range of computing paradigms (e.g., Cluster, Grid, GPU, Volunteer, and Cloud Computing) and associated parallel programming standards and libraries (e.g., MPI/OpenMPI, CUDA, and MapReduce). In addition, the selection of specific HPC technologies varies widely for different datasets, computational models, and user requirements. To demystify HPC technologies and lay out the computing options available to scientists, this chapter first presents a generalized HPC architecture for earth science applications, and then demonstrates how such an architecture can be instantiated to support the modeling and visualization of dust storms.

1 Introduction

Advances in the observation, analysis, and prediction of our earth system, such as weather and climate, could inform crucial environmental decisions affecting current and future generations, and even save lives (Shapiro et al. 2010). Computational modeling and numerical analysis are commonly used to understand and predict the behaviors, dynamics, and interactions of the earth system and its sub-systems, such as the atmosphere, ocean, and land. High performance computing (HPC) is essential to support these complex models and analyses, which often require a large number of computing resources (Huang et al. 2013b; Prims et al. 2018; Massonnet et al. 2018). HPC enables earth prediction models to incorporate more realistic physical processes and a higher degree of earth system complexity (Shapiro et al. 2010). For example, the Japanese government initiated the Earth Simulator project in 1997 to promote research on global change prediction using HPC simulation. A large-scale geophysical and tectonic modeling cluster was also built to perform a range of geoscience simulations at Munich University’s Geosciences department (Oeser et al. 2006).

Further, earth system modeling and simulations can produce enormous amounts of spatiotemporal data at the petascale and exascale (Feng et al. 2018). The growth of data volumes gives rise to serious performance bottlenecks in analyzing and visualizing data. In particular, interactive visualization can impose heavy computational loads on computing devices, exceeding the limits of most analysis tools and the capacities of these devices. To address this computational intensity, scientists have utilized high performance techniques to analyze, visualize, interpret, and understand data of large magnitude (Hoffman et al. 2011; Ayachit et al. 2015; Bauer et al. 2016; Childs 2012). Previously, most high performance visualization solutions required high-end computing devices. More recently, remote visualization of massive data has been enabled by using parallel computing capabilities in the cloud to perform visualization-intensive tasks (Zhang et al. 2016). With the remote high performance visualization paradigm, users only need to install a lightweight visualization client to visualize massive data (Al-Saidi et al. 2012; Huntington et al. 2017). Such a design removes hardware constraints on end users and delivers scalable visualization capabilities.

To date, the choice of HPC technologies varies widely for different datasets, computational models, and user requirements (Huang et al. 2018). In fact, there is a wide range of computing paradigms (e.g., Cluster, Grid, GPU, Volunteer, and Cloud Computing) and associated data parallel programming standards and libraries, such as Message Passing Interface (MPI)/OpenMPI, Compute Unified Device Architecture (CUDA), and MapReduce. While Cluster and Grid Computing used to dominate HPC solutions, Cloud Computing has recently emerged as a new computing paradigm with the goal of providing economical, on-demand computing infrastructure. Accordingly, the MapReduce parallel programming framework has become increasingly important for supporting distributed computing over large datasets in the cloud. To complicate matters further, a single HPC infrastructure may not meet the computing requirements of a scientific application, which has led to the popularity of hybrid computing infrastructures that leverage multi-sourced HPC resources from both local and cloud data centers (Huang et al. 2018), or from GPU and CPU devices (Li et al. 2013).

As such, selecting the optimal HPC technologies for a specific application has been a challenge. The first step in addressing this challenge is to lay out the different computing options for scientists. This chapter first presents a generalized HPC architecture for earth science applications, based on a summary of existing HPC solutions from different aspects, including data storage and file systems, computing infrastructure, and programming models. Next, we demonstrate how the proposed architecture can be instantiated to facilitate the modeling and visualization of earth system processes, using dust storms as an example.

2 Related Work

2.1 HPC for Environmental Modeling

Previously, large-scale earth science applications, such as ocean and atmosphere models, were developed under monolithic software-development practices, usually within a single institution (Hill et al. 2004). However, such a practice prevents the broader community from sharing and reusing earth system modeling algorithms and tools. To increase software reuse, component interoperability, performance portability, and ease of use in earth science applications, the Earth System Modeling Framework project was initiated to develop a common modeling infrastructure and standards-based open-source software for climate, weather, and data assimilation applications (Hill et al. 2004; Collins et al. 2005). The international Earth-system Prediction Initiative (EPI) was proposed to enable scientists worldwide to provide research and services that accelerate advances in weather, climate, and earth system prediction, and the use of this information by societies around the globe (Shapiro et al. 2010). In addition, these models have mostly been processed sequentially on a single computer without taking advantage of parallel computing (Hill et al. 2004; Collins et al. 2005; Shapiro et al. 2010).

In contrast to sequential processing, parallel processing distributes data and tasks to different computing units through multiple processes and/or threads, reducing both the amount of data and tasks handled by each unit and its execution time. The advent of large-scale HPC infrastructure has benefited earth science modeling tremendously. For example, Dennis et al. (2012) demonstrated that the resolution of climate models can be greatly increased on HPC architectures by running the models in parallel. Building upon Grid Computing and a variety of other technologies, the Earth System Grid provides a virtual collaborative environment that supports the management, discovery, access, and analysis of climate datasets in a distributed and heterogeneous computational environment (Bernholdt et al. 2005; Williams et al. 2009). The Land Information System software was also developed to support high performance land surface modeling and data assimilation (Peters-Lidard et al. 2007).
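The split, process, and combine pattern described above can be sketched in a few lines of Python. This is a minimal illustration, not code from the systems cited here: the per-unit workload (`process_chunk`, a sum of squares) is a hypothetical stand-in for model computations, and threads are used only so that the sketch runs anywhere. For CPU-bound Python code, `concurrent.futures.ProcessPoolExecutor` offers the same `map` interface, and MPI would be used to spread work across cluster nodes.

```python
from concurrent.futures import ThreadPoolExecutor

def split(data, n_parts):
    """Split a list into n_parts contiguous chunks whose sizes differ by at most one."""
    q, r = divmod(len(data), n_parts)
    chunks, start = [], 0
    for i in range(n_parts):
        end = start + q + (1 if i < r else 0)
        chunks.append(data[start:end])
        start = end
    return chunks

def process_chunk(chunk):
    """Hypothetical per-unit work: here, a sum of squares over the chunk."""
    return sum(x * x for x in chunk)

def parallel_sum_of_squares(data, workers=4):
    """Distribute chunks to workers, then combine their partial results."""
    chunks = split(data, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(process_chunk, chunks))
    return sum(partials)

print(parallel_sum_of_squares(list(range(1000))))  # 332833500
```

Each worker sees only its own chunk, which is the reduction in per-unit data and work that the paragraph describes; the final `sum` is the combination step.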

While parallel computing has moved into the mainstream, computational needs have mostly been addressed using HPC facilities such as clusters, supercomputers, and distributed grids (Huang and Yang 2011; Wang and Liu 2009). However, these facilities are difficult to configure, maintain, and operate (Vecchiola et al. 2009), and it is not economically feasible for many scientists and researchers to invest in dedicated HPC machines sufficient to handle large-scale computations (Oeser et al. 2006). Unlike most traditional HPC infrastructures (such as clusters), which lack the agility to keep up with the growing demand for computing resources posed by big data challenges, Cloud Computing provides a flexible stack of massive computing, storage, and software services in a scalable manner at low cost. As a result, more and more scientific applications traditionally handled by HPC Cluster or Grid facilities have been tested and deployed on the cloud, and various strategies and experiments have been devised to better leverage cloud capabilities (Huang et al. 2013a; Ramachandran et al. 2018). For example, a knowledge infrastructure, in which a cloud-based HPC platform hosts the software environment, models, and personal user space, was developed to address common barriers to numerical modeling in the Earth sciences (Bandaragoda et al. 2019).

2.2 HPC for Massive Data Visualization

In the earth science domain, observational and modeled data created by the various disciplines vary widely in both temporal scale, ranging from seconds to millions of years, and spatial scale, ranging from microns to thousands of kilometers (Hoffman et al. 2011). The ability to analyze and visualize these massive data is critical to support advanced analysis and decision making. Over the past decade, there has been growing research interest in developing visualization techniques and tools. Moreland et al. (2016) built a framework (VTK-m) to support the visualization of large-volume data. Scientists from Lawrence Livermore National Laboratory (LLNL) developed an open-source visualization tool (Childs 2012). The Unidata Program Center also released several Java-based visual analytics tools for various climate and geoscience data, such as the Integrated Data Viewer (IDV) and the Advanced Weather Interactive Processing System (AWIPS) II (Unidata 2019). Williams et al. (2013) developed the Ultrascale Visualization Climate Data Analysis Tools (UV-CDAT) to support the visualization of massive data. Li and Wang (2017) developed a virtual-globe-based high performance visualization system for multidimensional climate data.

Although a number of visual analytics tools have been developed and put into practice, most of these solutions depend on specific HPC infrastructure to address computational intensity. Such dependence prevents scientists with limited access to high-end computing resources from exploring larger datasets. Interactive visualization of massive spatiotemporal data is a challenging task for resource-constrained clients. Recently, scientists have explored remote visualization methods implemented on Cloud Computing facilities (Zhang et al. 2016). A complete interactive visualization pipeline for massive data that can adapt to the available computing resources is desirable.

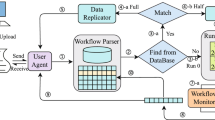

3 High Performance Computing Solution Architecture for Earth Science

To address major computing challenges in earth system modeling and data visualization, this section proposes a generalized HPC architecture that summarizes common HPC tools, libraries, technologies, and solutions (Fig. 14.1). The architecture includes four layers: data storage and file system, computing infrastructure, programming model, and application. While different computing technologies and options are available at each layer, combinations of these technologies can be used to solve most data and computing challenges raised by an earth science application. The application layer includes common libraries, tools, and models for a specific scientific problem. This layer varies with different earth science applications and problems, which in turn determine the technologies chosen for the other three layers to support data storage, computing, and programming.
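To make the layered view concrete, the sketch below records one technology choice per layer and checks it against the pairings discussed in Sects. 3.1–3.3 (for instance, MPI with NFS or a parallel file system on an HPC cluster, and MapReduce with HDFS on a Hadoop cloud). The class name, the infrastructure keys, and the pairing tables are hypothetical simplifications introduced for illustration only. They are not part of the architecture's definition, and real deployments mix technologies more freely.

```python
from dataclasses import dataclass

# Typical pairings summarized from Sects. 3.1-3.3 (hypothetical, illustrative, not exhaustive).
STORAGE_BY_INFRASTRUCTURE = {
    "hpc_cluster": {"NFS", "Lustre", "PVFS", "GPFS"},
    "hadoop_cloud": {"HDFS"},
}
PROGRAMMING_BY_INFRASTRUCTURE = {
    "hpc_cluster": {"MPI"},
    "gpu": {"CUDA", "ATI Stream", "OpenACC"},
    "hadoop_cloud": {"MapReduce"},
}

@dataclass(frozen=True)
class Instantiation:
    """One technology choice per layer of the four-layer architecture (hypothetical class)."""
    application: str
    programming_model: str
    computing_infrastructure: str
    storage: str

    def mismatches(self):
        """List choices that do not follow the usual pairing with the infrastructure layer."""
        issues = []
        infra = self.computing_infrastructure
        storage_ok = STORAGE_BY_INFRASTRUCTURE.get(infra)
        if storage_ok is not None and self.storage not in storage_ok:
            issues.append(f"storage {self.storage!r} is unusual for {infra}")
        programming_ok = PROGRAMMING_BY_INFRASTRUCTURE.get(infra)
        if programming_ok is not None and self.programming_model not in programming_ok:
            issues.append(f"programming model {self.programming_model!r} is unusual for {infra}")
        return issues

# The dust storm modeling case of Sect. 4.1: NMM-dust, MPI, an MPICH-managed cluster, NFS.
nmm_dust = Instantiation("NMM-dust", "MPI", "hpc_cluster", "NFS")
print(nmm_dust.mismatches())  # []

# Under these simplified tables, MapReduce/HDFS on the MPI cluster is flagged on two layers.
odd = Instantiation("NMM-dust", "MapReduce", "hpc_cluster", "HDFS")
print(odd.mismatches())
```

The check runs from the infrastructure layer outward, reflecting the observation that the choice of infrastructure largely constrains the file system and programming model.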

3.1 Data Storage and File System

The purpose of the first layer in the architecture is to organize and store datasets. Different storage models can have different impacts on performance for I/O- and data-intensive applications (Huang et al. 2013b). Additionally, the performance of a parallel system would be compromised if the network connection and topology between the remote storage and the computing nodes were not properly configured (Huang and Yang 2011). The selection of a file system depends highly on the selected computing infrastructure (Sect. 3.2). In an HPC cluster, for example, each computing node is usually designed to access the same remote data storage to execute tasks in parallel. Therefore, a network file system (NFS) or other shared and parallel file systems, such as Lustre (Schwan 2003), the Parallel Virtual File System (PVFS; Ross and Latham 2006), and IBM’s General Parallel File System (GPFS), are used to share the storage and to ensure the synchronization of data access. These file systems are mostly used for HPC servers and supercomputers, and can provide high-speed access to large amounts of data.

The demand for big data storage and processing, and the challenges it raises, have catalyzed the development and adoption of distributed file systems (DFSs; Yang et al. 2017), which address these challenges with four key techniques: storage of small files, load balancing, copy consistency, and de-duplication (Zhang and Xu 2013). DFSs allow files to be accessed over a network from multiple computing nodes, which may include cheap “commodity” computers. Each file is replicated and stored on multiple nodes, enabling a computing node to access a local copy of the data to complete its computing tasks. With advances in distributed computing and Cloud Computing, many DFSs have been developed to support large-scale data applications and to fulfill other cloud service requirements. For example, the Google File System is a DFS designed to meet Google’s core data storage and usage needs (e.g., Google Search). The Hadoop Distributed File System (HDFS), used along with the Hadoop Cloud Computing infrastructure and the MapReduce programming framework (Sect. 3.3), has been adopted by many IT companies, including Yahoo, Intel, and IBM, as their big data storage technology. In many cases, to process scientific data efficiently, a computing infrastructure has to leverage the computation power of both traditional HPC environments and big data cloud computing systems, which are built upon different storage infrastructures. For example, Scientific Data Processing (SciDP) was developed to integrate both parallel file systems (PFS) and HDFS for fast data transfer (Feng et al. 2018).
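The replication idea can be illustrated with a simplified block placement routine. In this sketch, assuming a round-robin policy, a file is cut into fixed-size blocks and each block is stored on `replication` distinct nodes, so a task scheduled on any of those nodes can read its block locally. The 128 MB block size and replication factor of 3 mirror the HDFS defaults in recent Hadoop releases, but the placement logic itself is a hypothetical stand-in: HDFS and the Google File System use rack- and load-aware placement policies rather than round-robin.

```python
def place_blocks(file_size_mb, nodes, block_size_mb=128, replication=3):
    """Map block index -> nodes holding a replica (round-robin, hypothetical policy)."""
    if replication > len(nodes):
        raise ValueError("replication factor cannot exceed the number of nodes")
    n_blocks = -(-file_size_mb // block_size_mb)  # ceiling division
    return {
        b: [nodes[(b + r) % len(nodes)] for r in range(replication)]
        for b in range(n_blocks)
    }

def local_blocks(placement, node):
    """Blocks that `node` can process without fetching data over the network."""
    return [b for b, holders in placement.items() if node in holders]

# Example: a hypothetical 1,800 MB file spread over four commodity nodes.
nodes = ["n1", "n2", "n3", "n4"]
placement = place_blocks(1800, nodes)
print(len(placement))  # 15 (the last block is only partially filled)
print(local_blocks(placement, "n1"))
```

Because every block has three holders, a scheduler can usually find a node that already stores the data for a task, and losing one node still leaves two copies of each of its blocks.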

3.2 Computing Infrastructure

The computing infrastructure layer provides facilities to run computing tasks, which can be executed on central processing units (CPUs), graphics processing units (GPUs), or hybrid units that use CPUs and GPUs collaboratively (Li et al. 2013). The CPU or GPU devices can be deployed on a single supercomputer and/or multiple computing servers working in parallel. They can be local computing resources or cloud-based virtual machines (Tang and Feng 2017). A hybrid CPU-GPU computing infrastructure leverages CPUs and GPUs cooperatively to maximize their computing capabilities. Further, a hybrid local-cloud infrastructure can be implemented by running tasks on local HPC systems and bursting to clouds (Huang et al. 2018). Such an infrastructure often runs computing tasks on local resources by default, but automatically leverages cloud resources when needed.
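The local-first, burst-when-needed behavior of a hybrid local-cloud infrastructure can be expressed as a small scheduling rule. The sketch below is a hypothetical toy, not the mechanism of Huang et al. (2018). It assumes cloud capacity is effectively unlimited and ignores cost, data movement, and queue wait, all of which a real bursting policy would weigh. The 168-core local capacity simply reuses the size of the cluster described in Sect. 4.1.3 (14 nodes × 12 cores).

```python
from dataclasses import dataclass, field

@dataclass
class HybridScheduler:
    """Toy scheduler: run jobs locally by default, burst to the cloud when local cores run out."""
    local_cores: int
    local_in_use: int = 0
    log: list = field(default_factory=list)

    def submit(self, job: str, cores: int) -> str:
        if self.local_in_use + cores <= self.local_cores:
            self.local_in_use += cores
            target = "local"
        else:
            target = "cloud"  # burst: the job does not fit on local resources right now
        self.log.append((job, cores, target))
        return target

    def finish_local(self, cores: int) -> None:
        """Release cores when a local job completes."""
        self.local_in_use = max(0, self.local_in_use - cores)

sched = HybridScheduler(local_cores=168)
print(sched.submit("dust-model", 128))   # local
print(sched.submit("render", 64))        # cloud (128 + 64 > 168)
print(sched.submit("post-process", 32))  # local (128 + 32 <= 168)
```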

Advances in computing hardware have greatly enhanced computing capabilities for earth science data and models. Before GPU computing became popular, scientists designed computing strategies around high performance CPU clusters or multi-core CPUs, which addressed the computational needs of earth science for many years. GPUs were initially designed as specialized circuits to accelerate image processing. Advances in GPUs have since yielded powerful capabilities for scientific data processing, rendering, and visualization, making them ubiquitous and affordable computing devices for high performance applications (Li et al. 2013). Similar to multithreaded parallelization on CPUs, GPU Computing enables programs to process data or tasks through many threads, each handling a portion of the data or tasks. GPU Computing has also been gradually integrated into cloud computing infrastructure; this GPU-based Cloud Computing benefits from the parallel computing power of GPUs while also utilizing cloud capabilities.
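The idea that each GPU thread handles a portion of the data can be illustrated by emulating a one-dimensional CUDA-style launch in plain Python. The emulation below runs the threads sequentially and is only meant to show the indexing convention: the global index is the block index times the block size plus the thread index, with a bounds guard for surplus threads. On a GPU, the same kernel body would execute concurrently (for example, written in CUDA C). The kernel and its scaling operation are hypothetical.

```python
def launch(kernel, n_blocks, block_dim, *args):
    """Sequentially emulate a 1-D grid launch: run `kernel` once per (block, thread) pair."""
    for block_idx in range(n_blocks):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)

def scale_kernel(block_idx, block_dim, thread_idx, data, factor):
    """Each thread scales exactly one element of `data`."""
    i = block_idx * block_dim + thread_idx  # cf. blockIdx.x * blockDim.x + threadIdx.x in CUDA
    if i < len(data):                       # the last block may contain surplus threads
        data[i] *= factor

data = [1.0] * 10
block_dim = 4
n_blocks = (len(data) + block_dim - 1) // block_dim  # 3 blocks -> 12 threads, 2 idle
launch(scale_kernel, n_blocks, block_dim, data, 2.0)
print(data)
```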

Typically, this layer includes middleware deployed on each computing server to discover, organize, and communicate with all the computing devices. The middleware also schedules jobs and collects the results. Several widely used open-source middleware solutions exist, including MPICH (Gropp 2002) and the Globus Grid Toolkit (Globus 2017). As a standard for Grid Computing infrastructure, the Globus Grid Toolkit is low-level middleware that requires substantial technical expertise to set up and use (Hawick et al. 2003).

3.3 Programming Model

The programming model layer determines the standards, libraries, and application programming interfaces (APIs) used to interact with the underlying HPC devices and achieve parallel processing of datasets and computing tasks. As with the storage and file system layer, the choice of programming model or framework is largely determined by the computing infrastructure layer. For example, a GPU Computing infrastructure requires libraries such as CUDA, ATI Stream, and OpenACC to invoke GPU devices, whereas MPI defines communication specifications for implementing parallel computing on HPC clusters (Prims et al. 2018).

As a distributed programming model, MapReduce comes with an associated implementation that supports distributed computing, and it is increasingly used for scalable processing and generation of large datasets owing to its scalability and fault tolerance (Dean and Ghemawat 2008). In both industry and academia, it has played an essential role by simplifying the design of large-scale data-intensive applications. The high demand for MapReduce has stimulated the investigation of MapReduce implementations on different architectural models and paradigms, such as multi-core clusters and Clouds (Jiang et al. 2015). In fact, MapReduce has become a primary choice for cloud providers to deliver data analytics services, since the model is specifically designed for data-intensive applications (Zhao et al. 2014). Accordingly, many traditional algorithms and models developed for a single-machine environment have been ported to the MapReduce platform (Kim 2014; Cosulschi et al. 2013).
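The map, shuffle, and reduce phases can be sketched in a single Python process. The example is hypothetical and not drawn from the cited works: it finds the maximum surface dust concentration within each 1° × 1° tile from (latitude, longitude, concentration) records. In Hadoop, the framework would instead run many map and reduce tasks across nodes, perform the shuffle over the network, and re-execute failed tasks.

```python
from collections import defaultdict
from itertools import chain

def map_phase(record):
    """Emit (key, value) pairs: key = hypothetical 1-degree tile, value = concentration."""
    lat, lon, conc = record
    tile = (int(lat // 1), int(lon // 1))  # floor, so -110.2 falls in tile -111
    yield tile, conc

def shuffle(pairs):
    """Group all values by key, as the framework does between the two phases."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_phase(key, values):
    """Collapse each group to a single result: the tile's peak concentration."""
    return key, max(values)

def map_reduce(records):
    mapped = chain.from_iterable(map_phase(r) for r in records)
    return dict(reduce_phase(k, vs) for k, vs in shuffle(mapped).items())

records = [(32.5, -110.2, 40.0), (32.9, -110.8, 55.0), (33.1, -110.5, 12.0)]
print(map_reduce(records))  # {(32, -111): 55.0, (33, -111): 12.0}
```

Because each map call touches only one record and each reduce call only one key, both phases can be spread over as many machines as there are records or keys, which is what makes the model attractive for large datasets.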

4 Dust Storm Modeling and Visualization Using HPC

This section presents two case studies, dust storm modeling and model output visualization, to demonstrate how the generalized computing architecture in Sect. 3 can be instantiated to support real-world earth science applications.

4.1 Dust Storm Modeling

In this work, the non-hydrostatic mesoscale model (NMM)-dust (Huang et al. 2013b) is used as the dust storm simulation model for demonstration purposes. The model’s parallel implementation uses the MPI programming model at the programming model layer and an HPC cluster at the computing infrastructure layer (Fig. 14.1). Specifically, MPICH is used to manage the HPC cluster. In the cluster, all computing nodes access the same remote data storage, and NFS is used at the data storage and file system layer to share the storage and to synchronize data access.

4.1.1 Dust Storm Models

Dust storm simulation models are developed by coupling dust process modules to atmospheric process models. The Dust Regional Atmospheric Model (DREAM) is one of the most widely used models for dust cycle modeling (Nickovic et al. 2001). DREAM can be easily incorporated into many atmospheric models, such as the Eta weather prediction model (Janjić 1994), yielding Eta-4bin, which simulates dust in 4 particle size classes, and Eta-8bin, which uses 8 particle size classes. While both Eta-4bin and Eta-8bin have been tested for various dust storm episodes at various places and resolutions, Eta has a coarse spatial resolution of 1/3 of a degree, which is inadequate for many potential applications (Xie et al. 2010). Additionally, such numerical weather prediction models run sequentially and reach the limits of their validity as resolution increases. Therefore, the Eta model was replaced by the Non-hydrostatic Mesoscale Model (NMM), which can produce forecasts at resolutions as fine as 1 km and runs in parallel (Janjić 2003). As such, the coupling of DREAM and NMM (NMM-dust; Huang et al. 2013b) is adopted in this work to achieve parallel processing and high-resolution forecasting of dust storms.

4.1.2 Parallel Implementation

The atmosphere is modeled by dividing the domain (i.e., study area) into 3D grid cells and solving a system of coupled nonlinear partial differential equations on each cell (Huang et al. 2013b). The equations are solved on each cell repeatedly, once per time step, to model the evolution of phenomena. Correspondingly, the computational cost of an atmospheric model is a function of the number of cells in the domain and the number of time steps (Baillie et al. 1997). Because dust storm models are developed by adding dust solvers to regional atmospheric models, dust storm model parallelization parallelizes the core atmospheric modules using a data decomposition approach. Specifically, the study domain, represented as 3D grid cells, is decomposed into multiple sub-domains (i.e., sub-regions), each of which is distributed to an HPC cluster node and processed by one CPU of that node as a process (i.e., task).
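The data decomposition step can be sketched as a function that cuts the horizontal grid into a px × py process grid and numbers the sub-domains row by row. How NMM-dust actually distributes leftover cells is not specified here. This sketch assumes, as one plausible choice, that leftover cells go to border sub-domains first, and it assumes that the 215 cells are split three ways and the 345 cells four ways. Under those assumptions it reproduces the 71 × 86 interior sub-domain size reported for the 12-sub-domain example in Fig. 14.2.

```python
def split_axis(n, p):
    """Split n cells into p contiguous (start, stop) ranges; leftovers go to border ranges first."""
    q, r = divmod(n, p)
    sizes = [q] * p
    order, lo, hi = [], 0, p - 1
    while lo <= hi:  # visiting order 0, p-1, 1, p-2, ... (outermost inward)
        order.append(lo)
        if hi != lo:
            order.append(hi)
        lo, hi = lo + 1, hi - 1
    for k in order[:r]:
        sizes[k] += 1
    edges = [0]
    for s in sizes:
        edges.append(edges[-1] + s)
    return list(zip(edges[:-1], edges[1:]))

def decompose(nx, ny, px, py):
    """Half-open (x0, x1, y0, y1) ranges, indexed by rank = j * px + i (row-major)."""
    xs, ys = split_axis(nx, px), split_axis(ny, py)
    return [(x0, x1, y0, y1) for (y0, y1) in ys for (x0, x1) in xs]

subdomains = decompose(215, 345, 3, 4)
for rank, (x0, x1, y0, y1) in enumerate(subdomains):
    print(rank, x1 - x0, "x", y1 - y0)
```

Every vertical layer of a column stays with the same process, so the decomposition is purely horizontal, and each process's share of the per-time-step cost is roughly proportional to its cell count.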

Figure 14.2 shows a 4.5° × 7.1° domain at a spatial resolution of 0.021° parallelized into 12 sub-domains for one vertical layer. This results in 215 × 345 grid cells, with 71 × 86 cells for each sub-domain except those on the border. The processes handling the sub-domains must communicate with their neighboring processes for local computation and synchronization. The processes handling sub-domains in the interior of the domain, such as sub-domains 4 and 7, must communicate with four neighboring processes, causing intensive communication overhead. During the computation, the state and intermediate data representing a sub-domain are produced in the local memory of a processor, and other processes need to access these data through data transfer across the computer network. The cost of data transfer due to communication among neighboring sub-domains is a key efficiency issue because it adds significant overhead (Baillie et al. 1997; Huang et al. 2013b).
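The neighbor pattern, and the reason communication overhead grows with the number of sub-domains, can be checked with a few lines of Python. The sketch numbers sub-domains row by row in a 3 × 4 process grid (an assumption consistent with sub-domains 4 and 7 being interior), counts edge-sharing neighbors, and estimates the ratio of exchanged halo cells to owned cells. It assumes a one-cell-wide halo and ignores corner exchanges; the actual halo width depends on the model's numerical stencils.

```python
def neighbors(rank, px, py):
    """Ranks sharing an edge with `rank` in a px-by-py process grid (row-major numbering)."""
    i, j = rank % px, rank // px
    result = []
    for di, dj in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        ni, nj = i + di, j + dj
        if 0 <= ni < px and 0 <= nj < py:
            result.append(nj * px + ni)
    return result

def halo_ratio(sx, sy, width=1):
    """Halo cells exchanged per owned cell for an sx-by-sy interior sub-domain."""
    return 2 * width * (sx + sy) / (sx * sy)

interior = [r for r in range(12) if len(neighbors(r, 3, 4)) == 4]
print(interior)  # [4, 7]

# Narrower sub-domains (e.g. a hypothetical 6 x 4 split, about 36 x 86 cells)
# exchange relatively more data per cell they compute.
print(f"{halo_ratio(71, 86):.3f}", f"{halo_ratio(36, 86):.3f}")  # 0.051 0.079
```

As sub-domains shrink, computation per process falls with the sub-domain area while exchanged data falls only with its perimeter, so the share of time spent communicating rises.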

4.1.3 Performance Evaluation

The HPC cluster used to evaluate the performance and scalability of the proposed HPC solution for speeding up the dust storm model has a 10 Gbps network and 14 computing nodes. Each node has 96 GB of memory and dual 6-core processors (12 physical cores) with a clock frequency of 2.8 GHz. We parallelized the 4.5° × 7.1° geographic scope along longitude and latitude, respectively, into different numbers of sub-domains, and used different numbers of computing nodes and processes (i.e., sub-domains) to test the performance (Fig. 14.3).

The total execution time, including the time to run the model and the communication and synchronization time among processes, drops sharply as the number of processes (sub-domains) increases from 8 to 16, and then to 24. Beyond that, the execution time continues to decrease, but not significantly, especially when two computing nodes are used. This is because the communication and synchronization time gradually increases until it equals the computing time at 96 processes (Fig. 14.4). The results also show that the execution times of the model with different domain sizes converge to roughly the same values as the number of CPUs increases. Using 7 and 14 computing nodes yields similar performance; in particular, as more processes are used, seven computing nodes can perform slightly better than 14.

In summary, this experiment demonstrates that HPC can significantly reduce the execution time of dust storm modeling. However, communication overhead leads to two scalability issues: (1) regardless of how many computing nodes are involved, there is always a peak performance point, that is, a maximum number of processes that can be usefully leveraged for a specific problem size. The peak point is 128 processes for 14 computing nodes, 80 processes for 7 computing nodes, and 32 processes for 2 computing nodes; and (2) adding computing nodes does not necessarily improve performance, so a suitable number of computing nodes should be chosen to complete the model simulation.
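Why a peak process count exists can be seen from a deliberately simple strong-scaling model, T(p) = W/p + c·p. In this model, the computing time W shrinks with the number of processes p, while the communication and synchronization cost grows with it. The constants below are hypothetical and are not fitted to Fig. 14.3. The only point is that such a model has a minimum at p* = √(W/c), beyond which adding processes makes the run slower, consistent in spirit with the peak points observed above.

```python
import math

def model_time(p, work, comm):
    """Toy execution time: compute shrinks as 1/p, communication grows linearly with p."""
    return work / p + comm * p

def best_process_count(work, comm, max_p):
    """Process count in 1..max_p that minimizes the modeled time."""
    return min(range(1, max_p + 1), key=lambda p: model_time(p, work, comm))

def analytic_optimum(work, comm):
    """Continuous minimizer of work/p + comm*p, i.e. sqrt(work/comm)."""
    return math.sqrt(work / comm)

# Hypothetical constants: 1000 s of serial compute, 0.1 s of overhead per added process.
WORK, COMM = 1000.0, 0.1
print(best_process_count(WORK, COMM, 168))  # 100
print(analytic_optimum(WORK, COMM))
```

With fewer cores available than p*, the best choice is simply to use them all; once p* fits within the machine, extra cores add only overhead.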

4.2 Massive Model Output Visualization

The visualization of massive NMM-dust model data is implemented through a tightly coupled, cloud-enabled remote visualization system for data analysis. Scientists can use this remote visualization system to interactively examine the spatiotemporal variations of dust load at different pressure levels. We adopt ParaView as the primary visualization engine. ParaView, an open-source, multi-platform data analysis and visualization application, supports distributed rendering across multiple computing nodes. We extended ParaView with interfaces to process model outputs and perform rendering based on view settings sent from the client. Several visualization methods are available for multidimensional data, such as volume rendering with ray casting, iso-volume representation, and field visualization. To support parallel visualization, computing resources from Amazon Elastic Compute Cloud (EC2) are used to build the computing infrastructure (Fig. 14.1). Communication between instances is also based on MPICH. The datasets are hosted on NFS and sent to the computing nodes during the visualization process. We also built JavaScript-based tools in the application layer to support interaction between users and the computing infrastructure (Fig. 14.1).

4.2.1 Test Datasets

The NMM-dust model generates one output file in NetCDF format every three hours for the simulated spatiotemporal domain. Each output contains dust dry deposition load (μg/m²), dust wet deposition (μg/m²), total dust load (μg/m²), and surface dust concentration of PM10 aerosols (μg/m³) along four dimensions: latitude, longitude, time, and pressure. Performance evaluation is conducted using two model datasets of about 50 megabytes (MB) and 1.8 gigabytes (GB) in size (referred to as the small and large datasets hereafter, respectively; Table 14.1).

Note that although the large dataset is relatively small compared to some massive climate simulation datasets in the literature, it describes the dust load distribution at a spatial resolution of 0.021°, which is relatively high at the regional scale compared to data from other mesoscale climate simulation models. The datasets can easily be expanded by including longer time periods. The production of dust storm data is computationally demanding (Xie et al. 2010), and the resulting data present a challenging problem for interactive remote visualization.

4.2.2 Parallel Implementation

We followed ParaView’s parallel design to build the visualization pipeline. In particular, we extended ParaView to support time-series volume rendering for multidimensional datasets. The distributed execution strategy is based on data parallelism: one dataset is split into many subsets, which are distributed across multiple rendering nodes. After rendering its subsets, every node sends its rendering results to be combined. A sort-last compositing method is used to ensure the correct composition of the final rendering results. The visualization pipeline is identical on all nodes.
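The sort-last compositing step can be sketched as follows. Each rendering node returns a partial image of its own data subset, and the partial images are blended pixel by pixel in visibility order using the Porter-Duff over operator on premultiplied RGBA values. This is a simplified illustration, not ParaView's compositing code. It assumes that each node's subset can be assigned a single depth relative to the viewer, which holds for non-overlapping bricks viewed from outside, and it uses tiny hypothetical one-pixel images.

```python
def over(front, back):
    """Porter-Duff 'over' for premultiplied RGBA tuples: `front` composited over `back`."""
    fr, fg, fb, fa = front
    br, bg, bb, ba = back
    k = 1.0 - fa  # fraction of `back` still visible through `front`
    return (fr + k * br, fg + k * bg, fb + k * bb, fa + k * ba)

def composite(partials):
    """Sort-last compositing of (depth, image) pairs, one per rendering node.

    Each image is a list of premultiplied RGBA pixels; a smaller depth is closer to the viewer.
    """
    ordered = sorted(partials, key=lambda item: item[0])  # front to back
    n_pixels = len(ordered[0][1])
    result = [(0.0, 0.0, 0.0, 0.0)] * n_pixels
    for _, image in ordered:
        # Everything accumulated so far lies in front of this node's image.
        result = [over(acc, px) for acc, px in zip(result, image)]
    return result

red_near = (1, [(0.5, 0.0, 0.0, 0.5)])  # node A: depth 1, half-transparent red
blue_far = (2, [(0.0, 0.0, 0.5, 0.5)])  # node B: depth 2, half-transparent blue
print(composite([blue_far, red_near]))  # [(0.5, 0.0, 0.25, 0.75)]
```

Sorting by depth before blending is what makes the result independent of the order in which nodes deliver their images.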

Figure 14.5 shows the parallel rendering process for the model output. The output dataset is stored in NetCDF, which represents an attribute over a time period as a multidimensional array. The parallel strategy splits the array into a series of 3D arrays, one per time stamp, and each 3D array is further decomposed along all three spatial dimensions into smaller arrays as subsets. Every subset is assigned to a processor for rendering. Once the processors finish rendering, the pipeline merges the results to form the final image. Note that only results from the same time stamp are merged, ensuring that all relevant subsets of data are combined.
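The splitting strategy can be sketched as a task generator. The 4D array is first sliced by time stamp, each 3D slice is cut into bricks along its three spatial axes, and every (time stamp, brick) pair becomes one rendering task. The (time, pressure, latitude, longitude) axis order, the array shape, the 2 × 2 × 2 bricking, and the round-robin assignment are all assumptions for illustration. Keeping the time index in each task is what lets the pipeline merge only results that share a time stamp.

```python
from itertools import product

def split_range(n, parts):
    """Contiguous, near-equal (start, stop) ranges covering 0..n-1."""
    q, r = divmod(n, parts)
    ranges, start = [], 0
    for i in range(parts):
        stop = start + q + (1 if i < r else 0)
        ranges.append((start, stop))
        start = stop
    return ranges

def make_tasks(shape, bricks):
    """Yield (time_index, brick) tasks for an array of shape (time, level, lat, lon).

    `bricks` gives the number of pieces along (level, lat, lon); each brick is a
    tuple of (start, stop) ranges, one per spatial axis.
    """
    nt, nz, ny, nx = shape
    bz, by, bx = bricks
    for t in range(nt):  # one 3D array per time stamp
        for brick in product(split_range(nz, bz), split_range(ny, by), split_range(nx, bx)):
            yield t, brick

def assign(tasks, n_procs):
    """Round-robin mapping of tasks onto processors (hypothetical policy)."""
    plan = {p: [] for p in range(n_procs)}
    for k, task in enumerate(tasks):
        plan[k % n_procs].append(task)
    return plan

# Hypothetical shape: 8 three-hourly outputs, 10 pressure levels, 215 x 345 horizontal cells.
tasks = list(make_tasks((8, 10, 215, 345), (2, 2, 2)))
plan = assign(tasks, 8)
print(len(tasks), {p: len(ts) for p, ts in plan.items()})
```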

4.2.3 Performance Evaluation

We examined the relative performance of the pipeline components, using m1.xlarge instances provided by the Amazon EC2 service and measuring the rendering performance delivered by Amazon EC2 clusters of 1–8 instances with 4–32 cores. Every instance has one four-core CPU, 15 GB of main memory, and more than 1 TB of storage. Because volume rendering is popular for multidimensional data, we use it as an example of a high performance visualization application (Fig. 14.6). The ray casting algorithm was adopted as the default volume rendering method for representing 3D spatiotemporal attributes such as surface dust concentration at different pressure levels. For each time step, dust information on 3D voxels (longitude, latitude, and pressure level) can be rendered and displayed as high-quality images. Unless otherwise specified, all results reported below were averaged over five independent runs.
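At the level of a single pixel, ray casting marches a ray through the volume and accumulates color and opacity front to back. The sketch below shows that accumulation, with early ray termination once the ray is nearly opaque. The transfer function, the 0.99 opacity cutoff, and the sample values are hypothetical, and ParaView's ray caster additionally handles interpolation, sampling distance, and shading.

```python
def cast_ray(samples, transfer, cutoff=0.99):
    """Front-to-back compositing of scalar samples along one ray (nearest sample first).

    `transfer` maps a scalar (e.g. dust concentration) to (r, g, b, alpha) with
    non-premultiplied color. Returns premultiplied (r, g, b, alpha) for the pixel.
    """
    r = g = b = a = 0.0
    for s in samples:
        sr, sg, sb, sa = transfer(s)
        w = (1.0 - a) * sa  # portion of this sample not hidden by samples in front
        r += w * sr
        g += w * sg
        b += w * sb
        a += w
        if a >= cutoff:     # early ray termination: later samples are (almost) invisible
            break
    return r, g, b, a

def dust_transfer(conc, max_conc=200.0):
    """Hypothetical transfer function: denser dust is more opaque."""
    t = min(max(conc / max_conc, 0.0), 1.0)
    return (1.0, 0.6, 0.2, t)

print(cast_ray([20.0, 80.0, 150.0, 190.0], dust_transfer))
```

Early termination skips samples that cannot change the pixel noticeably, which matters for dense dust layers where most of a ray's length lies behind already-opaque material.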

Figures 14.7 and 14.8 show detailed breakdowns of overall time costs under different network connections and numbers of CPU cores. The entire pipeline is divided into six components: sending user visualization requests, connecting to and disconnecting from the cloud, reading data and variable values, preparing the visualization (including preparing duplicated data subsets for parallel rendering), rendering (creating images and streaming), and transferring visualization results. To identify the impact of networking, user requests are issued from three different locations: (1) a residential area connected to the network at low internet speed (less than 20 Mbps), (2) a campus (organization) with a relatively stable and fast Internet2 connection (200–300 Mbps), and (3) Amazon inter-region connections with an average network speed above 100 Mbps. These are typical connections that users may have. Figure 14.7 shows the visualization of the small dataset, whereas Fig. 14.8 shows the visualization of the large dataset in the cloud. When a single core is used, the connection time is zero because no connection among multiple CPU cores is initiated. Sending requests takes an extremely small amount of time (~0.002 s) and is therefore not included in the figures.

Fig. 14.7 Time cost allocation for remote visualization pipeline components with the small dataset. Disconnection time is not included due to its small time cost. The visualization pipeline was tested in three types of network environments, each with a one-core instance and a four-core instance. a) Time costs of data reading (grey bar), preparation (yellow bar), and image transfer (green bar). b) Time costs of connecting to the cloud (orange bar) and rendering images (blue bar).

Fig. 14.8 Time cost allocation for remote visualization pipeline components with the large dataset. Disconnection time is not included due to its small time cost. The visualization pipeline was tested in three types of network environments, each with a one-core instance and a four-core instance. a) Time costs of data reading (grey bar), preparation (yellow bar), and image transfer (green bar). b) Time costs of connecting to the cloud (orange bar) and rendering images (blue bar).

The principal observation from Figs. 14.7 and 14.8 is that rendering time is the dominant cost (e.g., 61.45% on average for the large dataset), followed by connection time when multiple CPU cores are used. The main performance bottleneck of our system is server-side rendering: rendering massive data degrades remote visualization performance. As the number of nodes increases, the time spent on rendering decreases, though not proportionally. Therefore, for multidimensional visualization, we suggest that configuring a powerful rendering cluster is important for delivering an efficient visualization pipeline.
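Amdahl's law is one standard way (not used in the original analysis) to see why adding nodes shortens rendering but not proportionally: any part of the work that stays serial caps the achievable speedup. The sketch below is illustrative only. The parallel fraction of 0.8 is an assumed value, and the final line treats the reported 61.45% rendering share as if it were a perfectly parallelizable fraction of a single-core pipeline, which is a deliberate simplification.

```python
def amdahl_speedup(parallel_fraction: float, n_workers: int) -> float:
    """Ideal speedup predicted by Amdahl's law: 1 / ((1 - p) + p / n)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be in [0, 1]")
    if n_workers < 1:
        raise ValueError("n_workers must be >= 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / n_workers)


if __name__ == "__main__":
    # Assumed: 80% of the rendering work parallelizes across nodes.
    for n in (1, 2, 4, 8, 16):
        s = amdahl_speedup(0.8, n)
        print(f"{n:>2} nodes: speedup {s:4.2f}x, efficiency {s / n:6.1%}")

    # Simplification: if only rendering (61.45% of time) were parallel,
    # the whole pipeline could never run faster than 1 / (1 - 0.6145).
    print(f"pipeline bound: {1.0 / (1.0 - 0.6145):.2f}x")
```

Under these assumptions the pipeline-level bound is roughly 2.6x no matter how many rendering nodes are added, and the per-node efficiency falls steadily as nodes are added.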

Connection is the second most time-consuming process in the visualization pipeline, and it occurs only when multiple cores are used. The connection time changes slightly with the number of cores but does not increase with the size of the dataset, because it is mainly the warm-up time of the visualization nodes. Like the connection time, the preparation time depends on the number of nodes: it increases as the datasets must be further divided to produce more subtasks for visualization.
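The growth of preparation time with node count can be illustrated by partitioning a dataset into one subset per node. Assuming, for illustration, that each subset is widened by duplicated boundary ("ghost") layers so that each node can render its piece independently, the amount of duplicated data grows with the number of partitions. The function names and the ghost-layer scheme below are hypothetical; the chapter does not specify how its duplicated subsets are built.

```python
from typing import List, Tuple


def partition_with_ghosts(n_items: int, n_parts: int, ghost: int = 1) -> List[Tuple[int, int]]:
    """Split indices [0, n_items) into n_parts contiguous [start, stop) ranges,
    each widened by `ghost` items on both sides and clipped to the array bounds."""
    if n_parts < 1 or n_items < n_parts:
        raise ValueError("need 1 <= n_parts <= n_items")
    base, extra = divmod(n_items, n_parts)
    ranges = []
    start = 0
    for i in range(n_parts):
        # The first `extra` partitions take one additional item each.
        stop = start + base + (1 if i < extra else 0)
        ranges.append((max(0, start - ghost), min(n_items, stop + ghost)))
        start = stop
    return ranges


def duplicated_items(ranges: List[Tuple[int, int]], n_items: int) -> int:
    """Number of items copied more than once because of ghost overlap."""
    return sum(stop - start for start, stop in ranges) - n_items


if __name__ == "__main__":
    N_LEVELS = 10  # hypothetical number of vertical levels in the dataset
    for parts in (1, 2, 4):
        ranges = partition_with_ghosts(N_LEVELS, parts)
        print(parts, ranges, "duplicated:", duplicated_items(ranges, N_LEVELS))
```

For 10 levels with one ghost layer, 1, 2, and 4 partitions duplicate 0, 2, and 6 levels respectively, so more nodes mean more copying and bookkeeping during preparation.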

The network speed between the user client and the server has only a minor effect on the overall runtime. Both sending requests and transferring rendered images contribute little to the overall runtime (e.g., 0.02% and 1.23% on average, respectively, for the large dataset). The time to transfer the rendered image is essentially constant across all tests, as the size of the rendered image does not vary greatly. Other resources, such as data storage and network connection, matter less in the pipeline because they take relatively small amounts of time.
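A back-of-the-envelope model shows why image transfer time is nearly constant: it depends on the image size and the link bandwidth, not on the size of the dataset being rendered. The sketch below ignores protocol overheads and models latency only as an optional fixed term. The 250 KB image size is an assumed value, not one measured in this study; the bandwidths correspond to the three connection types described earlier, using 20 Mbps as the residential upper bound and 200 Mbps as the lower end of the campus range.

```python
def transfer_seconds(payload_bytes: int, bandwidth_mbps: float, latency_s: float = 0.0) -> float:
    """Idealized transfer time: fixed latency plus payload bits / link rate.

    1 Mbps is taken as 10**6 bits per second; protocol overheads are ignored.
    """
    if bandwidth_mbps <= 0:
        raise ValueError("bandwidth_mbps must be positive")
    return latency_s + payload_bytes * 8 / (bandwidth_mbps * 1e6)


if __name__ == "__main__":
    IMAGE_BYTES = 250_000  # assumed size of one rendered image (hypothetical)
    links = {"residential": 20, "inter-region": 100, "campus": 200}
    for name, mbps in links.items():
        print(f"{name:>12} ({mbps:>3} Mbps): {transfer_seconds(IMAGE_BYTES, mbps):.3f} s")
```

With these assumptions the image takes about 0.1 s over the residential link, 0.02 s over the inter-region link, and 0.01 s over the campus link; the dataset size never enters the calculation.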

Another observation is that each dataset presents a unique time allocation pattern: system connection and image transfer account for a much larger share of the total time for the small dataset (Fig. 14.7) than for the large dataset (Fig. 14.8), because rendering the small dataset takes less time. This indicates that remote visualization is of limited benefit when the data size is small. In particular, we do not see significant performance gains when increasing the number of computing cores from 1 to 4, due to high communication overheads in the cloud.
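The small-dataset result can be framed as a break-even test: spreading rendering over n cores saves time only if the rendering time saved exceeds the fixed connection (warm-up) overhead plus any extra preparation. The cost model below is a hypothetical simplification that assumes rendering speeds up according to Amdahl's law while all other stages stay unchanged. The function names and every input value in the example are illustrative, not measurements from Figs. 14.7 and 14.8.

```python
def _rendering_gain(n_cores: int, parallel_fraction: float) -> float:
    """Fraction of single-core rendering time saved on n_cores (Amdahl's law)."""
    if n_cores < 2:
        raise ValueError("n_cores must be at least 2")
    if not 0.0 < parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be in (0, 1]")
    return parallel_fraction * (1.0 - 1.0 / n_cores)


def multicore_pays_off(render_1core_s, connect_s, extra_prep_s, n_cores, parallel_fraction=1.0):
    """True if the rendering time saved exceeds the added fixed overheads."""
    saved = render_1core_s * _rendering_gain(n_cores, parallel_fraction)
    return saved > connect_s + extra_prep_s


def break_even_render_time(connect_s, extra_prep_s, n_cores, parallel_fraction=1.0):
    """Single-core rendering time above which n_cores becomes faster overall."""
    return (connect_s + extra_prep_s) / _rendering_gain(n_cores, parallel_fraction)


if __name__ == "__main__":
    # Illustrative inputs only: 3 s warm-up, 0.5 s extra preparation, 4 cores.
    threshold = break_even_render_time(3.0, 0.5, n_cores=4, parallel_fraction=0.8)
    print(f"4 cores pay off once single-core rendering exceeds {threshold:.1f} s")
    for render_s in (2.0, 10.0):
        ok = multicore_pays_off(render_s, 3.0, 0.5, 4, 0.8)
        print(f"render {render_s:4.1f} s on 1 core -> use 4 cores? {ok}")
```

With these illustrative inputs, single-core rendering must exceed about 5.8 s before the four-core configuration wins, which is why a small dataset with short rendering times may see little or no gain.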

In summary, based on the implementation and the tests, we make the following recommendations for planning a remote visualization cluster.

1. The benefits of a remote visualization framework are that it provides powerful visualization capabilities by using multiple nodes and that it reduces the hardware requirements on the clients. Building such a shared remote visualization framework will help organizations and communities reduce shared costs.

2. Generally speaking, adding more powerful computing nodes to a visualization cluster will increase rendering performance. However, additional overheads, such as data preparation and connection to multiple nodes, should be carefully considered to achieve better performance.

3. Visualization costs vary significantly with different visualization methods and data volumes. Therefore, users should evaluate the data sizes and perform small-scale visualization tests on the data before configuring remote visualization strategies. In particular, users should evaluate the performance gains when increasing the number of cores from 1 to a larger number.

4. Given the relatively long warm-up time of computing nodes, system administrators should modify the visualization pipeline so that it requires only a single connection followed by multiple rounds of interactive operations.
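Recommendation 4 amounts to reusing one warmed-up connection across many interactive requests. The class below is a hypothetical, simulated sketch of that pattern: the `RenderSession` name, the `warmup_s` parameter, and the placeholder image strings are assumptions rather than part of the system described here, and `time.sleep` stands in for the node warm-up.

```python
import time


class RenderSession:
    """Hypothetical client session: pays the node warm-up cost once, then serves many requests."""

    def __init__(self, warmup_s: float = 0.05):
        self.warmup_s = warmup_s
        self.connections = 0  # how many times the warm-up cost was paid
        self._connected = False

    def connect(self):
        if not self._connected:
            time.sleep(self.warmup_s)  # stand-in for warming up visualization nodes
            self.connections += 1
            self._connected = True

    def render(self, request):
        self.connect()  # no-op while the session is already connected
        return f"image for {request}"  # placeholder for a rendered image

    def close(self):
        self._connected = False

    def __enter__(self):
        self.connect()
        return self

    def __exit__(self, exc_type, exc, tb):
        self.close()
        return False  # do not suppress exceptions


if __name__ == "__main__":
    with RenderSession() as session:
        images = [session.render(f"timestep {t}") for t in range(5)]
    print(session.connections)  # prints 1
```

Five interactive requests share a single warm-up; a design that reconnected per request would instead pay the warm-up cost five times.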

5 Conclusion

This chapter reviews relevant work on using HPC to address the major challenges in earth science modeling and visualization, and presents a generalized HPC architecture for earth science. We then introduce two case studies, dust storm modeling and visualization, to demonstrate how such a generalized HPC architecture can be instantiated to support real-world applications. Specifically, dust storm modeling is supported through local Cluster Computing, and model output visualization is enabled through Cloud-based Cluster Computing, where all computing resources are provisioned on the Amazon EC2 platform. In both cases, all computing nodes communicate through MPI, and datasets are managed by NFS. Of course, other HPC solutions, as summarized in Sect. 3, can be leveraged to support the two applications. For example, Huang et al. (2013b) used Cloud Computing to enable high-resolution dust storm forecasting, and Li et al. (2013) leveraged hybrid GPU-CPU computing for model output visualization.

The work presented in this chapter explores the feasibility of building a generalized framework that allows scientists to choose computing options for specific domain problems. Results demonstrate that the applicability of different computing resources varies with the characteristics of the resources as well as with the features of the specific domain problems. Selecting the optimal computing solution requires further investigation. While the proposed generalized HPC architecture is demonstrated to address the computational needs posed by dust storm modeling and visualization, the architecture is general and extensible to applications beyond earth science.

References

Al-Saidi, A., Walker, D. W., & Rana, O. F. (2012). On-demand transmission model for remote visualization using image-based rendering. Concurrency and Computation: Practice and Experience, 24(18), 2328–2345.

Ayachit, U., Bauer, A., Geveci, B., O’Leary, P., Moreland, K., Fabian, N., et al. (2015). ParaView catalyst: Enabling in situ data analysis and visualization. In Proceedings of the First Workshop on In Situ Infrastructures for Enabling Extreme-Scale Analysis and Visualization (pp. 25–29). ACM.

Baillie, C., Michalakes, J., & Skålin, R. (1997). Regional weather modeling on parallel computers. New York: Elsevier.

Bandaragoda, C. J., Castronova, A., Istanbulluoglu, E., Strauch, R., Nudurupati, S. S., Phuong, J., et al. (2019). Enabling collaborative numerical modeling in earth sciences using knowledge infrastructure. Environmental Modelling & Software, 120, 104424.

Bauer, A. C., Abbasi, H., Ahrens, J., Childs, H., Geveci, B., Klasky, S., et al. (2016). In situ methods, infrastructures, and applications on high performance computing platforms. Computer Graphics Forum, 35(3), 577–597.

Bernholdt, D., Bharathi, S., Brown, D., Chanchio, K., Chen, M., Chervenak, A., et al. (2005). The earth system grid: Supporting the next generation of climate modeling research. Proceedings of the IEEE, 93(3), 485–495.

Childs, H. (2012, October). VisIt: An end-user tool for visualizing and analyzing very large data. In High Performance Visualization: Enabling Extreme-Scale Scientific Insight (pp. 357–372).

Collins, N., Theurich, G., Deluca, C., Suarez, M., Trayanov, A., Balaji, V., et al. (2005). Design and implementation of components in the Earth System Modeling Framework. International Journal of High Performance Computing Applications, 19(3), 341–350.

Cosulschi, M., Cuzzocrea, A., & De Virgilio, R. (2013). Implementing BFS-based traversals of RDF graphs over MapReduce efficiently. In 2013 13th IEEE/ACM International Symposium on Cluster, Cloud and Grid Computing (CCGrid) (pp. 569–574). IEEE.

Dean, J., & Ghemawat, S. (2008). MapReduce: Simplified data processing on large clusters. Communications of the ACM, 51(1), 107–113.

Dennis, J. M., Vertenstein, M., Worley, P. H., Mirin, A. A., Craig, A. P., Jacob, R., et al. (2012). Computational performance of ultra-high-resolution capability in the Community Earth System Model. The International Journal of High Performance Computing Applications, 26(1), 5–16.

Feng, K., Sun, X. H., Yang, X., & Zhou, S. (2018, September). SciDP: Support HPC and big data applications via integrated scientific data processing. In 2018 IEEE International Conference on Cluster Computing (CLUSTER) (pp. 114–123). IEEE.

Gropp, W. (2002). MPICH2: A new start for MPI implementations. In European Parallel Virtual Machine/Message Passing Interface Users’ Group Meeting (p. 7). Springer.

Hawick, K. A., Coddington, P. D., & James, H. A. (2003). Distributed frameworks and parallel algorithms for processing large-scale geographic data. Parallel Computing, 29(10), 1297–1333.

Hill, C., DeLuca, C., Balaji, V., Suarez, M., & da Silva, A. (2004). The architecture of the Earth System Modeling Framework. Computing in Science & Engineering, 6(1), 18–28.

Hoffman, F. M., Larson, J. W., Mills, R. T., Brooks, B.-G. J., Ganguly, A. R., Hargrove, W. W., et al. (2011). Data mining in Earth system science (DMESS 2011). Procedia Computer Science, 4, 1450–1455.

Huang, Q., Li, J., & Li, Z. (2018). A geospatial hybrid cloud platform based on multi-sourced computing and model resources for geosciences. International Journal of Digital Earth, 11, 1184.

Huang, Q., & Yang, C. (2011). Optimizing grid computing configuration and scheduling for geospatial analysis: An example with interpolating DEM. Computers & Geosciences, 37(2), 165–176.

Huang, Q., Yang, C., Benedict, K., Chen, S., Rezgui, A., & Xie, J. (2013b). Utilize cloud computing to support dust storm forecasting. International Journal of Digital Earth, 6(4), 338–355.

Huang, Q., Yang, C., Benedict, K., Rezgui, A., Xie, J., Xia, J., et al. (2013a). Using adaptively coupled models and high-performance computing for enabling the computability of dust storm forecasting. International Journal of Geographical Information Science, 27(4), 765–784.

Huntington, J. L., Hegewisch, K. C., Daudert, B., Morton, C. G., Abatzoglou, J. T., McEvoy, D. J., et al. (2017). Climate Engine: Cloud computing and visualization of climate and remote sensing data for advanced natural resource monitoring and process understanding. Bulletin of the American Meteorological Society, 98(11), 2397–2410.

Janjić, Z. I. (2003). A nonhydrostatic model based on a new approach. Meteorology and Atmospheric Physics, 82(1–4), 271–285.

Janjić, Z. I. (1994). The step-mountain eta coordinate model: Further developments of the convection, viscous sublayer, and turbulence closure schemes. Monthly Weather Review, 122(5), 927–945.

Jiang, H., Chen, Y., Qiao, Z., Weng, T.-H., & Li, K.-C. (2015). Scaling up MapReduce-based big data processing on multi-GPU systems. Cluster Computing, 18(1), 369–383.

Kim, C. (2014). Theoretical analysis of constructing wavelet synopsis on partitioned data sets. Multimedia Tools and Applications, 74(7), 2417–2432.

Li, J., Jiang, Y., Yang, C., Huang, Q., & Rice, M. (2013). Visualizing 3D/4D environmental data using many-core graphics processing units (GPUs) and multi-core central processing units (CPUs). Computers & Geosciences, 59, 78–89.

Li, W., & Wang, S. (2017). PolarGlobe: A web-wide virtual globe system for visualizing multidimensional, time-varying, big climate data. International Journal of Geographical Information Science, 31(8), 1562–1582.

Massonnet, F., Ménégoz, M., Acosta, M. C., Yepes-Arbós, X., Exarchou, E., & Doblas-Reyes, F. J. (2018). Reproducibility of an Earth System Model under a change in computing environment (No. UCL-Université Catholique de Louvain). Technical Report. Barcelona Supercomputing Center.

Moreland, K., Sewell, C., Usher, W., Lo, L.-t., Meredith, J., Pugmire, D., et al. (2016). VTK-m: Accelerating the visualization toolkit for massively threaded architectures. IEEE Computer Graphics and Applications, 36(3), 48–58.

Nickovic, S., Kallos, G., Papadopoulos, A., & Kakaliagou, O. (2001). A model for prediction of desert dust cycle in the atmosphere. Journal of Geophysical Research: Atmospheres, 106(D16), 18113–18129.

Oeser, J., Bunge, H.-P., & Mohr, M. (2006). Cluster design in the Earth Sciences Tethys. In International Conference on High Performance Computing and Communications (pp. 31–40). Springer.

Peters-Lidard, C. D., Houser, P. R., Tian, Y., Kumar, S. V., Geiger, J., Olden, S., et al. (2007). High-performance Earth system modeling with NASA/GSFC’s Land Information System. Innovations in Systems and Software Engineering, 3(3), 157–165.

Prims, O. T., Castrillo, M., Acosta, M. C., Mula-Valls, O., Lorente, A. S., Serradell, K., et al. (2018). Finding, analysing and solving MPI communication bottlenecks in Earth System models. Journal of Computational Science, 36, 100864.

Project TG. (2017). The Globus Project. Retrieved from http://www.globus.org

Ramachandran, R., Lynnes, C., Bingham, A. W., & Quam, B. M. (2018). Enabling analytics in the cloud for earth science data.

Ross, R., & Latham, R. (2006). PVFS: A parallel file system. In Proceedings of the 2006 ACM/IEEE Conference on Supercomputing (p. 34). ACM.

Schwan, P. (2003). Lustre: Building a file system for 1000-node clusters. In Proceedings of the 2003 Linux Symposium, vol. 2003.

Shapiro, M., Shukla, J., Brunet, G., Nobre, C., Béland, M., Dole, R., et al. (2010). An earth-system prediction initiative for the twenty-first century. Bulletin of the American Meteorological Society, 91(10), 1377–1388.

Tang, W., & Feng, W. (2017). Parallel map projection of vector-based big spatial data: Coupling cloud computing with graphics processing units. Computers, Environment and Urban Systems, 61, 187–197.

Unidata. (2019). Unidata. Retrieved August 14, 2019, from http://www.unidata.ucar.edu/software/

Vecchiola, C., Pandey, S., & Buyya, R. (2009, December). High-performance cloud computing: A view of scientific applications. In 2009 10th International Symposium on Pervasive Systems, Algorithms, and Networks (pp. 4–16), Kaohsiung, Taiwan, December 14–16. IEEE.

Wang, S., & Liu, Y. (2009). TeraGrid GIScience gateway: Bridging cyberinfrastructure and GIScience. International Journal of Geographical Information Science, 23(5), 631–656.

Williams, D. N., Ananthakrishnan, R., Bernholdt, D., Bharathi, S., Brown, D., Chen, M., et al. (2009). The earth system grid: Enabling access to multimodel climate simulation data. Bulletin of the American Meteorological Society, 90(2), 195–206.

Williams, D. N., Bremer, T., Doutriaux, C., Patchett, J., Williams, S., Shipman, G., et al. (2013). Ultrascale visualization of climate data. Computer, 46(9), 68–76.

Xie, J., Yang, C., Zhou, B., & Huang, Q. (2010). High-performance computing for the simulation of dust storms. Computers, Environment and Urban Systems, 34(4), 278–290.

Yang, C., Huang, Q., Li, Z., Liu, K., & Hu, F. (2017). Big Data and cloud computing: Innovation opportunities and challenges. International Journal of Digital Earth, 10(1), 13–53.

Zhang, T., Li, J., Liu, Q., & Huang, Q. (2016). A cloud-enabled remote visualization tool for time-varying climate data analytics. Environmental Modelling & Software, 75, 513–518.

Zhang, X., & Xu, F. (2013). Survey of research on big data storage. In 2013 12th International Symposium on Distributed Computing and Applications to Business, Engineering & Science (DCABES) (pp. 76–80). IEEE.

Zhao, J., Tao, J., & Streit, A. (2014). Enabling collaborative MapReduce on the Cloud with a single-sign-on mechanism. Computing, 98, 55–72.


Copyright information

© 2020 Springer Nature Switzerland AG

About this chapter

Cite this chapter

Huang, Q., Li, J., Zhang, T. (2020). Domain Application of High Performance Computing in Earth Science: An Example of Dust Storm Modeling and Visualization. In: Tang, W., Wang, S. (eds) High Performance Computing for Geospatial Applications. Geotechnologies and the Environment, vol 23. Springer, Cham. https://doi.org/10.1007/978-3-030-47998-5_14


DOI: https://doi.org/10.1007/978-3-030-47998-5_14


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-47997-8

Online ISBN: 978-3-030-47998-5

eBook Packages: Earth and Environmental Science, Earth and Environmental Science (R0)