Abstract

Psychoperiscope is a coined nomenclature that integrates periscope and psychometrics to mirror the mediating-moderating effect of cognitive coping strategies in the relationship between illness and quality of life. Coping refers to efforts to master demands posed by harm, threat, or challenge that are appraised and/or perceived as taxing available resources. It can be classified along problem-focused versus emotion-focused and behavioral versus cognitive dimensions. The mediating-moderating effect of cognitive coping strategies in the relationship between illness and quality of life has not been clearly understood because a construct-relevant assessment scale has been lacking. This study therefore developed such a scale using a mixed methods embedded design, chosen for its measurement advantages in elucidating quality of life variance in relation to the effects of cognitive coping strategies on the variance of illness. Based on the Gandi Psychometric Model, the term psychoperiscope was coined as a new psychometric nomenclature and adopted as the scale name. Psychoperiscope was pilot-tested on 30 × 3 participants (30 patients, their 30 family members, and 30 clinical practitioners) selected by multistage sampling. The final psychoperiscope, a 21-item scale in three versions, proved reliable for research and valid as a screening tool. Given the useful data it elicited in this study, psychoperiscope would likely yield more robust data if complemented with an experimental case study.



Keywords

- Quality of life

- Psychoperiscope

- Psychometrics

- Mixed methods embedded design

- Illness

- Cognitive coping strategies

1 Introduction

1.1 Background to the Study

Cognitions help us regulate our emotions and feelings so that we are not overwhelmed by the effects of negative or stressful life events. Because this regulatory role suggests that cognitions can moderate the impact of life events, it is reasonable to expect cognitive processes to influence both illness and quality of life. Garnefski et al. (2002) opine that "the regulation of emotions through cognitions is inextricably associated with human life." Such cognitions include both conscious and unconscious processes. The unconscious processes, which include mental defence mechanisms such as projection, denial, daydreaming, and rationalization, have been studied far more than the conscious ones, popularly referred to as cognitive coping strategies (Garnefski et al. 2002).

Psychoperiscope is a coined nomenclature that integrates the principles of the periscope and the ideals of psychometrics to define more precisely the mediating-moderating effect of cognitive coping strategies in the relationship between illness and quality of life. Coping, according to Monat and Lazarus (1991, p. 5), is defined as "an individual's efforts to master demands (i.e., conditions of harm, threat, or challenge) that are appraised and/or perceived as exceeding or taxing available resources." Coping can be classified along problem-focused versus emotion-focused and behavioral (what you do) versus cognitive (what you know) dimensions (Gandi and Wai 2010; Garnefski et al. 2002). Because behavior includes actions, interactions, and reactions, mostly in response to goals, motivations, needs, and problems, it is determined or shaped by a complex network of interacting psychological components. According to Epskamp et al. (2017), three measures describe a node's place in such a network structure: strength, closeness, and betweenness. As in the Gaussian graphical model described by Lauritzen (1996), nodes represent observed variables, and edges represent partial correlation coefficients between two variables after conditioning on all other variables in the dataset.
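To make the Gaussian graphical model concrete: the partial correlation between two variables, conditioning on all others, can be read off the precision matrix P (the inverse of the covariance or correlation matrix) as −P_ij / √(P_ii P_jj). The sketch below is a minimal pure-Python illustration with a small Gauss-Jordan inversion so that it needs no libraries; the function names and the example matrix are hypothetical, and real network analyses commonly use regularized estimators such as the graphical lasso instead of a plain inverse.

```python
import math


def invert(matrix):
    """Invert a square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(matrix)
    # Build the augmented matrix [A | I].
    aug = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        # Swap in the row with the largest pivot for numerical stability.
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        # Scale the pivot row so the pivot becomes 1.
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        # Eliminate this column from every other row.
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    # The right half of the augmented matrix now holds the inverse.
    return [row[n:] for row in aug]


def partial_correlations(cov):
    """Edge weights of a Gaussian graphical model.

    pcor_ij = -P_ij / sqrt(P_ii * P_jj), where P is the precision matrix.
    The diagonal is set to 0 because a network has no self-loops.
    """
    prec = invert(cov)
    n = len(cov)
    return [[0.0 if i == j else -prec[i][j] / math.sqrt(prec[i][i] * prec[j][j])
             for j in range(n)] for i in range(n)]


# Hypothetical chain X - Y - Z: r_XY = r_YZ = 0.5 and r_XZ = 0.25.
corr = [[1.0, 0.5, 0.25],
        [0.5, 1.0, 0.5],
        [0.25, 0.5, 1.0]]
pc = partial_correlations(corr)
```

In this example X and Z are correlated only through Y, so their partial correlation is 0 and the network has no X-Z edge, while the X-Y and Y-Z edges both equal 1/√5 ≈ 0.447.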

Strength quantifies how well a node is directly connected to other nodes, closeness quantifies how well a node is indirectly connected to other nodes, and betweenness quantifies how important a node is in the average path between two other nodes. These measures of network structure (strength, closeness, and betweenness) are taken here to correspond to Schwartz and Rapkin's (2004) three-stage quality of life (QOL) measurement model, which comprises performance-based, perception-based, and evaluation-based measurements. QOL measures are designed either on the assumption that measurement scales are used consistently and that scores are directly comparable across people and over time, or to account for response shift phenomena. Schwartz and Rapkin (2004) argue that the evidence for response shift phenomena implies that the underlying appraisal processes differ across people and over time. This can greatly affect how most QOL scale items are answered. It can also be inferred from Schwartz and Rapkin (2004) that an assessment scale is best suited to this purpose if it treats the ideals of clinimetrics and psychometrics as an integrative whole.
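The three centrality measures defined above can be computed for any symmetric matrix of edge weights, such as partial correlations. The sketch below assumes a convention common in psychological network software: the length of an edge is 1/|w|, so stronger edges count as shorter. Closeness is the inverse of the summed shortest-path distances, and betweenness is computed with Brandes' algorithm on top of Dijkstra's shortest paths. The function name and the example weights are hypothetical.

```python
import heapq
import math


def centrality(weights):
    """Strength, closeness and betweenness of an undirected weighted network.

    `weights` is a symmetric matrix of edge weights (0 = no edge). Edge length
    is 1/|w| (an assumed convention), so strong edges count as short paths.
    """
    n = len(weights)
    nbrs = [[(j, 1.0 / abs(weights[i][j])) for j in range(n)
             if j != i and weights[i][j] != 0] for i in range(n)]
    # Strength: sum of absolute edge weights attached to each node.
    strength = [sum(abs(weights[i][j]) for j in range(n) if j != i) for i in range(n)]
    closeness = [0.0] * n
    betweenness = [0.0] * n
    for s in range(n):
        dist = [math.inf] * n
        sigma = [0] * n                 # number of shortest paths from s
        preds = [[] for _ in range(n)]  # predecessors on those paths
        dist[s], sigma[s] = 0.0, 1
        order, done = [], [False] * n
        heap = [(0.0, s)]
        while heap:
            d, v = heapq.heappop(heap)
            if done[v]:
                continue  # stale heap entry
            done[v] = True
            order.append(v)
            for w, length in nbrs[v]:
                nd = d + length
                if nd < dist[w] - 1e-12:          # strictly shorter path
                    dist[w], sigma[w], preds[w] = nd, sigma[v], [v]
                    heapq.heappush(heap, (nd, w))
                elif abs(nd - dist[w]) <= 1e-12:  # equally short alternative
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        # Closeness: inverse of the summed distances to reachable nodes.
        total = sum(dist[t] for t in range(n) if t != s and dist[t] < math.inf)
        closeness[s] = 1.0 / total if total > 0 else 0.0
        # Accumulate pair dependencies from the farthest node back to s.
        delta = [0.0] * n
        for w in reversed(order):
            for v in preds[w]:
                delta[v] += sigma[v] / sigma[w] * (1.0 + delta[w])
            if w != s:
                betweenness[w] += delta[w]
    # In an undirected graph each pair was counted once from each endpoint.
    return strength, closeness, [b / 2 for b in betweenness]


# Hypothetical three-node chain A - B - C with edge weight 0.5 on each edge.
s, c, b = centrality([[0, 0.5, 0], [0.5, 0, 0.5], [0, 0.5, 0]])
```

In the chain example, B has strength 1.0 (A and C have 0.5 each), the highest closeness (1/4 versus 1/6), and betweenness 1 because it lies on the only shortest A-C path.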

Clinimetrics, conceptualized as the science of clinical measurement (Fava et al. 2011), refers to the domain concerned with indexes and other expressions used to describe or measure symptoms, physical signs, and other distinctly clinical phenomena (Mayo 2015; Feinstein 1987). Clinimetrics aims to develop seemingly heterogeneous measures with good face validity for clinical common sense and therefore relies principally on the opinions of patients and clinicians (Feinstein 1987; Upton and Upton 2007). Psychometrics, on the other hand, values homogeneity, relies more on statistical techniques, and generally aims to develop measures that are mathematically valid and reliable (Upton and Upton 2007). Despite these differences, the ideals of clinimetrics and the techniques of psychometrics overlap from the outset, which makes their principles easy to integrate. Effectively integrating clinimetrics (the science of clinical measurement) and psychometrics (the science of psychological measurement) requires an appropriate choice of research design.

De Vaus (2001) and Yin (2014) hold that a design which takes a logical and comprehensive approach to the research problem ensures that the evidence obtained answers the initial research questions as unambiguously as possible. Such designs must be adequately representative, integrating different techniques to accommodate various peculiarities and to attenuate or control extraneous (confounding) variables. Combining techniques in this way is described as multimethod or mixed methods design. Multimethod research combines multiple qualitative techniques or multiple quantitative techniques, whereas mixed methods research combines both qualitative and quantitative techniques in one study. The major mixed methods designs are the triangulation, embedded, explanatory, and exploratory designs (Creswell and Plano Clark 2007).

The mixed methods triangulation design aims to obtain different but complementary data on the same topic to best resolve the research problem (Morse 2003). It brings together the differing strengths and nonoverlapping weaknesses of quantitative methods (symbolized as QUAN: large sample size, trends, and generalization) and qualitative methods (symbolized as QUAL: small N, detail, and depth). Here, small N refers to one-group or single-subject (single-case) designs based on a qualitative approach. The triangulation design is therefore symbolized as QUAN + QUAL. It is used to directly compare and contrast quantitative results with qualitative findings (Schoonenboom and Johnson 2017).

Another mixed methods design is the embedded design, in which one data set provides a supportive, secondary role in a study based primarily on the other data type (Creswell 2003). Schoonenboom and Johnson (2017) corroborate this, noting that the design rests on three premises: a single data set is not sufficient, different questions need to be answered, and each type of question requires different types of data. The need to include qualitative data to answer a research question within a largely quantitative study, and vice versa, is a cogent justification for using the mixed methods embedded design. Thus, it embeds a qualitative (qual) component within a quantitative (QUAN) design (symbolized as QUAN + qual), compares quantitative (QUAN) and qualitative (QUAL) designs (symbolized as QUAN + QUAL), and embeds a quantitative (quan) component within a qualitative (QUAL) design (symbolized as QUAL + quan). The mixed methods embedded design is therefore generally symbolized as QUAL + quan, QUAN + QUAL, and QUAN + qual.

The next is the mixed methods explanatory design, a two-phase design whose overall purpose is for qualitative (qual) data to help explain or build upon initial quantitative (QUAN) results (Creswell 2003; Schoonenboom and Johnson 2017). It is most suitable for studies that require qualitative data to explain (a) significant or nonsignificant results, (b) outlier results, or (c) surprising results (Morse 2003). The explanatory design is therefore symbolized as QUAN + qual. It can be used to form groups based on quantitative results and follow up with them through subsequent qualitative research, or to use quantitative participant characteristics to guide purposeful sampling for a qualitative phase (Creswell 2003).

The last is the mixed methods exploratory design, a two-phase design in which the results of the qualitative (QUAL) method help develop or inform the quantitative (quan) method (Greene et al. 1989). It rests on the premise that exploration is needed for one of several reasons: instruments are unavailable, variables are unknown, a guiding framework is lacking, or appropriate theories are needed. Because the exploratory design (symbolized as QUAL + quan) begins qualitatively, it is best suited to exploring a phenomenon. It is particularly useful for developing and testing new instruments (Creswell 2014) and for identifying and quantitatively studying important unknown variables. It is also appropriate when results need to be generalized to different groups, when aspects of an emergent theory (or classification) need testing, or when a phenomenon must be explored in depth and its prevalence measured (Creswell 2014; Morgan 1998).

In any case, designs must be construct-relevant to minimize or avoid drawing incorrect causal inferences from data (De Vaus 2001). The mixed methods designs (triangulation, embedded, explanatory, and exploratory) approach research problems in different logical ways that mostly lead to correct causal inferences. Their overall goal is to expand and strengthen a study's conclusions and make more empirical contributions to the published literature. This is demonstrated by how mixed methods designs answer research questions more effectively and empirically than other designs. Johnson and Christensen (2014) corroborate this, noting that the mixed methods approach heightens knowledge by providing sufficient quality to achieve more legitimate multiple validities. Although the exploratory design is said to be particularly useful for developing and testing new instruments, the embedded design was found to be more suitable for developing and testing new instruments (especially 3-version scales) for complex or multifaceted mixed methods assessments. According to Schoonenboom and Johnson (2017), the mixed methods embedded design has the additional advantage that it can be implemented either sequentially or concurrently. It is therefore better suited to such empirical studies than the other designs.

1.2 Statement of Problem and Purpose of the Study

It has been observed that "if the meaning of quality of life (QOL) rating depends upon any underlying appraisal processes, the relationship between the observed item and the underlying latent true score is far more complicated than assumed" (Schwartz and Rapkin 2004). As Gandi and Wai (2010) inferred, this is especially evident in scales that assess the impact of cognitions on emotion regulation in determining QOL. Invoking the principles of performance-based, perception-based, and evaluation-based measurement is therefore expected to lend credence to, and help ensure, a construct-relevant scale. While Upton and Upton's (2007) call to integrate clinimetric and psychometric strategies in developing multi-item health outcome measures is apt, its suitability needs to be tested by integrating the principles of performance-based, perception-based, and evaluation-based measurement as a model.

The study was motivated by the fact that assessing the mediating-moderating effects of cognitive coping strategies in the relationship between illness and quality of life has long been hampered by the lack of a suitable construct-relevant scale. It was therefore designed to develop and validate such a scale: a measurement tool that assesses the mediating-moderating role(s) of cognitive coping strategies in the relationship between illness and perceived quality of life.

1.3 Conceptual Framework

The new scale was conceptualized on the basis of an integration of the Gandi Psychometric Model (Gandi 2018) and Schwartz and Rapkin's (2004) model (see Figs. 1 and 2). To clarify the complicated relationship between observed items and the underlying latent true score, given the appraisal processes on which QOL ratings depend, Schwartz and Rapkin (2004) proposed a model integrating performance-based, perception-based, and evaluation-based measurement dimensions, as presented in Fig. 1.

Fig. 1 Clarifying the discrepancy in performance-based, perception-based, and evaluation-based methods. (Adopted with copyright permission from the authors Schwartz and Rapkin 2004)

Fig. 2 Scale development framework based on the Gandi Psychometric Model. (Adopted from Gandi 2018)

The performance-based method yields measures reflecting the quantity and quality of effort; it is independent of judgment but more susceptible to measurement error. The perception-based method yields measures of individual judgment concerning the occurrence of an observable phenomenon; it involves judgment, raters are expected to converge, and it is susceptible to response bias. The evaluation-based method yields measures that rate experience as positive or negative compared with an internal standard; it involves judgment using idiosyncratic criteria, which makes it particularly open to response shift.

A test development conceptual framework based on the nine stages of the Gandi Psychometric Model (2018), which helps answer the "how" and "why" questions, is presented in Fig. 2. The nine stages are test conceptualization, item generation, scaling methods, item pretesting, scoring models, test tryout, item analysis, test revision, and scale validation and standardization. The framework sets out "how" the test development process moves through these main stages (from left to right) in a systematic sequence, from test conceptualization to scale validation (Fig. 2), and "why" particular stages or variables precede or succeed others (Gandi 2018, 2019).

The creative integration of Figs. 1 and 2 (Schwartz and Rapkin 2004; Gandi 2018) led to the coined term "psychoperiscope," a combination of psychometrics and periscope, which was deliberately conceptualized and adopted as the scale name. While psychometrics refers to the science of psychological measurement, a periscope is an instrument consisting of a tube attached to a set of mirrors or prisms by which an observer can see things that are otherwise out of sight (Soanes and Stevenson 2007). Mental periscope refers to the ability of the intellect to observe, understand, and initiate appropriate action(s) in which the self can re-energize, examine, reflect, and refine, or just be completely still. When the intellect uses its capacity as a periscope, it can find a balance between the inner and outer worlds.

This integrative conceptual framework is the premise on which the envisioned psychoperiscope, a 3-version scale, aims to mirror more effectively the mediating-moderating effects of cognitive coping strategies in the relationship between illness and quality of life. Appropriate designs, such as the mixed methods embedded design, and a 3-step formula for sample size determination were expected to support the conceptual framework.

2 Methods

2.1 Research Design and Study Setting

The study adopted a multifaceted mixed methods design, the mixed methods embedded design, in which, as Creswell (2003) put it, "one data set provides a supportive secondary role in a study primarily based on the other data type." The design rests on the premise that "a single data set is not sufficient, emphasizing that different questions need to be answered, and that each type of question requires different types of data." The need to include qualitative data to answer a research question within a largely quantitative study, and vice versa, is a cogent justification for using it (Creswell 2003; Schoonenboom and Johnson 2017). It embeds a qualitative (qual) component within a quantitative (QUAN) design (QUAN + qual), compares a quantitative (QUAN) design with a qualitative (QUAL) design (QUAN + QUAL), and embeds a quantitative (quan) component within a qualitative (QUAL) design (QUAL + quan). Hence, the design is symbolized as QUAL + quan, QUAN + QUAL, and QUAN + qual, and it can be implemented concurrently or sequentially to develop a 3-version scale such as psychoperiscope. The embedded design was also chosen for its suitability for elucidating quality of life variance in relation to the effects of cognitive coping strategies on the variance of illness, since it can elicit three sets of data (from three sources) on the same target subjects of assessment.

The study was conducted at Jos University Teaching Hospital (JUTH) in Plateau State, Central Nigeria. JUTH's diversity strengthened the resulting data by helping to prevent any perceived social desirability or other unwanted influence that could compromise the psychometric quality of the scale (Gandi 2019). The diverse professional and ethnic backgrounds and different ideological leanings within JUTH helped achieve a sample that better met the requirements for participant representativeness. Jos city is a miniature Nigeria and one of the settlements in the country where men and women exist as co-equals, without significant gender bias or discrimination. This also had positive implications for research participation and for the characteristics of the collected data.

2.2 Target Population and Sample Participants

The study targeted a clinical population comprising patients, their family members, and their respective clinical practitioners at Jos University Teaching Hospital (JUTH). Patients are the primary target participants (the subjects of assessment) for whom the new scale, psychoperiscope, was developed. The participating patients' family members (spouse, parent, child, sibling, or others) and clinical practitioners (doctor, nurse, or psychologist), as significant others, provided relevant data to complement, supplement, and validate each patient's responses.

Because psychoperiscope is a 3-version scale that generates data from three sources and suits studies using the mixed methods embedded design, the pilot sample consisted of 30 × 3 participants: 30 patients (the target subjects of assessment), 30 family members (the family source of embedded data), and 30 clinical practitioners (the professional source of embedded data). This sample size was determined systematically with a computation formula for mixed methods designs that use concurrent data collection, such as the embedded design. The integrated formula has three steps: determining the sample size for an infinite population ($n_i$); adjusting it for nonresponse ($n_e$) to obtain the attenuating sample size ($n_a$); and converting $n_a$ into a sample size for a finite population ($n_f$).

First step – Determining the sample size for an infinite population from the confidence level, population proportion (P), and error margin (E):

$$n_i = \frac{Z^2 \, P (1 - P)}{E^2} \qquad (1)$$

where Z = 2.576 is the Z value corresponding to the adopted 99% confidence level, P was assumed to be 50%, and E was set to 1%.

Second step – Determining the attenuating sample size ($n_a$), adjusted to avoid nonresponse effects:

where $n_e$, the allowance for nonresponse, was set to 5%.

Third step – Determining the sample size for a finite population from the adjusted sample size ($n_a$):

where N is the population size.
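The three steps can be sketched in Python as below. Step 1 uses the standard Cochran formula implied by the definitions of Z, P, and E. Because the paper's own equations (2) and (3) are not reproduced in this text, the nonresponse inflation n_a = n_i / (1 − n_e) and the finite population correction n_f = n_a / (1 + (n_a − 1)/N) are assumptions, chosen as common textbook forms. The population size N is not reported, so any value passed in is hypothetical, and the function names are illustrative.

```python
import math


def infinite_population_size(z, p, e):
    """Step 1 (Cochran): n_i = Z^2 * P * (1 - P) / E^2."""
    return z ** 2 * p * (1 - p) / e ** 2


def adjust_for_nonresponse(n_i, nonresponse):
    """Step 2 (assumed form): inflate n_i so that, after losing the fraction
    `nonresponse` of participants, about n_i responses remain."""
    return n_i / (1 - nonresponse)


def finite_population_size(n_a, population):
    """Step 3 (assumed form): finite population correction of n_a."""
    return n_a / (1 + (n_a - 1) / population)


def sample_size(z, p, e, nonresponse, population):
    """Chain the three steps and round up to a whole number of participants."""
    n_i = infinite_population_size(z, p, e)
    n_a = adjust_for_nonresponse(n_i, nonresponse)
    return math.ceil(finite_population_size(n_a, population))
```

With the stated parameters (Z = 2.576, P = 0.5, E = 0.01), step 1 alone gives n_i ≈ 16,590. The finite population correction then pulls the result toward N whenever n_a is much larger than N, so the final figure depends heavily on the population size entered.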

Applying formulae (1), (2), and (3) systematically yielded a study sample of 30 × 3 participants: 30 patients, 30 family members, and 30 clinical practitioners. The 30 selected patients (15 male, 15 female) were aged 16–73 years and varied in ethnicity, religion, education level, occupation, and socioeconomic status.

The 30 × 3 participants were selected by multistage sampling across the study setting, Jos University Teaching Hospital (JUTH). Although multistage sampling is more complex, it was chosen for the reliability and validity of its combined techniques. Trochim and Donnelly (2008, p. 47) describe multistage sampling as a method that combines several probability sampling techniques to create a more reliable and efficient (or effective) sample than any single sampling technique can achieve on its own. In this study, multistage sampling comprised cluster sampling (phase 1) and stratified random sampling (phase 2). Cluster sampling determined the specific study sites (units/wards) within the hospital, and stratified random sampling then selected the individual participants at each participating unit/ward: patients (the subjects of assessment), family members (the family embedded source of data), and clinical practitioners (the professional embedded source of data).
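The two-phase logic (cluster sampling of wards, then stratified random sampling within each selected ward) can be sketched with Python's standard `random` module. The sampling frame layout, ward names, strata labels, and counts below are hypothetical: the text does not report how many wards were available, what the strata were, or how family members and practitioners were linked to each patient, so the sketch shows only the cluster-then-stratified structure.

```python
import random


def multistage_sample(frame, n_clusters, per_stratum, seed=None):
    """Two-phase multistage sample (hypothetical frame layout).

    frame: {cluster_name: {stratum_name: [member_ids]}}
    Phase 1 draws `n_clusters` clusters (e.g. wards) at random.
    Phase 2 draws `per_stratum` members at random, without replacement,
    from every stratum of each selected cluster.
    """
    rng = random.Random(seed)
    # Phase 1: cluster sampling of units/wards.
    clusters = rng.sample(sorted(frame), n_clusters)
    # Phase 2: stratified random sampling within each selected cluster.
    return {
        cluster: {stratum: rng.sample(members, per_stratum)
                  for stratum, members in frame[cluster].items()}
        for cluster in clusters
    }


# Hypothetical frame: 8 wards, each with two strata of 10 candidate IDs.
frame = {
    f"ward_{w}": {"stratum_a": [f"w{w}a{i}" for i in range(10)],
                  "stratum_b": [f"w{w}b{i}" for i in range(10)]}
    for w in range(8)
}
sample = multistage_sample(frame, n_clusters=5, per_stratum=3, seed=1)
```

Passing a fixed `seed` makes the draw reproducible, which is useful when the selection has to be documented or audited.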

2.3 Materials and Procedure

Materials

Psychoperiscope, a 21-item scale developed primarily for research and screening, has three versions: version A (the patient or target participant version), version B (the patient family member version), and version C (the clinical practitioner version). The materials used to develop psychoperiscope were similar to the instruments and conditions used in the Rumor Scale Development chapter by Gandi (2019). They include informed consent forms, interview schedule forms, demographic data forms, a focus group discussion checklist, expert review rating rubrics, cognitive testing feedback sheets, a video camera, writing materials, SPSS software, and a computer for processing and analysis. The conditions treated as significant materials in the study include the basic necessary and sufficient conditions as well as many miscellaneous conditions that Mackie (1965) referred to as "insufficient but nonredundant part of unnecessary but sufficient (inus) conditions".

Procedure

Psychoperiscope was developed by applying the nine stages of the Gandi Psychometric Model: test conceptualization, item generation, scaling methods, item pretesting, scoring models, test tryout, item analysis, test revision, and scale validation (Gandi 2018). Having conceptualized psychoperiscope as a 3-version scale (patient, family, and clinician versions), the researcher generated an item bank of 64 items using deductive and inductive methods. The first 30 items were derived deductively from a literature review on the focal constructs and target population and from a systematic review of existing related scales. The remaining 34 items were devised inductively through focus group discussions, in-depth interviews, and personal brainstorming across potential stakeholders. All 64 items in the item bank were then pretested through expert reviews and cognitive testing interviews, which refined them for relevance and suitability. Only 28 items survived this process; the other 36 were deleted as unsuitable. A Likert-type scale, with its corresponding scoring model, was adopted as the scaling method for the 28 items that survived this rigorous pretesting.

For the test tryout, the required research ethical clearances were obtained on the basis of a certificate in the Collaborative Institutional Training Initiative (CITI) human subjects research course, including completion of its social and behavioral research curriculum. All essential ethical considerations for studies with human participants, such as voluntariness, confidentiality, autonomy, avoidance of even minimal risk, and individual privacy, were consciously observed. Before the protocol was implemented, research field assistants (n = 5) and research confederates (n = 5) were recruited from among the health professionals, one of each at each study unit/ward. Informed consent was obtained separately from each participating patient and from their respective family member and clinical practitioner. The recruited field assistants and research confederates helped the researcher implement the pilot study protocol and collect the data appropriately. After the 28 items were administered as a self-response scale, all completed forms were retrieved, and participants were thanked for their time and kind participation.

The completed scale forms were systematically collated and coded for analysis. The analysis emphasized the item reliability, difficulty, discrimination, and validity indices, which together ensure the soundness of the scale (Gandi 2019). As Gandi (2019) noted, the item difficulty and discrimination indices were determined qualitatively (during item pretesting), while the statistical (quantitative) analysis focused on the reliability and validity indices of the retained items. The analyses conducted were the content validity index (CVI), item-total statistics, Pearson's correlation analysis, and exploratory factor analysis (EFA). A minimum item threshold of 0.60 was adopted, and all pilot data analyses were carried out at p ≤ 0.05.
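The text does not define how the content validity index was computed. A widely used convention, assumed here, is that each expert rates an item's relevance on a 4-point scale, the item-level CVI (I-CVI) is the proportion of experts giving a rating of 3 or 4, and the scale-level S-CVI/Ave is the mean of the I-CVIs. A minimal sketch with hypothetical function names and ratings:

```python
def item_cvi(ratings, relevant_min=3):
    """I-CVI: share of experts rating the item >= relevant_min on a 1-4 scale."""
    return sum(1 for r in ratings if r >= relevant_min) / len(ratings)


def scale_cvi_average(ratings_by_item, relevant_min=3):
    """S-CVI/Ave: the mean of the item-level CVIs across all items."""
    cvis = [item_cvi(r, relevant_min) for r in ratings_by_item]
    return sum(cvis) / len(cvis)


# Hypothetical ratings from five experts for two items.
ratings = [[4, 3, 4, 2, 4],   # four of five experts rate it relevant
           [4, 4, 4, 4, 4]]   # all five rate it relevant
```

Here the first item's I-CVI is 0.8, the second's is 1.0, and the S-CVI/Ave is 0.9.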

3 Results

Of the 28 pilot-tested items subjected to analysis, 21 were retained with significant reliability and validity. Pearson item correlation coefficients ranged from r = 0.62 to 0.70 (p < 0.05). The raw and standardized Cronbach's alpha values were 0.62 and 0.70, respectively, giving an overall average of α = 0.66.
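Raw and standardized Cronbach's alpha differ in what they are computed from. Raw alpha uses item variances and the variance of the total score; standardized alpha uses the mean inter-item Pearson correlation r̄, as α_std = k·r̄ / (1 + (k − 1)·r̄) for k items. A minimal pure-Python sketch of both follows; the function names and the column-per-item data layout are assumptions for illustration, not the authors' SPSS procedure.

```python
from statistics import mean, variance


def pearson(x, y):
    """Pearson product-moment correlation of two equal-length score lists."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def cronbach_alpha_raw(items):
    """Raw alpha; `items` is a list of k item columns over the same respondents."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]  # total score per respondent
    return k / (k - 1) * (1 - sum(variance(col) for col in items) / variance(totals))


def cronbach_alpha_standardized(items):
    """Standardized alpha from the mean inter-item correlation r_bar."""
    k = len(items)
    rs = [pearson(items[i], items[j]) for i in range(k) for j in range(i + 1, k)]
    r_bar = mean(rs)
    return k * r_bar / (1 + (k - 1) * r_bar)
```

The two coincide when all items have equal variances; they diverge when item variances differ, which is why packages often report both.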

The summary item statistics in Table 1, namely the mean of the item means (3.90), mean of the item variances (0.46), variance of the item means (0.19), and variance of the item variances (0.24), all corroborated the scale's reliability. Likewise, Table 2 shows satisfactory scale statistics: mean (261.59), variance (574.96), and standard deviation (23.98).
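The summary item statistics and scale statistics are simple functions of the item score matrix. The sketch below shows how each is obtained, assuming sample variances with an n − 1 denominator (as most statistics packages use) and a hypothetical column-per-item layout and function names.

```python
from statistics import mean, variance


def summary_item_statistics(items):
    """Summary statistics over item columns (one list of scores per item)."""
    means = [mean(col) for col in items]
    variances = [variance(col) for col in items]
    return {
        "mean_of_means": mean(means),
        "variance_of_means": variance(means),
        "mean_of_variances": mean(variances),
        "variance_of_variances": variance(variances),
    }


def scale_statistics(items):
    """Mean, variance and standard deviation of respondents' total scores."""
    totals = [sum(scores) for scores in zip(*items)]
    var = variance(totals)
    return {"mean": mean(totals), "variance": var, "std_dev": var ** 0.5}
```

As a consistency check on the reported values, √574.96 ≈ 23.98, which matches the reported standard deviation.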

Item-total statistics, which check for items that might be inconsistent with the average behavior of the others, were analyzed so that inconsistent items could be safely discarded. As shown in Table 2, the item-total statistics comprise the scale mean if item deleted, the corrected item-total correlation, and Cronbach's alpha if item deleted.

Inspection of the item-total correlations led to dropping seven low-correlating items (below the 0.60 threshold) from the 28 items, retaining 21 items that correlated highly (0.60 and above). Table 2 shows the scale mean if item deleted for the 21 retained items, averaging 22.99 for the summated items. The scale variance if item deleted, summed across the 21 items, was 22.93, the variance of the summed items. Deleting any or all of the low-correlating items raised the reliability of the scale to 0.88 (Table 2).

Table 2 shows that, according to the corrected item-total correlations, only a few items correlated weakly, indicating that only a few items were construct-irrelevant; these were deleted. The changes in Cronbach's alpha if any of the 21 retained items were deleted supported the corrected item-total correlations by indicating high correlations (Table 2).
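The item-total columns discussed above (scale mean and variance if item deleted, corrected item-total correlation, and alpha if item deleted) can be reproduced from raw item scores as sketched below. The 0.60 retention rule mirrors the threshold stated in the text; the function names, data layout, and example data are hypothetical, and a real analysis would normally use a statistics package.

```python
from statistics import mean, variance


def pearson(x, y):
    """Pearson product-moment correlation of two equal-length score lists."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def cronbach_alpha(items):
    """Raw alpha; `items` is a list of k item columns over the same respondents."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]
    return k / (k - 1) * (1 - sum(variance(c) for c in items) / variance(totals))


def item_total_statistics(items, threshold=0.60):
    """One row per item, mirroring the usual item-total statistics columns.

    The corrected item-total correlation correlates each item with the sum of
    the *other* items, so an item is never correlated with itself.
    """
    rows = []
    for i, item in enumerate(items):
        rest = items[:i] + items[i + 1:]
        rest_totals = [sum(scores) for scores in zip(*rest)]
        r = pearson(item, rest_totals)
        rows.append({
            "scale_mean_if_deleted": mean(rest_totals),
            "scale_variance_if_deleted": variance(rest_totals),
            "corrected_item_total_r": r,
            "alpha_if_deleted": cronbach_alpha(rest) if len(rest) > 1 else float("nan"),
            "retain": r >= threshold,  # hypothetical retention rule at 0.60
        })
    return rows


# Hypothetical data: three consistent items and one inconsistent item.
good = [1, 2, 3, 4, 5]
rows = item_total_statistics([good, good, good, [5, 1, 4, 2, 3]])
```

In the example, the inconsistent fourth item has a negative corrected item-total correlation and is flagged for removal, and alpha for the remaining three items rises to 1.0.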

Table 3 presents the scale mean if item deleted for the 21 retained items, averaging 23.41 for the summated items. The scale variance if item deleted, summed across the 21 items, was 23.02, the variance of the summed items. The table reports the overall item-total statistics, which check for items that might be inconsistent with the average behavior of the other items, analyzed so that any inconsistent items could be discarded. These statistics comprise the scale mean if item deleted, the corrected item-total correlation, and Cronbach's alpha if item deleted.

Inspection of the item-total correlations (Table 3) led to discarding seven low-correlating items from the 28 items, retaining 21 items that correlated highly (0.60 and above). Deleting any or all of the low-correlating items raised the reliability of the scale to 0.82 (Table 3). The corrected item-total correlations showed that only a few items correlated weakly, indicating that only a few items were construct-irrelevant. As Table 3 shows, the changes in Cronbach's alpha if any item were deleted corroborated the corrected item-total correlations by indicating high correlations.

In Table 4, the scale mean if item deleted for the 21 retained items averages 22.97 for the summated items. The scale variance if item deleted (Table 4), summarized across the 21 items, is 22.93, the variance of the summed 21 retained items. The item-total correlations in Table 4 show that each of the 21 retained items satisfies the required psychometric criteria. There was no significant variation among or between the patients' family members in these results.

Likewise, the item-total correlations in Table 4 show that each of the 21 retained items satisfies the required psychometric criteria, with no significant variation among or between clinical practitioners in these results.

In summary, the item-total statistics in Tables 2, 3, and 4 show that all 21 retained items have high reliability. Items inconsistent with the behavior of the other items were identified and deleted as unsuitable. Reliability after deleting the inconsistent items was assessed using the scale mean, scale variance, corrected item-total correlation, and Cronbach's alpha.

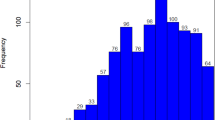

Notwithstanding the findings from the item-total statistics analysis, all scale items were further purified using exploratory factor analysis (EFA). This step also examined each item's skewness and kurtosis (Table 5), and the 21 retained items were found to have score values that adequately satisfied the skewness and kurtosis requirements.

The skewness and kurtosis criterion used in the study (Table 5) treats any item with an absolute skewness greater than 3 or an absolute kurtosis greater than 8 as psychometrically inadequate, and hence unsuitable for retention. On this basis, the exploratory purification retained all 21 items, as each satisfied the inclusion criteria.
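The screening rule can be sketched as follows, assuming the conventional reading of the cut-offs: an item is flagged when its absolute skewness exceeds 3 or its absolute (excess) kurtosis exceeds 8. The moment-based estimators used here are an assumption; statistical packages such as SPSS report bias-adjusted versions, so values will differ slightly in small samples. The toy items and function names are hypothetical.

```python
from statistics import fmean


def central_moment(x, order):
    """Population central moment of the given order."""
    m = fmean(x)
    return sum((v - m) ** order for v in x) / len(x)


def skewness(x):
    """Moment-based skewness g1 = m3 / m2**1.5."""
    m2 = central_moment(x, 2)
    if m2 == 0:
        raise ValueError("item has zero variance")
    return central_moment(x, 3) / m2 ** 1.5


def excess_kurtosis(x):
    """Moment-based excess kurtosis g2 = m4 / m2**2 - 3 (0 for a normal distribution)."""
    m2 = central_moment(x, 2)
    if m2 == 0:
        raise ValueError("item has zero variance")
    return central_moment(x, 4) / m2 ** 2 - 3.0


def screen_items(items, skew_cut=3.0, kurt_cut=8.0):
    """Indices of items with |skewness| > skew_cut or |excess kurtosis| > kurt_cut."""
    return [
        i for i, col in enumerate(items)
        if abs(skewness(col)) > skew_cut or abs(excess_kurtosis(col)) > kurt_cut
    ]


# Hypothetical toy items: a symmetric one and one with a single extreme response.
items = [[1, 2, 3, 4, 5] * 6, [1] * 29 + [10]]
print(screen_items(items))
```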

4 Discussion

The study, which was designed to develop a suitable scale for assessing the mediating-moderating effects of cognitive coping strategies in the relationship between illness and quality of life, gave rise to psychoperiscope as a 21-item (3-version) scale. Using the nine stages of test development based on the Gandi psychometric model (Gandi 2018), both deductive and inductive approaches, as well as expert reviews and cognitive interviews, were carefully implemented. The Gandi psychometric model corroborates the perspectives of De Vaus (2001) and Yin (2014), who hold that a design taking a more logical and comprehensive approach to investigating a problem ensures that the evidence obtained answers the initial research questions as unambiguously as possible. Such designs must be adequately representative, integrating different techniques to accommodate various peculiarities and to attenuate or control the effects of extraneous (or confounding) variables. Designs that combine different techniques at this magnitude and intensity are described as multimethod or mixed methods designs. Multimethod research combines multiple elements of either qualitative or quantitative techniques, whereas mixed methods research combines elements of both qualitative and quantitative techniques in one study. This rigorous procedure lends credence to the development of psychoperiscope.

Mixed methods embedded design was adopted in developing and pilot-testing the new scale because, according to Creswell (2014), it is the most rigorous procedure for collecting, analyzing, interpreting, and reporting data and the findings that emanate from them. The four major mixed methods designs (triangulation, embedded, explanatory, and exploratory) are each psychometrically suitable in their own right. However, the embedded design adopted for this study best addressed the research problem of developing psychoperiscope. This is because, in an embedded design, one data set plays a supportive, secondary role in a study based primarily on another data type or source. Creswell (2014) grounds this on the premises that a single data set is not sufficient, that different questions need to be answered, and that each type of question requires a different type of data. As a 3-version scale, psychoperiscope elicits three data sets on the same participant (i.e., the same subject of assessment) because one data set was considered insufficient.

In any case, designs must be construct-relevant in order to minimize or avoid drawing incorrect causal inferences from data (De Vaus 2001). The mixed methods designs (triangulation, embedded, explanatory, and exploratory) approach the investigation of a problem in various logical ways that mostly lead to correct causal inferences. The overall goal of these designs is to expand and strengthen a study's conclusions and to make stronger empirical contributions to the published literature. This is demonstrated by the way mixed methods designs answer research questions more effectively than other designs. Johnson and Christensen (2014) support this, noting that the mixed methods approach enhances knowledge by providing sufficient quality to achieve multiple validities more legitimately. Although the exploratory design is said to be particularly useful for developing and testing new instruments, the embedded design was found more suitable for developing and testing new instruments (especially 3-version scales) for complex or multifaceted mixed methods assessments.

According to Schoonenboom and Johnson (2017), the mixed methods embedded design has the additional advantage that it can be implemented either sequentially or concurrently, as the case may be. It is, therefore, more suitable for empirical studies than other designs. To develop psychoperiscope with a mixed methods embedded design in practice, a suitable sample size formula was formulated as part of the study. This formula was used to determine an appropriate sample size for the study, which used a concurrent data collection procedure. Its steps are: determining the sample size for an infinite population ($n_i$); determining the attenuating adjusted sample size ($n_a$) to avoid nonresponse effects ($n_e$); and converting the attenuating adjusted sample size ($n_a$) to a sample size for a finite population ($n_f$).
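The paper lists the three steps but does not print the formula itself. The sketch below therefore assumes a common Cochran-type form for each step, with hypothetical inputs and function names: $n_i = z^2 p(1-p)/e^2$; $n_a = n_i/(1-r)$, where $r$ is an assumed expected nonresponse rate standing in for $n_e$; and $n_f = n_a/\left(1 + (n_a-1)/N\right)$ for a population of size $N$. It illustrates the order of the steps only and is not the author's formula.

```python
import math


def infinite_population_n(z=1.96, p=0.5, margin=0.05):
    """n_i: Cochran-type size for an infinite population, z^2 p(1-p) / e^2 (assumed form)."""
    return math.ceil(z ** 2 * p * (1 - p) / margin ** 2)


def attenuating_adjusted_n(n_i, nonresponse_rate):
    """n_a: inflate n_i so roughly n_i responses remain after expected nonresponse (assumed form)."""
    if not 0 <= nonresponse_rate < 1:
        raise ValueError("nonresponse_rate must be in [0, 1)")
    return math.ceil(n_i / (1 - nonresponse_rate))


def finite_population_n(n_a, population_size):
    """n_f: finite-population correction n_a / (1 + (n_a - 1) / N) (assumed form)."""
    return math.ceil(n_a / (1 + (n_a - 1) / population_size))


# Hypothetical inputs: 95% confidence, p = 0.5, 5% margin, 10% nonresponse, N = 1000.
n_i = infinite_population_n()
n_a = attenuating_adjusted_n(n_i, 0.10)
n_f = finite_population_n(n_a, 1000)
print(n_i, n_a, n_f)
```

Rounding up at each step keeps every intermediate value a whole number of participants; with these hypothetical inputs the sequence is 385, 428, 300.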

The qualitative and quantitative approaches, which together answer research questions more adequately within an embedded design, were systematically implemented at every stage of psychoperiscope development. The embeddedness is thus twofold: (i) quantitative components were embedded within the qualitative design, and (ii) qualitative components were embedded within the quantitative design. The mixed methods embedded design was specifically chosen because of its advantages and its suitability for designing the performance-based, perception-based, and evaluation-based measures that psychoperiscope represents in quality of life (QOL) assessment. For instance, embeddedness by its very nature helps check and minimize social desirability bias, which appears to be a weakness of some other scales.

Qualitative analysis, supported by Lawshe's (1975) quantitative approach to content validity, helped to ensure significant item content validity, yielding a suitable construct-relevant scale. Quantitatively, item-total statistics were used to check for item(s) inconsistent with the average behavior of the other items on the scale. This analysis made a major contribution by identifying specific items with markedly inconsistent characteristics, relative to the items judged relevant, that could safely be discarded. This was confirmed by the corrected item-total correlations and Cronbach's alpha if item deleted, which provided empirical evidence that the items correlating at low values are construct-irrelevant and should be discarded. All three data sets analyzed showed a similar pattern in the findings.
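Lawshe's (1975) content validity ratio, which underlies this kind of expert-panel content validation, is $\text{CVR} = (n_e - N/2)/(N/2)$, where $n_e$ is the number of panelists rating an item "essential" and $N$ is the panel size (this $n_e$ is unrelated to the nonresponse symbol used for sample size). A minimal sketch follows; the function names, panel counts, and the 0.62 cut-off are illustrative assumptions, since Lawshe tabulates critical values by panel size.

```python
def content_validity_ratio(n_essential, n_panelists):
    """Lawshe (1975) CVR = (n_e - N/2) / (N/2), ranging from -1 to +1."""
    if n_panelists <= 0 or not 0 <= n_essential <= n_panelists:
        raise ValueError("need 0 <= n_essential <= n_panelists and n_panelists > 0")
    half = n_panelists / 2
    return (n_essential - half) / half


def retain_by_cvr(essential_counts, n_panelists, min_cvr):
    """Indices of items whose CVR is at least `min_cvr` (cut-off chosen by the analyst)."""
    return [
        i for i, n_e in enumerate(essential_counts)
        if content_validity_ratio(n_e, n_panelists) >= min_cvr
    ]


# Hypothetical panel: 10 experts; counts of "essential" ratings for four draft items.
counts = [9, 5, 10, 3]
print([content_validity_ratio(n, 10) for n in counts])
print(retain_by_cvr(counts, 10, 0.62))
```

A CVR of 0 means exactly half the panel rated the item essential; positive values indicate majority agreement.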

Notwithstanding the findings from the item-total statistics analysis, further purification by exploratory factor analysis added significant impetus to the scale items. This step addressed the skewness and kurtosis of each item on the scale, so that the retained items were only those whose score values adequately satisfied the skewness and kurtosis requirements. Consequently, the overall analyses retained all 21 items, as each satisfied the inclusion criteria.

References

Creswell, J. W. (2003). Research design: Qualitative, quantitative, and mixed methods approaches (2nd ed.). Thousand Oaks: Sage.

Creswell, J. W. (2014). A concise introduction to mixed methods research. Thousand Oaks: Sage.

Creswell, J. W., & Plano Clark, V. (2007). Designing and conducting mixed methods research. Thousand Oaks: Sage.

De Vaus, D. A. (2001). Research design in social research. London: Sage.

Epskamp, S., Borsboom, D., & Fried, E. I. (2017). Estimating psychological networks and their accuracy. Behavior Research Methods, 50(1), 195–212. https://doi.org/10.3758/s13428-017-0862-1.

Fava, G. A., Tomba, E., & Sonino, N. (2011). Clinimetrics: The science of clinical measurement. International Journal of Clinical Practice, 66(1), 11–15. https://doi.org/10.1111/j.1742-1241.2011.02825.x.

Feinstein, A. R. (1987). Clinimetrics. New Haven: Yale University Press.

Gandi, J. C. (2018). Development and validation of health personnel perceived quality of life scale (Unpublished doctoral thesis). University of Ibadan, Nigeria.

Gandi, J. C. (2019). Rumor scale development. In M. Wiberg, S. Culpepper, R. Jansen, J. Gonzalez, & D. Molenaar (Eds.), Quantitative psychology. IMPS 2018 Springer proceedings in mathematics and statistics (Vol. 265, pp. 429–447). Cham: Springer.

Gandi, J. C., & Wai, P. S. (2010). Impact of partnership in coping in mental health recovery: An experimental study at the Federal Neuro-Psychiatric Hospital Kaduna. International Journal of Mental Health Nursing, 19(5), 322–330.

Garnefski, N., van den Kommer, T., Kraaij, V., Teerds, J., Legerstee, J., & Onstein, E. (2002). The relationship between cognitive emotion regulation strategies and emotional problems. European Journal of Personality, 16, 403–420.

Greene, J. C., Caracelli, V. J., & Graham, W. F. (1989). Towards a conceptual framework for mixed methods evaluation designs. Educational Evaluation and Policy Analysis, 11(3), 255–274.

Johnson, R. B., & Christensen, L. (2014). Educational research: Quantitative, qualitative, and mixed approaches. Washington, DC: Sage.

Lauritzen, S. L. (1996). Graphical models. Oxford: Clarendon Press.

Lawshe, C. H. (1975). A quantitative approach to content validity. Personnel Psychology, 28, 563–575.

Mackie, J. L. (1965). Causes and conditions. American Philosophical Quarterly, 2(4), 245–264.

Mayo, N. E. (2015). Dictionary of quality of life and health outcomes measurement (1st ed.). Milwaukee: International Society for Quality of Life Research (ISOQOL).

Monat, A., & Lazarus, R. S. (1991). Stress and coping: An anthology (3rd ed.). New York: Columbia University Press.

Morgan, D. L. (1998). Focus group guidebook. Thousand Oaks: Sage.

Morse, J. M. (2003). Principles of mixed methods and multimethod research design. In A. Tashakkori & C. Teddlie (Eds.), Handbook of mixed methods in social and behavioral research (pp. 189–208). Thousand Oaks: Sage.

Schoonenboom, J., & Johnson, R. B. (2017). How to construct a mixed methods research design. Kölner Zeitschrift für Soziologie und Sozialpsychologie, 69(Suppl 2), 107–131.

Schwartz, C. E., & Rapkin, B. D. (2004). Reconsidering the psychometrics of quality of life assessment in light of response shift and appraisal. Health and Quality of Life Outcomes, 2(16), 1–11.

Soanes, C., & Stevenson, A. (2007). Concise Oxford English dictionary (11th ed., revised, 3rd impression). Oxford: Oxford University Press.

Trochim, W. M. K., & Donnelly, J. P. (2008). The research methods knowledge base (3rd ed.). Cincinnati: Atomic Dog Publishing (a part of Cengage Learning).

Upton, D., & Upton, P. (2007). A psychometric approach to health related quality of life measurement: A brief guide for users. In Leading-edge psychological tests and testing research (pp. 71–89). New York: Nova Science.

Yin, R. K. (2014). Case study research: Design and methods (5th ed.). Thousand Oaks: Sage.

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Gandi, J.C. (2020). Psychoperiscope. In: Wiberg, M., Molenaar, D., González, J., Böckenholt, U., Kim, JS. (eds) Quantitative Psychology. IMPS 2019. Springer Proceedings in Mathematics & Statistics, vol 322. Springer, Cham. https://doi.org/10.1007/978-3-030-43469-4_23

DOI: https://doi.org/10.1007/978-3-030-43469-4_23

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-43468-7

Online ISBN: 978-3-030-43469-4

eBook Packages: Mathematics and Statistics (R0)