Abstract

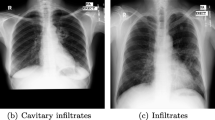

Segmentation of anatomical structures in chest radiographs (CXR) and classification of abnormalities are initial steps in detection systems such as computer-aided diagnosis (CAD). The shape and size of the lungs may hold clues to serious diseases such as pneumothorax, pneumoconiosis, and even emphysema. More than 500,000 people die in the United States every year from heart and lung failure, many of them after undergoing a routine CXR examination. As the number of patients grows, doctors are overworked and cannot advise and care for their patients properly. A computer system that supports CXR classification and lung boundary detection is therefore needed. This paper presents our automated approach for lung boundary detection and CXR classification in conventional posteroanterior chest radiographs. We first extract the lung region, size measurements, and shape irregularities using image segmentation techniques applied to chest radiographs. For each CXR image, we then extract 18 features from the gray level co-occurrence matrix (GLCM), which a probabilistic neural network (PNN) classifier uses to classify the CXR as normal or abnormal. We evaluate our system on two datasets: the Montgomery County (MC) X-ray dataset and the Shenzhen X-ray dataset. The proposed methodology achieves competitive results, with relatively shorter training time and higher accuracy.

Keywords

- Chest X-ray imaging

- Balance Contrast Enhancement Technique

- Lung boundary detection

- Gray level co-occurrence matrix

- Probabilistic neural network

- Classification

1 Introduction

Chest radiography, or chest X-ray (CXR), is still the most commonly used imaging modality for diagnosing pulmonary diseases and the most widely used diagnostic imaging technique in the world, owing to its low radiation dose, absence of side effects, economic feasibility, and moderate sensitivity. Many people die every year from chest diseases such as lung infections, pulmonary disease, and lung cancer. For most of these diseases, many drugs are effective only in the early, asymptomatic stages. Screening examinations can therefore help in early diagnosis, and, for the reasons above, chest radiography is the most common imaging method used for this purpose [1].

Important steps in the automatic analysis of chest X-ray images are to accurately detect the lung boundaries and to classify the images as normal or abnormal. CXR is an early diagnostic tool commonly used in clinical settings to detect abnormalities of the thoracic cavity, including the lungs and heart, such as bleeding, changes after breast surgery, pleural effusion, edema, and cardiac hypertrophy [2]. In some diagnostic cases, the relevant information is directly related to the lung boundary and can be obtained without further analysis. For example, irregular shape, size measurements, and total lung volume [3] can be evidence of serious diseases such as heart enlargement [4], pneumothorax, pneumoconiosis, or emphysema [5].

There have been many studies on the detection of lung abnormalities. Computer-aided diagnosis (CAD) has become part of routine clinical work in many countries. Detection of the lung regions in CXR is an important component, especially for determining whether the lung is normal or abnormal [6]. Detection of the lung fields is usually the first important step in the computerized analysis of chest radiographs: once the lung field boundaries are known, the condition of the lungs can be assessed further [7].

Neural networks are a relatively modern computing methodology. They excel at tasks such as pattern recognition that are difficult to solve with conventional, explicitly programmed algorithms. Programs that use artificial neural networks (ANN) can learn from data and adapt to changing circumstances [8]. ANN is an information-processing methodology whose key ideas are inspired by the way the human brain processes information; in other words, ANNs are inspired by biological neurons.

Neural network applications in computer-aided diagnosis are a main direction of computational intelligence in medical imaging [9]. They have penetrated almost all areas of health care because: (1) neural networks learn adaptively from input information and, with an appropriate learning algorithm, can improve themselves as the variety and content of the inputs change; (2) neural networks can model the relationship between input and output through distributed computing, training, and processing, yielding reliable solutions that meet the required specifications; and (3) medical diagnosis relies on visual examination, and medical imaging is an important tool that facilitates this examination and visualization. During training, the connection weights are repeatedly adjusted so that the network can solve the problem at hand. Deep neural networks, and machine learning in general, have become popular in recent years due to their outstanding performance on several difficult image analysis problems, such as image classification, object detection, and semantic segmentation [10]. In particular, they can help reduce the workload of radiologists. Several classification methods have been proposed for this problem; common approaches to image classification include k-means, k-NN, deep neural networks, and support vector machines (SVM). The convolutional neural network (CNN) is a deep learning model that has received considerable attention from researchers in recent years. It is frequently used in image classification, image recognition, language translation, medical diagnosis, and many other fields [11].

In the proposed method, the first stage enhances the contrast of the CXR using the Balance Contrast Enhancement Technique (BCET) and then applies image segmentation techniques to identify the lung region, size measurements, and shape irregularities. The second stage extracts features from the chest X-ray images using the gray level co-occurrence matrix (GLCM). Finally, the CXR is classified as normal or abnormal using a probabilistic neural network (PNN). When tested on public chest X-ray databases, the proposed method achieved accuracy comparable to or higher than state-of-the-art segmentation methods on most of the structures.

The rest of the paper is organized as follows. Section 2 reviews related work. Section 3 details the materials and methods for lung boundary detection and for classifying CXR images as normal or abnormal. Section 4 describes the experimental study and presents the main results. Conclusions are given in Sect. 5.

2 Related Works

The advent of digital chest radiography and digital image processing has given new impetus to computer-aided diagnosis. However, despite its widespread use in medical practice, the standard CXR remains a very complex image to interpret. In [12], a deep learning neural network methodology was used to help clinicians identify lumbar spinal stenosis through semantic segmentation of lumbar spine magnetic resonance imaging (MRI) scans. The work in [13] addresses automatic segmentation of lumbar spine MRI to determine the boundary between the anterior and posterior arches of the lumbar spine, which is necessary to identify lumbar spinal stenosis, a main cause of chronic lower back pain. A separate training set is created for each region, and the final decision is obtained by voting and weighted fusion. In [14], image processing techniques are applied to chest radiographs to determine the lung area, size measurements, and shape irregularities using segmentation methods; in addition, the differences between corresponding regions of the left and right lung fields are used as markers of abnormality.

In [15], TB images are classified using a Multi-Layer Perceptron (MLP) neural network combined with a Support Vector Machine (SVM), and the results are compared with a Back Propagation Neural Network (BPNN). Distinguishing normal from pathological TB patterns in this way substantially increases classification accuracy at both the image and the object level. In [16], lung boundary detection in chest X-rays is reviewed, including methods based on an anatomical atlas with nonrigid registration, which outperform earlier approaches and improve state-of-the-art results on abnormally shaped lungs. Computer-aided diagnosis has been used to detect pulmonary tuberculosis in chest X-rays using lung segmentation, texture and shape feature extraction, and SVM classification, achieving high accuracy [17]. In [17], directional gradient features computed on the chest edge map are used to automate pulmonary abnormality screening; to distinguish normal from abnormal CXR images using the chest edge map, the region of interest (ROI) is first localized. In [18], textural, focal, and shape abnormality analysis subsystems are combined into one system to deal with the heterogeneity of abnormal manifestations across population groups; performance is evaluated on tuberculosis screening data and a public TB database using both external and radiological reference standards. In [19], CXRs are classified into two classes, TB and non-TB: shape features are selected, combined with texture features, and classified with classifiers such as SVM and CNN.

3 The Proposed System

As mentioned above, the purpose of this work is to develop methods for the segmentation and classification of chest X-rays that can determine the boundaries of the lung regions and detect abnormalities in X-ray images. We kept the following design objectives in mind while designing our method:

- Extracting the minimal set of features that distinguishes normal from abnormal X-ray images.

- Achieving high accuracy in lung boundary segmentation and classification.

- Minimizing the time required for segmentation and classification.

The main purpose of the proposed method is automatic lung boundary detection and classification of chest X-ray images. The information extracted from the segmented image is useful in computer vision applications and for identifying the constituent parts of the image. The PNN classifier showed good accuracy, a very short training time, robustness to weight changes, and a short retraining time. Performance is analyzed on the basis of the results at the end of the development phase. The final output of the proposed method is the detected lung boundary and the classification of the chest X-ray image as normal or abnormal. A detailed block diagram of the proposed method is shown in Fig. 1.

3.1 Preprocessing

The primary task of preprocessing is to improve the quality of chest X-ray images and make them suitable for further processing by humans or by computer vision. Preprocessing improves certain properties of chest X-ray images, for example by increasing the signal-to-noise ratio, enhancing their visual appearance, and removing irrelevant noise and undesired parts of the background. To improve contrast and highlight the area of interest, we use the Balance Contrast Enhancement Technique (BCET). Contrast enhancement of the area of interest is typically required in medical image processing. Kumbhar, Patil, and Rudrakshi [20] used contrast-limited adaptive histogram equalization (CLAHE) to enhance medical images for appropriate diagnosis. Unsharp masking is another interesting approach to image enhancement. It aims to enhance edges and details, but its use of a high-pass filter also makes the method extremely sensitive to noise [20].
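The noise sensitivity of unsharp masking follows directly from how it works: the high-pass residual (image minus a blurred copy) is added back, so an isolated noisy pixel is amplified along with real edges. The following minimal NumPy sketch illustrates this; the 3 × 3 mean filter used as the low-pass step and the `amount` gain are illustrative assumptions, not parameters from the paper or from [20].

```python
import numpy as np

def box_blur3(img: np.ndarray) -> np.ndarray:
    """3x3 mean filter with edge replication (a simple low-pass filter)."""
    padded = np.pad(img.astype(float), 1, mode="edge")
    h, w = img.shape
    out = np.zeros((h, w), dtype=float)
    for dr in range(3):
        for dc in range(3):
            out += padded[dr:dr + h, dc:dc + w]
    return out / 9.0

def unsharp_mask(img: np.ndarray, amount: float = 1.0) -> np.ndarray:
    """Sharpen by adding back `amount` times the high-pass residual."""
    img = img.astype(float)
    high_pass = img - box_blur3(img)  # edges, fine detail, and noise
    return img + amount * high_pass   # no clipping to the valid gray range
```

On a flat region the residual is zero and the image is unchanged, whereas a single bright pixel of value 9 on a zero background becomes 17: the noise spike is nearly doubled.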

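BCET stretches or compresses the contrast of an image without changing the shape of the histogram of the input image (I_old). The solution is based on a parabolic function obtained from the input image. The general form of this function is

$$I_{new} = a\,(I_{old} - b)^2 + c$$

The coefficients a, b, and c are derived from the minimum l, maximum h, mean e, and mean square s of the input image, and from the desired minimum L, maximum H, and mean E of the output image (I_new):

$$b = \frac{h^2(E - L) - s(H - L) + l^2(H - E)}{2\left[h(E - L) - e(H - L) + l(H - E)\right]}$$

$$a = \frac{H - L}{(h - l)(h + l - 2b)}$$

$$c = L - a\,(l - b)^2$$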

where the parameters of the input and output images are:

- l is the minimum value of the input image.

- h is the maximum value of the input image.

- e is the mean value of the input image.

- s is the mean square of the input image, i.e., the mean of the squared pixel values.

- L is the minimum value of the output image.

- H is the maximum value of the output image.

- E is the mean value of the output image.
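The BCET mapping can be sketched in a few lines of NumPy using the standard parabolic formulation y = a(x − b)² + c with the coefficient formulas for a, b, and c given for BCET. The target values L = 0, H = 255, and E = 110 are assumptions for illustration; the paper does not state the values it used. The sketch also does not check that b lies outside [l, h], which is required for the parabola to be monotonic over the input range.

```python
import numpy as np

def bcet(img: np.ndarray, L: float = 0.0, H: float = 255.0, E: float = 110.0) -> np.ndarray:
    """Balance Contrast Enhancement Technique: y = a * (x - b)**2 + c.

    L, H, E are the desired minimum, maximum, and mean of the output image
    (assumed defaults). Returns a float array; rounding/casting is left to the caller.
    """
    x = img.astype(float)
    l, h = x.min(), x.max()   # input minimum and maximum
    e = x.mean()              # input mean
    s = np.mean(x ** 2)       # input mean square
    b = (h**2 * (E - L) - s * (H - L) + l**2 * (H - E)) / (
        2.0 * (h * (E - L) - e * (H - L) + l * (H - E)))
    a = (H - L) / ((h - l) * (h + l - 2.0 * b))
    c = L - a * (l - b) ** 2
    return a * (x - b) ** 2 + c
```

For example, applying `bcet` to the ramp `np.linspace(0, 100, 101)` yields an output whose minimum, maximum, and mean are 0, 255, and 110, while the ordering of gray levels (and hence the histogram shape) is preserved.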

3.2 Segmentation

In this stage, the lung regions are segmented from the CXR image. Higher segmentation accuracy in medical image analysis allows the disease to be identified more precisely. There are two approaches to segmentation: discontinuity-based and similarity-based. The discontinuity-based approach identifies isolated points, lines, and edges in an image (e.g., the lung boundary in a CXR). The similarity-based approach groups pixels with similar intensity values; typical operations are clustering, thresholding, and region splitting and merging. Lung segmentation based on geometric features such as object edges, circularity, and image size has long been used to isolate the lung region from other anatomical structures in the CXR image. Edge detection is fundamental to lung segmentation and essential in the preprocessing step, because edges represent important contour features of the image [21].
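As an illustration of the discontinuity-based approach, the sketch below marks edges where the Sobel gradient magnitude exceeds a threshold. This is the simple Sobel operator, not the Canny detector used in this work (Canny adds Gaussian smoothing, non-maximum suppression, and hysteresis thresholding on top of a gradient like this); the `threshold` value is an assumption left to the caller.

```python
import numpy as np

def sobel_edges(img: np.ndarray, threshold: float) -> np.ndarray:
    """Boolean edge map from the Sobel gradient magnitude (discontinuity-based)."""
    kx = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float)  # horizontal gradient
    ky = kx.T                                                          # vertical gradient
    padded = np.pad(img.astype(float), 1, mode="edge")
    h, w = img.shape
    gx = np.zeros((h, w))
    gy = np.zeros((h, w))
    # Cross-correlation with the 3x3 kernels; the sign convention does not
    # affect the gradient magnitude.
    for dr in range(3):
        for dc in range(3):
            window = padded[dr:dr + h, dc:dc + w]
            gx += kx[dr, dc] * window
            gy += ky[dr, dc] * window
    return np.hypot(gx, gy) > threshold
```

On an image with a vertical step from 0 to 100 between two columns, the two columns adjacent to the step have a gradient magnitude of 400 and are marked as edges, while flat regions have zero gradient.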

3.3 Otsu’s Thresholding Method

Otsu's thresholding is a simple similarity-based approach that divides the image into two regions by selecting the threshold that maximizes the between-class variance of the two resulting pixel classes. Assume that the image contains a dark object on a light background, or vice versa, so that the intensity values are concentrated in two regions: the dark areas consist of low intensity values and the bright areas of high intensity values. In a CXR, the dark object corresponds to the lungs and everything else is background. After lung segmentation, the lung boundary is detected using the Canny edge detector. An example is shown in Fig. 2; the resulting segmented chest X-ray image is helpful in medical diagnosis. In addition, our segmentation method yields the size of the left lung, the right lung, and both lungs together.
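Otsu's criterion can be sketched in NumPy for an 8-bit image by evaluating the between-class variance σ_B²(t) = (μ_T ω(t) − μ(t))² / (ω(t)(1 − ω(t))) for every candidate threshold t. The `lung_sizes` helper is hypothetical and deliberately simplified: it assumes the dark class contains only lung pixels and that the two lungs are separated by the vertical image midline. In a real CXR, the air outside the body is also dark and must be removed first; the text does not specify how this is done.

```python
import numpy as np

def otsu_threshold(img: np.ndarray) -> int:
    """Threshold t in 0..255 maximising the between-class variance of an 8-bit image.

    Pixels with value <= t form class 0 (dark), the rest form class 1 (bright).
    """
    hist = np.bincount(img.ravel(), minlength=256).astype(float)
    p = hist / hist.sum()                  # normalised histogram
    omega = np.cumsum(p)                   # probability of class 0 for each t
    mu = np.cumsum(p * np.arange(256))     # cumulative mean up to t
    mu_total = mu[-1]
    denom = omega * (1.0 - omega)
    sigma_b2 = np.zeros(256)
    valid = denom > 0                      # skip thresholds leaving a class empty
    sigma_b2[valid] = (mu_total * omega[valid] - mu[valid]) ** 2 / denom[valid]
    return int(np.argmax(sigma_b2))

def lung_sizes(img: np.ndarray) -> dict:
    """Hypothetical helper: pixel counts of the dark (lung) class per image half."""
    mask = img <= otsu_threshold(img)      # lungs are dark in a CXR
    mid = img.shape[1] // 2
    # In a posteroanterior view, the patient's right lung appears on the image's left.
    right = int(mask[:, :mid].sum())
    left = int(mask[:, mid:].sum())
    return {"right_lung": right, "left_lung": left, "both_lungs": right + left}
```

The returned pixel counts can be converted to physical areas if the pixel spacing of the radiograph is known.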

3.4 Feature Extraction

The GLCM is a robust method of statistical image analysis. It evaluates image features using second-order statistics, i.e., by examining the relationship between pairs of neighboring pixels at a given offset, and is therefore a powerful way to analyze image texture. The GLCM tabulates how often different combinations of gray levels occur in an image. The accuracy of the classification depends on the quality of the extracted features. The GLCM is defined as a two-dimensional matrix of joint probabilities between pairs of pixels, i.e., the distribution of co-occurring values at a given offset (dx, dy), for an image I of size N × M:

$$C_{dx,dy}(i,j) = \sum_{x=1}^{N}\sum_{y=1}^{M}\begin{cases}1, & \text{if } I(x,y)=i \text{ and } I(x+dx,\,y+dy)=j\\ 0, & \text{otherwise}\end{cases}$$

$$P_{dx,dy}(i,j) = \frac{C_{dx,dy}(i,j)}{\sum_{i,j} C_{dx,dy}(i,j)}$$
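A direct NumPy transcription of this definition is sketched below, using (row, column) array offsets. It assumes the image has already been quantized to `levels` integer gray levels (the number of levels used in this work is not stated), skips pixel pairs whose second pixel falls outside the image, and does not symmetrize the matrix; all three choices are assumptions.

```python
import numpy as np

def glcm(img: np.ndarray, drow: int, dcol: int, levels: int) -> np.ndarray:
    """Normalised gray-level co-occurrence matrix for offset (drow, dcol).

    P[i, j] is the fraction of pixel pairs (p, p + offset) with gray levels i and j.
    `img` must contain integers in 0..levels-1.
    """
    rows, cols = img.shape
    counts = np.zeros((levels, levels), dtype=float)
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + drow, c + dcol
            if 0 <= r2 < rows and 0 <= c2 < cols:   # neighbour must lie inside the image
                counts[img[r, c], img[r2, c2]] += 1
    return counts / counts.sum()
```

For the 4 × 4 image `[[0,0,1,1],[0,0,1,1],[0,2,2,2],[2,2,3,3]]` with the horizontal offset (0, 1), there are 12 valid pairs, 3 of which are (2, 2), so `P[2, 2]` is 3/12.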

Following Gómez et al. [22], we selected the following features: Energy (F8), Contrast (F2), Correlation (F3), Autocorrelation (F1), Entropy (F9), Homogeneity (F10), Dissimilarity (F7), Cluster Shade (F6), Cluster Prominence (F5), Maximum Probability (F12), Sum of Squares (F13), Inverse Difference Moment (F21), Sum Average (F14), Sum Variance (F16), Sum Entropy (F15), Difference Variance (F17), Difference Entropy (F18), and Information Measure of Correlation (F19).
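Several of these features can be computed directly from a normalized GLCM P. The sketch below covers a subset of them using common textbook definitions. Definitions vary slightly across the literature (e.g., energy as Σp² or its square root, natural or base-2 logarithm in entropy, 0- or 1-based gray-level indices), so the variants chosen here, 1-based indices and the natural logarithm, are assumptions and may differ from those of [22].

```python
import numpy as np

def glcm_features(P: np.ndarray) -> dict:
    """A subset of Haralick-style texture features of a normalised GLCM P."""
    n = P.shape[0]
    # 1-based gray-level indices i (rows) and j (columns); an assumed convention.
    i, j = np.meshgrid(np.arange(1, n + 1), np.arange(1, n + 1), indexing="ij")
    mu_x, mu_y = np.sum(i * P), np.sum(j * P)
    sd_x = np.sqrt(np.sum((i - mu_x) ** 2 * P))
    sd_y = np.sqrt(np.sum((j - mu_y) ** 2 * P))
    nz = P > 0  # avoid log(0) in the entropy
    return {
        "autocorrelation": float(np.sum(i * j * P)),
        "contrast": float(np.sum((i - j) ** 2 * P)),
        # Undefined (division by zero) for a GLCM with a single occupied level.
        "correlation": float(np.sum((i - mu_x) * (j - mu_y) * P) / (sd_x * sd_y)),
        "cluster_prominence": float(np.sum((i + j - mu_x - mu_y) ** 4 * P)),
        "cluster_shade": float(np.sum((i + j - mu_x - mu_y) ** 3 * P)),
        "dissimilarity": float(np.sum(np.abs(i - j) * P)),
        "energy": float(np.sum(P ** 2)),
        "entropy": float(-np.sum(P[nz] * np.log(P[nz]))),
        "homogeneity": float(np.sum(P / (1.0 + np.abs(i - j)))),
        "inverse_difference_moment": float(np.sum(P / (1.0 + (i - j) ** 2))),
        "max_probability": float(P.max()),
    }
```

For a purely diagonal GLCM (every pixel pair has equal gray levels), contrast and dissimilarity are 0 while homogeneity and correlation are 1, which matches the intuition of a texture with no gray-level variation along the offset.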

In our work, we tried different parameter values when computing the GLCM. To obtain better classification results, GLCMs were generated for different offsets (from 2 to 4 pixels) and angles (0°, 45°, 90°, and 135°).
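The combinations of distances and angles above translate into (row, column) offsets for GLCM computation. The sketch below assumes the usual image convention that row indices grow downward, so an angle measured counter-clockwise from the horizontal corresponds to a negative row step. How the features from the 12 resulting GLCMs are combined (concatenated or averaged) is not stated in the text and is left open here.

```python
def glcm_offsets(distances=(2, 3, 4), angles=(0, 45, 90, 135)):
    """(drow, dcol) offsets for each distance/angle pair.

    Rows grow downward in image arrays, so 'upward' steps have negative drow.
    """
    unit_step = {0: (0, 1), 45: (-1, 1), 90: (-1, 0), 135: (-1, -1)}
    return [(d * unit_step[a][0], d * unit_step[a][1])
            for d in distances for a in angles]
```

With the default arguments this yields 12 offsets; for a distance of 2 they are (0, 2), (−2, 2), (−2, 0), and (−2, −2).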

3.5 Classification

The probabilistic neural network (PNN) is an effective machine learning technique for classification and regression. This stage belongs to the test phase: given a gray-level image, the feature extraction steps are applied, and the PNN classifier then decides the status of the image. This is a binary classification in which the classifier determines whether the given image is a normal or an abnormal CXR. To do so, the classifier uses the extracted features together with the knowledge learned previously during training.

Probabilistic Neural Network:

A probabilistic neural network (PNN) is a feedforward neural network derived from the Bayesian decision rule and a statistical algorithm called kernel Fisher discriminant analysis. One advantage of the PNN is that it is insensitive to outliers. As shown in Fig. 3, the PNN consists of four layers: the input layer, the pattern (hidden) layer, the summation layer, and the output layer. This algorithm has been used to distinguish normal images from abnormal ones [23]. Here, the PNN is used to detect abnormal X-rays: it classifies the computed feature vectors as normal or abnormal according to whether or not the chest X-ray shows manifestations of TB.
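The four-layer structure can be sketched as a Parzen-window classifier in NumPy: the pattern layer holds one Gaussian kernel unit per training vector, the summation layer averages the kernel responses per class, and the output layer picks the class with the largest estimated density. The single shared smoothing parameter `sigma`, its default value, and the equal class priors are assumptions; the text does not give these settings.

```python
import numpy as np

class PNN:
    """Minimal probabilistic neural network with a Gaussian kernel."""

    def __init__(self, sigma: float = 0.1):
        self.sigma = sigma  # kernel width (smoothing parameter), assumed value

    def fit(self, X: np.ndarray, y: np.ndarray) -> "PNN":
        # "Training" only stores the patterns: one pattern unit per training vector.
        self.X = np.asarray(X, dtype=float)
        self.y = np.asarray(y)
        self.classes = np.unique(self.y)
        return self

    def predict(self, X: np.ndarray) -> np.ndarray:
        X = np.asarray(X, dtype=float)
        # Pattern layer: Gaussian kernel of the squared distance to every stored vector.
        d2 = ((X[:, None, :] - self.X[None, :, :]) ** 2).sum(axis=2)
        k = np.exp(-d2 / (2.0 * self.sigma ** 2))
        # Summation layer: mean kernel response per class (equal priors assumed).
        scores = np.stack([k[:, self.y == c].mean(axis=1) for c in self.classes], axis=1)
        # Output layer: class with the largest estimated density.
        return self.classes[np.argmax(scores, axis=1)]
```

Because `fit` only stores the training vectors, training is essentially instantaneous, which is consistent with the short training and retraining times attributed to the PNN; the trade-off is that prediction cost grows with the size of the training set. Feature vectors should be scaled to comparable ranges before use, since a single `sigma` is shared by all features.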

4 Experiments

In this work, we used two datasets, the Montgomery County chest X-ray set (MC) and the Shenzhen chest X-ray set [24], to assess the proposed lung boundary detection and classification method. The MC set was collected in collaboration with the Department of Health and Human Services of Montgomery County, Maryland, USA. It contains 138 X-ray images from Montgomery County's tuberculosis screening program, of which 80 are normal and 58 show manifestations of tuberculosis (TB). The Shenzhen set was collected in collaboration with Shenzhen No. 3 People's Hospital, Guangdong Medical College, Shenzhen, China. Its chest X-rays come from outpatient clinics and were captured as part of the daily hospital routine within a one-month period using a Philips DR Digital Diagnose system. The set contains 662 frontal chest X-rays, of which 326 are normal cases and 336 show manifestations of TB. The X-rays are provided in PNG and DICOM format. The image size is either 4,020 × 4,892 or 4,892 × 4,020 pixels for the MC set and about 3K × 3K pixels for the Shenzhen set. The proposed system was implemented in MATLAB 2016 on Windows 7 with an Intel Core i5-2400 CPU @ 1.6 GHz.

For lung boundary detection, we compute the figure of merit (FOM), the Jaccard similarity coefficient Ω, sensitivity, and accuracy, and compare them with the works in [2, 6, 12, 15], and [20]; our method outperforms all the other methods. Our qualitative results are shown in Fig. 4.

To assess the reliability and correctness of the lung boundary detected by the proposed method, the following measures were used: Pratt's figure of merit (FOM), the Jaccard similarity coefficient Ω, sensitivity, and accuracy. These measures are based on the values TP, TN, FP, FN, and RECnt, where TP is the number of pixels correctly detected as lung boundary, TN is the number of pixels correctly detected as background, FP is the number of background pixels incorrectly detected as lung boundary, FN is the number of lung boundary pixels incorrectly detected as background, and RECnt is the number of edge pixels in the reference map created by an expert.

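Pratt's figure of merit, also called Pratt's Merit Index (PMI), is another useful measure for evaluating the performance of edge detectors. It uses the distances between detected edge pixels and their nearest reference edge pixels to quantify the difference between the contours. The PMI, which evaluates contour similarity, is defined as

$$PMI = \frac{1}{\max(\mathrm{RECnt},\,\mathrm{AECnt})}\sum_{i=1}^{\mathrm{AECnt}}\frac{1}{1+\alpha d_i^{2}}$$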

where RECnt and AECnt are the numbers of reference (ideal) and actual (detected) edge pixels, d_i is the distance between the i-th detected edge pixel and the nearest reference edge pixel, and α is a calibration constant (α = 1/9 was used, the optimal value established by Pratt [25]).
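Pratt's figure of merit can be computed from two boolean edge maps as sketched below. The brute-force nearest-neighbour search over all pixel pairs is an implementation choice for clarity; for full-resolution radiographs, a distance transform of the reference map would be far cheaper. Returning 0 when either map is empty is an assumed convention.

```python
import numpy as np

def pratt_fom(detected: np.ndarray, reference: np.ndarray, alpha: float = 1.0 / 9.0) -> float:
    """Pratt's figure of merit between two boolean edge maps of equal shape."""
    det = np.argwhere(detected)   # AECnt detected edge pixels (row, col)
    ref = np.argwhere(reference)  # RECnt reference edge pixels (row, col)
    if len(det) == 0 or len(ref) == 0:
        return 0.0
    # d_i: distance from each detected edge pixel to the nearest reference edge pixel.
    diff = det[:, None, :] - ref[None, :, :]
    d = np.sqrt((diff ** 2).sum(axis=2)).min(axis=1)
    return float(np.sum(1.0 / (1.0 + alpha * d ** 2)) / max(len(det), len(ref)))
```

Identical maps give 1.0; a detected contour shifted by one pixel everywhere gives 1 / (1 + 1/9) = 0.9.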

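The PMI reaches its maximum value of 1 when the detected and reference contours are identical and decreases as they diverge. Overlap metrics measure the overlap between the algorithm's segmentation and the reference. The most widely used is the Jaccard similarity coefficient, defined as

$$\Omega = \frac{|S \cap R|}{|S \cup R|} = \frac{TP}{TP + FP + FN}$$

where S is the segmented region and R is the reference region.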

Accuracy is the proportion of correct results, i.e., the fraction of pixels that have been correctly classified. It ranges from 0 to 1; an accuracy of 1 means that the result coincides exactly with the reference. Accuracy is defined as

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

Sensitivity, or the true positive rate, is the fraction of object pixels that are correctly identified as object pixels. It ranges from 0 to 1, and the maximum value is optimal. Sensitivity is defined as

$$\mathrm{Sensitivity} = \frac{TP}{TP + FN}$$
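These overlap measures follow directly from the TP, TN, FP, and FN counts of two binary masks, as in the NumPy sketch below. The Dice coefficient, reported in the conclusion alongside Jaccard, is included for completeness; it is related to Jaccard by D = 2J / (1 + J). The sketch does not guard against empty masks, for which Jaccard, Dice, and sensitivity are undefined.

```python
import numpy as np

def overlap_metrics(seg: np.ndarray, ref: np.ndarray) -> dict:
    """Jaccard, Dice, accuracy, and sensitivity of a binary segmentation vs. a reference."""
    seg = seg.astype(bool)
    ref = ref.astype(bool)
    tp = int(np.sum(seg & ref))     # object pixels found
    tn = int(np.sum(~seg & ~ref))   # background pixels kept as background
    fp = int(np.sum(seg & ~ref))    # background pixels wrongly marked as object
    fn = int(np.sum(~seg & ref))    # object pixels missed
    return {
        "jaccard": tp / (tp + fp + fn),
        "dice": 2 * tp / (2 * tp + fp + fn),
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "sensitivity": tp / (tp + fn),
    }
```

As a quick consistency check, a Jaccard value of 0.915 corresponds to a Dice value of about 0.956, close to the averages of 0.915 and 0.955 reported in the conclusion (averages of per-image values need not satisfy the relation exactly).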

Table 1 compares several lung boundary detection techniques on the sample images shown in Fig. 4.

Two experiments were conducted to evaluate the performance of the classifier: the first used the dataset 1 features and the second used the dataset 2 features. The results are shown in Table 2 and Fig. 5.

From Table 2, we conclude that dataset 2 gives better results than dataset 1: the accuracy is 96.73% for dataset 2 versus 95.93% for dataset 1. The percentage of correctly classified normal chest X-ray images increased from 95.94% for dataset 1 to 96.89% for dataset 2, and the percentage of correctly classified abnormal chest X-ray images increased from 94.98% to 95.77%. Correspondingly, the percentage of misclassified normal images decreased from 4.06% to 3.11%, and the percentage of misclassified abnormal images decreased from 5.02% to 4.23%. Thus, with dataset 2 the PNN detects normal and abnormal chest X-ray images more reliably.
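The per-class rates reported above come from a confusion matrix: for each true class, the correct and incorrect percentages sum to 100%, and the overall accuracy is a class-size-weighted average of the per-class correct rates. The sketch below shows this arithmetic; the counts in the usage example are hypothetical and are not the paper's results.

```python
def class_rates(confusion: dict) -> tuple:
    """Per-class (correct %, incorrect %) and overall accuracy %.

    `confusion[true_class][predicted_class]` holds image counts.
    """
    rates = {}
    for cls, row in confusion.items():
        total = sum(row.values())
        correct = row[cls]
        rates[cls] = (100.0 * correct / total, 100.0 * (total - correct) / total)
    all_total = sum(sum(row.values()) for row in confusion.values())
    accuracy = 100.0 * sum(confusion[c][c] for c in confusion) / all_total
    return rates, accuracy

# Hypothetical counts for illustration only.
example = {"normal": {"normal": 96, "abnormal": 4},
           "abnormal": {"normal": 5, "abnormal": 95}}
rates, accuracy = class_rates(example)
```

With these hypothetical counts, the normal class has rates of 96% correct and 4% incorrect, the abnormal class 95% and 5%, and the overall accuracy is 95.5%.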

5 Conclusion

In this study, we developed a methodology for building an automated system that detects lung boundaries and classifies CXR images. The proposed methodology consists of three main stages. First, the CXR image is enhanced by reducing noise and adjusting the contrast. The lung areas are then detected. Finally, we compute the CXR feature set, which after optimization serves as input to a PNN classifier that classifies the input image as normal or abnormal. The proposed method detects the lung area quickly, and its accuracy is consistent with that of other methods in terms of the Jaccard and Dice similarity coefficients, whose average values are 0.915 and 0.955, respectively. The PNN-based classifier shows good results, with moderate detection accuracy on CXR images: the correct classification rate is 94.98–95.77%, depending on the dataset. About 4–5% of images with pathology are classified incorrectly, which is not sufficient for full automation. However, a system based on the proposed methods can serve as an auxiliary tool for medical professionals.

References

Ahmad, W.S.H.M.W., Zaki, W.M.D.W., Fauzi, M.F.A., Tan, W.H.: Classification of infection and fluid regions in chest x-ray images. In: 2016 International Conference on Digital Image Computing: Techniques and Applications (DICTA) (2016)

Candemir, S., et al.: Lung segmentation in chest radiographs using anatomical atlases with nonrigid registration. IEEE Trans. Med. Imaging 33, 577–590 (2014)

Carrascal, F.M., Carreira, J.M., Souto, M., Tahoces, P.G., Gómez, L., Vidal, J.J.: Automatic calculation of total lung capacity from automatically traced lung boundaries in postero-anterior and lateral digital chest radiographs. Med. Phys. 25, 1118–1131 (1998)

Meyers, P.H., Nice, C.M., Becker, H.C., Nettleton, W.J., Sweeney, J.W., Meckstroth, G.R.: Automated computer analysis of radiographic images. Radiology 83, 1029–1034 (1964)

Coppini, G., Miniati, M., Monti, S., Paterni, M., Favilla, R., Ferdeghini, E.M.: A computer-aided diagnosis approach for emphysema recognition in chest radiography. Med. Eng. Phys. 35, 63–73 (2013)

Li, X., Chen, L., Chen, J.: A visual saliency-based method for automatic lung regions extraction in chest radiographs. In: 2017 14th International Computer Conference on Wavelet Active Media Technology and Information Processing (ICCWAMTIP) (2017)

Iakovidis, D.K.: Versatile approximation of the lung field boundaries in chest radiographs in the presence of bacterial pulmonary infections. In: 2008 8th IEEE International Conference on BioInformatics and BioEngineering (2008)

Gurney, K.: An Introduction to Neural Networks. CRC Press, Boca Raton (2014)

Anwar, S.M., Majid, M., Qayyum, A., Awais, M., Alnowami, M., Khan, M.K.: Medical image analysis using convolutional neural networks: a review. J. Med. Syst. 42, 226 (2018)

Wang, C.: Segmentation of multiple structures in chest radiographs using multi-task fully convolutional networks. In: Sharma, P., Bianchi, F.M. (eds.) SCIA 2017. LNCS, vol. 10270, pp. 282–289. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-59129-2_24

Kieu, P.N., Tran, H.S., Le, T.H., Le, T., Nguyen, T.T.: Applying multi-CNNs model for detecting abnormal problem on chest x-ray images. In: 2018 10th International Conference on Knowledge and Systems Engineering (KSE) (2018)

Al-Kafri, A.S., et al.: Boundary delineation of MRI images for lumbar spinal stenosis detection through semantic segmentation using deep neural networks. IEEE Access 7, 43487–43501 (2019)

Kafri, A.S.A., et al.: Segmentation of lumbar spine MRI images for stenosis detection using patch-based pixel classification neural network. In: 2018 IEEE Congress on Evolutionary Computation (CEC) (2018)

Jaeger, S., Karargyris, A., Antani, S., Thoma, G.: Detecting tuberculosis in radiographs using combined lung masks. In: 2012 Annual International Conference of the IEEE Engineering in Medicine and Biology Society (2012)

Priya, E., Srinivasan, S.: Automated object and image level classification of TB images using support vector neural network classifier. Biocybern. Biomed. Eng. 36, 670–678 (2016)

Candemir, S., Antani, S.: A review on lung boundary detection in chest x-rays. Int. J. Comput. Assist. Radiol. Surg. 14, 563–576 (2019)

Santosh, K.C., Vajda, S., Antani, S., Thoma, G.R.: Edge map analysis in chest x-rays for automatic pulmonary abnormality screening. Int. J. Comput. Assist. Radiol. Surg. 11, 1637–1646 (2016)

Hogeweg, L., et al.: Automatic detection of tuberculosis in chest radiographs using a combination of textural, focal, and shape abnormality analysis. IEEE Trans. Med. Imaging 34, 2429–2442 (2015)

Vajda, S., et al.: Feature selection for automatic tuberculosis screening in frontal chest radiographs. J. Med. Syst. 42, 146 (2018)

Kumbhar, U., Patil, V., Rudrakshi, S.: Enhancement of medical images using image processing in MATLAB. Int. J. Eng. Res. Technol. 2, 2359–2364 (2013)

Saad, M.N., Muda, Z., Ashaari, N.S., Hamid, H.A.: Image segmentation for lung region in chest x-ray images using edge detection and morphology. In: 2014 IEEE International Conference on Control System, Computing and Engineering, ICCSCE 2014 (2014)

Gómez, W., Pereira, W.C.A., Infantosi, A.F.C.: Analysis of co-occurrence texture statistics as a function of gray-level quantization for classifying breast ultrasound. IEEE Trans. Med. Imaging 31, 1889–1899 (2012)

Nandhagopal, N., Gandhi, K.R., Sivasubramanian, R.: Probabilistic neural network based brain tumor detection and classification system. Res. J. Appl. Sci. Eng. Technol. 10, 1347–1357 (2015)

Jaeger, S., Candemir, S., Antani, S., Wáng, Y.X.J., Lu, P.X., Thoma, G.: Two public chest x-ray datasets for computer-aided screening of pulmonary diseases. Quant. Imaging Med. Surg. 4(6), 475 (2014)

Abdou, I., Pratt, W.: Quantitative design and evaluation of enhancement/thresholding edge detectors. Proc. IEEE 67, 753–763 (1979)


Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Hamad, Y.A., Simonov, K., Naeem, M.B. (2020). Lung Boundary Detection and Classification in Chest X-Rays Images Based on Neural Network. In: Khalaf, M., Al-Jumeily, D., Lisitsa, A. (eds) Applied Computing to Support Industry: Innovation and Technology. ACRIT 2019. Communications in Computer and Information Science, vol 1174. Springer, Cham. https://doi.org/10.1007/978-3-030-38752-5_1

DOI: https://doi.org/10.1007/978-3-030-38752-5_1

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-38751-8

Online ISBN: 978-3-030-38752-5

eBook Packages: Computer Science, Computer Science (R0)