Abstract

Epilepsy occurs when the localized electrical activity of neurons suffers from an imbalance. One of the most suitable methods for diagnosing and monitoring it is the analysis of electroencephalographic (EEG) signals. Although there is a wide range of alternatives for characterizing and classifying EEG signals for epilepsy analysis, many key aspects related to accuracy and physiological interpretation are still open issues. This work performs an exploratory study to identify the most adequate among the frequently used methods for characterizing and classifying epileptic seizures. To this end, a comparative study is carried out on several subsets of features using four representative classifiers: Linear Discriminant Analysis (LDA), Quadratic Discriminant Analysis (QDA), K-Nearest Neighbor (KNN), and Support Vector Machine (SVM). The framework uses a well-known epilepsy dataset and runs several experiments for two-class and three-class classification problems. The results suggest that Discrete Wavelet Transform (DWT) decomposition with SVM is the most suitable combination.

Keywords

- Electroencephalogram (EEG)

- Epilepsy diagnosis

- K-Nearest Neighbors (KNN)

- Linear Discriminant Analysis (LDA)

- Quadratic Discriminant Analysis (QDA)

- Support Vector Machine (SVM)

1 Introduction

Epilepsy has become the third most common neurological disorder after stroke and dementia. According to the World Health Organization (WHO), epilepsy affects 0.5–1.5% of the world population, mainly children under 10 and people over 65, and it is more common in developing countries and disadvantaged socioeconomic classes [2]. Seizures are the hallmark of an epilepsy diagnosis. They are recurrent, though infrequent, and unprovoked events caused by the synchronized electrical discharge of a large number of neurons [9]. Since epilepsy occurs when the localized electrical activity of neurons suffers from an imbalance, analyzing electroencephalographic (EEG) signals is one of the most suitable methods to diagnose this disorder.

Most computer-aided systems for diagnosing epilepsy use EEG because it allows rapid visual inspection of seizures, not only while they are occurring but also before and between seizures [12]. The common procedure for developing automatic diagnostic-assistance systems based on EEG has five stages (citation): EEG signal acquisition, pre-processing, signal characterization, classification, and in-context interpretation (visualization). Zhou and colleagues [13] developed an epileptic seizure detector that uses the raw EEG signals (temporal approach), while Tsipouras [10] studied epilepsy classification using the spectral information of EEG signals.

This work aims to contribute an exploratory study of feature extraction and classification techniques for seizure detection. The proposed framework includes the typical stages described above. First, a simple amplitude normalization is applied to pre-process the signals. Subsequently, features are extracted using statistical measures on both the original signals and a spectral transformation thereof (Discrete Wavelet Transform, DWT). Afterward, a set of features is chosen by applying feature selection methods such as BestFirst and Ranker. Then, the selected features are classified using four of the most representative classification approaches for EEG: Linear Discriminant Analysis (LDA), Quadratic Discriminant Analysis (QDA), K-Nearest Neighbor (KNN) and Support Vector Machine (SVM). The framework uses the “Epileptic Seizure Recognition Data Set” (see Note 1) to run several tests that explore the proposed classifiers and features as thoroughly as possible. The outcome of the study points to the DWT features and the SVM classifier as the most suitable combination.

2 Materials and Methods

2.1 Dataset

The “Epileptic Seizure Recognition Data Set” comprises recordings from 500 individuals, each with 4097 data points covering 23.6 seconds. Each 4097-point recording is divided into 23 chunks of 178 data points, each corresponding to 1 second, and the chunks are shuffled [8]. The dataset therefore contains a matrix of dimension \(11500 \times 178\). The last column represents the labels (1, 2, 3, 4, 5) as follows:

1. Seizure activity

2. EEG signal from the area where the tumor is

3. EEG activity from the healthy brain area

4. EEG signal from eyes closed

5. EEG signal from eyes open.
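The chunking arithmetic described above can be checked with a short sketch. It assumes each recording is a plain list of 4097 samples and that samples left over after the last full chunk are discarded; the function name `split_into_chunks` and the placeholder data are hypothetical, not part of the dataset tooling.

```python
import random

POINTS_PER_RECORDING = 4097  # samples per individual (23.6 s)
CHUNK_SIZE = 178             # samples per 1-second chunk
N_INDIVIDUALS = 500

def split_into_chunks(recording, chunk_size=CHUNK_SIZE):
    """Split one recording into consecutive, non-overlapping chunks.

    Assumption: samples that do not fill a whole chunk are dropped.
    """
    n_chunks = len(recording) // chunk_size
    return [recording[i * chunk_size:(i + 1) * chunk_size] for i in range(n_chunks)]

# Placeholder recordings standing in for the real EEG signals.
recordings = [[float(t) for t in range(POINTS_PER_RECORDING)]
              for _ in range(N_INDIVIDUALS)]

rows = [chunk for rec in recordings for chunk in split_into_chunks(rec)]
random.Random(0).shuffle(rows)  # chunks are shuffled across individuals

n_rows, n_cols = len(rows), len(rows[0])
print(n_rows, n_cols)  # 11500 178
```

Since 23 × 178 = 4094, three samples per recording do not fit into a full chunk under this reading.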

2.2 EEG Pipeline

Pre-processing: The signals are normalized to remove offset levels using Eq. 1.

where S is the signal, \(max \left| S \right| \) is the maximum absolute value of the signal, and \(\bar{S}\) is the mean of the signal.
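As a rough illustration of this normalization, the sketch below subtracts the mean and divides by the maximum absolute value. The exact form of Eq. 1 is not reproduced in this text, so the formula \(S_n = (S - \bar{S}) / max \left| S \right|\) is an assumption built only from the three symbols defined above, and `normalize` is a hypothetical helper name.

```python
def normalize(signal):
    """Remove the offset (mean) and scale by the maximum absolute value.

    Assumed form: S_n = (S - mean(S)) / max|S|.
    """
    mean = sum(signal) / len(signal)
    peak = max(abs(s) for s in signal)
    if peak == 0:
        return [0.0 for _ in signal]  # all-zero signal: nothing to scale
    return [(s - mean) / peak for s in signal]

example = [2.0, 4.0, 6.0, 8.0]
normalized = normalize(example)
print(normalized)  # [-0.375, -0.125, 0.125, 0.375]
```

After this step the signal has zero mean, which is what removing the offset level amounts to.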

Signal Decomposition: The Discrete Wavelet Transform (DWT) recursively decomposes a signal into two sub-signals (approximation and detail) with lower resolution in frequency, known as coefficients [6]. This work uses the Daubechies family (order four) through the MATLAB function wavedec.
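The recursive split into approximation and detail coefficients can be sketched in a few lines. To stay dependency-free, this sketch swaps in the Haar wavelet rather than the order-four Daubechies wavelet used in this work, so the coefficient values differ from what wavedec would return; the function names are hypothetical.

```python
import math

def haar_step(signal):
    """One DWT level with the Haar wavelet: returns (approximation, detail)."""
    if len(signal) % 2:  # pad odd-length input by repeating the last sample
        signal = signal + [signal[-1]]
    s = math.sqrt(2.0)
    approx = [(signal[i] + signal[i + 1]) / s for i in range(0, len(signal), 2)]
    detail = [(signal[i] - signal[i + 1]) / s for i in range(0, len(signal), 2)]
    return approx, detail

def haar_wavedec(signal, levels):
    """Multi-level decomposition, ordered coarsest first: [cA_n, cD_n, ..., cD_1]."""
    coeffs = []
    approx = list(signal)
    for _ in range(levels):
        # Only the approximation is decomposed again at the next level.
        approx, detail = haar_step(approx)
        coeffs.insert(0, detail)
    coeffs.insert(0, approx)
    return coeffs

signal = [4.0, 6.0, 10.0, 12.0, 8.0, 6.0, 5.0, 5.0]
coeffs = haar_wavedec(signal, levels=2)
print([len(c) for c in coeffs])  # [2, 2, 4]
```

Each level halves the length of the approximation, which is why the detail sub-signals at coarser levels are shorter.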

Characterization: Following previous work on EEG signals [1, 11], the following features are used:

| Number | Type | Description |
|---|---|---|
| \(x^{(1)} \cdots x^{(15)}\) | Temporal | Statistical features; Entropy |
| \(x^{(16)} \cdots x^{(27)}\) | Morphological | Area under the curve; Amplitude change; Energy |
| \(x^{(28)} \cdots x^{(35)}\) | Spectral | Fourier transform (Best features) |
| \(x^{(36)} \cdots x^{(221)}\) | Representative | DWT (Temporal and morphological features) |

This results in a feature matrix of dimension \(11500 \times 221\), where 221 is the total number of features, normalized through Eq. 1.
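A few of the temporal and morphological features named in the table can be sketched as follows. The exact definitions used in this work are not given here, so every formula below is an assumption: entropy is taken as the Shannon entropy of a 10-bin histogram, area as the trapezoidal area under the absolute signal, and amplitude change as the peak-to-peak range.

```python
import math

def shannon_entropy(signal, bins=10):
    """Entropy (bits) of a histogram of the samples; the bin count is an assumption."""
    lo, hi = min(signal), max(signal)
    if hi == lo:
        return 0.0
    counts = [0] * bins
    for s in signal:
        idx = min(int((s - lo) / (hi - lo) * bins), bins - 1)
        counts[idx] += 1
    n = len(signal)
    return -sum(c / n * math.log2(c / n) for c in counts if c)

def extract_features(signal):
    """Return a small dictionary of illustrative features for one chunk."""
    n = len(signal)
    mean = sum(signal) / n
    variance = sum((s - mean) ** 2 for s in signal) / n
    return {
        "mean": mean,
        "std": math.sqrt(variance),
        "entropy": shannon_entropy(signal),
        # Trapezoidal area under |x| with unit sample spacing.
        "area": sum((abs(a) + abs(b)) / 2 for a, b in zip(signal, signal[1:])),
        # Peak-to-peak range, one possible reading of "amplitude change".
        "amplitude_change": max(signal) - min(signal),
        "energy": sum(s * s for s in signal),
    }

features = extract_features([0.0, 1.0, 0.0, -1.0, 0.0, 1.0, 0.0, -1.0])
print(features["energy"], features["area"])  # 4.0 3.5
```

Applying the same function to each DWT sub-signal would be one way to obtain the "temporal and morphological features" of the DWT row.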

Feature Selection: In order to use the most significant features, two methods from Weka [5] are used to create a smaller subset:

(i) CfsSubsetEval as the attribute evaluator with BestFirst as the search method [4], applied to the whole feature matrix.

(ii) InfoGainAttributeEval as the attribute evaluator with Ranker as the search method, applied to the outcome of the previous step.

This yields a final feature matrix of size \(11500 \times 9\), where 9 is the total number of features used in this work. Most of these features come from the DWT subset.
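The idea behind ranking features by information gain can be illustrated with a small sketch. It is not Weka's implementation: the equal-width binning with two bins is a simplifying assumption and not necessarily the discretization Weka applies, and the feature names and values are made up for the example.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy (bits) of a list of class labels."""
    n = len(labels)
    return -sum(c / n * math.log2(c / n) for c in Counter(labels).values())

def information_gain(feature_values, labels, bins=2):
    """H(class) - H(class | binned feature); equal-width binning is an assumption."""
    lo, hi = min(feature_values), max(feature_values)
    width = (hi - lo) / bins
    if width == 0:
        return 0.0  # a constant feature carries no information
    binned = [min(int((v - lo) / width), bins - 1) for v in feature_values]
    conditional = 0.0
    for b in set(binned):
        subset = [y for x, y in zip(binned, labels) if x == b]
        conditional += len(subset) / len(labels) * entropy(subset)
    return entropy(labels) - conditional

def rank_features(columns, labels):
    """Return feature names sorted by decreasing information gain, plus the gains."""
    gains = {name: information_gain(vals, labels) for name, vals in columns.items()}
    return sorted(gains, key=gains.get, reverse=True), gains

labels = [1, 1, 1, 0, 0, 0]
columns = {  # synthetic values, for illustration only
    "informative": [0.9, 0.8, 1.0, 0.1, 0.2, 0.0],
    "noisy": [0.9, 0.1, 0.7, 0.8, 0.2, 0.3],
}
ranking, gains = rank_features(columns, labels)
print(ranking)  # ['informative', 'noisy']
```

A ranker of this kind only orders the features; how many to keep (9 in this work) is a separate choice.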

2.3 Classification

These features are used to train and evaluate four different classifiers. To this end, representative methods of each type are used: distance-based (k-NN), model-based (LDA, QDA), and data-driven (SVM) [3, 7]:

- Linear Discriminant Analysis (LDA): LDA builds a separating hyperplane from a covariance matrix shared by all classes and assigns a new sample to the class with the maximum estimated probability.

- Quadratic Discriminant Analysis (QDA): QDA is a variant of LDA in which an individual covariance matrix is estimated for every class of observations.

- K-Nearest Neighbor (k-NN): k-NN assigns the class label with the largest posterior probability among the k nearest neighbors, which are found using a distance metric.

- Support Vector Machine (SVM): SVM finds the optimal hyperplane (in an N-dimensional space) that separates the data by maximizing the margin between the classes.
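Of the four classifiers, k-NN is the simplest to write out in full. The sketch below uses a majority vote, which is equivalent to picking the class with the largest vote fraction among the k neighbors. The Euclidean distance and k = 3 are assumptions chosen for the example, not the settings used in this work, and the toy points are synthetic.

```python
import math
from collections import Counter

def knn_predict(train_x, train_y, query, k=3):
    """Label a query point by majority vote among its k nearest training points."""
    # Sort training points by Euclidean distance to the query.
    distances = sorted(
        (math.dist(x, query), y) for x, y in zip(train_x, train_y)
    )
    votes = Counter(label for _, label in distances[:k])
    return votes.most_common(1)[0][0]

# Two well-separated synthetic clusters.
train_x = [(0.0, 0.0), (0.1, 0.2), (0.2, 0.1), (1.0, 1.0), (0.9, 1.1), (1.1, 0.9)]
train_y = [0, 0, 0, 1, 1, 1]

near_first = knn_predict(train_x, train_y, (0.15, 0.1))
near_second = knn_predict(train_x, train_y, (0.95, 1.0))
print(near_first, near_second)  # 0 1
```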

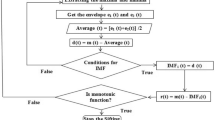

For all the experiments (see the next section), each classifier was trained over 10 iterations, using 80% of the data for training and the remaining 20% for testing. All experiments were run in MATLAB using the classifier settings shown in Table 1. Finally, Fig. 1 graphically summarizes the methodology used in this research.
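One way to read this protocol is as ten independent random 80/20 splits, each producing one test error rate per classifier. That reading is an interpretation, and the threshold classifier and toy data below are hypothetical stand-ins used only to make the sketch runnable.

```python
import random

def holdout_split(n_samples, train_fraction=0.8, rng=random):
    """Return shuffled (train_indices, test_indices) for one iteration."""
    indices = list(range(n_samples))
    rng.shuffle(indices)
    cut = int(round(n_samples * train_fraction))
    return indices[:cut], indices[cut:]

def repeated_holdout(features, labels, fit, predict, iterations=10, seed=0):
    """Test error rate for each of several random 80/20 splits."""
    rng = random.Random(seed)
    errors = []
    for _ in range(iterations):
        train_idx, test_idx = holdout_split(len(labels), 0.8, rng)
        model = fit([features[i] for i in train_idx], [labels[i] for i in train_idx])
        wrong = sum(predict(model, features[i]) != labels[i] for i in test_idx)
        errors.append(wrong / len(test_idx))
    return errors

# Hypothetical stand-in classifier: threshold halfway between the class means.
def fit_threshold(xs, ys):
    mean0 = sum(x[0] for x, y in zip(xs, ys) if y == 0) / ys.count(0)
    mean1 = sum(x[0] for x, y in zip(xs, ys) if y == 1) / ys.count(1)
    return (mean0 + mean1) / 2

def predict_threshold(threshold, x):
    return 1 if x[0] > threshold else 0

features = [[i / 10] for i in range(20)]  # synthetic one-dimensional data
labels = [0] * 10 + [1] * 10
errors = repeated_holdout(features, labels, fit_threshold, predict_threshold)
print(len(errors))  # 10
```

The ten error rates per classifier are the kind of samples a rank-based test can then compare across classifiers.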

3 Results and Discussion

This work uses the Dunn test (Kruskal-Wallis with Bonferroni correction) to compare the classifiers. To evaluate classifier performance as thoroughly as possible, the final matrix with the selected features is used in five experiments. All experiments are performed with respect to target class 1 (seizure activity), which is the most important class for this research. Additionally, to evaluate the capacity of the methodology, the five classes of the original dataset were restructured into binary and three-class cases instead of the more complex and challenging multiclass case. The class combinations are described as follows:

3.1 Experiment 1

In this experiment, classes 2, 3, 4 and 5 are merged into a single class, with class 1 as the target for classification. As a result, there are significant differences (chi-squared = 30.8512, df = 3) between each of LDA and QDA and each of KNN and SVM. SVM achieves the lowest error rate, closely followed by KNN. Figure 2 shows the result.
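The chi-squared value with df = 3 corresponds to a Kruskal-Wallis statistic computed over four groups, one per classifier. A minimal sketch of that statistic follows; it omits the tie correction and the pairwise Dunn comparisons, and the error rates in the example are synthetic values chosen for illustration, not results from this study.

```python
def kruskal_wallis_h(groups):
    """Kruskal-Wallis H statistic and degrees of freedom (no tie correction)."""
    pooled = sorted((value, g) for g, values in enumerate(groups) for value in values)
    n_total = len(pooled)
    rank_sums = [0.0] * len(groups)
    i = 0
    while i < n_total:
        # Find the run of tied values starting at position i.
        j = i
        while j < n_total and pooled[j][0] == pooled[i][0]:
            j += 1
        average_rank = (i + 1 + j) / 2  # mean of ranks i+1 .. j
        for _, g in pooled[i:j]:
            rank_sums[g] += average_rank
        i = j
    h = 12.0 / (n_total * (n_total + 1)) * sum(
        r * r / len(values) for r, values in zip(rank_sums, groups)
    ) - 3.0 * (n_total + 1)
    return h, len(groups) - 1

# Synthetic error rates for four classifiers, three iterations each.
lda = [0.10, 0.11, 0.12]
qda = [0.13, 0.14, 0.15]
knn = [0.04, 0.05, 0.06]
svm = [0.01, 0.02, 0.03]
h, df = kruskal_wallis_h([lda, qda, knn, svm])
print(round(h, 4), df)  # 10.3846 3
```

Under the null hypothesis H is approximately chi-squared distributed with df degrees of freedom, which is why the statistic is reported as a chi-squared value.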

3.2 Experiment 2

In this experiment, we tried to classify the region where the seizure occurs; therefore, only the seizure (class 1) and tumor localization (class 2) classes are used, and the rest of the classes are removed from the dataset. We found that LDA differs significantly from SVM, while QDA differs significantly from both SVM and KNN (chi-squared = 34.929, df = 3). SVM again presents the lowest error rate, closely followed by KNN. The worst of them is QDA. Figure 3 shows the result.

3.3 Experiment 3

Here, we intended to distinguish the seizure activity from the area where the tumor is located, so classes 1 and 2 are kept as two individual classes and the remaining classes are merged into a single one. This experiment gives results similar to the previous one: QDA is the worst classifier, with a significant difference from both SVM and KNN, while LDA differs significantly only from SVM. SVM again achieves the lowest error rate. Figure 4 shows the result.

3.4 Experiment 4

In this experiment, classes 1, 2 and 3 are kept as three individual classes, and the rest of the classes are removed from the dataset. Class 3 thus makes it possible to distinguish the healthy brain area. The results suggest that SVM achieves the lowest error rate and differs significantly from the other classifiers (chi-squared = 31.7648, df = 3). QDA, in turn, obtains the highest error rate. Figure 5 shows the result.

3.5 Experiment 5

Continuing with the multiclass problem, classes 1 and 2 were merged into a single class, class 3 was kept individually, and classes 4 and 5 were merged into another single class. The results again show SVM as the best classifier. There are significant differences between LDA and SVM, and between QDA and both SVM and KNN. Again, the worst one is QDA. Figure 6 shows the result.

Finally, Fig. 7 compares the classifiers across the five experiments, showing that SVM behaves as the best classifier.
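The five class arrangements used in Experiments 1 to 5 can be written down as label mappings, which makes the merged and removed classes explicit. The merged-class names (`rest`, `eyes`, and so on) and the `relabel` helper are hypothetical; only the groupings themselves come from the experiment descriptions.

```python
# Original label -> experiment label; labels absent from a mapping are removed.
EXPERIMENTS = {
    1: {1: "seizure", 2: "rest", 3: "rest", 4: "rest", 5: "rest"},
    2: {1: "seizure", 2: "tumor"},
    3: {1: "seizure", 2: "tumor", 3: "rest", 4: "rest", 5: "rest"},
    4: {1: "seizure", 2: "tumor", 3: "healthy"},
    5: {1: "seizure_or_tumor", 2: "seizure_or_tumor", 3: "healthy",
        4: "eyes", 5: "eyes"},
}

def relabel(rows, labels, experiment):
    """Keep only the rows used in an experiment and map their labels."""
    mapping = EXPERIMENTS[experiment]
    kept = [(row, mapping[y]) for row, y in zip(rows, labels) if y in mapping]
    return [r for r, _ in kept], [y for _, y in kept]

rows = ["a", "b", "c", "d", "e"]  # placeholders for feature vectors
labels = [1, 2, 3, 4, 5]

exp2_rows, exp2_labels = relabel(rows, labels, 2)
print(exp2_rows, exp2_labels)  # ['a', 'b'] ['seizure', 'tumor']

# Number of distinct classes per experiment.
class_counts = {e: len(set(m.values())) for e, m in EXPERIMENTS.items()}
print(class_counts)  # {1: 2, 2: 2, 3: 3, 4: 3, 5: 3}
```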

4 Conclusions and Future Work

The comparison of the explored techniques suggests that features from DWT decomposition combined with a Support Vector Machine are the best alternative for building an epilepsy-driven EEG analysis computer-aided system. The aim of combining several classes was to study the performance of the classifiers, showing that for binary classification both KNN and SVM are good alternatives, while for the three-class problem QDA shows the worst performance and SVM the best. Indeed, the seizure data form classes that are hard to separate; therefore, a data-driven method like SVM with a kernel solution was necessary. Other alternatives should be considered in future studies, among them feature extraction and deep learning approaches. Moreover, given the large number of available methods (dozens), the study needs to be scaled up with more performance measures to allow comparison with other studies.

Notes

1. Available at the UCI Machine Learning Repository, https://archive.ics.uci.edu/ml

References

1. Bajaj, V., Pachori, R.B.: EEG signal classification using empirical mode decomposition and support vector machine. In: Deep, K., Nagar, A., Pant, M., Bansal, J.C. (eds.) Proceedings of the International Conference on Soft Computing for Problem Solving (SocProS 2011) December 20-22, 2011. AISC, vol. 131, pp. 623–635. Springer, New Delhi (2012). https://doi.org/10.1007/978-81-322-0491-6_57

2. Christensen, J., Sidenius, P.: Epidemiology of epilepsy in adults: implementing the ILAE classification and terminology into population-based epidemiologic studies. Epilepsia 53, 14–17 (2012)

3. Duda, R.O., Hart, P.E., Stork, D.G.: Pattern Classification, 2nd edn. Wiley, New York (2000)

4. Hall, M.A.: Correlation-based feature subset selection for machine learning. Ph.D. thesis, University of Waikato, Hamilton, New Zealand (1999)

5. Hall, M., Frank, E., Holmes, G., Pfahringer, B., Reutemann, P., Witten, I.H.: The WEKA data mining software: an update. SIGKDD Explor. 11(1), 10–18 (2009)

6. Ocak, H.: Automatic detection of epileptic seizures in EEG using discrete wavelet transform and approximate entropy. Expert Syst. Appl. 36(2), 2027–2036 (2009)

7. Qazi, K.I., Lam, H., Xiao, B., Ouyang, G., Yin, X.: Classification of epilepsy using computational intelligence techniques. CAAI Trans. Intell. Technol. 1(2), 137–149 (2016). https://doi.org/10.1016/j.trit.2016.08.001, http://www.sciencedirect.com/science/article/pii/S2468232216300142

8. Qiuyi, W., Ernest, F.: Epileptic Seizure Recognition Data Set. UCI Machine learning repository (2017). https://archive.ics.uci.edu/ml/datasets/Epileptic+Seizure+Recognition

9. Stafstrom, C.E., Carmant, L.: Seizures and epilepsy: an overview for neuroscientists. Cold Spring Harbor Perspect. Med. 5(6), a022426 (2015). https://doi.org/10.1101/cshperspect.a022426

10. Tsipouras, M.G.: Spectral information of EEG signals with respect to epilepsy classification. EURASIP J. Adv. Sig. Process. 2019(1), 10 (2019). https://doi.org/10.1186/s13634-019-0606-8

11. Übeyli, E.D.: Statistics over features: EEG signals analysis. Comput. Biol. Med. 39(8), 733–741 (2009)

12. Wang, L., et al.: Automatic Epileptic Seizure Detection in EEG Signals Using Multi-Domain Feature Extraction and Nonlinear Analysis, May 2017. https://www.mdpi.com/1099-4300/19/6/222

13. Zhou, M., et al.: Epileptic seizure detection based on EEG signals and CNN. Front. Neuroinformatics 12, 95 (2018). https://doi.org/10.3389/fninf.2018.00095, https://www.ncbi.nlm.nih.gov/pubmed/30618700

Acknowledgments

The authors thank the SDAS Research Group (www.sdas-group.com) for its valuable support.

© 2019 Springer Nature Switzerland AG

Cite this paper

Vega-Gualán, E., Vargas, A., Becerra, M., Umaquinga, A., Riascos, J.A., Peluffo, D. (2019). Exploring the Characterization and Classification of EEG Signals for a Computer-Aided Epilepsy Diagnosis System. In: Liang, P., Goel, V., Shan, C. (eds) Brain Informatics. BI 2019. Lecture Notes in Computer Science, vol 11976. Springer, Cham. https://doi.org/10.1007/978-3-030-37078-7_19

DOI: https://doi.org/10.1007/978-3-030-37078-7_19

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-37077-0

Online ISBN: 978-3-030-37078-7
