Abstract

Recent developments in unmanned aerial vehicle (UAV, or drone) technology have generated many interdisciplinary applications, ranging from remote surveillance of energy infrastructure to agriculture. However, in the context of national security, low-cost drone equipment is also viewed as an easy means of causing destructive effects against national critical infrastructure and the civilian population. Addressing the challenge of real-time detection and continuous tracking, this paper presents a holistic architecture consisting of both software and hardware design. The software-based video analytics component leverages the Region-based Fully Convolutional Network (RFCN) model for drone detection. The hardware component includes low-cost sensing equipment powered by a Raspberry Pi, which controls the camera platform so that it continuously tracks the orientation of the drone while streaming the video footage captured by the long-range surveillance camera. The novelty of the proposed framework is twofold: the detection of the drone in real time, and the continuous tracking of the detected drone by controlling the camera platform. The framework relies on the capability of the long-range camera to lock onto the drone and subsequently track it through space. The analytics processing component utilises an NVIDIA® GeForce® GTX 1080 GPU with 8 GB of GDDR5X memory. The proposed framework has been validated experimentally against real-world threat scenarios simulated for the protection of national critical infrastructure.

X. Zhang: The author is pursuing a doctoral dissertation at the Multimedia and Vision Research Group, School of Electronic Engineering and Computer Science, Queen Mary University of London.

Keywords

- Intruder drones

- Surveillance camera

- Critical infrastructure

- Deep-learning

- Drone detection and tracking

- Raspberry Pi

1 Introduction

Aerial technology has seen exponential growth with the development of Unmanned Aerial Vehicles (UAV), which have found applications beyond military use and have become powerful business tools according to a Goldman Sachs report. Following the exponential increase in the commercial application of drones, the threat associated with the misuse of drones for malicious and terrorist activity has also increased. Indeed, drones can be and are being used by terrorist groups in five primary ways: for surveillance; for strategic communications; to smuggle or transport material; to disrupt events or complement other activities; and as a weapon. This last category includes instances of a drone being piloted directly to a target, a drone delivering explosives, and a weapon being directly mounted to a drone.

Although the problem of detecting UAVs is considered a niche topic in the field of computer vision, some preliminary attempts can be summarised. The use of morphological pre-processing and Hidden Markov Model (HMM) filters to detect and track micro-unmanned planes was reported in [10]. In addition to methods based on spatial information, spatio-temporal approaches have also been reported in the literature. In particular, [13] reports an approach that first creates spatio-temporal cubes using a sliding window at different scales, applies motion compensation to stabilise the cubes, and finally utilises boosted-tree and CNN-based regressors for bounding box detection. The approaches reported in the literature focus only on the detection of the drone in the horizon; the challenge of closely tracking the drone without a priori knowledge of its trajectory has not yet been addressed. Therefore, this paper proposes a novel framework that is able to achieve visual lock on the drone and continuously monitor the trajectory of its flight path. The framework integrates low-cost sensing equipment powered by a Raspberry Pi, which controls the servo motors that pan, tilt and zoom (PTZ) the camera equipment in order to track the drone. The continuous media stream captured from real-world sensing is processed with the Region-based Fully Convolutional Network (RFCN) in order to detect the presence or absence of the drone in the horizon.

The rest of the paper is structured as follows. Section 2 gives an overview of the approaches to drone detection reported in the literature. Section 3 presents the overall proposed system framework. Sections 4 and 5 outline the generation of the training model for detecting drones and the implementation of the tracking algorithm, respectively. Section 6 outlines the performance evaluation of the overall implementation, followed by the conclusion and future work in Sect. 7.

2 Literature Review

Traditionally, the task of object detection is to classify a region as one of the predefined objects from the training data set. Early attempts at drone detection adopted a similar approach to classify an image region containing a drone. With the formidable progress achieved by deep learning algorithms in image classification tasks, similar approaches have started to be applied to the object detection problem. These techniques can be divided into two categories: region proposal based methods and single shot methods. The approaches in the first category differ from the traditional methods by using features learned from data with Convolutional Neural Networks (CNN), and by using selective search or region proposal networks to decrease the number of possible regions, as reported in [4]. In the single shot approach, the objective is to compute the bounding boxes of the objects in the image directly instead of dealing with regions in the image. One of the methodologies proposed in the literature extracts multi-scale features using CNNs and combines them to predict bounding boxes, as presented in [5, 9]. Another approach, named You Only Look Once (YOLO), divides the final feature map into a 2D grid and predicts a bounding box using each grid cell [11]. The reported techniques derive from the general objective of object detection and are not trained specifically to address drone classification. Their detection frameworks are built on large datasets of well-known objects that are encountered repeatedly in real life. In contrast to the techniques reported in the literature, the proposed framework relies on the RFCN network for drone detection and, most importantly, feeds the detection outcome back to the sensing equipment to enable real-time tracking of the drone flight path.

3 Conceptual Design of the Proposed Framework

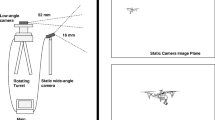

The overall conceptual design of the proposed framework is presented in Fig. 1. The conceptual model is divided into two main components, namely (i) the sensing unit and (ii) the analytics unit. In order to address the time-critical requirement for real-time tracking of intruder drones, the proposed framework implements a five-stage sequence of information analysis. The first stage is the acquisition of video data, which is achieved through the high-definition block camera. In contrast to other object detection applications, in the context of drone detection it is critical to be able to clearly identify objects at a distance (also referred to as the horizon). The second stage is the transmission of the acquired data from the sensing unit to the main analytics unit, since the sensing unit is expected to be deployed at or beyond the perimeter of the critical infrastructure. The third stage is at the heart of the application and triggers the object detection framework (the training of the network model is presented in Sect. 4).

The object detection algorithm distinguishes among several object classes in order to detect the presence of drones. The fourth stage of the framework implements the drone localisation component, not only in terms of the 2D position as captured by the camera but also in terms of the area that the drone occupies within the entire frame. Since low-cost drones are expected to reach speeds of between 30 km/h and 60 km/h, it is critical to ensure that the tracking algorithm is able to adapt swiftly to the drone motion. The final stage in the framework triggers the overall coordination of the tracking component deployed in the sensing unit, with the Raspberry Pi acting as an interface to control the servo motors that provide pan-tilt orientation. It is vital to note that the communication infrastructure in the proposed framework relies on dedicated bandwidth between the sensing unit and the analytics unit, because the framework omits a video encoding module, which would inherently introduce latency in the video transmission.

4 Training Framework for Drone Detection

The training model used in the paper extends the architecture proposed in [3]. Region-based object detection can be divided into three sub-networks. The first sub-network is a feature extractor. The second sub-network proposes bounding boxes using a region proposal network. The third sub-network performs the final classification and bounding box regression; it is region-specific and has to run on every single region. The feature extractor used in the RFCN is ResNet-101, as proposed in [6].

In order to move the time-consuming convolutional computation into the first two, shared sub-networks, RFCN places 100 layers in the shared sub-network and uses only one convolutional layer to calculate the prediction. The last 1000-class fully connected layer in ResNet-101 is replaced by a \(1 \times 1\) convolutional layer with depth 1024 to achieve dimension reduction. A \(K^2 (C + 1)\)-channel convolutional layer then produces the position-sensitive score maps, where \(C\) is the number of classes. Since the detector is trained to distinguish drones from other objects that could potentially appear in the sky (such as birds and aeroplanes), \(C\) is 3, standing for drones, birds and aeroplanes. The position-sensitive score maps are what RFCN proposes in order to increase accuracy. Once a region proposal is obtained, the Region of Interest (RoI) is divided into a \(K \times K\) grid with depth \((C + 1)\) (the additional 1 corresponds to background). Each grid cell has its own score, and cumulative voting over the scores of all cells determines the final scores of the \(C+1\) classes in the respective RoI.
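The voting step can be illustrated with a small sketch. It is not the authors' implementation: the function name `vote_roi_scores`, the grid size K = 3 and the toy scores are assumptions made purely for illustration, and the softmax after averaging follows the description of R-FCN in [3].

```python
import math
from typing import List


def vote_roi_scores(bin_scores: List[List[float]]) -> List[float]:
    """Average per-bin class scores over the K*K bins, then apply softmax.

    bin_scores[b][c] is the pooled score of class c in bin b, where b
    ranges over the K*K bins and c over the C + 1 classes (index 0 is
    background).
    """
    n_bins = len(bin_scores)
    n_classes = len(bin_scores[0])
    # Voting: mean score of each class across all bins of the RoI.
    voted = [sum(b[c] for b in bin_scores) / n_bins for c in range(n_classes)]
    # Softmax over the C + 1 voted scores (shifted for numerical stability).
    shift = max(voted)
    exps = [math.exp(v - shift) for v in voted]
    total = sum(exps)
    return [e / total for e in exps]


K, C = 3, 3  # K = 3 is an assumed grid size; C = 3 (drone, bird, aeroplane)
num_score_channels = K * K * (C + 1)  # channels of the score-map layer

# Toy RoI in which every bin favours class index 1 (hypothetical ordering).
toy_bins = [[0.1, 2.0, 0.3, 0.2] for _ in range(K * K)]
probs = vote_roi_scores(toy_bins)
```

With these assumed values the score-map layer has 36 channels, and the voted distribution places most of its mass on class index 1.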

The loss function of RFCN, as defined in [3], combines a classification loss and a regression loss:

\[L(s, t_{x,y,w,h}) = L_{cls}(s_{c^*}) + \lambda [c^* > 0] L_{reg}(t, t^*)\]

Here \(c^*\) is the ground truth label of the RoI (\(c^*=0\) means background), \([c^* > 0]\) is an indicator that equals 1 for non-background RoIs and 0 otherwise, and \(t^*\) is the ground truth box. \(\lambda \) is the balance weight, which is set to 1. Classification uses the cross-entropy loss \(L_{cls}(s_{c^*})=-\log(s_{c^*})\), \(t_{x,y,w,h}\) denotes the bounding box coordinates, and \(L_{reg}\) is the bounding box regression loss. To train the model, we use 5000 annotated images for each of the drone, aeroplane and bird categories. After 200,000 training steps, we export the model for prediction. During tracking, a bounding box is displayed and used to update the tracker only when its category is drone.
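As a worked illustration of how the classification and regression terms combine for a single RoI, the following sketch evaluates the loss on toy values. The function names are hypothetical, and the smooth L1 form of the regression term is an assumption taken from Fast R-CNN [4], since the text above does not specify it.

```python
import math
from typing import Sequence


def smooth_l1(x: float) -> float:
    """Smooth L1 penalty (assumed form): quadratic near zero, linear elsewhere."""
    ax = abs(x)
    return 0.5 * x * x if ax < 1.0 else ax - 0.5


def rfcn_roi_loss(class_probs: Sequence[float], true_class: int,
                  box_pred: Sequence[float], box_target: Sequence[float],
                  lam: float = 1.0) -> float:
    """Per-RoI loss: cross-entropy plus lam * regression for non-background RoIs."""
    l_cls = -math.log(class_probs[true_class])
    if true_class == 0:
        # Background RoI: the regression term is switched off.
        return l_cls
    l_reg = sum(smooth_l1(p - t) for p, t in zip(box_pred, box_target))
    return l_cls + lam * l_reg


# Toy RoI labelled as class 1, with a small box offset error.
loss_fg = rfcn_roi_loss([0.1, 0.7, 0.1, 0.1], 1,
                        (0.1, 0.2, 0.0, -0.1), (0.0, 0.0, 0.0, 0.0))
# Toy background RoI: only the classification term contributes.
loss_bg = rfcn_roi_loss([0.9, 0.05, 0.03, 0.02], 0,
                        (0.5, 0.5, 0.5, 0.5), (0.0, 0.0, 0.0, 0.0))
```

For the foreground example the regression term is 0.5 × (0.01 + 0.04 + 0 + 0.01) = 0.03, which is added to the cross-entropy term −log 0.7.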

5 Tracking Interface with Raspberry Pi Sensing Equipment

In order to achieve accurate, real-time visual tracking of the drone, three main challenges have to be addressed: (i) computational time; (ii) motion blur, changes in the appearance of the drone and illumination changes caused by environmental effects; and (iii) drift between the object and the bounding box. To address these challenges, the proposed framework integrates the object detection component described in Sect. 4 with an object tracking algorithm, namely Kernelised Correlation Filters (KCF) as presented in [7]. An overview of the implemented algorithm is presented in Algorithm 1. With KCF integrated, the tracker updates the localised region of the drone based on the previous frame (\(I_{i-1}\)) and the previous bounding box of the detected drone (B). For every fifth frame (k), if the detection algorithm identifies the drone, the tracker in the next frame \((k+1)\) is reinitialised based on this frame (k). If the detection algorithm fails to identify the drone, the tracker continues to update based on the previous frame. The interval of 5 frames was chosen empirically as a trade-off between the computational complexity of the detection algorithm and the need to correct the drift caused by the fast motion of the drones. If the update interval exceeds 5 frames, the drift introduced by KCF could lead to failure in tracking the drone accurately. Conversely, if the interval is less than 5 frames, the computational cost of the detector is too high to achieve real-time visual lock on the drone.
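The interplay between the detector and the tracker can be sketched as a simple loop. This is a minimal sketch under stated assumptions rather than the paper's Algorithm 1: `detect_and_track`, `detect`, `make_tracker` and `HoldTracker` are hypothetical names, the tracker is a stand-in with no real KCF logic, and running the detector on every frame until a first detection exists is an assumed bootstrapping rule.

```python
from typing import Callable, Iterable, List, Optional, Tuple

Box = Tuple[float, float, float, float]  # (x, y, width, height)


def detect_and_track(frames: Iterable, detect: Callable,
                     make_tracker: Callable,
                     interval: int = 5) -> List[Optional[Box]]:
    """Track frame by frame, re-initialising from the detector every `interval` frames."""
    tracker = None
    boxes: List[Optional[Box]] = []
    for i, frame in enumerate(frames, start=1):
        # Tracker update from its previous state (None until initialised).
        box = tracker.update(frame) if tracker is not None else None
        # Detector runs on every `interval`-th frame, and (assumption)
        # on every frame while no tracker has been initialised yet.
        if tracker is None or i % interval == 0:
            detected = detect(frame)
            if detected is not None:
                # Re-initialise so later frames start from this detection.
                tracker = make_tracker(frame, detected)
                box = detected
            # If detection fails, the existing tracker simply carries on.
        boxes.append(box)
    return boxes


class HoldTracker:
    """Stand-in for KCF: keeps returning the box it was initialised with."""

    def __init__(self, frame, box: Box) -> None:
        self.box = box

    def update(self, frame) -> Box:
        return self.box


# Toy sequence: each frame is just the true x position of the drone.
frames = list(range(1, 13))
boxes = detect_and_track(frames, lambda f: (float(f), 0.0, 10.0, 10.0),
                         HoldTracker)
xs = [b[0] for b in boxes]
```

In the toy run the stand-in tracker drifts away from the true position between detections, and the box snaps back to the detector output on frames 5 and 10. A real deployment would pass a factory that wraps an actual KCF implementation in place of `HoldTracker`.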

The outcome of the detection and tracking algorithm is fed into the sensing platform to control the pan-tilt-zoom parameters of the detection platform. The \(initiate\_servo\_position(B)\) function calculates the relative pan-tilt-zoom parameters that trigger the servo motor controllers. The centre point of the detected drone bounding box B is used to determine the position of the drone relative to the captured video frame. If the x coordinate, as a ratio of the frame width, is below 0.4 or above 0.6, the platform is triggered to turn left or right, respectively. If the y coordinate, as a ratio of the frame height, is below 0.3 or above 0.7, the platform tilts up or down. The zoom parameter is calculated as the frame width divided by the box width; when it is larger than 7 or smaller than 4, the camera is triggered to zoom in or zoom out.
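The threshold rules can be written down as a small decision function. This is a sketch only: the function name `ptz_commands` and the command strings are hypothetical, and it assumes image coordinates with the origin at the top-left corner, so that a small y ratio means the drone is near the top of the frame. Sending the commands to the servo controllers is outside the scope of the sketch.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x, y, width, height) in pixels


def ptz_commands(box: Box, frame_w: float, frame_h: float) -> List[str]:
    """Map a drone bounding box to pan/tilt/zoom commands using the stated thresholds."""
    x, y, w, h = box
    cx = (x + w / 2) / frame_w  # horizontal centre as a ratio of frame width
    cy = (y + h / 2) / frame_h  # vertical centre as a ratio of frame height
    commands: List[str] = []
    if cx < 0.4:
        commands.append("pan_left")
    elif cx > 0.6:
        commands.append("pan_right")
    if cy < 0.3:
        commands.append("tilt_up")    # assumes y grows downwards
    elif cy > 0.7:
        commands.append("tilt_down")
    zoom_ratio = frame_w / w  # frame width divided by box width
    if zoom_ratio > 7:
        commands.append("zoom_in")    # drone appears too small
    elif zoom_ratio < 4:
        commands.append("zoom_out")   # drone appears too large
    return commands


# A small drone in the top-left corner of a 1920x1080 frame.
corner = ptz_commands((100, 100, 100, 50), 1920, 1080)
# A well-framed drone near the centre needs no correction.
centred = ptz_commands((810, 440, 300, 200), 1920, 1080)
```

The dead zone between the thresholds (0.4 to 0.6 horizontally, 0.3 to 0.7 vertically, zoom ratio 4 to 7) means that no command is issued while the drone is already well framed.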

6 Experimental Results

The analytics component of the proposed framework was trained on the drone detection dataset of [2], with an extension of the classes to include aeroplanes. The proposed critical infrastructure security framework is evaluated in two stages: (i) the accuracy of the detection algorithm achieved by the use of RFCN together with the KCF tracking algorithm, and (ii) the tracking efficiency of the proposed approach in following the trajectory of the drone flight. The experiments were carried out on a set of video footage captured in an urban environment, with a DJI Phantom 3 Standard piloted from a range of 450 m from the point of attack. A total of 39 attack simulations were created, each lasting between 6 and 117 seconds, resulting in a cumulative 12.36 min of drone flights. The performance of the proposed RFCN Resnet101 network has been compared against three other deep-learning network models, namely SSD Mobilenet [8, 9], SSD Inception v2 [14] and Faster RCNN Resnet101 [12]. The proposed drone detection framework integrated within the analytics component achieves an average precision of 81.16%. In comparison, SSD Mobilenet achieves 30.39%, SSD Inception v2 achieves 7.78% and Faster RCNN Resnet101 achieves 69.49% average precision. It is worth noting that the detection accuracy presented refers only to the classification and localisation of the drones and does not include the performance of the drone detector as deployed in the real world. Since RFCN Resnet101 yielded the best detection results, this network is integrated within the analytics component.

Detection and Tracking. Following [1, 15], the central distance curve metric for the performance assessment of object tracking is used to quantify the performance of the proposed detection and tracking framework. The metric calculates the distance between the centre of the tracking bounding box and the centre of the ground truth box, and summarises the proportion of frames within different distance thresholds. The evaluation result presented in Fig. 2 depicts the central distance curve metric for different drone flight paths. The various curves depict the different scenarios under which the drone flights took place: (i) the drone appears in front of the sensing platform; (ii) the drone crosses in front of the sun; (iii) the drone flight takes place in the horizon; (iv) the velocity and acceleration of the drone are beyond the supported specification due to environmental factors; and (v) the sensing equipment introduces a motion component while capturing the drone footage.

A frame is considered correctly tracked if the predicted target centre is within a distance threshold of the ground truth. A higher precision at low thresholds means that the tracker is more accurate, while a lost target prevents it from achieving perfect precision over a very large threshold range. When a representative precision score is needed, the chosen threshold is 20 pixels. An overall average precision score of 95.2% is achieved across all footage for the five scenarios identified for the framework evaluation, as presented in Fig. 2.
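The precision at a given threshold can be computed as in the sketch below. The function names and the toy boxes are assumptions for illustration; a frame in which the tracker reports no box is counted as a miss, which is one reasonable reading of a "lost target".

```python
import math
from typing import List, Optional, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x, y, width, height) in pixels


def box_centre(box: Box) -> Tuple[float, float]:
    x, y, w, h = box
    return (x + w / 2, y + h / 2)


def centre_distance_precision(pred: Sequence[Optional[Box]],
                              truth: Sequence[Box],
                              threshold: float = 20.0) -> float:
    """Fraction of frames whose predicted centre lies within `threshold` pixels."""
    hits = 0
    for p, g in zip(pred, truth):
        if p is None:
            continue  # lost target: counted as a miss (assumption)
        (px, py), (gx, gy) = box_centre(p), box_centre(g)
        if math.hypot(px - gx, py - gy) <= threshold:
            hits += 1
    return hits / len(truth)


# Toy data: centre errors of 0, 30 and 15 pixels, plus one lost frame.
truth = [(0.0, 0.0, 10.0, 10.0)] * 4
pred: List[Optional[Box]] = [(0.0, 0.0, 10.0, 10.0), (30.0, 0.0, 10.0, 10.0),
                             None, (0.0, 15.0, 10.0, 10.0)]
precision_at_20 = centre_distance_precision(pred, truth, 20.0)
# Sweeping the threshold yields the points of the central distance curve.
curve = [centre_distance_precision(pred, truth, t) for t in range(0, 51)]
```

On the toy data two of the four frames fall within 20 pixels, and the curve never reaches 1.0 because of the lost frame, which mirrors the behaviour described above.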

Since the purpose of the camera platform is to keep the object at the centre of the video, and no ground truth is available in practical testing, we score the performance in every frame and calculate the average score over all frames. The evaluation methodology adopted is presented in Fig. 3. If the centre point of the bounding box is inside the red region, the accuracy of the detection and tracking is assigned 100%. If the centre is in the yellow region, an accuracy score of 75% is assigned. Similarly, if the centre is in the blue region, a 50% accuracy score is assigned to the frame. Finally, if the frame contains no appearance of the drone, an accuracy of 0% is assigned, reflecting the loss of the drone either by the software implementation of the visual analytics module or through latency in the camera control.
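The per-frame scoring can be sketched as follows. The geometry of the red, yellow and blue regions is defined in Fig. 3 and is not reproduced in the text, so this sketch assumes concentric, frame-centred rectangles; the fractions `red=0.2` and `yellow=0.5` are hypothetical placeholders, not values from the paper, and the function names are likewise illustrative.

```python
from typing import Optional, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x, y, width, height) in pixels


def frame_score(box: Optional[Box], frame_w: float, frame_h: float,
                red: float = 0.2, yellow: float = 0.5) -> float:
    """Score one frame: 1.0 (red), 0.75 (yellow), 0.5 (blue), 0.0 (no drone).

    `red` and `yellow` are the assumed fractions of each frame dimension
    spanned by the central red and yellow rectangles.
    """
    if box is None:
        return 0.0  # drone lost in this frame
    x, y, w, h = box
    dx = abs((x + w / 2) / frame_w - 0.5)
    dy = abs((y + h / 2) / frame_h - 0.5)
    offset = max(dx, dy)  # normalised offset from the frame centre
    if offset <= red / 2:
        return 1.0
    if offset <= yellow / 2:
        return 0.75
    return 0.5  # anywhere else in the frame is treated as the blue region


def average_score(boxes: Sequence[Optional[Box]],
                  frame_w: float, frame_h: float) -> float:
    """Mean of the per-frame scores over the whole sequence."""
    return sum(frame_score(b, frame_w, frame_h) for b in boxes) / len(boxes)


# Toy sequence in a 100x100 frame: centred, slightly off, far off, lost.
toy = [(45.0, 45.0, 10.0, 10.0), (60.0, 45.0, 10.0, 10.0),
       (85.0, 45.0, 10.0, 10.0), None]
overall = average_score(toy, 100.0, 100.0)
```

Because no ground truth is required, this score can be computed online from the tracker output alone, which is what makes it usable in practical testing.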

The overall functionality of the pan-tilt-zoom parameters is presented in Fig. 4, mapped against the flight path undertaken by the drone. One of the critical challenges in real-time drone tracking is to ensure that the drone appears large enough to be detected by the video analytics component. In this regard, it is vital to control and trigger the zoom parameter of the camera in order to inspect the horizon prior to the intrusion of the drone. In addition, because the momentum and direction of the drone change considerably under the influence of environmental parameters such as wind speed and direction (for both head and tail wind), the accuracy of the tracking algorithm depends on the latency in controlling the hardware platform. Therefore, the proposed framework controls three parameters, namely pan, tilt and zoom, to ensure that tracking of the drone is achieved. In order to objectively quantify the performance of the sensing platform, it is vital to consider the feedback received from the analytics component. However, the input provided to the analytics component depends on the visual information acquired from the horizon.

7 Conclusion and Future Work

This paper presents a novel framework for processing real-time video footage from a surveillance camera in order to protect critical infrastructure against intruder drone attacks. The confluence of a high-precision drone detection component based on a deep-learning network and low-cost sensing equipment facilitates not only the detection but also the continuous tracking of the drone trajectory. Knowledge of the drone flight trajectory will subsequently facilitate the deployment of a drone neutralisation solution, as required by the designed countermeasure. As the topic of drone detection and tracking is still in its infancy for real-time and real-world applications, there are several research directions that could be considered as pathways for improving the proposed framework. The overall objective of the future work is to improve the stability of the sensing equipment in order to achieve a smooth visual lock on the intruder drone. The current configuration of the low-cost sensing equipment utilises servo motors to control the motion of the camera so that it stays aligned with the drone trajectory. The control of the servo motors could be enhanced to produce smoother footage of the horizon.

References

1. Cehovin, L., Leonardis, A., Kristan, M.: Visual object tracking performance measures revisited. CoRR abs/1502.05803 (2015). http://arxiv.org/abs/1502.05803

2. Coluccia, A., et al.: Drone-vs-bird detection challenge at IEEE AVSS2017. In: 2017 14th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), pp. 1–6, August 2017. https://doi.org/10.1109/AVSS.2017.8078464

3. Dai, J., Li, Y., He, K., Sun, J.: R-FCN: object detection via region-based fully convolutional networks. CoRR abs/1605.06409 (2016). http://arxiv.org/abs/1605.06409

4. Girshick, R.: Fast R-CNN. In: 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 11–18 December 2015, pp. 1440–1448 (2015). https://doi.org/10.1109/ICCV.2015.169

5. He, K., Zhang, X., Ren, S., Sun, J.: Spatial pyramid pooling in deep convolutional networks for visual recognition. In: Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T. (eds.) ECCV 2014. LNCS, vol. 8691, pp. 346–361. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-10578-9_23

6. He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. CoRR abs/1512.03385 (2015). http://arxiv.org/abs/1512.03385

7. Henriques, J.F., Caseiro, R., Martins, P., Batista, J.: High-speed tracking with kernelized correlation filters. CoRR abs/1404.7584 (2014). http://arxiv.org/abs/1404.7584

8. Howard, A.G., et al.: MobileNets: efficient convolutional neural networks for mobile vision applications. CoRR abs/1704.04861 (2017). http://arxiv.org/abs/1704.04861

9. Liu, W., et al.: SSD: single shot multibox detector. CoRR abs/1512.02325 (2015). http://arxiv.org/abs/1512.02325

10. Mejias, L., McNamara, S., Lai, J., Ford, J.: Vision-based detection and tracking of aerial targets for UAV collision avoidance. In: IEEE/RSJ 2010 International Conference on Intelligent Robots and Systems (IROS 2010), Taipei, Taiwan, 18–22 October 2010, pp. 87–92 (2010). https://doi.org/10.1109/IROS.2010.5651028

11. Redmon, J., Divvala, S., Girshick, R., Farhadi, A.: You only look once: unified, real-time object detection. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, 27–30 June 2016, pp. 779–788 (2016). https://doi.org/10.1109/CVPR.2016.91

12. Ren, S., He, K., Girshick, R., Sun, J.: Faster R-CNN: towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 39(6), 1137–1149 (2017). https://doi.org/10.1109/TPAMI.2016.2577031

13. Rozantsev, A., Lepetit, V., Fua, P.: Detecting flying objects using a single moving camera. IEEE Trans. Pattern Anal. Mach. Intell. 39(5), 879–892 (2017). https://doi.org/10.1109/TPAMI.2016.2564408

14. Szegedy, C., Vanhoucke, V., Ioffe, S., Shlens, J., Wojna, Z.: Rethinking the inception architecture for computer vision. CoRR abs/1512.00567 (2015). http://arxiv.org/abs/1512.00567

15. Wu, Y., Lim, J., Yang, M.: Online object tracking: a benchmark. In: 2013 IEEE Conference on Computer Vision and Pattern Recognition, pp. 2411–2418 (2013). https://doi.org/10.1109/CVPR.2013.312

Acknowledgement

This work is partially funded by the European Union Horizon 2020 research and innovation program under grant agreement No. 740898 (DEFENDER IP project) and grant agreement No. 787123 (PERSONA RIA project).

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Zhang, X., Chandramouli, K. (2019). Critical Infrastructure Security Against Drone Attacks Using Visual Analytics. In: Tzovaras, D., Giakoumis, D., Vincze, M., Argyros, A. (eds) Computer Vision Systems. ICVS 2019. Lecture Notes in Computer Science, vol 11754. Springer, Cham. https://doi.org/10.1007/978-3-030-34995-0_65

DOI: https://doi.org/10.1007/978-3-030-34995-0_65

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-34994-3

Online ISBN: 978-3-030-34995-0

eBook Packages: Computer Science (R0)