Abstract

Information and communication technology, autonomy, and miniaturization, for example, in the form of microelectromechanical systems, are enabling technologies with significant impact on the development of sensors, sensor-carrying platforms, control systems, and methods for data gathering, storage, and analysis. Sensor-carrying platforms range from stationary devices such as landers and moorings to dynamic platforms such as marine robots, ships, aerial systems, and remote-sensing satellites. Lately, the development of low-cost small satellites with customized payload sensors and accessible mission control centers has also opened up a democratization of space-based remote sensing. Mapping and monitoring may be carried out by whichever type of sensor-carrying platform suits the mission. However, we see a quantum leap in operating heterogeneous sensor-carrying platforms together for the most efficient mapping and monitoring across spatial and temporal scales. We are facing a paradigm shift in terms of resolution and coverage capabilities. Several research efforts have aimed to improve the technology and methodology for mapping and monitoring the oceans. Today, mapping coverage may be 100–1000 times higher than with the state-of-the-art technology of 6 years ago. The resulting increase in data harvesting also creates new challenges in handling big data sets. There is an increasing need to update the capabilities of oceanographic and ecosystem numerical models to take full benefit of the ongoing shift in technology. The Arctic can truly be characterized as a remote and harsh environment for scientific operations, and it is even more demanding during the Polar Night due to the darkness. During winter operations, extreme cold may also be a challenge, depending on the weather conditions. Enabling technology and proper operational procedures may be the only way to reveal and understand the processes taking place there.
The spatial scale is enormous, and as several research campaigns have already taught us, the variability is huge not only between seasons but also between years. This underlines the importance of prolonged presence. In this chapter, we briefly present the various sensor-carrying platforms and payload sensors. We also describe the philosophy behind integrated operations using heterogeneous platforms, and why and how science and technology should be bridged to successfully develop autonomous systems for efficient and safe operations. Examples and experience from Arctic missions are also presented.



9.1 Introduction

The understanding and corresponding management of the oceans, including Arctic areas, is crucial for sustainable use of marine resources. However, a large part of the oceans is still unknown. This may be even more pronounced in Arctic areas, where dynamic processes and seasonal variability are high. The environment is extreme, challenging to access, and dangerous for humans to operate in. During the Polar Night, with darkness and cold, operations become even more demanding. A possible undesired side effect, light pollution from ships and other infrastructure affecting the marine ecosystem, has recently been documented by Ludvigsen et al. (2018; see also Chap. 5). They showed that artificial light changes the behavior and abundance of zooplankton; some organisms escaped while others were attracted by the light. Hence, missions must be carefully planned with their objectives in mind.

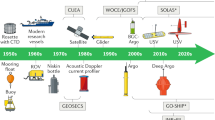

Exploring extreme environments calls for superior and reliable technology, robust and adaptable operational procedures, and acceptance of risk combined with the ability to manage it. New instruments and sensor-carrying platforms are helping to reveal the dynamic processes taking place across spatial and temporal scales. Heterogeneous robotic systems (Fig. 9.1), ranging from autonomous underwater vehicles and surface ships to aerial systems and satellites, have been an enabler for research in areas such as acoustic and optical sensing, inertial platforms, control and autonomy, risk management, big data analytics, and ocean modeling. To operate efficiently, science and technology need to be bridged, and for autonomous systems, interdisciplinarity is crucial in planning and executing operations and in developing adaptive mapping strategies. Here, online replanning of the mission is optimized with respect to rewards in terms of improved data harvesting and the associated risks, for example, collision with the seabed, ice, and moving objects, and possibly loss of the vehicle (see Chap. 10).

In this chapter, we address various aspects of sensor-carrying platforms. The chapter is organized as follows: Sect. 9.2 presents the main characteristics of sensor-carrying platforms. Sections 9.3 and 9.4 cover payload and navigation sensors, respectively. In Sect. 9.5, we present how the various platforms perform with respect to coverage and resolution in the spatial and temporal domains. Autonomy aspects are discussed in Sect. 9.6. Examples from field campaigns are shown in Sect. 9.7, while Sect. 9.8 gives an introduction to safe and efficient operations.

9.2 Characteristics of Sensor-Carrying Platforms

The sensor-carrying platforms (Fig. 9.1) may be grouped into:

- Underwater: Landers and buoys, remotely operated vehicles (ROVs), autonomous underwater vehicles (AUVs), gliders, and profilers
- Sea surface: Ships and unmanned surface vehicles (USVs)
- Air and space: Satellites, unmanned aerial vehicles (UAVs), and airplanes

Developments in sensor-carrying platforms, sensors, control methods, autonomy, communication, and networked vehicle systems have been driven by the needs in marine sciences as described in Brighenti (1990), Singh et al. (2001), Pizarro and Singh (2003), Moline et al. (2005), Ribas et al. (2008), Hagen et al. (2009), Bingham et al. (2010), Mair et al. (2010), Sotzing and Lane (2010), Berge et al. (2012), Williams et al. (2012), Sørensen et al. (2012), Seto (2013), Bellingham (2014), Dukan and Sørensen (2014), Ludvigsen et al. (2014), Fernandes et al. (2015), Nilssen et al. (2015), Williams et al. (2015), Ludvigsen and Sørensen (2016), Johnsen et al. (2018), and the references therein.

Each sensor-carrying platform has its own characteristics, with pros and cons as listed in Tables 9.1 and 9.2. Depending on the installed payload sensors, they also have different capabilities in terms of spatial and temporal resolution and coverage, as indicated in Fig. 9.2 and Tables 9.3 and 9.4. For many operations, it may be enough to consider only one platform at a time. However, as shown in Nilssen et al. (2015), integrated operations with heterogeneous robotic systems and swarms may be more efficient. Arctic operations face additional challenges related to the harsh environment, including icing and low temperature, remoteness, darkness (during the Polar Night), and not least the fact that operations take place in an environmentally sensitive area. Under such circumstances, logistics and operations must be planned carefully, including health, safety, and environmental (HSE) impacts.

Fig. 9.2 Spatial and temporal resolution and coverage of different instrument-carrying platforms (based on Nilssen et al. 2015 and Haury et al. 1978). Note that satellites equipped with optical imaging sensors depend on sunlight and cannot be used during the Polar Night. Abbreviations: ROVs remotely operated vehicles, AUVs autonomous underwater vehicles, USVs unmanned surface vehicles, UAVs unmanned aerial vehicles

9.3 Payload Sensors

Payload sensors are measurement units carried by a sensor-carrying platform to collect data and images, either by remote sensing or by direct measurements in the habitat (in situ). The objective of the sensor-carrying platform is to position the sensor or instrument at a specific location or along a specific trajectory at a given time. If the biological or oceanographic process under investigation is dynamic, there may also be temporal constraints that the platform needs to fulfill. Moving toward more autonomous vehicles with scientific mission objectives, rather than preprogrammed behavior, may require that these instruments are no longer passive payloads; instead, their measurements are forwarded to the mission planning layer and to the guidance and optimization system in the operations control for mission optimization (see Fig. 9.3).

Also for mapping of almost static systems, such as seabed topography and archeological sites, the sensor-carrying platform will, with increased autonomy, have to consider its findings and react to them in order to optimize the survey operation. In addition, improved methods for online risk assessment (risk vs. reward) should be addressed, for example, weighing the risk of collision against the improved data quality obtained by operating closer to objects of interest (OOI). For dynamic processes, the development of the process must be considered along with its driving parameters. Sensor range and resolution vary with the technology and the corresponding configuration and depend greatly on how the platform navigates in relation to the feature or process to be measured. When the appropriate range and resolution have been determined, the efficiency of sensor and platform combinations for a given mission purpose can be considered (Table 9.3).

9.3.1 Underwater Optics

Optical imaging of the seabed provides high-resolution qualitative information about the shape, color, and texture of the seabed. To identify objects on the seafloor, optical imaging is still the most reliable method due to the high resolution of the color and texture information. However, obtaining quantitative data from optical imaging is challenging (see examples in Chap. 10).

Camera and Video

Underwater photogrammetry has advanced considerably in the last few years, driven by developments in computing capacity and computer vision software (Nornes et al. 2015; Yamafune et al. 2017). Seawater and its optically active constituents (which determine the inherent optical properties, IOP; see Chap. 3), for example, phytoplankton, coloured dissolved organic matter (cDOM), and total suspended matter (TSM), alter the spectral light absorption and backscatter. This limits the range of optical cameras, constraining the distance between camera and OOI and the corresponding area coverage (see Chaps. 3 and 10). Optical imaging using still images or video cameras can be relevant for documenting, for example, geological conditions, archaeological features, and biological identification and behavior. However, regular cameras and video have a low spectral resolution, combining three color bands in red, green, and blue (RGB), similar to the spectral sensitivity of the human eye (Johnsen et al. 2013).

Underwater Hyperspectral Imaging (UHI)

Applying hyperspectral imagers, the color information can be quantified at all wavelengths of visible light, as presented in Johnsen et al. (2013; see seafloor mapping example in Chap. 10). By measuring the full visible light spectrum (400–700 nm), the light absorption of the seabed and the seawater can be quantified and characterized. Using knowledge of the spectral distribution of the applied light, many substances can be characterized by their reflection spectrum after correction for IOPs. The hyperspectral imager can hence be used to estimate the presence of substances like chlorophyll a (Chl a) or optical fingerprints of other pigments. The UHI technology enables fast data processing for automatic identification of any OOI at the seafloor (Chap. 10). As for camera and video, the optical properties of the water column affect the sensor range and data quality.

The spectral characteristics of optical backscatter and light attenuation can be used to characterize seawater with instruments such as fluorometers, turbidity sensors, and light scattering sensors. Biological and chemical properties of the water masses, such as oxygen concentration and saturation, can be measured with in situ optodes. These data can be used to distinguish between water bodies, but also to investigate the biochemical development in the water by combining them with measurements of in situ temperature, salinity, nutrient concentration, current speed and direction, CO2 concentration, Chl a concentration (an indication of phytoplankton biomass, detailed in Chap. 4), and zooplankton biomass using acoustic sensors such as the ADCP (acoustic Doppler current profiler) and the AZFP (Acoustic Zooplankton and Fish Profiler) (detailed in Chaps. 5, 6, and 7).

9.3.2 Acoustics

Active sonars are devices that use transducers which generate sound waves of specific frequencies and listen for the echoes of these emitted sound waves reflected from objects on the seabed or in the water column. Active sonars have a large variety of applications, ranging from underwater navigation to seabed mapping. Different sensors that use active sonar technology are discussed below (see also Chap. 10).

Acoustic Doppler Current Profilers (ADCP) provide vertical and horizontal velocities of the ocean currents. The instrument measures the Doppler shift of the scattered acoustic signal, and from this, the velocity of the instrument relative to the scatterers can be calculated (RDI 2011). ADCPs can be used to estimate a three-dimensional ocean current velocity vector by assuming that the planktonic scatterers in the water column drift passively with the same speed and direction as the currents. Typical planktonic scatterers are copepods, euphausiids (krill), and pteropods (sea butterflies). The acoustic backscatter collected by ADCPs provides information about the distribution, relative abundance, and vertical velocity of planktonic organisms in the water column (Deines 1999). Previous studies have used ADCPs mounted on moorings (e.g., Berge et al. 2009) or on autonomous platforms such as AUVs (Geoffroy et al. 2016) to study diel vertical migration (DVM) and patchiness of zooplankton in the Arctic. Ocean currents are dynamic processes influenced by tides, lunar cycles, climatic variations, weather, and many other environmental factors. Therefore, current velocities measured by ADCPs will vary on all timescales from sub-hourly to decadal.
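The Doppler relation behind this measurement can be sketched as follows. For a monostatic instrument, where the same transducer transmits and receives, an echo from a scatterer moving with radial velocity v along the beam is shifted by approximately Δf = 2 f₀ v / c, so v = c Δf / (2 f₀). The 300 kHz carrier and the nominal 1500 m/s sound speed below are illustrative assumptions, not values from a specific instrument.

```python
def radial_velocity(doppler_shift_hz: float, carrier_hz: float,
                    sound_speed: float = 1500.0) -> float:
    """Radial velocity (m/s) along the beam from a monostatic Doppler shift.

    Uses the two-way relation delta_f = 2 * f0 * v / c, valid for v << c.
    Positive values mean the scatterer and the transducer are closing.
    """
    return sound_speed * doppler_shift_hz / (2.0 * carrier_hz)


# A 200 Hz shift on a 300 kHz carrier corresponds to 0.5 m/s along the beam.
v = radial_velocity(200.0, 300e3)
print(f"{v:.2f} m/s")
```

A single beam only gives the velocity component along that beam; combining several tilted beams, as ADCPs and DVLs do, recovers the full vector.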

Multifrequency echo sounders are sonars using one or several transducers pinging at different discrete narrowband frequencies to detect zooplankton or fish in the water column. Frequencies can be customized for a specific scientific mission but generally vary between 18 kHz and 769 kHz. Common models used for marine research in the Arctic are the Simrad EK60 and the Acoustic Zooplankton and Fish Profiler (AZFP; ASL Environmental Sciences; ASL 2016). Simrad EK60 mounted on research vessels and operating at 18, 38, and 120 kHz (RV Helmer Hanssen, Norway) or 38, 120, and 200 kHz (CCGS Amundsen, Canada) have been used to study fish and zooplankton during the Polar Night (e.g., Benoit et al. 2010; Geoffroy et al. 2019). A similar instrument mounted on a REMUS 600 AUV (Moline et al. 2015) mapped the distribution of mesopelagic organisms (600–1200 m depth). The AZFP is typically used for zooplankton or fish surveys (as suggested by its name) and has been operated on moorings (Darnis et al. 2017) and from Autonomous Surface Vehicles (Ludvigsen et al. 2018) to investigate DVM of zooplankton during the Polar Night, as well as on gliders to study spatial and temporal distribution of biomass (Chave et al. 2018).

Broadband echo sounders are progressively replacing narrowband echo sounders in marine research. Instead of emitting at a discrete narrowband frequency, each transducer of a broadband echo sounder emits a chirp centered around a nominal frequency. The bandwidth increases with the nominal frequency of the transducer. For instance, Simrad’s 38 kHz transducer emits a chirp between 34 and 45 kHz, and its 333 kHz transducer emits between 283 and 383 kHz. Narrowband echo sounders classify scatterers into functional groups using the differences in mean volume backscattering strength between frequencies (Korneliussen et al. 2018); in contrast, each animal detected by a broadband echo sounder produces a frequency response curve, which could improve the taxonomic resolution of acoustic signals (Bassett et al. 2016). The main models of broadband echo sounders used in marine research are the Simrad EK80 and the Wideband Autonomous Transceiver (WBAT). Narrowband and broadband echo sounders have very high temporal resolution (seconds) and can be used to monitor changes in the vertical or horizontal distributions of fish and zooplankton on timescales from minutes to months.

Active sonars can also be used to measure the range to objects on the seabed by measuring the time between transmission and the arrival of the reflected acoustic signal back at the sensor (the two-way travel time); since the signal travels to the object and back, the range is half the two-way travel time multiplied by the speed of sound. Multibeam echo sounders (MBE) transmit fan-shaped acoustic pulses (pings) to cover across-track swaths of the seabed. Using directional receivers to determine the angle and two-way travel time of each received beam, an MBE can measure hundreds of directions and ranges for each ping reflected off the seabed, resulting in a dense point cloud of the seabed bathymetry (L3 Communications 2000). Side-scan sonars (SSS) measure the acoustic reflectance of the seabed surface and reveal information about the seabed material composition due to different sound absorption characteristics (L3 Communications 2000). A side-scan sonar operates by sending out a ping and measuring the time of flight and intensity of the reflected signal. From this, an image of the seabed’s acoustic reflectivity can be produced. From these images, it is possible to identify, for example, shipwrecks, since wood and steel have significantly different reflectivity than the seabed. Conventional side-scan sonars measure only time and intensity, so a flat-seabed assumption is necessary to produce an image. However, modern interferometric sonar systems also estimate the direction of the signal and produce bathymetric data. Sub-bottom profilers (SBP) provide information about sub-seabed structures. The system transmits low-frequency, high-power acoustic pulses that penetrate the seabed. By measuring the intensity of the reflected signal, the sub-seafloor conditions are recorded in 2D along the track of the sensor.
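The range computation, and how a multibeam echo sounder turns one beam's travel time and angle into a seabed point, can be sketched as below. This is a minimal sketch assuming a constant nominal sound speed of 1500 m/s, straight-line propagation, and a level transducer; real systems ray-trace through a measured sound speed profile and correct for vessel attitude.

```python
import math


def slant_range(two_way_time_s: float, sound_speed: float = 1500.0) -> float:
    """One-way range (m): the echo travels out and back, so halve the path."""
    return 0.5 * sound_speed * two_way_time_s


def beam_to_seabed_point(two_way_time_s: float, beam_angle_deg: float,
                         sound_speed: float = 1500.0) -> tuple:
    """Across-track offset and depth (m) below the transducer for one beam.

    Assumes straight-line propagation at constant sound speed and a level
    transducer; beam_angle_deg is measured from the vertical.
    """
    r = slant_range(two_way_time_s, sound_speed)
    theta = math.radians(beam_angle_deg)
    return r * math.sin(theta), r * math.cos(theta)


# A 0.2 s two-way travel time corresponds to a 150 m slant range.
print(slant_range(0.2))
across, depth = beam_to_seabed_point(0.2, 60.0)
print(f"across-track {across:.1f} m, depth {depth:.1f} m")
```

Repeating the second function for every beam of every ping is what produces the dense bathymetric point cloud described above.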

During the last decade, synthetic aperture sonars (SAS) have been implemented on AUVs and other platforms. By constructing synthetic arrays that are considerably longer than the physical array, these systems coherently combine multiple consecutive pings to map each seabed point. The along-track resolution is therefore, to first order, independent of range and frequency, providing significantly higher seabed resolution than conventional SSS at the same ranges (Hansen 2011). Interferometric SAS can produce high-resolution bathymetry data that are co-registered with the intensity-based imagery and also enable coherence calculations for data quality estimation.
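The resolution gain can be illustrated with the usual first-order expressions. A real-aperture side-scan sonar with physical array length L and wavelength λ = c/f has an along-track resolution of roughly R·λ/L at range R, which degrades with range, whereas a fully focused SAS reaches roughly L/2 regardless of range and frequency. The sketch evaluates both; the 100 kHz frequency, 0.5 m array, and ranges are illustrative assumptions.

```python
def sss_along_track_resolution(range_m: float, freq_hz: float,
                               array_len_m: float, c: float = 1500.0) -> float:
    """Real-aperture along-track resolution ~ R * lambda / L (beam footprint)."""
    wavelength = c / freq_hz
    return range_m * wavelength / array_len_m


def sas_along_track_resolution(array_len_m: float) -> float:
    """Theoretical SAS along-track resolution ~ L / 2, independent of range."""
    return array_len_m / 2.0


sas = sas_along_track_resolution(0.5)
for r in (50.0, 100.0, 200.0):
    sss = sss_along_track_resolution(r, 100e3, 0.5)
    print(f"R = {r:5.0f} m: SSS {sss:4.2f} m, SAS {sas:4.2f} m")
```

In practice, SAS resolution is also limited by navigation accuracy and by how well platform motion is estimated, which is why SAS processing is closely tied to the navigation sensors discussed in Sect. 9.4.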

9.3.3 Other Sensors

In addition to optical and acoustic sensors, other instruments are used to provide important measurements to describe environmental characteristics or other properties relevant for the mission purposes.

CT sensors measure conductivity and temperature. Salinity, speed of sound, and seawater density are calculated from these fundamental parameters. Salinity and density are key parameters for oceanography, while speed of sound is essential for all sonar applications such as seabed mapping and acoustic navigation.
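As an example of deriving sound speed from these parameters, the sketch below implements the empirical nine-term equation of Mackenzie (1981), which takes temperature, salinity, and depth. It assumes salinity has already been computed from conductivity (the conductivity-to-salinity step, for example via the Practical Salinity Scale, is omitted); in practice, the TEOS-10 Gibbs SeaWater toolbox is commonly used for these conversions. The input values are illustrative.

```python
def mackenzie_sound_speed(t_c: float, s_psu: float, depth_m: float) -> float:
    """Speed of sound in seawater (m/s) from the Mackenzie (1981) equation.

    t_c: temperature (deg C), s_psu: salinity, depth_m: depth (m).
    Stated validity is roughly -2..30 deg C, salinity 25..40, depth 0..8000 m.
    """
    ds = s_psu - 35.0
    return (1448.96
            + 4.591 * t_c
            - 5.304e-2 * t_c ** 2
            + 2.374e-4 * t_c ** 3
            + 1.340 * ds
            + 1.630e-2 * depth_m
            + 1.675e-7 * depth_m ** 2
            - 1.025e-2 * t_c * ds
            - 7.139e-13 * t_c * depth_m ** 3)


# Cold Arctic surface water vs. warmer water at 100 m (illustrative values)
print(f"{mackenzie_sound_speed(-1.5, 34.0, 0.0):.1f} m/s")
print(f"{mackenzie_sound_speed(10.0, 35.0, 100.0):.1f} m/s")
```

The difference of roughly 50 m/s between the two cases shows why an outdated sound speed value translates directly into range errors for sonars and acoustic positioning.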

Magnetometers can be used for localizing ferrous man-made objects like anchors and cannons in historical shipwrecks (Ballard 2008). They are also used to measure the magnetic characteristics of seabed rocks (Tivey et al. 1998). Magnetometers measure the strength, direction, and relative change of magnetic fields. For such measurements to give meaningful spatial representations of seabed features, appropriate sampling rate and navigation of the sensor platform must be selected and planned in compliance with the mission purpose.

9.4 Underwater Navigation Sensors

Acoustic baseline sensors

For several decades, acoustic baseline sensors like long baseline (LBL) and ultrashort baseline (USBL) have been the preferred positioning sensors for underwater operations. These systems measure the time of flight for the signals, and by applying the speed of sound, the range between the vehicle and a transducer is calculated. For LBL navigation, lateration using ranges to two or more transponders is used to determine the vehicle’s horizontal position (Kinsey et al. 2006). USBL also measures the phase shift of the incoming signal to determine the transponder bearing. The result is an XYZ position (3D) derived from range and phase angles. An important advantage of acoustic navigation is that the errors are observable and bounded at the cost of additional infrastructure. LBL navigation requires two or more transponders to be deployed in the operational area, while USBL navigation depends on ship presence in the operational area for the full duration of the mission. For ROV operations, this might be acceptable. However, one of the primary arguments of utilizing AUVs has been to decrease cost through lower dependence on preinstalled infrastructure and ships.
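The LBL lateration step can be sketched as a small nonlinear least-squares problem. The sketch below is one common approach (Gauss–Newton), not necessarily the method of any particular system: it assumes the ranges have already been computed from travel times using the speed of sound, and that the vehicle depth is known from the pressure sensor, so only the horizontal position is estimated. All positions are synthetic.

```python
import math


def lbl_fix(transponders, ranges, depth, guess, iterations=20):
    """Horizontal LBL position fix by Gauss-Newton least squares.

    transponders: list of (x, y, z) positions in metres, z positive down.
    ranges: measured slant ranges (m) to each transponder.
    depth: vehicle depth (m) from the pressure sensor, held fixed.
    guess: initial (x, y); with only two transponders the solution is
           ambiguous and the result depends on which side the guess lies.
    """
    x, y = guess
    for _ in range(iterations):
        a11 = a12 = a22 = b1 = b2 = 0.0
        for (tx, ty, tz), r in zip(transponders, ranges):
            predicted = math.sqrt((x - tx) ** 2 + (y - ty) ** 2 + (depth - tz) ** 2)
            jx, jy = (x - tx) / predicted, (y - ty) / predicted  # d(range)/d(x, y)
            residual = r - predicted
            a11 += jx * jx
            a12 += jx * jy
            a22 += jy * jy
            b1 += jx * residual
            b2 += jy * residual
        det = a11 * a22 - a12 * a12
        if abs(det) < 1e-12:
            raise ValueError("poor transponder geometry")
        # Solve the 2x2 normal equations for the position update.
        dx = (a22 * b1 - a12 * b2) / det
        dy = (a11 * b2 - a12 * b1) / det
        x, y = x + dx, y + dy
        if math.hypot(dx, dy) < 1e-9:
            break
    return x, y


# Synthetic case: three seabed transponders at 100 m depth, vehicle at 50 m.
beacons = [(0.0, 0.0, 100.0), (500.0, 0.0, 100.0), (0.0, 500.0, 100.0)]
true_xy = (200.0, 150.0)
measured = [math.sqrt((true_xy[0] - bx) ** 2 + (true_xy[1] - by) ** 2 + (50.0 - bz) ** 2)
            for bx, by, bz in beacons]
print(lbl_fix(beacons, measured, depth=50.0, guess=(250.0, 250.0)))
```

Because each fix is computed from fresh range measurements, its error does not accumulate over time, which is the bounded-error property of acoustic navigation mentioned above.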

An ADCP can be employed as a Doppler velocity log (DVL), which measures the Doppler shift in the incoming acoustic signal reflected off the seabed (called bottom-track mode) or off acoustic scatterers in the water column (called water-track mode). A DVL uses several transducers pointing in different directions to measure velocities in all three axes (typically four transducers in a so-called Janus configuration) (RDI 2011).
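How four Janus beams yield a three-axis velocity can be sketched as follows. The beam geometry and sign convention are assumptions for illustration (manufacturers differ): beams 1 and 2 tilt toward +x and −x, beams 3 and 4 toward +y and −y, each at an assumed 30° from the vertical (z positive down), and each beam velocity is the projection of the vehicle's velocity over ground onto that beam. The fourth beam is redundant, giving two independent vertical estimates whose difference serves as a consistency check.

```python
import math


def janus_to_body(b1, b2, b3, b4, beam_angle_deg=30.0):
    """Body-frame velocity (u, v, w) and a consistency value from four beams."""
    s = math.sin(math.radians(beam_angle_deg))
    c = math.cos(math.radians(beam_angle_deg))
    u = (b1 - b2) / (2.0 * s)      # opposing beams cancel the vertical part
    v = (b3 - b4) / (2.0 * s)
    w12 = (b1 + b2) / (2.0 * c)    # vertical estimate from beams 1 and 2
    w34 = (b3 + b4) / (2.0 * c)    # vertical estimate from beams 3 and 4
    return u, v, 0.5 * (w12 + w34), w12 - w34


def body_to_janus(u, v, w, beam_angle_deg=30.0):
    """Forward model: project the velocity onto each beam direction."""
    s = math.sin(math.radians(beam_angle_deg))
    c = math.cos(math.radians(beam_angle_deg))
    return (u * s + w * c, -u * s + w * c, v * s + w * c, -v * s + w * c)


beams = body_to_janus(1.2, -0.3, 0.05)
u, v, w, mismatch = janus_to_body(*beams)
print(f"u={u:.3f} v={v:.3f} w={w:.3f} mismatch={mismatch:.1e}")
```

With real data, a mismatch well above the noise level can indicate a blocked beam or a poor bottom lock.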

Pressure sensors

Depth is related to pressure through the density of seawater. Both pressure and density can be observed with high precision, and therefore the pressure sensor is typically the main depth sensor even when acoustic navigation is available. Depth is a fundamental measurement for underwater vehicles and is necessary both for control and for referencing collected data.
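A first-order version of this relation is the hydrostatic equation, depth = (p − p_atm) / (ρ g). The sketch below assumes constant density (1027 kg/m³) and gravity (9.81 m/s²); operational depth equations also account for seawater compressibility and the latitude dependence of gravity.

```python
def depth_from_pressure(p_abs_pa: float, p_atm_pa: float = 101325.0,
                        rho: float = 1027.0, g: float = 9.81) -> float:
    """Approximate depth (m) from absolute pressure using hydrostatics.

    Assumes a constant seawater density and gravity.
    """
    return (p_abs_pa - p_atm_pa) / (rho * g)


# 100 dbar (1e6 Pa) above atmospheric pressure
print(f"{depth_from_pressure(101325.0 + 1e6):.2f} m")
```

This reproduces the familiar rule of thumb that one decibar of pressure corresponds to roughly one metre of depth.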

A heading sensor measures the heading of the vehicle. There are three main concepts for measuring the orientation of the vehicle around the vertical axis: gyrocompassing, which senses the Earth’s rotation; magnetic compassing; and determining the heading vector from the relative position of two or more points (Gade 2018). The first is the most common and accurate for underwater applications, although it relies on expensive and power-hungry sensors. The accuracy of both gyrocompassing and magnetic compassing deteriorates rapidly close to either of the poles, and alternative methods must be used when operating in these areas.
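Why gyrocompassing degrades at high latitudes can be made concrete. A gyrocompass finds north by sensing the horizontal component of the Earth's rotation rate, Ω cos φ at latitude φ, which vanishes at the poles. To first order, an uncompensated gyro bias b about the east axis then produces a heading error of about b / (Ω cos φ) radians. The sketch evaluates this for an illustrative 0.01°/h bias.

```python
import math

EARTH_RATE = 7.2921e-5  # rad/s, rotation rate of the Earth


def gyrocompass_heading_error_deg(east_gyro_bias_deg_per_h: float,
                                  latitude_deg: float) -> float:
    """First-order gyrocompass heading error: east gyro bias / (Omega * cos(lat))."""
    bias_rad_s = math.radians(east_gyro_bias_deg_per_h) / 3600.0
    horizontal_rate = EARTH_RATE * math.cos(math.radians(latitude_deg))
    return math.degrees(bias_rad_s / horizontal_rate)


for lat in (0.0, 60.0, 78.0, 85.0, 89.0):
    print(f"{lat:4.0f} deg N: {gyrocompass_heading_error_deg(0.01, lat):.3f} deg")
```

At about 78° N, roughly the latitude of Svalbard, the error is already almost five times larger than at the equator, and it grows without limit toward the pole.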

Inertial sensors form the basis for most dead-reckoning systems. By integrating measured accelerations and angular rates over time, an inertial navigation system (INS), typically implemented as an observer, provides estimates of the position, orientation, velocity, and acceleration of the vehicle. Errors in the inertial sensors cause the position estimates to drift without bound (Gade 2018). To limit this drift, the INS is typically aided by auxiliary sensors such as a DVL and pressure sensors. However, to bound the error, an external positioning system must be used, such as LBL, USBL, or GNSS.
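The unbounded drift can be illustrated with the simplest error source: a constant accelerometer bias b, integrated twice, gives a position error of about ½·b·t². The sketch integrates only the error states numerically and compares with that expression; the 10⁻³ m/s² bias (about 100 µg) is an illustrative value, and a real INS also suffers from gyro errors, misalignment, and noise.

```python
def bias_position_error(accel_bias: float, duration_s: float, dt: float = 0.01) -> float:
    """Position error from integrating a constant accelerometer bias twice."""
    v_err = 0.0
    x_err = 0.0
    for _ in range(int(round(duration_s / dt))):
        v_err += accel_bias * dt   # first integration: velocity error grows linearly
        x_err += v_err * dt        # second integration: position error grows quadratically
    return x_err


bias = 1e-3  # m/s^2, roughly 100 micro-g (illustrative)
for t in (60.0, 300.0, 600.0):
    print(f"t = {t:5.0f} s: numerical {bias_position_error(bias, t):7.2f} m, "
          f"0.5*b*t^2 = {0.5 * bias * t * t:7.2f} m")
```

Velocity aiding from a DVL prevents the velocity error from growing, so the position error then grows roughly linearly rather than quadratically; only an external position reference bounds it.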

9.5 Spatial and Temporal Resolution and Coverage

Nilssen et al. (2015) proposed a concept for integrated environmental mapping and monitoring (IEMM) based on a holistic environmental monitoring approach adjusted to purpose and object/area of interest. The proposed IEMM concept describes the different steps in such a system, from survey mission to selection of parameters, sensors, sensor platforms, data collection, data storage, analysis, and data interpretation for reliable decision-making. In addition to measurements of essential parameters, the quality of the data interpretation depends on the spatial and temporal resolution and coverage. Hence, the dynamics in both space and time have to be considered in the mission planning process. The orders of magnitude of temporal and spatial resolution and coverage capabilities of relevant technology platforms are shown in Fig. 9.2. The needs for spatial and temporal coverage and resolution will vary depending on the mission purpose (e.g., processes or organisms of different sizes), and different decision-makers such as scientists, authorities, and industry may have individual needs and requirements. The capabilities and limitations of the sensor-carrying platforms (summarized in Tables 9.3 and 9.4), the mission purpose, and the object/area of interest are all of importance.

The accuracy and scale of the process define both the required sensor precision and the necessary navigation accuracy. Processes with time constants longer than 10 years can be considered constant in this context. Processes with time constants between 1 week and 10 years can be documented using repeated surveys forming a time series, while for processes with time constants shorter than 1 week, one can attempt to resolve them within a single operation. Shorter time constants require higher temporal resolution, possibly requiring multiple vehicles or even landers.
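The decision rule above can be written as a small function. The thresholds are the ones stated in the text; the example processes and their time constants are illustrative assumptions only.

```python
from datetime import timedelta

ONE_WEEK = timedelta(weeks=1)
TEN_YEARS = timedelta(days=3652.5)  # approximate, ignoring calendar details


def sampling_strategy(time_constant: timedelta) -> str:
    """Map a process time constant to a survey strategy (thresholds from the text)."""
    if time_constant > TEN_YEARS:
        return "treat as constant"
    if time_constant >= ONE_WEEK:
        return "repeated surveys (time series)"
    return "resolve within a single operation"


# Illustrative processes with assumed time constants
for name, tc in [("seabed topography", timedelta(days=365 * 50)),
                 ("seasonal bloom", timedelta(days=60)),
                 ("diel vertical migration", timedelta(hours=24))]:
    print(f"{name}: {sampling_strategy(tc)}")
```

In a planning tool, the third branch would be the point at which multiple vehicles or stationary landers are considered to reach the required temporal resolution.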

9.6 Autonomy Aspects

The field of autonomy is complex and multidisciplinary. Important aspects to consider are sensing, control theory, optimization, situation awareness, cognitive science, and risk assessment and management. Autonomous systems are often referred to as intelligent systems due to their ability to manage unexpected events in unstructured and unknown environments. Rather than merely mimicking a human operator, this means integrating mathematical models with real-time data from sensors and instruments and letting algorithms running on embedded computer systems optimize the responses.

9.6.1 Autonomy Levels

There are different definitions of autonomy levels, defining the steps from manual or remote control, teleoperation, semiautonomous, to fully autonomous vehicles. The levels of autonomy are characterized subject to the level of human–robot interaction (HRI), mission complexity, and environmental complexity.

1. Automatic operation (remote control): Even though the system operates automatically, the human operator directs and controls all high-level mission planning functions, which are often preprogrammed (human-in-the-loop/human operated).
2. Management by consent (teleoperation): The system automatically makes recommendations for mission actions related to specific functions and prompts the human operator at important points in time for information or decisions. At this level, the system may have limited communication bandwidth, including time delays due to distance. The system can perform many functions independently of human control when delegated to do so (human delegated).
3. Semiautonomous or management by exception: The system automatically executes mission-related functions when response times are too short for human intervention. The human may override or change parameters and cancel or redirect actions within defined timelines. The operator’s attention is brought only to exceptions for certain decisions (human supervisory control).
4. Highly autonomous: The system automatically executes mission-related functions in an unstructured environment with the ability to plan and replan the mission. The human may be informed about the progress. The system is independent and “intelligent” (human-out-of-the-loop).

For more details, see, for example, NIST (2015), National Research Council (2005), Ludvigsen and Sørensen (2016), and Utne et al. (2017).

9.6.2 Control Architecture

Three control levels are defined:

- Mission planner level: Here the mission objective is defined, and the mission is planned. Depending on contingency handling, input from payload sensor data analysis, and any other input from the autonomy layer, the mission may be replanned. This means that the payload data should be analyzed in near real time aboard the sensor-carrying platform.
- Guidance and optimization level: Handles waypoints and reference commands to the controller.
- Control execution level: Here, plant control and actuator control take place.
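The three levels can be illustrated with a deliberately simple, hypothetical loop: a planner that generates a lawnmower pattern of waypoints, line-of-sight guidance that turns the active waypoint into a heading reference, and a saturated proportional heading controller acting on a kinematic vehicle model. None of these specific choices (function names, gains, speed, acceptance radius) come from the chapter, and replanning from payload data is left out for brevity.

```python
import math
from dataclasses import dataclass


@dataclass
class State:
    x: float
    y: float
    psi: float  # heading in radians, measured from the x-axis


def plan_lawnmower(area_width, line_spacing, line_length):
    """Mission planner level: lawnmower survey as a list of waypoints."""
    waypoints = []
    n_lines = int(area_width // line_spacing) + 1
    for i in range(n_lines):
        y = i * line_spacing
        start, end = (0.0, line_length) if i % 2 == 0 else (line_length, 0.0)
        waypoints += [(start, y), (end, y)]
    return waypoints


def los_heading(state, wp):
    """Guidance level: line-of-sight heading reference to the active waypoint."""
    return math.atan2(wp[1] - state.y, wp[0] - state.x)


def yaw_rate_command(state, psi_ref, k_p=1.0, max_rate=0.3):
    """Control execution level: saturated proportional heading controller."""
    err = math.atan2(math.sin(psi_ref - state.psi), math.cos(psi_ref - state.psi))
    return max(-max_rate, min(max_rate, k_p * err))


def simulate(waypoints, speed=1.5, dt=0.1, acceptance=2.0, max_steps=100_000):
    """Run a kinematic vehicle through the three levels; return waypoints reached."""
    s = State(0.0, 0.0, 0.0)
    active = 0
    for _ in range(max_steps):
        if active == len(waypoints):
            break
        wp = waypoints[active]
        if math.hypot(wp[0] - s.x, wp[1] - s.y) < acceptance:
            active += 1  # waypoint accepted; the next planned waypoint becomes active
            continue
        r = yaw_rate_command(s, los_heading(s, wp))
        s.psi += r * dt
        s.x += speed * math.cos(s.psi) * dt
        s.y += speed * math.sin(s.psi) * dt
    return active


waypoints = plan_lawnmower(area_width=40.0, line_spacing=20.0, line_length=100.0)
print(simulate(waypoints), "of", len(waypoints), "waypoints reached")
```

Keeping the levels as separate functions mirrors the layered architecture: a replanning step would only need to replace the waypoint list, without touching guidance or control.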

If the collected data do not satisfy the data request, a new, adjusted data request can be made automatically and fed back to the controller to adjust the sampling area, sampling frequency, and range until the request is satisfied. Such a strategy is pursued in what follows to enable increased levels of autonomy. An example of adaptive sampling using an AUV is shown in Sect. 9.7.3.
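A minimal sketch of this feedback, assuming a hypothetical data request expressed as a minimum number of valid samples per survey cell and a sampling frequency as the adjustable parameter. The `collect` callback stands in for one sampling pass; the 60 s per cell and 70 % valid-sample fraction in `simulated_pass` are invented for illustration.

```python
from typing import Callable, Tuple


def satisfy_data_request(collect: Callable[[float], int], required: int,
                         rate_hz: float, max_rate_hz: float,
                         max_iterations: int = 10) -> Tuple[float, int]:
    """Raise the sampling frequency until a data request is met.

    collect(rate_hz) returns the number of valid samples from one pass.
    The loop stops when the request is satisfied, the platform's maximum
    rate is reached, or the iteration budget runs out.
    """
    count = collect(rate_hz)
    for _ in range(max_iterations):
        if count >= required or rate_hz >= max_rate_hz:
            break
        # Scale the rate by the observed shortfall, with a 10 % margin, capped.
        rate_hz = min(max_rate_hz, rate_hz * 1.1 * required / max(count, 1))
        count = collect(rate_hz)
    return rate_hz, count


def simulated_pass(rate_hz: float) -> int:
    # Hypothetical: 60 s per cell and 70 % of samples pass quality control.
    return round(rate_hz * 60 * 0.7)


print(satisfy_data_request(simulated_pass, required=200, rate_hz=1.0, max_rate_hz=10.0))
print(satisfy_data_request(simulated_pass, required=200, rate_hz=1.0, max_rate_hz=2.0))
```

The second call shows the case where the platform limit is reached first; a mission planner would then have to adjust something else, such as the area or the time spent per cell.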

Mathematical modeling may be approached from a systems perspective, integrating models and knowledge from different domains such as oceanographic, ecosystem, and vessel models. Models of different fidelity will be used for mission design, simulation, real-time monitoring, decision-making, and control. In particular, fast low-fidelity models may be used in conjunction with the real-time control systems, while high-fidelity models may be used in offline studies. The states and parameters of the models may be updated from measurements using established methods from state estimation and system identification. Hence, states and parameters may be estimated from real-time data to adaptively update the models and to detect normal and abnormal changes in the systems or their environment.
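As a small example of such state estimation, the sketch below uses a scalar Kalman filter with a random-walk model to track a slowly varying parameter (for instance, a local current speed) from noisy measurements. The normalized innovation squared (NIS), which averages about 1 when the model fits, is used as a simple indicator of abnormal change. The noise levels, the true values, and the abrupt change are synthetic assumptions.

```python
import random


def random_walk_kalman(measurements, q, r, x0=0.0, p0=1.0):
    """Scalar Kalman filter for a slowly varying parameter.

    q: process noise variance (how fast the parameter may drift per step)
    r: measurement noise variance
    Returns the estimates and the normalized innovation squared per step.
    """
    x, p = x0, p0
    estimates, nis = [], []
    for z in measurements:
        p += q                    # predict: the parameter may drift slowly
        s = p + r                 # innovation variance
        innovation = z - x
        k = p / s                 # Kalman gain
        x += k * innovation       # update estimate
        p *= 1.0 - k              # update error variance
        estimates.append(x)
        nis.append(innovation ** 2 / s)
    return estimates, nis


rng = random.Random(42)
true_value = [0.3] * 500 + [1.0] * 50      # synthetic abrupt change at step 500
z = [v + rng.gauss(0.0, 0.1) for v in true_value]
est, nis = random_walk_kalman(z, q=1e-6, r=0.1 ** 2)
print(f"estimate before change: {est[499]:.3f}")
print(f"NIS at change: {nis[500]:.1f} (about 1 on average when the model fits)")
```

The small process noise makes the estimate smooth but slow to follow the jump, while the NIS spike flags it immediately; this trade-off is typical when the same model serves both for estimation and for change detection.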

Data gathering, including sensor fusion for perception of the environment and any OOI, will integrate imaging sensors such as radar, optics, and acoustics with inertial and navigation sensors for accurate detection and tracking of objects and environmental parameters. For many autonomous sensor-carrying platforms, energy is a limiting factor, since the power demand of the sensors is of the same order as the power needed for propulsion. Hence, a proper strategy for enabling and disabling the payload and navigation sensors is important for obtaining good data, for precise and robust control, and for achieving the desired endurance of the operation.
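The effect of sensor duty cycling on endurance follows from a simple average-power budget. The battery capacity and power figures below are hypothetical and chosen only to show the arithmetic.

```python
def endurance_hours(battery_wh, propulsion_w, hotel_w, sensor_power_w, duty_cycles):
    """Endurance (h) from average power draw.

    sensor_power_w and duty_cycles are parallel sequences; a duty cycle
    of 0.5 means the sensor is powered half of the time.
    """
    if len(sensor_power_w) != len(duty_cycles):
        raise ValueError("one duty cycle per sensor")
    payload_w = sum(p * d for p, d in zip(sensor_power_w, duty_cycles))
    return battery_wh / (propulsion_w + hotel_w + payload_w)


# Hypothetical budget: 1000 Wh battery, 50 W propulsion, 20 W hotel load,
# a 20 W camera with lights and a 10 W echo sounder.
print(endurance_hours(1000, 50, 20, [20, 10], [1.0, 1.0]))   # all sensors on
print(endurance_hours(1000, 50, 20, [20, 10], [0.5, 1.0]))   # camera on half the time
```

Here, halving the camera's on-time extends the mission by about 11 %; because payload power is comparable to propulsion power, such choices matter as much as speed or route selection.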

By combining various control and risk assessment methods, such as nonlinear optimization, hybrid control, Bayesian networks and probabilistic reasoning, and machine learning, the control execution level will be able to accommodate autonomy requirements. Risk and reward are closely related. For example, to record high-quality data, the robot may operate closer to the seabed or OOI while still avoiding collision within reasonable risk margins. For networked systems with simultaneous operations, robotics, and mobile sensor networks, an additional level of agent control needs to be considered. Integrated guidance and path planning with high-level mission planning may be achieved using numerical optimization, where data, decisions, rules, and models are represented as constraints, as well as discrete search algorithms and computational intelligence.
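The risk–reward trade-off for operating altitude can be sketched as a discrete search over candidate altitudes, with the risk margin represented as a constraint. Both models are hypothetical stand-ins: a data-quality reward that decays exponentially with altitude (as light attenuation would suggest for optical sensors) and a collision probability that decays exponentially with clearance. The coefficients have no empirical basis.

```python
import math


def choose_altitude(candidates, attenuation_per_m=0.3, risk_scale_m=0.5,
                    max_risk=0.01, risk_weight=1.0):
    """Altitude maximizing reward - weight * risk, subject to a risk cap.

    Hypothetical models for illustration only:
      reward(h) = exp(-attenuation_per_m * h)   # data quality drops with altitude
      risk(h)   = exp(-h / risk_scale_m)         # collision probability
    Returns None if no candidate satisfies the risk cap.
    """
    best, best_utility = None, -math.inf
    for h in candidates:
        risk = math.exp(-h / risk_scale_m)
        if risk > max_risk:
            continue  # violates the acceptable risk margin
        utility = math.exp(-attenuation_per_m * h) - risk_weight * risk
        if utility > best_utility:
            best, best_utility = h, utility
    return best


altitudes = [0.5 * i for i in range(1, 13)]   # 0.5 m to 6.0 m
print(choose_altitude(altitudes))                  # default risk cap
print(choose_altitude(altitudes, max_risk=0.001))  # stricter risk cap
```

Tightening the acceptable risk pushes the vehicle higher and lowers the expected data quality, which is exactly the coupling between risk and reward described above.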

9.6.3 Risk Aspects

Risk management is crucial for successful operation of sensor-carrying platforms. Increasing the level of autonomy calls for a more systematic approach where more of the risk handling is transferred from the operator to the sensor-carrying platform itself. We may categorize risk for autonomous systems (NIST 2015; National Research Council 2005; Utne et al. 2017) according to three dimensions (Fig. 9.4):

1. Mission complexity.
   - Complexity of mission tasks and subtasks and the corresponding decision mechanisms and rules.
   - Organization of and collaboration between the various actors involved in the operation.
   - Needed performance, including the quality of payload sensor data and the control accuracy of the sensor-carrying platform.
   - Knowledge about the operational area and the environmental and operational conditions.
   - Situational awareness capabilities, which break down into three levels: level 1, perception of the elements in the environment; level 2, comprehension of the current situation; and level 3, projection of the future situation. Situational awareness is crucial for the sensor-carrying platform to achieve the control performance needed for satisfactory data gathering as well as acceptable risk management, for example, collision avoidance, avoiding loss of the sensor-carrying platform, and failure handling.

2. Environmental complexity.
   - Variability in the environment due to, for example, shifting weather conditions (see the trawler “Northguider” accident in Chap. 10).
   - Terrain variation in the areas of interest (flat, steep, etc.).
   - Risk of collision with static and moving objects. Object frequency, density, and intent are important to consider.
   - Particular climate risks due to, for example, low temperature, darkness, and icing.
   - Mobility constraints of the sensor-carrying platform.
   - Communication dependencies between platforms and with the operator. Underwater operations relying on acoustic communication are normally far more limited in bandwidth and range than radio communication in air.

3. Human independence/level of autonomy.
   - Frequency and duration of robot-initiated interactions with the operator.
   - Operator workload and skill levels.

Risk models for autonomous systems (Seto 2013)

9.7 Case Studies

9.7.1 Under-Ice and Polar Night AUV Operations

The sea-ice extent in the Arctic has been severely reduced in recent decades, reaching a record low in September 2012, and all subsequent years rank among the ten lowest sea-ice extents on record (NSIDC 2018) (see Chap. 2). The mean sea-ice thickness has also decreased significantly, from 3.64 m in 1980 to 1.89 m in 2008 (Farmer and Cook 2013). The changing Arctic environment not only influences how humans use the Arctic but also affects the Arctic ecosystem and marine life. For example, under-ice algae and phytoplankton blooms, which are important for the Arctic food chain, are hard to detect using remote sensing; these processes are therefore undersampled and poorly understood (Johnsen et al. 2018).

AUVs are an especially interesting sensor platform for sea-ice data collection due to their autonomous and untethered nature. In addition to collecting data on the biological processes described above, an AUV can be customized with a diverse sensor suite. For example, an AUV can be used for ice monitoring and provide detailed under-ice topography data using an upward-looking (inverted) MBE; such data are important input to decision-making in Arctic marine operations (Norgren 2018). Upward-looking MBE data can also be used as input to the navigation system for ice-relative navigation under drifting sea ice or icebergs, using a technique called Simultaneous Localization and Mapping (SLAM) (Norgren and Skjetne 2018).
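The geometry behind under-ice topography from an upward-looking MBE can be sketched as follows: for a beam at angle θ from the vertical with slant range r, seen from a vehicle at depth z, the ice underside lies at draft d = z - r·cos θ and across-track offset y = r·sin θ. The sketch assumes straight acoustic rays, a level vehicle, and a transducer at the measured depth; real processing would also correct for vehicle attitude, the sound-speed profile, and lever arms.

```python
import numpy as np

def ice_draft_profile(vehicle_depth_m, slant_ranges_m, beam_angles_deg):
    """Convert one upward-looking multibeam ping to an across-track ice-draft profile.

    Assumes straight-line acoustic rays (uniform sound speed), a level vehicle
    (zero roll and pitch), and a transducer at the measured vehicle depth.
    Beam angles are measured from the vertical, positive to starboard.
    Returns (across-track offset, ice draft), both in metres.
    """
    r = np.asarray(slant_ranges_m, dtype=float)
    theta = np.radians(beam_angles_deg)
    offset = r * np.sin(theta)
    draft = vehicle_depth_m - r * np.cos(theta)
    return offset, draft

# Hypothetical ping: vehicle at 20 m depth, a vertical beam and a beam 60 deg to starboard
offset, draft = ice_draft_profile(20.0, [18.0, 36.0], [0.0, 60.0])
print(offset, draft)  # both beams see an ice underside at about 2 m draft
```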

Deploying an AUV under ice greatly increases the risk of losing the vehicle in the event of a failure. Not only does the vehicle require specialized support systems and sensors, but the operators also require special experience and knowledge. An important first step toward operations under drifting and rotating sea ice is operating under landfast sea ice in real Arctic conditions. In the spring of 2017, the REMUS 100 (Figs. 9.5 and 9.6) was deployed under the ice in Van Mijenfjorden, outside the mining village of Svea in Svalbard. The scientific objectives of the campaign were to collect oceanographic data for a related field campaign and to assess the ice-monitoring capabilities of small AUVs.

The REMUS 100, originally developed by the Woods Hole Oceanographic Institution (WHOI), is a small, low-logistics AUV that can easily be deployed and operated by two people. The vehicle is rated to 100 m depth and has a typical endurance of about 6 hours at 1.5 m/s. The REMUS 100 was chosen for this operation because of its robustness and its track record in under-ice operations, including operations under coastal sea ice off Barrow, Alaska (Kukulya et al. 2010; Plueddemann et al. 2012), and under-ice mapping of phytoplankton blooms (Johnsen et al. 2018).

The payload sensor suite consists of an up- and down-looking ADCP/DVL, SSS, fluorometer, oxygen sensor, and Neil Brown CTD (for full specifications, see Norgren 2018). For ice monitoring, the AUV was fitted with an upward-looking Imagenex DeltaT MBE. For navigation, the AUV used a Honeywell HG1700 IMU with ring-laser gyros in an aided inertial navigation system (AINS). External positioning was provided by GPS before launch and by LBL during the missions. To obtain the most accurate positioning possible, the LBL transponders were deployed using an accurate GPS base station providing fixes with an accuracy of ~0.5 m. For safety, the AUV was also equipped with an acoustic tracker with a standalone battery, so that the AUV could be located even in the event of a critical power failure.

Looking toward long-range missions under drifting sea ice, the main lesson learned was the need for a robust and fail-safe navigation system specialized for Arctic under-ice missions. Robust navigation is especially important in these operations because of the risk of losing the vehicle during the surfacing and recovery phases. Figure 9.7 shows the side-scan imagery collected during the recovery phase of one of the under-ice missions; the recovery frame is clearly visible 17.5 m from the centerline. The intention was for the AUV to surface in the recovery frame, but a failure in the DVL significantly reduced the quality of the navigation solution. Furthermore, multipath and noise from the sea ice, caused by the AUV’s proximity to the ice, prevented the LBL system from providing accurate navigation fixes during the recovery phase.
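Why losing the DVL while LBL fixes are corrupted near the ice is so damaging can be illustrated with a deliberately simplified one-dimensional Kalman filter. This is not the REMUS AINS: it assumes a scalar along-track position, DVL speed as the dead-reckoning input, and LBL position fixes screened by an innovation gate, with hypothetical noise values.

```python
def fuse_dvl_lbl(dvl_speed, lbl_fix, dt=1.0, x0=0.0, p0=1.0,
                 q_dvl=0.01, q_dead=1.0, r_lbl=4.0, gate=3.0):
    """Scalar Kalman filter for along-track position (m).

    dvl_speed[k]: DVL speed over ground (m/s), or None during a DVL dropout.
    lbl_fix[k]:   LBL position fix (m), or None if no fix was received.
    During a dropout the last good speed is reused and the process noise rises
    from q_dvl to q_dead (m^2/s), so the position variance grows much faster.
    Fixes whose innovation exceeds `gate` standard deviations are rejected,
    a crude guard against multipath outliers near the ice.
    """
    x, p, last_speed = x0, p0, 0.0
    xs, ps, accepted = [], [], []
    for speed, fix in zip(dvl_speed, lbl_fix):
        # Prediction: dead reckoning with the (last good) DVL speed
        q = q_dvl if speed is not None else q_dead
        if speed is not None:
            last_speed = speed
        x += last_speed * dt
        p += q * dt
        # Update: LBL fix, accepted only if it passes the innovation gate
        ok = False
        if fix is not None:
            innovation = fix - x
            s = p + r_lbl
            if innovation ** 2 <= gate ** 2 * s:
                k_gain = p / s
                x += k_gain * innovation
                p *= 1.0 - k_gain
                ok = True
        xs.append(x)
        ps.append(p)
        accepted.append(ok)
    return xs, ps, accepted

# Hypothetical 100 s run at 1 m/s with an LBL fix every 10 s
n = 100
true_x = [float(k + 1) for k in range(n)]
dvl = [1.0] * n
lbl = [true_x[k] if k % 10 == 9 else None for k in range(n)]
lbl[49] += 50.0                  # simulated multipath outlier
for k in range(60, 80):          # simulated DVL dropout close to the ice, no LBL fixes
    dvl[k] = None
    lbl[k] = None
xs, ps, accepted = fuse_dvl_lbl(dvl, lbl)
print(accepted[49], round(ps[59], 2), round(ps[79], 2))
```

The outlier is rejected, but during the 20 s dropout the position variance grows by 20 m² with no fix to bound it, which is the situation that makes surfacing in a small recovery frame unreliable.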

9.7.2 Marine Archeology

Marine research missions in the Arctic are often initiated by, and strongly coupled with, knowledge gaps that are critical to fill for knowledge-based ocean management. It may often be an advantage to operate during the fall and winter seasons (including the Polar Night), as the visibility in the water is normally better due to less freshwater runoff bringing sediments into the water. Ecosystem-based management models are necessary for understanding and predicting complex and interconnected processes that span different disciplines as well as great temporal and spatial scales. The so-called cultural services, including underwater cultural heritage, are an integral part of marine ecosystems. Until the climate changes seen in recent decades, one of the greatest impacts on marine Arctic ecosystems was the commercial exploitation of marine mammal resources that started with European whaling in the early seventeenth century (Hacquebord 2001). During peak intensity, more than 300 ships hunted whales, walrus, and seals in the areas surrounding Svalbard and Greenland every year (Hacquebord 2010), until the resources neared depletion at the end of the nineteenth century and the enterprises moved to Antarctic waters for even larger-scale industrial whaling. The Arctic whaling industry generated huge profits for European investors, but it entailed great operational risks. More than 1000 historical wrecks from many western European countries are estimated to lie on the seabed between Greenland and the Svalbard archipelago, the majority of them from whaling expeditions that ended abruptly in encounters with crushing ice and harsh weather, often with tragic outcomes (Garcia et al. 2006).
The underwater cultural heritage found on the Arctic seabed is not only direct evidence of the profound anthropogenic impact on marine ecosystems but also a unique and important source of insight into European history. Each ship and its crew can be seen as an isolated miniature society reflecting the economic, political, and cultural conditions of contemporary Europe, and as such these wrecks have great value as complementary sources of historical and archaeological knowledge of their period.

In collaboration with UiT–The Arctic University of Norway, UNIS, and other partners, NTNU AMOS and AUR Lab have integrated marine archaeological activities into the scientific scopes of several research campaigns and other field activities. In 2015 and 2016, the wreck of Figaro was investigated with underwater robotics (Fig. 9.8). Figaro was a wooden-hulled bark built in 1879 that sank in 1908 while being used as a floating whaling station. Preliminary mapping of the wreck site was conducted with a portable AUV (Hydroid REMUS 100 with SSS) and a mini-ROV (Seabotix LBV 200 with HD video and scanning sonar) during a UNIS/NTNU student course in 2015. This provided baseline knowledge of the site for detailed investigations and full mapping with a work-class ROV (Sperre Subfighter 7500) carrying several sensors (HD video, stereo camera, and UHI) when RV Helmer Hansen visited the site on the scientific Polar Night Cruise in January 2016. The results from both investigations are currently being used in a research project on the Figaro and its role as an example of transitional technology between the traditional whaling methods used in the Arctic and the highly specialized factory ships that characterized Antarctic whaling in the first half of the twentieth century. Traditional diver-based marine archaeological methods are seldom feasible at these latitudes, for both logistical and HSE reasons. Access to, and hence the ability to investigate, wrecks in such environments depends on adapting and designing underwater robotics and sensors for both archaeological purposes and Arctic conditions. This is a novel research field that has received focused attention at NTNU AMOS over the last 5 years. To our knowledge, Figaro is currently the world’s northernmost underwater cultural heritage site, and so far the only one in Svalbard, to be scientifically investigated by archaeologists.

The 2016 Polar Night Cruise was a major step forward in demonstrating the operational capabilities of underwater robotics in demanding environmental conditions. An AUV SSS seabed mapping mission in the Dane’s Gat in Smeerenburgfjorden was successfully executed, with launch and retrieval from a small open boat in darkness and subzero temperatures. Likewise, the full ROV-based mapping of the Figaro wreck site was undertaken in January, with the sun mostly more than 12° below the horizon. In addition, the limited runoff from glaciers during midwinter significantly improved the visibility, and in turn the optical imaging capability, compared to the preliminary ROV inspection in early September 2015 (see Chap. 3).

During the same 2016 Polar Night Cruise, biologists and archaeologists made an alarming discovery of wood-devouring organisms on the seabed in Rijpfjorden, an Arctic fjord at 80° N on Nordaustlandet (Kintisch 2016; Berge et al., in prep). Shipworms (Fig. 9.9) and similar organisms are by far the greatest threat to the in situ preservation of historical shipwrecks. The unexpected finding could indicate that the general assumption that the freezing Arctic waters are benign and stable environments for underwater cultural heritage is wrong, or that it is being invalidated by changing properties of the marine ecosystem (Kortsch et al. 2012). The finding therefore underlines the need for more widely scoped trans- and interdisciplinary research in these areas. Underwater robotics and advanced sensors are enabling technologies that can be adapted and tailored to such efforts, providing knowledge for better holistic management of marine ecosystems (Nilssen et al. 2015).

Wood-devouring organisms found on a log of Siberian larch during the Polar Night (January 2016) in Rijpfjorden (Fig. 1.2) at 250 m depth. (Photo: Geir Johnsen)

9.7.3 Adaptive Mapping of Plankton Using AUV

As seen in Sects. 9.5 and 9.6, designing proper mapping or measuring/sampling strategies to be implemented in the mission layer (Fig. 9.3) can be complex if the dynamic processes taking place in the oceans are to be properly reconstructed in time and space. Generally speaking, these dynamic processes may be regarded as undersampled, even when using highly capable dynamic sensor-carrying platforms such as AUVs. Hence, the sampling strategies need to be optimized to ensure that the samples (measurements) are taken where their information value is highest. Figure 9.10 illustrates the mapping of plankton biomass using AUVs with an adaptive or a nonadaptive strategy. The latter does not automatically adjust the trajectory of the AUV to the area of interest. To conduct an adaptive mapping strategy, the AUV must be able to analyze the data online and update its trajectory accordingly toward areas of high biomass concentration. As seen in Fig. 9.3, the AUV will then be subject to a replanning action. To address the need for improved observations in oceanography, adaptive sampling and numerical ocean modeling have been coupled (Fossum et al. 2018). By combining Gaussian process (GP) modeling with onboard robotic autonomy, volumetric measurements of phytoplankton can be made at fine scales, informing studies of patchiness in phytoplankton biomass, biogeochemical processes, and primary productivity.
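A minimal sketch of the adaptive idea above, assuming a one-dimensional transect, a zero-mean GP with a squared-exponential kernel, and hypothetical standardized Chl a values: the GP posterior gives a mean and variance at candidate waypoints, and an upper-confidence-bound (UCB) rule picks the next one. UCB is one common choice of sampling criterion and is used here for illustration; it is not necessarily the criterion used by Fossum et al. (2018).

```python
import numpy as np

def sq_exp_kernel(a, b, sigma2=1.0, length=500.0):
    """Squared-exponential covariance between 1-D position arrays a and b (metres)."""
    d = a[:, None] - b[None, :]
    return sigma2 * np.exp(-0.5 * (d / length) ** 2)

def gp_posterior(x_obs, y_obs, x_query, noise2=0.01, sigma2=1.0, length=500.0):
    """Posterior mean and variance of a zero-mean GP, computed via a Cholesky factor."""
    K = sq_exp_kernel(x_obs, x_obs, sigma2, length) + noise2 * np.eye(len(x_obs))
    Ks = sq_exp_kernel(x_query, x_obs, sigma2, length)
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_obs))
    mean = Ks @ alpha
    v = np.linalg.solve(L, Ks.T)
    var = sigma2 - np.sum(v ** 2, axis=0)
    return mean, np.maximum(var, 0.0)

def next_waypoint(candidates, x_obs, y_obs, beta=2.0):
    """Choose the candidate with the highest upper confidence bound mean + beta * std.

    beta = 0 is purely greedy (go where the biomass estimate is highest);
    larger beta adds exploration of poorly sampled areas.
    """
    mean, var = gp_posterior(x_obs, y_obs, candidates)
    return candidates[np.argmax(mean + beta * np.sqrt(var))]

# Hypothetical standardized Chl a anomalies observed at two positions along a transect
x_obs = np.array([0.0, 1000.0])
y_obs = np.array([0.1, 2.0])
candidates = np.array([0.0, 500.0, 1000.0, 1500.0, 2000.0, 3000.0])
print(next_waypoint(candidates, x_obs, y_obs, beta=0.0))  # greedy: revisit the known high value
print(next_waypoint(candidates, x_obs, y_obs, beta=2.0))  # UCB: explore next to it
```

With beta = 0 the vehicle returns to 1000 m, where the estimate is already well known; with beta = 2 it moves to 1500 m, where biomass is probably high but still uncertain, which is the behavior that distinguishes adaptive from nonadaptive mapping.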

To autonomously map the spatial distribution of phytoplankton biomass in 3D using AUVs, GP models and robotic sampling were employed to provide efficient adaptive sampling strategies. The method estimates and tracks layers of high Chl a concentration, focusing the sampling effort and increasing accuracy along essential biological features such as the subsurface Chl a maximum (SCM). Using spatial modeling and interpolation, the distribution can then be reconstructed in 3D. Comparison with in-field data shows correspondence between the AUV data and behavior, providing a broad and extensive perspective of the pelagic activity.
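The following sketch locates the SCM and its vertical extent in a single AUV profile. It deliberately swaps the GP-based tracking described above for a much simpler heuristic (running-mean smoothing, the depth of the maximum, and a half-maximum layer width); the window, the fraction, and the synthetic profile are assumptions.

```python
import numpy as np

def scm_layer(depth_m, chla, window=3, frac=0.5):
    """Locate the subsurface Chl a maximum (SCM) in one vertical profile.

    A centred running mean over `window` samples suppresses single-sample spikes
    (np.convolve zero-pads, so the outermost samples are biased low).
    Returns (SCM depth, layer top, layer bottom), where the layer is the contiguous
    range around the maximum in which smoothed Chl a >= frac * the maximum.
    """
    depth = np.asarray(depth_m, dtype=float)
    smooth = np.convolve(np.asarray(chla, dtype=float), np.ones(window) / window, mode="same")
    i = int(np.argmax(smooth))
    level = frac * smooth[i]
    top = i
    while top > 0 and smooth[top - 1] >= level:
        top -= 1
    bottom = i
    while bottom < len(smooth) - 1 and smooth[bottom + 1] >= level:
        bottom += 1
    return float(depth[i]), float(depth[top]), float(depth[bottom])

# Synthetic profile: background 0.2 plus a Gaussian peak of 3 at 20 m (hypothetical units)
z = np.arange(0.0, 41.0, 1.0)
profile = 0.2 + 3.0 * np.exp(-0.5 * ((z - 20.0) / 3.0) ** 2)
print(scm_layer(z, profile))  # (20.0, 17.0, 23.0)
```

On a yo-yo trajectory, an estimate like this from each profile could be used to keep the next dives centered on the layer, although the GP approach additionally quantifies the uncertainty of the layer position.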

The method for adaptive mapping in Fossum et al. (2018, 2019) combines marine data from AUVs, model forecasts, remote-sensing satellites, buoys, and ship-based measurements as a means to cross-validate and improve ocean model accuracy. Numerical ocean models (e.g., SINMOD, SINTEF Ocean) are connected to in situ models to provide a basis for the sampling strategy, using ocean temperature. Using hindcast data from the SINMOD ocean model, a stochastic proxy model based on a GP is used to assimilate in situ measurements. The sampling algorithm is both data- and model-driven; it provides input for online sampling and runs onboard the AUV, enabling the vehicle to optimize its path and strategy in real time. Figure 9.11 illustrates the concept.
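To illustrate how model hindcasts can serve as a prior that in situ measurements then correct, the sketch below builds a Gaussian prior over grid cells from hypothetical hindcast output (temporal mean and standard deviation per cell, with an assumed exponential spatial correlation) and conditions it on a single measurement. This is a generic Gaussian (Kalman-type) update, not the SINMOD-based proxy model of Fossum et al.

```python
import numpy as np

def prior_from_hindcast(hindcast, coords, length_m=1000.0):
    """Gaussian prior over grid cells from numerical-model hindcast output.

    hindcast: array (n_times, n_cells) of modelled temperature (hypothetical data)
    coords:   array (n_cells, 2) of cell positions in metres
    Prior mean = temporal mean per cell; covariance = hindcast std in each cell
    combined with an assumed exponential spatial correlation of range length_m.
    """
    mu = hindcast.mean(axis=0)
    sd = hindcast.std(axis=0)
    dist = np.linalg.norm(coords[:, None, :] - coords[None, :, :], axis=-1)
    cov = np.outer(sd, sd) * np.exp(-dist / length_m)
    return mu, cov

def assimilate(mu, cov, cell, value, noise_var=0.01):
    """Condition the Gaussian field on one in situ measurement at index `cell`."""
    gain = cov[:, cell] / (cov[cell, cell] + noise_var)
    mu_new = mu + gain * (value - mu[cell])
    cov_new = cov - np.outer(gain, cov[cell, :])
    return mu_new, cov_new

# Three hypothetical cells along a line and four hindcast snapshots
coords = np.array([[0.0, 0.0], [500.0, 0.0], [5000.0, 0.0]])
hindcast = np.array([[1.0, 1.0, 1.0], [3.0, 3.0, 3.0], [1.0, 1.0, 1.0], [3.0, 3.0, 3.0]])
mu, cov = prior_from_hindcast(hindcast, coords)
mu_post, cov_post = assimilate(mu, cov, cell=0, value=4.0)
print(mu_post.round(2))            # nearby cell pulled up strongly, distant cell barely changed
print(np.diag(cov_post).round(3))  # variance collapses at the sampled cell
```

The posterior variance map is what an onboard planner can use to decide where the next measurement would be most informative.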

In January 2016, UiT, UNIS, and NTNU deployed an autonomous surface vehicle (ASV) to measure the light response of zooplankton at 78° N in Kongsfjorden, Svalbard (Ludvigsen et al. 2018). The ASV was introduced to collect data from a larger part of the water column without anthropogenic light. The Jetyak (Kimball et al. 2014), first deployed by WHOI, is based on a commercially available polyethylene single-person kayak and is fitted with a petrol engine and water-jet propulsion. A control system enables the vehicle to operate autonomously or under remote control. Low-bandwidth communication is provided by a radio frequency modem with a range of up to 20 km. A downward-facing AZFP (Acoustic Zooplankton Fish Profiler, ASL) multifrequency echo sounder provided acoustic data at 125, 200, 455, and 769 kHz; only the three lower frequencies had sufficient range to be used in this study. Owing to its very shallow draft, the Jetyak provided echo sounder readings closer to the surface than the research vessel could. A fiber-optic spectrometer (Ocean Optics QE Pro) was also mounted on the vehicle to measure diffuse sky spectral irradiance. The light levels in the water column were modeled for this study.

During the experiment, the Jetyak was programmed to patrol a predefined transect and covered a total of 54.7 km of survey lines. The system was deployed for 3 days, aiming to collect data around solar noon during the Polar Night. At noon, the sun was 8–9° below the horizon, and the acoustic data from the AZFP showed a 6–8 m vertical movement of zooplankton. Light pollution from the research vessel and from headlamps worn by researchers in small workboats produced clear responses in the acoustic readings, indicating high sensitivity to artificial light. Light avoidance was observed down to 80 m depth. Because the zooplankton community appeared very sensitive to varying levels of illumination, great care was taken not to introduce artificial light that would disturb the natural activity of the zooplankton under ambient light conditions.
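The solar depression at noon can be approximated from latitude and date alone. The sketch below uses a common cosine approximation for the solar declination δ and the noon-elevation relation h = 90° - |φ - δ|; the latitude of 78.9° N for Kongsfjorden and the day numbers are assumptions, and refraction is ignored.

```python
import math

def solar_noon_elevation_deg(latitude_deg, day_of_year):
    """Approximate solar elevation (degrees) at local solar noon.

    Declination from the common cosine approximation
    delta = -23.44 * cos(360/365 * (N + 10)) degrees, with N the day of year,
    and noon elevation h = 90 - |latitude - delta|. Atmospheric refraction and
    the equation of time are ignored, so expect errors of up to about a degree.
    """
    decl = -23.44 * math.cos(math.radians(360.0 / 365.0 * (day_of_year + 10)))
    return 90.0 - abs(latitude_deg - decl)

# Kongsfjorden taken as roughly 78.9 deg N (assumed)
print(round(solar_noon_elevation_deg(78.9, 20), 1))   # about -9.3 (20 January)
print(round(solar_noon_elevation_deg(78.9, 172), 1))  # about 34.5 (21 June)
```

For mid-to-late January at this latitude the approximation gives roughly 9° below the horizon at noon, of the same order as the 8–9° stated above.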

ASVs do not require crew facilities and can hence be made smaller than conventional research vessels, reducing the human footprint. Because they can also avoid artificial light, they may be used to examine processes under natural ambient light conditions (see Chap. 3). However, the Arctic also presents special challenges for unmanned vehicles: logistics for operational support are far sparser, and the communication infrastructure is less developed. Operated from a research vessel, the ASV benefits from the vessel’s facilities and represents a useful complement to it; in the described research campaign, introducing the ASV revealed knowledge hardly obtainable from manned platforms. The architecture of ASV systems further encourages the use of autonomous and data-driven sampling behaviors (Fossum et al. 2019).

9.7.4 Winter Observations in the Barents Sea Using Gliders

An example of how spatial and temporal scales are considered when selecting an observational technology for a scientific application is the comparison between standard ocean sections made from a ship and those made with ocean gliders. Historically, ships have been the workhorse of oceanography, and they still play a primary role in the collection of ocean data. However, ships have limitations, particularly related to operations in high sea states, endurance for measurements on seasonal scales, spatial resolution for resolving small-scale features of the ocean (<1 km), and cost per day. Ocean gliders appear to occupy the same operational space as ships (Fig. 9.2) but are increasingly seen as capable of addressing many of these limitations (Rudnick 2016; Testor et al. 2010), offering greater horizontal resolution and longer endurance, with the capacity to operate in winter. In addition, gliders can operate close to and underneath sea ice (Lee and Thomson 2017) at low risk to ships and personnel. These platforms are therefore well suited to oceanographic observations during the Polar Night.

A program of field campaigns in the Barents Sea during 2018 (Fig. 9.12) aimed to investigate the changing oceanic properties of this critical Arctic inflow region (Chap. 2), from the Polar Night through to summer, in relation to stratification, fronts, and phytoplankton blooms. Standard ocean sampling was conducted from a research vessel during three cruises in January, April, and June, while observations between these cruises were made with a Slocum glider (Webb et al. 2001). The glider’s sensor package comprised a CT sensor and sensors measuring the concentrations of dissolved oxygen, Chl a, cDOM, and total suspended matter (Chap. 3). The glider was able to dive to 200 m, and the mission plan was to observe along north-south transects in the open-water region of the southern Barents Sea.

Figure 9.13 shows one north-south section collected from January 11th to 26th, 2018. Glider profiles are typically spaced at 1 km intervals, and the entire section comprises about 470 dives. The data show remnant stratification by salinity in the southern part of the section and a more uniformly mixed water column to the north. There is also a south-to-north gradient in temperature as the glider approaches an oceanographic structure called the Polar Front, which separates the warmer, more Atlantic waters to the south from the colder, fresher Arctic waters to the north. Data from the fluorometer show that the phytoplankton community is below detectable levels along the entire transect. The detail revealed in horizontal and vertical density gradients is far greater than can be obtained with a ship and allows the relationship between ocean structure and biological response to be investigated thoroughly along repeatable sections over seasonal timescales.
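Stratification such as the remnant salinity stratification described above is commonly quantified by the squared buoyancy frequency, N² = -(g/ρ0) ∂ρ/∂z with z positive upward. The sketch below uses a linearized equation of state with assumed constant coefficients; real glider processing would use TEOS-10 (for example, the GSW toolbox), and the profiles are hypothetical.

```python
import numpy as np

G = 9.81        # gravitational acceleration (m/s^2)
RHO0 = 1027.0   # reference density (kg/m^3)
ALPHA = 8e-5    # thermal expansion coefficient (1/K); assumed constant, rough value for cold water
BETA = 7.8e-4   # haline contraction coefficient (1/(g/kg)); assumed constant

def linear_density(temp_c, salinity, t0=2.0, s0=34.8):
    """Linearized equation of state, a crude stand-in for TEOS-10."""
    return RHO0 * (1.0 - ALPHA * (temp_c - t0) + BETA * (salinity - s0))

def buoyancy_frequency_sq(depth_m, temp_c, salinity):
    """Squared buoyancy frequency N^2 (1/s^2) between consecutive samples.

    With depth increasing downward, N^2 = (g / rho0) * d(rho)/d(depth);
    N^2 > 0 means stable stratification, N^2 close to 0 a well-mixed column.
    """
    depth = np.asarray(depth_m, dtype=float)
    rho = linear_density(np.asarray(temp_c, dtype=float), np.asarray(salinity, dtype=float))
    return G / RHO0 * np.diff(rho) / np.diff(depth)

# Hypothetical profiles: salinity-stratified (south) versus well mixed (north)
depth = [0.0, 10.0, 20.0]
n2_south = buoyancy_frequency_sq(depth, [2.0, 2.0, 2.0], [34.0, 34.5, 34.6])
n2_north = buoyancy_frequency_sq(depth, [1.0, 1.0, 1.0], [34.9, 34.9, 34.9])
print(n2_south, n2_north)
```

Computed along every glider profile, N² turns a section like Fig. 9.13 into a map of where the water column is stratified, which can then be compared directly with the fluorescence data.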

9.8 Safe and Efficient Operations

The Arctic environment is characterized by a lack of infrastructure and harsh conditions. Any incident in the Arctic will potentially have greater consequences than a comparable incident in more developed areas, because all response assets have longer response times and higher costs. For medical incidents, support will be far away, requiring long-distance travel; for technical incidents, challenging logistics make it hard to provide instruments, tools, or spares for situations that were not planned or foreseen (see Chap. 10). The harsh environment thus imposes tough requirements on operational support.

A well-defined mission objective is important when defining a data acquisition operation. The mission objective should contain a research question to be addressed. In Arctic natural science, the investigation site is often given by the process studied and the objective of the research. Together, these form the basis for determining the parameters and variables of interest in the operation and their associated requirements, such as accuracy, spatial and temporal resolution, spatial and temporal coverage, timing, and position accuracy (Nilssen et al. 2015). From these, an appropriate platform and instrument suite can be determined.

In further planning, the research location is considered in terms of bathymetry, current and weather conditions, and marine traffic. Prior information about the area and the research subject is taken into account and incorporated into the plan. An operational procedure is laid out, including sequential steps such as mobilization, testing, transit, launch, mapping, recovery, and demobilization. The level of detail of the plan is adjusted to the complexity and risk of the operation; high-risk and complex operations require a more detailed operational procedure. Before the operation commences, there should be a clear plan for data processing and analysis, as this often sets important premises for the data acquisition.

Having established the operational procedure, the five-step risk management method can be applied to reduce risk (DNVGL 2001, RP-H101 Risk management in marine and subsea operations). Risk is defined as the product of the probability of occurrence and the consequence. This includes HSE, project, and operational risks. The first step is to establish a process plan that contains an HSE philosophy. An example of such a strategy could be: “Plan for safe and efficient operations, maintain focus on feasibility, and optimize planning of the marine operation by reducing delay, improving ship time utilization, and minimizing cost.” A goal can be to perform marine operations with no incidents, accidents, or losses. This should be achieved through systematic risk identification and reduction, planning according to recognized standards, good coordination of the involved operations, and the presence of qualified personnel.

To be able to manage the risk in the operation, acceptance criteria should be established in step two. This includes defining categories of consequences and compiling these into risk categories. A common criterion is to comply with the ALARP principle (As Low As Reasonably Practicable). In step three, the risk of the operation is categorized and assessed, often using a method called HAZID (HAZard IDentification). Based on an overall description of the planned operation, undesirable consequences and hazards in the operation are identified and listed in a risk register.
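Steps one to three can be illustrated with a small risk register in which risk is scored as probability × consequence and mapped to acceptance categories. The 5 × 5 scales, the thresholds separating acceptable, ALARP, and unacceptable risk, and the example hazards are all hypothetical; they are not taken from DNVGL RP-H101.

```python
from dataclasses import dataclass

# Hypothetical acceptance criteria on a 5 x 5 matrix (1 = lowest, 5 = highest)
ACCEPTABLE_MAX = 4    # score <= 4: broadly acceptable
INTOLERABLE_MIN = 15  # score >= 15: unacceptable; in between: reduce to ALARP

@dataclass(frozen=True)
class Hazard:
    description: str
    probability: int  # 1 (very unlikely) .. 5 (very likely)
    consequence: int  # 1 (negligible) .. 5 (catastrophic)

    def __post_init__(self):
        for value in (self.probability, self.consequence):
            if not 1 <= value <= 5:
                raise ValueError("probability and consequence must be in 1..5")

    @property
    def score(self) -> int:
        return self.probability * self.consequence  # risk = probability x consequence

    @property
    def category(self) -> str:
        if self.score <= ACCEPTABLE_MAX:
            return "acceptable"
        if self.score >= INTOLERABLE_MIN:
            return "unacceptable"
        return "ALARP"

def risk_register(hazards):
    """Rows of (description, score, category), highest risk first."""
    rows = [(h.description, h.score, h.category) for h in hazards]
    return sorted(rows, key=lambda row: row[1], reverse=True)

register = risk_register([
    Hazard("AUV lost under ice during recovery", 2, 5),
    Hazard("AUV collides with ice keel", 4, 4),
    Hazard("Minor equipment damage on deck", 2, 2),
])
for row in register:
    print(row)
```

Sorting the register by score gives a natural order for the risk-reducing activities in the final step; any "unacceptable" entry would need mitigation before the operation proceeds.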

Step four concerns risk identification based on a detailed procedure of the planned activities; common tools are SJA (Safe Job Analysis) and HAZOP (HAZard and OPerability Analysis). The SJA is used to analyze activities systematically and to establish risk management and preparedness (see Table 9.5). The final step of risk management comprises the risk-reducing activities, including a feasibility assessment.

References

ASL (2016) AZFP (acoustic zooplankton fish profiler) operator’s manual. ASL Environmental Sciences

Ballard RD (2008) Archaeological oceanography. Princeton University Press, US

Bassett C, Weber TC, Wilson C, De Robertis A (2016) Potential for broadband acoustics to improve stock assessment surveys of midwater fishes. J Acoust Soc Am 140(4):3242–3243

Bellingham JG (2014) Have robot, will travel. Meth Oceanogr 10:5–20

Benoit D, Simard Y, Gagné J, Geoffroy M, Fortier L (2010) From polar night to midnight sun: photoperiod, seal predation, and the diel vertical migrations of polar cod (Boreogadus saida) under landfast ice in the Arctic Ocean. Polar Biol 33(11):1505–1520

Berge J, Cottier F, Last KS, Varpe Ø, Leu E, Søreide J, Eiane K, Falk-Petersen S, Willis K, Nygård H, Vogedes D (2009) Diel vertical migration of Arctic zooplankton during the polar night. Biol Lett 5(1):69–72

Berge J, Båtnes AS, Johnsen G, Blackwell SM, Moline MA (2012) Bioluminescence in the high Arctic during the polar night. Mar Biol 159(1):231–237

Bingham B, Foley B, Singh H, Camilli R, Delaporta K, Eustice R, Mallios A, Mindell D, Roman C, Sakellariou D (2010) Robotic tools for deep water archaeology: surveying an ancient shipwreck with an autonomous underwater vehicle. J Field Robot 27(6):702–717

Brighenti A (1990) Parametric analysis of the configuration of autonomous underwater vehicles. IEEE J Oceanic Eng 15(3):179–188

Chave R, Buermans J, Lemon DD, Taylor CJ, Lembke C, DeCollibus C, Saba GK, Reiss C (2018) Adapting multi-frequency echo-sounders for operation on autonomous vehicles. In: MTS/IEEE Oceans. Charleston, USA, pp 1–6

Darnis G, Hobbs L, Geoffroy M, Grenvald JC, Renaud PE, Berge J, Cottier F, Kristiansen S, Daase ME, Søreide J, Wold A (2017) From polar night to midnight sun: diel vertical migration, metabolism and biogeochemical role of zooplankton in a high Arctic fjord (Kongsfjorden, Svalbard). Limnol Oceanogr 62(4):1586–1605

Deines KL (1999) Backscatter estimation using broadband acoustic Doppler current profilers. In: IEEE conference on current measurement (Cat. No. 99CH36331), pp 249–253

Doneus M, Doneus N, Briese C, Pregesbauer M, Mandlburger G, Verhoeven G (2013) Airborne laser bathymetry – detecting and recording submerged archaeological sites from the air. J Archaeol Sci 40(4):2136–2151

Dukan F, Sørensen AJ (2014) Sea floor geometry approximation and altitude control of ROVs. Contr Eng Pract (CEP) 29:135–146

Farmer GT, Cook J (2013) Climate change trends. In: Climate change science: a modern synthesis, vol 1. Springer, Dordrecht, pp 97–133

Fernandes DA, Sørensen AJ, Pettersen KY, Donha DC (2015) Output feedback motion control system for observation class ROVs based on a high-gain state observer: theoretical and experimental results. IFAC J Control Eng Pract (CEP) 39:90–102

Fossum TO, Eidsvik J, Ellingsen I, Alver MO, Fragoso GM, Johnsen G, Ludvigsen M, Rajan K (2018) Information-driven robotic sampling in the Coastal Ocean. J Field Robot 35(7):1101–1121

Fossum TO, Fragoso GM, Davies EJ, Ullgren JE, Mendes R, Johnsen G, Ellingsen I, Eidsvik J, Ludvigsen M, Rajan K (2019) Toward adaptive robotic sampling of phytoplankton in the coastal ocean. Sci Robot 4:eaav3041

Gade K (2018) Inertial navigation – theory and applications. PhD thesis. Department of Engineering Cybernetics, NTNU

Garcia EG, Ragnarsson S, Steingrimsson S, Nævestad D, Haraldsson H, Fosså J, Tendal O, Eiriksson H (2006) Bottom trawling and scallop dredging in the Arctic: impacts of fishing on non-target species, vulnerable habitats, and cultural heritage. Nordic Council of Ministers, Copenhagen

Geoffroy M, Cottier FR, Berge J, Inall ME (2016) AUV-based acoustic observations of the distribution and patchiness of pelagic scattering layers during midnight sun. ICES J Mar Sci 74(9):2342–2353

Geoffroy M, Daase M, Cusa M, Darnis G, Graeve M, Hernandez NS, Berge J, Renaud PE, Cottier F, Falk-Petersen S (2019) Mesopelagic sound scattering layers of the high Arctic: seasonal variations in biomass, species assemblages, and trophic relationships. Frontiers in Marine Science 6:364. https://doi.org/10.3389/fmars.2019.00364

Hacquebord L (2001) Three centuries of whaling and walrus hunting in Svalbard and its impact on the Arctic ecosystem. Environ History 7(2):169–185

Hacquebord L (2010) Op walvisjacht naar Spitsbergen. Een hachelijke onderneming in de Noordelijke IJszee. Walburg Pers, pp 1774–1778

Hagen PE, Hegrenæs Ø, Jalving B, Midtgaard Ø, Wiig M, Hagen OK (2009) Making AUVs truly autonomous underwater vehicles. In: Inzartsev AV (ed) Underwater vehicles. InTech, Vienna, pp 129–152. ISBN 978-953-7619-49-7

Hansen RE (2011) Introduction to synthetic aperture sonar. In: Kolev PN (ed) Sonar systems. Intechopen, Rijeka, pp 3–28

Haury LR, McGowan JA, Wiebe PH (1978) Patterns and processes in the time-space scales of plankton distributions. In: Spatial pattern in plankton communities. Springer, Boston, pp 277–327

Johnsen G, Volent Z, Dierssen H, Pettersen R, Van Ardelan M, Søreide F, Fearns P, Ludvigsen M, Moline MA (2013) Underwater hyperspectral imagery to create biogeochemical maps of seafloor properties. In: Watson J, Zielinski O (eds) Subsea optics and imaging. Woodhead Publishing Series in Electronic and Optical Materials. Woodhead Publishing

Johnsen G, Norli M, Moline MA, Robbins I, von Quillfeldt C, Sørensen K, Cottier F, Berge J (2018) The advective origin of an under-ice spring bloom in the Arctic Ocean using multiple observational platforms. Polar Biol 41:1197–1216. https://doi.org/10.1007/s00300-018-2278-5

Kimball P, Bailey J, Das S, Geyer R, Harrison T, Kunz C, Manganini K, Mankoff K, Samuelson K, Sayre-McCord T, Straneo F, Traykovski P, Singh H (2014) The WHOI Jetyak: an autonomous surface vehicle for oceanographic research in shallow or dangerous waters. In: 2014 IEEE/OES Autonomous Underwater Vehicles (AUV)

Kinsey JC, Eustice RM, Whitcomb LL (2006) A survey of underwater vehicle navigation: recent advances and new challenges. In: Manoeuvering and control of marine craft. Lisbon, Portugal, pp 1–12

Kintisch E (2016) Arctic shipworm discovery alarms archaeologists. Science 351(6276):901

Korneliussen RJ, Berger L, Campanella F, Chu D, Demer D, De Robertis A, Domokos R (2018) Acoustic target classification. ICES Cooperative Research Report No. 344. https://doi.org/10.17895/ices.pub.4567

Kortsch S, Primicerio R, Beuchel F, Renaud PE, Rodrigues J, Lonne OJ, Gulliksen B (2012) Climate-driven regime shifts in Arctic marine benthos. Proc Natl Acad Sci U S A 109(35):14052–14057. https://doi.org/10.1073/pnas.1207509109

Kukulya A, Plueddemann A, Austin T, Stokey R, Purcell M, Allen B, Littlefield R, Freitag L, Koski P, Gallimore E, Kemp J, Newhall K, Pietro J (2010) Under-ice operations with a REMUS-100 AUV in the Arctic. In: Proceedings of the IEEE/OES Autonomous Underwater Vehicles (AUV) conference, Monterey, CA, USA, pp 1–8

L-3 Communications (2000) Multibeam sonar theory of operation. SeaBeam Instruments, USA, pp 1–107

Lee CM, Thomson J, Marginal Ice Zone and Arctic Sea State Teams (2017) An autonomous approach to observing the seasonal ice zone in the western Arctic. Oceanography 30:56–68

Ludvigsen M, Johnsen G, Sørensen AJ, Lågstad PA, Ødegård Ø (2014) Scientific operations combining ROV and AUV in the Trondheim Fjord. Mar Technol Soc J 48:59–71

Ludvigsen M, Sørensen AJ (2016) Towards integrated autonomous underwater operations for ocean mapping and monitoring. Annu Rev Control 42:1–13

Ludvigsen M, Berge J, Geoffroy M, Cohen JH, De La Torre PR, Nornes SM, Singh H, Sørensen AJ, Daase M, Johnsen G (2018) Use of an autonomous surface vehicle reveals small-scale diel vertical migrations of zooplankton and susceptibility to light pollution under low solar irradiance. Sci Adv 4(1):eaap9887

Mair JA, Jamieson J, Tena I, Evans J (2010) Autonomous vehicle qualification demonstrates potential for a game change. In: Offshore Technology Conference, Houston, Texas

Moline MA, Blackwell SM, Von Alt C, Allen B, Austin T, Case J, Forrester N, Goldsborough R, Purcell M, Stokey R (2005) Remote environmental monitoring units: an autonomous vehicle for characterizing coastal environments. J Atmos Ocean Technol 22:1797–1808

Moline MA, Benoit-Bird K, O’Gorman D, Robbins IC (2015) Integration of scientific echo sounders with an adaptable autonomous vehicle to extend our understanding of animals from the surface to the bathypelagic. J Atmos Ocean Technol 32:2173–2186

National Research Council (2005) Autonomous vehicles in support of naval operations. Committee on Autonomous Vehicles in Support of Naval Operations. National Academies Press, Washington, DC. ISBN 0-309-55115-3

Nilssen I, Ødegård Ø, Sørensen AJ, Johnsen G, Moline MA, Berge J (2015) Integrated environmental mapping and monitoring, a methodological approach to optimise knowledge gathering and sampling strategy. Mar Pollut Bull 96:374–383. https://doi.org/10.1016/j.marpolbul.2015.04.045

NIST (2015) Retrieved 18th March. http://www.nist.gov/el/isd/ks/autonomy_levels.cfm

Norgren P (2018) Autonomous underwater vehicles in Arctic marine operations: Arctic marine research and ice monitoring. PhD thesis, Norwegian University of Science and Technology

Norgren P, Skjetne R (2018) A multibeam-based SLAM algorithm for iceberg mapping using AUVs. IEEE Access 6:26318–26337

Nornes SM, Ludvigsen M, Ødegård Ø, Sørensen AJ (2015) Underwater photogrammetric mapping of an intact standing steel wreck with ROV. In: Proceedings of the IFAC Workshop on Navigation, Guidance and Control of Underwater Vehicles, Girona, Spain, 28–30 April 2015

NSIDC – National Snow and Ice Data Center (2018) Arctic sea ice extent arrives at its minimum. http://nsidc.org/arcticseaicenews/2018/09/arctic-sea-ice-extent-arrives-at-its-minimum/. Accessed 8 Mar 2019

Pizarro O, Singh H (2003) Towards large-area mosaicing for underwater scientific applications. IEEE J Ocean Eng 28:651–672

Plueddemann AJ, Kukulya AL, Stokey R, Freitag L (2012) Autonomous underwater vehicle operations beneath coastal sea ice. IEEE/ASME Trans Mechatronics 17:54–64

Quinn R, Dean M, Lawrence M, Liscoe S, Boland D (2005) Backscatter responses and resolution considerations in archaeological side-scan sonar surveys: a control experiment. J Archaeol Sci 32:1252–1264

RDI (2011) Acoustic Doppler current profiler: principles of operation: a practical primer. Teledyne RD Instruments, USA, pp 1–62

Ribas D, Ridao P, Tardós JD, Neira J (2008) Underwater SLAM in man-made structured environments. J Field Robot 25:898–921

Rudnick DL (2016) Ocean research enabled by underwater gliders. Annu Rev Mar Sci 8:519–541

Seto ML (2013) Marine robot autonomy. Springer, New York. ISBN 978-1-4614-5658-2

Singh H, Bellingham JG, Hover F, Lerner S, Moran BA, von der Heydt K, Yoerger D (2001) Docking for an Autonomous Ocean Sampling Network. IEEE J Ocean Eng 26:498–514

Sørensen AJ, Dukan F, Ludvigsen M, Fernandes DA, Candeloro M (2012) Development of dynamic positioning and tracking system for the ROV Minerva. In: Roberts G, Sutton R (eds) Further advances in unmanned marine vehicles. IET, UK, pp 113–128

Sotzing CC, Lane DM (2010) Improving the coordination efficiency of limited-communication multi-autonomous underwater vehicle operations using a multiagent architecture. J Field Robot 27:412–429

Testor P, Meyers G, Pattiaratchi C, Bachmayer R, Hayes DR, Pouliquen S, Villeon LP et al (2010) Gliders as a component of future observing systems. In: Proceedings of OceanObs’09

Tivey MA, Johnson HP, Bradley A, Yoerger D (1998) Thickness of a submarine lava flow determined from near-bottom magnetic field mapping by autonomous underwater vehicle. Geophys Res Lett 25:805–808

Utne IB, Sørensen AJ, Schjølberg I (2017) Risk management of autonomous marine systems and operations. In: Proceedings of the 36th International Conference on Ocean, Offshore and Arctic Engineering (OMAE 2017), Trondheim, Norway, 25–30 June 2017. Paper OMAE2017-61645

Webb DC, Simonetti PJ, Jones CP (2001) SLOCUM: An underwater glider propelled by environmental energy. IEEE J Ocean Eng 26:447–452

Williams SB, Pizarro O, Jakuba M, Johnson CR, Barrett NS, Babcock RC, Kendrick GA, Steinberg PD, Heyward AJ, Doherty PJ, Mahon IJ, Johnson-Roberson M, Steinberg D, Friedman AL (2012) Monitoring of benthic reference sites: using an autonomous underwater vehicle. IEEE Robot Autom Mag 19:73–84

Williams G, Maksym T, Wilkinson J, Kunz C, Murphy C, Kimball P, Singh H (2015) Mapping ice thickness and extreme deformation of Antarctic sea ice from an autonomous underwater vehicle. Nat Geosci 8:61–67

Yamafune K, Torres R, Castro F (2017) Multi-image photogrammetry to record and reconstruct underwater shipwreck sites. J Archaeol Method Theory 24:703–725


© 2020 Springer Nature Switzerland AG

About this chapter

Cite this chapter

Sørensen, A.J., Ludvigsen, M., Norgren, P., Ødegård, Ø., Cottier, F. (2020). Sensor-Carrying Platforms. In: Berge, J., Johnsen, G., Cohen, J. (eds) POLAR NIGHT Marine Ecology. Advances in Polar Ecology, vol 4. Springer, Cham. https://doi.org/10.1007/978-3-030-33208-2_9

DOI: https://doi.org/10.1007/978-3-030-33208-2_9

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-33207-5

Online ISBN: 978-3-030-33208-2

eBook Packages: Biomedical and Life Sciences (R0)