Abstract

Eye-tracking technology makes it possible to capture visual behavior in real time and provides insights into cognitive processes and autonomic function by measuring gaze position and pupillary response to delivered stimuli. In recent years, the development of easy-to-use devices has led to a large increase in the use of eye-tracking in a broad spectrum of applications, e.g. clinical diagnostics and psychological research. Given the lack of extensive material characterizing the performance of different eye-trackers, especially latest-generation devices, the present study aimed at comparing a screen-mounted eye-tracker (remote) and a pair of wearable eye-tracking glasses (mobile). Seventeen healthy subjects were asked to look at a moving target on a screen for 90 s, while point of regard (POR) and pupil diameter (PD) were recorded by the two devices at a sampling rate of 30 Hz. Data were first preprocessed to remove artifacts; correlation coefficients (for both signals) and magnitude-squared coherence (for PD) were then calculated to assess signal agreement in the time and frequency domains. POR measurements from the remote and mobile devices were highly comparable (ρ > 0.75). PD showed lower correlation and greater dispersion (ρ > 0.50), as well as a higher number of invalid samples from the mobile device than from the remote one. The results provide evidence that the two instruments share the same content at the level of information generally used to characterize subjects’ behavioral and physiological reactions. Future analyses of additional features and of devices with higher sampling frequencies are planned to further support their clinical use.

1 Introduction

Inspection of the world through vision is crucial for gathering the information individuals need for visual perception. Moreover, the eyes and their movements are known to play a key role in the expression of a person’s desires, needs, cognitive processes, emotional states, and interpersonal relations [1]. Hence, recording and measuring visual behavior is of particular importance. Eye-tracking is a recently devised technology that captures visual behavior in real time and yields the gaze position within stimuli [2]. Specifically, it can provide moment-to-moment measures of the focus of attention underlying cognitive processes, i.e. eye gaze measures, and an evaluation of autonomic function, i.e. pupillary response [3].

Traditionally, eye-tracking relied on invasive and uncomfortable techniques involving supplemental components, such as scleral search coils [4] or electro-oculography [5]. Because of the physical constraints imposed on the subjects, these techniques were confined to specific experimental conditions and thus limited in use. The need to overcome these limitations, together with the increase in the computational power and graphics capabilities of standard PCs, led to the development of video-based measurements by means of infrared cameras, which are increasingly less intrusive and more accurate [6]. Such devices can be remote (table- or screen-mounted) or mobile, i.e. following the subject’s natural head movement (head- or eyeglass-mounted). The evolution towards easy-to-use eye-tracking technologies is providing devices suitable for a wide range of applications and fields, e.g. psychological research [7], biomarker identification for clinical diagnostics [8], and medical decision-making [9].

Given the broad spectrum of applications and their further implications, it is extremely important to assess the accuracy with which eye movement and/or pupil size are measured. Even though some previous studies hinted at possible measurement differences among eye-tracking devices [10, 11], there is no extensive material characterizing and comparing the performance of different eye-trackers, especially for latest-generation devices such as wearable eyeglasses. For this reason, the present study aimed at comparing two recent eye-tracking devices, specifically a screen-mounted eye-tracker and a pair of wearable eye-tracking glasses (i.e. a remote and a mobile device, respectively). The two eye-trackers were tested simultaneously during an experimental protocol in which subjects were asked to look at a moving target on a computer screen. The recorded signals consisted of the point of regard (POR) x and y coordinates and the pupil diameter (PD), separately for the left and right eye. Data were analyzed in both the time and frequency domains to evaluate the devices’ performance and their degree of agreement, as described in the following sections.

2 Methods

2.1 Materials

Two different eye-tracking systems were compared in this experiment: the SMI REDn eye-tracker and the SMI Eye Tracking Glasses 2 Wireless, hereafter referred to as the remote and mobile eye-trackers, respectively. The remote eye-tracker is a USB-powered, ultra-light portable camera, usually screen-mounted, with a sampling frequency of 30 or 60 Hz. It automatically compensates for head movements and can also be used comfortably by subjects wearing eyeglasses. Data are visualized on the PC with dedicated software (iViewRED). The mobile eye-tracker is a non-invasive device designed to be worn like a normal pair of glasses and records data at a sampling frequency of up to 60 Hz. Thanks to automatic parallax compensation, it is claimed to provide reliable real-time data. In this experiment, it was connected to an Android smartphone and interfaced with the PC through dedicated software, iViewETG Recording Device.

2.2 Experimental Protocol

Seventeen healthy volunteers aged between 20 and 35 years (10 males and 7 females) were recruited for the present study. All measurements were performed at PHEEL (Physiology Emotion Experience Laboratory), a multi-departmental laboratory of Politecnico di Milano. The subjects had no medical conditions such as epilepsy or colour blindness. Visual impairments, e.g. myopia, were corrected with contact lenses where present, to facilitate coupling with both instruments. All recruited volunteers were welcomed and briefly instructed about the experiment, and were asked to sign a written consent form before taking part in the trial. Subjects were comfortably seated in front of a PC monitor, which was used for stimulus delivery.

The experimental protocol was designed using SMI Experiment Center, a proprietary software package that supports the entire trial workflow from experimental design to data analysis. This tool makes it possible to tailor stimulus presentation on the screen to the desired specifications. First, both devices were calibrated: the remote eye-tracker underwent an automatic 9-point calibration followed by a 4-point validation, whereas the mobile eye-tracker was manually tuned from the smartphone by means of a 3-point calibration, using an image projected on the PC screen. After calibration and preparation of the devices, the volunteer watched a neutral image for 1 min. The neutral image was included in the protocol for physiological reasons, as it allows subjects to relax and rest their eyes. Then, each participant was asked to follow the path of a moving target on the screen by changing the direction of gaze while keeping the head as still as possible. The target initially appeared and disappeared in different sections of the screen; subsequently, it moved at constant speed along the sides and the diagonal of the monitor to induce smooth pursuit. The task lasted 90 s.

Preliminary data acquisition showed extremely non-uniform sampling at a nominal rate of 60 Hz. We therefore chose to acquire data at 30 Hz, where the non-uniformity was less evident. Moreover, this sampling rate appears adequate for a general characterization of the devices.

2.3 Data Processing

Both POR and PD signals were processed in the Matlab environment. As stated above, the time interval between consecutive samples was not constant. Thus, signals were resampled using the Piecewise Cubic Hermite Interpolating Polynomial (PCHIP) method. This technique fits a cubic polynomial on each interval between nodes and chooses the slopes at the nodes so that the shape of the data is preserved; the interpolated curve therefore follows the existing samples more closely than curves computed with other polynomial interpolators [12].
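The resampling step can be illustrated with a minimal sketch. It is written in Python with SciPy rather than Matlab, so it is a stand-in for the authors' processing, not their code. The function name `resample_uniform`, the synthetic jittered timestamps, and the choice to start the uniform grid at the first recorded timestamp are all assumptions made for illustration.

```python
import numpy as np
from scipy.interpolate import PchipInterpolator


def resample_uniform(t, x, fs=30.0):
    """Resample an irregularly sampled signal onto a uniform grid with PCHIP.

    t  : sample timestamps in seconds (strictly increasing)
    x  : sample values
    fs : target sampling rate in Hz (30 Hz, the nominal rate in the text)
    """
    t = np.asarray(t, dtype=float)
    x = np.asarray(x, dtype=float)
    # Uniform time axis covering the recorded interval.
    n = int(np.floor((t[-1] - t[0]) * fs)) + 1
    t_uniform = t[0] + np.arange(n) / fs
    return t_uniform, PchipInterpolator(t, x)(t_uniform)


# Hypothetical example: jittered timestamps around a nominal 30 Hz rate.
rng = np.random.default_rng(0)
t_raw = np.cumsum(rng.uniform(0.02, 0.05, size=200))
x_raw = np.sin(2 * np.pi * 0.5 * t_raw)
t_u, x_u = resample_uniform(t_raw, x_raw)
```

Because PCHIP is shape-preserving, the resampled values stay within the range of the neighbouring original samples, which is convenient for signals such as PD that have hard physiological bounds.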

A qualitative analysis of the signals showed, as expected, a large number of blinks and high-frequency oscillations, hence the need for artifact removal.

First, a simple thresholding process based on physiological and physical constraints was applied:

- Value of each sample (both POR and PD) must be strictly greater than 0;
- PD must not be physiologically lower than 1 mm or higher than 8 mm.
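The two fixed constraints above can be sketched as boolean masks. This is an illustrative Python/NumPy version, not the authors' Matlab code. The helper names and the sample values are hypothetical, and marking rejected samples as NaN is an assumption, since the text does not say how rejected samples were stored.

```python
import numpy as np

PD_MIN_MM, PD_MAX_MM = 1.0, 8.0  # physiological bounds quoted in the text


def valid_por(por):
    """True where a POR coordinate is strictly positive."""
    return np.asarray(por, dtype=float) > 0


def valid_pd(pd_mm):
    """True where pupil diameter is positive and within 1-8 mm."""
    pd_mm = np.asarray(pd_mm, dtype=float)
    return (pd_mm > 0) & (pd_mm >= PD_MIN_MM) & (pd_mm <= PD_MAX_MM)


def mask_invalid(x, valid):
    """Return a copy of x with rejected samples set to NaN (an assumption)."""
    out = np.asarray(x, dtype=float).copy()
    out[~valid] = np.nan
    return out


pd_raw = np.array([3.2, 0.0, 3.4, 9.1, 0.6, 3.3])  # hypothetical values in mm
pd_masked = mask_invalid(pd_raw, valid_pd(pd_raw))
```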

This phase was followed by an adaptive thresholding step in which, for each signal, a vector containing the difference between each sample and the previous one was computed. The mean value of this vector was taken as the adaptive threshold. Samples whose distance from the following sample exceeded the threshold were categorised as blinks, removed, and replaced by cubic interpolation. The whole adaptive thresholding process was repeated to ensure complete artifact removal. Finally, a tenth-order median filter was applied to the POR signals only, to further reduce high-frequency, low-amplitude oscillations.
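One possible reading of the adaptive thresholding step is sketched below in Python with SciPy, as an illustration rather than the authors' Matlab implementation. Several choices are assumptions: the threshold is taken as the mean of the absolute sample-to-sample differences, PCHIP is used as the cubic interpolator, the first and last samples are always kept as anchors, and the number of passes defaults to two. The median filter is not included.

```python
import numpy as np
from scipy.interpolate import PchipInterpolator


def adaptive_pass(x):
    """One adaptive-thresholding pass over a uniformly sampled signal."""
    x = np.asarray(x, dtype=float)
    jump = np.abs(np.diff(x))      # distance of each sample from the following one
    threshold = jump.mean()        # ASSUMPTION: mean of the absolute differences
    flagged = np.zeros(x.size, dtype=bool)
    flagged[:-1] = jump > threshold
    flagged[0] = False             # keep both endpoints as interpolation anchors
    if not flagged.any():
        return x.copy()
    idx = np.arange(x.size)
    keep = ~flagged
    cleaned = x.copy()
    # Replace flagged samples by cubic (PCHIP) interpolation over the kept ones.
    cleaned[flagged] = PchipInterpolator(idx[keep], x[keep])(idx[flagged])
    return cleaned


def remove_artifacts(x, n_passes=2):
    """Repeat the adaptive pass; the number of passes is an assumption."""
    for _ in range(n_passes):
        x = adaptive_pass(x)
    return x


# Hypothetical signal: slow drift with one spurious sample.
trend = 3.0 + 0.001 * np.arange(100)
noisy = trend.copy()
noisy[50] = 1.0
cleaned = remove_artifacts(noisy)
```

In this sketch a flat run of bad samples (for example a blink recorded as zeros) is only trimmed at its edges by each pass, so it relies on the fixed physiological thresholds having been applied first.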

While the remote eye-tracker automatically starts recording at the beginning of the experiment, acquisition must be launched manually for the mobile eye-tracker. Consequently, the signals were synchronized in post-processing by means of cross-correlation.
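Synchronization by cross-correlation can be sketched as follows, in Python with NumPy as a stand-in for the Matlab processing. The function names `estimate_lag` and `align`, the mean removal before correlating, and the synthetic white-noise test signal are assumptions chosen for illustration; both inputs are assumed to be already resampled to the same uniform rate.

```python
import numpy as np


def estimate_lag(reference, other):
    """Lag (in samples) that maximizes the cross-correlation.

    A positive lag means other[n + lag] lines up with reference[n].
    """
    a = np.asarray(reference, dtype=float)
    b = np.asarray(other, dtype=float)
    a = a - a.mean()
    b = b - b.mean()
    xcorr = np.correlate(b, a, mode="full")
    lags = np.arange(-(a.size - 1), b.size)
    return int(lags[np.argmax(xcorr)])


def align(reference, other):
    """Trim both signals so that they start at the same instant."""
    lag = estimate_lag(reference, other)
    if lag >= 0:
        other = other[lag:]
    else:
        reference = reference[-lag:]
    n = min(len(reference), len(other))
    return reference[:n], other[:n], lag


# Synthetic check: the second recording starts 40 samples earlier.
rng = np.random.default_rng(2)
base = rng.standard_normal(1000)
remote = base[40:940]
mobile = base[:900]
remote_al, mobile_al, lag = align(remote, mobile)
```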

The correlation coefficients ρ between remote and mobile eye-tracker data (POR along the x and y axes, pupil diameter for the left and right eye) were computed to evaluate the degree of correlation between signals in the time domain. Furthermore, a frequency-domain analysis was conducted for the pupillary signals. PD signals were low-pass filtered with an FIR filter at 1 Hz and high-pass filtered with an IIR filter at 0.025 Hz, to enhance the band of interest for autonomic nervous system activity, in analogy with heart rate variability measurements [13]. Afterwards, the Welch periodogram was calculated. Then, the magnitude-squared coherence was computed with the following formula:

\[ C_{xy} \left( \omega \right) = \frac{\left| S_{xy} \left( \omega \right) \right|^{2}}{S_{x} \left( \omega \right) S_{y} \left( \omega \right)} \]

where \( C_{xy} \left( \omega \right) \) is the magnitude-squared coherence, \( S_{xy} \left( \omega \right) \) represents the Cross-Spectrum of the two signals, while \( S_{x} \left( \omega \right) \) and \( S_{y} \left( \omega \right) \) are the Auto-Spectra of the same signals.
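The time- and frequency-domain agreement measures can be sketched in Python with SciPy, again as a stand-in for the Matlab analysis. The filter length and order, the use of zero-phase filtering, the Welch segment length, and the synthetic signals are all assumptions, since the text does not specify them. The zero-frequency bin is excluded from the band average to avoid an ill-conditioned estimate after detrending.

```python
import numpy as np
from scipy import signal, stats


def band_limit(x, fs, low_hz=0.025, high_hz=1.0, numtaps=101, hp_order=2):
    """FIR low-pass at high_hz, then IIR (Butterworth) high-pass at low_hz.

    Filter length, order and zero-phase filtering are assumptions.
    """
    fir = signal.firwin(numtaps, high_hz, fs=fs)
    x = signal.filtfilt(fir, [1.0], x)
    sos = signal.butter(hp_order, low_hz, btype="highpass", fs=fs, output="sos")
    return signal.sosfiltfilt(sos, x)


def mean_band_coherence(x, y, fs, f_max=1.0, nperseg=512):
    """Mean magnitude-squared coherence over 0 < f <= f_max (Welch estimate)."""
    f, cxy = signal.coherence(x, y, fs=fs, nperseg=nperseg)
    band = (f > 0) & (f <= f_max)
    return float(cxy[band].mean())


# Synthetic stand-ins for two PD recordings (90 s at 30 Hz).
fs = 30.0
rng = np.random.default_rng(1)
shared = rng.standard_normal(int(90 * fs))
pd_remote = shared + 0.3 * rng.standard_normal(shared.size)
pd_mobile = shared + 0.3 * rng.standard_normal(shared.size)
unrelated = rng.standard_normal(shared.size)

rho, p_value = stats.pearsonr(pd_remote, pd_mobile)
msc_related = mean_band_coherence(
    band_limit(pd_remote, fs), band_limit(pd_mobile, fs), fs)
msc_unrelated = mean_band_coherence(
    band_limit(pd_remote, fs), band_limit(unrelated, fs), fs)
```

With only about 90 s of data the Welch estimate averages few segments, so even unrelated signals yield a coherence well above zero; comparing against such a baseline helps when interpreting band-averaged values.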

3 Results

Figure 1 shows the correlation coefficients for each participant; all the obtained p-values are lower than 0.01. The correlation coefficients are very high for POR, with slightly higher values for the left eye. Pupil diameter shows high correlation as well, but with greater dispersion: this is probably due to some subjects’ recordings being more affected by noise.

Figure 2 shows the magnitude-squared coherence at each frequency, whereas Fig. 3 displays the mean values of the magnitude-squared coherence over the 0–1 Hz range, chosen in accordance with the standard heart rate variability measurements of the autonomic nervous system's influence on the heart (generally used in both psychophysiological and clinical settings [13]). The coherence values are high (on average > 0.5) over most of the frequency range of interest, indicating good agreement between the signals provided by the mobile and remote devices.

Since the results presented so far were meant to verify the degree of association between the two eye-trackers’ signals after pre-processing, we also analysed the reliability of the raw pupil diameter signal returned by the two devices. Using the physiological thresholds mentioned in Sect. 2.3, invalid samples were identified and their percentage with respect to the total number of samples was calculated. Figure 4 reports these percentages for the pupil diameters. In 28 cases out of 34 (2 eyes for 17 subjects) the remote device has a lower percentage of invalid data.
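The invalid-sample percentage can be computed along these lines. This is a small Python/NumPy sketch under the assumption that only the 1–8 mm bounds from Sect. 2.3 define validity; the function name and the numbers are hypothetical and do not reproduce the study data.

```python
import numpy as np


def invalid_percentage(pd_mm, lower=1.0, upper=8.0):
    """Percentage of raw PD samples outside the [lower, upper] mm range."""
    pd_mm = np.asarray(pd_mm, dtype=float)
    valid = (pd_mm >= lower) & (pd_mm <= upper)
    return 100.0 * (1.0 - valid.mean())


# Hypothetical raw recordings (mm) for one eye of one subject.
raw = {
    "remote": [3.1, 3.2, 0.0, 3.3],
    "mobile": [3.0, 0.0, 0.0, 9.0],
}
percentages = {device: invalid_percentage(x) for device, x in raw.items()}
remote_is_better = percentages["remote"] < percentages["mobile"]
```

Repeating this comparison for each eye of each subject gives the kind of case count reported in the text.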

4 Discussions and Conclusions

The fields in which new eye-tracking technologies are used are increasingly diverse. Depending on the type of experiment or environment, researchers must decide whether to use mobile or remote devices. Hence, the purpose of our work was to verify whether, and to what extent, the information provided by these two macro-categories of instrumentation is comparable, so that experts in the field can use them interchangeably where appropriate, or at least choose the most suitable one.

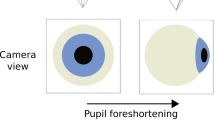

In this study, two types of signals extracted from the instruments were analysed, i.e. point of regard and pupil diameter. The overall assessment showed that, after a pre-processing phase for artifact removal, signals from the remote and mobile devices were highly comparable in both the time and frequency domains. Nevertheless, the higher correlation coefficients for the left-eye POR with respect to the right one (ρleft > 0.9, ρright > 0.75, see Fig. 1) suggest a slight influence of the subject’s head position on the measurements. Looking at the raw pupil signal, instead, for most participants the mobile device produced a higher number of invalid samples than the remote one. It is reasonable to believe that this also contributed to the larger dispersion of the correlation coefficients and coherence (Figs. 1 and 3). Of note, data acquisition also revealed that the sampling frequency was less uniform for the wearable eye-tracker.

In conclusion, in a common visual behavior recording the remote eye-tracker provided more stable and reliable data acquisition than its mobile counterpart, given the considerably lower number of invalid samples. However, after a simple but effective pre-processing stage, such as the one presented in this work, we can infer that both signals share the same content at the level of information generally used to characterize subjects’ behavioral and physiological reactions. Besides signal accuracy, the physical comfort of the observers should also be considered, since wearing eyeglasses might be uncomfortable for some. Another characteristic to bear in mind is the sampling frequency: these devices are adequate for preliminary data or pilot studies, but such low sampling frequencies are not adequate for all research applications [14]. As a result, a higher sampling frequency capable of capturing characteristic pupillary oscillations might be considered for future assessment. The eye-trackers examined here represent only a sample of the available types and models. Thus, it is extremely important for researchers to continue to analyze the capabilities and accuracy of eye-tracking systems in order to determine the validity of the measures that each system provides, together with costs, strengths and weaknesses [15]. Future research should examine different devices, possibly with higher sampling frequencies, and investigate other features (e.g. detection of saccades and fixations) in various experimental setups.

References

1. Underwood, G.: Cognitive Processes in Eye Guidance. Oxford University Press, Oxford (2005)

2. Hansen, D., Ji, Q.: In the eye of the beholder: a survey of models for eyes and gaze. IEEE Trans. Pattern Anal. Mach. Intell. 32(3), 478–500 (2010)

3. Eckstein, M.K., Guerra-Carrillo, B., Singley, A.T.M., Bunge, S.A.: Beyond eye gaze: what else can eyetracking reveal about cognition and cognitive development? Dev. Cogn. Neurosci. 25, 69–91 (2017)

4. Robinson, D.: A method of measuring eye movement using a scleral search coil in a magnetic field. IEEE Trans. Biomed. Electron. 10(4), 137–145 (1963)

5. Marmor, M.F., Zrenner, E.: Standard for clinical electro-oculography. Doc. Ophthalmol. 85(2), 115–124 (1993)

6. Holmqvist, K., Nyström, M., Andersson, R., Dewhurst, R., Jarodzka, H., Van de Weijer, J.: Eye Tracking: A Comprehensive Guide to Methods and Measures. Oxford University Press, Oxford (2011)

7. Mele, M.L., Federici, S.: Gaze and eye-tracking solutions for psychological research. Cogn. Process. 13(1), 261–265 (2012)

8. Pierce, K., Marinero, S., Hazin, R., McKenna, B., Barnes, C.C., Malige, A.: Eye tracking reveals abnormal visual preference for geometric images as an early biomarker of an autism spectrum disorder subtype associated with increased symptom severity. Biol. Psychiat. 79(8), 657–666 (2016)

9. Al-Moteri, M.O., Symmons, M., Plummer, V., Cooper, S.: Eye tracking to investigate cue processing in medical decision-making: a scoping review. Comput. Hum. Behav. 66, 52–66 (2017)

10. Nevalainen, S., Sajaniemi, J.: Comparison of three eye tracking devices in psychology of programming research. PPIG 4, 151–158 (2004)

11. Mello-Thoms, C.: Head-mounted versus remote eye tracking of radiologists searching for breast cancer: a comparison. Acad. Radiol. 13(2), 203–209 (2006)

12. Carlson, R.E., Fritsch, F.N.: Monotone piecewise bicubic interpolation. SIAM J. Numer. Anal. 22(2), 386–400 (1985)

13. Camm, A., Malik, M., Bigger, J., Breithardt, G., Cerutti, S., Cohen, R., et al.: Heart rate variability: standards of measurement, physiological interpretation and clinical use. Task Force of the European Society of Cardiology and the North American Society of Pacing and Electrophysiology. Circulation 93, 1043–1065 (1996)

14. Andersson, R., Nyström, M., Holmqvist, K.: Sampling frequency and eye-tracking measures: how speed affects durations, latencies, and more. J. Eye Mov. Res. 3(3), 6 (2010)

15. Funke, G., Greenlee, E., Carter, M., Dukes, A., Brown, R., Menke, L.: Which eye tracker is right for your research? Performance evaluation of several cost variant eye trackers. In: Proceedings of the Human Factors and Ergonomics Society Annual Meeting, vol. 60, no. 1, pp. 1240–1244. SAGE Publications, Los Angeles (2016)

Acknowledgement

The authors acknowledge PHEEL (http://pheel.polimi.it/) for the valuable support in both experimental and methodological development.

Ethics declarations

The authors declare that there is no conflict of interest regarding the publication of this article.

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Lolatto, R. et al. (2020). Characterization of Eye Gaze and Pupil Diameter Measurements from Remote and Mobile Eye-Tracking Devices. In: Henriques, J., Neves, N., de Carvalho, P. (eds) XV Mediterranean Conference on Medical and Biological Engineering and Computing – MEDICON 2019. MEDICON 2019. IFMBE Proceedings, vol 76. Springer, Cham. https://doi.org/10.1007/978-3-030-31635-8_24

DOI: https://doi.org/10.1007/978-3-030-31635-8_24

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-31634-1

Online ISBN: 978-3-030-31635-8

eBook Packages: Engineering (R0)