Abstract

Over the past few decades, monaural speech separation has always been an interesting but challenging problem. The goal of speech separation is to separate a specific target speech from some background interferences and it has been treated as a signal processing problem traditionally. In recent years, with the rapid advances of deep learning techniques, deep learning has made a great breakthrough in speech separation. In this paper, recurrent neural networks (RNNs) which integrate multiple nonlinear masking layers (NMLs) to learn two-level estimation are proposed for speech separation. Experimental results show that our proposed model “RNN + SMMs + 3 NMLs” outperforms the baseline RNN without any mask in all the SDR, SIR and SAR indices, and it also obtains much better SDR and SIR than the RNN simply with original deterministic time-frequency masks.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

- Speech separation

- Deep learning

- Deep neural network

- Recurrent neural network

- DNN

- RNN

- Time-frequency masking

- Nonlinear masking layer

- Two-level estimation

1 Introduction

Deep learning has demonstrated great power and potentials on automatic speech recognition (ASR) in recent years [2, 14, 29]. However, it is well known that ASR aims mainly to recognize what a single speaker says and even though there is some progress in multi-talker mixed speech recognition, the results are far from satisfactory [3, 6, 25]. Another topic related to ASR is speech separation, which is originated from the famous cocktail party problem studied by Cherry [4]. Over the past 6 decades, many classical approaches have been set forth to solve this problem. The computational auditory scene analysis (CASA) introduced in [1], for example, is based on perceptual principles of auditory scene analysis, and it makes use of grouping cues such as pitch and onset to estimate time-frequency masks that isolate the signal components belonging to different speakers. These masks are used to separate the source signals. The non-negative matrix factorization (NMF) [13, 17], on the other hand, decomposes the spectrogram into two matrices \(\mathbf {W}\) and \(\mathbf {H}\), where \(\mathbf {W}\) denotes spectral features and \(\mathbf {H}\) denotes the importance of the features of each spectrum in the spectrogram. The basis in both two matrices is used to estimate mixing factors during evaluation.

Inspired by the success of deep learning in ASR, deep neural networks (DNNs) have played an increasingly important role in speech separation since 2010s [23]. Typically, some kinds of acoustic features from the waveform of the mixed speech are fed to a specific DNN, and then the DNN outputs several single-source signals from the mixture. In [20], the authors designed a DNN that stacks multiple hidden layers and made it a deep fully-connected neural network (FNN) for speech separation. Different from the FNN with a single output layer, this network contains two output layers and is able to predict both the target and the interference. In [10], an RNN with deterministic time-frequency masks was presented to perform speech separation. Similar to the previous neural network, this network contains two or more output layers, but the spectral features of the mixture are treated as sequential data in the input layer. Also, the discriminative training criterion is used for training the network to further enhance the separation performance. In [30], a novel deep learning training criterion called permutation invariant training was proposed to speed up the training procedure and deal with the label ambiguity (or permutation) problem of speech separation discussed in [25]. Alternatively, Hershey et al. developed the deep clustering approach in a neural network [8], which gives better generalized performance and provides some new views, i.e., clustering, for speech separation.

In this paper, deep RNNs with nonlinear masking layers and two-level estimation are proposed for speech separation. The whole model consists of two components: the first one is a regular stacked RNN which directly maps the spectral representation of the mixture to those of the sources. Usually, the output source spectral representations are used to recover the signals in time domain, however, in our new model, they are treated as the first level (level 1) estimation to build generic time-frequency masks [22, 28], which are called original deterministic masks or original masks instead in this paper, and then one or more nonlinear masking layers are used to correct the original masks and generate the second level (level 2) estimation, i.e., the original masks are enhanced via the nonlinear masking layers to obtain more powerful nonlinear feature extraction. More specifically, the post-processed masks output by the nonlinear masking layers are applied to separate sources from the mixtures, and these separated sources are the second level estimation. The definition of the nonlinear masking layer and two-level estimation will be explained in detail in Sect. 3. Finally, evaluation of the proposed model is carried out on the TIMIT corpus [7], which is designed for speech related research. Experimental results show that the performance of the new model exceeds that of RNNs without any mask or simply with original masks according to the BSS-EVAL metrics.

2 Speech Separation

The goal of speech separation is to obtain several individual estimated source signals in a linearly mixed signal, which means that the mixture is a summation of all the source signals in time domain. Suppose that there are N source signals denoted as a set \(\{\mathbf {y}^{(i)}|i=1,2,...,N\}\), the linearly mixed signal can be expressed as \(\mathbf {x}=\sum _{i=1}^N\mathbf {y}^{(i)}\). To make full use of the effective information in the mixed signals, a common method is to apply the short-time Fourier transform (STFT) on the signals in time domain to obtain the spectral representations of them. The spectral features of the signals are suitable to be handled by various DNNs. Let the STFT representation of the ith source signal be \(\mathbf {Y}^{(i)}\) and the time-frequency bin at time index t and frequency index f be \(\mathbf {Y}_{t,f}^{(i)}\), then a single time-frequency bin of the STFT representation of the linearly mixed signal can be given by \(\mathbf {X}_{t,f}=\sum _{i=1}^N\mathbf {Y}^{(i)}_{t,f}\).

Generally, there are two groups of training targets used in deep speech separation. The first one adopts the mapping-based targets [23, 24, 31]. Typically, this approach usually treats spectral magnitudes as training targets and minimizes the loss between the output spectral magnitudes and the real spectral magnitudes. More precisely, let the deep learning model be \(f(*)\) parameterized by \(\varvec{\theta }\), the model will minimize the loss to fit a function

Here the operation \(|*|\) obtains the spectral magnitude of a signal. The second one, by contrast, adopts the masking-based targets [23, 24, 31]. In this approach, the manually computed real time-frequency masks for different sources are treated as training targets. The loss between the output masks and the real masks is minimized during training. The output masks are used to recover the spectral magnitudes of the source signals from that of the mixture during evaluation. Let \(\mathbf {M}^{(i)}\) represent the ith time-frequency mask to separate the ith source \(\mathbf {Y}^{(i)}\) from the mixture \(\mathbf {X}\), the model will minimize the loss to learn

Then \(|\mathbf {Y}^{(i)}|\) is approximately given by

Here \(\odot \) denotes the element-wise product. After that, we can simply apply the inverse short-time Fourier transform (ISTFT) on the ith estimated spectral magnitude and the phase of the mixture to obtain the ith source signal \(\mathbf {y}^{(i)}\) in time domain approximately.

3 Proposed Model

3.1 Brief Introduction to the Model

For deep speech separation, designing a suitable neural network is crucial. Theoretically, FNNs, CNNs (convolutional neural networks) [12], RNNs, LSTMs (Long Short-Term Memory) [9] and GRUs (Gated Recurrent Neural Networks) [5] may all work if the architecture and the hyper-parameter settings of the neural network are designed appropriately. By treating spectral features as sequential data, RNNs, LSTMs or GRUs are commonly considered to be better choices and hence are more suitable to be used as basic neural networks. By using the context window, continuous frames of the spectral feature matrix transformed through the STFT are concatenated together to construct one input feature vector at a single time step. These input feature vectors are called meta-frames hereafter. More specifically, suppose the input feature vector at time step t is a single frequency vector of the spectral magnitude of the mixture \(\mathbf {X}\) at time index t, the activation of a single-layer RNN at time step t can be represented as

Here \(\mathbf {W}_h\), \(\mathbf {U}_h\) and \(\mathbf {b}_h\) are the parameters of a single RNN hidden layer and \(\sigma (*)\) is the activation function.

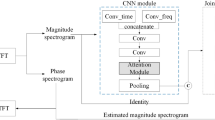

Figure 1 illustrates the architecture of our proposed model. The output of the RNN, the LSTM or the GRU is considered as the level 1 estimation (intermediate estimation), which is used to construct original time-frequency masks for different sources

The Eq. (5) gives the mathematical expression of the spectral magnitude mask (SMM) [16, 19, 23], which is a kind of soft time-frequency mask that ignores the phase information. Here \(\tilde{\mathbf {Y}}^{(i)}\) indicates the ith level 1 estimated source (level 1 estimation) and \(\tilde{\mathbf {M}}^{(i)}\) is the ith corresponding estimated time-frequency mask for \(\tilde{\mathbf {Y}}^{(i)}\). Another popular choice could be the ideal ratio mask (IRM) [16, 23, 24], a similar but more sophisticated mask

Here \(\beta \) is a hyper-parameter and commonly chosen to be 0.5 [23].

These masks are used as original time-frequency masks and nonlinear enhancement on these original masks is performed by multiple nonlinear masking layers to output post-processed masks. The nonlinear masking layer will be detailedly introduced in Sect. 3.2. After that, the element-wise product operation \(\odot \) is applied between the spectral representation of the mixture and the post-processed masks, which forms the level 2 estimated sources (level 2 estimation). Finally, the level 2 estimation will be compared with the real sources, and the model tries to minimize the loss between them during the training phase or use the ISTFT to reconstruct source signals in time domain during the evaluation phase.

3.2 Nonlinear Masking Layers and Two-Level Estimation

Indeed, the SMMs or IRMs constructed from the level 1 estimated sources are treated as deterministic masking layers without any trainable parameter in [10]. The deterministic masking layers are simple but rough since there are not only source signals but also some other interferences such as background noise or reverberation in the mixture. Take the deterministic SMM layer as an example, it is more accurate to define it as

In the Eq. (7), \(\epsilon \) indicates other interferences different from the sources. Although those interferences can still be learned by the DNNs, they will more or less end up in the isolated source signals during evaluation. In this paper, we propose the nonlinear masking layer which is applied on the original deterministic masks to correct the masks themselves. Here “nonlinear” means that there are one or more nonlinear functions which accept the time-frequency masks as input to output the post-processed masks that perform more powerful nonlinear feature extraction, i.e., these post-processed masks probably allow other interferences to be taken into account in the original masks in a more complex way. We believe that the post-processed masks perform nearly the same effect as the Eq. (7) in some form. Therefore, the nonlinear masking layer can help the model obtain better separation performance in the environment with various interferences but the model doesn’t need to learn these interferences explicitly. Moreover, multiple nonlinear masking layers can be stacked together to construct much more powerful nonlinear time-frequency masks. In the following section, “masking layers” and “nonlinear masking layers” are treated as synonyms when there is no confusion. The simplest nonlinear masking layer which is applied on the SMMs can be given by the following equation

Formally, there is a little change on the Eq. (8), i.e., we add parameter \(\alpha ^{(i)}\), where \(i = 1, 2, 3, ..., N\), to the corresponding ith level 1 estimated source signal \(\tilde{\mathbf {Y}}^{(i)}\) to control how much the source contributes to the final nonlinear time-frequency masks. Note that \(\alpha ^{(i)}\) is a hyper-parameter which needs to be manually tuned and we empirically recommend setting the value of it between 1 and 2. By default, \(\alpha ^{(i)} = \alpha ^{(j)}\), where \(i \ne j\). This means that all sources are equally important for constructing the nonlinear masks.

The Eq. (9) gives the full mathematical expression of a single nonlinear masking layer which is applied on the SMMs, where both \(\mathbf {W}\) and \(\mathbf {b}\) denote the trainable parameters of the nonlinear masking layer, \(g(*)\) denotes the activation function and \(\hat{\mathbf {M}}^{(i)}\) is the ith output post-processed mask corresponding to the ith source. In fact, the representation of a single nonlinear masking layer is similar to that of a single fully-connected layer. If multiple nonlinear masking layers are stacked together to correct the original time-frequency masks, it is just like training a FNN on the original masks to obtain masks with more powerful nonlinear feature extraction. Let L and the superscript l denote the number and the index of the nonlinear masking layer, respectively, where \(l=1, 2, 3, ..., L\), the ith output post-processed mask of the lth nonlinear masking layer can be represented as

In the Eq. (10), the input features are the spectral magnitudes of the level 1 estimation if \(l = 1\). By contrast, the input features are the hidden post-processed masks from the previous nonlinear masking layer if \(1 < l \le L\). In general, \(L \ge 1\), but in particular, we say \(L = 0\) means that there are no nonlinear masking layers, i.e., only the original masks (Eq. (5)) are kept in the model. Finally, the Eq. (3) is used to obtain estimated sources one more time. The estimated sources here are the level 2 estimation. Figure 2 shows the architecture of two nonlinear masking layers which are applied on the SMMs.

3.3 Objective Function

The supervised speech separation can be treated as a multi-class regression problem and the least squares error (LSE, also called L2-norm loss) is commonly used as an objective function to minimize the loss between the predictions and the targets (ground truth). Let \(\hat{\mathbf {Y}}^{(i)}\) be the STFT representation of the ith level 2 estimated source, the objective function can be given by

Here the subscript F denotes the Frobenius norm, which is similar to the L2-norm. Moreover, taking the difference among sources into account leads to the discriminative objective function in [10], which would be helpful to increase Source to Interference Ratio

Here \(\gamma \) is a hyper-parameter commonly chosen between 0 and 0.2. When \(\gamma \) is set to 0, the objective function degenerates to the LSE. In practice, to suppress signals from other sources in the current source prediction, just increase \(\gamma \).

4 Experimental Settings

4.1 Speech Data

The model proposed in this paper is evaluated on the TIMIT corpus, which is a collection of read speech designed for acoustic-phonetic study. Similar to the way of generating experimental data in [10], eight sentences from a male speaker and a female speaker are used for training, respectively. The circular shift algorithm [26] is used for data argumentation and carried out to generate more training signals in time domain. With the remaining sentences, one sentence from the male and one from the female are used as the validation set and the others are used as the test set. Still, the circular shift algorithm is used on both the validation set and the test set to increase the amount of the data. Also, test sentences are added up to form mixed signals at 0 dB SNR. Finally, we obtain about 36 h training data, 6 h validation data and 8 h test data.

4.2 Feature Preprocessing

In the experiments, the spectral magnitude is utilized as input to the proposed model and the phase information is ignored. The spectral representation is extracted using a 512-point STFT with hop size of 256, and the window size being the same as the number of Fast Fourier transform (FFT) points. Following the transformation, a spectral magnitude matrix is created for each signal with the shape of (513, 105), where 513 is the number of frequency bins and 105 is the number of time steps. Then, context windows of size 1, 3 and 5 are employed to construct meta-frames from the original frames of the spectral magnitude, respectively. A context window of size 1 means that there is no context, i.e., only the current frame is used. A context window of size 3 means concatenating one past frame, the current frame and one future frame to form a meta-frame of size 513 by 3, i.e., an input feature vector of size 1539 is constructed. A context window of size 5 can be interpreted analogously. The introduction of applying the context window is to capture more contextual information from the spectral magnitude.

4.3 Evaluation

Following the BSS-EVAL metrics mentioned in [21], the performance of blind source separation can be evaluated using three quantitative values: Source to Distortion Ratio (SDR), Source to Interference Ratio (SIR) and Source to Artifacts Ratio (SAR). SDR measures the overall separation quality, SIR measures the ability to reduce interferences from other sources and SAR measures the systematic error in the separation process.

5 Experimental Results

To begin with, a vanilla RNN, a LSTM and a GRU with two hidden layers of 150 hidden units (the hyper-parameter settings, i.e., the number of layers, the number of hidden units, etc., come from [10]) are set as baseline models. This means that they are simply mapping-based models without any time-frequency mask at all. In addition, no context windows are adopted for the baseline models and the RELU function [15] is taken to be the activation function in their hidden layers

The Adam algorithm [11] is chosen to perform gradient descent with the discriminative training loss function, and the training procedure of each model is kept for about 10 epochs. All experiments are repeated more than 10 times and the BSS-EVAL results are obtained by taking average. We also compare the performance of the bidirectional recurrent neural networks (BRNN, BLSTM or BGRU) [18] to that of the unidirectional recurrent neural networks (RNN, LSTM or GRU). The baseline experimental results on the test set have been listed in Table 1. From the vertical of Table 1, it turns out that the vanilla RNN obtains better SDR, SIR and SAR than both the LSTM and the GRU no matter \(\gamma \) is 0.0 or 0.1. Also, from the horizontal of Table 1, larger \(\gamma \) relatively maintains SDR and SAR but improve SIR, which helps reduce the interferences of one source to another. The vanilla RNN with \(\gamma =0.1\) obtains the best performance among all baseline models, which yields 6.18 dB SDR, 8.97 dB SIR, 8.02 dB SAR. Both the LSTM and the GRU are a little overfitting in the experiments. Furthermore, the bidirectional recurrent neural networks have not shown much effect because the separation procedure does not rely on the information of the long future frames to predict the current frame. The remaining experiments are all about the nonlinear masking layer, and we choose the vanilla RNN with \(\gamma =0.1\) as the basic neural network for the remaining experiments. In fact, the other variants of the RNN are all available for the experiments of the nonlinear masking layer since our main target is to figure out the powerful nonlinear feature extraction of the nonlinear masking layer but not just the effect of different RNNs. However, the selection of the neural network will essentially determine the upper bound of the performance of the model, and the nonlinear masking layer helps the network further reach that upper bound. Our basic experiments in Table 1 show that the performance limit of the LSTM and the GRU don’t exceed the vanilla RNN (Though in many other deep learning applications, the LSTM/GRU may perform better than the RNN), so the architecture of “LSTM/GRU + NMLs” may not perform any better than that of “RNN + NMLs”, where “NML” is short for the nonlinear masking layer.

Different from the activation function used in the hidden layers of the RNN, we use the leaky RELU function [27] as the activation function in the nonlinear masking layers

To explore the effect of the nonlinear masking layer, the output sources of the RNN are treated as the level 1 estimation, and the deterministic SMMs are constructed from the level 1 estimation to be used as original time-frequency masks. After that, we correct the deterministic SMMs by using 1, 2 and 3 nonlinear masking layers, respectively. In our experiments, when a single masking layer is used, all parameters \(\alpha ^{(i)}\)’s are set to 1.0. Otherwise, \(\alpha ^{(i)}\)’s of the first masking layer are set to 1.0 while those of the remaining masking layers are set to 1.5. Finally, we obtain the level 2 estimation. By contrast, we also train a model which combines the RNN and the original SMMs, and compare this model to the models with multiple nonlinear masking layers.

The evaluation performance of different models on the validation set during the training procedure is illustrated in Fig. 3. Both the SMM and the IRM are evaluated with the nonlinear masking layer, respectively. However, no matter which original mask is used, it turns out that SDRs and SIRs reach the maximum in about 1–5 epochs (and begin to decrease) on most of the models with one or more nonlinear masking layers. By contrast, if the original deterministic masks are not corrected at all, the models converge much more slowly on SDRs and SIRs since the values of them become stable nearly after 5–7 epochs. However, almost all models achieve best SARs in 1–4 epochs whether they integrate nonlinear masking layers or not. It demonstrates that the nonlinear masking layer is capable of accelerating the training procedure of the model especially for SDR and SIR. Also, the models with one or more nonlinear masking layers achieve much better SDRs and SIRs than those with original masks. By contrast, the effect of using the nonlinear masking layer is not necessarily better than that of not using the nonlinear masking layer for SAR. These results show that according to the evaluated values of SDRs, the nonlinear masking layer can improve the overall quality of speech separation. Moreover, according to the evaluated values of SIRs, the nonlinear masking layer can greatly reduce the interferences of one speech to another. However, the downside is, the evaluated values of SARs reflect that using the nonlinear masking layer is likely to increase the systematic error of the entire model, although this error is relatively small.

Table 2 shows the results of all experiments about the nonlinear masking layer on the test set. It is found that both SDRs and SIRs improve with the increasement of the number of nonlinear masking layers no matter what kind of original mask (SMM or IRM) is used. However, for both these two masks, SARs maintain relatively stable since they don’t show obvious rising trends like SDRs and SIRs. When the original masks are chosen to be SMMs and 3 nonlinear masking layers are added, the model yields 10.20 dB SDR, 16.33 dB SIR and 10.03 dB SAR. It turns out that this model achieves better SDR and SIR than those with SMMs followed by 1 or 2 nonlinear masking layers. However, SAR obtained by this model doesn’t improve but is relatively closed to best SAR obtained by the model with deterministic SMMs. By contrast, the model that uses IRMs followed by 3 nonlinear masking layers yields 8.69 dB SDR, 16.33 dB SIR and 10.27 dB SAR. Similarly, this model achieves better results than those with IRMs followed by 1 or 2 nonlinear masking layers. These experimental results demonstrate that the nonlinearity of multiple nonlinear masking layers is more obvious than a single nonlinear masking layer. Stacking multiple nonlinear masking layers within a certain number range can further enhance the nonlinear feature extraction of the original masks.

Moreover, the selection of the original deterministic mask will also affect the performance of a model. The model with SMMs followed by multiple nonlinear masking layers performs better than those with IRMs followed by the same number of nonlinear masking layers especially for SDR and SIR. It reflects that the choice of the original masks is related to the upper bound of the performance of the model, and the nonlinear masking layer helps the model reach that upper bound. This is similar to the effect of the basic neural network selection. The IRM emphasizes the ratio of the clean speech energy to the noisy speech energy, and the SMM can be viewed as a form of the IRM defined in the STFT domain [23]. The SMM is empirically better than the IRM since the STFT domain is similar to our auditory perception. Therefore, the model with SMMs followed by 3 nonlinear masking layers, which obtain both best SDR and SIR, is chosen to be fine-tuned in the remaining experiments.

In addition, we evaluate the effect of the context window size to the performance of the model on the test set. The experimental results have been shown in Fig. 4. Surprisingly, it is found that increasing the size of the context window may harm the performance of the model. One possible reason is that the RNN is capable of extracting as much as contextual information from the mixture and increasing the size of the context window may cause overfitting.

Finally, the spectrograms of an utterance example are illustrated in Fig. 5 (The estimated sources are output by the model “RNN + SMMs + 3 NMLs”). They are the spectrograms of the mixture, the first real source, the first estimated source, the second real source and the second estimated source from left to right, respectively. The model generates relatively good results since the spectral representations of the estimated sources are quite closed to those of the the real sources, and this demonstrates the ability of the nonlinear masking layer to form more powerful nonlinear time-frequency masks for speech separation.

6 Conclusion

In this paper, deep RNNs with nonlinear masking layers and two-level estimation are proposed for monaural speech separation. The output of the RNN is called the level 1 estimation which is used to construct original deterministic time-frequency masks (SMM, IRM, etc.). With the help of the additional nonlinear masking layers, original masks are enhanced and obtain more powerful nonlinear feature extraction. Finally, the element-wise product operation is applied between the mixtures and the nonlinear post-processed masks to output the level 2 estimation. Both deep RNNs without any mask and with original masks are used for comparison and taken to evaluate the effectiveness of the new models, respectively. The best results come from the RNN with SMMs followed by 3 nonlinear masking layers, giving an improvement of 4.02 dB SDR, 7.36 dB SIR and 2.01 dB SAR over the baseline RNN without any mask while that of 2.44 dB SDR and 4.58 dB SIR over the RNN with original masks (original SMMs). Future work on the design of appropriate and more complex integrated models on speech separation is a promising direction.

References

Brown, G.J., Cooke, M.: Computational auditory scene analysis. Comput. Speech Lang. 8(4), 297–336 (1994). https://doi.org/10.1006/csla.1994.1016

Chan, W., Jaitly, N., Le, Q., Vinyals, O.: Listen, attend and spell: a neural network for large vocabulary conversational speech recognition. In: 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 4960–4964. IEEE (2016). https://doi.org/10.1109/ICASSP.2016.7472621

Chang, X., Qian, Y., Yu, D.: Adaptive permutation invariant training with auxiliary information for monaural multi-talker speech recognition. In: 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 5974–5978. IEEE (2018). https://doi.org/10.1109/ICASSP.2018.8461570

Cherry, E.: Some experiments on the recognition of speech, with one and with two ears. J. Acoust. Soc. Am. 25(5), 975–979 (1953). https://doi.org/10.1121/1.1907229

Chung, J., Gulcehre, C., Cho, K., Bengio, Y.: Empirical evaluation of gated recurrent neural networks on sequence modeling. CoRR abs/1412.3555 (2014)

Cooke, M., Hershey, J.R., Rennie, S.J.: Monaural speech separation and recognition challenge. Comput. Speech Lang. 24(1), 1–15 (2010). https://doi.org/10.1016/j.csl.2009.02.006

Garofolo, J.S., et al.: TIMIT corpus. Linguistic Data Consortium. https://catalog.ldc.upenn.edu/LDC93S1

Hershey, J.R., Chen, Z., Roux, J.L., Watanabe, S.: Deep clustering: discriminative embeddings for segmentation and separation. In: 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 31–35. IEEE (2016). https://doi.org/10.1109/ICASSP.2016.7471631

Hochreiter, S., Schmidhuber, J.: Long short-term memory. Neural Comput. 9(8), 1735–1780 (1997). https://doi.org/10.1162/neco.1997.9.8.1735

Huang, P.S., Kim, M., Hasegawa-Johnson, M., Smaragdis, P.: Deep learning for monaural speech separation. In: 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 1562–1566. IEEE (2014). https://doi.org/10.1109/ICASSP.2014.6853860

Kingma, D.P., Ba, J.L.: Adam: a method for stochastic optimization. In: 3rd International Conference on Learning Representations, ICLR 2015 (2015)

Krizhevsky, A., Sutskever, I., Hinton, G.E.: ImageNet classification with deep convolutional neural networks. In: Advances in Neural Information Processing Systems, pp. 1097–1105 (2012). https://doi.org/10.1145/3065386

Lee, D.D., Seung, H.S.: Learning the parts of objects by non-negative matrix factorization. Nature 401(6755), 788 (1999). https://doi.org/10.1038/44565

Maas, A.L., Le, Q.V., O’Neil, T.M., Vinyals, O., Nguyen, P., Ng, A.Y.: Recurrent neural networks for noise reduction in robust ASR. In: INTERSPEECH 2012, 13th Annual Conference of the International Speech, pp. 22–25 (2012)

Nair, V., Hinton, G.E.: Rectified linear units improve restricted boltzmann machines. In: Proceedings of the 27th International Conference on Machine Learning (ICML-10), pp. 807–814 (2010)

Pandey, A., Wang, D.: On adversarial training and loss functions for speech enhancement. In: 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 5414–5418. IEEE (2018). https://doi.org/10.1109/ICASSP.2018.8462614

Schmidt, M.N., Olsson, R.K.: Single-channel speech separation using sparse non-negative matrix factorization. In: INTERSPEECH 2006 - ICSLP, Ninth International Conference on Spoken Language Processing (2006)

Schuster, M., Paliwal, K.K.: Bidirectional recurrent neural networks. IEEE Trans. Signal Process. 45(11), 2673–2681 (1997). https://doi.org/10.1109/78.650093

Srinivasan, S., Roman, N., Wang, D.: Binary and ratio time-frequency masks for robust speech recognition. Speech Commun. 48(11), 1486–1501 (2006). https://doi.org/10.1016/j.specom.2006.09.003

Tu, Y., Du, J., Xu, Y., Dai, L., Lee, C.H.: Speech separation based on improved deep neural networks with dual outputs of speech features for both target and interfering speakers. In: The 9th International Symposium on Chinese Spoken Language Processing, pp. 250–254. IEEE (2014). https://doi.org/10.1109/ISCSLP.2014.6936615

Vincent, E., Gribonval, R., Févotte, C.: Performance measurement in blind audio source separation. IEEE Trans. Audio Speech Lang. Process. 14(4), 1462–1469 (2006). https://doi.org/10.1109/TSA.2005.858005

Wang, D.: Time-frequency masking for speech separation and its potential for hearing aid design. Trends Amplif. 12(4), 332–353 (2008). https://doi.org/10.1177/1084713808326455

Wang, D., Chen, J.: Supervised speech separation based on deep learning: an overview. IEEE/ACM Trans. Audio Speech Lang. Process. 26(10), 1702–1726 (2018). https://doi.org/10.1109/TASLP.2018.2842159

Wang, Y., Narayanan, A., Wang, D.: On training targets for supervised speech separation. IEEE/ACM Trans. Audio Speech Lang. Process. 22(12), 1849–1858 (2014). https://doi.org/10.1109/TASLP.2014.2352935

Weng, C., Yu, D., Seltzer, M.L., Droppo, J.: Deep neural networks for single-channel multi-talker speech recognition. IEEE/ACM Trans. Audio Speech Lang. Process. (TASLP) 23(10), 1670–1679 (2015). https://doi.org/10.1109/TASLP.2015.2444659

Wikipedia. https://en.wikipedia.org/wiki/Circular_shift. Circular Shift

Xu, B., Wang, N., Chen, T., Li, M.: Empirical evaluation of rectified activations in convolutional network. CoRR abs/1505.00853 (2015)

Yilmaz, O., Rickard, S.: Blind separation of speech mixtures via time-frequency masking. IEEE Trans. Signal Process. 52(7), 1830–1847 (2004). https://doi.org/10.1109/TSP.2004.828896

Yu, D., Deng, L., Dahl, G.E.: Roles of pre-training and fine-tuning in context-dependent DBN-HMMs for real-world speech recognition. In: Proceedings of the NIPS Workshop on Deep Learning and Unsupervised Feature Learning (2010)

Yu, D., Kolbæk, M., Tan, Z.H., Jensen, J.: Permutation invariant training of deep models for speaker-independent multi-talker speech separation. In: 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 241–245. IEEE (2017). https://doi.org/10.1109/ICASSP.2017.7952154

Zhang, H., Zhang, X., Gao, G.: Multi-target ensemble learning for monaural speech separation. In: INTERSPEECH, pp. 1958–1962 (2017). https://doi.org/10.21437/Interspeech.2017-240

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Zhang, J., Zhang, P. (2019). Deep Recurrent Neural Networks with Nonlinear Masking Layers and Two-Level Estimation for Speech Separation. In: Tetko, I., Kůrková, V., Karpov, P., Theis, F. (eds) Artificial Neural Networks and Machine Learning – ICANN 2019: Text and Time Series. ICANN 2019. Lecture Notes in Computer Science(), vol 11730. Springer, Cham. https://doi.org/10.1007/978-3-030-30490-4_32

Download citation

DOI: https://doi.org/10.1007/978-3-030-30490-4_32

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-30489-8

Online ISBN: 978-3-030-30490-4

eBook Packages: Computer ScienceComputer Science (R0)