Abstract

In this chapter, we describe a study in which we analyzed student discourse data from modeling-based learning to investigate young students’ understandings of models and model use in science. We used rich case studies of the modeling-based learning of 48 fifth-grade students in three different classes, who met with the same teacher once a week for a total of seven months. Transcripts from videotaped conversations and the models constructed by the students served as the primary sources of data. We present findings from applying the framework of model competence to the modeling work of young/novice modelers. The findings offer insights into fifth-grade students’ model competence regarding the nature of their models, the existence of multiple models, the purpose of their models, and the process of testing and changing models. These findings imply that a modeling resource-based framework of model competence might be used productively as a teacher guide throughout modeling-based learning in science. Thus, instead of regarding this as a need to help students develop modeling abilities they lack, it might be more productive to view it as a need to help students develop more reliable access to modeling resources that they already have but that might be context dependent. Lastly, we suggest that the teacher might act as an activator of different modeling resources.



1 Introduction

Models and modeling are considered integral parts of science learning, primarily because they provide a context in which students can explain and predict natural phenomena. Externalized through various means, a concrete model can provide students with a tool that enables them to understand a phenomenon and to make new predictions concerning it (Schwarz et al., 2009; Sect. A). NRC (2012) lists modeling among the scientific practices that K-12 students should learn to apply, arguing that “models make it possible to go beyond observables and imagine a world not yet seen” (NRC, 2012, p. 50). In other words, external models and modeling enable learners not only to see but also to re-see the natural world; they become tools for scientific reasoning and for envisioning otherwise theoretical ideas in science.

Recognizing models and the process of modeling as core components of science education (NGSS Lead States, 2013) raises two important issues. The first is that constructing and using external models to represent the function/mechanism underlying natural phenomena lies at the core of learning in science (Chap. 3). The second is that learning in science entails learning with and about the process of scientific modeling (Linn, 2003; Chap. 1). Science proceeds through the construction and refinement of external models of natural phenomena (NRC, 2012); therefore, learning science includes developing an understanding of natural phenomena by constructing models as well as learning the processes of developing and refining those models (White & Frederiksen, 1998). For the purposes of this chapter, we refer to the processes of learning through and about modeling as modeling-based learning (MBL; e.g., Louca, Zacharia, & Constantinou, 2011; Louca & Zacharia, 2015; Gilbert & Justi, 2016; Chap. 12), emphasizing the process of constructing models and, through this construction, learning about phenomena and about the process of modeling itself. We use the term MBL to differentiate this process from model-based learning, which other scholars have given an entirely different meaning [e.g., Gobert & Buckley (2000) defined model-based learning as the construction of mental models of phenomena]. In this chapter, the term MBL signals that our interest lies in the process of modeling itself. The term model-based learning could also be misleading in other ways; for instance, it could denote learning that occurs only when a model is used as an end product.

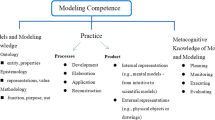

MBL has been widely advocated as an approach that can meaningfully engage students in authentic practices of learning of and about science (Louca et al., 2011; Louca & Zacharia, 2015), and over the years, a number of studies have verified its effectiveness. However, these studies have focused mostly on high-school and university students. We know much less about MBL among K-6 students, specifically with respect to detailed descriptions of how young students work with MBL in science. Such information is vital for designing curricula and learning materials that enable younger students to develop their modeling competence. By modeling competence, we mean students’ understanding of modeling, models, and the use of models in science (Upmeier zu Belzen & Krüger, 2010; Chap. 1). By modeling epistemologies, we refer to students’ views and knowledge of the models they construct and use through MBL. Students’ understanding of the nature of model development through MBL resembles what Louca, Elby, Hammer, and Kagey (2004) described in their account of students’ personal epistemologies and their effect on science learning: students with sophisticated epistemological views engage more actively in the learning process, which leads to a better conceptual understanding of physical phenomena.

In this chapter, we describe an investigation of whether an already established framework for modeling competence (FMC; Chap. 1) captures the modeling competence of young novice modelers. Previous research has shown that young novice modelers (i.e., K-6 students) engage in modeling differently from older learners (Louca & Zacharia, 2015). For this chapter, we analyzed data from young learners who specifically followed MBL as described by Louca and Zacharia (2015). The analyses used the FMC as a reference to investigate whether it could capture the modeling competence of young novice modelers and, if it could not, whether our data could enrich the existing framework to accommodate young students’ understandings of modeling competence.

2 Theoretical Background

MBL has been recognized as a learning approach that could support formal science learning as early as the preschool years. MBL has also been highlighted in the most recent NRC Framework for K-12 Science Education (NRC, 2012), where it is noted as one of the basic scientific practices and its added value is argued for across K-12. In fact, the authors of this framework emphasize that MBL should be applied as early as possible:

Modeling can begin in the earliest grades, with students’ models progressing from concrete “pictures” and/or physical scale models (e.g., a toy car) to more abstract representations of relevant relationships in later grades, such as a diagram representing forces on a particular object in a system. Young students should be encouraged to devise pictorial and simple graphical representations of the findings of their investigations and to use these models in developing their explanations of what occurred (p. 58).

In an MBL context, science learning is supported through a recursive modeling process in which students engage in several steps: constructing, using, evaluating, and revising/reconstructing models that represent physical phenomena (Lesh & Doerr, 2003; Schwarz et al., 2009). Learning is facilitated by observing the natural phenomenon or system at work and representing it through a model, which consists of “elements, relations, operations, and rules governing interactions that are expressed using external notation systems” (Lesh & Doerr, 2003, p. 10). Notably, learning occurs through both successes and failures. For instance, after constructing a model to represent a phenomenon, students often notice unforeseen effects and implications of the presence or absence of a particular representational choice, and they proceed to make changes, which results in revising and reconstructing their model. This illustrates the recursive nature of modeling and indicates that MBL is a gradual learning process.

In addition to representing a phenomenon through a series of steps, MBL involves the development of meta-modeling knowledge (i.e., knowledge about how and why models are used and what their strengths and limitations are; for more details, see Schwarz & White, 2005). MBL is a construct that blends the practice of modeling with meta-knowledge (Nicolaou & Constantinou, 2014; Gilbert & Justi, 2016; Chap. 3). This means that, in addition to following a series of steps to enact the modeling process, learners need to understand the purpose of each of these steps as well as the characteristics of the model (i.e., the purpose of its elements, relations, operations, and interactions).

Looking across the MBL literature, various frameworks exist concerning the steps that need to be followed to enact modeling (e.g., Louca & Zacharia, 2015; Hestenes, 1997; Lesh, Hoover, Hole, Metcalf, Krajcik, & Soloway, 2000; Windschitl, Thompson, & Braaten, 2008). However, several basic principles overlap significantly across all these frameworks. Nearly all of them involve a construction step and an evaluation step. The differences emerge when the details of each framework are examined. For instance, some frameworks support the idea that modeling is a cyclical process in which learners go through the same steps in each modeling cycle (e.g., Constantinou, 1999). Other frameworks depict modeling as a spiraling process in which learners do not necessarily go through all the steps of the modeling process in each cycle (Louca & Zacharia, 2015). Beyond the overlap in the steps of the modeling process, Samarapungavan, Tippins, and Bryan (2015) argued that these frameworks also overlap in terms of the way modeling (a) shapes children’s epistemic learning goals, such that they learn to inquire on their own, (b) transforms students into producers of knowledge (i.e., inventors of models) rather than the consumers of knowledge that traditional approaches usually produce, and (c) blends learning about “content” and “process,” which enables students to perceive science learning in its proper dimensions.

Finally, the MBL approach falls within the family of inquiry-based learning approaches. Through the inquiry prism, MBL can be seen as a blend of cognitive (science concepts and scientific inference processes), epistemic (knowledge validation and evaluation), and social (understanding the sociocultural norms and practices of science) dimensions (for details, see Duschl & Grandy, 2008). According to Windschitl et al. (2008), model-based inquiry may provide a more epistemically congruent representation of how science works today. For example, it could “embody the five epistemic features of scientific knowledge: that it is testable, revisable, explanatory, conjectural, and generative” (p. 964).

2.1 The MBL “Cycle” of Young Modelers

When engaging in modeling, K-6 modelers follow a different route (Louca et al., 2011; Louca & Zacharia, 2012, 2015) from the one described in the general literature for older modelers (e.g., Krell & Krüger, 2016). More specifically, both the content of the various modeling practices/steps and the sequence in which these practices occur differ. Young students’ modeling work initially follows a four-step MBL cycle (Fig. 14.1). The same steps have been found in other modeling cycles (e.g., Hestenes, 1997; Krell & Krüger, 2016; Lesh et al., 2000; Windschitl et al., 2008); however, for K-6 modelers, the level of sophistication is different. For instance, these students usually start modeling at a superficial level, representing only parts of the phenomenon without including any aspects of the underlying mechanism. Additionally, K-6 students’ modeling begins as a cyclical process but usually evolves into a spiraling one. This implies that not all modeling steps/practices are followed in the consecutive modeling rounds in which K-6 modelers aim to improve their models (by modeling round, we mean a complete enactment of the four steps of the MBL “cycle”).

Fig. 14.1 The modeling-based learning “cycle” (adapted from Louca & Zacharia, 2015)

The MBL “cycle” begins with young students observing and investigating a physical phenomenon or part(s) of it. In doing so, the young students start building a story for the phenomenon. This story is based on observations, prior knowledge, ideas, and experiences. It begins at a superficial level, but as the modeling progresses, its level of sophistication gradually increases (e.g., aspects of the phenomenon’s underlying mechanism are added). Prior student knowledge, ideas, and experiences appear to be a significant repository of data and information for students engaging in modeling, with K-6 students referring heavily to their experiences during the MBL “cycle.” After K-6 modelers develop a story that describes the physical phenomenon under study, they proceed directly to the construction of an external, concrete model, skipping the construction of an internal, mental model. For older learners/modelers, the start of the modeling cycle is more sophisticated: it involves observation, understanding the purpose of the model, prior knowledge and experience, and the construction of a mental model (Nersessian, 2008) that can later be translated into an external model (Mahr, 2015).

In the construction step of the MBL “cycle,” K-6 modelers follow two different practices: planning and development. Planning mostly consists of breaking down the phenomenon under study into small pieces that can be incorporated into an external model. In this sense, planning for K-6 modelers is a process of identifying parts of the phenomenon and treating them separately, rather than envisioning and treating all, or at least several, of the phenomenon’s parts together. This explains why these modelers skip or fail to build mental models before constructing an external one: when K-6 modelers start modeling, they have not collected the minimum amount of information needed to put together a mental model of the phenomenon. Previous research (Louca et al., 2011) has suggested that novice modelers need to start developing an external model in order to realize that they need to look for the model’s missing components.

The development of the model resembles the “writing and debugging” process of formal programming. The young modelers write their story (see above), identify the components of the model described in this story, and then proceed to construct a model. They talk about their model, revise their story, make small changes, talk about the model some more, and make additional small changes until they feel that the representation matches their story. This back-and-forth process is not a formal evaluation of the constructed model but rather a trial-and-error process of reaching the representation/model they agreed to construct in their story (Chap. 13). During this step, students actually “invent” the physical objects (e.g., a ball), the physical processes (e.g., moving the ball), and the physical entities (e.g., velocity, acceleration) comprising the phenomenon (e.g., the free fall of objects). To invent here means to find ways to represent these aspects in the models under construction; for example, finding a way to represent velocity. Older modelers follow a more sophisticated modeling approach from the outset, in which the necessary model objects, processes, and entities are usually present owing to prior knowledge or observations (Krell & Krüger, 2016). Furthermore, the internal and external models they construct include some kind of underlying mechanism from the beginning of the modeling process (e.g., older students usually know and use mathematical formulas related to the phenomenon). When older students feel that they have constructed a satisfactory model, they move on to a process of formal evaluation (e.g., Hestenes, 1997). For K-6 modelers, this process does not begin automatically; rather, the teacher needs to initiate it (Louca et al., 2011). Once formal evaluation is under way, it usually takes two major forms. First, learners use their model to see whether it can explain the data they collected or the experiences they recalled or used as a starting point for the model’s construction. Second, they evaluate their model in terms of logic, that is, whether the model represents a plausible mechanism that can account for what is observed. For example, K-6 students start by comparing their model to the actual phenomenon and examining whether it represents and simulates the phenomenon under study, usually only superficially (e.g., for free fall, K-6 modelers are satisfied to see their object fall to the ground; issues of velocity or acceleration are not considered at this point unless the teacher points them out). Finally, over the years, we have found limited evidence of novice modelers deploying or decontextualizing their model into a new situation or phenomenon in an effort to evaluate its explanatory power, as suggested by Constantinou (1999).

Another major difference between the MBL “cycle” and other modeling cycles (e.g., Krell & Krüger, 2016) is that any revisions K-6 modelers make to the constructed model in a subsequent modeling cycle occur within the investigation (i.e., during the formulation of the story) and construction steps. In this sense, revision becomes an epistemological or meta-modeling process (Papaevripidou et al., 2009; Schwarz et al., 2009) in which students decide which route is more appropriate for revising their model (i.e., students stop following a sequential modeling procedure and pick the modeling step that needs to be revisited, either the investigation or the construction step, in order to revise their model).

To sum up, the MBL “cycle” begins as a cycle and gradually evolves into a spiral. In this context, K-6 students skip certain steps of the modeling process as successive modeling rounds are enacted. Previous studies (Louca et al., 2011; Louca & Zacharia, 2012, 2015) suggested that during the first round of modeling, novice modelers usually identify the physical objects and focus on obtaining a model that looks like the phenomenon they have observed in real life. Only after this, during the second modeling round, can they identify and represent the physical behaviors of the identified objects, thus moving from how their model looks to how it functions, which represents a shift from an ontological to an epistemological perspective of modeling. In subsequent modeling rounds, novice modelers can progress to identifying, characterizing, and representing physical entities, which usually consist of concepts represented as variables.

Finally, data from previous studies (Louca et al., 2011; Louca & Zacharia, 2012, 2015) have not shown that novice modelers engage in solid thought experiments during modeling (Krell & Krüger, 2016). In addition, novice modelers have not been found to use the resulting models to formulate hypotheses that they later test through experimentation in the real world. The latter prevents K-6 students from understanding how the model and the experiential world are connected and how the model can be applied.

2.2 MBL in K-6 Science Education

Research focusing on the K-6 age range has shown that students can engage in the process of modeling (e.g., Acher, Arca, & Sanmartí, 2007; Forbes, Zangori, & Schwarz, 2015; Manz, 2012; Schwarz et al., 2009). For example, Schwarz et al. (2009) showed that K-6 modelers are able to enact the steps involved in the modeling process, namely constructing, using, evaluating, and revising/reconstructing models to represent physical phenomena. The hands-on and minds-on nature of the modeling cycle is well suited to science learning at such young ages (Louca & Zacharia, 2015; Samarapungavan, Tippins, & Bryan, 2015; Zangori & Forbes, 2016).

According to the literature in this domain, models serve as sense-making tools that help these students bridge their conceptual understanding, their observations, and the underlying scientific theory (Coll & Lajium, 2011; Gilbert, 2004). Given this, K-6 students can develop models that represent their understanding of a phenomenon or system and then use these models to engage in scientific reasoning and to form explanations of how and why the phenomenon or system works (Forbes et al., 2015; Schwarz et al., 2009; Verhoeff, Waarlo, & Boersma, 2008).

In a study of classroom discourse during MBL (Louca, Zacharia, et al., 2011), we described three distinct types of discourse (modeling frames) in which learners engaged: (a) (initial) phenomenological description, (b) operationalization of the physical system’s story, and (c) construction of algorithms. By modeling frames, we mean the different ways in which students understand the learning process that is taking place and how they participate in it. These findings suggest that students who engage in MBL by following the same modeling practices may nonetheless be engaged in different modeling frames. In other words, they engage in modeling with different purposes, different end goals, and different combinations of modeling practices.

In a different MBL study (Louca & Zacharia, 2015), we identified a number of modeling practices that young students tend to follow, suggesting that novice modelers enact modeling differently from more advanced modelers. For instance, we argued that the revision phase of MBL is an epistemological procedure and that any revisions of the constructed model arising during a particular iteration of modeling occur within the investigation and construction phases. Additionally, the decomposition of the phenomenon under study into smaller parts happens within the model construction phase of the modeling process rather than during the investigation phase (Louca & Zacharia, 2015).

Such variation is consistent with earlier work (Louca & Zacharia, 2008, 2012) suggesting that student modeling may take several different forms depending on how students frame their work: the process may become technical (with respect to the code underlying their programming decisions) or conceptual (with respect to the way causal agents such as velocity are represented through code). It can also become procedural (describing how something happens over time) or causal (describing how an agent affects a physical process).

A different line of research has investigated students’ understanding of models and modeling in science and has produced the FMC (Chap. 1). The most important contribution of this framework is that it identifies a number of model-related issues that can be used to describe students’ understanding of models and modeling in science. Equally important, the framework’s three levels of student understanding distinguish among descriptive, explanatory, and predictive views of the use and function of models in science. Other types of differentiation could also be applied and could be valuable (e.g., the functional nature of models, that is, models that represent how a phenomenon is caused or functions, rather than simply describing it). Nevertheless, this distinction, and the ability it affords to differentiate between students’ understanding of models and their understanding of model use, is valuable.

Given the particular ways in which K-6 students engage in modeling, the goal of the study was to investigate their understanding of models and of model use. Thus, we examined how fifth graders’ MBL experience influenced their modeling competence with respect to the five aspects described in the FMC (Fig. 14.2). In line with work on student epistemologies in science (e.g., Louca et al., 2004), and in an effort to account for and describe the ways students see and use models during science learning, we analyzed data that captured the ways in which students work in authentic classroom contexts as described by MBL (Louca & Zacharia, 2012, 2015; Gilbert & Justi, 2016). The idea was to enrich the existing FMC to include young students’ understanding of models and modeling.

Fig. 14.2 The framework for modeling competence (cf. Fig. 1.3)

3 Methods, Data Sources, and Analyses

3.1 Study Context

This study involved three classes of fifth-grade students in two public metropolitan elementary schools in Cyprus (a total of 48 students working in groups of 2 or 3). Students in all three classes met with the same teacher once a week for 80 min for a total of 7 months. Following a case study approach (Yin, 1994), each physical phenomenon studied by each class was treated as a separate case. For this study, data from nine cases (three classes × three topics) were used to describe in detail the process of developing models for physical phenomena (Fig. 14.3). All students had access to a variety of modeling media (computer-based programming environments, paper-and-pencil, three-dimensional materials) to construct models for three similar and two different physical phenomena (a total of five phenomena for the entire study; Fig. 14.3). The duration of the study for each group ranged from 3 to 5 weeks.

3.2 Data Sources

Whereas previous studies of students’ understanding of models and modeling have collected data through student interviews and questionnaires, in our study, we investigated students’ understanding using discourse and artifact data, in an effort to inform research with findings from alternative data sources. Our effort focused on identifying elements related to the five aspects of models in the FMC (Fig. 14.2).

Transcripts from videotaped conversations from all the case studies served as the primary source of data. A total of 1151 min of student conversations were analyzed. To triangulate the findings, student-constructed models collected at the end of each student meeting session were analyzed.

3.3 Data Analysis

Following previous research, the analyses of student discourse and student-constructed models focused on the prime constituents/players of models of physical phenomena (Louca et al., 2011), namely physical objects, physical entities, and physical processes. As the first step of the analysis, Louca and Zacharia’s (2012) coding scheme for discourse and artifact data was used. As presented in Fig. 14.4, the discourse coding scheme differentiates among students’ discussions of physical objects, physical entities, and physical processes and specifies the different ways in which each can be characterized. Discussion of physical objects usually concerns two different things: (a) the description of the story of the physical objects or physical system under study and (b) the description of students’ experiences in support of these stories. Descriptions of physical processes and physical entities, in turn, were characterized by three different ways in which students talked about them (conceptually, quantitatively, and operationally).

Fig. 14.4 Codes used to analyze modeling practices, adapted from Louca et al. (2011)

Student-constructed models were analyzed using an artifact analysis adopted from another study (Louca, Zacharia, Michael, & Constantinou, 2011). The codes from this analysis captured the ways in which students represented different elements in their models: physical objects (characters), physical entities (variables), physical processes (procedures), and physical interactions. Figure 14.5 presents the codes used for the artifact analysis. The results of this analysis were added to the timeline graphs, aligned with the time at which each model was constructed, so that they could be used to triangulate the discourse data.

Fig. 14.5 Codes used for the analysis of student-constructed models, adapted from Louca et al. (2011)

After all discourse and artifact data were coded, the discourse data were used to develop nine separate timeline graphs, one per case study, presenting the sequence of student conversation as characterized by our analysis. Coded utterances were displayed in the timeline graphs to reveal the temporal interrelationships of the coded statements. Then, based on previous work (Louca & Zacharia, 2015), the timeline graphs were structured according to the MBL “cycle” iterations that students engaged in during the study: each time students completed a modeling “cycle” and were about to begin a new one, we counted this as one MBL “cycle” iteration.
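To make the segmentation step concrete, the following Python sketch shows one way coded utterances from a single case study could be split into MBL “cycle” iterations and tallied per round. It is illustrative only: the (minute, code) record format, the code labels, the round boundary times, and the function names segment_by_round and codes_per_round are hypothetical and are not taken from the study’s actual analysis procedure or tools.

```python
from bisect import bisect_right
from collections import Counter

# Hypothetical code labels loosely mirroring the discourse scheme
# (objects: story/experience; processes and entities:
# conceptual/quantitative/operational). Not the study's actual codebook.


def segment_by_round(utterances, round_starts):
    """Split coded utterances into MBL "cycle" iterations (rounds).

    utterances   -- list of (minute, code) pairs for one case study
    round_starts -- sorted minutes at which rounds 2, 3, ... begin
    Returns {round_number: [(minute, code), ...]} in temporal order.
    """
    timeline = {}
    for minute, code in sorted(utterances):
        # bisect_right counts how many round boundaries have passed;
        # an utterance exactly at a boundary belongs to the new round.
        round_number = bisect_right(round_starts, minute) + 1
        timeline.setdefault(round_number, []).append((minute, code))
    return timeline


def codes_per_round(timeline):
    """Count how often each code occurs within each round."""
    return {rnd: Counter(code for _, code in items)
            for rnd, items in timeline.items()}


if __name__ == "__main__":
    coded = [
        (5, "object:story"),
        (12, "process:conceptual"),
        (35, "process:operational"),
        (40, "object:story"),
        (70, "entity:operational"),
    ]
    # Hypothetical boundaries: round 2 starts at minute 30, round 3 at 60.
    timeline = segment_by_round(coded, round_starts=[30, 60])
    for rnd, counts in codes_per_round(timeline).items():
        print(rnd, dict(counts))
```

Keeping the round boundaries as an explicit, sorted list reflects the idea that iteration boundaries are an analyst’s judgment recorded separately from the utterance codes, so they can be revised without recoding the transcript.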

Based on these data, nine case studies of the MBL “cycle” were developed, including transcript excerpts and examples of student-constructed models. During this step, descriptions of the context of each round of modeling in each case study were added, with the goal of providing as detailed an account of each case study as possible. The description of context included students’ overall goals during the MBL “cycle.” The modeling frames from Louca and Zacharia (2012) were used to describe this context: modeling frame I, (initial) phenomenological description; modeling frame II, operationalization of the story of the physical system; and modeling frame III, construction of algorithms.

In the last step of the analysis, each of the nine case studies was revised by applying the three levels of the FMC to each round, focusing on (1) the nature of the models, (2) the existence of multiple models, (3) the purpose of the models, (4) the process of testing models, and (5) the process of changing models. Students’ discourse and the models they developed in each round were described separately for each aspect of the framework. For this step, the transcripts were not coded line by line; rather, a description was written for each timeline section (round of modeling) of each case study.

4 Findings

4.1 Nature of Models

Both analyses (discourse and artifact) focused on the elements of models that students included either in the models they constructed or in the models they discussed during their group modeling work. Physical objects were addressed in student conversations (and represented in student-constructed models) from modeling round 1 of the students’ work (for all nine sub-cases with 3 rounds of modeling), suggesting that developing models first requires students to represent the “players” involved in the phenomenon (physical objects) before moving on to the model’s other properties. This finding was verified by the artifact analysis of the models of all student groups in all sub-cases (Fig. 14.6).

However, physical processes (including interactions) and physical entities were found to be context dependent. The discourse analysis revealed that in most student groups, physical processes and physical entities were discussed during modeling round 1 only at the conceptual and quantitative levels, whereas discussions about operationalizing them appeared only in modeling rounds 2 and 3. This is also supported by the artifact analysis, which indicated that in most cases, physical processes and physical entities appeared in the models in non-causal forms as early as round 1; physical processes, however, appeared in the models as a mixture of semi-causal and causal representations in both rounds 2 and 3. Physical entities, on the other hand, appeared in student models in semi-causal forms only in round 2 and in causal forms only in round 3. Interestingly, all of the abovementioned findings were confirmed in all student groups and for all phenomena studied, independent of the number of previously modeled phenomena.

These findings suggest that modeling round 1 is usually characterized by a process of developing models as phenomenological descriptions of the phenomena under study. We have previously characterized this discourse as modeling frame I (Louca & Zacharia, 2012). Working within modeling frame I, students described the story of the overall physical system and/or the story of the individual physical objects involved in the phenomenon under study. They told these stories as a temporal sequence of "scenes" that captured the phenomenon, without addressing the individual objects' behaviors that produced the overall phenomenon. Likewise, students did not talk about any of the necessary components of a scientific model or about how the phenomenon took place. Everyday experiences served as reality checks supporting students' ideas about what would happen in the phenomenon under study. All of this fits level I of the nature-of-models aspect of the FMC (Fig. 14.2), suggesting that students develop models that are, as far as possible, copies of the reality (phenomenon) they study.

By contrast, the data suggest that modeling rounds 2 and 3 reflect an understanding of the nature of models at level II (Fig. 14.2). From the end of modeling round 1, students focused on improving how well their models served as idealized representations of the phenomena under study. In subsequent rounds of modeling, there were also some limited indications of level III, where students' efforts focused on developing a representation that would produce the phenomenon (through the relationships between physical processes and physical entities) rather than simply depict it.

4.2 Purpose of Models

The discourse data suggest that students viewed, used, and/or visualized the models they constructed differently in different rounds of modeling. Adopting the terminology of earlier work on modeling (Louca & Zacharia, 2012), during modeling round 1 students conceptualized their models as phenomenological descriptions of the phenomenon under study. These models simply told the story of the overall physical system and/or the story of the individual physical objects involved. The same was true when, in subsequent rounds of modeling, students discussed a new phenomenon or new features they wanted to add to their models. The artifact analysis suggested that these discussions led to descriptive models of physical phenomena, which presented scenes from the phenomenon in temporal sequence without any reference to the underlying causal mechanism.

In modeling round 2, the students' purpose seemed to shift toward describing the story of the physical entities. They included the objects' characteristics (e.g., velocity and acceleration) and the objects' behaviors (e.g., accelerated motion) in an effort to operationalize the story of the physical system. This discussion led to models of physical phenomena with both descriptive and causal features. This view of the purpose of models and of the modeling process seemed to emerge as students translated the story of the physical system into programmable code in order to develop models of the phenomenon.

In modeling round 3, students identified and investigated the relationships between physical entities. Because their models needed to have this property, students were motivated to adopt a construction-of-algorithms view of the model construction process, which helped them operationally define both the physical entities and the physical processes. They did this by translating descriptive ideas about the phenomenon into operationally defined causal representations of the relationships between different components of the phenomenon.

In terms of the FMC, the data showed that students appeared to progress across different levels of understanding of the purposes of the models they constructed. Students in all nine cases started at level I and progressed to level II, even though they had prior experience with the MBL "cycle." Evidence of progress to level III, in which students used their models to predict something about the phenomenon under study, was not consistent. Level III conversations occurred only in cases 3 and 4 (water cycle and diffusion). During the evaluation stages, students brought similar phenomena into the conversation in order to evaluate the accuracy of the model under evaluation. For instance, in the case of diffusion (the phenomenon studied was the diffusion of a drop of red food coloring in a beaker of water), students used their experience with dissolving sugar in water to test whether their model was accurate enough to predict this phenomenon. In this sense, as with levels I and II, the data suggest that the activation of more advanced levels is context dependent. However, the nature of this dependency seems to differ. The move from level I to level II appears to depend on the modeling round the students are in and, thus, on the context or content of their actual modeling work. In every case, students started at level I when they began work on a new model or phenomenon, and in subsequent rounds some of their conversations reached level II. For level III, there seemed to be an additional layer of context dependency, apparently related to the type of phenomenon under study. Kinematics phenomena (cases 1, 2, and 5) did not activate or "spark" level III conversations about the purpose of models.

4.3 Multiple Models

Regarding the existence and usefulness of multiple models representing the same phenomenon, the analysis suggested that students did not discuss this idea before modeling round 2. As noted above, during modeling round 1 students focused on obtaining a model that works (Louca & Zacharia, 2008) and that acts as a phenomenological description of the phenomenon under study (Louca & Zacharia, 2012). Modeling round 1 ended with an evaluation of the first model students constructed (Louca, Zacharia, Michael et al., 2011). This evaluation seemed to provide a context in which students adopted and discussed the idea that a phenomenon can be represented by multiple different models, each with its own limitations and advantages. The discourse data suggest that this idea remained active for the rest of the modeling unit (all remaining rounds) but disappeared again during the first round of the next phenomenon modeled. This may indicate a contextual relationship with the type of student work, particularly the modeling round, and with students' notions of the purpose of the model.

During modeling round 1, students seemed to view and talk about differences between multiple models of the same phenomenon as differences between the models themselves, not as differences between alternative representations of different phenomena. This corresponds to level I. However, evaluating the models they constructed seemed to help students see differences between their models as alternative ways of representing different parts of the phenomenon under study, which corresponds to level II. Nevertheless, none of the data showed that students viewed models as tools for making predictions about the phenomenon under study, although this might be related to the role of the teacher and how he approached modeling in his science teaching.

4.4 Testing and Changing Models

Only after modeling round 1 did students start talking about testing, editing, and making changes to their models. In round 1, students focused on obtaining a model that showed reality, and their main concern was to make their models look like the real phenomenon. Once activated, the idea of revising and testing their models remained active for the rest of the modeling unit but disappeared again during the first round of the next phenomenon they were modeling.

Students did not have any conversations that reflected level III, which includes viewing models as theoretical tools for making predictions about aspects of the phenomenon under study. In light of the findings for the other aspects of the FMC, it remains unclear whether this absence was due to students' lack of knowledge, modeling experience, or abilities. It might instead be related to the role of the teacher and to the data collection period, during which the emphasis was placed primarily on developing models of the phenomenon rather than on using models as tools for investigating and learning about phenomena. Therefore, the FMC might serve as an instructional guide for teachers and researchers in preparing or designing lesson plans for MBL in science.

Further, most student work in modeling round 1 reflected level I because students emphasized the testing or changing of a model itself. However, level II appeared in modeling rounds 1 and 2, with no apparent pattern regarding when students tested and changed models after they compared their models with the phenomenon under study.

5 Discussion and Conclusions

In this chapter, we presented findings from applying the FMC to K-6 modelers. Specifically, the purpose was to investigate fifth-graders' understanding of models and modeling during MBL. Rather than asking students directly about their understanding through interviews and questionnaires, we adopted a discourse-based perspective and analyzed students' modeling work to identify the levels at which their understanding of models and modeling could be located within the various aspects of the framework.

One of the main themes running across all the findings is that there was no substantial evidence that the students reached level III in any of the five aspects investigated. This could suggest several things. It is possible that the FMC describes the understanding of models and modeling of older students, or of students across a wide range of ages, in which case it may be reasonable to expect that K-6 students will not reach level III. In this sense, some understandings or abilities related to modeling processes, such as using models as tools to predict natural phenomena, may not develop until later ages. Of course, this needs to be investigated in more detail.

Nevertheless, across the various aspects, students sometimes worked at level I and sometimes at level II. At first glance, there did not seem to be a developmental pattern: the same students started a modeling unit at level I, then moved to level II, and then, in the next modeling unit (which began 2–3 weeks after the end of the first), once again started at level I. This pattern is consistent with the theoretical notion of resources proposed by Hammer and colleagues (Hammer, 2000; Hammer & Elby, 2002, 2003; Louca et al., 2004), according to which students may simultaneously hold an understanding of a particular idea at different levels, activating only one level at a time depending on the context.

Derived primarily from physics education research, the idea of modeling resources is used to identify student knowledge, abilities, or reasoning skills in relation to various modeling tasks. Instead of seeing the absence of a particular level as a need to help students develop modeling abilities they lack, it might be more productive to view it as a need to help students develop more reliable access to modeling resources that they might already have and that might be context-dependent (Gilbert & Justi, 2016; Krell, Upmeier zu Belzen, & Krüger, 2013; Chap. 13).

For example, as presented in the findings, the model evaluation tasks administered at the end of round 1 of MBL seemed to activate the notion that it is possible to have multiple different models for the same phenomenon, each one with its own advantages and disadvantages. This idea remained alive for the rest of the modeling unit, but it disappeared when a new modeling unit began until students again reached the evaluation point of their first models.

Similarly, students' views of the nature of models as theoretical reconstructions of the phenomenon (level III) seemed to coincide with students identifying and investigating the relationships between physical entities. A causal model in which physical entities and physical processes are operationally defined suggests that students likely viewed the modeling process as developing a theoretical reconstruction of the phenomenon, including the mechanism that underlies or causes it. In this study, students were not explicitly asked to reflect on these issues or to articulate their understanding of the nature of the models they had developed. Overall, although the data did not indicate that students had a level III understanding within a predictive frame, some level III understanding was visible in certain aspects of the FMC, as in the case of the nature of models (Grünkorn et al., 2014).

The context-dependencies of modeling resources (Krell et al., 2013) also deserve to be highlighted. The context of creating computer-based programs that could create general models of the phenomenon under study was vital for leading students to invent and define physical entities in the form of program variables. They would then use these in the program rules, which would include interactions between physical objects, their behaviors, and characteristics.

Given all this, we contend that instead of seeing the absence of particular levels as an indication that students need help developing modeling abilities they lack, it might be more productive to view it as a need to help students develop more reliable access to modeling resources that they already have but that may be context-dependent. This approach has different implications for MBL and instruction and may shape research on modeling competences in different ways. Further investigation of this issue is of course needed, particularly of how novice modelers can be supported in accessing these resources more reliably.

If we begin to sketch a framework for modeling resources, at least one other important implication emerges. The role of the teacher as a possible activator of different modeling resources needs to be considered (Samarapungavan, Tippins, & Bryan, 2015; Zangori & Forbes, 2016). As previously identified (Louca & Zacharia, 2012), there are moments in MBL with novice modelers when the teacher needs to push student thinking in a particular direction (i.e., toward a specific modeling step), especially when students' prior experience with the modeling process is limited. This is relevant to the present findings because the absence of level III may be related to the way the teacher enacted the MBL "cycle" or to his goals for each modeling unit.

A second but related implication is that the FMC might be used productively as a guide for teachers throughout MBL in science (Fleige, Seegers, Upmeier zu Belzen, & Krüger, 2012). In this sense, in addition to the designing of modeling units or learning sequences, this framework could help teachers identify and respond to students’ modeling difficulties during teaching and learning, thus providing teachers with a productive tool for helping students reach level III with respect to various aspects of the framework.

Finally, the data from this study included only student work and conversations during MBL in science. This is, of course, a limitation of the study, and it calls for a more thorough examination of MBL in other disciplines. However, as we have argued elsewhere (Hammer & Louca, 2008), different ways of investigating the same phenomenon may reveal different aspects or pictures of reality, suggesting that a detailed investigation might need to draw on a number of different research methods.

Notes

1. The quotation marks denote that it is not always a cyclical process; it could also turn into a spiraling process, as we discuss later in the chapter.

References

Acher, A., Arca, M., & Sanmartí, N. (2007). Modeling as a teaching learning process for understanding materials: A case study in primary education. Science Education, 91, 398–418.

Coll, R. K., & Lajium, D. (2011). Modeling and the future of science learning. In M. S. Khine & I. M. Saleh (Eds.), Models and modeling: Cognitive tools for scientific enquiry (pp. 3–21). New York: Springer.

Constantinou, C. P. (1999). The Cocoa microworld as an environment for modeling physical phenomena. International Journal of Continuing Education and Life-Long Learning, 8(2), 65–83.

Duschl, R. A., & Grandy, R. E. (2008). Teaching scientific inquiry: Recommendations for research and implementation. Rotterdam, The Netherlands: Sense Publishers.

Fleige, J., Seegers, A., Upmeier zu Belzen, A., & Krüger, D. (2012). Förderung von Modellkompetenz im Biologieunterricht [Fostering modeling competence in biology classes]. Der mathematische und naturwissenschaftliche Unterricht, 65(1), 19–28.

Forbes, C. T., Zangori, L., & Schwarz, C. V. (2015). Empirical validation of integrated learning performances for hydrologic phenomena: Third-grade students’ model-driven explanation-construction. Journal of Research in Science Teaching, 52(7), 895–921.

Gilbert, J. K. (2004). Models and modelling: Routes to more authentic science education. International Journal of Science and Mathematics Education, 2(2), 115–130.

Gilbert, J. K., & Justi, R. (2016). Modelling-based teaching in science education. Cham, Switzerland: Springer.

Gobert, J. D., & Buckley, B. C. (2000). Introduction to model-based teaching and learning in science education. International Journal of Science Education, 22(9), 891–894.

Grünkorn, J., Upmeier zu Belzen, A., & Krüger, D. (2014). Assessing students' understandings of biological models and their use in science to evaluate a theoretical framework. International Journal of Science Education, 36(10), 1651–1684.

Hammer, D. M. (2000). Student resources for learning introductory physics. American Journal of Physics, Physics Education Research Supplement, 68(S1), S52–S59.

Hammer, D. M., & Elby, A. (2002). On the form of a personal epistemology. In B. K. Hofer & P. R. Pintrich (Eds.), Personal epistemology: The psychology of beliefs about knowledge and knowing (pp. 169–190). Mahwah, NJ: Lawrence Erlbaum.

Hammer, D. M., & Elby, A. (2003). Tapping epistemological resources for learning physics. Journal of the Learning Sciences, 12(1), 53–90.

Hammer, D. M., & Louca, L. (2008). Challenging accepted practice of coding. Paper presented at the symposium "How to study learning processes? Reflection on methods for fine-grain data analysis" at the International Conference of the Learning Sciences (ICLS), The Netherlands, 24–28 June.

Hestenes, D. (1997). Modeling methodology for physics teachers. In E. F. Redish & J. S. Rigden (Eds.), The changing role of physics departments in modern universities: Proceedings of international conference on undergraduate physics education (pp. 935–957). New York, NY: The American Institute of Physics.

Justi, R. S., & Gilbert, J. K. (2002). Science teachers’ knowledge about and attitudes towards the use of models and modelling in learning science. International Journal of Science Education, 24(12), 1273–1292.

Krell, M., & Krüger, D. (2016). Testing models: A key aspect to promote teaching-activities related to models and modelling in biology lessons? Journal of Biological Education, 50, 160–173.

Krell, M., Upmeier zu Belzen, A., & Krüger, D. (2013). Students’ levels of understanding models and modelling in biology: Global or aspect-dependent? Research in Science Education, 44(1), 109–132.

Lesh, R., & Doerr, H. M. (2003). Beyond constructivism: Models and modeling perspectives on mathematics problem solving, learning and teaching. Mahwah, NJ: Lawrence Erlbaum.

Lesh, R., Hoover, M., Hole, B., Kelly, A., & Post, T. (2000). Principles for developing thought revealing activities for students and teachers. In A. Kelly & R. Lesh (Eds.), The handbook of research design in mathematics and science education (pp. 591–646). Mahwah, NJ: Lawrence Erlbaum Associates.

Linn, M. C. (2003). Technology and science education: Starting points, research programs, and trends. International Journal of Science Education, 25, 727–758.

Louca, L., Elby, A., Hammer, D. M., & Kagey, T. (2004). Epistemological resources: Applying a new epistemological framework to science instruction. Educational Psychologist, 39(1), 57–68.

Louca, L. T., & Zacharia, Z. C. (2008). The use of computer-based programming environments as computer modeling tools in early science education: The cases of textual and graphical program languages. International Journal of Science Education, 30(3), 137.

Louca, L. T., & Zacharia, Z. C. (2012). Modeling-based learning in science education: A review. Educational Review, 64(1), 471–492.

Louca, L. T., & Zacharia, Z. C. (2015). Learning through modeling in K-6 science education: Re-visiting the modeling-based learning cycle. Journal of Science Education and Technology, 24(2), 192–215.

Louca, L. T., Zacharia, Z. C., & Constantinou, C. P. (2011). In quest of productive modeling-based learning discourse in elementary school science. Journal of Research in Science Teaching, 48(8), 919–951.

Louca, L. T., Zacharia, Z. C., Michael, M., & Constantinou, C. P. (2011). Objects, entities, behaviors and interactions: A typology of student-constructed computer-based models of physical phenomena. Journal of Educational Computing Research, 44(2), 173–201.

Manz, E. (2012). Understanding the codevelopment of modeling practice and ecological knowledge. Science Education, 96(6), 1071–1105.

National Research Council [NRC]. (2012). A framework for K-12 science education: Practices, crosscutting concepts, and core ideas. Washington, DC: National Academies Press.

NGSS Lead States (Eds.). (2013). Next generation science standards: For states, by states. Washington, DC: National Academies Press.

Nicolaou, C. T., & Constantinou, C. P. (2014). Assessment of the modeling competence: A systematic review and synthesis of empirical research. Educational Research Review, 13, 52–73.

Papaevripidou, M., Constantinou, C. P., & Zacharia, Z. C. (2009). Unpacking the modeling ability: A framework for developing and assessing the modeling ability of learners. Paper presented at the annual conference of the American Educational Research Association (AERA), April 13–18, 2009, San Diego, CA.

Samarapungavan, A., Tippins, D., & Bryan, L. (2015). A modeling-based inquiry framework for early childhood science learning. In Research in early childhood science education (pp. 259–277). Amsterdam, The Netherlands: Springer.

Schwarz, C. V., Reiser, B. J., Davis, E. A., Kenyon, L., Achér, A., Fortus, D., et al. (2009). Developing a learning progression for scientific modeling: Making scientific modeling accessible and meaningful for learners. Journal of Research in Science Teaching, 46(6), 632–654.

Upmeier zu Belzen, A., & Krüger, D. (2010). Modellkompetenz im Biologieunterricht: Struktur und Entwicklung [Modeling competence in biology classes: Structure and development]. Zeitschrift für Didaktik der Naturwissenschaften, 16, 41–57. http://www.ipn.uni-kiel.de/zfdn/pdf/16_Upmeier.pdf

Verhoeff, R. P., Waarlo, A. J., & Boersma, K. T. (2008). Systems modelling and the development of coherent understanding of cell biology. International Journal of Science Education, 30(4), 543–568. https://doi.org/10.1080/09500690701237780

White, B. Y., & Frederiksen, J. R. (1998). Inquiry, modeling and metacognition: Making science accessible to all students. Cognition and Instruction, 16(1), 3–118.

Windschitl, M., Thompson, J., & Braaten, M. (2008). Beyond the scientific method: Model-based inquiry as a new paradigm of preference for school science investigations. Science Education, 92, 941–967.

Yin, R. K. (1994). Case study research: Design and methods. Thousand Oaks, CA: Sage Publications, Inc.

Zangori, L., & Forbes, C. T. (2016). Exploring third-grade student model-based explanations about plant relationships within an ecosystem. International Journal of Science Education, 37(18), 2942–2964.


Copyright information

© 2019 Springer Nature Switzerland AG

About this chapter

Cite this chapter

Louca, L. T., & Zacharia, Z. C. (2019). Toward an Epistemology of Modeling-Based Learning in Early Science Education. In: Upmeier zu Belzen, A., Krüger, D., van Driel, J. (eds) Towards a Competence-Based View on Models and Modeling in Science Education. Models and Modeling in Science Education, vol 12. Springer, Cham. https://doi.org/10.1007/978-3-030-30255-9_14


DOI: https://doi.org/10.1007/978-3-030-30255-9_14


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-30254-2

Online ISBN: 978-3-030-30255-9
