Abstract

Most existing ordinal regression methods are adapted from traditional supervised learning algorithms (e.g., support vector machines and neural networks) which have shown to work well mostly on dense data. However, the use of existing ordinal regression methods on sparse data has received less scrutiny. This paper proposes to address the sparsity issue arose in many real-world ordinal regression applications by leveraging the feature interaction modeling techniques. Following the popular threshold methodology in ordinal regression studies, we extend Factorization Machines, an effective solution to modeling pairwise feature interactions in sparse feature space, to ordinal regression. The proposed model, namely Factorization Machines for Ordinal Regression (FMOR), combines the ability of threshold methodology in predicting targets of ordinal scale with the advantages of factorization models in handling high-dimensional sparse data. Through extensive experimental studies, we show that the proposed FMOR is both effective and efficient against state-of-the-art baselines.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Ordinal regression is an important type of supervised learning paradigm, which aims to learn predictive models for ordinal targets. Ordinal regression problems are very common in massive domains from social sciences [9] to financial technology [1] and clinical research [23]. In recent years, ordinal regression has experienced significant developments, with many prevalent methods adapted from traditional machine learning algorithms such as support vector machines [3, 24], neural networks [2, 4, 6, 13], boosting [11] and discriminant learning [21, 22]. These methods have shown to be effective in many scenarios, but unavoidably, retain substantial weaknesses of the original methods. One significant challenge comes from the fact that the feature space can be of very high dimension but sparse in many real-world ordinal regression applications, e.g., collaborative filtering, click-through rate prediction, and computer-aided pathology diagnosis. It is known that the sparse representation problem greatly hinders the performance of traditional machine learning methods, as well as their extensions for ordinal regression [7].

One successful solution to the sparse representation problem is to model the inherent interactions among features because co-occurrence of features often helps reveal high-level domain knowledge about the task under consideration. One effective approach to model feature interaction is Factorization Machines (FM) [19], which embeds high-dimensional sparse features into a rank-low latent space and learns pairwise feature interactions via the inner product of features’ embedding vectors. Although originally proposed in the context of recommender systems, FM has yielded great promise in a wide range of prediction tasks, especially those with very high and sparse feature space [16,17,18, 25]. However, the target variables of traditional FM models can only be either discrete or continuous. Thus, the tradition FM does not yet cater for the ordinal relationship among learning targets. To our best knowledge, there is little work adapting FM for ordinal regression.

In this paper, we propose a novel Factorization Machines for Ordinal Regression (FMOR), in which the sparsity issue in ordinal regression is tackled through factorized feature interactions. Motivated by the popular threshold methodology of ordinal regression studies, the proposed FMOR extends the traditional FM by introducing a set of threshold parameters that map real-valued outputs of FM to ordinal labels. We implement the learning algorithm of FMOR based on stochastic gradient descent, and further claim that the ordinal threshold constraint required by threshold-based ordinal regression methods can be automatically satisfied by the derived model. Finally, we perform comprehensive experiments on several benchmark datasets and compare FMOR with state-of-the-art approaches. The results show that FMOR noticeably outperforms all counterparts, especially in case of sparse feature space.

The rest of this paper is organized as follows. Section 2 briefly discusses the literature of ordinal regression. Section 3 gives the details of the proposed factorization machines for ordinal regression. Section 4 reports the experimental results. Section 5 gives some conclusive remarks.

2 Related Work

Generally, ordinal regression methods can be classified into three categories: (i) naive methods, (ii) ordinal binary decomposition methods, and (iii) threshold-based methods.

Naive Methods. Ordinal regression, akin to nominal classification and metric regression, can be simplified into these conventional supervised learning paradigms by either ignoring the ordinal relationship among classes or casting ordinal labels into real values. A more advanced method of this type is to transform ordinal regression as cost-sensitive classification, in which the ordinal information is encoded as misclassification costs [10].

Ordinal Binary Decomposition Methods. The main idea of ordinal binary decomposition methods is to decompose the ordinal classes into several binary pairs, each modeled by single or multiple traditional classifiers. Lin et al. [12] proposed a reduction framework from ordinal regression to binary classification: each sample is extended with a series of ordinal patterns, then a binary classifier is learned for each ordinal class that answers the question: “Is the rank of \(\mathbf {x}\) greater than r or not?”. Liu et al. [14] made use of triplets with each element from a different rank as samples and a binary classifier is learned for each ordinal class that answers the question: “Is the rank of \(\mathbf {x}\) greater than \(r-1\) and smaller than \(r+1\)?”.

Threshold-Based Methods. Threshold-based methods have been one popular technique for handling ordinal samples. Threshold-based methods aim to estimate: (i) a latent regression function \(f(\mathbf {x})\) that maps the input feature space to a one-dimensional real space; and (ii) a set of thresholds \(b_1 \le \cdots \le b_R\) that cast the real-valued \(f(\mathbf {x})\) into an interval corresponding to an ordinal class. The proportional odds model (POM) [15] is one of the first threshold-based methods and inspires many subsequent studies. Another well-known threshold-based ordinal regression method is Support Vector Ordinal Regression (SVOR) [3, 20] that generalize the “large margin” principle adopted by support vector machines to ordinal regression. Two solutions to SVOR have been developed: one maximizes the margin of the closest neighboring classes (called fixed-margin strategy) and one maximizes the sum of margins of all classes (called sum-of-margin strategy). In a recent survey on ordinal regression [5], SVOR is proved to be the best threshold-based methods for its competitive performance on both prediction accuracy and training time.

3 The Proposed Method

In this section, we first give a preliminary introduction to the traditional FM, and then elaborate our proposed FMOR method.

3.1 Preliminary

Factorization Machines (FM) [19] are a generic model class that capable of dealing with high-dimensional and sparse features. Formally, FM takes as input a real valued vector \(\mathbf {x} \in \mathbb {R}^d\), and estimates the target by modelling pairwise interactions of sparse features using low-rank latent factors. The model equation of FM is formulated as:

where the parameters \({\Theta }\) have to be estimated are:

In Eq. 1, the first two items are linear combinations of every features with weights \(w_j \ (1\le j\le d)\) and a global bias \(w_0\), and the last item is pairwise feature interactions using a factorized weighting schema \(\hat{w}_{jj'}=\langle \mathbf {v}_j,\mathbf {v}_{j'} \rangle =\sum _{k=1}^p{v_{jk}\cdot v_{j'k}}\), where \(\mathbf {v}_j \) is factor vector of the j-th feature, and \(p\in \mathbb {N}^+\) is the hyper-parameter that defines the dimensionality of factor vectors. Feature factors in FM are commonly said to be low-rank, due to \(p\ll d\). Compared with traditional ways (e.g., polynomial SVM) to model feature interactions using separated interaction weights, the factorization schema of FM can reduce the model complexity from \(O(d^2)\) to \(O(p\cdot d)\), which is a favored property for high-dimensional feature space.

Furthermore, FM is practically efficient for its linear computation time complexity. The model equation of FM in Eq. 1 can be reformulated as:

Equation 2 indicates that the model equation of FM has only linear time complexity in both d and p. In fact, the pairwise feature interaction can be only computed over the non-zero elements of \(\mathbf {x}\), i.e., the computation complexity is \(O(p\cdot N_z)\). Under sparsity settings, \(N_z\) can be much smaller than d, thus the computation of decision function of FM can be very efficient. In brief, FM provides a promising framework for handling high dimensional and sparse data.

3.2 Factorization Machines for Ordinal Regression

We realize Factorization Machines for Ordinal Regression (FMOR) by leveraging the threshold methodology. The basic idea is to introduce a set of consecutive thresholds to partition real line into several intervals which define the boundaries of ordinal classes.

Given an ordinal regression problem with R ordinal classes, OrdinalFM estimates the target of an input vector \(\mathbf {x}\in \mathbb {R}^d\) as:

where \(b_1,\cdots ,b_{R}\in \mathbb {R}\) are the R thresholds partitioning real line into intervals, each corresponding to an ordinal class. Besides, the thresholds are required to satisfy the constraint \(b_1\le \cdots \le b_{R}\). For mathematical convenience, \(b_R\) is simply set as \(+\infty \). f(x) is the latent factor regression function that captures all possible interactions between features (up to second-order, practically):

Note that \(f(\mathbf {x})\) is the same as the traditional FM model in Eq. 1 except the global bias item. In essence, FMOR extend the traditional FM by introducing a set of thresholds instead of the single global bias. The thresholds are used to map the regression function value \(f(\mathbf {x})\) into ordinal targets. Particularly, an input is predicted as r if and only if \(b_{r-1}<f(\mathbf {x})\le b_r\).

The model parameters of FMOR that have to be estimated are:

Next, we discuss the learning procedure of FMOR, including the learning objective and the optimization algorithm.

The Learning Objective. Following the traditional supervised learning framework, the parameters \({\Theta }\) are learned from a given training set \(\mathcal {D}\) that minimizes the following regularized empirical risk:

where \(R_{\mathrm {emp}}\) is the empirical risk of an ordinal regression model on the training data, and \({\Omega }(\cdot )\) is the regularization item. \(\lambda \) is the trade-off between the empirical risk and regularizer of model parameters.

In order to account for the ordinal relationship among targets when calculating the empirical risk, we consider measuring the predicting errors w.r.t. each ordinal class. Formally, give a training set \(\mathcal {D}=\mathcal {D}^{(1)}\cup \cdots \cup \mathcal {D}^{(R)}\), where \(\mathcal {D}^{(r)}=\{(\mathbf {x}_i,r),\cdots ,(\mathbf {x}_{N_k},r)\}\ (1\le r \le R)\) is the set of training samples with the class r, the empirical risk of a FMOR model is defined as:

where \(\ell (\cdot )\) is the surrogate loss function that penalizes an erroneous prediction. In fact, the empirical risk is contributed over all thresholds, including the lower-grading ones (\(k=1,\cdots ,r-1\)) and the upper-grading ones (\(k=r,\cdots ,R\)), involved when a predicting error occurs.

Generally, a surrogate loss function is required to be monotonically decreasing in true positives. Moreover, smoothness is a derivable property such that efficient optimization techniques can be applied to estimate model parameters. Here, we adopt the smoothed hinge loss:

As mentioned above, the threshold parameters need to satisfy the ordinal inequality constraint \(b_1 \le \cdots \le b_{R}\). Interestingly, the constraint, although not being imposed on the learning procedure explicitly, can be automatically satisfied at the optimal solution of OrdinalFM, as will be shown in the following theorem.

Theorem 1

Let \({\Theta }^*=(b_1^*,\cdots ,b_{R-1}^*, \mathbf {w}^*, \mathbf {v}_1^*,\cdots ,\mathbf {v}_d^*)\) be the optimal solution of the regularized empirical risk minimization problem in Eq. 5, i.e.,

Then we have \(b_1^* \le \cdots \le b_{R-1}^*\).

Theorem 1 not only establishes a nice property of FMOR but also induces a heuristic that is helpful for finding a better FMOR model. Theorem 1 leads to the following corollary.

Corollary 1

Given two solution \(\dot{\Theta }=(\dot{b}_1,\cdots ,\dot{b}_{R-1},\dot{\mathbf {w}},\dot{\mathbf {v}}_1,\cdots ,\dot{\mathbf {v}}_d)\) and \(\ddot{\Theta }=(\ddot{b}_1,\cdots ,\ddot{b}_{R-1},\dot{\mathbf {w}},\dot{\mathbf {v}}_1,\cdots ,\dot{\mathbf {v}}_d)\), where \((\ddot{b}_1,\cdots ,\ddot{b}_{R-1})\) is sorted in an ascending order of \((\dot{b}_1,\cdots ,\dot{b}_{R-1})\), we have \(R_{\mathrm {emp}}(\ddot{{\Theta }},\mathcal {D})\le R_{\mathrm {emp}}(\dot{{\Theta }},\mathcal {D})\).

Due to space limitation, the proofs will be provided in the full version of the paper.

The Learning Algorithm. We employ the Adaptive Moment Estimation (Adam) [8] algorithm, a popular variant of stochastic gradient descent algorithm that uses adaptive per-parameter learning rates, to solve the regularized empirical risk minimization problem in Eq. 5. The main idea is to iterate over each sample \((\mathbf {x},r)\) in the training set, and update model parameters towards the direction of negative gradient of the objective:

where \(\eta ^{(\theta ,t)}\) is the individual adaptive learning rate for \(\theta \) at the t-th iteration.

For the empirical risk in Eq. 6, the gradient is given by

From Eq. 7, the gradient of the smoothed hinge loss is:

From Eq. 4, the gradient of the factorized-based regression function is:

Through embedding Eqs. 10 and 11 into Eq. 9, we can obtain the gradient used in the optimization algorithm.

One thing to be noted here is gradient-based algorithms, though simple and efficient, are not guaranteed to find the global optimum solution, since the regularized empirical risk minimization problem is usually highly non-convex empirically. Thus the ordinal inequality constraint might be violated in the estimated parameters. Fortunately, according to Corollary 1, we can find better parameters, which not only satisfies the ordinal inequality constraint but also achieve lower regularized empirical risk, by sorting the learned threshold parameters in ascending order.

4 Empirical Study

In this section, we report the results of the empirical studies on the proposed FMOR using several benchmark datasets.

4.1 Experimental Settings

As the proposed FMOR is essentially a threshold-based method, we select several state-of-the-art threshold-based ordinal regression methods as baselines. We also compare FMOR against the traditional FM.

-

ORBoost: The thresholded ensemble model for ordinal regression proposed by Lin and Li [11]. The two implementations, namely ORBoost-LR (Ordinal Regression Boosting with Left-Right margins) and ORBoost-All (Ordinal Regression Boosting with All margins), are used as the baselines.

-

SVOR: The support vector formulation for ordinal regression proposed by Chu and Keerthi [3]. The two implementations, namely SVOREX (Support Vector Ordinal Regression with EXplicit constraints) and SVORIM (Support Vector Ordinal Regression with IMplicit constraints), are used as the baselines. Both methods are implemented with Gaussian kernel (with kernel width as 1) and linear kernel, respectively.

-

POMNN: The ordinal neural network based on the proportional odds model proposed by Gutiérrez [6].

-

FM: The original factorization machines proposed by Rendle [19]. The FM model is learned with the regression least-squares loss and the predictions are rounded to the nearest ordinal class.

The ORBoost methods, the SVOR methods and the traditional FM are run using the publicly available implementations provided by the authorsFootnote 1. We implemented the POMNN method using TensorFlow. As for the proposed FMOR, we implemented it on basis of LibFM. The hyper-parameters of each method are chosen from a certain range (shown in Table 1) using 5-fold cross-validation within the training set. For other parameters of each method, we use default settings provided by the implementations.

All the methods are evaluated using the following measures:

-

1.

MZE: The Mean Zero–one Error (MZE) is the fraction of incorrect predictions:

$$\begin{aligned} MZE=\frac{1}{N}\sum _{i=1}^{N}\llbracket \hat{y}(\mathbf {x}_i) \ne y_i \rrbracket \end{aligned}$$ -

2.

MAE: The Mean Absolute Error (MAE) is the average absolute deviation of the predictions from the ground-truth:

$$\begin{aligned} MAE=\frac{1}{N}\sum _{i=1}^{N}|\hat{y}(\mathbf {x}_i)-y_i| \end{aligned}$$

4.2 Prediction Accuracy

In this experiment, we compared the proposed FMOR against the baselines on 9 benchmark datasets which are taken from public machine learning data repositoriesFootnote 2. All these datasets are real ordinal datasets with a varying number of samples, features and classes. We preprocess each dataset by normalizing every numeric attributes into [0, 1] and transforming every categorical attributes to binary forms with one-hot encoding (one feature per value). As for the winequality dataset, we generate one more preprocessed dataset by transforming all attributes, including both numeric ones and categorical ones, to binary forms. To be specific, each numeric attribute in the original dataset is discretized into pre-defined bins and then converted into one-hot vectors. This dataset, denoted as winequality0/1 in Table 2, is of high sparsity as each sample is described by a 180-dimension binary feature vector. The characteristics of benchmark datasets are described in Table 2.

Each dataset is randomly split 5 times into training and testing sets with ratio 2:1. The averaged MZE and MAE over 5 runs along with the standard deviations are reported in Tables 3 and 4 (best in bold), respectively. From the results, we can see the proposed FMOR beats all baselines 6 of 10 times in terms of both MZE and MAE. Among the datasets that the proposed FMOR performs best, the most significant improvement is obtained on the winequality0/1 dataset of which the feature space is sparser than others. This indicates that the advantage of the proposed FMOR can be more significant as the level of sparsity gets higher. Actually, in these datasets (i.e., user-knowledge, eucalyptus and turkiye-eval) that FMOR or traditional FM fails to outperform traditional ordinal regression methods, the sparsity issue rarely occurs. Taking the user-knowledge datasetFootnote 3 as an example, the attributes are all numerical ones such as the exam performance or the study time of a student. However, this result does not necessarily mean that the proposed FMOR cannot be applied to the ordinal regression problems with dense feature space. In fact, there is only a small gap between FMOR and the best-performed baselines on these datasets. Also note that the proposed FMOR still achieves the best performance on the dense dataset winequality. Among all the baselines, SVOR methods perform best in most cases. We also notice that POMNN does not perform as well as expected. We argue that more advanced techniques for training deep neural networks need to be employed to learn a better neural networks model for ordinal regression.

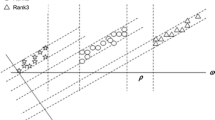

4.3 Training Efficiency

In this experiment, we evaluate the training efficiency of the proposed FMOR by comparing training time with other methods. In this experiment, we only consider SVOR methods for comparison for the consistent outperformance over other baselines. All comparison methods were run on a single core of an Intel(R) Xeon(R) CPU E7-4830 processor clocked at 2.13 GHz with access to 24 GB RAM. Due to space limitation, only the results on the turkiye-eval dataset are reported. Figure 1 plots the training time of every comparison methods with varying sizes of the training dataset. It can be clearly seen that the proposed FMOR scales much better than SVOR methods. On large training sets with thousands of samples, training a FMOR model takes only seconds while training SVOR models can take several hours.

5 Conclusion

In this paper, we put forward Factorization Machines for Ordinal Regression (FMOR), a latent factor model addressing the sparsity issue in ordinal regression problems. Using the factorization machines as the base generic framework for modeling sparse feature space, we incorporate the threshold methodology to handle the ordinal targets in a proper way. We experimentally show that FMOR has the dual advantages of effectiveness and efficiency, and can be applied not only to sparse ordinal data, but competitive results can even be obtained for dense data. Future work includes applying FMOR to model ordinal user preference scores in recommender systems.

References

Alp, A.: Structural shifts in credit rating standards. J. Financ. 68(6), 2435–2470 (2013)

Beckham, C., Pal, C.: Unimodal probability distributions for deep ordinal classification, pp. 411–419 (2017)

Chu, W., Keerthi, S.S.: New approaches to support vector ordinal regression. In: Proceedings of the 22nd International Conference on Machine Learning, pp. 145–152 (2005)

Goh, C.K., Liu, Y., Kong, A.W.: A constrained deep neural network for ordinal regression. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 831–839 (2018)

Gutierrez, P.A., Perez-Ortiz, M., Sanchez-Monedero, J., Fernandez-Navarro, F., Hervas-Martinez, C.: Ordinal regression methods: survey and experimental study. IEEE Trans. Knowl. Data Eng. 28(1), 127–146 (2016)

Gutiérrez, P.A., Tiňo, P., Hervás-Martínez, C.: Ordinal regression neural networks based on concentric hyperspheres. Neural Netw. 59, 51–60 (2014)

Huang, X., Zhang, L., Wang, B., Zhang, Z., Li, F.: Feature weight estimation based on dynamic representation and neighbor sparse reconstruction. Pattern Recogn. 81, 388–403 (2018)

Kingma, D.P., Ba, J.: Adam: a method for stochastic optimization. arXiv preprint arXiv:1412.6980 (2014)

Koren, Y., Sill, J.: Collaborative filtering on ordinal user feedback. In: Proceedings of the 23th International Joint Conference on Artificial Intelligence (2013)

Li, L., Lin, H.T.: Ordinal regression by extended binary classification. In: Advances in Neural Information Processing Systems, pp. 865–872 (2007)

Lin, H.T., Li, L.: Large-margin thresholded ensembles for ordinal regression: theory and practice. In: Proceedings of the International Conference on Algorithmic Learning Theory, pp. 319–333 (2006)

Lin, H.T., Li, L.: Reduction from cost-sensitive ordinal ranking to weighted binary classification. Neural Comput. 24(5), 1329–1367 (2012)

Liu, X., Zou, Y., Song, Y., Yang, C., You, J., Kumar, B.V.K.V.: Ordinal regression with neuron stick-breaking for medical diagnosis. In: Leal-Taixé, L., Roth, S. (eds.) ECCV 2018. LNCS, vol. 11134, pp. 335–344. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-11024-6_23

Liu, Y., Kong, A.W.K., Goh, C.K.: Deep ordinal regression based on data relationship for small datasets. In: Proceedings of the 26th International Joint Conferences on Artificial Intelligence, pp. 2372–2378 (2017)

McCullagh, P.: Regression models for ordinal data. J. Roy. Stat. Soc.: Ser. B (Methodol.) 42(2), 109–127 (1980)

Ni, W., Liu, T., Zeng, Q., Zhang, X., Duan, H., Xie, N.: Robust factorization machines for credit default prediction. In: Geng, X., Kang, B.-H. (eds.) PRICAI 2018. LNCS (LNAI), vol. 11012, pp. 941–953. Springer, Cham (2018). https://doi.org/10.1007/978-3-319-97304-3_72

Pan, Z., Chen, E., Liu, Q., Xu, T., Ma, H., Lin, H.: Sparse factorization machines for click-through rate prediction. In: Proceedings of the IEEE 16th International Conference on Data Mining, pp. 400–409 (2016)

Qiang, R., Liang, F., Yang, J.: Exploiting ranking factorization machines for microblog retrieval. In: Proceedings of the 22nd ACM International Conference on Conference on Information & Knowledge Management, pp. 1783–1788 (2013)

Rendle, S.: Factorization machines with libFM. ACM Trans. Intell. Syst. Technol. 3(3), 57 (2012)

Shashua, A., Levin, A.: Ranking with large margin principle: two approaches. In: Advances in Neural Information Processing Systems, pp. 961–968 (2003)

Sun, B.Y., Li, J., Wu, D.D., Zhang, X.M., Li, W.B.: Kernel discriminant learning for ordinal regression. IEEE Trans. Knowl. Data Eng. 22(6), 906–910 (2010)

Tian, Q., Zhang, W., Wang, L., Chen, S., Yin, H.: Robust ordinal regression induced by lp-centroid. Neurocomputing 313, 184–195 (2018)

Tran, T., Phung, D., Luo, W., Venkatesh, S.: Stabilized sparse ordinal regression for medical risk stratification. Knowl. Inf. Syst. 43(3), 555–582 (2015)

Wang, H., Shi, Y., Niu, L., Tian, Y.: Nonparallel support vector ordinal regression. IEEE Trans. Cybern. 47(10), 3306–3317 (2017)

Zhu, M., Aggarwal, C.C., Ma, S., Zhang, H., Huai, J.: Outlier detection in sparse data with factorization machines. In: Proceedings of the 2017 ACM Conference on Information and Knowledge Management, pp. 817–826 (2017)

Acknowledgement

This work is partially supported by Natural Science Foundation of China (61602278, 71704096 and 31671588), Sci. & Tech. Development Fund of Shandong Province of China (ZR2017MF027), the Humanities and Social Science Research Project of the Ministry of Education (18YJAZH017), the Taishan Scholar Climbing Program of Shandong Province, and SDUST Research Fund (2015TDJH102).

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Ni, W., Liu, T., Zeng, Q. (2019). Sparse Ordinal Regression via Factorization Machines. In: Nayak, A., Sharma, A. (eds) PRICAI 2019: Trends in Artificial Intelligence. PRICAI 2019. Lecture Notes in Computer Science(), vol 11671. Springer, Cham. https://doi.org/10.1007/978-3-030-29911-8_13

Download citation

DOI: https://doi.org/10.1007/978-3-030-29911-8_13

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-29910-1

Online ISBN: 978-3-030-29911-8

eBook Packages: Computer ScienceComputer Science (R0)