Abstract

In this paper, we present an algorithm for drowsiness detection in drivers of several types of vehicles based on eye shape. We use a HOG-based linear SVM to locate the face in real-time video, and feature point detection on the face region to delimit the ocular area. The feature point detector uses the 68-point facial landmark model, but we restrict the landmarks to the ocular area. We calculate the eye aspect ratio (EAR) in order to detect driver eye closure, i.e., to detect drowsiness. Results show high sensitivity of the algorithm in tests performed in a vehicle with a webcam and a warning alarm; nevertheless, its performance is affected by illumination.

The affiliations of the Universitat Politècnica de Catalunya and Escuela Politécnica Nacional are exclusively of the corresponding author Dr. Wilbert G. Aguilar. The payment of the paper registration was funded exclusively by Universidad de las Fuerzas Armadas ESPE.

1 Introduction

Drivers and operators of many kinds of transportation vehicles, including aerial vehicles [1, 2], must be professionally prepared to react quickly and efficiently on the road. Completing a long trip can take a large amount of time, during which the driver is expected to remain attentive at all times. However, after a long period of driving, fatigue symptoms develop quickly until the driver succumbs to drowsiness and falls asleep, which can cause serious accidents. In fact, one of the major factors in traffic accident causation is the effect of fatigue on drivers, but the contribution of fatigue to accidents is often underestimated in official reporting [3].

Statistics show that in a 24-h period about 38% of truck drivers exceed 14 h of driving, and 51% exceed 14 h of driving plus other non-driving work [4]. On one of several working days, many of these drivers get less than 4 h of sleep, which creates favorable conditions for the appearance of fatigue symptoms. Driving in a state of fatigue causes the driver to lose attention and the ability to respond quickly and adequately to an unexpected or dangerous situation [5]; it also leads to lack of concentration, poorer decision-making and worsened mood [6].

In the field of professional driver fatigue [7], various studies have been carried out on truck drivers [4, 8, 9], aircraft pilots [10, 11], car drivers [8, 10], taxi drivers [6] and bus drivers [9]. To recognize fatigue and somnolence, neurophysiological measurements have been used, such as electroencephalography (EEG), electrooculography (EOG) and heart rate (HR) [10]. Psychological measures have also been used, such as mood [6], accumulated sleep [4, 6, 8] and stress [7].

In the field of software and signal analysis, systems capable of detecting driver fatigue have been developed through heart rate monitoring and grip pressure on the steering wheel [12]; other systems include monitoring based on electroencephalography (EEG) and electrooculography (EOG) in driving simulators [13]. On the other hand, in the field of computer vision [14,15,16,17], specifically for perception [18,19,20,21], recognition and monitoring of the eyes [22, 23], pupils and mouth [24, 25] have been applied to videos of drivers [26], alongside road detection [27], path planning [28] and object detection [29], including in real time [11, 30, 31].

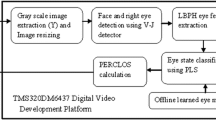

Our proposal for detecting fatigue in drivers is to detect and monitor the shape of the eye. To do this, we first detect the person's face [32] in the video from an HD camera placed directly in front of the driver. This is done using a HOG [33, 34] linear SVM [35] detector, which locates the face as it moves and marks it in a visible area on the PC screen. Then we build the facial landmarks using a trained algorithm based on the 68-point facial landmark detector. Our intention is to delimit the eye area and build the eye landmarks, from which we calculate the eye aspect ratio (EAR). In this way we can determine whether the eyes are open or closed, the second state being the characteristic used to detect drowsiness in drivers.

2 Related Works

Two important algorithms for face detection, and object detection in general, have been developed: Haar-like features [36] and HOG [37] by Dalal and Triggs. Both algorithms have been used in many applications and have generated more than 40 new approaches [38]. Several face detection methods combine feature extraction algorithms such as Haar [36], HOG [37] and HOG-LBP [39] with machine learning based on SVMs [39, 40] or AdaBoost [41]. Other methods, somewhat more robust and accurate, use deep learning [42, 43].

On the other hand, for the detection of key points of interest [44,45,46] and the construction of landmarks along the shape of the face, a very important algorithm has been developed: the facial landmark detector [47] by Kazemi and Sullivan. Other shape detection methods include spatial fuzzy c-means clustering (s-FCM) [26], edge recognition [23] and LDA [24]. More robust methods have also been developed based on deep learning [48] and constrained local neural fields [49].

For detection of faces and people, different datasets have been created, such as ETH [50], focused on detection of locomotion patterns, and MIT-CBCL [51], based on edge detection. For facial landmarks there are three main pre-trained detectors, based on different datasets created by several research groups. The most common is the shape predictor with 68 key points trained on the 300-W dataset [52,53,54]. There is also the shape predictor with 194 key points trained on the HELEN dataset [55] and, most recently, one with 5 key points trained on FRGC [52, 54].

The combination of HOG linear SVM face detection and the facial landmark detector has been widely used for facial recognition [56], 3D facial scans [57] and pose estimation [58]. Other authors have restricted detection to certain areas of the face, for example the ocular zone, for eye blink detection [59], face alignment [60] and drowsiness detection in car drivers [61].

3 Our Approach

3.1 Face Detection

Our approach uses HOG [37] for face detection in real time. We chose this method because, although it is slower, it provides greater accuracy with fewer false positives than Haar cascades. We combined it with machine learning based on an SVM, which we train to detect the moving face and classify it with high accuracy.

For training we used the CBCL [51] face database, from which we obtained the positive samples (P, what we want to detect) and the negative samples (N, what we do not want to detect). From both sets we extracted the HOG descriptors. Then, we trained an SVM on the positive and negative samples. Finally, we applied hard-negative mining: we recorded the false positives, sorted them according to their classification probability, and re-trained the classifier using them as negative samples (Fig. 1).
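The hard-negative mining step can be sketched as follows. This is a minimal illustration, not the authors' code: `centroid_trainer` is a hypothetical toy classifier standing in for the HOG descriptor plus linear SVM, and the names `hard_negative_mining`, `face_free_windows` and `min_prob` are assumptions introduced here.

```python
import math
from typing import Callable, List, Sequence, Tuple

Feature = Sequence[float]
Scorer = Callable[[Feature], float]  # returns a face probability in [0, 1]
Trainer = Callable[[List[Feature], List[Feature]], Scorer]


def centroid_trainer(positives: List[Feature], negatives: List[Feature]) -> Scorer:
    """Toy stand-in for 'HOG + linear SVM' (assumption, not the paper's model)."""
    def centroid(samples: List[Feature]) -> List[float]:
        return [sum(col) / len(samples) for col in zip(*samples)]

    pos_c, neg_c = centroid(positives), centroid(negatives)

    def score(feature: Feature) -> float:
        d_pos, d_neg = math.dist(feature, pos_c), math.dist(feature, neg_c)
        total = d_pos + d_neg
        # Closer to the positive centroid means a higher pseudo-probability.
        return 0.5 if total == 0 else d_neg / total

    return score


def hard_negative_mining(
    train: Trainer,
    positives: Sequence[Feature],
    negatives: Sequence[Feature],
    face_free_windows: Sequence[Feature],
    min_prob: float = 0.5,
) -> Tuple[Scorer, List[Feature]]:
    """One round: collect false positives, sort by probability, re-train."""
    negatives = list(negatives)
    scorer = train(list(positives), negatives)
    scored = [(scorer(f), f) for f in face_free_windows]
    # Any window from a face-free image scored as a face is a false positive.
    false_positives = [(p, f) for p, f in scored if p >= min_prob]
    false_positives.sort(key=lambda item: item[0], reverse=True)
    negatives.extend(f for _, f in false_positives)
    if false_positives:
        scorer = train(list(positives), negatives)
    return scorer, negatives
```

In practice the features would be HOG descriptors of image windows and the trainer a linear SVM; the loop structure is the part this sketch is meant to show.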

A very important step is obtaining the face bounding box, i.e., the (x, y)-coordinates of the face in the image, since the facial landmarks are computed from it. We applied the sliding window technique to all test images, extracting the HOG descriptors and applying the classifier at each window position. The process iterates until a sufficiently large probability is detected, and then the bounding box is recorded.
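A minimal sketch of the sliding window search described above is given below. The window size, step and probability cutoff are illustrative assumptions, and `score_window` is a hypothetical callable standing in for "extract the HOG descriptor of this window and apply the classifier". Scanning at multiple scales (an image pyramid) is omitted for brevity.

```python
from typing import Callable, Iterator, Optional, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height)


def sliding_windows(img_w: int, img_h: int, win_w: int, win_h: int,
                    step: int) -> Iterator[Box]:
    """Yield every window position that fits inside the image, row by row."""
    for y in range(0, img_h - win_h + 1, step):
        for x in range(0, img_w - win_w + 1, step):
            yield (x, y, win_w, win_h)


def first_confident_box(img_w: int, img_h: int,
                        score_window: Callable[[Box], float],
                        win: Tuple[int, int] = (64, 64),
                        step: int = 8,
                        min_prob: float = 0.9) -> Optional[Box]:
    """Return the first window whose face probability reaches min_prob."""
    for box in sliding_windows(img_w, img_h, win[0], win[1], step):
        if score_window(box) >= min_prob:
            return box  # this is the recorded face bounding box
    return None  # no window was confident enough
```

Returning the first confident window mirrors the stopping rule in the text; a production detector would more commonly score all windows and merge overlapping detections.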

3.2 Facial Landmarks Detection

Facial landmarks are used to localize and represent salient regions of the face, such as eyes, eyebrows, nose, mouth and jawline. In this context, our goal was to detect important facial structures using shape prediction methods.

The facial landmark detector is an implementation of the method of Kazemi and Sullivan [47]. This method starts from a training set of labeled facial landmarks on images and priors on the probability of the distance between pairs of input pixels. We used the original pre-trained facial landmark detector with 68 (x, y)-coordinates. The indexes of the 68 coordinates are shown in Fig. 2.

These annotations are part of the iBUG 300-W dataset [52,53,54], on which the facial landmark predictor was trained.

Eye Aspect Ratio (EAR).

To detect drowsiness, we analyzed the driver's eyes using a metric called the eye aspect ratio (EAR), introduced by Soukupová and Čech [59]. This method is fast, efficient, and easy to implement.

We extracted the ocular structure from the facial landmarks. Each eye is represented by 6 (x, y)-coordinates, starting at the left-hand corner of the eye and then working clockwise around the remainder of the region (Fig. 3: Top-left).

Fig. 3. Top-left: A visualization of eye landmarks when the eye is open. Top-right: Eye landmarks when the eye is closed. Bottom: The eye aspect ratio EAR in Eq. (1) plotted for several frames of a video sequence.
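As an illustration of how the six points per eye can be taken from the 68 landmarks, the sketch below slices a landmark list by index. The index ranges are an assumption based on the 0-based ordering commonly used by implementations of the 68-point iBUG annotation (36 to 41 for the subject's right eye, 42 to 47 for the left eye); with 1-based numbering these are points 37 to 48. The function name `extract_eyes` is hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]

# 0-based [start, end) ranges in the 68-point scheme (assumed ordering).
RIGHT_EYE = (36, 42)  # subject's right eye, appears on the left of the image
LEFT_EYE = (42, 48)   # subject's left eye


def extract_eyes(landmarks: Sequence[Point]) -> Tuple[List[Point], List[Point]]:
    """Return (right_eye, left_eye), each a list of six (x, y) points."""
    if len(landmarks) != 68:
        raise ValueError("expected 68 facial landmarks")
    right = list(landmarks[RIGHT_EYE[0]:RIGHT_EYE[1]])
    left = list(landmarks[LEFT_EYE[0]:LEFT_EYE[1]])
    return right, left
```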

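The EAR relates the height of the eye to its width, as measured from these coordinates, and is calculated by the following equation:

$$\mathrm{EAR} = \frac{\lVert p_2 - p_6 \rVert + \lVert p_3 - p_5 \rVert}{2\,\lVert p_1 - p_4 \rVert} \qquad (1)$$

where p1, …, p6 are the 2D facial landmark locations.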
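A minimal Python sketch of the EAR computation follows, assuming the definition of Soukupová and Čech [59]: the sum of the two vertical landmark distances divided by twice the horizontal distance. The function name and the error handling are choices made here for illustration.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """EAR = (|p2 - p6| + |p3 - p5|) / (2 * |p1 - p4|) for six eye landmarks."""
    if len(eye) != 6:
        raise ValueError("expected six (x, y) eye landmarks")
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)  # two eyelid openings
    horizontal = math.dist(p1, p4)                    # corner-to-corner width
    if horizontal == 0:
        raise ValueError("degenerate eye: both corners coincide")
    return vertical / (2.0 * horizontal)
```

For example, an open eye with corners at (0, 0) and (3, 0) and lids one unit above and below the axis gives an EAR of 4/6, while collapsing the lid points onto the axis gives 0.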

The EAR is approximately constant while the eye is open, but it rapidly falls toward zero when the eye closes. From this information we can tell whether the driver has kept his eyes closed for a considerable time, a clear sign of drowsiness (Fig. 3, bottom).

We applied a threshold to decide whether an eye is considered closed or open. The threshold is a value between 65% and 70%.

The calibration was based on both eyes: the threshold values found individually for each eye are averaged. This is because the program has problems detecting the blinking of a single eye.

Finally, we implemented a timer to avoid triggering events on involuntary blinks. Unless the eye stays closed for a certain time, no action is triggered.

3.3 Drowsiness Detection Algorithm

First, we detected the driver's face through the HOG linear SVM detector and obtained the face bounding box. Then we detected the facial landmarks using the original pre-trained detector with 68 (x, y)-coordinates. We delimited the ocular zone of the resulting facial structure, i.e., we obtained the eye landmarks. Finally, we calculated the EAR and performed the respective calibration for each frame of the video.

To detect drowsiness, we set a threshold of 0.3 for the EAR value. If the metric stays at or above 0.3, the driver is considered awake. If the metric is smaller and stays that way for more than one second (approximately 40 consecutive video frames), the driver is showing symptoms of drowsiness and is falling asleep at the wheel. In this second case, an alarm is triggered to alert the driver.

This is presented graphically in Fig. 4.
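The decision logic can be sketched as a small per-frame state machine. The threshold of 0.3 and the limit of 40 frames come from the text; the class name `DrowsinessMonitor`, averaging the EAR of both eyes, and raising the alarm once the counter reaches the limit are assumptions made for this illustration.

```python
from dataclasses import dataclass


@dataclass
class DrowsinessMonitor:
    ear_threshold: float = 0.3   # below this the eyes count as closed
    max_closed_frames: int = 40  # roughly one second of video in the paper
    closed_frames: int = 0       # consecutive closed-eye frames seen so far

    def update(self, left_ear: float, right_ear: float) -> bool:
        """Process one frame; return True when the alarm should sound."""
        ear = (left_ear + right_ear) / 2.0  # combine both eyes (assumption)
        if ear < self.ear_threshold:
            self.closed_frames += 1
        else:
            # Eyes reopened: an ordinary blink resets the timer.
            self.closed_frames = 0
        return self.closed_frames >= self.max_closed_frames
```

A short blink of a few frames never reaches the limit, so it does not trigger the alarm, which is the purpose of the timer described in Sect. 3.2.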

4 Results and Discussion

To test our algorithm we ran several tests in a car. We placed a high-resolution webcam on the upper part of the dashboard, connected to a standard computer, and made sure the camera was clearly focused on the driver's face (Fig. 5).

We used a PC because it is the most convenient device for running tests and evaluating the performance of our algorithm. For a real application, a microcontroller that is compatible with the algorithm and can execute it without difficulty will be used.

Once the test devices were ready, we ran the algorithm and drove normally. The eye landmarks were detected and tracked normally, and the EAR was calculated continuously in real time (Fig. 6, left). When the person pretended to fall asleep, the EAR dropped below 0.3 for a little more than a second, after which the warning alarm was activated, waking and alerting the person (Fig. 6, right).

The parameters considered for the evaluation of a good performance of the algorithm were the EAR and the number of false positives.

In Fig. 3 it can be clearly seen that the EAR under normal conditions is equal to or greater than 0.3, which is within the usual range. On the other hand, the EAR drops below 0.3 when the driver narrows his eyes; in this case the value of the ratio was 0.16. This also depends on the geometry of the eye.

Ten drivers participated in the experiment. Five tests were performed for each driver. The numbers of true positives and false negatives were counted in 3 situations. The data are shown in Table 1.

When the driver looks down, the algorithm recognizes that as a sign of drowsiness. Something similar happens under very abrupt changes in brightness, such as when the driver is backlit.

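From the data in Table 1 we can calculate the sensitivity of the system, i.e., the fraction of actual drowsiness events that were detected, from the true positives (TP) and false negatives (FN):

$$\text{Sensitivity} = \frac{TP}{TP + FN}$$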
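For concreteness, the standard sensitivity computation can be sketched as below. The counts in the usage note are hypothetical and chosen only to illustrate the arithmetic; they are not the values of Table 1.

```python
def sensitivity(true_positives: int, false_negatives: int) -> float:
    """Sensitivity (recall) = TP / (TP + FN)."""
    total = true_positives + false_negatives
    if total == 0:
        raise ValueError("no positive cases: sensitivity is undefined")
    return true_positives / total
```

With hypothetical counts of 45 detected and 5 missed drowsiness events out of 50 trials, `sensitivity(45, 5)` evaluates to 0.9, i.e., 90%.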

The resulting values are given in Table 2.

This is an acceptable sensitivity, although it could be increased by filtering and by an algorithm with a more sophisticated structure.

The best situation for our algorithm is a cloudy day, for which it reaches a sensitivity of 98%. The ocular zone was recognized effectively, with the landmarks visible on the contours of the detected person's eyes. The method was stable and robust: detection ran without errors for a long period of time and was not sensitive to sudden movements. It even detected the shape of the eyes through glasses. The number of frames allowed before the alarm was activated was adequate: not so short that a blink is detected as a sign of drowsiness, nor so long that the driver sleeps long enough to cause an accident. The same can be said for the eye aspect ratio (EAR): the value 0.3 was the most appropriate threshold between wakefulness and drowsiness.

5 Conclusions and Future Works

Our system uses a predictor file and a detection algorithm to detect facial landmarks, focusing on the eyes, which are the main area showing symptoms of fatigue in a driver. By means of a timer we set the time after which a reduced EAR is taken to mean that the driver has fallen asleep, and an alarm is emitted. At first, we used the Haar algorithm, but because it produced many false positives and was not robust, we decided to rely on landmarks, from which a mathematical relationship yields an EAR that decides whether the driver is awake or asleep. As future work, the algorithm should be improved to be robust to poor illumination and to the inaccuracy that occurs when the driver turns the head or extends and contracts the neck.

The detection of the eye contour by the landmark detection method was effective in terms of robustness and precision. This was evidenced by the continuous recognition of the eyes of the detected person, even with changes in luminosity and sudden movements, and even when the person was in profile. A good feature of the algorithm is that it performs continuous recognition and detection for long periods of time. This is due to the while loop programmed for this purpose, which only breaks when the program is closed.

A very suitable application is the detection of drowsy drivers, since it would make it possible to prevent accidents caused by drivers who fall asleep while driving. This represents a high social impact, since it would prevent many accidents and save lives. The final objective is to apply the algorithm in a real situation. To that end, we intend to install the system in, or simulate, a situation where the driver starts to feel fatigued and, through the algorithm, the car starts to stop.

References

Orbea, D., Moposita, J., Aguilar, W.G., Paredes, M., León, G., Jara-Olmedo, A.: Math model of UAV multi rotor prototype with fixed wing aerodynamic structure for a flight simulator. In: De Paolis, L.T., Bourdot, P., Mongelli, A. (eds.) AVR 2017. LNCS, vol. 10324, pp. 199–211. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-60922-5_15

Orbea, D., Moposita, J., Aguilar, W.G., Paredes, M., Reyes, R.P., Montoya, L.: Vertical take off and landing with fixed rotor. In: Chilean Conference on Electrical, Electronics Engineering, Information and Communication Technologies (CHILECON), Pucón, Chile (2017)

Brown, I.D.: Driver fatigue. Hum. Factors 36(2), 298–314 (1994)

Arnold, P.K., Hartley, L.R., Corry, A., Hochstadt, D., Penna, F., Feyer, A.M.: Hours of work, and perceptions of fatigue among truck drivers. Accid. Anal. Prev. 29(4), 471–477 (1997)

Wang, T., Shi, P.: Yawning detection for determining driver drowsiness. In: IEEE 1768 (2015)

Dalziel, J.R., Job, R.F.S.: Motor vehicles accidents, fatigue and optimism bias in taxi drivers. Accid. Anal. Prev. 29(4), 489–494 (1997)

Taylor, A.H., Dorn, L.: Stress, fatigue, health, and risk of road traffic accidents among professional drivers: the contribution of physical inactivity. Annu. Rev. Public Health 27, 371–391 (2006)

Summala, H., Mikkola, T.: Fatal accidents among car and truck drivers: effects of fatigue, age, and alcohol consumption. Hum. Factors 36(2), 315–326 (1994)

Milosevic, S.: Driver’s fatigue studies. Ergonomics 40(3), 381–389 (1997)

Borghini, G., Astolfi, L., Vecchiato, G., Mattia, D., Babiloni, F.: Measuring neurophysiological signals in aircraft pilots and car drivers for the assessment of mental workload, fatigue and drowsiness. Neurosci. Biobehav. Rev. 44, 58–75 (2014)

McKinley, R.A., McIntire, L.K., Schmidt, R., Repperger, D.W., Cadwell, J.A.: Evaluation of eye metrics as a detector of fatigue. Hum. Factors 53(4), 403–414 (2011)

Rogado, E., García, J.L., Barea, R., Bergasa, L.M., López, E.: Driver fatigue detection system. In: IEEE International Conference on Robotics and Biomimetics (2008)

Bouchner, P., Pieknic, R., Novotný, S., Pekný, J., Hajný, M., Borzová, C.: Fatigue of car drivers – detection and classification based on the experiments on car simulators. In: 6th WSEAS International Conference on Simulation, Modelling and Optimization (2006)

Aguilar, W.G., Angulo, C.: Real-time model-based video stabilization for microaerial vehicles. Neural Process. Lett. 43(2), 459–477 (2016)

Aguilar, W.G., et al.: Real-time detection and simulation of abnormal crowd behavior. In: De Paolis, L.T., Bourdot, P., Mongelli, A. (eds.) AVR 2017. LNCS, vol. 10325, pp. 420–428. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-60928-7_36

Aguilar, W.G., Angulo, C.: Real-time video stabilization without phantom movements for micro aerial vehicles. EURASIP Journal on Image and Video Processing 1, 1–13 (2014)

Aguilar, W.G., et al.: Statistical abnormal crowd behavior detection and simulation for real-time applications. In: Huang, Y., Wu, H., Liu, H., Yin, Z. (eds.) ICIRA 2017. LNCS (LNAI), vol. 10463, pp. 671–682. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-65292-4_58

Basantes, J., et al.: Capture and processing of geospatial data with laser scanner system for 3D modeling and virtual reality of Amazonian Caves. In: IEEE Ecuador Technical Chapters Meeting (ETCM), Samborondón, Ecuador (2018)

Aguilar, W.G., Rodríguez, G.A., Álvarez, L., Sandoval, S., Quisaguano, F., Limaico, A.: On-Board visual SLAM on a UGV using a RGB-D camera. In: Huang, Y., Wu, H., Liu, H., Yin, Z. (eds.) ICIRA 2017. LNCS (LNAI), vol. 10464, pp. 298–308. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-65298-6_28

Aguilar, W.G., Rodríguez, G.A., Álvarez, L., Sandoval, S., Quisaguano, F., Limaico, A.: Real-time 3D modeling with a RGB-D camera and on-board processing. In: De Paolis, L.T., Bourdot, P., Mongelli, A. (eds.) AVR 2017. LNCS, vol. 10325, pp. 410–419. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-60928-7_35

Aguilar, W.G., Rodríguez, G.A., Álvarez, L., Sandoval, S., Quisaguano, F., Limaico, A.: Visual SLAM with a RGB-D camera on a Quadrotor UAV using on-board processing. In: Rojas, I., Joya, G., Catala, A. (eds.) IWANN 2017. LNCS, vol. 10306, pp. 596–606. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-59147-6_51

Aguilar, W.G., Estrella, J.I., López, W., Abad, V.: Driver fatigue detection based on real-time eye gaze pattern analysis. In: Huang, Y., Wu, H., Liu, H., Yin, Z. (eds.) ICIRA 2017. LNCS (LNAI), vol. 10463, pp. 683–694. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-65292-4_59

Devi, M.S., Bajaj, P.R.: Driver fatigue detection based on eye tracking. In: IEEE First International Conference on Emerging Trends in Engineering and Technology (2008)

Fan, X., Yin, B.-C., Sun, Y.-F.: Yawning detection for monitoring driver fatigue. In: IEEE International Conference on Machine Learning and Cybernetics (2007)

Saradadevi, M., Bajaj, P.: Driver fatigue detection using mouth and yawning analysis. Int. J. Comput. Sci. Network Secur. 8(6), 183–188 (2008)

Azim, T., Jaffar, M.A., Mirza, A.M.: Fully automated real time fatigue detection of drivers through fuzzy expert systems. Appl. Soft Comput. 18, 25–38 (2014)

Galarza, J., Pérez, E., Serrano, E., Tapia, A., Aguilar, W.G.: Pose estimation based on monocular visual odometry and lane detection for intelligent vehicles. In: De Paolis, L.T., Bourdot, P. (eds.) AVR 2018. LNCS, vol. 10851, pp. 562–566. Springer, Cham (2018). https://doi.org/10.1007/978-3-319-95282-6_40

Aguilar, W.G., Morales, S.: 3D environment mapping using the Kinect V2 and path planning based on RRT algorithms. Electronics 5(4), 70 (2016)

Aguilar, W.G., Casaliglla, V.P., Pólit, J.L.: Obstacle avoidance based-visual navigation for micro aerial vehicles. Electronics 6(1), 10 (2017)

Dong, W., Wu, X.: Fatigue detection based on the distance of eyelid. In: IEEE International Workshop on VLSI Design and Video Technology (2005)

Horng, W.-B., Chen, C.-Y., Chang, Y., Fan, C.-H.: Driver fatigue detection based on eye tracking and dynamic template matching. In: IEEE International Conference on Net-working, Sensing and Control (2004)

Andrea, C.C., Byron, J.Q., Jorge, P.I., Inti, T.C.H., Aguilar, W.G.: Geolocation and counting of people with aerial thermal imaging for rescue purposes. In: De Paolis, L.T., Bourdot, P. (eds.) AVR 2018. LNCS, vol. 10850, pp. 171–182. Springer, Cham (2018). https://doi.org/10.1007/978-3-319-95270-3_12

Aguilar, W.G., et al.: Pedestrian detection for UAVs using cascade classifiers and saliency maps. In: Rojas, I., Joya, G., Catala, A. (eds.) IWANN 2017. LNCS, vol. 10306, pp. 563–574. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-59147-6_48

Aguilar, W.G., Luna, M., Moya, J., Abad, V., Parra, H., Ruiz, H.: Pedestrian detection for UAVs using cascade classifiers with meanshift. In: IEEE 11th International Conference on Semantic Computing (ICSC), San Diego (2017)

Aguilar, W.G., Cobeña, B., Rodriguez, G., Salcedo, V.S., Collaguazo, B.: SVM and RGB-D sensor based gesture recognition for UAV control. In: De Paolis, L.T., Bourdot, P. (eds.) AVR 2018. LNCS, vol. 10851, pp. 713–719. Springer, Cham (2018). https://doi.org/10.1007/978-3-319-95282-6_50

Viola, P., Jones, M.: Rapid object detection using a boosted cascade of simple features. In: Conference on Computer Vision and Pattern Recognition, pp. 1–9 (2001)

Dalal, N., Triggs, B.: Histograms of oriented gradients for human detection. In: 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2005), vol. 1, pp. 886–893 (2005)

Benenson, R., Omran, M., Hosang, J., Schiele, B.: Ten years of pedestrian detection, what have we learned? In: Agapito, L., Bronstein, Michael M., Rother, C. (eds.) ECCV 2014. LNCS, vol. 8926, pp. 613–627. Springer, Cham (2015). https://doi.org/10.1007/978-3-319-16181-5_47

Wang, X., Han, T.X., Yan, S.: An HOG-LBP human detector with partial occlusion handling. In: IEEE 12th International Conference on Computer Vision (2009)

Osuna, E., Freund, R., Girosi, F.: Training support vector machines: an application to face detection. In: IEEE Computer Society Conference on Computer Vision and Pattern Recognition (1997)

Viola, P., Jones, M.J.: Robust real-time face detection. Int. J. Comput. Vision 57(2), 137–154 (2004)

Rosebrock, A.: Face detection with OpenCV and deep learning. pyimagesearch (2018). https://www.pyimagesearch.com/2018/02/26/face-detection-with-opencv-and-deep-learning/. Accessed 01 2019

Aguilar, W.G., Quisaguano, F.J., Rodríguez, G.A., Alvarez, L.G., Limaico, A., Sandoval, D.S.: Convolutional neuronal networks based monocular object detection and depth perception for micro UAVs. In: Peng, Y., Yu, K., Lu, J., Jiang, X. (eds.) IScIDE 2018. LNCS, vol. 11266, pp. 401–410. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-02698-1_35

Amaguaña, F., Collaguazo, B., Tituaña, J., Aguilar, W.G.: Simulation system based on augmented reality for optimization of training tactics on military operations. In: De Paolis, L.T., Bourdot, P. (eds.) AVR 2018. LNCS, vol. 10850, pp. 394–403. Springer, Cham (2018). https://doi.org/10.1007/978-3-319-95270-3_33

Aguilar, W.G., Salcedo, V.S., Sandoval, D.S., Cobeña, B.: Developing of a video-based model for UAV autonomous navigation. In: Barone, D.A.C., Teles, E.O., Brackmann, C.P. (eds.) LAWCN 2017. CCIS, vol. 720, pp. 94–105. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-71011-2_8

Salcedo, V.S., Aguilar, W.G., Cobeña, B., Pardo, J.A., Proaño, Z.: On-board target virtualization using image features for UAV autonomous tracking. In: Boudriga, N., Alouini, M.-S., Rekhis, S., Sabir, E., Pollin, S. (eds.) UNet 2018. LNCS, vol. 11277, pp. 384–391. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-02849-7_34

Kazemi, V., Sullivan, J.: One millisecond face alignment with an ensemble of regression trees. In: IEEE Conference on Computer Vision and Pattern Recognition (2014)

Zhang, Z., Luo, P., Loy, C.C., Tang, X.: Facial landmark detection by deep multi-task learning. In: Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T. (eds.) ECCV 2014. LNCS, vol. 8694, pp. 94–108. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-10599-4_7

Baltrusaitis, T., Robinson, P., Morency, L.P.: Constrained local neural fields for robust facial landmark detection in the wild. In: IEEE International Conference on Computer Vision, pp. 354–361 (2013)

Ess, A., Leibe, B., Schindler, K., Gool, L.V.: Robust multiperson tracking from a mobile platform. IEEE Trans. Pattern Anal. Mach. Intell. 31(10), 1831–1846 (2009)

MIT: Face Data: CBCL face database No. 1. MIT (2000)

Sagonas, C., Antonakos, E., Tzimiropoulos, G., Zafeiriou, S., Pantic, M.: 300 faces In-the-wild challenge: database and results. In: Image and Vision Computing (IMAVIS), Special Issue on Facial Landmark Localisation “In-The-Wild” (2016)

Sagonas, C., Tzimiropoulos, G., Zafeiriou, S., Pantic, M.: 300 faces in-the-wild challenge: the first facial landmark localization Challenge. In: IEEE International Conference on Computer Vision (ICCV-W), 300 Faces in-the-Wild Challenge (300-W) (2013)

Sagonas, C., Tzimiropoulos, G., Zafeiriou, S., Pantic, M.: A semi-automatic methodology for facial landmark annotation. In: IEEE International Conference on Computer Vision and Pattern Recognition (CVPR-W), 5th Workshop on Analysis and Modeling of Faces and Gestures (AMFG 2013) (2013)

Le, V., Brandt, J., Lin, Z., Bourdev, L., Huang, Thomas S.: Interactive facial feature localization. In: Fitzgibbon, A., Lazebnik, S., Perona, P., Sato, Y., Schmid, C. (eds.) ECCV 2012. LNCS, vol. 7574, pp. 679–692. Springer, Heidelberg (2012). https://doi.org/10.1007/978-3-642-33712-3_49

Segundo, M.P., Silva, L., Pereira, O.R., Queirolo, C.C.: Automatic face segmentation and facial landmark detection in range images. IEEE Trans. Syst. Man, Cybernet. Part B (Cybernetics) 40(5), 1319–1330 (2010)

Perakis, P., Passalis, G., Theoharis, T., Kakadiaris, I.A.: 3D facial landmark detection under large yaw and expression variations. IEEE Trans. Pattern Anal. Mach. Intell. 35(7), 1552–1564 (2013)

Zhu, X., Ramanan, D.: Face detection, pose estimation, and landmark localization in the wild. In: IEEE Conference on Computer Vision and Pattern Recognition (2012)

Soukupová, T., Čech, J.: Real-time eye blink detection using facial landmarks. In: 21st Computer Vision Winter Workshop (2016)

Rosebrock, A.: Face alignment with OpenCV and Python. pyimagesearch (2017). https://www.pyimagesearch.com/2017/05/22/face-alignment-with-opencv-and-python/. Accessed 01 2019

Rosebrock, A.: Drowsiness detection with OpenCV. pyimagesearch (2017). https://www.pyimagesearch.com/2017/05/08/drowsiness-detection-opencv/. Accessed 01 2019

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Galindo, R., Aguilar, W.G., Reyes Ch., R.P. (2019). Landmark Based Eye Ratio Estimation for Driver Fatigue Detection. In: Yu, H., Liu, J., Liu, L., Ju, Z., Liu, Y., Zhou, D. (eds) Intelligent Robotics and Applications. ICIRA 2019. Lecture Notes in Computer Science(), vol 11744. Springer, Cham. https://doi.org/10.1007/978-3-030-27541-9_46

DOI: https://doi.org/10.1007/978-3-030-27541-9_46

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-27540-2

Online ISBN: 978-3-030-27541-9

eBook Packages: Computer Science (R0)