Abstract

Many traditional feature extraction methods takes the global or the local characteristics of training samples into consideration during the process of feature extraction. How to fully utilize the global or the local characteristics to improve the feature extraction efficiencies is worthy of research. In view of this, a new Manifold-based Feature Extraction Method (MFEM) is proposed. MFEM takes both the advantage of Linear Discriminant Analysis (LDA) in keeping the global characteristics and the advantage of Locality Preserving Projections (LPP) in keeping the local characteristics into consideration. In MFEM, Within-Class Scatter based on Manifold (WCSM) and Between-Class Scatter based on Manifold (BCSM) are introduced and the optimal projection can be obtained based on the Fisher criterion. Compared with LDA and LPP, MFEM considers the global information and local structure and improves the feature extraction efficiency.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Linear Discriminant Analysis (LDA) [1] is popular in practice, in which the non-singularity problem has greatly influent its improvement of efficiencies. In view of this, many effective improvements are made by scientists: Regularized Discriminant Analysis (RDA) proposed by Friedman [2] efficiently solves the above problem; 2D-LDA is proposed to directly extract the features based on Fisher criterion [3]; Orthogonal LDA (OLDA) tries to diagonalize the scatter matrix so as to obtain the discriminant vectors [4]; Direct LDA (DLDA) [5] carries no discriminative information by modifying the simultaneous diagonalization procedure. Besides, the commonly-used improvement approach include Pseudo-inverse LDA (PLDA) [6], Two-stage LDA [7], Penalized Discriminant Analysis (PDA) [8], Enhanced Fisher Linear Discriminant Model (EFM) [9]. In recent years, as to the under-sampled problems, we proposed Scalarized LDA (SLDA) [10], and Matrix Exponential LDA (MELDA) [11].

The general strategy of above approach is to solve the singularity problem firstly and then uses the Fisher criterion to obtain the optimal projections. LDA only takes the sample global information into consideration but always neglects the local structure. On the other hand, many popular manifold learning approach such as Locality Preserving Projection (LPP) [12], only focus on the local structure.

Therefore, all the sample information including the global characteristics and local structure is taken into consideration, and propose a new Manifold-based Feature Extraction Method (MFEM). MFEM inherits the advantage of Fisher criterion and manifold learning and effectively improve the feature extraction efficiency.

2 Background Knowledge

2.1 LDA

Given a dataset matrix X = [x1, x2, …, xN] = [x1, x2, …, xc] where xi(i = 1, …, N), N and c are respectively the training size and the class size. Ni denoting the number of sample in the i th class.

In LDA, two scatters named between-class scatter SB and within-class scatter SW are defined as:

where \( \bar{\varvec{x}}_{\varvec{i}} = \frac{1}{{N_{i} }}X_{i} e_{i} \) with \( e_{i} = [1, {\ldots}, 1]^{T} \in R^{{N_{i} }} \) is the centroid of class i and \( \bar{\varvec{x}} = \frac{1}{N}Xe \) with \( e_{i} = [1, {\ldots}, 1]^{T} \in R^{N} \) is the global centroid.

The optimal function of LDA is:

The Eq. (3) is equivalent to:

and

where W is the optimal projection.

The projection W can be obtained by calculating the eigenvectors.

It can be seen from the above analysis, LDA tries to preserve the global characteristics invariant before and after feature extraction. Its efficiency can not be improved because it neglects the local structure of each class.

2.2 LPP

The optimal problem of LPP is:

where W is the optimal projection, Sij is the weight function which reflects the similarity of samples, \( \varvec{D}_{{\varvec{ii}}} = \sum\nolimits_{j} {S_{ij} } \).

The above optimization problem can be transformed as follows based on the linear algebra theory:

where L = D − S.

The optional projection matrix is obtained by computing all the nonzero eigenvectors of \( \varvec{XLX}^{\text{T}} \varvec{W}\text{ = }\varvec{\lambda XDX}^{\text{T}} \varvec{W} \).

In conclusion, LPP tries to preserve the local characteristic but does not take the global characteristics into consideration, especially, when encountering noise, the feature extraction efficiency of LPP is greatly influenced.

3 MFEM

Feature extraction is a classical preprocessed approach in dealing with the high-dimensional samples. Though they are widely-used in practice, the feature extraction efficiency is limited due to neglecting the global characteristics and local structure. In order to take all the characteristics of the training samples, a new Manifold-based Feature Extraction Method (MFEM) is proposed. In MFEM, Within-Class Scatter based on Manifold (WCSM) and Between-Class Scatter based on Manifold (BCSM) are introduced and the optimal projection can be obtained based on the Fisher criterion.

3.1 Between-Class Scatter Based on Manifold

Inspired by manifold learning, we firstly construct the adjacency graph \( \varvec{G}_{\varvec{D}} = \{ \varvec{X},\varvec{D}\} \) where \( \varvec{G}_{\varvec{D}} \) donates a graph with different classes, X and D donate the dataset and the weight of different classes respectively. The different-class weight function of two random samples xi and xj can be defined:

where li (i = 1, 2, …, N) donates the class label and d is a constant.

The different-class weight function verifies that if the samples xi and xj belong to different classes, the weight of them is large; or else, the weight is zero.

In order to preserve the local characteristics of different classes, the samples xi and xj belonged to different classes will be far away after feature extraction. The optimization problem can be described as follows.

Where \( \varvec{y}_{\varvec{i}} = \varvec{W}^{\text{T}} \varvec{x}_{\varvec{i}} \), W donates the projection matrix and \( \varvec{x}_{\varvec{i}} \in \varvec{X} \).

\( \sum\limits_{i,j} {(\varvec{y}_{\varvec{i}} - \varvec{y}_{\varvec{j}} )^{2} D_{ij} } \) is reformulated to the following equations based on the algebraic transformation.

where \( \varvec{S}_{\varvec{D}} = \varvec{X}(\varvec{D}^{{\prime }} - \varvec{D})\varvec{X}^{\text{T}} \), \( \varvec{D}^{{\prime }} \) is a diagonal matrix and \( \varvec{D}^{{\prime }} = \sum\nolimits_{j} {D_{ij} } \).

By taking (12) to (11), (11) is reformulated to

Based on the above analysis, we can see Eqs. (4) and (13) reflect the global characteristics of different classes and local structure of each class respectively. In order to fully utilize all the above information, we can obtain the following optimization expression based on (4) and (13).

where \( \varvec{M}_{\varvec{B}} = \alpha \varvec{S}_{\varvec{B}} + (1 - \alpha )\varvec{S}_{\varvec{D}} \) and \( \alpha \) is a parameter balancing \( \varvec{S}_{\varvec{B}} \) and \( \varvec{S}_{D} \). \( \varvec{M}_{\varvec{B}} \) is called Between-Class Scatter based on Manifold (BCSM).

3.2 Within-Class Scatter Based on Manifold

Similarity with BCSM, the same-class weight function of two random samples xi and xj is defined:

where li (i = 1, 2, .., N) donates the class label and s is a constant.

The same-class weight function verifies that if the samples xi and xj with the same class label, the weight of them is large; or else, the weight is zero.

In order to keep the neighborhood close, it can be described as:

where \( \varvec{y}_{\varvec{i}} = \varvec{W}^{\text{T}} \varvec{x}_{\varvec{i}} \), W donates the projection matrix and \( \varvec{x}_{\varvec{i}} \in \varvec{X} \).

\( \sum\limits_{i,j} {(\varvec{y}_{\varvec{i}} - \varvec{y}_{\varvec{j}} )^{2} S_{ij} } \) is reformulated to the following equations based on the algebraic transformation.

where \( \varvec{S}_{\varvec{S}} = \varvec{X}(\varvec{S}^{{\prime }} - \varvec{S})\varvec{X}^{T} \), \( \varvec{S}^{{\prime }} \) is a diagonal matrix and \( \varvec{S}^{\varvec{'}} = \sum\nolimits_{j} {S_{ij} } \).

By taking (17) to (16), (16) is reformulated to

The following optimization expression based on (5) and (18).

where \( \varvec{M}_{\varvec{W}} = \beta \varvec{S}_{\varvec{W}} + (1 - \beta )\varvec{S}_{\varvec{S}} \) and \( \beta \) is a parameter balancing \( \varvec{S}_{W} \) and \( \varvec{S}_{S} \). \( \varvec{M}_{W} \) is called Within-Class Scatter based on Manifold (WCSM).

3.3 The Optimization Problem

Inspired by the Fisher criterion, the above optimization problem can be described as follows.

The solution of the maximization of (20) is given by computing all the nonzero eigenvectors of \( \varvec{M}_{\varvec{B}} \varvec{W} = \lambda \varvec{M}_{\varvec{W}} \varvec{W} \).

From the optimization expression of MFEM, it can be seen MFEM not only takes the global characteristics into consideration, but also preserves the local structure. MFEM inherits the advantages of LDA and LPP and improves the feature extraction efficiency to some extent. When \( \alpha = \beta = 1 \) or \( d = s = \infty \), MFEM is equivalent to LDA; When \( \alpha = \beta = 0 \), \( d = \infty \) and \( s < \infty \), MFEM is equivalent to LPP.

In practice, \( \varvec{M}_{\varvec{W}} \) maybe singular and the optimal projection can not be obtained by the above approach. For the sake of convenience, the singular value perturbation by adding a little positive number to the diagonal of \( \varvec{M}_{\varvec{W}} \) is introduced to solve the singular problem.

3.4 Optimization Algorithm

Input: the original dataset X and the reduced dimension d

Output: the corresponding lower dimensional dataset Y = [y1, y2, …, yd]

Step1: Construct the adjacency graph \( \varvec{G}_{\varvec{D}} = \{ \varvec{X},\varvec{D}\} \) and \( \varvec{G}_{\varvec{S}} = \{ \varvec{X},\varvec{S}\} \) where \( \varvec{X} = {\{ }\varvec{x}_{{\mathbf{1}}} \text{,}\varvec{x}_{{\mathbf{2}}} ,\ldots, \varvec{x}_{\varvec{N}} \} \) donates the original dataset, D and S respectively donate the weights of different classes and the same class. We put an edge between xi and xj if they are in different classes in the GD, or else, put an edge between them in the Gs.

Step2: Compute the different-class weights and the same-class weights. If different-class samples xi and xj are connected, utilize Eq. (10) to compute the different-class weights; else utilize Eq. (15) to compute the same-class weights.

Step3: Compute \( \varvec{S}_{W} \), \( \varvec{S}_{\varvec{B}} \), \( \varvec{M}_{W} \) and \( \varvec{M}_{\varvec{B}} \).

Step4: Solving the singular problem of \( \varvec{M}_{W} \). The singular value perturbation is introduced to solve the singular problem. Let \( \varvec{M}_{W} \) transform to \( \varvec{M}_{W}^{{\prime }} \) after perturbation.

Step5: Compute the optimal projection W. The solution of the optimal projection W is given by computing all the nonzero eigenvectors of \( \varvec{M}_{W}^{ - 1} \varvec{M}_{\varvec{B}} \varvec{W}\text{ = }\lambda \varvec{W} \) or \( \varvec{M^{\prime}}_{W}^{ - 1} \varvec{M}_{\varvec{B}} \varvec{W}\text{ = }\lambda \varvec{W} \). The nonzero eigenvectors corresponding to the biggest d eigen-values are combine to form the optimal projection W = [w1, …, wd].

Step6: As to a certain sample \( \varvec{x}_{i} \in X \), the corresponding lower dimensional sample can be obtained by \( \varvec{y}_{\varvec{i}} = \varvec{W}^{\text{T}} \varvec{x}_{\varvec{i}} \).

4 Experimental Analysis

4.1 UCI Two-Dimensional Visualization

Wine dataset, including 178 samples with 3 classes, in UCI machine learning repository is used in the experiment. Set the reduced dimension is two, successively run PCA, LPP, LDA, MFEM on the wine dataset, and we can obtain the experimental results, shown in Table 1.

It can be seen from Fig. 1, some different-class samples are overlapped after feature extraction by PCA. LPP, LDA and MFEM can mainly fulfill the feature extraction task but the efficiencies are different. After feature extraction by LPP, some samples lying near the three-class boundary are overlapped. Therefore, compared with LDA and MFEM, the efficiency of LPP is lowest. The efficiencies of LDA and MFEM are both high, but in the respect of distribution, MFEM shows much more perfect than LDA. This is because MFEM tries to preserve the original distribution by taking both the global and local characteristics, while LDA only focus on the global characteristics based on the Fisher criterion so as to make the different-class samples far and the same-class samples close. Although the within-class scatter reflects the closeness of the same-class samples, yet it does not take the relationship of adjacent samples before and after feature exaction.

4.2 Experiments on Face Datasets

Experiment datasets include ORL face dataset and Yale face dataset. We will discuss the relationship between the sizes of training samples and recognition rates as well as the relationship between the reduced dimensions and recognition rates.

Relationship Between the Size of Training Samples and the Recognition Rate.

The training dataset consists of the first k images of each subject, and the remainders are used for test. The values of k in ORL and Yale dataset are selected from 3, 4, 5, 6, 7 and from 3, 5, 7, 9 respectively. The comparative experimental results are show in Table 1. In order to overcome the small size problem in LDA, we utilize PCA + LDA instead for LDA in the experiment.

It can be seen from Table 1, compared with PCA, LPP, LDA, MFEM performs best on the ORL dataset and except k = 8, the efficiency of MFEM is highest on the Yale dataset.

Relationship Between the Reduced Dimensions and Recognition Rates.

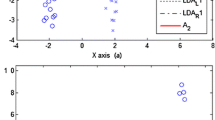

The training dataset consists of the first 5 images of each subject, and the remainders are used for test. We can obtain the experiment results shown in Fig. 2.

It can be seen from Fig. 2, as the reduced dimension becomes higher, the corresponding recognition rate mainly has an upward tendency. Compared with PCA, LPP, LDA, MFEM performs best.

5 Conclusions

Researches on current feature extraction approaches can be reduced to two ways, one pays more attention on the global structure, and the other originates from local structure and tries to make the relationship between samples before and after feature extraction be invariant. In view of shortages of classical feature extraction approaches, MFEM is proposed. MFEM considers all the information and improves the feature extraction efficiency. Experiments on some standard datasets verify the effectiveness of MFEM. In practice, linear inseparability is a quite common problem and how to solve it is attracting more and more researchers’ interest. MFEM proposed in this paper is suitable to the linear separability situation, how to expand MFEM to linear inseparability is our next work.

References

Martinez, A.M., Kak, A.C.: PCA versus LDA. IEEE Trans. Pattern Anal. Mach. Intell. 23(2), 228–233 (2001)

Friedman, H.: Regularized discriminant analysis. J. Am. Stat. Assoc. 84(405), 165–175 (1989)

Li, M., Yuan, B.: 2D-LDA: a novel statistical linear discriminant analysis for image matrix. Pattern Recogn. Lett. 26(5), 527–532 (2005)

Ye, J.P., Xiong, T.: Computational and theoretical analysis of null space and orthogonal linear discriminant analysis. J. Mach. Learn. Res. 7, 1183–1204 (2006)

Yu, H., Yang, J.: A direct LDA algorithm for high-dimensional data with application to face recognition. Pattern Recogn. 34(11), 2067–2070 (2001)

Ji, S.W., Ye, J.P.: Generalized linear discriminant analysis: a unified framework and efficient model selection. IEEE Trans. Neural Netw. 19(10), 1768–1782 (2008)

Belhumeur, P.N., Hespanha, J.P., Kriegman, D.J.: Eiegnfaces vs. Fisherfaces: recognition using class specific linear projection. IEEE Trans. Pattern Anal. Mach. Intell. 19(7), 711–720 (1997)

Hastle, T., Ruja, A., Tibshirani, R.: Penalized discriminant analysis. Ann. Stat. 23(1), 73–102 (1995)

Liu, C.J., Wechsler, H.: Gabor feature based classification using the enhanced fisher linear discriminant model for face recognition. IEEE Trans. Image Proc. 11(4), 267–276 (2002)

Liu, Z., Wang, S.: Improved linear discriminant analysis method. J. Comput. Appl. 31(1), 250–253 (2011)

Liu, Z., Wang, S.: An improved LDA algorithm and its application to face recognition. Comput. Eng. Sci. 33(7), 89–93 (2011)

He, X.F., Niyogi, P.: Locality preserving projections. In: Advances in Neural Information Processing Systems (NIPS), Vancouver, Canada, pp. 153–160 (2003)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Liu, Z. (2019). A New Manifold-Based Feature Extraction Method. In: Huang, DS., Huang, ZK., Hussain, A. (eds) Intelligent Computing Methodologies. ICIC 2019. Lecture Notes in Computer Science(), vol 11645. Springer, Cham. https://doi.org/10.1007/978-3-030-26766-7_31

Download citation

DOI: https://doi.org/10.1007/978-3-030-26766-7_31

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-26765-0

Online ISBN: 978-3-030-26766-7

eBook Packages: Computer ScienceComputer Science (R0)