Abstract

Social robots are increasingly used in different areas of society such as public health, elderly care, education, and commerce. They have also been successfully employed in autism spectrum disorders therapy with children. Humans strive to find in them not only assistants but also friends. Although forms and functionalities of such robots vary, there is a strong tendency to anthropomorphize artificial agents, making them look and behave as human as possible and imputing human attributes to them. The more human a robot looks, the more appealing it will be considered by humans. However, this linear link between likeness and liking only holds to the point where a feeling of strangeness and eeriness emerges. We discuss possible explanations of this so-called uncanny valley phenomenon that emerges in human–robot interaction. We also touch upon important ethical questions surrounding human–robot interaction in different social settings, such as elderly care or autism spectrum disorders therapy.

1 Introduction

Recently, Sophia, a humanoid robot created by Hanson Robotics, was interviewed on various television shows. There, she talked about fashion, her dreams for life, and a good job, and she made jokes (Footnote 1). Interestingly, this robot appears very human-like and even holds official citizenship of Saudi Arabia. This example shows that, because of the amazing technological capabilities of social robots, some people perceive them as having already achieved some sort of human-like intelligence. Nevertheless, a robot that walks and talks, and even seems to feel like a human being, still appears futuristic to most of us.

When we think of robots, we probably imagine them in manufacturing, the military, or perhaps in space exploration. In more traditional spheres of life, such as childcare or medicine, robots have not found a similarly intuitive place yet. But there is a broad range of possible social roles for robots, ranging from artificial agents to real companions (Scopelliti, Giuliani, & Fornara, 2005). Especially in tasks of daily assistance, such as lifting heavy things or people (Broadbent, 2017) or controlling and recording vital signs (Alaiad & Zhou, 2014), robots have turned out to be useful tools. Nevertheless, negative attitudes toward technology in general, and toward social robots in particular, are today a crucial obstacle to the active use of robotic technologies in areas such as health care or education (see, for example, Nomura, Suzuki, Kanda, & Kato, 2006).

Both the onboard circuitry and the appearance of robots have much improved with technological advances. Today’s robot assistant does not necessarily look like a machine anymore but can appear as a pet or even as a human being. As robots look and move less and less like simple machines, the question emerges of how such highly developed robots are perceived by humans. Humanoid robots in particular must be investigated in this regard because their appearance is becoming very similar to that of humans. On these grounds, this chapter addresses the shape of recently constructed social robots that can potentially become a part of our daily life. Moreover, attitudes toward social robots are investigated by explaining why a feeling of eeriness emerges when encountering these machines. In addition, the use of social robots in daily life is summarized by giving examples from the domains of elderly care, child therapy, and sexual interventions.

2 The Appearance of Social Robots

At the outset, it is important to highlight the difference between humanoid and nonhumanoid social robots. According to Broadbent (2017), a social robot is considered humanoid if it has a human-like body shape with a head, two arms, and two legs. Humanoid robots have been created for the purpose of modeling human development and functionality. Examples are CB2, a child robot with a biomimetic body, created at Osaka University for studying human child development (Minato et al., 2007), and the robotic version of a human mouth, built by scientists at Kagawa University, Japan, for voice training and studying human speech production (Sawada, Kitani, & Hayashi, 2008). There are also nonhumanoid robots that have functional abilities such as walking, based on passive dynamics inspired by the human body (see, for example, Collins, Ruina, Tedrake, & Wisse, 2005; Wilson & Golonka, 2013).

Some social robots are even more human-like than humanoids: the so-called androids. They feature not only a human body shape but also a human-like face (e.g., Philip K. Dick, produced by Hanson Robotics; see Footnote 2), gesturing, and speech. Furthermore, some android robots have been built to completely resemble a human individual (e.g., Hiroshi Ishiguro’s geminoid produced by ATR Hiroshi Ishiguro Laboratory; see Footnote 3; cf. Broadbent, 2017). The crucial difference between a humanoid and a geminoid is that the latter is built as a duplicate of an existing person (Nishio, Ishiguro, & Hagita, 2007). Of course, being a perfect morphological copy does not necessarily imply similar degrees of freedom, either in gesturing or in facial expressions (Becker-Asano & Ishiguro, 2011; Becker-Asano, Ogawa, Nishio, & Ishiguro, 2010). Geminoid robots are normally teleoperated, enabling live human–robot interaction. For instance, Professor Hiroshi Ishiguro gives lectures through his geminoid. Students not only listen to him passively, as they might for a recorded lecture that is projected on a screen, but can actively interact with him and ask questions, thereby bidirectionally exchanging social signals. Another geminoid, Geminoid-DK (Footnote 4), was used for lectures as well, leading to mixed reactions (Abildgaard & Scharfe, 2012). After interacting with a geminoid, people reported having felt some human presence even though they knew that the geminoid was only a machine, resulting in a feeling of strangeness and unease. This feeling vanished after they realized that a human being with his or her own personality spoke through the geminoid (Nishio et al., 2007). Theatrical performances with Geminoid F (Footnote 5) are an outstanding example of geminoid use (D’Cruz, 2014). In some cases, the audience even seemed to prefer an android to a human performer, suggesting that the android might have an advantage for communicating the meaning of poetry (Ogawa et al., 2018).
Furthermore, the use of geminoids for security purposes as doubles in sensitive public appearances is being discussed (Burrows, 2011). Humanoid and geminoid robots are not the only social companions: smaller nonhumanoid pet robots, such as Genibo (a puppy), Pleo (a baby dinosaur), Paro (a seal), and Aibo (a dog), have recently been used in both daily life and therapy scenarios. They have been developed and applied for therapeutic purposes, with variable success (Bharatharaj, Huang, Mohan, Al-Jumaily, & Krägeloh, 2017; Broadbent, 2017). Table 1 provides an overview of the 21 robots that are mentioned in this chapter.

3 Anthropomorphism and Dehumanization

As the design of social robots is more and more adapted to the appearance of animate entities such as pets or humans, it becomes an interesting question what our social relationships to these robots are, or what they can and should be. One possible answer is that we simply endure social robots around us and do not think too much about their existence. Another possibility is that we admire social robots, as they are technically fascinating for us and might even be superior in several ways, including their abilities to tend to our physical and even psychological needs. It is also possible that our attitude toward such robots is affected by the fear of identity loss when social robots are perceived to be as self-reliant as a human being. Finally, we may even design sufficiently many emotional trigger features into our creations that we cannot help but fall in love with them (Levy, 2008).

In order to distinguish between these possibilities, it is helpful to assess how much human likeness is valued in a humanoid robot. Why do we want to build something that looks like us and that can even become a friend for us? Our urge to create a friend may have its roots in human nature, starting from birth. Small children often create “imaginary friends,” invisible pets, or characters to play with (see, for example, Svendsen, 1934). The human desire to socialize, our natural tendency to explain self-initiated behaviors through attribution of agency, and a strong tendency to anthropomorphize in all spheres of human life, be it fairy tales, art, or language, compel us to make robots as human-looking as possible. Anthropomorphism (anthropos, Greek for human; morphe, Greek for form) stands for the tendency to ascribe human-like qualities or emotions to nonhuman agents (Epley, Waytz, & Cacioppo, 2007). Therefore, it is not surprising that the majority of gods and mythological creatures were conceived with human features: face, head, arms, legs, thoughts, and language (Bohlmann & Bürger, 2018). A classic example of anthropomorphism is the Golem creature in Jewish mythology, created from clay with a full human-like body and controlled by a human (Minsky, 1980).

There are two main forms of anthropomorphism, namely perceptual and functional anthropomorphism, that is, visual human likeness versus behavior mapping (Liarokapis, Artemiadis, & Kyriakopoulos, 2012). These two kinds of anthropomorphism are interconnected: the more human-like a robot looks, the more we expect it to act like a human (Hegel, Krach, Kircher, Wrede, & Sagerer, 2008). Discrepancies between expectation and reality potentially become the source of aversion. The key to this tendency to attribute human-like qualities to nonhuman agents is our need to better understand them and to build more stable communication patterns as a matter of evolutionary design (Duffy, 2003).

Anthropomorphism results in attributing agency to robots; this was confirmed by both behavioral experiments and neuroimaging data (Hegel et al., 2008). Therefore, not only the appearance and technical abilities of robots, such as their manual dexterity or walking speed, have to be taken into consideration but also their emotional impact on human beings. Companies specializing in emotionally engaging humanoids (such as Realbotix™) add artificial breathing and other “background behaviors” to their creations specifically to activate our anthropomorphizing habit.

Recently, the emotional impact of humanoid robots has been discussed under the term “dehumanization” (Haslam & Loughnan, 2014; Wang, Lilienfeld, & Rochat, 2015). There are two forms of dehumanization, which are denial of human uniqueness and denial of human nature. The former describes categorizing a human being as animalistic so that the person perceived as dehumanized lacks a high level of intelligence and self-control. The latter form of dehumanization describes categorizing a human being as mechanistic, meaning that the person lacks warmth and emotions (Angelucci, Bastioni, Graziani, & Rossi, 2014; Haslam, 2006). The phenomenon of dehumanization is a relevant issue in human–robot interaction, because when we assign human-like traits to social robots, a mismatch is created between the human-like appearance of that robot and its mechanistic behavior.

In general, it is important to ask how exactly humans perceive robots subconsciously so that they can be integrated into our social life and eventually become social agents. Thus, in the next section, the possible origins of the feeling of eeriness toward robots that are similar to humans are discussed in detail.

4 Effects of Social Robots’ Design on Human Perception: The Uncanny Valley Phenomenon

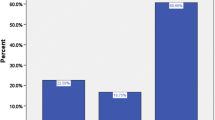

In the last half century, research has emerged on the question of how humans perceive humanoid robots depending on their degree of human likeness. Mori (1970) was the first to postulate that the more a robot resembles a human, the more it is liked by humans. This perhaps linear relationship between human likeness and liking only holds up to a certain point, at which the sensation of liking drops dramatically. This drop (see Fig. 1) has frequently been described as the “valley of uncanniness,” which includes sensations such as fear, eeriness, and avoidance.
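
The shape of this relationship can be illustrated with a small numerical sketch. The function below is purely hypothetical: the linear trend, the Gaussian-shaped dip, and all parameter values (`dip_center`, `dip_width`, `dip_depth`) are assumptions chosen only to reproduce the qualitative pattern described above, and they are not taken from Mori (1970) or from any empirical data.

```python
import math


def toy_affinity(likeness, dip_center=0.85, dip_width=0.07, dip_depth=1.2):
    """Illustrative liking score for a human-likeness value in [0, 1].

    Assumption: liking rises linearly with likeness, minus a Gaussian-shaped
    dip placed near (but below) full human likeness. All parameters are
    hypothetical and serve only to draw the qualitative "valley".
    """
    if not 0.0 <= likeness <= 1.0:
        raise ValueError("likeness must lie in [0, 1]")
    linear_trend = likeness
    dip = dip_depth * math.exp(-((likeness - dip_center) ** 2) / (2 * dip_width ** 2))
    return linear_trend - dip


if __name__ == "__main__":
    # Print the toy curve at a few likeness levels.
    for likeness in (0.0, 0.3, 0.6, 0.85, 1.0):
        print(f"likeness={likeness:.2f}  liking={toy_affinity(likeness):+.3f}")
```

In this sketch, liking increases from low to moderate likeness, falls sharply around the assumed dip, and recovers as likeness approaches that of a healthy human.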

The existence of this “valley,” where similarity to a human is quite high while at the same time the observer feels disgust, was shown in experiments with adult participants in both rating and behavioral studies (Appel, Weber, Krause, & Mara, 2016; Ho & MacDorman, 2017; MacDorman & Ishiguro, 2006; Sasaki, Ihaya, & Yamada, 2017; Tschöpe, Reiser, & Oehl, 2017). The same relationship also holds with primates and human babies (Lewkowicz & Ghazanfar, 2012; Matsuda, Okamoto, Ida, Okanoya, & Myowa-Yamakoshi, 2012; Steckenfinger & Ghazanfar, 2009). This is in line with other studies that showed that atypical human forms, such as enlarged eyes or incongruence between face and voice, can cause a cognitive conflict leading to the feeling of uncanniness (Mitchell et al., 2011). Typical human forms were found to receive more positive attitudes than faces with deviant features (Rhodes et al., 2001). The original term used by Mori (1970) is “Bukimi No Tani” and can be translated into “the valley of eeriness” (Hsu, 2012), which was later adapted to “uncanny valley,” the more familiar expression to native English speakers (Appel et al., 2016). In German, the term is close to “Das Unheimliche,” interpreted already by Sigmund Freud as a fear connected to something unknown (Freud, 1919). Ernst Jentsch, a German psychiatrist, explained this feeling as resulting from uncertainty regarding the nature of the observed object (Jentsch, 1906/1997). Most of the studies that investigated observers’ attitudes toward social robots have used static images of robotic faces. Interestingly, Mori (1970) already suggested that the uncanny valley effect is even stronger for dynamic stimuli, an idea that may today apply to animated social robots or videos. What has not, however, systematically been considered is whether an observer may interact with the probe object prior to giving a judgment. 
We surmise that interaction might be a crucial factor in enhancing acceptance of robot use by establishing and calibrating expectancies. Evidence for the importance of social interaction history as a factor in human–robot relationships comes from developmental robotics studies (reviewed in Cangelosi & Schlesinger, 2015). This effect is reminiscent of Allport’s (1954/2012) contact hypothesis, an influential theory postulating that intergroup contacts reduce prejudice and enhance attitudes toward a foreign group. Thus, for further research, it is useful to not only use static robot pictures but also real-time interaction with robots to investigate the “uncanny valley” phenomenon.

4.1 The Neural Basis of the Uncanny Valley Phenomenon

Only a few studies have evaluated the neural basis of the “uncanny valley” sensation to identify the human brain regions involved in generating this impression. In a study with electroencephalogram (EEG) recordings, Schindler, Zell, Botsch, and Kissler (2017) found that activity in visual and parietal cortices increased when more realistic faces were visually presented to their participants. The authors confirmed their hypothesis by finding the early negative EEG component N170, which was interpreted to reflect the sensation of uncanniness when looking at unrealistic faces. Another EEG study replicated the “uncanny valley” sensation and showed that it might involve a violation of the brain’s predictions, in line with the so-called predictive coding account (Urgen et al., 2015). This evidence is consistent with a functional magnetic resonance imaging (fMRI) study suggesting that the “uncanny valley” sensation might be based on a violation of the brain’s internal predictions and emerges through conflicting perceptual cues (Saygin, Chaminade, Ishiguro, Driver, & Frith, 2012). However, by measuring late positive potentials in the EEG, as well as facial electromyograms, Cheetham, Wu, Pauli, and Jancke (2015) did not find any difference in the affective responses between ambiguous and unambiguous images of morphed human and avatar faces.

4.2 Possible Explanations of the Uncanny Valley Phenomenon

One possible explanation for the phenomenon of the “uncanny valley” sensation is offered by the Mirror Neuron System theory. According to this view, there is a mismatch between the appearance and actions of a humanoid robot, and the observer’s ability to mirror these actions which are not part of her action repertoire. Mirror neurons were first found in the premotor and parietal part of monkey cortex (Rizzolatti et al., 1988). Interestingly, mirror neurons are driven by both motor and visual input: in studies with monkeys they have been found to activate not only when the monkey itself performed an action but also when it observed another monkey performing the same action. This phenomenon has been called “resonance behavior” since then (Rizzolatti, Fadiga, Fogassi, & Gallese, 1999), and it is believed to be the basis of understanding others’ actions. Further research has shown that these neurons are located in the inferior frontal gyrus (Kilner, Neal, Weiskopf, Friston, & Frith, 2009) and in the inferior parietal lobe (Chong, Cunnington, Williams, Kanwisher, & Mattingley, 2008). The human Mirror Neuron System is believed to be a key mechanism for action understanding, empathy, communication, language, and many other high-order functions and thus plays an important role in the evaluations that we perform on our social interaction partners.

In their recent review, Wang and colleagues summarized several other explanations for the uncanny valley phenomenon (Wang et al., 2015), only some of which are discussed here. Under the “pathogen avoidance” account, when perceiving a humanoid robot, our brain might categorize the robot as having a disease because the almost human-like appearance does not fit the defective, imperfect behavior most robots exhibit today, thereby inducing the sensation of disgust (see also MacDorman & Ishiguro, 2006). Supporting this perceptually driven hypothesis, it has been shown that individual differences in disgust sensitivity predict the magnitude of the feeling of uncanniness (MacDorman & Entezari, 2015). Accordingly, the feeling of uncanniness might go back to the fear of leprosy, which also causes symptoms such as dry, smooth, and thickened skin and facial deformation, features that are still present in today’s humanoids and geminoids. Crucially, the stronger the resemblance cues are, the stronger the disgust is. On this account, resemblance cues activate the perceived danger of becoming infected by a genetically close species (Ferrey, Burleigh, & Fenske, 2015). Relatedly, MacDorman (2005) suggested that humanoids remind us of our inevitable death, as they look human-like but have inanimate faces like a dead body. Moosa and Minhaz Ud-Dean (2010) classified this reasoning as danger avoidance in general. They claimed that necrophobia (fear of death and of dead bodies) causes the feeling because corpses of any kind may carry danger, such as contagious diseases or contamination. Humans wish to isolate dead bodies from the living by burying or cremating them. Consequently, if humanoid robots are categorized as being dead bodies, they do not fit into our daily life.

A different account reviewed by Wang et al. (2015) assumes that humans have problems categorizing humanoid robots as humans, which results in avoidance behavior. This “categorization uncertainty hypothesis” was first suggested by Jentsch (1906/1997) and focuses on a knowledge-driven origin of the uncanny valley phenomenon. Similar to the fear of contagious diseases, fear of unidentifiable species may be the reason for the “uncanny” sensation. These species can be compared to artificial creatures which only partly match human appearance, such as Frankenstein’s monster (Burleigh & Schoenherr, 2015). When a new stimulus is perceived, we try to categorize it relative to our existing experiences that are laid down as knowledge categories, perhaps on the basis of necessary or sufficient features that establish a (graded) category membership (Murphy, 2002). This cognitive classification account makes a clear and testable prediction: if the new stimulus is too close to a category boundary, then categorization uncertainty and resulting uneasiness increases (Schoenherr & Lacroix, 2014; Yamada, Kawabe, & Ihaya, 2013). Another valuable prediction of this account is that our brain categorizes species not only by their proximity to a category boundary but also makes use of the frequency-based exposure to exemplars from various categories (Burleigh & Schoenherr, 2015). The number of human individuals we have encountered in our lives is larger than the number of humanoids; therefore, the human category “wins” as the normative solution. In this vein, Burleigh and Schoenherr (2015) showed in their study that participants’ ratings of eeriness were affected by exemplar frequency. 
A related explanation for the phenomenon that humans have a sensation of uncanniness when interacting with humanoid robots is that they are perceived as creatures between the categories “animate” and “inanimate,” which causes the “uncanny valley” sensation (Brenton, Gillies, Ballin, & Chatting, 2005; Pollick, 2010; Saygin et al., 2012).
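
The two predictions of the categorization account can be made concrete with a toy calculation. The sketch below is an assumption-laden illustration, not a model proposed in the studies cited above: it treats category membership as a logistic function of distance from a hypothetical category boundary, expresses frequency-based exposure as a prior probability (`prior_human`), and quantifies uncertainty as binary entropy. The function names and all parameter values are invented for illustration.

```python
import math


def p_human(likeness, boundary=0.5, sharpness=12.0, prior_human=0.5):
    """Probability of assigning a stimulus to the "human" category.

    Assumption: log-odds grow linearly with the distance of the stimulus
    from a hypothetical category boundary, shifted by the log prior odds
    that stand in for frequency-based exposure to exemplars.
    """
    log_odds = sharpness * (likeness - boundary) + math.log(prior_human / (1 - prior_human))
    return 1.0 / (1.0 + math.exp(-log_odds))


def categorization_uncertainty(likeness, **kwargs):
    """Binary entropy (in bits) of the human vs. nonhuman decision."""
    p = p_human(likeness, **kwargs)
    if p in (0.0, 1.0):
        return 0.0
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))


if __name__ == "__main__":
    # Uncertainty peaks at the assumed boundary and falls off on both sides.
    for likeness in (0.1, 0.4, 0.5, 0.6, 0.9):
        print(f"likeness={likeness:.1f}  uncertainty={categorization_uncertainty(likeness):.3f} bits")
    # More exposure to humans biases an ambiguous stimulus toward "human".
    print(p_human(0.5, prior_human=0.9))
```

Under these assumptions, uncertainty is highest for stimuli at the boundary, and a larger prior for the human category lets that category “win” for ambiguous stimuli, mirroring the frequency-based prediction.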

On these grounds, the memory-based categorization account is also a probable explanation for the “uncanny valley,” but its relation to the sensation of uncanniness when perceiving humanoid robots is more complex, as recent studies have shown. For instance, Mathur and Reichling (2016) instructed their participants in an “investment game” to entrust robots, which were presented to them on a screen, with as much money as they wanted to. The authors demonstrated that the participants’ implicit decisions concerning robots’ social trustworthiness were influenced by the “uncanny valley” sensation that the robots provoked. Nevertheless, category confusion did not seem to mediate the likability of the robots. On the other hand, MacDorman and Chattopadhyay (2016) demonstrated in a categorization task on animacy (living vs. inanimate) and realism (computer-animated vs. real) that the least ambiguous faces presented to their participants were characterized as the eeriest and coldest. This result can be explained by the fact that most natural human faces are asymmetric to a small degree so that overly symmetric and perfect faces are unfamiliar and might thus create a feeling of eeriness. However, experimental studies with morphed facial photographs discovered a clear preference for symmetrical over asymmetrical faces (Perrett, 2010). There are historically and evolutionarily developed aesthetic norms, such as youth, vitality, clear skin and hair, and facial proportions—signaling fertility in general—universal across cultures and rooted in human biology (Jones, Little, Burt, & Perrett, 2004; Rhodes et al., 2001). Thus, baby schema features such as large eyes and forehead or small nose and jaw are found to be most attractive (Breazeal, 2002). Smooth and effective movements and bilateral bodily symmetry are additional signals of beauty (MacDorman & Entezari, 2015). Regular facial proportions are a sign of hormonal health. 
These beauty templates (Hanson, 2005) should guide our design decisions with a view toward fostering judgments of liking and attraction but are sometimes violated in today’s humanoid robots, which then leads to a feeling of repulsion and rejection.

Several studies have investigated to what extent humans attribute human feelings and sensations, such as hunger, pain, love, and joy (see Appel et al., 2016), to social robots. By manipulating descriptions of a humanoid robot (as being capable of planning actions vs. feeling pain), Gray and Wegner (2012) found that the degree to which the ability to feel is ascribed to the robot predicts the emergence of the “uncanny valley” sensation. This finding is in line with the results of Appel et al. (2016), who found that children over nine rated human-like robots as being eerier than machine-looking robots because they assumed that they had more human thinking abilities. Younger children, on the other hand, did not show any differences in ratings, which can be explained by an underdevelopment of their theory of mind (Brink, Gray, & Wellman, 2017). Adding emotional expressions to the behavioral repertoire of a robot was reported to significantly reduce perceptions of uncanniness (Koschate, Potter, Bremner, & Levine, 2016). But there is also evidence of especially uncanny feelings toward emotionally aware social robots and humans with apparently artificially “programmed” emotional responses (Stein & Ohler, 2017).

From this selective review, it becomes clear that the “uncanny valley” sensation is a multi-level phenomenon: perceptual cues are constantly evaluated and, in turn, lead to knowledge-driven expectations about our human as well as humanoid interaction partners. An important point for robotic design decisions is that the ever-increasing similarity between humanoids and humans should not be justified as an attempt to reduce the repulsion captured by the uncanny valley effect. For instance, simple computer programs installed on an artificial pet-like social agent such as a Tamagotchi (Footnote 6) show that interactivity and the availability of social cues and contingencies are more important for acceptability than mere physical similarity to humans. Instead, humanoids are built to inhabit the very same space that humans have evolved to survive in. The physical similarity between humanoids and humans in the light of increasing technological feasibility is thus merely a consequence of their shared purpose, including social exchanges. Consistent with this view, a review of seventeen studies on the uncanny valley by Kätsyri, Förger, Mäkäräinen, and Takala (2015) failed to find convincing evidence in favor of a specific uncanniness-inducing mechanism. Multiple factors, such as the personality of the human, cultural differences, and previous exposure to social robots, may play a role (Strait, Vujovic, Floerke, Scheutz, & Urry, 2015), as may age (Scopelliti et al., 2005), gender and technical background (Kuhnert, Ragni, & Lindner, 2017; Nomura et al., 2006), and nationality (Nomura, Syrdal, & Dautenhahn, 2015). Using the Frankenstein Syndrome Questionnaire, Nomura et al. (2015) showed, in particular, that social robots found more acceptance among people in Japan than in Europe. Moreover, Europeans, especially young people in Britain, had higher expectations of social robots.
The Frankenstein Syndrome is the fear that an artificial agent created by merging a machine and a human constitutes a potential transgression.

In summary, the “uncanny valley” sensation is a very complex phenomenon that depends on many factors, which future robot design should aim to address. Moreover, besides general factors such as culture and a collective fear of technology, individual differences play a role in perceiving social robots. Thus, at this point, we have not yet found out exactly how to optimally design humanoid robots for the purpose of being social agents. Nevertheless, experience with them in our daily social life gives possible cues on how improvements in design and technology can lead to better acceptance of social robots.

5 Robots as Social Agents

To overcome the “uncanny valley” phenomenon, there are initial attempts to integrate biologically inspired mechanisms into robotic architectures. For instance, the Linguistic Primitives Model uses the natural babbling mechanism of children to teach robots how to speak. In this approach, robots learn words similar to babies by producing syllables first. After mastering those, they move to voluntary pronunciation (Franchi, Sernicola, & Gini, 2016). The idea behind it is not only a novel method of speech learning, but a way to enhance the cognitive development and learning capacity of robots, since language is assumed to drive cognition (Pulvermüller, Garagnani, & Wennekers, 2014; Whorf, 1956). Moreover, the ability to partake in linguistic exchanges is a potential key for accepting humanoid robots into our social spaces.

On the one hand, technological improvement of both the exterior robot architecture, leading to humanoid and geminoid robots, and the capabilities of these machines lead to a high degree of acceptance in our society. On the other hand, similarity to human beings leads to the “uncanny valley” phenomenon. This discrepancy raises the questions of whether robots can become social agents at all, and which stage of acceptance they have already reached in our society. To give an answer, the following sections summarize studies on the use of robots in daily domains and the attitude of their environment toward them.

5.1 Robots as Caring Machines for the Elderly

People face physical and mental decline with aging; for instance, they may suffer from dementia or Parkinson’s disease. This leads to a high physical and social dependency on either their social environment or professional care. Thus, caregiving robots are used to assist caregivers of the elderly and retirement home staff. Thanks to much improved sensing and moving capacities, robots have become potential lifetime companions for humans in recent years. They take care of elderly people and also accompany them during errands and excursions (Sharkey & Sharkey, 2012; Shim, Arkin, & Pettinatti, 2017).

An example of a robot that is used in elderly care is the “nursebot” Pearl (see Pollack et al., 2002), which merely reminds its owner of daily routines. Another example is the social robot Robovie (Sabelli & Kanda, 2016), which was introduced as an interaction partner in an elderly care center. Robovie turned out to play three major roles: information exchange, emotional interaction, and basic interaction. The elderly people told Robovie about their sorrows, such as family and health conditions, as well as distress caused by physical pain. Crucially, the elderly people preferred to imagine that the social robot was a child in the human–robot interaction. This helped them to create a more natural situation since the elderly people enjoyed spending time with their small grandchildren. In general, the authors agree that the role of a social robot in elderly care should be carefully conceived by the caregivers and discussed with the elderly themselves (Sabelli, Kanda, & Hagita, 2011). A futuristic example is the End of Life Care Machine (Footnote 7), a so-called mechanical assisted intimacy device or robotic intimacy device. This machine even has an “end-of-life detector.” It is remarkable that a social robot can detect when humans pass away while humans have such difficulties with categorizing social robots as animate: we perceive them as being animate, similar to humans, yet we know on a deeper level that they do not live.

In a mixed-method literature review of 86 studies, positive effects of social robot use in elderly care were reported (Kachouie, Sedighadeli, Khosla, & Chu, 2014). In particular, the use of social robots was found to enhance elderly people’s well-being and decrease the workload on caregivers. On the other hand, a recent study showed that elderly people preferred to be alone or helped by humans and did not want social robots to be their personal assistants (Wu et al., 2014). This might be explained by a lack of previous experience with social robots, as well as by a general suspicion of modern technology among older generations.

Thus, it is crucial to adapt social robotic features to specific needs in elderly care. The potential positive role of social robots is twofold: on the one hand, they can be used in everyday duties, such as reminding users to take their medication and to perform their household duties, in order to help the elderly owners remain independent; on the other hand, social robots can serve as social agents with whom to build social relationships, which can in turn contribute to healthy aging and emotional stability and help the elderly to better confront and handle the natural limitations of aging. But social robots can only fulfill their role as assistants and social agents for the elderly if their design is elaborated to a degree that the machine gains the acceptance of its users.

5.2 Robots as a Therapy Tool for Children

Pet robots, and robots in general, have become a therapy tool for children, as they are believed to be less complicated and easier to communicate with than humans and even animals. Autistic children in particular have difficulties with complex social interaction and communication, so therapy robots with simplified interaction capabilities can help them overcome difficulties with social interaction, relationship building, verbal and nonverbal communication, and imagination (Cabibihan, Javed, Ang, & Aljunied, 2013). Non-pet robots have also been used for this purpose. For example, the Aurora project (AUtonomous RObotic platform as a Remedial tool for children with Autism; Robins, Dautenhahn, te Boekhorst, & Billard, 2004) has shown encouraging results in promoting interaction, joint attention, imitation, and eye contact with robots, as well as interaction between children with the robot as a mediator (Dautenhahn & Werry, 2004). Interacting with the small creature-like social robot Keepon encouraged children to spontaneously initiate play and communication with it, which in turn led to improvement in typical autism symptoms (Kozima, Nakagawa, & Yasuda, 2005). Similarly, humanoid robots such as Nao (see, for example, Huskens, Palmen, Van der Werff, Lourens, & Barakova, 2015) and KASPAR (see, for example, Huijnen, Lexis, Jansens, & de Witte, 2017) have successfully been employed to interact with autistic children, perhaps because robots are socially more predictable than human interaction partners.

In general, both humanoid and other social robots have repeatedly elicited certain target behaviors in children, such as imitation, eye contact, self-initiation, and communication, although not in all children (Cabibihan et al., 2013; Ricks & Colton, 2010). The effect, however, remained small and unstable, perhaps due to the small number of children participating in these studies. Thus, there is currently no clear evidence that social robots are more effective than a human trainer (Huskens, Verschuur, Gillesen, Didden, & Barakova, 2013). In line with that view, it is generally agreed that the caregiver still plays the main role in treatment, even when a social robot is used as a tool, and independently of the appearance of the social robot (humanoid vs. nonhumanoid). It is therefore of great importance that a human caregiver leads the intervention sessions and always has full control over the machine (see Sect. 5 for further justification). This stance implies that caregivers should (a) undergo extensive training with the social robot before using it in therapy sessions (Huijnen et al., 2017) and (b) prevent the child from being completely distracted from human beings and paying attention only to the social robot (Huskens et al., 2015).

Moreover, Huskens et al. (2013) compared therapy with the humanoid robot Nao to therapy with a human trainer. Nao possesses a very simple version of a human-like face and was operated in this study with pre-recorded speech and a remote control. Interestingly, the authors found no significant differences between the two conditions (Nao vs. human) in the number of self-initiated questions, which served as a measure of improvement in communication skills. If autistic children do not behave differently when interacting with a social robot or a human, the question arises whether they perceive the social robot as a human being or as a robot. However, with a sample of only six children, caution is required, as the findings of Huskens et al. (2013) might not be transferable to all children with autism. Kuhnert et al. (2017) compared people’s attitudes toward social robots in general with their expectations of an ideal everyday-life social robot by means of the Semantic Differential Scale. Autistic children, who tend to avoid looking at the eyes of others (Richer & Coss, 1976; Tanaka & Sung, 2016), are curious about therapy robots and tend to start interacting with them quickly (Huskens et al., 2015). Some even show gaze avoidance toward social robots (Kozima et al., 2005). Since children with autism typically show gaze avoidance toward other human beings (see, for example, Tanaka & Sung, 2016), this suggests that they perceive social robots as similar to human beings.

Typically developing children have also been introduced to social robots, for childcare (PaPeRo; Osada, Ohnaka, & Sato, 2006), education (Movellan, Tanaka, Fortenberry, & Aisaka, 2005; Tanaka, 2007; Tanaka, Cicourel, & Movellan, 2007; Tanaka & Kimura, 2009), socializing (Kanda, Sato, Saiwaki, & Ishiguro, 2007), and in hospitals (Little Casper; MOnarCH: Multi-Robot Cognitive Systems Operating in Hospitals; Sequeira, Lima, Saffiotti, Gonzalez-Pacheco, & Salichs, 2013). The typical outcome is that children are motivated when interacting with social robots, especially when the robots appear weaker than the children themselves and therefore do not frighten them (see, for example, Tanaka & Kimura, 2009). In this context, developmental robotics has emerged as a field that combines different areas of research such as child psychology, neuroscience, and robotics (for an overview, see Cangelosi & Schlesinger, 2015). Nevertheless, the ethical implications of using social robots with children always have to be considered carefully, especially the long-term effects that might appear when social robots are used as learning assistants for toddlers.

5.3 Sex Robots in Daily Life and Intervention

Social robots will eventually enter the most intimate sphere of human life: our sexual relationships. Commenting on the newest developments in robotic research, David Levy, co-author of the book chapter “Love and Sex with Robots” (2017), predicted that by the year 2050, marriages between social robots and humans will have become normal (Cheok, Levy, Karunanayaka, & Morisawa, 2017). In recent years, both the surface material of sex dolls and the programming of interactive humanoid robots have improved, resulting in high-quality interactive sex robots. For instance, Harmony, an android robot produced by Realbotix™, is programmed to be a perfect companion, according to the company that develops it. Crucially, the robot can be customized to match the preferences of its user, and it is able to hold long-term conversations with the user on different topics. Its visual appearance, its voice, and its character can all be adapted to the preferences of the client. These so-called companionship robots can be configured with several different personalities and with selectable skin, eye, and hair color, as well as nationality; they can “feel” touch and react to it, and they can hear and talk. Thus, relationships between humans and (sex) robots will increasingly develop into affectionate relationships, which is why Cheok et al. (2017) made their prediction about marriages between humans and social robots. On the other hand, we understand that such robots cannot truly love us or have true emotions.

Little is known about public opinion regarding sex robots and the limits of their possible use. In one of the few existing surveys, Scheutz and Arnold (2016) asked US participants how they imagine the form and abilities of sex robots and whether they would consider using them. Most of the suggested forms (such as those of friends, one’s deceased spouse, a family member, an animal, or a fantasy creature) were rated as appropriate, except for child-like forms, which might indicate pedophilic interests and thus mark a clear moral limit. Most of the suggested uses of companionship robots (such as for cheating on a partner, for sex education, for sex offenders, or for disabled people) were considered acceptable, especially the proposition of using sex robots instead of human prostitutes. On this specific question, the most substantial gender differences were found, with men being more open than women to the use of sex robots in general. What both men and women agreed upon is that sex robots could be used (a) to maintain a relationship between people, (b) to assist in the training of intimate behaviors or in preventing sexual harassment, and (c) in places that are extremely isolated from the rest of society, such as prisons and submarines. These findings indicate that sex robots are mostly seen as mediators in human relationships and rarely as substitutes for them.

Multiple ethical questions arise in connection with the use of sex robots. Will they level human sexual relationships and perhaps even make them unnecessary when procreation is not desired, since the robot is always ready to talk or play, while human relationships require effort? Will they liberate sex workers, or will they rather discriminate against women by focusing on women as sex objects? Will they enrich therapy options for sexual crimes or sexual dysfunctions? Will they make human life richer or lonelier? According to a recent report by the Foundation for Responsible Robotics (FRR), all these outcomes are currently possible (Responsible Robotics, 2018). Widespread loneliness and the associated mental and physical health problems are among the most feared outcomes of the use of sex robots.

Nevertheless, society may gain unexpected benefits from employing sex robots. In recent years, public interest has grown in possible future uses of sex robots in the prevention of sexual crime and as assistants for disabled people. Empirical research is urgently needed in this area to clarify the added value of such therapies. The expected effects are debated: on the one hand, it is claimed that sex robots may ease pathological sexual desires; on the other hand, they may remove current boundaries between social robots and humans and thereby reinforce inappropriate sexual practices. According to surveys conducted in Germany (Ortland, 2016), Italy (Gammino, Faccio, & Cipolletta, 2016), and Sweden (Bahner, 2012), caretakers of disabled people in special care homes face serious challenges regarding the sexual drive of the residents. One option for addressing this issue is to organize an official sexual assistance service. Such services already exist in many countries for mentally and physically disabled people who have difficulties exercising their right to intimate relationships and tenderness as well as sexual autonomy. Studies have shown that this service is well accepted, both by the disabled people and by their families (Ortland, 2017). The human sexual assistants do not perceive themselves as sex workers but rather as mentors in intimate matters; sexual intercourse as such takes place very rarely. Crucially, this service is provided by real humans and not by robots. In the light of this recent development, it is conceivable that sex robots could be used as such sexual assistants. On the other hand, it has been argued that disabled and elderly people might be misled regarding the emotional involvement of such robotic sex workers, eventually treating them as humans and expecting “true” empathy and feedback. In a recent and pioneering Swedish TV series named “Real Humans,” these issues were illustrated by showing an alternative reality in which android robots are part of human social life.

To date, there has been little agreement on possible applications of sex robots in health care. Debate continues about the ethical issues and the methodological background. There is abundant room for further progress in determining guidelines for the proper use of sex robots.

6 Conclusion

Research on social robots has become an important field for the development of transformative technologies. Technology still has to improve considerably before we can build robots that become part of our daily lives, not only in industry but also in assisting with daily tasks and even in social domains. In the social domain, humanoid robots can assist us if they possess the cues we expect from our interaction partners and provide appropriate social as well as sensory and motor contingencies. This chapter summarized the types of social robots that are already used in areas of daily life such as elderly care, therapy for children, and sexual intervention.

The pet-like or human-like appearance of social robots can cause an initial feeling of alienation and eeriness, both consciously and unconsciously. We summarized the effects of robot appearance on human perception and the reasons for the feelings humans have toward social robots. Ethical implications always have to be taken into account when interacting with social robots, in particular when they become interaction partners and teachers for children. Future studies therefore have to assess whether an ever more human-like appearance or rather an improved social functionality of robots can best avoid “uncanny valley” sensations in their users. All these avenues of current study will eventually converge in the development of humanoid companions.

References

Abildgaard, J. R., & Scharfe, H. (2012). A geminoid as lecturer. In S. S. Ge, O. Khatib, J. J. Cabibihan, R. Simmons, & M. A. Williams (Eds.), Social robotics. ICSR 2012. Lecture notes in computer science (Vol. 7621). Berlin, Germany: Springer. https://doi.org/10.1007/978-3-642-34103-8_41

Alaiad, A., & Zhou, L. (2014). The determinants of home healthcare robots adoption: An empirical investigation. International Journal of Medical Informatics, 83(11), 825–840.

Allport, G. W. (1954). The nature of prejudice.

Angelucci, A., Bastioni, M., Graziani, P., & Rossi, M. G. (2014). A philosophical look at the uncanny valley. In J. Seibt, R. Hakli, & M. Nørskov (Eds.), Sociable robots and the future of social relations: Proceedings of robophilosophy (Vol. 273, pp. 165–169). Amsterdam, Netherlands: IOS Press. https://doi.org/10.3233/978-1-61499-480-0-165

Appel, M., Weber, S., Krause, S., & Mara, M. (2016, March). On the eeriness of service robots with emotional capabilities. In The Eleventh ACM/IEEE International Conference on Human Robot Interaction (pp. 411–412). IEEE Press. https://doi.org/10.1109/HRI.2016.7451781

Bahner, J. (2012). Legal rights or simply wishes? The struggle for sexual recognition of people with physical disabilities using personal assistance in Sweden. Sexuality and Disability, 30(3), 337–356.

Becker-Asano, C., & Ishiguro, H. (2011, April). Evaluating facial displays of emotion for the android robot Geminoid F. In 2011 IEEE Workshop on Affective Computational Intelligence (WACI) (pp. 1–8). IEEE. https://doi.org/10.1109/WACI.2011.5953147

Becker-Asano, C., Ogawa, K., Nishio, S., & Ishiguro, H. (2010). Exploring the uncanny valley with Geminoid HI-1 in a real-world application. In Proceedings of IADIS International Conference Interfaces and Human Computer Interaction (pp. 121–128).

Bharatharaj, J., Huang, L., Mohan, R., Al-Jumaily, A., & Krägeloh, C. (2017). Robot-assisted therapy for learning and social interaction of children with autism spectrum disorder. Robotics, 6(1), 4. https://doi.org/10.3390/robotics6010004

Bohlmann, U. M., & Bürger, M. J. F. (2018). Anthropomorphism in the search for extra-terrestrial intelligence—The limits of cognition? Acta Astronautica, 143, 163–168. https://doi.org/10.1016/j.actaastro.2017.11.033

Breazeal, C. (2002). Designing sociable machines. In Socially intelligent agents (pp. 149–156). Boston, MA: Springer.

Brenton, H., Gillies, M., Ballin, D., & Chatting, D. (2005). The uncanny valley: Does it exist? Wired, 730(1978), 2–5. Retrieved from http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.160.6952&rep=rep1&type=pdf

Brink, K. A., Gray, K., & Wellman, H. M. (2017). Creepiness creeps in: Uncanny valley feelings are acquired in childhood. Child Development. https://doi.org/10.1111/cdev.12999

Broadbent, E. (2017). Interactions with robots: The truths we reveal about ourselves. Annual Review of Psychology, 68(1), 627–652. https://doi.org/10.1146/annurev-psych-010416-043958

Burleigh, T. J., & Schoenherr, J. R. (2015). A reappraisal of the uncanny valley: Categorical perception or frequency-based sensitization? Frontiers in Psychology, 5, 1488. https://doi.org/10.3389/fpsyg.2014.01488

Burrows, E. (2011). The birth of a robot race. Engineering & Technology, 6(10), 46–48.

Cabibihan, J. J., Javed, H., Ang, M., & Aljunied, S. M. (2013). Why robots? A survey on the roles and benefits of social robots in the therapy of children with autism. International Journal of Social Robotics, 5(4), 593–618. https://doi.org/10.1007/s12369-013-0202-2

Cangelosi, A., & Schlesinger, M. (2015). Developmental robotics: From babies to robots. Cambridge, MA: MIT Press.

Cheetham, M., Wu, L., Pauli, P., & Jancke, L. (2015). Arousal, valence, and the uncanny valley: Psychophysiological and self-report findings. Frontiers in Psychology, 6, 1–15. https://doi.org/10.3389/fpsyg.2015.00981

Cheok, A. D., Levy, D., Karunanayaka, K., & Morisawa, Y. (2017). Love and sex with robots. In R. Nakatsu, M. Rauterberg, & P. Ciancarini (Eds.), Handbook of digital games and entertainment technologies (pp. 833–858). Singapore: Springer.

Chong, T. T. J., Cunnington, R., Williams, M. A., Kanwisher, N., & Mattingley, J. B. (2008). fMRI adaptation reveals mirror neurons in human inferior parietal cortex. Current Biology, 18(20), 1576–1580. https://doi.org/10.1016/j.cub.2008.08.068

Collins, S., Ruina, A., Tedrake, R., & Wisse, M. (2005). Efficient bipedal robots based on passive-dynamics walkers. Science, 307(5712), 1082–1085. https://doi.org/10.1126/science.1107799

D’Cruz, G. (2014). 6 things I know about Geminoid F, or what I think about when I think about android theatre. Australasian Drama Studies, (65), 272.

Dautenhahn, K., & Werry, I. (2004). Towards interactive robots in autism therapy: Background, motivation and challenges. Pragmatics & Cognition, 12(1), 1–35. https://doi.org/10.1075/pc.12.1.03dau

Duffy, B. R. (2003). Anthropomorphism and the social robot. Robotics and Autonomous Systems, 42(3–4), 177–190. https://doi.org/10.1016/S0921-8890(02)00374-3

Epley, N., Waytz, A., & Cacioppo, J. T. (2007). On seeing human: A three-factor theory of anthropomorphism. Psychological Review, 114(4), 864–886. https://doi.org/10.1037/0033-295X.114.4.864

Ferrey, A. E., Burleigh, T. J., & Fenske, M. J. (2015). Stimulus-category competition, inhibition, and affective devaluation: A novel account of the uncanny valley. Frontiers in Psychology, 6, 1–15. https://doi.org/10.3389/fpsyg.2015.00249

Franchi, A. M., Sernicola, L., & Gini, G. (2016). Linguistic primitives: A new model for language development in robotics. In Lecture notes in computer science (including subseries lecture notes in artificial intelligence and lecture notes in bioinformatics) (pp. 207–218). Cham, Switzerland: Springer. https://doi.org/10.1007/978-3-319-43488-9_19

Freud, S. (1947). Das Unheimliche (1919). In Gesammelte Werke XII. London, UK: Imago.

Gammino, G. R., Faccio, E., & Cipolletta, S. (2016). Sexual assistance in Italy: An explorative study on the opinions of people with disabilities and would-be assistants. Sexuality and Disability, 34(2), 157–170.

Gray, K., & Wegner, D. M. (2012). Feeling robots and human zombies: Mind perception and the uncanny valley. Cognition, 125(1), 125–130. https://doi.org/10.1016/j.cognition.2012.06.007

Hanson, D. (2005). Expanding the aesthetic possibilities for humanoid robots. In IEEE-RAS International Conference on Humanoid Robots (pp. 24–31). Retrieved from http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.472.2518&rep=rep1&type=pdf

Haslam, N. (2006). Dehumanization: An integrative review. Personality and Social Psychology Review, 10(3), 214–234. https://doi.org/10.1207/s15327957pspr1003

Haslam, N., & Loughnan, S. (2014). Dehumanization and infrahumanization. Annual Review of Psychology, 65(1), 399–423. https://doi.org/10.1146/annurev-psych-010213-115045

Hegel, F., Krach, S., Kircher, T., Wrede, B., & Sagerer, G. (2008). Understanding social robots: A user study on anthropomorphism. In RO-MAN 2008—The 17th IEEE International Symposium on Robot and Human Interactive Communication (January 2016) (pp. 574–579). https://doi.org/10.1109/ROMAN.2008.4600728

Ho, C. C., & MacDorman, K. F. (2017). Measuring the uncanny valley effect. International Journal of Social Robotics, 9(1), 129–139. https://doi.org/10.1007/s12369-016-0380-9

Hsu, J. (2012). Robotics’ uncanny valley gets new translation. Livescience.

Huijnen, C. A. G. J., Lexis, M. A. S., Jansens, R., & de Witte, L. P. (2017). How to implement robots in interventions for children with autism? A co-creation study involving people with autism, parents and professionals. Journal of Autism and Developmental Disorders, 47(10), 3079–3096. https://doi.org/10.1007/s10803-017-3235-9

Huskens, B., Palmen, A., Van der Werff, M., Lourens, T., & Barakova, E. (2015). Improving collaborative play between children with autism spectrum disorders and their siblings: The effectiveness of a robot-mediated intervention based on Lego® therapy. Journal of Autism and Developmental Disorders, 45(11), 3746–3755. https://doi.org/10.1007/s10803-014-2326-0

Huskens, B., Verschuur, R., Gillesen, J., Didden, R., & Barakova, E. (2013). Promoting question-asking in school-aged children with autism spectrum disorders: Effectiveness of a robot intervention compared to a human-trainer intervention. Developmental Neurorehabilitation, 16(5), 345–356. https://doi.org/10.3109/17518423.2012.739212

Jentsch, E. (1997). On the psychology of the uncanny (1906). Angelaki, 2(1), 7–16.

Jones, B. C., Little, A. C., Burt, D. M., & Perrett, D. I. (2004). When facial attractiveness is only skin deep. Perception, 33(5), 569–576. https://doi.org/10.1068/p3463

Kachouie, R., Sedighadeli, S., Khosla, R., & Chu, M. T. (2014). Socially assistive robots in elderly care: A mixed-method systematic literature review. International Journal of Human-Computer Interaction, 30(5), 369–393.

Kanda, T., Sato, R., Saiwaki, N., & Ishiguro, H. (2007). A two-month field trial in an elementary school for long-term human-robot interaction. IEEE Transactions on Robotics, 23, 962–971. https://doi.org/10.1109/TRO.2007.904904

Kätsyri, J., Förger, K., Mäkäräinen, M., & Takala, T. (2015). A review of empirical evidence on different uncanny valley hypotheses: Support for perceptual mismatch as one road to the valley of eeriness. Frontiers in Psychology, 6, 1–16. https://doi.org/10.3389/fpsyg.2015.00390

Kilner, J. M., Neal, A., Weiskopf, N., Friston, K. J., & Frith, C. D. (2009). Evidence of mirror neurons in human inferior frontal gyrus. The Journal of Neuroscience, 29(32), 10153–10159. https://doi.org/10.1523/JNEUROSCI.2668-09.2009

Koschate, M., Potter, R., Bremner, P., & Levine, M. (2016, April). Overcoming the uncanny valley: Displays of emotions reduce the uncanniness of humanlike robots. In ACM/IEEE International Conference on Human-Robot Interaction (Vol. 2016, pp. 359–365). https://doi.org/10.1109/HRI.2016.7451773

Kozima, H., Nakagawa, C., & Yasuda, Y. (2005). Interactive robots for communicative-care. A case study in autism therapy. Resource document. Retrieved June 24, 2018, from http://ieeexplore.ieee.org/xpl/mostRecentIssue.jsp?punumber=10132

Kuhnert, B., Ragni, M., & Lindner, F. (2017, August). The gap between human’s attitude towards robots in general and human’s expectation of an ideal everyday life robot. In 2017 26th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN) (pp. 1102–1107). IEEE.

Lewkowicz, D. J., & Ghazanfar, A. A. (2012). The development of the uncanny valley in infants. Developmental Psychobiology, 54(2), 124–132. https://doi.org/10.1002/dev.20583

Liarokapis, M. V., Artemiadis, P. K., & Kyriakopoulos, K. J. (2012). Functional anthropomorphism for human to robot motion mapping. In Proceedings - IEEE International Workshop on Robot and Human Interactive Communication (pp. 31–36). https://doi.org/10.1109/ROMAN.2012.6343727

MacDorman, K. (2005). Androids as an experimental apparatus: Why is there an uncanny valley and can we exploit it. In CogSci-2005 Workshop: Toward Social Mechanisms of Android Science (Vol. 3, pp. 106–118). Retrieved from https://www.lri.fr/~sebag/Slides/uncanny.pdf

MacDorman, K. F., & Chattopadhyay, D. (2016). Reducing consistency in human realism increases the uncanny valley effect; increasing category uncertainty does not. Cognition, 146, 190–205. https://doi.org/10.1016/j.cognition.2015.09.019

MacDorman, K. F., & Entezari, S. O. (2015). Individual differences predict sensitivity to the uncanny valley. Interaction Studies, 16(2), 141–172. https://doi.org/10.1075/is.16.2.01mac

MacDorman, K. F., & Ishiguro, H. (2006). The uncanny advantage of using androids in cognitive and social science research. Interaction Studies, 7(3), 297–337. https://doi.org/10.1075/is.7.3.03mac

Mathur, M. B., & Reichling, D. B. (2016). Navigating a social world with robot partners: A quantitative cartography of the uncanny valley. Cognition, 146, 22–32. https://doi.org/10.1016/j.cognition.2015.09.008

Matsuda, Y.-T., Okamoto, Y., Ida, M., Okanoya, K., & Myowa-Yamakoshi, M. (2012). Infants prefer the faces of strangers or mothers to morphed faces: An uncanny valley between social novelty and familiarity. Biology Letters, 8(5), 725–728. https://doi.org/10.1098/rsbl.2012.0346

Minato, T., Yoshikawa, Y., Noda, T., Ikemoto, S., Ishiguro, H., & Asada, M. (2007, November). CB2: A child robot with biomimetic body for cognitive developmental robotics. In 2007 7th IEEE-RAS International Conference on Humanoid Robots (pp. 557–562). IEEE. https://doi.org/10.1109/ICHR.2007.4813926

Minsky, M. (1980). Telepresence. Omni, 2(9), 44–52.

Mitchell, W. J., Szerszen, K. A., Sr., Lu, A. S., Schermerhorn, P. W., Scheutz, M., & MacDorman, K. F. (2011). A mismatch in the human realism of face and voice produces an uncanny valley. i-Perception, 2(1), 10–12. https://doi.org/10.1068/i0415

Moosa, M. M., & Minhaz Ud-Dean, S. M. (2010). Danger avoidance: An evolutionary explanation of the uncanny valley. Biological Theory, 5, 12–14. https://doi.org/10.1162/BIOT_a_00016

Mori, M. (1970). The uncanny valley. Energy, 7(4), 33–35. https://doi.org/10.1109/MRA.2012.2192811

Movellan, J. R., Tanaka, F., Fortenberry, B., & Aisaka, K. (2005). The RUBI/QRIO project: Origins, principles, and first steps. In The 4th International Conference on Development and Learning (pp. 80–86). IEEE. https://doi.org/10.1109/DEVLRN.2005.1490948

Murphy, G. L. (2002). The big book of concepts. Cambridge, MA: MIT Press.

Nishio, S., Ishiguro, H., & Hagita, N. (2007). Geminoid: Teleoperated android of an existing person. In A. Carlos de Pina Filho (Ed.), Humanoid robots: New developments (pp. 343–352). London, UK: InTech. Retrieved from http://www.intechopen.com/books/humanoid_robots_new_developments/geminoid__teleoperated_android_of_an_existing_person

Nomura, T., Suzuki, T., Kanda, T., & Kato, K. (2006). Measurement of negative attitudes toward robots. Interaction Studies, 7(3), 437–454.

Nomura, T. T., Syrdal, D. S., & Dautenhahn, K. (2015). Differences on social acceptance of humanoid robots between Japan and the UK. In Proceedings of the 4th International Symposium on New Frontiers in Human-Robot Interaction. The Society for the Study of Artificial Intelligence and the Simulation of Behaviour (AISB).

Ogawa, K., Bartneck, C., Sakamoto, D., Kanda, T., Ono, T., & Ishiguro, H. (2018). Can an android persuade you? In Geminoid studies: Science and technologies for humanlike teleoperated androids (pp. 235–247). Singapore: Springer.

Ortland, B. (2016). Sexuelle Selbstbestimmung von Menschen mit Behinderung: Grundlagen und Konzepte für die Eingliederungshilfe. Stuttgart, Germany: Kohlhammer Verlag.

Ortland, B. (2017). Realisierungs(un)möglichkeiten sexueller Selbstbestimmung bei Menschen mit Komplexer Behinderung. Schwere Behinderung & Inklusion: Facetten einer nicht ausgrenzenden Pädagogik, 2, 111.

Osada, J., Ohnaka, S., & Sato, M. (2006). The scenario and design process of childcare robot, PaPeRo. In Proceedings of the 2006 ACM SIGCHI International Conference on Advances in Computer Entertainment Technology - ACE ’06 (p. 80). https://doi.org/10.1145/1178823.1178917

Perrett, D. (2010). In your face. The new science of human attraction. Basingstoke, UK: Palgrave Macmillan.

Pollack, M. E., Brown, L., Colbry, D., Orosz, C., Peintner, B., Ramakrishnan, S., … Thrun, S. (2002, August). Pearl: A mobile robotic assistant for the elderly. In AAAI Workshop on Automation as Eldercare (Vol. 2002, pp. 85–91). Retrieved from http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.16.6947&rep=rep1&type=pdf

Pollick, F. E. (2010). In search of the uncanny valley. In Lecture Notes of the Institute for Computer Sciences, Social-Informatics and Telecommunications Engineering (Vol. 40 LNICST, pp. 69–78). https://doi.org/10.1007/978-3-642-12630-7_8

Pulvermüller, F., Garagnani, M., & Wennekers, T. (2014). Thinking in circuits: Toward neurobiological explanation in cognitive neuroscience. Biological Cybernetics, 108(5), 573–593. https://doi.org/10.1007/s00422-014-0603-9

Responsible Robotics. (2018). FRR report: Our sexual future with robots. Responsible Robotics. [online]. Retrieved May 29, 2018, from https://responsiblerobotics.org/2017/07/05/frr-report-our-sexual-future-with-robots/

Rhodes, G., Yoshikawa, S., Clark, A., Kieran, L., McKay, R., & Akamatsu, S. (2001). Attractiveness of facial averageness and symmetry in non-western cultures: In search of biologically based standards of beauty. Perception, 30(5), 611–625. https://doi.org/10.1068/p3123

Richer, J. M., & Coss, R. G. (1976). Gaze aversion in autistic and normal children. Acta Psychiatrica Scandinavica, 53(3), 193–210. https://doi.org/10.1111/j.1600-0447.1976.tb00074.x

Ricks, D. J., & Colton, M. B. (2010). Trends and considerations in robot-assisted autism therapy. In Proceedings - IEEE International Conference on Robotics and Automation (June) (pp. 4354–4359). https://doi.org/10.1109/ROBOT.2010.5509327

Rizzolatti, G., Camarda, R., Fogassi, L., Gentilucci, M., Luppino, G., & Matelli, M. (1988). Functional organization of inferior area 6 in the macaque monkey - II. Area F5 and the control of distal movements. Experimental Brain Research, 71(3), 491–507. https://doi.org/10.1007/BF00248742

Rizzolatti, G., Fadiga, L., Fogassi, L., & Gallese, V. (1999). Resonance behaviors and mirror neurons. Archives Italiennes de Biologie, 137(2), 85–100. https://doi.org/10.4449/AIB.V137I2.575

Robins, B., Dautenhahn, K., te Boekhorst, R., & Billard, A. (2004). Robotic assistants in therapy and education of children with autism: Can a small humanoid robot help encourage social interaction skills? Universal Access in the Information Society, 4(2), 105–120. https://doi.org/10.1007/s10209-005-0116-3

Sabelli, A. M., & Kanda, T. (2016). Robovie as a mascot: A qualitative study for long-term presence of robots in a shopping mall. International Journal of Social Robotics, 8(2), 211–221.

Sabelli, A. M., Kanda, T., & Hagita, N. (2011, March). A conversational robot in an elderly care center: An ethnographic study. In 2011 6th ACM/IEEE International Conference on Human-Robot Interaction (HRI) (pp. 37–44). IEEE.

Sasaki, K., Ihaya, K., & Yamada, Y. (2017). Avoidance of novelty contributes to the uncanny valley. Frontiers in Psychology, 8, 1792. https://doi.org/10.3389/fpsyg.2017.01792

Sawada, H., Kitani, M., & Hayashi, Y. (2008). A robotic voice simulator and the interactive training for hearing-impaired people. BioMed Research International, 2008, 768232. https://doi.org/10.1155/2008/768232

Saygin, A. P., Chaminade, T., Ishiguro, H., Driver, J., & Frith, C. (2012). The thing that should not be: Predictive coding and the uncanny valley in perceiving human and humanoid robot actions. Social Cognitive and Affective Neuroscience, 7(4), 413–422. https://doi.org/10.1093/scan/nsr025

Scheutz, M., & Arnold, T. (2016, March). Are we ready for sex robots? In The Eleventh ACM/IEEE International Conference on Human Robot Interaction (pp. 351–358). IEEE Press. Retrieved from https://hrilab.tufts.edu/publications/scheutzarnold16hri.pdf

Schindler, S., Zell, E., Botsch, M., & Kissler, J. (2017). Differential effects of face-realism and emotion on event-related brain potentials and their implications for the uncanny valley theory. Scientific Reports, 7, 45003. https://doi.org/10.1038/srep45003

Schoenherr, J. R., & Lacroix, G. (2014). Overconfidence in nonlinearly separable category structures as evidence for dissociable category learning systems. Canadian Journal of Experimental Psychology, 68(4), 264. https://doi.org/10.1126/science.1164582

Scopelliti, M., Giuliani, M. V., & Fornara, F. (2005). Robots in a domestic setting: A psychological approach. Universal Access in the Information Society, 4(2), 146–155.

Sequeira, J., Lima, P., Saffiotti, A., Gonzalez-Pacheco, V., & Salichs, M. A. (2013). Monarch: Multi-robot cognitive systems operating in hospitals. In ICRA workshop Crossing the Reality Gap from Single to Multi- to Many Robot Systems. Karlsruhe, Germany.

Sharkey, A., & Sharkey, N. (2012). Granny and the robots: Ethical issues in robot care for the elderly. Ethics and Information Technology, 14(1), 27–40. https://doi.org/10.1007/s10676-010-9234-6

Shim, J., Arkin, R., & Pettinatti, M. (2017, May). An intervening ethical governor for a robot mediator in patient-caregiver relationship: Implementation and evaluation. In 2017 IEEE International Conference on Robotics and Automation (ICRA) (pp. 2936–2942). IEEE.

Shin, N., & Kim, S. (2007, August). Learning about, from, and with robots: Students’ perspectives. In The 16th IEEE International Symposium on Robot and Human Interactive Communication, 2007. RO-MAN 2007 (pp. 1040–1045). IEEE.

Steckenfinger, S. A., & Ghazanfar, A. A. (2009). Monkey visual behavior falls into the uncanny valley. Proceedings of the National Academy of Sciences of the United States of America, 106(43), 18362–18366. https://doi.org/10.1073/pnas.0910063106

Stein, J. P., & Ohler, P. (2017). Venturing into the uncanny valley of mind—The influence of mind attribution on the acceptance of human-like characters in a virtual reality setting. Cognition, 160, 43–50. https://doi.org/10.1016/j.cognition.2016.12.010

Strait, M., Vujovic, L., Floerke, V., Scheutz, M., & Urry, H. (2015). Too much humanness for human-robot interaction: Exposure to highly humanlike robots elicits aversive responding in observers. In Proceedings of the ACM CHI’15 Conference on Human Factors in Computing Systems (Vol. 1, pp. 3593–3602). https://doi.org/10.1145/2702123.2702415

Svendsen, M. (1934). Children’s imaginary companions. Archives of Neurology and Psychiatry, 32(5), 985–999. https://doi.org/10.1001/archneurpsyc.1934.02250110073006

Tanaka, F. (2007). Care-receiving robot as a tool of teachers in child education. Interaction Studies, 11(2), 263–268. https://doi.org/10.1075/is.11.2.14tan

Tanaka, F., Cicourel, A., & Movellan, J. R. (2007). Socialization between toddlers and robots at an early childhood education center. Proceedings of the National Academy of Sciences, 104(46), 17954–17958. https://doi.org/10.1073/pnas.0707769104

Tanaka, F., & Kimura, T. (2009, September). The use of robots in early education: A scenario based on ethical consideration. In The 18th IEEE International Symposium on Robot and Human Interactive Communication, 2009. RO-MAN 2009 (pp. 558–560). IEEE. https://doi.org/10.1109/ROMAN.2009.5326227

Tanaka, J. W., & Sung, A. (2016). The “eye avoidance” hypothesis of autism face processing. Journal of Autism and Developmental Disorders, 46(5), 1538–1552. https://doi.org/10.1007/s10803-013-1976-7

Tschöpe, N., Reiser, J. E., & Oehl, M. (2017, March). Exploring the uncanny valley effect in social robotics. In Proceedings of the Companion of the 2017 ACM/IEEE International Conference on Human-Robot Interaction (pp. 307–308). ACM. https://doi.org/10.1145/3029798.3038319

Urgen, B. A., Li, A. X., Berka, C., Kutas, M., Ishiguro, H., & Saygin, A. P. (2015). Predictive coding and the uncanny valley hypothesis: Evidence from electrical brain activity. Cognition: A Bridge between Robotics and Interaction, 15–21. https://doi.org/10.1109/TAMD.2014.2312399

Wang, S., Lilienfeld, S. O., & Rochat, P. (2015). The uncanny valley: Existence and explanations. Review of General Psychology, 19(4), 393–407. https://doi.org/10.1037/gpr0000056

Whorf, B. L. (1956). Language, thought, and reality: Selected writings of…. (edited by John B. Carroll.). Oxford, UK: Technology Press of MIT.

Wilson, A. D., & Golonka, S. (2013). Embodied cognition is not what you think it is. Frontiers in Psychology, 4, 58. https://doi.org/10.3389/fpsyg.2013.00058

Wu, Y. H., Wrobel, J., Cornuet, M., Kerhervé, H., Damnée, S., & Rigaud, A. S. (2014). Acceptance of an assistive robot in older adults: A mixed-method study of human–robot interaction over a 1-month period in the Living Lab setting. Clinical Interventions in Aging, 9, 801.

Yamada, Y., Kawabe, T., & Ihaya, K. (2013). Categorization difficulty is associated with negative evaluation in the “uncanny valley” phenomenon. Japanese Psychological Research, 55(1), 20–32. https://doi.org/10.1111/j.1468-5884.2012.00538.x


Appendix: A Visual Sample of Select Social Robots

1.1 AIBO

Used by permission of Sony Electronics Inc. All Rights Reserved

1.2 KASPAR

Used with permission from Adaptive Systems Research Group, University of Hertfordshire, UK

1.3 iCAT

Used with permission from Royal Philips N.V./Philips Company Archives

1.4 GENIBO

Used with permission from DST Robot

1.5 Keepon

Used with permission from BeatBots LLC (Hideki Kozima & Marek Michalowski). Kozima, H., Michalowski, M. P. & Nakagawa, C. Int J of Soc Robotics (2009) 1: 3. https://doi.org/10.1007/s12369-008-0009-8

1.6 Kismet

© Sam Ogden

1.7 Little Casper

Used with permission from The MOnarCH Consortium, Deliverable D2.2.1, December 2014

1.8 Paro

Used with permission from AIST, Japan

1.9 Harmony

Used with permission from Realbotix™, USA

1.10 Robovie R3 Robot

Uluer, P., Akalın, N. & Köse, H. Int J of Soc Robotics (2015) 7: 571. https://doi.org/10.1007/s12369-015-0307-x

1.11 Robot-Era Robotic Platforms for Elderly People

The three Robot-Era robotic platforms: Outdoor (left), Condominium (center), and Domestic (right). Di Nuovo, A., Broz, F., Wang, N. et al. Intel Serv Robotics (2018) 11: 109. https://doi.org/10.1007/s11370-017-0237-6

1.12 Philip K. Dick

Used with permission from Hanson Robotics Limited

1.13 Sophia

Used with permission from Hanson Robotics Limited

1.14 Telenoid

Telenoid™ has been developed by Osaka University and Hiroshi Ishiguro Laboratories, Advanced Telecommunications Research Institute International (ATR)

1.15 Geminoid HI-2

Geminoid™ HI-2 has been developed by Hiroshi Ishiguro Laboratories, Advanced Telecommunications Research Institute International (ATR)

1.16 Geminoid F

Geminoid™ F has been developed by Osaka University and Hiroshi Ishiguro Laboratories, Advanced Telecommunications Research Institute International (ATR)

Copyright information

© 2019 Springer Nature Switzerland AG

About this chapter

Cite this chapter

Mende, M.A., Fischer, M.H., Kühne, K. (2019). The Use of Social Robots and the Uncanny Valley Phenomenon. In: Zhou, Y., Fischer, M.H. (eds) AI Love You. Springer, Cham. https://doi.org/10.1007/978-3-030-19734-6_3

DOI: https://doi.org/10.1007/978-3-030-19734-6_3

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-19733-9

Online ISBN: 978-3-030-19734-6

eBook Packages: Behavioral Science and Psychology, Behavioral Science and Psychology (R0)