Abstract

In the last two decades, the IR community has seen numerous advances in top-k query processing and inverted index compression techniques. While newly proposed methods are typically compared against several baselines, these evaluations are often very limited, and we feel that there is no clear overall picture on the best choices of algorithms and compression methods. In this paper, we attempt to address this issue by evaluating a number of state-of-the-art index compression methods and safe disjunctive DAAT query processing algorithms. Our goal is to understand how much index compression performance impacts overall query processing speed, how the choice of query processing algorithm depends on the compression method used, and how performance is impacted by document reordering techniques and the number of results returned, keeping in mind that current search engines typically use sets of hundreds or thousands of candidates for further reranking.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Over the past few decades, the IR community have been making a continuous effort to improve the efficiency of search in large collections of documents. Advances have been made in virtually all aspects of text retrieval, including index compression and top-k query processing. Although a multitude of authors have reported experimental results, comparing them across different publications poses a challenge due to varying data sets, parameters, evaluation metrics, and experimental setups. We aim to address this issue by providing an extensive experimental comparison across many index compression techniques and several query processing algorithms. Our comparison includes many recent methods, and thus provides a useful snapshot of the current state of the art in this area.

The most common structure used for text retrieval is an inverted index. For each term in a parsed collection, it stores a list of numerical IDs of documents containing this term, typically along with additional data, such as term frequencies or precomputed quantized impact scores. We call all values associated with a (term, document)-pair a posting. Postings are typically sorted in order of increasing document IDs, although there are other index organizations. We assume document-sorted posting lists throughout this paper.

The first problem we encounter is efficient index representation. In particular, compression of posting lists is of utmost importance, since they account for much of the data size and access costs. In practice, the problem we must solve is efficient encoding of non-negative integers, such as document IDs or their gaps, frequencies, positions, or quantized scores. Some encoding schemes, such as Golomb [23] or Binary Interpolative [34], can be very space-efficient but slow to decode. Other methods achieve very fast decoding while sacrificing compression ratio. In recent years, a significant boost in encoding efficiency has been achieved due to application of SIMD (Single Instruction, Multiple Data) instructions available on many modern CPUs [26, 27, 36, 44].

Likewise, the choice of a retrieval algorithm is crucial to query efficiency. Due to large sizes of most search collections, retrieving all potential matches is infeasible and undesirable. In practice, only the top k highest ranked documents are returned. Ranking methods can be grouped into fast and simple term-wise scoring methods [39, 51], and more complex rankers such as the Sequential Dependence Model (SDM) [31] or learning to rank methods [28, 49]. For term-wise techniques, the score of a document with respect to a query is simply the sum of the partial scores, also called impact scores, of the document with respect to each term. Complex rankers give up this term independence assumption. They are more accurate, but also much more expensive, as they require the evaluation of fairly complex ranking functions on up to hundreds of features that need to be fetched or generated from index and document data. Thus, it would be infeasible to evaluate such complex rankers on large numbers of documents.

To combine the speed of term-wise scoring with the accuracy of the complex rankers, a cascade ranking architecture is commonly deployed: First, a fast ranker is used to obtain \(k_c > k\) candidate results for a query; then the \(k_c\) candidates are reranked by a slower complex ranker to retrieve the final top k documents. In this paper, we address the first problem, also known as candidate generation, as reranking can be considered a separate, largely independent, problem. In particular, we focus on the performance of different index compression and query processing algorithms for candidate generation. We limit ourselves to safe Document-At-A-Time (DAAT) algorithms for disjunctive top-k queries.

Following the RIGOR Generalizability property [3], we focus on assessing how well technology performs in new contexts. There are four dimensions to our comparison study: index compression method, query processing algorithm, document ordering, and the number k of retrieved candidates. Published work proposing new compression methods or query processing algorithms typically only looks at a small slice of possible configurations, say, a new query processing algorithm compared against others using only one or two compression methods and document orderings, and only on a very limited range of k.

Catena et al. [8] showed the impact of compressing different types of posting information on the space and time efficiency of a search engine. Although they investigate several compression methods, their focus is mostly on different variations of the FOR family. They also limit their query processing comparison to exhaustive DAAT retrieval strategy, while we consider dynamic pruning techniques. On the other hand, they study several aspects not considered here, such as compression of different types of index data. Thus, we feel that our study answers different research questions by exploring many combinations of techniques that have never been reported. It can serve as a guide for choosing the best combinations of techniques in a given setup.

Contributions. We make the following major contributions:

-

1.

We provide experimental results for an extensive range of configurations. We include almost all of the state-of-the-art compression techniques, the most commonly used DAAT query processing approaches, and several document reorderings over a wide range of k. To our knowledge, this is the most extensive recent experimental study of this space of design choices.

-

2.

We combine already established open-source libraries and our own implementations to create a code base that provides means to reproduce the results, and that can also serve as a starting point for future research. We release this code for free and open use by the research community.

2 Outline of Our Methods

We now describe the various methods and settings we explore, organized according to the four dimensions of our study: compression method, query processing algorithm, document ordering, and number of candidates k. We decided not to include impact score quantization, another possible dimension, in this paper. Score quantization raises additional issues and trade-offs that we plan to study in a future extension of this work.

2.1 Index Compression Methods

We include in our study a total of 11 index compression methods that we consider to be a good representation of the current state of the art. For each method, we integrated what we believe to be the fastest available open-source implementation. We now briefly outline these methods.

Variable Byte Encoding. These methods encode each integer as a sequence of bytes. The simplest one is Varint (also known as Varbyte or VByte), which uses 7 bits of each byte to encode the number (or a part of it), and 1 remaining bit to state if the number continues in the next byte. Although compression of Varint is worse than that of older bit-aligned algorithms such as Elias [21], Rice [38], or Golomb [23] coding, it is much faster in memory-resident scenarios [40].

Varint basically stores a unary code for the size (number of bytes used) of each integer, distributed over several bytes. To improve decoding speed, Dean [14] proposed Group Varint, which groups the unary code bits together. One byte is used to store 4 2-bit numbers defining the lengths (in bytes) of the next 4 integers. The following bytes encode these integers.

Recently, several SIMD-based implementations of variable-byte encodings have been shown to be extremely efficient [14, 36, 44]. Stepanov et al. [44] analyzed a family of SIMD-based algorithms, including a SIMD version of Group Varint, and found the fastest to be VarintG8IU: Consecutive numbers are grouped in 8-byte blocks, preceded by a 1-byte descriptor containing unary-encoded lengths (in bytes) of the integers in the block. If the next integer cannot fit in a block, the remaining bytes are unused.

Stream VByte [27] combines the benefits of VarintG8IU and Group Varint. Like Group Varint, it stores four integers per block with a 1-byte descriptor. Thus, blocks have variable lengths, which for Group Varint means that the locations of these descriptors cannot be easily predicted by the CPU. Stream VByte avoids this issue by storing all descriptors sequentially in a different location.

PForDelta. PForDelta [54] encodes a large number of integers (say, 64 or 128) at a time by choosing a k such that most (say, 90%) of the integers can be encoded in k bits. The remaining values, called exceptions, are encoded separately using another method. More precisely, we select b and k such that most values are in the range \([b, b + 2^k - 1]\), and thus can be encoded in k bits by shifting them to the range \([0, 2^k - 1]\). Several methods have been proposed for encoding the exceptions and their locations. One variant, OptPForDelta [50], selects b and k to optimize for space or decoding cost, with most implementations focusing on space. A fast SIMD implementation was proposed in [26].

Elias-Fano. Given a monotonically increasing integer sequence S of size n, such that \(S_{n-1} < u\), we can encode it in binary using \(\lceil \log u\rceil \) bits. Instead of writing them directly, Elias-Fano coding [20, 22] splits each number into two parts, a low part consisting of \(l = \lceil \log \frac{u}{n}\rceil \) right-most bits, and a high part consisting of the remaining \(\lceil \log u\rceil - l\) left-most bits. The low parts are explicitly written in binary for all numbers, in a single stream of bits. The high parts are compressed by writing, in negative-unary form (i.e., with the roles of 0 and 1 reversed), the gaps between the high parts of consecutive numbers. Sequential decoding is done by simultaneously retrieving low and high parts, and concatenating them. Random access requires finding the locations of the i-th 0- or 1-bit within the unary part of the data using an auxiliary succinct data structure. Furthermore, a NextGEQ(x) operation, which returns the smallest element that is greater than or equal to x, can be implemented efficiently. Observe that \(h_x\), the higher bits of x, is used to find the number of elements having higher bits smaller than \(h_x\), denoted as p. Then, a linear scan of \(l_x\), the lower bits of x, can be employed starting from posting p of the lower bits array of the encoded list.

The above version of Elias-Fano coding cannot exploit clustered data distributions for better compression. This is achieved by a modification called Partitioned Elias-Fano [35] that splits the sequence into b blocks, and then uses an optimal choice of the number of bits in the high and low parts for each block. We use this version, which appears to outperform Elias-Fano in most situations.

Binary Interpolative. Similar to Elias-Fano, Binary Interpolative Coding (BIC) [34] directly encodes a monotonically increasing sequence rather than a sequence of gaps. At each step of this recursive algorithm, the middle element m is encoded by a number \(m - l - p\), where l is the lowest value and p is the position of m in the currently encoded sequence. Then we recursively encode the values to the left and right of m. BIC encodings are very space-efficient, particularly on clustered data; however, decoding is relatively slow.

Word-Aligned Methods. Several algorithms including Simple-9 [1], Simple-16 [52], and Simple-8b [2] try to pack as many numbers as possible into one machine word to achieve fast decoding. For instance, Simple-9 divides each 32-bit word into a 4-bit selector and a 28-bit payload. The selector stores one of 9 possible values, indicating how the payload is partitioned into equal-sized bit fields (e.g., 7 4-bit values, or 9 3-bit values). Some of the partitionings leave up to three of the 28 payload bits unused. Later enhancements in [2, 52] optimize the usage of these wasted bits or increase the word size to 64 bits.

Lemire and Boytsov [26] proposed a bit-packing method that uses SIMD instructions. The algorithm, called SIMD-BP128, packs 128 consecutive integers into as few 128-bit words as possible. The 16-byte selectors are stored in groups of 16 to fully utilize 128-bit SIMD reads and writes.

Very recently, Trotman [46] proposed QMX encoding (for Quantities, Multipliers, and eXtractor), later extended by Trotman and Lin [47]. It combines the Simple family and SIMD-BP128 by packing as many integers as possible into one or two 128-bit words. Furthermore, the descriptors are run-length encoded, allowing one selector to describe up to 16 consecutive numbers.

Asymmetric Numeral Systems. Asymmetric Numeral Systems (ANS) [19] are a recent advance in entropy coding that combines the good compression rate of arithmetic coding with a speed comparable to Huffman coding. ANS represents a sequence of symbols as a positive integer x, and depends on the frequencies of symbols from a given alphabet \(\varSigma \). Each string over \(\varSigma \) is assigned a state, which is an integer value, with 0 for the empty string. The state of a string wa is computed recursively using the state of w and the frequency of symbol a. A more detailed description of ANS is beyond the scope of this paper, and we refer to [19, 33]. Very recently, ANS was successfully used, in combination with integer encodings such as VByte and the Simple family, to encode documents and frequencies in inverted indexes [32, 33].

2.2 Query Processing

Next, we describe the top-k disjunctive DAAT query processing algorithms that we study. We limit ourselves to safe methods, guaranteed to return the correct top-k, and select methods that have been extensively studied in recent years.

MaxScore. MaxScore is a family of algorithms first proposed by Turtle and Flood [48], which rely on the maximum impact scores of each term t (denoted as \(\max _t\)). Given a list of query terms \(q = \{t_1, t_2, \dots , t_m\}\) such that \(\max _{t_i} \ge \max _{t_{i+1}}\), at any point of the algorithm, query terms (and associated posting lists) are partitioned into essential \(q_+ = \{t_1, t_2, \dots , t_p\}\) and nonessential \(q_- = \{t_{p+1}, t_{p+2}, \dots , t_m\}\). This partition depends on the current threshold T for a document to enter the top k results, and is defined by the smallest p such that \(\sum _{t \in q_-} \max _t < T\). Thus, no document containing only nonessential terms can make it into the top-k results. We can now perform disjunctive processing over only the essential terms, with lookups into the nonessential lists. More precisely, for a document found in the essential lists, we can compute a score upper bound by adding its current score (from the essential lists and any non-essential lists already accessed) and the \(\max _t\) of those lists not yet accessed; if this bound is lower than T, then no further lookups on this document are needed. There are TAAT and DAAT versions of MaxScore; we only consider the DAAT variant. In this case, we attempt to update p whenever T changes.

WAND. Similar to MaxScore, WAND [6] (Weighted or Weak AND) also utilizes the \(\max _t\) values. During DAAT traversal, query terms and their associated posting lists are kept sorted by their current document IDs. We denote this list of sorted terms at step s of the algorithm as \(q_s = \langle t_1, t_2, \dots , t_m \rangle \). At each step, we first find the pivot term \(t_p\), where p is the lowest value such that \(\sum _{i = 1}^p \max _{t_i} \ge T\). Let \(d_p\) be the current document of \(t_p\). If all \(t_i\) with \(i < p\) point to \(d_p\), then the document is scored and accumulated, and all pointers to \(d_p\) are moved to the next document. Otherwise, no document \(d < d_p\) can make it into the top-k; thus, all posting lists up to \(t_p\) are advanced to the next document \(\ge d_p\), and we resort and again find the pivot. This is repeated until all documents are processed.

Block-Max WAND. One shortcoming of WAND is that it uses maximum impact scores over the entire lists. Thus, if \(\max _t\) is much larger than the other scores in the list, then the impact score upper bound will usually be a significant overestimate. Block-Max WAND (BMW) [18] addresses this by introducing block-wise maximum impact scores.

First, regular WAND pivot selection is used to determine a pivot candidate. The candidate is then verified by shallow pointer movements: The idea is to search for the block in a posting list where the pivot might exist. This operation is fast, as it involves no block decompression. Shallow pointer movement is performed on each \(t_{i<p}\), and the block-wise maximum score is computed. If it is greater than T, then \(t_p\) is the pivot. In this case, if all \(t_{i\le p}\) point at \(d_p\), we perform the required lookups, following by advancing the pointers by one; otherwise, we pick the \(t_{i<p}\) with the largest IDF, and advance its pointer to a document ID \(\ge d_p + 1\). If the block-size maximum score is less than T, we must find another candidate. We consider the documents that are at the current block boundaries for \(t_{i \le p}\), and all the current documents for \(t_{i > p}\). We select the minimum document ID among them and denote it as \(d'\). Finally, we select the \(t_{i<p}\) with the largest IDF, and move its pointer to \(d'\). We repeat the entire process until all terms are processed.

Variable Block-Max WAND. [30] generalizes BMW by allowing variable lengths of blocks. More precisely, it uses a block partitioning such that the sum of differences between maximum scores and individual scores is minimized. This results in better upper bound estimation and more frequent document skipping.

Block-Max MaxScore. The idea of using per-block maximum impact scores can also be applied to MaxScore, leading to the Block-Max MaxScore [9] (BMM) algorithm. Before performing look-ups to nonessential lists, we further refine our maximum score estimate using maximum impacts of the current blocks in nonessential lists, which might lead to fewer fully evaluated documents.

2.3 Document Ordering

It is well known that a good assignment of IDs to documents can significantly improve index compression. Many query processing algorithms are also sensitive to this assignment, with speed-ups of 2 to 3 over random assignment observed for some orderings and algorithms.

The problem of finding a document ordering that minimizes compressed index size has been extensively studied [4, 5, 15, 17, 41,42,43]. Shieh et al. [41] propose an approach based on an approximate maximum travelling salesman problem; they build a similarity graph where documents are vertices, and edges indicate common terms. Blandford and Blelloch [5] use a similarity graph with edges weighted with cosine similarity, and run a recursive partitioning to find the ordering. A considerable downside of such algorithms are their time and space complexity. Silvestri [42] shows that a simple URL-based ordering works as well as more complex methods on many data sets. The simplicity and efficiency of this approach makes it a very attractive choice in practice. Recently, Dhulipala et al. [15] proposed the Recursive Graph Bisection algorithm for graph and index compression, and experiments show their algorithm to exhibit the best compression ratio across all tested indices. We consider three orderings in our study, random assignment, URL-based, and Recursive Graph Bisection.

While most work on document ID assignment focuses on index compression, reordering also impacts query efficiency. Yan et al. [50] found that document reordering can significantly speed up conjunctive queries. Subsequent experiments show similar results for several disjunctive top-k algorithms, and in particular for all the algorithms introduced in the previous subsection [16, 18, 24, 25, 30, 45]. Thus, query processing speeds depend on both compression method and document ordering, though the trade-offs as not yet well understood.

2.4 Choice of k

The final dimension of our study is the choice of the number of results k. Much previous work has focused on smaller values of k, such as 10 or 100. However, when query processing algorithms are used for subsequent reranking by a complex ranker, more results are needed, though the optimal value of k varies according to several factors [29]. Suggested values include 20 [10], 200 [53], 1000 [37], 5000 [13], and up to tens of thousands [11], suggesting that the optimal k is context-dependent. Also, recent work in [12] indicates that many top-k algorithms slow down significantly as k becomes larger, but not always at the same rate. Given this situation, we decided to perform our evaluation over a large range of values, from \(k=10\) up to 10000.

3 Experimental Evaluation

Testing Environment. All methods are implemented in C++17 and compiled with GCC 7.3.0 using the highest optimization settings. The tests are performed on a machine with an Intel Core i7-4770 quad-core 3.40 GHz CPU, with 32 GiB RAM, running Linux 4.15.0. The CPU is based on the Haswell micro architecture which supports the AVX2 instruction set. The CPUs L1, L2, and L3 cache sizes are 32 KB, 256 KB, and 8 MB, respectively. Indexes are saved to disk after construction, and memory-mapped to be queried, so that there are no hidden space costs due to loading of additional data structures in memory. Before timing the queries, we ensure that any required lists are fully loaded in memory. All timings are measured taking the results with minimum value of five independent runs, and reported in milliseconds. We compute BM25 [39] scores during retrieval.

Data Sets. We performed experiments on two standard datasets: Gov2 and ClueWeb09 [7], summarized in Table 1. For each document in the collection the body text was extracted using Apache Tika, the words lowercased and stemmed using the Porter2 stemmer; no stopwords were removed.

Document Ordering. We experimented with three document orderings: Random, URL, and BP. The first, with IDs randomly assigned to documents, serves as the baseline. URL assigns IDs in lexicographic order of URLs [42]. BP is based on the Recursive Graph Bisection algorithm [15].

Implementation Details. Our codebase is a fork of the ds2iFootnote 1 library, extended with many additional encoding, query processing, and reordering implementations used in this study. The source code is available at https://github.com/pisa-engine/pisa for readers interested in further implementation details or in replicating the experiments. We integrated what we believe are the currently best open-source implementations of the various compression algorithms. We use the FastPForFootnote 2 library for implementation of VarintGB, VarintG8IU, OptPFD, Simple16, Simple8b, SIMD-BP128, and StreamVByte. PEF and Interpolative are based on the code of the ds2i library. The QMX implementation comes from JASSv2Footnote 3. We used the reference implementation of ANSFootnote 4 with 2d max:med contexts mechanism. All block-wise encodings use blocks of 128 postings per block.

We implemented the original Recursive Graph Bisection algorithm by Dhulipala et al. [15] and validated the results obtained against those reported in their paper. Our implementations of BMW and BMM store maximum impact scores for blocks of size 128, while VBMW uses blocks of average length 40. Both these values were also used in previous work.

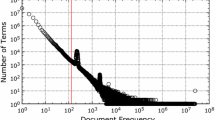

Queries. To evaluate query processing speed, we use TREC 2005 and TREC 2006 Terabyte Track Efficiency Task, drawing only queries whose terms are all in the collection dictionary. This leaves us with 90% and 96% of the total TREC 2005 and TREC 2006 queries for Gov2, and 96% and 98% of the total TREC 2005 and TREC 2006 queries for ClueWeb09. From each sets of queries, we randomly selected 1000 queries for each query length from 2 to \(6+\). Figure 1 shows the query length distributions. For Figs. 2 and 3, for each data point we sample 500 queries from each query set. We call this set Trec05-06 .

4 Results and Discussion

Compressed Index Size. All compression results are summarized in Table 2, sorted by increasing space for the Gov2 collection under the URL ordering. We find that this order is mostly preserved across all tested scenarios. The exceptions are Packed+ANS2 and Interpolative, which compete for the top spot. However, relative differences change quite significantly. For instance, variable byte methods benefit little from URL or BP reordering. This is expected, since virtually all gain comes from decreased document gaps, and no improvement is seen for frequency encodings. On the other hand, packing methods are highly sensitive to ordering, and achieve significantly better compression with URL or BP. For instance, when using Packed+ANS2, the sizes of Gov2 and ClueWeb09 with URL ordering decrease by 43% and 27%, respectively, compared to Random. Further improvements are seen for BP.

Query Efficiency. We first executed the five early termination algorithms in all configurations with \(k=10\). The results are shown in Table 3. As expected, for a fixed ordering there is a clear trade-off between index size and query speed. Variable byte encoding is extremely fast, but also gains the least from reordering. Interestingly, SIMD-BP128 basically matches the performance of Varint-G8IU and VarintGB while achieving significantly better compression. Other packing methods—QMX, Simple8b, and Simple16—along with OptPFD, fall slightly yet noticeably behind. PEF is on average less efficient. However, it almost matches the performance of the top four encodings for algorithms utilizing many skips or lookups: BMW and especially VBMW . This is significant, as PEF decreases the index size by more than 50% over the variable byte techniques. Finally, the entropy-based encodings perform the worst across all settings, with Interpolative being by far the slowest. Based on these results, we select a set of four encoding techniques for further analysis: OptPFD, PEF, SIMD-BP128, and Varint-G8IU, each representing a different group of fast algorithms.

Overall, the fastest retrieval algorithm is VBMW . Moreover, it facilitates efficient query processing on a space-efficient PEF-encoded index. Unsurprisingly, it improves upon BMW , which in turn improves upon WAND . These two also fall short of MaxScore , which is the fastest when testing Random ordering but does not benefit as much from reordering. We find BMM to provide no improvement over MaxScore . Given these facts, and due to space constraints, we focus further experiments on the MaxScore and VBMW algorithms.

We also notice a significant difference between different document orderings. Queries on randomly ordered indexes can be almost 3 times slower than on URL ordered ones. Quite interesting, although limited, is the improvement obtained by BP over URL ordering. Even though there is some variability of the gain for Gov2, which depends on the algorithm and encoding used, it is quite evident and constant for ClueWeb09. To the best of our knowledge, this result for BP has not been discussed in the literature, and it would be interesting to further investigate the reasons for the improvement, with the aim of further improvements.

Query Length. Figure 2 shows average query times for different query term counts using Trec05-06 queries, under the URL ordering. Interestingly, MaxScore performs better for long queries (except when PEF encoding is used). For ClueWeb09 the difference is significant: about 10 ms. This could justify a hybrid retrieval method that switches between algorithms based on a query length.

Varying k . The results for a range of values of k (using URL ordering) are shown in Fig. 3 on a log-log scale for better readability. First, we notice a significant time increase with larger k across all encoding techniques. We find this increase to be faster for VBMW . Both algorithms are roughly equally fast for \(k=100\) using Varint-G8IU or SIMD-BP128, while for larger k MaxScore becomes faster. We note that at \(k=10,000\) even the performance of PEF, which previously performed well only for VBMW , is similar for both algorithms. This suggests that MaxScore might be better suited for some cases of candidate generation.

5 Conclusions

In this paper, we performed an experimental evaluation of a whole range of previously proposed schemes for index compression, query processing, index reordering, and the number of results k. Our experiments reproduce many previous results while filling some remaining gaps and painting a more detailed picture. We confirm known correlation between index size and query speed, and provide comprehensive data that may help to find the right trade-off for a specific application. In particular, we find SIMD-BP128 to perform on a par with the variable byte techniques while providing a significantly higher compression ratio. Moreover, PEF is both space and time-efficient when using the VBMW algorithm. VBMW is the fastest query processing method for small k while MaxScore surpasses it for \(k > 100\). Query cost increases significantly with k for DAAT methods, justifying TAAT and SAAT approaches for candidate generation such as [12]. The good performance of MaxScore on long queries motivates hybrid methods that select algorithms based on query length.

References

Anh, V.N., Moffat, A.: Inverted index compression using word-aligned binary codes. Inf. Retrieval 8(1), 151–166 (2005)

Anh, V.N., Moffat, A.: Index compression using 64-bit words. Softw. Pract. Exp. 40(2), 131–147 (2010)

Arguello, J., Diaz, F., Lin, J., Trotman, A.: SIGIR 2015 workshop on reproducibility, inexplicability, and generalizability of results. In: 38th International ACM SIGIR Conference on Research and Development in Information Retrieval, pp. 1147–1148. ACM (2015)

Blanco, R., Barreiro, Á.: Document identifier reassignment through dimensionality reduction. In: Losada, D.E., Fernández-Luna, J.M. (eds.) ECIR 2005. LNCS, vol. 3408, pp. 375–387. Springer, Heidelberg (2005). https://doi.org/10.1007/978-3-540-31865-1_27

Blandford, D., Blelloch, G.: Index compression through document reordering. In: 2002 Data Compression Conference, pp. 342–351 (2002)

Broder, A.Z., Carmel, D., Herscovici, M., Soffer, A., Zien, J.: Efficient query evaluation using a two-level retrieval process. In: Proceedings of the Twelfth International Conference on Information and Knowledge Management, pp. 426–434. ACM (2003)

Callan, J., Hoy, M., Yoo, C., Zhao, L.: Clueweb09 data set (2009). http://lemurproject.org/clueweb09/

Catena, M., Macdonald, C., Ounis, I.: On inverted index compression for search engine efficiency. In: de Rijke, M., et al. (eds.) ECIR 2014. LNCS, vol. 8416, pp. 359–371. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-06028-6_30

Chakrabarti, K., Chaudhuri, S., Ganti, V.: Interval-based pruning for top-k processing over compressed lists. In: Proceedings of the 2011 IEEE 27th International Conference on Data Engineering, pp. 709–720 (2011)

Chapelle, O., Chang, Y.: Yahoo! learning to rank challenge overview. In: Proceedings of the Learning to Rank Challenge, pp. 1–24 (2011)

Chapelle, O., Chang, Y., Liu, T.Y.: Future directions in learning to rank. In: Proceedings of the Learning to Rank Challenge, pp. 91–100 (2011)

Crane, M., Culpepper, J.S., Lin, J., Mackenzie, J., Trotman, A.: A comparison of document-at-a-time and score-at-a-time query evaluation. In: Proceedings of the Tenth ACM International Conference on Web Search and Data Mining, pp. 201–210. ACM (2017)

Craswell, N., Fetterly, D., Najork, M., Robertson, S., Yilmaz, E.: Microsoft research at TREC 2009 web and relevance feedback tracks. Technical report, Microsoft Research (2009)

Dean, J.: Challenges in building large-scale information retrieval systems: invited talk. In: Proceedings of the Second ACM International Conference on Web Search and Data Mining, pp. 1–1. ACM (2009)

Dhulipala, L., Kabiljo, I., Karrer, B., Ottaviano, G., Pupyrev, S., Shalita, A.: Compressing graphs and indexes with recursive graph bisection. In: Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp. 1535–1544 (2016)

Dimopoulos, C., Nepomnyachiy, S., Suel, T.: Optimizing top-k document retrieval strategies for block-max indexes. In: Proceedings of the sixth ACM International Conference on Web Search and Data Mining, pp. 113–122. ACM (2013)

Ding, S., Attenberg, J., Suel, T.: Scalable techniques for document identifier assignment in inverted indexes. In: Proceedings of the 19th international conference on World wide web, pp. 311–320. ACM (2010)

Ding, S., Suel, T.: Faster top-k document retrieval using block-max indexes. In: Proceedings of the 34th International ACM SIGIR Conference on Research and Development in Information Retrieval, pp. 993-1002. ACM (2011)

Duda, J.: Asymmetric numeral systems as close to capacity low state entropy coders. CoRR abs/1311.2540 (2013)

Elias, P.: Efficient storage and retrieval by content and address of static files. J. ACM 21(2), 246–260 (1974)

Elias, P.: Universal codeword sets and representations of the integers. IEEE Trans. Inf. Theory 21(2), 194–203 (1975)

Fano, R.M.: On the number of bits required to implement an associative memory. Massachusetts Institute of Technology, Project MAC (1971)

Golomb, S.W.: Run-length encodings (corresp.). IEEE Trans. Inf. Theory 12(3), 399–401 (1966)

Hawking, D., Jones, T.: Reordering an index to speed query processing without loss of effectiveness. In: Proceedings of the Seventeenth Australasian Document Computing Symposium, pp. 17-24. ACM (2012)

Kane, A., Tompa, F.W.: Split-lists and initial thresholds for wand-based search. In: The 41st International ACM SIGIR Conference on Research & Development in Information Retrieval, pp. 877-880. ACM (2018)

Lemire, D., Boytsov, L.: Decoding billions of integers per second through vectorization. Softw. Pract. Exper. 45(1), 1–29 (2015)

Lemire, D., Kurz, N., Rupp, C.: Stream vbyte: faster byte-oriented integer compression. Inf. Process. Lett. 130, 1–6 (2018)

Liu, T.Y.: Learning to rank for information retrieval. Found. Trends Inf. Retrieval 3(3), 225–331 (2009)

Macdonald, C., Santos, R.L., Ounis, I.: The whens and hows of learning to rank for web search. Inf. Retr. 16(5), 584–628 (2013)

Mallia, A., Ottaviano, G., Porciani, E., Tonellotto, N., Venturini, R.: Faster blockmax WAND with variable-sized blocks. In: Proceedings of the 40th International ACM SIGIR Conference on Research and Development in Information Retrieval, pp. 625–634. ACM (2017)

Metzler, D., Croft, W.B.: A Markov random field model for term dependencies. In: Proceedings of the 28th Annual International ACM SIGIR Conference on Research and Development in Information Retrieval, pp. 472–479 (2005)

Moffat, A., Petri, M.: ANS-based index compression. In: Proceedings of the 2017 ACM on Conference on Information and Knowledge Management, pp. 677-686. ACM (2017)

Moffat, A., Petri, M.: Index compression using byte-aligned ANS coding and two-dimensional contexts. In: Proceedings of the Eleventh ACM International Conference on Web Search and Data Mining, pp. 405-413. ACM (2018)

Moffat, A., Stuiver, L.: Binary interpolative coding for effective index compression. Inf. Retr. 3(1), 25–47 (2000)

Ottaviano, G., Venturini, R.: Partitioned elias-fano indexes. In: Proceedings of the 37th international ACM SIGIR conference on Research & Development in Information Retrieval, pp. 273–282. ACM (2014)

Plaisance, J., Kurz, N., Lemire, D.: Vectorized VByte decoding. CoRR abs/1503.07387 (2015)

Qin, T., Liu, T.Y., Xu, J., Li, H.: LETOR: a benchmark collection for research on learning to rank for information retrieval. Inf. Retr. 13(4), 346–374 (2010)

Rice, R., Plaunt, J.: Adaptive variable-length coding for efficient compression of spacecraft television data. IEEE Trans. Commun. Technol. 19(6), 889–897 (1971)

Robertson, S.E., Jones, K.S.: Relevance weighting of search terms. J. Am. Soc. Inf. Sci. 27(3), 129–146 (1976)

Scholer, F., Williams, H.E., Yiannis, J., Zobel, J.: Compression of inverted indexes for fast query evaluation. In: Proceedings of the 25th Annual International ACM SIGIR Conference on Research and Development in Information Retrieval, pp. 222-229. ACM (2002)

Shieh, W.Y., Chen, T.F., Shann, J.J.J., Chung, C.P.: Inverted file compression through document identifier reassignment. Inf. Process. Manage. 39(1), 117–131 (2003)

Silvestri, F.: Sorting out the document identifier assignment problem. In: Proceedings of the 29th European Conference on IR Research, pp. 101–112 (2007)

Silvestri, F., Orlando, S., Perego, R.: Assigning identifiers to documents to enhance the clustering property of fulltext indexes. In: Proceedings of the 27th Annual International ACM SIGIR Conference on Research and Development in Information Retrieval, pp. 305-312. ACM (2004)

Stepanov, A.A., Gangolli, A.R., Rose, D.E., Ernst, R.J., Oberoi, P.S.: SIMD-based decoding of posting lists. In: Proceedings of the 20th ACM International Conference on Information and Knowledge Management, pp. 317–326 (2011)

Tonellotto, N., Macdonald, C., Ounis, I.: Effect of different docid orderings on dynamic pruning retrieval strategies. In: Proceedings of the 34th International ACM SIGIR Conference on Research and Development in Information Retrieval, pp. 1179–1180. ACM (2011)

Trotman, A.: Compression, SIMD, and postings lists. In: Proceedings of the 2014 Australasian Document Computing Symposium, pp. 50:50–50:57. ACM (2014)

Trotman, A., Lin, J.: In vacuo and in situ evaluation of SIMD codecs. In: Proceedings of the 21st Australasian Document Computing Symposium, pp. 1–8. ACM (2016)

Turtle, H., Flood, J.: Query evaluation: strategies and optimizations. Inf. Process. Manage. 31(6), 831–850 (1995)

Wang, L., Lin, J., Metzler, D.: Learning to efficiently rank. In: Proceedings of the 33rd International ACM SIGIR Conference on Research and Development in Information Retrieval, pp. 138–145. ACM (2010)

Yan, H., Ding, S., Suel, T.: Inverted index compression and query processing with optimized document ordering. In: Proceedings of the 18th International Conference on World Wide Web, pp. 401–410. ACM (2009)

Zhai, C., Lafferty, J.: A study of smoothing methods for language models applied to information retrieval. ACM Trans. Inf. Syst. 22(2), 179–214 (2004)

Zhang, J., Long, X., Suel, T.: Performance of compressed inverted list caching in search engines. In: Proceedings of the 17th International Conference on World Wide Web, pp. 387–396. ACM (2008)

Zhang, M., Kuang, D., Hua, G., Liu, Y., Ma, S.: Is learning to rank effective for web search? In: SIGIR 2009 Workshop: Learning to Rank for Information Retrieval, pp. 641–647 (2009)

Zukowski, M., Heman, S., Nes, N., Boncz, P.: Super-scalar RAM-CPU cache compression. In: Proceedings of the 22nd International Conference on Data Engineering (2006)

Acknowledgments

This research was supported by NSF Grant IIS-1718680 and a grant from Amazon.

Author information

Authors and Affiliations

Corresponding authors

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Mallia, A., Siedlaczek, M., Suel, T. (2019). An Experimental Study of Index Compression and DAAT Query Processing Methods. In: Azzopardi, L., Stein, B., Fuhr, N., Mayr, P., Hauff, C., Hiemstra, D. (eds) Advances in Information Retrieval. ECIR 2019. Lecture Notes in Computer Science(), vol 11437. Springer, Cham. https://doi.org/10.1007/978-3-030-15712-8_23

Download citation

DOI: https://doi.org/10.1007/978-3-030-15712-8_23

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-15711-1

Online ISBN: 978-3-030-15712-8

eBook Packages: Computer ScienceComputer Science (R0)