Abstract

Delineation of scientific domains (fields, areas of science) is a preliminary task in bibliometric studies at the mesolevel, far from straightforward in domains with high multidisciplinarity, variety, and instability. The Sect. 2.2 shows the connection of the delineation problem to the question of disciplines versus invisible colleges, through three combinable models: ready-made classifications of science, classical information-retrieval searches, mapping and clustering. They differ in the role and modalities of supervision. The Sect. 2.3 sketches various bibliometric techniques against the background of information retrieval ( ), data analysis, and network theory, showing both their power and their limitations in delineation processes. The role and modalities of supervision are emphasized. The Sect. 2.4 addresses the comparison and combination of bibliometric networks (actors, texts, citations) and the various ways to hybridize. In the Sect. 2.5, typical protocols and further questions are proposed.

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

- scientific field/domain delineation/delimitation

- science mapping/clustering

- science networks

- scientific information retrieval

- science classification

- citation mapping/clustering

- text mining

- text clustering benchmark

- community detection

- hybrid text-citation mapping

1 Shaping the Landscape of Scientific Fields

Collecting literature that is both relevant and specific to a domain is a preliminary step of many scientometric studies: description of strategic fields such as nanosciences, genomics and proteomics, environmental sciences; research monitoring and international benchmarks; science community analyses. Although our focus here is on the intermediate levels, informally described in such terms as areas, specialties, subfields, fields, subdisciplines… this subject is connected to general science classification and, at the other end of the range, to narrow topic search.

In Sect. 2.2 we place delineation at the crossroads of two concepts: the first one is disciplinarity (what is a scientific discipline?), which crystallizes various dimensions of scientific activity in epistemology and sociology. The second one is invisible colleges in resonance with the core of bibliometrics, the study of networks created explicitly or implicitly by publishing actors. From this point of view, domains of science can be viewed as a generalized form of invisible colleges, sometimes in the form of relatively dense and segregated areas—at some scale. In other cases however, the structure is less clear and bounded, with high levels of both internal diversity and external connections and overlaps. Given a target domain, its expected diversity, interdisciplinarity, and instability are challenging issues. We outline the main approaches to delineation: external formalized resources, such as science classifications; ad hoc information retrieval (IR) search; network exploration resources (clustering–mapping).

Section 2.3 is devoted to the main approaches in domain delineation, IR search, and science clustering–mapping, when off-the-shelf classifications are not sufficient. Both take root in the information networks of science, but start from different vantage points, with some simplification: ex ante heavy supervision for IR search, typically with bottom-up ad hoc queries; ex post supervision for bibliometric mapping, with top-down pruning. In difficult cases, these approaches appear complementary, often within multistep protocols. As a result of the complex structure and massive overlaps of aspects of science, of the multiple bibliometric networks involved, of the multiple points of view, the frontiers are far from unique at a given scale of observation. The experts' supervision process is a key element. Its organization depends on the studies' context and demand, to reach decisions through confrontation and negotiation, especially in high-stakes contexts. Beforehand, we shall briefly address the toolbox of data analysis methods for clustering–mapping purposes.

Section 2.4 focuses on the multinetwork approach for delineation tasks, stemming from pragmatic practices of information retrieval ( ) and bibliometrics . The main networks are actor's graphs and other relations connected with invisible colleges based on documents and their main attributes, texts, and citations. Other scientometric networks (teaching, funding, science social networks, etc.) offer potential resources. The hybridization covers a wide scope of forms. There is a strong indication that multinetwork methods improve IR performance and offer a richer substance to experts'/users' discussions.

2 Context

2.1 Background: Disciplinarity and Invisible Colleges

Generally speaking there is no ground truth basis for defining scientific domains. Given a target domain, assigned by sponsors in broad and sometimes fuzzy terms, delineation is the first stage of a bibliometric study. It is tantamount to a rule of decision involving sponsors/stakeholders, scientists/experts, and bibliometricians on extraction of the relevant literature. Delineation also matters as research communities are an object of science sociology as well as a playground for network theoreticians.

The delineation of scientific domains should be understood in the context of the structure of science and scientific communities, especially through the game between diversity, source of speciation, and interdisciplinarity drive towards reunification. Disciplinarity and invisible colleges are two concepts from the sociology of science that symbolize two kinds of communities, the first one more formal and institutional, the second one constructed on informal linkages made visible by bibliometric analysis of science networks . The tradition of epistemology has contributed to highlight the specificity of science by contrast to other conceptions of knowledge. Auguste Comte proposed the first modern classification of science and at the same time condemned the drift of specialization [2.1], considered a threat to a global understanding of positive science. In reaction both to epistemology and normative Mertonian tradition [2.2, 2.3], Kuhn emphasized the role of central paradigms in disciplines at some point of their evolution [2.4]. The post-Kuhnian social constructivism proceeded along two lines—at times conflicting [2.5]—of relativist thinking: the strong programme (see Barnes et al [2.6]) and the no less radical actor–network theory ( ). The first one was initiated by Barnes and Bloor [2.7] and flourished in the science studies movement [2.8, 2.9]. The ANT also borrowed from Serres (translation concept [2.10]) and from the poststructuralist French theory (Foucault, Derrida, Bourdieu, Baudrillard), see [2.11, 2.12, 2.13]. These schools of thought emphasize disciplinarity rather than unity. Lenoir notes that [2.14, pp. 71–72, 82]:

A major consequence of [social constructivism] has been to foreground the heterogeneity of science. [… Disciplines are] crucial sites where the skills [originating in labs] are assembled and political institutions that demarcate areas of academic territory, allocate privileges and responsibilities of expertise, and structure claims on resources.

Bourdieu stressed the importance of personal relationship and shared habitus. Disciplines exhibit both a strong intellectual structure and a strong organization. The institutional framework , with, in most countries, an integration of research and higher education systems, ensures evaluation and career management. Some communities coin their own jargon, amongst signs of differentiation, and norms and patterns. Potentially, all dimensions of research activity (paradigms and theories, classes of problems, methodology and tools, shared vocabulary, corroboration protocols, construction of scientific facts and interpretation) appear as discipline-informed, with particular tensions between superdisciplines, natural sciences and social sciences and humanities. Scientists discuss, within their own disciplines, the subfield breakdown and the structuring role of particular dimensions, for example research objects in microbiology, versus integration drive [2.15, 2.16].

The endless process of specialization and speciation in science, erecting barriers to the mutual understanding of scientists, is partly counteracted by interdisciplinary linkages which maintain and create solidarity between neighbor or remote areas of research. Piaget [2.17] coined the term transdisciplinarity as the new paradigm re-engaging with unity of science. A few rearrangements of large magnitude, such as the movement of convergence between nanosciences, biomedicine, information, and cognitive sciences and technologies ( , Nanotechnology, biotechnology, information technology, and cognitive science; concept coined by NSF (National Science Foundation) in 2002), tend to reunite distant areas or at least create active zones of overlap.

In contrast with disciplinarity, the concept of invisible college in its modern acceptation, popularized by Price and Beaver [2.18] and Crane [2.19], chiefly refers to informal communication networks, personal relationship, and possibly interdisciplinary scope. These direct linkages tend to limit the size of the colleges, although no precise limit can be given. Science studies devote a large literature to those informal groups, which exemplify how networks of actors operate at various levels of science [2.20, 2.21].

Although more formal expressions emerge from the self-organization of those microsocieties (workshops, conferences, journals), the invisible colleges do not claim the relative stability and the social organization of disciplines. The various communication phenomena of the colleges are revealed by sociological studies or, more superficially but systematically, by analysis of bibliometric networks such as coauthorship, text relations and citations. The bibliometric hypothesis assumes that the latter process mirrors essential aspects of science: the traceable publication activity, in a broad sense, expresses the collective behavior of scientific communities in most relevant aspects (contents and certification, production and structure of knowledge, diffusion and reward, cooperation and self-organization). It does not follow that bibliometrics can easily operationalize all hypotheses [2.22]. Affiliations can, in the background, connect to the layers of academic institutions or corporate entities. Mentions to funding bodies are increasingly required in articles reporting grant-supported works. These relations, however, as well as personal interactions, generally require extrabibliometric information. Variants of the invisible colleges in sociology of science are known as epistemic communities, involving scientists and experts with shared convictions and norms [2.23, 2.8] and community of practice [2.24]. The mix of behavior, stakes and power games, in the interaction of virtual colleges and institutions, remains an appealing question. A revival of the interest for delineation studies has been observed at the crossroads of sociology of science and analyses of networks [2.25, 2.26].

Disciplinary views, as well as colleges revealed by bibliometrics, lead to different partitions of literature, depending on the vantage points. In particular, bibliometricians can be confronted with conflictual situations when revealed networks and institutional normative perceptions and claims as to the disciplinary structure and boundaries diverge. The exercise of delineation generally consists in reaching some form of consensus, or at least a few consensual alternatives amongst sponsors, stakeholders, experts, and scientists. The toolbox contains information retrieval, data analysis, and mapping. Bibliometricians act as organizers of experts' supervision, suppliers of quantitative information, and facilitators of negotiations (Fig. 2.1).

Actors' models/bibliometric models . This scheme evokes the interaction between actors' mental or social models of science, disciplines, and domains on the one hand and models from data analyses (clustering–mapping) on bibliometric data sources, based on different methods and networks on the other. The two sides are engaged separately or together in negotiated combinations to reach (almost) consensual views. Two ways of domain delineation are singled out, ad hoc IR search and extraction from maps, with different degrees and moments of supervision. A third way, allowing direct IR search, supposes permanent classification resources

2.2 Operationalization: Three Models of Delineation

In their review of (inter)disciplinarity issues, Sugimoto and Weingart [2.27] stress that the rich conceptualization of disciplinarity, quite elaborate in sociology and iconic of science diversity, does not imply clear operationalization solutions for defining fields. Scientists' claims and co-optation (‘‘Mathematicians are people who make theorems'' with several formulations, including a humoristic one by Alfréd Rényi), university organizations and traditions, epistemology, sociology, bibliometrics offer many entry points. The stakes associated to disciplinary interests and funding, for both scientists and policy makers may interfere with definitions. Introducing the national dimension, for example, shows that the coverage of disciplines is perceived differently in national research systems. Bibliometrics cannot capture the deep sociocognitive identity of disciplines but contributes to enlighten some of the facets that collective scientists' behavior let appear. The difficulty extends to multidisciplinarity measurement.

In practice, the description of disciplines available in scientific information systems takes the form of classification schemes at some granularity (articles, journals) from a few sources: higher education or research organizations for management and evaluation needs (international bodies or national institutions, for example the National Center for Scientific Research (CNRS ) in France); schemes associated to databases from academic societies, generally thematic; and/or from publishers or related corporations (Elsevier, ISI/Thomson Reuters/Clarivate Analytics) dedicated to scientific information retrieval.

We term model A the principle of these institutional science classifications, which do not chiefly proceed from bibliometrics but from the interaction between scientists and librarians. Subcategories and derived sets offer ready-made delineation solutions. The effect of methodological options, the social construction of disciplines by institutions or scientific societies, with struggles for power and games of interests are unlikely to yield convergence: the various classifications of science available, not necessarily compatible, should be taken with caution. Depending on the update system, they also tend to give a cold image of science. Often based on nonoverlapping schemes, they tend to handle multidisciplinarity phenomena poorly. Resources associated with classifications in S&T databases which often include various nomenclatures (species, objects) are a distinct advantage. With its limitations this model nevertheless offers a rich substance to bibliometric studies. Since the development of evaluative scientometrics in the 1970s, in the wake of Garfield and Narin's works, categories are used as bases for normalization of bibliometric measures, especially citation indicators , but classification-free alternatives exist (Sect. 2.3.2). The rigidity of classifications has an advantage, making a virtue out of a necessity, the easy measure of knowledge exchanges between categories over time. Techniques of coclassification [2.28, 2.29], coindex, or coword methods (see below) make it possible to transcend the rigidity of the classification scheme.

The concept of virtual college, originally thought of as micro- or mesoscale communities with informal contours, exchanging in various ways, can be generalized to communities in science networks at any scale. Since the 1980s, this is implicit in most bibliometric studies [2.30]. Global models of science, either small worlds or self-similar fractal models, are consistent with this perspective. This scheme, termed here model C, is the very realm of bibliometrics. Formal and institutional aspects are partly visible through bibliometric networks but need other scientometric information on institutional structure of science systems. Bibliometrics and also scientometrics are blind to other networks/relations such as interpersonal networks and to the complete picture of science funding and science society relations. It follows that the delineation of fields in model A, which accounts for complex mixes nontotally accessible to bibliometric networks, cannot be retrieved by model C approaches. The other way round, model C makes visible implicit structures ignored by the panel of actors involved in model A classification design.

For large academic disciplines, model C merely proposes high-level groupings which might emulate the categories disciplines from model A and share the same label, however with a quite coarse correspondence. In the practice of model C, large groups receive a sort of discipline label through expert supervision. Neither the bibliometric approach nor model A have the property of uniqueness. Various tests were conducted by external bibliometricians on (Science Citation Index of the Web of Science) subject categories, and the agreement is not, usually, that good [2.31] and the existing ready-made classifications cannot pretend to the status of ground truth or gold standard for domain delineation. Depending on the organization, the clustering–mapping operations often fulfill two needs in bibliometric studies, first helping domain delineation, secondly identifying subdomains/topics within the target. In the absence of ground truth, the challenge of model C is to find trade-offs for reflecting a fractal reality quite difficult to break down, since boundaries are hardly natural except for configurations with clear local minima. They are then subject to optimization with partial information and negotiations [2.32, 2.33].

Model B based on IR search, borrows from both A and C. In model A, the operationalization of discipline definition and classification relied on heavily supervised schemes, aiming chiefly at information retrieval. Model B shares the same ground, with an ad hoc search strategy established by bibliometricians and experts for the needs of the study. Ad hoc search is sometimes necessary in order to go beyond the synthetic views provided by clustering and mapping, and to address analytical questions from users (in terms of theory, methods, objects, interpretation). The default granularity is the document level.

The three models can incorporate a semantic folder. Some indexing and classifications systems provide elaborate structures of indexes and keywords: thesaurus and ontologies (Sect. 2.2.4). Model B depends on expert's competence and resources of queried databases to coin semantically robust queries. Model C can treat metadata of controlled language, indexes of any kind, as well as natural language texts, and reciprocally shed light, through data/queries treatment, on the revealed semantic structures of universes.

Reflexivity is present under many aspects: scientists are involved in heavy ex ante input in ready-made classifications (model A), in IR ad hoc search (model B), and in softer ad hoc intervention on bibliometric maps (model C). The supervision/expertise question goes beyond within-community reflexivity, with partners associated to projects: decision-makers and stake-holders and bibliometricians.

Table 2.1 sums up the main features of the three models. They are just archetypes: in practice, blending is the rule. If classical disciplinary classification schemes belong to the first model, the Science Citation Index and variants incorporate bibliometric aspects. Purely bibliometric classifications, if maintained and widely available, give birth to ready-made solutions. In the background of the three models, the progressive rapprochement of bibliometrics and IR tools, addressed below in Sect. 2.3 should be kept in mind.

2.3 Challenges at the Mesolevel

2.3.1 Interdisciplinarity

Interdisciplinarity is quite an old question and rose to the forefront in the early 1970s with an OECD (Organization for Economic Cooperation and Development) conference devoted to the topic, which gave rise to a wealth of literature and programs. The distinction between multi-, inter-, and transdisciplinarity formulates various degrees of integration, see [2.34, 2.5]. As Choi and Park put it [2.35]:

Multidisciplinarity draws on knowledge from different disciplines but stays within their boundaries. Interdisciplinarity analyses, synthesizes and harmonizes links between disciplines into a coordinated and coherent whole.

Jahn et al [2.36] examine two interpretations of transdisciplinarity in literature. Both make sense in a delineation context. One privileges the science–society relationship: integration between social sciences and humanities ( ) and natural sciences with the participation of extrascientific actors, as a response to heavy and controversial socioscientific problems such as climatic change, genetically modified organisms, medical ethics, etc. The second interpretation considers that transdisciplinarity simply pushes the logic of interdisciplinarity towards integration. Russell et al [2.37], cited by Jahn et al [2.36],

emphasize that where interdisciplinarity still relies on disciplinary borders in order to define a common object of research in areas of overlap […] between disciplines, transdisciplinarity truly transgresses or transcends [them].

Klein [2.38] and Miller et al [2.39] stress the theoretical and problem-solving capability of the transdisciplinary view. Many publications evoke the paradox of multidisciplinarity, a source of radical discoveries, laboring however to convince evaluators in the science reward system. Yegros-Yegros et al [2.40] list a few controversial studies on the topic, and note a specific difficulty for distal transfers. Solomon et al [2.41] recall that the impact of many multidisciplinary journals is misleading in this respect, since their individual articles are not especially multidisciplinary.

Bibliometric operationalization has to account for those different multi/inter/transdisciplinarity forms. Multidisciplinarity involves sustained knowledge exchanges in a roughly stable structure; interdisciplinarity, with an organization and systematization nuance, supposes strong exchanges creating some structural strain, between domain overlap and autonomization of merging fractions; transdisciplinarity paves the way for the autonomy of the overlapping region, within the strong interpretation involvement of SSH and possibly of extrascientific considerations. Clearly model C is apter than A to depict those forms and their transitions when they occur, rather than waiting for the institutionalization of the emerging structures.

Interdisciplinarity may be outlined at the individual level by copublications of scholars with different educational or publication backgrounds, by measures of knowledge flows (citations), contents proximity, authors' coactivity or thematic mobility—if such data exist [2.42]. Other sources include joint programs, joint institutions or labs claiming disciplinary affiliation, generally found in metadata. Most disciplinary databases lagged behind the Garfield SCI model as to the integral mention of all authors' affiliations on an article. The large scope of bibliometric measures of multidisciplinarity was reviewed in many articles, e.g., [2.27, 2.43].

In model A the first entry point to multidisciplinary phenomena is the category classification schemes, with measures of knowledge exchanges by citation flows between categories (Pinski and Narin's seminal work on journal classifications [2.44], Rinia et al [2.45]), transposable to textual proximity (on patents [2.46]) or authors coactivity. Despite the heavy input of experts in science classification, the delimitation of particular fields varies across information providers and none can be held as a gold standard. It finds its limits in the inertia and often the hard scheme of classes, albeit the derived coclassification and coindex treatments noticed above relax the constraint and instil some of the bibliometric potential of model C.

Model C is more realistic in depicting the combinatory, flexible, multinetwork relationships in science and the demography of topics. Ignoring disciplinarity as such, it conveys a broader definition of interdisciplinarity, ranging from close to distant connections, the latter loosely interpretable, in the common acceptation, as interdisciplinary and possibly forerunners of more integrated relations. More generally, the network perspective of model C builds bridges between networks formalization and scientific communities life, leaving open the question of how profoundly the sociocognitive phenomena are captured. Data analysis methods such as correspondence analysis ( ), latent semantic analysis ( ), latent Dirichlet allocation ( ) addressed below, claim light semantic capabilities at least. Bibliometrics cannot substitute for sociological analysis, which exploits the same tools but goes further with specific surveys. Similarly, it is dependent on computational linguistics and semantic analysis for deep investigations of the knowledge contents. Model C is a potential competitor for offering taxonomies, with recent advances (Sect. 2.2.4). It does not follow that dynamics captured by this model are easy to handle: for example, flow variations in a fixed structure (A) read more conveniently than multifaceted structural change (C).

2.3.2 Internal Diversity

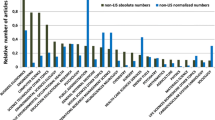

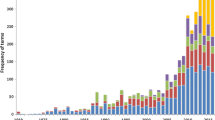

Diversity and multidisciplinarity are two facets of a coin. Internal diversity in a delineation process qualifies communities inside the target domain. Figure 2.2a-cb,c expresses the internal diversity of multidisciplinary domains , already striking for nanosciences and massive for proteomics (Fig. 2.2a-c).

Map of science and multidisciplinary projections . (a) A world-map-type science map from a spherical representation [2.47]. (b) and (c) Hotspots of activity of nanoscience and proteomics projected in a fraction of a global science map. It basically crosses the map's holistic picture with an overlay of hits from simple term queries. After Boyack and Klavans [2.48]

Internal diversity is treated in quite different ways depending on the model. In the cluster analysis part of model C, the balance of internal diversity and external connectivity (multidisciplinarity in the looser sense) is part of the mechanism which directly or indirectly rules the formation of groups, with a wide choice of protocols. Many solutions of density measurement are available in clustering or network analysis, with some connections with diversity measures developed in ecology and economics especially. The synthetic Rao index discussed by Stirling [2.49] combines three measures on forms/categories: variety (number of categories), balance (equality of category populations), and disparity (distance of categories). Delineation through mapping will use smaller scale clusters rather than attempting to capture the target as a whole large-scale cluster. There is no risk of missing large parts of the domain, but the way the different methods conduct the process raises questions about the homogeneity of clusters obtained and the loss of weak signals especially in hard clustering (Sect. 2.3).

In model B internal diversity, especially when generated by projected multidisciplinarity, is a threat on recall. Entire subareas may be missed out if the diversity in supervision (panels of experts) does not match the diversity of the domain. Unseen parts will alter the results. In contrast, on prerecognized areas, model B can be tuned to recover weak signals.

In model A, the existence of a systemic silence risk particularly depends on how interdisciplinary bridges are managed.

2.3.3 Unsettlement

The third challenge of domain delineation lies in the science network dynamics. Conventional model A classifications hardly follow evolutions and need periodic adjustments. The convenience of measures within a fixed structure is paid for by structural biases. Bibliometric mapping can translate evolutions in cluster or factor reconfiguration, but the handling of changes in a robust way remains delicate (Sect. 2.3). Model B pictures networks, but intuitively, a fast rhythm of reconfiguration in the somewhat chaotic universe of science networks makes it particularly difficult to settle delineation on firm roots. This casts a shadow on the time robustness of the solutions reached on one-shot exercises, but also on the predictive value of extrapolations on longitudinal trends. We will return later to dynamic studies and semantic characterization (Sect. 2.3.2). Emerging domains seldom embody institutional organization but bear bibliometric signatures of early activity. The difficulty is to capture weak signals with a reasonable immediacy. Fast manifestations of preferential attachment around novel publications, whatever the measure (citations, concept markers, or altmetric linkages) are amongst the classical alerts of topic emergence at small scale, to confirm by later local cluster growth.

2.3.4 Source Coverage

For memory's sake, the question of data coverage is recurrent in practical bibliometrics and is raised at the delineation stage of any study. The literature on the subject is abundant, conveying different points of view: Hicks [2.50] first stressed the limitations of both the reference database SCI and the mapping algorithm of cocitation for research policy purposes. Moed's review [2.51, esp. Sect. 6.2.2] and Van Raan et al [2.52] showed the differential coverage of disciplines by journals in SCI-WoS using references to nonsource items. Keeping pace with the growth of visible science is another challenge. The latest United Nations Educational, Scientific and Cultural Organization (UNESCO) science report estimates that \(\mathrm{7.8}\) million scientists worldwide publish \(\mathrm{1.3}\) million publications a year [2.53]. SCI-WoS producers proposed new products beginning to fill the gap of book literature, essential to social sciences and humanities (SSH) and conference proceedings, essential to computer science [2.54]. The coverage of social science and humanities with issues of publication practices and national biases was addressed in many works, e.g., [2.55, 2.56, 2.57]. This is distinct from the within-discipline approach where an extensive coverage causes instability of indicators due to tails (language biases, national journals biases), to document types or adaptation issues [2.58, 2.59, 2.60, 2.61]. Former studies' figures are outdated but the basic principles remain.

Extensive databases with enhanced coverage for IR purposes (modern WoS, Scopus) might require truncation of tails for comparative international studies. The PageRank selection tool limits the noise of a massive extension of sources in Google. However, Google Scholar is not considered a substitute for bibliographic databases for common librarian tasks, but rather a complement especially for coverage extension in long tails [2.62] with variations amongst disciplines. The same applies to another large bibliographic database: the Microsoft Academic Graph [2.63, 2.64, 2.65]. The lack of transparency in the inclusion process and the lack of tools beyond original ranking (sorting, subject filters) are stressed by Gray et al [2.66]. Strong concerns with the quality of bibliographic records were also reported [2.67, 2.68]. The coverage of databases has recently been compared by several authors [2.69, 2.70], with an extension to alternative sources such as altmetrics: http://mendeley.com, http://academia.edu, http://citeulike.org, http://researchgate.com, http://wikipedia.org, http://twitter.com, etc. [2.71, 2.72]. Online personal libraries like Mendeley shed new light on knowledge flows between disciplines through publication records stored together [2.73]—a kind of cocitation data from readers instead of authors. In addition, these sources, often difficult to qualify properly [2.74], have been addressed by altmetric studies [2.42, 2.75, 2.76]. The way scientists and the general public communicate about science on (social) media is field-dependent and it is not easy for now to anticipate the complementary role of altmetrics and traditional data in delineation of fields. Altmetric resources can help exploratory and supervision tasks.

In emerging and multidisciplinarity topics that typically justify careful delineation, controversies and conflicting interests are frequent and the importance of transdisciplinary problems makes the issues of sources coverage, experts panel selection, and supervision organization more acute.

2.4 Ready-Made Classifications

2.4.1 Classifications

Table 2.2 presents some types of science classifications valuable in domain delineation. These coexisting classification schemes reflect various perspectives, such as cognitive, administrative, organizational, and qualification-based rationales according to Daraio and Glänzel [2.77] who stress the difficulties arising when trying to harmonize them.

The first named classifications directly stem from professional expertise of scientists and librarians (pure model A). Some are linked to institutional or national research systems, mainly oriented towards staff management or evaluation, or international instances (UNESCO). More relevant for bibliometric uses are classifications within complete information systems on S&T literature, proceeding from a few sources: specialized academic societies ( (Chemical Abstracts Service), Inspec, Biosis, MathSciNet, Econlit, etc. which usually extend beyond their core discipline) and/or scientific publishers, and patent offices for technology. Classifications are typically hierarchical, complemented by metadata (keywords of various kinds, indexes from object nomenclatures: vegetable or chemical species, stellar objects, and so on).

Bibliometrics then entered the competition for science classifications, in contrast with the traditional documentation model involving heavy manpower for indexing individual documents. The prototype is Garfield's SCI/WoS based on the journal molecule and a selection tool, the impact factor [2.81, 2.82]. The supervision was still heavy in the elaboration of classification, although the journal citation report is a powerful auxiliary for actual bibliometric classification based on journals' citation exchanges [2.83]. The model of citation index inspired Elsevier's Scopus [2.84, 2.85]. The Google Scholar alternative, with a larger scope of less normalized sources, is the extreme case with very little supervision and does not include a classification scheme.

Following Narin's works, several journal classifications were developed (factor analysis in [2.86], core–periphery clustering in [2.87]). Many others have been proposed over the past decades, some with overlay facilities for positioning activities [2.88]. Other proposals use prior categories and expert judgments as seeds [2.89, 2.90], with reassignment of individual papers. Boyack and Klavans, whose experience covers mapping and clustering at several granularity levels (journals, papers) [2.91], recently reviewed seven journal-level classifications (Elsevier/Scopus (All Science Journal Classification), UCSD (University of California San Diego), Science-Metrix, (Australian Research Council), ECOOM (The Center for Research and Development Monitoring), (Web of Science), NSF, (Journal IDentification) and ten article-level classification (five from ISI and Center for Research Planning (CRP), four from MapOfScience, one from CWTS (Center for Science and Technology Studies)) [2.92]. The latter authors privilege the concentration of references in review articles (\(> {\mathrm{100}}\) references) considered as gold standard literature, as an accuracy measure (a heavy hypothesis). They conclude in favor of paper-level (versus journal-level approaches) and in favor of direct citations (versus cocitations or bibliographic coupling) for long-term smoothed taxonomies, distinguished from current literature analysis, for which they rank first bibliographic coupling.

Those developments mark a new turn in the competition between institutional classification and bibliometric approaches for long-term classifications of science. It is not clear, however, whether the variety of classifications from bibliometric research, not always publicly available, can supersede the quasistandards of SCI type for current use in bibliometric studies. High-quality delineation of fields cannot solely rely on journal-level granularity, and this is still more conspicuous for emerging and complex domains.

2.4.2 Semantic Resources

Science institutions and database producers have a continuous tradition of maintenance of linguistic and semantic resources, in relation to document indexing. The best known is probably the (Medical Subject Headings; National Library of Medicine) used in Medline/PubMed. INSPEC, CAS, and now Public Library of Science ( ) offer such resources. Controlled vocabulary and indexes, archetypal tools of traditional IR search were also the main support of new coword analysis in the 1980s. A revival of controlled vocabulary and linguistic resources is observed in recent works, associated to the description of scholarly documents [2.93] and bibliometric mapping [2.94]. We shall return to the role of statistical tools in the shaping of semantic resources.

2.5 Conclusion

Science, seen through scientific networks, is highly connected, including long-range links reflecting interdisciplinary relations of many kinds. Global maps of science, with the usual reservation on methods settings and artifacts, display a kind of continuity of clouds along preferential directions (Fig. 2.2a-cc, from [2.47]). The extension of domains has to be pragmatically limited by IR trade-off with the help, in the absence of ground truth, of more or less heavy supervision. Three models of delineation appear: ready-made delimitation in databases, rather limited and rigid as is, but prone to creative diversions from strict model A (coclassification, etc.); model B, ad hoc search strategies combining several types of information; model C, by extraction of the field from a more extended map, regional or global.

Networks of science may locally show cases of domains ideal for trivial delineation: a perfect correspondence between the target and ready-made categories, or insulated continents surrounded by sea. Such domains will not require sophisticated delineation. This is the exception not the rule.

Areas such as environmental studies, nanosciences, biomedicine, information and cognitive sciences and technologies (converging NBIC, concept coined by NSF in 2002) exhibit both internal diversity and strong multidisciplinary connections. Commissioned studies often target emerging and/or high-tech strategic domains which witness science in action prone to socioscientific controversies à la Latour. These areas combine high levels of instability and interdisciplinarity. As to transdisciplinarity, the question arises of whether to include SSH and alternative sources in data sources and panels of experts.

3 Tools: Information Retrieval (IR) and Bibliometrics

This section focuses on some technical approaches to the delineation problem: information retrieval and bibliometric mapping. They share the same basic objects and networks, chiefly actors and affiliations, publication supports, textual elements, and citation relations. Although the general principles of bibliometric relation studies are quite well established, new techniques from data analysis and network analysis, including fast graph clustering, open new avenues for achieving delineation tasks on big data at the fine-grained level. The quality of results remains an open issue. Domain delineation confronts or combines the three approaches previously stated: ready-made categories (model A) are seldom sufficient; we shall envision ad hoc IR search (model B) with an occasional complement of ready-made categories; and bibliometric processes of mapping/clustering along the lines of model C.

3.1 IR Term Search

The question of delineation spontaneously calls for a response in terms of information-retrieval search. The only particularity is the scale of the search or more exactly, as mentioned before, the diversity expected in large domains, which is particularly demanding for the a priori framework of information search . The verbal description of the domain requires, beforehand, an intellectual model of the area. In addition to the methodological background brought by IR models, a broad range of search techniques address delineation issues:

-

Ready-made solutions in the most favorable cases, with previously embodied expertise, sketched above.

-

Search strategies of various levels of complexity, also depending on the type of data, relying on expert's sayings.

-

Multistep protocols: Query expansion, combination with bibliometric mapping.

IR models are outside the scope of this chapter. In the tools section below, we recall some of the techniques shared by IR and bibliometrics, especially the vector-space-derived models.

3.1.1 IR Tradeoff at the Mesolevel

The recall–precision trade-off is particularly difficult to reach at the mesolevel of domains exhibiting high diversity. Generic terms (say the nano prefix if we wish to target nanosciences and technology) present an obvious risk to precision. A collection of narrower queries (such as self-assembly, quantum dots, etc.) is expected to achieve much better precision. In the simpler Boolean model, this will privilege the union operator of subarea descriptors (examples for nanoscience [2.33, 2.95, 2.96]). However, nothing guarantees a goodness of coverage of the whole area by this bottom-up process. An a priori supervision of the process by a panel of experts is required, but the experts' specialization bias, especially in diverse and controversial areas, generates a risk of silence. Similar risks are met in the selection of training sets in learning processes. Another shortcoming is the time-consuming nature of supervision, again worsened by the diversity and multidisciplinarity of the domain. A light mapping stage beforehand may reduce the risk of missing subareas. As mentioned above, focused IR searches are, in contrast, able to retrieve weak signals lost in hard clustering.

3.1.2 Polyrepresentation and Pragmatism

Scientific texts contain rich information, most of it made searchable in the digital era. Pragmatically, all searchable parts of a bibliographic record, data or metadata are candidates for delineating domains: word \(n\)-grams in titles, abstracts, and full texts; authors, affiliations, date, journal or book, citations, acknowledgements, transformed data (classification codes, index, controlled vocabulary, related papers…) depending on the database. These various elements exhibit quite different properties. In theoretical terms, the variety of networks associated to these elements are one aspect of the polyrepresentation of scientific literature [2.97]. We will return to this question later (Sect. 2.3.2). A specific advantage of lexical search is the easy understanding of queries—whereas other elements (aggregated elements such as journals; citations) are more indirect. However, the ambiguity of natural language reduces this advantage.

Bibliometric literature is packed with examples of pragmatic delineation of domains based on IR search. By and large, apart from ready-made schemes when available (indexes, classification codes), a typical exploration combines a search for specialized journals if any, and a lexical search in complement. At times, an author-affiliation entry is used, especially in connection with citation data. Bradford and Lotka ranked lists are therefore good auxiliaries, with evident precautions on journals' or authors' degree of specialization .

3.1.3 Granularity

We noted above that some ready-made classifications such as the SCI scheme (journals or journal issues) are essentially based on full journals—or journal sections. These ready-made categories very seldom fit the needs of targeted studies. Instead, ad hoc groupings of selected journals relatively easy to set up with the help of experts, are a convenient starting point within a Bradfordian logic. The journal level presents obvious advantages. Journals exhibit a relative stability in the medium term; they are institutionalized centers of power through gatekeeping, and a (controversial) evaluation entity in the impact factor tradition.

However, the journal level is problematic for delineation studies. Journals whose specialization is such that they indisputably belong to the target domain, can be taken as a whole, but of course target domain literature are rarely covered by specialized journals only, and investigations should be extended to moderately or heavily multidisciplinary sources. Conditions of diversity and multidisciplinarity—which prevail in the targets of studies where elaborate delineation is worthwhile—hinders the efficiency of global Bradford/Lotka-based selections, with problems of normalization (refer also to [2.98]). We will return to these issues in the Sect. 2.3.2 devoted to clustering and mapping.

To conclude, the IR resources in scientific texts, data and metadata, suggest a polyrepresentation of scientific information (cognitive model [2.97]), which is akin to the multinetwork representation of the scientific universe. Ingwersen and Järvelin [2.99, p. 19] propose a typology of IR models and the perspective of the cognitive actor. IR protocols generally involve multistep approaches, with various core–periphery schemes. In conventional search, heavy ex ante supervision is needed for covering the variety of domains, ideally with good analytic/semantic capability. In the absence of a gold standard, proxy measures of relevance are needed.

3.1.4 Multistep Process

Multistep processes, possibly associated with combinations of various bibliometric attributes, are run-of-the-mill procedures (for example [2.32]).

Core–periphery rationale is common, in accordance with the selective power of concentration laws, both in IR and bibliometrics (journal cores in [2.100], cocitation cores in [2.101], \(h\)-core in [2.102], emerging topics in [2.103]). For example, working on highly cited objects—authors, journals, or articles—gives a set of reasonable size, amenable to further expansion with enhanced recall. Cores inspired from the Price law on Lotka distributions or from application of the \(h\)-index are helpful. Proxies such as seeds obtained from initial high-precision search stages can do as well. The core or seed expansion process is global or cluster-based. The risk of core–periphery schemes, by and large favorable to robustness, is to miss lateral or emerging signals. This may need some input of dynamic characterization of hotspots at the fine granularity level.

A parent method is bibliometric expansion on citations, which also uses information from a first run (set of documents retrieved by a search formula or a prior top cited selection, considered as the core) to enhance the recall through the citation connections, typically operating at the document level with or without a clustering/mapping step. In this line the Lex\(+\)Cite approach mentioned in Sect. 2.3 relies on a default global expansion, rather than a cluster-based one, to limit the risk of an exclusive focus on cluster-level signals that would miss across-network bridges.

Query expansion by adaptive search is along the same lines. Interactive retrieval with relevance feedback identifies the terms, isolated or associated (co-occurrences), specifically present in the most relevant documents retrieved according to various measures [2.104, 2.105, 2.106]. An efficient but heavy process consists in submitting the output of a search stage to data analysis/topic modeling , able to reconstruct the probable structure likely to have generated the data. By providing information on the linguistic context—also citation, authoring context, etc.—they in turn help to improve the search formulas by a kind of retro-querying. This ranges from simple synonym detection to construction of topics, orthogonal or not, suggesting the rephrasing of queries. Variants of itemset mining uncovering association rules ([2.107], with earlier forerunners) are promising in this respect (see below). Evaluation of output from unsupervised stages can also call for a manual improvement of queries.

Delineation protocols may also use the seed as a training set for learning algorithms. A difference is that core–periphery schemes usually rely on the selective power of bibliometric laws, whereas the training set might be extracted on various sampling methods, provided that the seed does not miss the variety of the target. As big data grows bigger, semisupervised approaches are gaining popularity in the machine learning community. This recent approach should prove attractive in the bibliometrics community, as there seems to be considerable interest in linking metadata groups and algorithmically defined communities [2.108].

To conclude on this part, whilst typical IR search relies on an a priori understanding of the field, multistep schemes involve stages of data analyses quite close to bibliometric mapping practices, the topic of the next subsection. IR and bibliometrics share roots and features, which soften the differences: adaptive loops, learning processes, seed-expansion, and core–periphery schemes. Bibliographic coupling, at the very origin of bibliometric mapping, came from the IR community [2.109] and the cluster hypothesis about relevant versus nonrelevant documents [2.110] voices the common interests of IR and bibliometrics, beyond the background methodology of information models (Boolean, vector space, or probabilistic) and general frameworks such as the above-mentioned cognitive model. The tightening of bibliometrics–IR relations has been echoed in a series of workshops and in dedicated issues of Scientometrics ([2.111, 2.112], see also [2.113] for a focus on domain delineation) and in the International Journal on Digital Libraries [2.114].

3.2 Clustering and Mapping

In contrast with conventional IR search, bibliometric mapping starts at a larger extension level than the targeted domain. This broad landscape, typically built by unsupervised methods, is scrutinized by experts to rule out irrelevant areas. The supervision task is limited to the postmapping stage. This is in principle less demanding than the a priori conception of a search formulation or of a training set. The default solution is a zoomable general or regional map of science, with availability and cost constraints. The alternative is the construction of a limited overset including almost certainly the anticipated domain, using a general search set for massive recall, an operation much lighter than setting up a precise search formula. In terms of scale, the final result is tantamount to the outcome of a top-down elimination process, although the selection modalities are diverse. There is currently great interest in delineation through mapping. IR and mapping are complementary in various ways. Firstly, we briefly describe the data analysis toolbox, before addressing the main bibliometric applications and a few problematic points.

3.2.1 Background Toolbox

The data structure of matrices in the standard bibliometric model allows scholars to mobilize the large scope of automatic clustering, factor/postfactor methods, and graph analysis. Classical methods of clustering and factor analysis continue to be used in bibliometrics, but in the last decade(s) novel methods came of age, more computer-efficient and fit for big data, an advantage for mapping science and delineating large domains. Starting with bibliometric data of the standard model and some metrics of proximity or distances, clustering and community detection methods produce groups. Elements are mapped using various dimension reduction algorithms. Factor methods produce groups through clustering applied to factor loadings, with an integrated two-dimensional (2-D) or three-dimensional (3-D) display when just two or three factors are needed in the analysis.

A major driving force of bibliometric methodology is the general network theory, which took large networks of science, especially collaboration and citation, as iconic objects [2.115, 2.116, 2.117]. Quite a few mechanisms have been proposed to explain or generate scale-free networks since Price's cumulative advantage model for citations [2.118] along the lines of Yule and Simon, and later studied in new terms (preferential attachment) by Albert and Barabási [2.119], see also [2.120]. These models have some common features with the Watts–Strogatz small worlds model, but also differences that are empirically testable [2.121]. Amongst other mechanisms: homophily [2.122], geographic proximity [2.123], thematic proximity inferred from linguistic or citation proximity. Börner et al reviewed a few issues in science dynamics modeling [2.124]. Of great interest in bibliometrics and especially delineation, community detection algorithms exhibit a general validity beyond real social networks, and belong to the general toolbox of mathematical clustering and graph theory—applicable to various markers of scientific activity, document citations, words, altmetric networks, etc., see also [2.120].

Hundreds of clustering and mapping methods have been designed during one century of uninterrupted research. This section can only provide a basic overview of the main method families, in the perspective of domain delineation. More comprehensive descriptions and references, as well as a basic benchmark of various methods, applied to a sample of textual data, can be found in [2.125].

3.2.1.1 Clustering Methods

Although hierarchical clustering algorithms sometimes seem old-fashioned because of their computing complexity, \(O(n^{2})\) in the very best cases, some of them show good performances for relative small universes. For large ones, they can be coupled beforehand to data-reduction stages, classical (SAS Fastclus \(O(n)\)), preclustering algorithms for big data (Canopy clustering [2.126]), or sampling methods. All-science bibliometric maps use rather faster algorithms today, not without limitations however. Discipline-level maps, or simply internal clustering of the domain set at various stages of delineation may still rely on the classical techniques.

Hierarchical ascending algorithms are local, deterministic and produce hard clusters, with a few exceptions (pyramidal classification), properties favorable to dynamic representations. They do not constrain the number of clusters and provide a multiscale view through embedded partitions, with some indication of robustness of forms in scale changes. Most hierarchical descending (divisive) methods are heavier. Hierarchical methods typically rely on ultrametrics, which has downsides, see [2.125].

Amongst popular methods in bibliometrics are ascending methods: single linkage, average linkage, and Ward. Single linkage is relatively fast and exhibits good mathematical properties in relation to spanning trees but produces disastrous chain effects which must be limited in various ways. Ward and especially group average linkage give better results. Group average linkage, advocated for bibliometric sets by Zitt and Bassecoulard [2.127] and used by Boyack and Klavans in various works [2.128], is slightly biased towards equal variance and is not too sensitive to outliers. Ward is biased towards equal size with a strong sensitivity to outliers. Properties and biases were studied especially by Milligan [2.129, 2.130] using Monte Carlo techniques.

Density methods are appealing: deterministic too, local, and as such prone to dynamic representations of publication or citation flows. [2.131] (density-based spatial clustering of applications with noise) is the most popular to the point of becoming synonymous with density clustering. The SAS clustering toolbox includes hierarchical methods with prior density estimation, with good properties towards sampling and the ability to capture elongated or irregular classes. However, this property is disputable in bibliometric uses (Sect. 2.3.2, Shape/Properties of Clusters). More recently, density peaks [2.132] has implemented an original and graphical semiautomatic procedure for determining the cluster seeds.

Not directly hierarchical is the venerable \(K\)-means clustering family, still popular, thanks both to its excellent time/memory performance and sensitivity to different cluster densities. A shortcoming of not being deterministic, they converge to local optima of their objective function, depending on their random (or supervised) initialization. In comparative analyses, they are not considered too sensitive to outliers. They optionally allow for soft/fuzzy clusters, and approximate dynamic data-flow analysis.

Factor methods are basically dimension-reduction techniques, indirectly linked to the partition problem. A quick-and-dirty heuristics for extracting a limited number \(k\) of dominant clusters from \(k\) factors consists of assigning each entity to the factor axis which maximizes the mode of its projection, subject to the constraint of a common factor sign for the majority of entities assigned to this cluster—which eliminates few of them in practice. For a more rigorous procedure, see the descending hierarchical clustering method Alceste [2.133] in the dataspace of correspondence analysis. Factor methods rely on the mathematical foundation of singular value decomposition ( ) of data matrices for reducing dimensionality and filtering noise. The interesting metrics used by correspondence analysis ( [2.134]) explains the attention over half a century from many scholars in relation to mapping or clustering limited to a few dominant factor dimensions. Dropping this limit, i. e., taking into account factor spaces with hundreds of dimensions [2.135], latent semantic analysis (LSA [2.136]) unleashed the potential of singular value decomposition and fostered the integration of semantics in textual applications, in a lighter but more convenient form than handmade ontologies, costly to edit and update.

Hybrid factor/clustering methods, sometimes coined topic models , result in representing each cluster as a local, oblique factor, with a progressive scale from core elements to peripheral ones, opened to fuzzy or overlapping interpretations or extensions. Generally powered by the expectation maximization algorithm ( ), they converge to local optima, too. Non-negative matrix factorization ( ) and self-organizing maps ( ) are well-known examples. Axial \(k\)-means ( in [2.137]) has been used in a comparative citations/words bibliometric context (Sect. 2.4).

Also known as topic models, the probabilistic models try to lay solid statistical foundations for their hybrid-looking representation: they produce explicit generative probabilistic models for the utterance of topics and terms [2.138]. Probabilistic LSA (pLSA in [2.139]) and latent Dirichlet allocation ( in [2.140]) are the best-known examples, claiming good semantic capabilities. The older fuzzy C-means method ( ) is akin to this family, which uses the EM scheme for converging to local optima of their objective function.

The graph clustering family, also known as network analysis, or community detection methods, does not operate on the raw (entities \(\times\) descriptors) matrix, as the previous families do, but on the square (entities \(\times\) entities) similarity matrix, whose visual counterpart is a graph. Most of these methods operate directly on the graph, detecting cliques or relaxed cliques (modal classification), e.g., Louvain [2.141], InfoMap [2.142], and smart local moving algorithm ( in [2.143]). Some of them operate on the reduced Laplacian space drawn from the graph (spectral clustering [2.144]). Quite a few comparative studies are available [2.145, 2.146, 2.147].

3.2.1.2 Note on Deep Neural Networks

While neural networks were somewhat in standby mode during the 1995–2005 decade, challenged by more manageable mathematical methods, several factors like the pressure of big data availability and progress in hardware ( , i. e., graphics processing units) triggered a renewal under the banners deep neural nets and deep learning. Allowing learning by backpropagation of errors in many layers networks, they gave form to the dream of knowledge acquisition by growing levels of abstraction: for images, extraction of local features; contours, homogeneous areas, shapes; for written language: character \(n\)-grams, words, word \(n\)-grams, expressions/phrases, sentences. Typically, they avoid heavy natural language processing ( ) preprocessing (parsing, unification, weighting, selection…). These techniques are already widely used in supervised learning, with spectacular progress in automatic translation, face recognition, listening/oral comprehension, with important investment from the largest internet-related companies (e.g., Google, Apple, Facebook, Amazon), especially. As far as informetrics and IR are concerned, the main domain impacted so far is logically large-scale retrieval ([2.148] which uses a robust letter-trigram-based word-\(n\)-gram representation). There have also been some attempts in relation to nonsupervised processes for information retrieval [2.149].

A promising technique is neural word embeddings ( ). Millions of texts now available online make it possible to develop vector representations of words in a semantic space in a more elaborate way than LSA—a method coined neural word embeddings. For example, the Word2Vec algorithm [2.150] processes raw texts so as to list billions of words-in-context occurrences (e.g., word \(+\) previous word \(+\) next word), then factorize [2.151] the word \(\times\) context matrix (tens of thousands of words, a few hundreds of thousands, or millions of unique contexts) and extract some hundreds or thousands of semantic and syntactic dimensions. We will return later to the semantic capabilities of NWE.

3.2.1.2.1 Note on the Definition of Distances

Whether starting from a binary presence/absence matrix or from occurrence or co-occurrence counts, some methods embed a specific weighting scheme, i. e., a metric, for computing distances, or similarities between items. This is the case of probabilistic models, correspondence analysis, and axial \(K\)-means. Other methods allow for a limited and controlled choice, as aggregative hierarchical methods do. In the case of graph clustering methods, the user may freely choose his preferential distance definition prior to building the adjacency matrix, which adds an extra degree of freedom beyond the choice of the degree of nonlinearity, via a threshold value. For word-based matrices, heavier than citation-based ones, the methods of the \(k\)-means family also make it possible to choose a weighting scheme (Salton's term frequency-inverse document frequency (TF-IDF ), Okapi BestMatch25 [2.152]).

Whereas factor/SVD methods combine the metrics and mapping capability, e.g., two-factor planes or 3-D displays, at the native granularity level (e.g., document \(\times\) words), other mapping algorithms may operate on rectangular or on square (distance) matrices of elements or on groups from a clustering stage, or institutional aggregates (journals). Families of mapping techniques rely on various principles: equilibrium between antagonistic forces—repulsion between nodes, attraction alongside edges (e.g., the Fruchterman and Reingold algorithm [2.153], implemented in Gephi [2.154], alone or combined with clustering (Sandia VxOrd/DrL/OpenOrd [2.155], CWTS VOSviewer [2.143])); optimization of diverse functions: projection stress minimization in the case of (multidimensional scaling), with Euclidean distances in the case of metric MDS, a variant of (principal component analysis), and other distances or nonlinear functions of these distances in the case of nonmetric MDS, one of the nonlinear unfolding techniques; maximizing inertia in the case of Correspondence analysis, minimizing edge-cuts in a 2-D projection plane; or maximizing local edge densities [2.156].

3.2.1.2.2 Itemset Techniques

Itemset techniques are used for describing a data universe in terms of simple procedures, typically Boolean queries with AND, OR, and NOT operators. This may be used for building a stable procedural equivalent of data, e.g., for updating a delineation task (like probabilistic factor analyses). It may also be used for query expansion, as mentioned above in Sect. 2.3.1. The problem amounts to duplicating a reference partition in a new universe: machine learning techniques are basically fit to this problem, and, in the particular context of textual descriptions, itemset techniques. They are akin to generating Boolean queries with AND, OR, and NOT operators, for extracting approximations of the delineated domain, within precision and recall limits established in the machine learning phase [2.107, 2.157].

3.2.1.3 A Benchmark

To illustrate the capabilities of these various methods with an example, in the absence of a bibliometric dataset labeled with indisputable ground truth classes, we turned towards a reference dataset popular in the machine learning community, the Reuters 21 578 ModApté split (the corpus description is available online at http://www.daviddlewis.com/resources/testcollections/rcv1/. The website http://www.cad.zju.edu.cn/home/dengcai/Data/TextData.html has made a preprocessed version of this corpus available to the public, as supplementary material to [2.158]). The main features are:

-

Source: A set of short texts: newswires from Reuters' press.

-

Contents: In the six-class selection used, the number of texts (\(\approx{\mathrm{7000}}\)) and terms (\(\approx{\mathrm{4000}}\)) is sufficient with regards to text statistics.

-

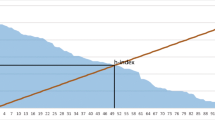

Class structure considered as ground truth: Built by experts, visually glaring in Fig. 2.3: two big classes, one very dense, the other not, and four small classes, two of which are linked together. In this way, two major problems of real-life datasets are addressed: the imbalance between cluster sizes, and between cluster densities.

We challenge 17 clustering/mapping methods to retrieve this class structure. The similarity of their cluster solution to ground truth partition is measured by two indicators, adjusted Rand index ( [2.159]) and normalized mutual information ( [2.160]). The results are detailed in [2.125]. Let us summarize them in a user-oriented view, sorted by number of required parameters: the lesser the better, ideally, facing a bibliometric dataset without prior knowledge, no parameter:

-

Two methods of network analysis require no internal parameterization, Louvain and InfoMap. However, the similarity matrix generally requires a threshold setting, here fixed to \(\mathrm{0.1}\) in the cosine intertext similarity matrix. Infomap obtains the best result in terms of NMI (\(\mathrm{0.436}\) value versus \(\mathrm{0.423}\)), the index considered the best match for human comparison criteria. This value is rather poor, and this method does not distinguish classes 1, 2, 3, 4, and splits class 6.

-

Nine methods require one parameter: The three hierarchical clusterings need a level cut parameter, possibly adjusted for 6 resulting clusters, while for CA, NMF, AKM, (probabilistic latent semantic analysis), LDA and spectral clustering, the number of desired clusters (6) has to be specified. As the latter group converges to local optima, we kept the best results in terms of their own objective function out of 20 runs. The indisputable winner is average link clustering, in both ARI (\(\mathrm{0.62}\)) and NMI (\(\mathrm{0.71}\)) terms. The lists of the four following challengers are contrasted: with regard to ARI, first Mac Quitty hierarchical clustering (\(\mathrm{0.50}\)), then LDA, AKM, CA; with regard to NMI, first AKM (\(\mathrm{0.51}\)), then Mac Quitty, CA, LDA. If one optimizes ARI over all 20 runs with prior knowledge of the six-clusters structure—a heroic hypothesis—, average link clustering still performs best (with a ten-clusters cut, ARI \(={\mathrm{0.71}}\), NMI \(={\mathrm{0.64}}\)) while the followers reach, at best, ARI \(={\mathrm{0.55}}\) and NMI \(={\mathrm{0.55}}\).

-

The last group of methods ( (independent component analysis), DBSCAN, FCM, affinity propagation, SLMA, density peaks) require at least two parameters, a handicap in the absence of prior knowledge of the corpus structure. SLMA obtains the best rating (ARI \(={\mathrm{0.60}}\), NMI \(={\mathrm{0.55}}\)).

Our general conclusion is that one must be very cautious regarding domain delineation resulting from one run of one method. Multiple samplings, if necessary, and level cuts of average links as well as multiple runs of LDA, AKM, and SLMA may help determine core clusters, and possibly continuous gateways between them. Limitations of this benchmark exercise should be kept in mind. It would benefit from tests on different reference datasets: any method can be trapped in particular data structures, and the results cannot be extrapolated without caution. As advocated below, processing multiple sources (lexical, citations, authors …) and investigating the analogies and differences in their results will always prove rewarding. A number of in-depth benchmarking studies are found for hierarchical clustering (Milligan [2.129, 2.130] not covering the last techniques), discussing the generation of test data as well as comparisons of algorithms. For community detection, usually taken as a synonym of graph-based clustering rather than clustering of true social (actors) communities, [2.145] ranked first Infomap, then Louvain and Pott's model approach [2.161]. Leskovec et al [2.146] studied the behavior of algorithms with increasing graph size. Yang and Leskovek [2.147] reflect on the principles of clustering outcomes compared to institutional classifications.

3.2.2 Bibliometric Mapping

3.2.2.1 Classical Way

Most classical bibliometric mapping, as well as information retrieval, relies on substantive (feature) representations of words, word combinations, citation, indexes, and so forth. Substantive representation implies legibility and interpretation by experts or users, and a condition for bibliometricians or sociologists to check and possibly deconstruct the document linkages. It contrasts with featureless machine representation applicable for example to distances of texts (see below). In contrast, the substantive approach is deepened in semantic studies: ontologies and semantic networks suppose more elaborate investigation of term relationships. Bibliometric mapping and IR techniques are both a client of ready-made semantic resources, and providers of studies, supported by data analyses, likely to help the construction of thesauri and ontologies.

The standard bibliometric model starts from the data structure of articles, essentially a series of basic article \(\times\) attributes matrices, one of these reflexive: article \(\times\) cited references, where references can also stand as attributes. The derived article \(\times\) article matrices (e.g., bibliographic coupling, lexical coupling) and elements \(\times\) elements matrices (e.g., coword or profiles, cocitation or profiles) cover a wide range of needs. Clusters of words are candidates for conceptual representation, concepts which in turn can index the documents. Likewise, clustering of cited articles reveal intellectual structures and in turn index the citing universe. Basically, the attributes (words from title, abstract, full text; keywords list, indexes—other fields like authoring) are processed in bags of monoterms or multiterms, recognized expressions or word \(n\)-grams. Standard bibliometric treatments rarely go further, semantic studies do, for example by using chain modeling of the texts. All these forms allow for control and interpretation of linguistic information.

Assuming that the final purpose is to classify or delineate literature, the access is dual: direct classification of articles after their profile on the structuring elements (words, cited references), or a detour by the structuring items: word profile (especially coword), citation profile (cocitation), index (or class profile) including coclassification, when applicable. The basics of citation-based mapping were established in the 1960s and the 1970s: bibliographic coupling [2.109], chained citations [2.162], cocitation [2.101, 2.163], author cocitation [2.164], coclassification, etc. The lexical counterpart, with its first technical foundations in Salton's pioneer works [2.165], was reinvested by English and French social constructivism in the 1980s [2.166, 2.167, 2.168] with a stress on local network measures quite in line with the development of social network analysis in that period [2.169]. In bibliometrics, the true metric approach of text-based classification, Benzécri's correspondence analysis [2.134], remained confidential. For convenience reasons, many large-scale classifications relied on proximity indexes and MDS or hierarchical single-linkage (ISI cocitation). We return later to word–citation comparison and combination (Sect. 2.4).

3.2.2.2 Developments

The principles above, mutatis mutandis, are kept in further developments of citation mapping: the approach through citation exchanges, mentioned in Sect. 2.2, assumes predefined entities, journals for example. At the article level, symmetrical linkages between articles, or between structuring elements, are classical: large-scale cocitation (CiteSpace [2.170]). Glänzel and Czerwon [2.171] advocated bibliographic coupling. As already mentioned, direct citation linkage clustering, the first benchmark for cocitation and coupling in Small's princeps paper [2.101], is considered as particularly able to reflect long-period phenomena [2.172, 2.173, 2.92] but not short-term evolutions. It turns out that the time range picked and the granularity of groupings desired might suggest the choice between the three families of citation methods to reflect structure and changes in science.

From the theoretical point of view, cocitation (respectively coword) is semantically superior to coupling, by visualizing the structure of the intellectual (cognitive) base, but requires a secondary assignment of current citing literature. Coupling as such, because it by default spares the dual analysis (the cited structure; the lexical content), is semantically poor but bibliographic coupling handles immediacy better than cocitation does. However, this depends on the computer constraints and the settings: the thresholding unavoidable in cocitation analysis drastically reduces weak signals that are accounted for in coupling. The dependence of the maximum retrieval on the threshold of citation and the assignment strength (number of references), in a close field, is modeled in [2.59]. Quite a few authors compared the methods empirically [2.128] over a short time range, [2.173, 2.174]. These studies are not always themselves comparable in their criteria, nor are they convergent in their outcome, so that it is difficult to come to a conclusion on this basis alone.

The new data analysis toolbox (fast graph unfolding, topic modeling) gradually pervades large-scale studies. From the domain delineation perspective, a general answer in terms of single best cannot be expected. The benchmark above reminds us that classical methods, apparently outdated in the big data era, still prove to perform quite well. Let us recall a few issues in clustering/mapping for bibliometric purposes, especially delineation.

3.2.3 A few Clustering/Mapping Issues

As other decision-support tools, maps in bibliometrics receive contrasted interpretations. In a social constructivist view, maps are mainly viewed as tools of stimulation of sociocognitive analysis and also as supports of negotiation with/amongst actors. If technicalities are not privileged, there is clear preference for local network maps, preferably lexical or actors-based, connected to sociocognitive thinking. Bibliometricians and librarians are keener on quantitative properties and retrieval performances. Expectations as to ergonomics, granularity, robustness, clusters properties, and semantic depth, largely vary depending on the type of study.

3.2.3.1 Ergonomics

Map usage benefits from new displays with interaction facilities. A tremendous variety of mapping methods is available ([2.175] although in practice a few efficient solutions prevail). The progress in interfaces (scale zooms, bridges between attributes, interaction with users…) changed the landscape of mapping. If adding cluster features to cluster maps is trivial [2.176], the systematization of overlay maps by Leydesdorff and Rafols [2.177] is quite appealing. Since delineation tasks often deal with multidisciplinarity, multiassignments, and cluster expansion, various types of cross-representations (Sect. 2.4) including overlay maps are quite convenient tools for discussion.

3.2.3.2 Granularity