Abstract

Traditional bridge inspection methods require lane closures, inspection equipment, and, most importantly, the experience and knowledge of inspectors. This increases not only inspection cost and time but also the risk to the travelling public. Because traditional inspection is lengthy and costly, a backlog of inspection activities has been growing. In this research, we design an unmanned aerial vehicle (UAV) assisted bridge defect inspection system, in which a UAV captures images and transmits them to a ground station for further analysis. The system is divided into two subsystems: an electromechanics & communication system and an image processing system. The electromechanics & communication system provides self-locating, flight control, image transmission, and human intervention functions. The image processing system performs image preprocessing and defect extraction, and produces the inspection report. If put into practice, this system could reduce inspection cost by up to 70%. We believe that UAV-assisted bridge inspection will become widespread in the future.

This work was supported in part by the National Natural Science Foundation of China under Grants 61773381, 61773382 and 61533019; Chinese Guangdong’s S&T project (2016B090910001, 2017B090912001); 2016 S&T Benefiting Special Project (No. 16-6-2-62-nsh) of Qingdao Achievements Transformation Program; Dongguan’s Innovation Talents Project (Gang Xiong, Jian Lu), and Guangdong Innovative Leading Talents Introduction Program (00201511).

1 Introduction

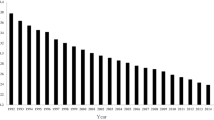

Bridges play an important role in the transportation network. According to the Ministry of Transport of the People’s Republic of China, there are over 800,000 bridges in China. To ensure the continued service capability of bridges and the safety of the travelling public, bridges must be inspected regularly and the corresponding data recorded. Bridge inspection usually includes defect detection on the deck, superstructure, and substructure. According to the Code for Maintenance of Highway Bridges and Culverts (JTG H11), bridges should undergo regular inspection every 3 months and periodic inspection once every 3 years. To date, 16,623 bridge assessment records have been accumulated (Li et al. 2014). Traditionally, bridge inspections are conducted manually under traffic control, using under-bridge inspection units, mobile scaffolding, boom lifts, and cherry pickers. Because of this labor-intensive approach, the inspection of the Jiaozhou Bay Bridge costs around RMB 1,412,000, including a bridge inspection vehicle rental fee of RMB 1,184,000, a bridge inspection ship rental fee of RMB 8,000, and a traffic blocking cost of RMB 14,800. Moreover, the conventional inspection method is inaccurate, as it depends heavily on the experience and knowledge of inspectors. A more efficient, economical, and accurate bridge inspection method is therefore desirable.
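As a quick check on the Jiaozhou Bay Bridge figures, the three itemized costs sum to RMB 1,206,800, so about RMB 205,200 of the quoted total is not broken down in the text. The short Python sketch below (the variable and function names are illustrative only) computes each item's share of the total; vehicle rental alone accounts for roughly 84% of it.

```python
# Cost items for the Jiaozhou Bay Bridge inspection as quoted in the text (RMB).
TOTAL_RMB = 1_412_000
ITEMIZED_RMB = {
    "inspection vehicle rent": 1_184_000,
    "inspection ship rent": 8_000,
    "traffic block": 14_800,
}


def cost_breakdown(total, items):
    """Return each item's share of the total and the amount not itemized."""
    shares = {name: amount / total for name, amount in items.items()}
    unitemized = total - sum(items.values())
    return shares, unitemized


shares, unitemized = cost_breakdown(TOTAL_RMB, ITEMIZED_RMB)
for name, share in shares.items():
    print(f"{name}: {share:.1%}")
print(f"not itemized: RMB {unitemized:,}")
```

The text does not say what the unitemized remainder covers, so the sketch only reports it.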

Because of their high mobility, UAV systems have been widely applied in infrastructure management, including traffic monitoring, construction safety inspection, and 3-D photogrammetric modelling (Irizarry et al. 2012). UAVs are also commonly used for aerial photography and light-load transportation.

Our UAV-assisted bridge defect inspection system consists of three main parts: the UAV with its functional units, the ground station, and the interaction between the UAV and the ground station (see Fig. 1).

The UAV incorporates multiple electronic devices, such as a mainboard, gyroscope, communication unit, and Lidar, and carries a pan-tilt camera so that it can perform image capture automatically. The ground station extracts defects from images, generates inspection reports, and remotely controls the UAV. The UAV and the ground station exchange commands, information, and status. The ground station sends commands to the UAV; generally, a command adjusts the attitude (pitch, roll, and yaw) of the UAV or modifies the behavior of an onboard electromechanical device, for example zooming in the camera.

This research covers all three parts of the system. We study the hardware configuration, control framework, and sensor fusion of the UAV, and describe the communication and image processing methods. The rest of the paper is structured as follows. Section 2 reviews related work. Section 3 describes the UAV and the UAV-ground station interaction. Section 4 presents the image processing methods and results. Section 5 concludes the paper.

2 Related Work

Related projects and literature fall into two categories: UAV development and applications, and the practice of assessing the visual condition of items. We review both and briefly state our practical contribution.

UAVs can execute observation or detection missions under automatic or remote control. Existing UAV applications mainly involve mapping, environmental change monitoring, disaster response, resource exploration, etc. For example, Murphy et al. (2008) used unmanned sea-surface and micro-aerial vehicles together after Hurricane Wilma in 2005. The effort identified strategies for cooperation between unmanned sea-surface and micro-aerial vehicles, as well as open issues for autonomous operation near structures. The aerial vehicle was equipped with a robust control system to maintain its performance in strong wind. Campoy et al. (2009) discussed civilian tasks in which UAVs can be utilized. Companies such as AIBOTIX (Germany) offer UAV solutions for wind turbine and power line inspection; one AIBOTIX example is a hexarotor with a protected frame and an upward-facing camera, operated manually with the help of live video. Alighanbari et al. (2003) presented the coordination of multiple UAVs for complex tasks with timing constraints. Beyond this work, much progress has also been made in UAV power management, UAV navigation, and UAV telemetry control systems. In our case, the UAV must take clear pictures at a specific place and from a specific angle despite poor lighting and wind beneath the bridge deck, which places higher demands on automation.

Visual inspection is an important part of industrial quality control. In 1983, the United States Department of Energy sponsored Honeywell to develop a metal surface defect detection device. The device used a linear CCD array, and its digital image processing software could effectively detect small defects on metal surfaces. Automated visual inspection systems are now used in many industrial and commercial applications, for example for defect detection in ceramic tiles (Rahaman and Hossain 2009), textured materials (Kumar and Pang 2002), and textile fabrics (Mak et al. 2005). Many techniques have been employed for detecting surface defects (Zhang et al. 2011) and defects in digital texture images (Sivabalan et al. 2010). The American company Cognex developed the iS-2000 automatic detection system and the iLearn software to detect surface defects. In our study, bridge images have a relatively low SNR (signal-to-noise ratio) because of environmental limitations, and defects are not very distinct in the images. In addition, since this is an engineering project, the system needs to be user-friendly and well packaged. These are the main challenges we face.

3 Electromechanics and Communication System

A UAV's shape, mechanisms, configuration, and characteristics can be tailored to task requirements. For bridge defect inspection, we designed the electromechanics and communication system shown in Fig. 2. The system is powered by a battery, and a battery eliminator circuit (BEC) regulates the voltage. The controller drives the motors (UAV motors, tilt motor, and roll motor) to change the attitude of the UAV and the camera. Sensors feed information back to both the controllers and the ground station through signals with little delay or noise, since delay or noise would cripple the control performance. Images are sent to the ground station via a data transmitter. The rest of this section discusses navigation, flight control, image capture, signal transmission, and the self-protection mechanism.

3.1 Navigation

The bridges we inspect in this study usually span several to tens of kilometers (e.g., the Jiaozhou Bay Bridge is 36.48 km long). By “navigation” we mean that the UAV flies from the ground station, arrives at a specific position along the bridge span, and keeps a certain distance from the structure while capturing images. For this, the UAV carries a GPS unit, a Real Time Kinematic (RTK) unit, a Lidar, and a camera, along with a communication system. GPS is a space-based radio navigation system owned by the United States government; on its own, a civilian GPS receiver typically achieves only meter-level accuracy. Real Time Kinematic (RTK) satellite navigation is a technique used to enhance the precision of position data derived from GPS. An RTK-GPS receiver (Leica MC1000) has a nominal accuracy of ±1 cm + 1 ppm for horizontal displacements and ±2 cm + 2 ppm for vertical displacements. Capturing high-quality images of a bridge substructure also requires the UAV to stay within a certain range at an appropriate distance from the structure, which is why the Lidar, the camera, and the communication system are needed. The Lidar perceives distance, measuring how far the UAV is from the substructure. The formula relating the camera focal length to this distance is discussed later in this section (Sect. 3.3). The measured distance and the camera view of the surroundings can be sent back to the ground station in real time, and the operator can remotely control the UAV based on this feedback.
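The "± a + b ppm" accuracy specification has a fixed part plus a part proportional to the baseline, i.e. the distance between the RTK base station and the rover (here, the UAV). Since 1 ppm of 1 km is 1 mm, the error grows by b millimeters per kilometer of baseline. The Python sketch below evaluates the quoted Leica MC1000 figures; the baseline values are hypothetical, with 2 km chosen to match the radio working range mentioned in Sect. 3.4.

```python
def rtk_error_mm(baseline_km: float, base_mm: float, ppm: float) -> float:
    """Nominal RTK error: fixed term plus a term proportional to baseline length.

    1 ppm of 1 km = 1e-6 * 1e6 mm = 1 mm, so the proportional term in
    millimeters is simply ppm * baseline_km.
    """
    return base_mm + ppm * baseline_km


for km in (0.5, 2.0, 10.0):  # hypothetical base-station-to-UAV distances
    h = rtk_error_mm(km, base_mm=10.0, ppm=1.0)  # ±1 cm + 1 ppm horizontal
    v = rtk_error_mm(km, base_mm=20.0, ppm=2.0)  # ±2 cm + 2 ppm vertical
    print(f"baseline {km:>4} km: horizontal ±{h:.1f} mm, vertical ±{v:.1f} mm")
```

At the spans considered here the fixed term dominates: even a 10 km baseline only adds 1 cm horizontally.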

3.2 Flight Control

The UAV uses PID-based double closed-loop control (see Fig. 3). The outer loop controls position and the inner loop controls attitude. Position control combines proportional control on the distance error with PID control on the velocity error; attitude control combines proportional control on the attitude angle error with PID control on the angular velocity error. An extended Kalman filter fuses data from the inertial measurement unit (IMU), barometer, and RTK-GPS to estimate the flight state, which is passed to the flight control system. Based on the flight command and the estimated flight state, the flight control system generates motor speed control signals and sends them to the ESCs (Electronic Speed Controllers), which change the motor speeds. The Lidar can detect obstacles within 100 m, which helps the UAV avoid collisions. The communication system transmits UAV information and images to the ground station in real time so that personnel can assess the UAV state. The bridge inspection UAV supports two flight modes: manual and automatic. Manual mode, in which personnel control the UAV directly, is used for inspecting targeted areas. Automatic mode requires a 3D model of the bridge to be imported into the system beforehand; the UAV then flies the route automatically. This mode is used for regular inspection and maintenance.
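The nested structure of one such loop pair (proportional control on the outer error producing a setpoint for PID control on its rate) can be sketched for a single position axis. This is a minimal illustration, not the authors' controller: it assumes an idealized point mass whose acceleration equals the command, and the gains, speed limit, and time step are hypothetical. The attitude loop has the same shape, with angle in place of position and angular velocity in place of velocity.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PID:
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, error: float, dt: float) -> float:
        self.integral += error * dt
        # No derivative on the first sample, to avoid a spurious spike.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


@dataclass
class CascadedAxisController:
    """Outer loop: P on position error -> velocity setpoint.
    Inner loop: PID on velocity error -> acceleration command."""
    kp_pos: float
    vel_pid: PID
    max_vel: float

    def update(self, pos_sp: float, pos: float, vel: float, dt: float) -> float:
        vel_sp = self.kp_pos * (pos_sp - pos)
        vel_sp = max(-self.max_vel, min(self.max_vel, vel_sp))  # limit commanded speed
        return self.vel_pid.update(vel_sp - vel, dt)


def simulate(target: float = 10.0, dt: float = 0.02, steps: int = 3000):
    """Drive an idealized point mass (acceleration = command) toward `target`."""
    ctrl = CascadedAxisController(
        kp_pos=1.0, vel_pid=PID(kp=2.0, ki=0.5, kd=0.0), max_vel=2.0
    )
    pos, vel = 0.0, 0.0
    for _ in range(steps):
        acc = ctrl.update(target, pos, vel, dt)
        vel += acc * dt  # semi-implicit Euler integration
        pos += vel * dt
    return pos, vel


pos, vel = simulate()
print(f"final position {pos:.3f} m, final velocity {vel:.3f} m/s")
```

Splitting the loops this way lets the fast inner loop reject disturbances such as gusts before they accumulate into position error, while the outer loop only has to produce a bounded velocity setpoint.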

3.3 Image Capture

Images are captured by a camera mounted on a gimbal. The gimbal reduces camera vibration and adjusts the camera orientation through the roll and tilt motors. If necessary, an LED can be turned on to obtain a clear image. In Fig. 3 we show the image capture mechanism. The camera-to-bridge distance for image capture and the image acquisition interval are derived from a formula in terms of the following quantities:

- H: camera-structure distance (mm)
- δ: measurement resolution (mm)
- f: camera focal length (mm)
- t: overlap length (mm)
- γ: pixel spacing of photoelectric sensor (mm)
- L: acquisition distance interval (mm)
- n: pixel number of collector
- d: pixel diameter of collector (mm)
- l: target surface diameter of collector (mm)
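The relations between several of these quantities can be illustrated with the standard pinhole-camera model; this is a sketch under that assumption and may differ from the authors' own formula. Under the pinhole model the object-space resolution is δ = Hγ/f, so H = fδ/γ, and a frame n pixels wide covers nδ of the structure, so captures spaced L = nδ − t apart overlap by t. The symbols d and l are not used in this sketch.

```python
def capture_distance_mm(f_mm: float, delta_mm: float, gamma_mm: float) -> float:
    """Pinhole model: camera-structure distance H giving resolution delta.

    delta = H * gamma / f  =>  H = f * delta / gamma
    """
    return f_mm * delta_mm / gamma_mm


def acquisition_interval_mm(n_pixels: int, delta_mm: float, overlap_mm: float) -> float:
    """Spacing L between captures: image footprint n * delta minus the overlap t."""
    footprint = n_pixels * delta_mm
    if overlap_mm >= footprint:
        raise ValueError("overlap must be smaller than the image footprint")
    return footprint - overlap_mm


# Hypothetical values: 35 mm lens, 5 um pixels, 0.2 mm target resolution,
# 4000 pixels across the frame, 100 mm overlap between consecutive images.
H = capture_distance_mm(f_mm=35.0, delta_mm=0.2, gamma_mm=0.005)
L = acquisition_interval_mm(n_pixels=4000, delta_mm=0.2, overlap_mm=100.0)
print(f"H = {H:.0f} mm, L = {L:.0f} mm")
```

With these hypothetical values the camera would be about 1.4 m from the structure and shots would be taken every 0.7 m; the Lidar distance reading is what allows H to be held near such a target in flight.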

3.4 Communication

Sensor data, flight control commands, and bridge defect images are transmitted through the communication system. Timely transmission of sensor data and flight control commands is especially crucial: when the UAV is far beyond visual range, the sensor data and camera video are the only basis for control commands, so transmission delay misrepresents the state of the UAV and can cause danger. For this reason, we chose radio rather than Wi-Fi as our transmission method. Wi-Fi communication uses a handshaking mechanism between the UAV and the ground station: each data packet must be delivered successfully, and missing or incomplete packets are resent. These mechanisms delay the information and limit the UAV to working within 500 m, with an obvious delay already observed beyond 200 m. Unlike Wi-Fi, radio is a one-way transmission method, which extends the working range from 500 m up to 2 km.
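Why retransmission hurts latency can be seen with a simple stop-and-wait model. This is an illustrative sketch, not a model of the authors' Wi-Fi link: it assumes each attempt is lost independently with probability p and that every lost attempt costs a full timeout before the resend. The number of failed attempts before success is then geometric with mean p/(1 − p), so the expected delivery latency is the one-way delay plus timeout × p/(1 − p). A one-way radio link instead drops lost frames, and the next telemetry frame replaces them. All parameter values below are hypothetical.

```python
def arq_expected_latency_ms(one_way_ms: float, timeout_ms: float, loss_prob: float) -> float:
    """Expected delivery latency of one packet under stop-and-wait retransmission.

    Failures before success are geometric with mean p / (1 - p); each failure
    costs one timeout, and the successful attempt costs the one-way delay.
    """
    if not 0.0 <= loss_prob < 1.0:
        raise ValueError("loss_prob must be in [0, 1)")
    return one_way_ms + timeout_ms * loss_prob / (1.0 - loss_prob)


# Hypothetical link: 20 ms one-way delay, 100 ms retransmission timeout.
for p in (0.0, 0.1, 0.2, 0.5):
    arq = arq_expected_latency_ms(20.0, 100.0, p)
    print(f"loss {p:.0%}: retransmitting link ~{arq:.0f} ms, "
          f"one-way link 20 ms for the {1 - p:.0%} of frames that arrive")
```

Since packet loss generally rises with distance, the expected latency of the retransmitting link grows without bound as p approaches 1, whereas the one-way link trades occasional lost frames for a fixed delay, which suits continuously refreshed telemetry and video.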

3.5 Self-protection Mechanism

When an error occurs, the self-protection mechanism is activated. For minor errors, such as a temporary device malfunction, an alarm signal is sent to the ground station and manual intervention is recommended for controlling the UAV. For severe errors, such as physical damage or low battery, the UAV returns to its launch point.
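The two-level policy above amounts to a mapping from error severity to action. The Python sketch below shows that mapping; the error names are hypothetical labels for the examples given in the text, and treating unrecognized errors as severe is an added assumption, not something the paper states.

```python
from enum import Enum


class Severity(Enum):
    MINOR = "minor"
    SEVERE = "severe"


class Action(Enum):
    ALERT_GROUND_STATION = "alert ground station; recommend manual control"
    RETURN_TO_LAUNCH = "return to launch point"


# Hypothetical error labels for the examples named in the text.
ERROR_SEVERITY = {
    "device_temporary_malfunction": Severity.MINOR,
    "physical_damage": Severity.SEVERE,
    "low_battery": Severity.SEVERE,
}


def self_protection_action(error: str) -> Action:
    # Assumption: anything not explicitly classified is handled as severe.
    severity = ERROR_SEVERITY.get(error, Severity.SEVERE)
    if severity is Severity.MINOR:
        return Action.ALERT_GROUND_STATION
    return Action.RETURN_TO_LAUNCH


print(self_protection_action("low_battery").value)
```

Defaulting to the conservative action means a new, unclassified fault brings the UAV home rather than leaving it on station.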

4 Image Processing

Bridge inspection images usually have a relatively low signal-to-noise ratio (SNR) and low contrast, since defects often have the same color as the structure and are very small compared with the whole structure. This section describes the image processing procedure of our system (see Fig. 4). The procedure has two parts: pre-processing and defect extraction. Pre-processing consists of gradient processing and gray-scale stretching, while defect extraction consists of Fisher thresholding and clustering analysis. Finally, a report containing the images with defects highlighted, along with location and time information, is presented to users. Note that all images processed here are grayscale (Fig. 5).

4.1 Gradient Processing

Gray levels are not evenly distributed in inspection images, and defects have a relatively large gradient compared with the background. These features allow us to segment the image by gradient and perform a preliminary defect extraction at this stage. Several operators are commonly used for this purpose; their pros and cons are listed in Table 1.

We choose the Canny operator because it is relatively insensitive to noise. Its computational burden is not a concern, because image processing is performed at the ground station after the UAV inspection flight is finished. Note that we do not binarize the images at this stage: pixels whose gradient exceeds the threshold are set to the same gray value, while pixels below the threshold are left unchanged to preserve defect information.
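The "mark, but do not binarize" step can be sketched in plain Python. For brevity this sketch swaps the Canny operator for a plain Sobel gradient magnitude (approximated as |Gx| + |Gy|); Canny adds Gaussian smoothing, non-maximum suppression, and hysteresis thresholding on top of such a gradient. The mark value of 255 and the threshold are assumptions, since the text says only that strong-gradient pixels are set to "the same color".

```python
def sobel_magnitude(img):
    """Approximate gradient magnitude |Gx| + |Gy| with 3x3 Sobel kernels.

    `img` is a list of rows of gray values; border pixels get magnitude 0.
    """
    h, w = len(img), len(img[0])
    mag = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = (img[y - 1][x + 1] + 2 * img[y][x + 1] + img[y + 1][x + 1]
                  - img[y - 1][x - 1] - 2 * img[y][x - 1] - img[y + 1][x - 1])
            gy = (img[y + 1][x - 1] + 2 * img[y + 1][x] + img[y + 1][x + 1]
                  - img[y - 1][x - 1] - 2 * img[y - 1][x] - img[y - 1][x + 1])
            mag[y][x] = abs(gx) + abs(gy)
    return mag


def mark_strong_gradients(img, threshold, mark_value=255):
    """Set pixels whose gradient exceeds `threshold` to one gray value and
    leave every other pixel unchanged (no binarization)."""
    mag = sobel_magnitude(img)
    return [[mark_value if m > threshold else v for v, m in zip(row, mrow)]
            for row, mrow in zip(img, mag)]


# A 5x5 image with a vertical step edge between gray levels 100 and 20.
image = [[100, 100, 100, 20, 20] for _ in range(5)]
for row in mark_strong_gradients(image, threshold=100):
    print(row)
```

Keeping the unmarked pixels at their original gray values leaves faint defect texture available to the later stretching and thresholding steps.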

4.2 Gray-Scale Stretch

Gray-scale stretching enhances contrast. It linearly maps the gray-level range of the image onto a wider range.
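A common form of this linear mapping sends the image's gray range [g_min, g_max] onto a target range [G_min, G_max] via g' = G_min + (g − g_min)(G_max − G_min)/(g_max − g_min). The Python sketch below assumes an 8-bit target range of [0, 255]; the paper does not state the target range it uses.

```python
def gray_stretch(img, out_min=0, out_max=255):
    """Linearly map the image's gray range [g_min, g_max] onto [out_min, out_max]."""
    g_min = min(min(row) for row in img)
    g_max = max(max(row) for row in img)
    if g_max == g_min:  # flat image: nothing to stretch
        return [row[:] for row in img]
    scale = (out_max - out_min) / (g_max - g_min)
    return [[round(out_min + (v - g_min) * scale) for v in row] for row in img]


# A low-contrast patch occupying only gray levels 0..51.
print(gray_stretch([[0, 25], [51, 10]]))
```

Because the mapping is linear and monotonic, the ordering of gray levels (and hence any later threshold's meaning) is preserved; only the spacing between levels grows.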

4.3 Fisher Thresholding

Image segmentation extracts meaningful regions from the background; in our case, it extracts defects from bridge inspection images. Given the nature of these images (defects are varied and usually small, and image noise is considerable), we use the Fisher criterion function to set the threshold. After this step, the images are binarized.
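One common form of the Fisher criterion for a single gray-level threshold t splits pixels into class 0 (values ≤ t) and class 1 (values > t) and maximizes J(t) = (μ0 − μ1)² / (σ0² + σ1²), i.e. the squared distance between class means relative to their combined spread. The paper does not give its exact criterion, so the unweighted variances here are an assumption, as is treating bright pixels as defects when binarizing.

```python
def fisher_threshold(pixels):
    """Exhaustively pick t maximizing J(t) = (mu0 - mu1)^2 / (var0 + var1),
    where class 0 holds values <= t and class 1 holds values > t."""
    levels = sorted(set(pixels))
    best_t, best_j = levels[0], -1.0
    for t in levels[:-1]:  # keep both classes non-empty
        c0 = [p for p in pixels if p <= t]
        c1 = [p for p in pixels if p > t]
        mu0, mu1 = sum(c0) / len(c0), sum(c1) / len(c1)
        var0 = sum((p - mu0) ** 2 for p in c0) / len(c0)
        var1 = sum((p - mu1) ** 2 for p in c1) / len(c1)
        denom = var0 + var1
        j = float("inf") if denom == 0 else (mu0 - mu1) ** 2 / denom
        if j > best_j:
            best_t, best_j = t, j
    return best_t


def binarize(img, t):
    """1 for pixels brighter than t (assumed defect marks), else 0."""
    return [[1 if v > t else 0 for v in row] for row in img]


# Mostly dark background with a small bright population.
pixels = [10] * 50 + [12] * 50 + [200] * 10 + [202] * 10
t = fisher_threshold(pixels)
print(t, binarize([[10, 200], [12, 202]], t))
```

Unlike a fixed threshold, the criterion adapts to each image's histogram, which helps when lighting under the deck varies from shot to shot.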

4.4 Clustering Analysis

Clustering analysis groups and highlights defect areas and filters out trivial noise. After Fisher thresholding, defect points are widely scattered; clustering groups these scattered points into a few defect areas, and cluster centers with few surrounding defect points are discarded as noise.
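The paper does not name its clustering algorithm. As one simple way to realize "group scattered points, then drop sparse clusters", the Python sketch below uses single-pass leader clustering followed by a size filter; the radius and minimum cluster size are hypothetical parameters, and the result depends on the order in which points are visited.

```python
import math


def cluster_points(points, radius, min_points):
    """Single-pass leader clustering of (x, y) defect pixels.

    Each point joins the first cluster whose current center lies within
    `radius`, and that center is updated to the mean of its members;
    otherwise the point starts a new cluster. Clusters with fewer than
    `min_points` members are discarded as noise.
    """
    clusters = []  # each cluster: {"center": (cx, cy), "members": [...]}
    for p in points:
        for c in clusters:
            if math.dist(p, c["center"]) <= radius:
                c["members"].append(p)
                n = len(c["members"])
                c["center"] = (sum(q[0] for q in c["members"]) / n,
                               sum(q[1] for q in c["members"]) / n)
                break
        else:  # no nearby cluster: start a new one
            clusters.append({"center": p, "members": [p]})
    return [c for c in clusters if len(c["members"]) >= min_points]


points = [(0, 0), (1, 0), (0, 1), (1, 1), (2, 1),    # one defect area
          (50, 50), (51, 50), (50, 51), (51, 51),    # another defect area
          (100, 0)]                                  # isolated noise pixel
for c in cluster_points(points, radius=5, min_points=3):
    print(c["center"], len(c["members"]))
```

The surviving cluster centers and member lists give exactly what a report needs to draw a highlight box around each defect area.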

4.5 Experimental Results

The bridge inspection images after each processing step are shown in Figs. 6, 7, 8 and 9.

The images after clustering analysis, with defects highlighted, are included together with location, time, and other information in the bridge inspection report, which our system generates automatically.

5 Conclusion

The UAV-assisted bridge inspection system presented in this paper helps relieve the costly, labor-intensive nature of current bridge inspection, a problem of high practical relevance. By introducing a UAV, the inspection work can be performed with the help of its electromechanics and communication system. Currently, defect identification relies on inspectors’ experience and knowledge, but image processing can play an increasingly important role; it has been adopted in civil infrastructure monitoring over the past few years. Our work shows the potential for automated inspection.

In future work, we plan to build more integrated, user-friendly software. There is much more to explore in our inspection data, which can also serve as an important reference for other tasks. We hope our system will not only produce defect inspection reports but also incorporate inspection planning and engineering-economics decision-making modules.

References

Technical code of maintenance for city bridges, China, CJJ

Li, L., Sun, L., Ning, G.: Deterioration prediction of urban bridges on network level using Markov-chain model. Math. Probl. Eng. 2014(7), 1–10 (2014)

Irizarry, J., Gheisari, M., Walker, B.N.: Usability assessment of drone technology as safety inspection tools. J. Inf. Technol. Constr. 17(1), 194–212 (2012)

Civil UAV Real-name Registration Regulation by Civil Aviation Administration of China: http://www.caac.gov.cn/XXGK/XXGK/GFXWJ/201705/P020170517409761154678.pdf

Civil UAV Application Regulation by Civil Aviation Administration of China: http://www.caac.gov.cn/HDJL/YJZJ/201708/t20170809_46115.html

Civil UAV Manufacturing Standards by Ministry of Industry and Information Technology of the People’s Republic of China: http://www.miit.gov.cn/n1146285/n1146352/n3054355/n3057585/n3057590/c5653876/content.html

Civil UAV Traffic Rules by Ministry of Transport of the People’s Republic of China: http://zizhan.mot.gov.cn/sj/fazhs/zongheyshlf_fzhs/201711/t20171128_2942432.html

Murphy, R.R., Steimle, E., Griffin, C., Cullins, C., Hall, M., Pratt, K.: Cooperative use of unmanned sea surface and micro aerial vehicles at Hurricane Wilma. J. Field Robot. 25(3), 164–180 (2008)

Campoy, P., Correa, J.F., Mondragón, I., Martínez, C., Olivares, M., Mejías, L., Artieda, J.: Computer vision onboard UAVs for civilian tasks. J. Intell. Robot. Syst. 54(1–3), 105–135 (2009)

Alighanbari, M., Kuwata, Y., How, J.P.: Coordination and control of multiple UAVs with timing constraints and loitering. In: Proceedings of the 2003 American Control Conference, vol. 6, pp. 5311–5316 (2003)

Suresh, B.R.: A real-time automated visual inspection system for hot steel slabs. IEEE Trans. Pattern Anal. Mach. Intell. 5(6), 563–572 (1983)

Xie, X.: A review of recent advances in surface defect detection using texture analysis techniques. Electron. Lett. Comput. Vis. Image Anal. 7(3), 1–22 (2008)

Rahaman, G.M.A., Hossain, M.M.: Automatic defect detection and classification technique from image: a special case using ceramic tiles. Int. J. Comput. Sci. Inf. Secur. 1(1), 22–30 (2009)

Kumar, A., Pang, G.K.H.: Defect detection in textural materials using Gabor filters. IEEE Trans. Ind. Appl. 38(2), 425–440 (2002)

Mak, K.L., Peng, P., Lau, H.Y.K.: A real time computer vision systems for detecting defects in textile fabrics. IEEE Trans. (2005)

Sivabalan, K.N., Ghanadurai, D.: Detection of defects in digital texture images using segmentation. Int. J. Eng. Sci. Technol. 2(10), 5187–5191 (2010)

Zhang, G., Chen, S., Liao, J.: Otsu image segmentation algorithm based on morphology and wavelet transformation. IEEE Trans. 1, 279–283 (2011)


Copyright information

© 2018 IFIP International Federation for Information Processing

About this paper

Cite this paper

Yang, S. et al. (2018). UAV Assisted Bridge Defect Inspection System. In: Shi, Z., Pennartz, C., Huang, T. (eds) Intelligence Science II. ICIS 2018. IFIP Advances in Information and Communication Technology, vol 539. Springer, Cham. https://doi.org/10.1007/978-3-030-01313-4_43

DOI: https://doi.org/10.1007/978-3-030-01313-4_43

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-01312-7

Online ISBN: 978-3-030-01313-4

eBook Packages: Computer Science, Computer Science (R0)