Abstract

Improving Importance Sampling estimators for rare event probabilities requires sharp approximations of the optimal density leading to a nearly zero-variance estimator. This paper presents a new way to handle the estimation of the probability of a rare event defined by the fact that the empirical mean of summands of a random walk belongs to a measurable set. We provide a sharp approximation of the optimal conditional density on long runs.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

11.1 Introduction and Context

In this paper, we consider efficient estimation of the probability of large deviations of a multivariate sum of independent, identically distributed, light-tailed, and non-lattice random vectors.

Consider \(\mathbf{X}_{1}^{n}:= \left (\mathbf{X}_{1},\ldots,\mathbf{X}_{n}\right )\) n i.i.d. random vectors with known common density p X on \(\mathbb{R}^{d}\), \(d\geqslant 1,\) copies of \(\mathbf{X}:= \left (\mathbf{X}^{(1)},\ldots,\mathbf{X}^{(d)}\right ).\) The superscript (j) pertains to the coordinate of a vector and the subscript i pertains to replications. Consider also u a measurable function defined from \(\mathbb{R}^{d}\) to \(\mathbb{R}^{s}.\) Define U: = u(X) with density p U and

We intend to estimate for large but fixed n

where A is a non-empty measurable set of \(\mathbb{R}^{s}\) such as \(E[u\left (\mathbf{X}\right )]\notin A.\) In [3], the authors consider in detail the case where \(d = s = 1\), \(A:= A_{n} = (a_{n},\infty )\) and a n is a convergent sequence.

The basic estimate of P n is defined as follows: generate L i.i.d. samples X 1 n(l) with underlying density p X and define

where

The Importance Sampling estimator of P n with sampling density g on \(\left (\mathbb{R}^{d}\right )^{n}\) is

where \(\hat{P}_{n}(l)\) is called “importance factor” and can be written

where the L samples \(Y _{1}^{n}(l):= \left (Y _{1}(l),\ldots,Y _{n}(l)\right )\) are i.i.d. with common density g; the coordinates of Y 1 n(l) however need not be i.i.d. It is known that the optimal choice for g is the density of \(\mathbf{X}_{1}^{n}:= \left (\mathbf{X}_{1},\ldots,\mathbf{X}_{n}\right )\) conditioned upon \(\left (\mathbf{X}_{1}^{n} \in \mathcal{E}_{n}\right )\), leading to a zero variance estimator. We refer to [5] for the background of this section.

The state-independent IS scheme for rare event estimation (see [6] or [12]), rests on two basic ingredients: the sampling distribution is fitted to the so-called dominating point (which is the point where the quantity to be estimated is mostly captured; see [11]) of the set to be measured; independent and identically distributed replications under this sampling distribution are performed. More recently, a state-dependent algorithm leading to a strongly efficient estimator is provided by [2] when d = s, u(x) = x and A has a smooth boundary and a unique dominating point. Indeed, adaptive tilting defines a sampling density for the i−th r.v. in the run which depends both on the target event \(\left (\mathbf{U}_{1,n} \in nA\right )\) and on the current state of the path up to step i − 1. Jointly with an ad hoc stopping rule controlling the excursion of the current state of the path, this algorithm provides an estimate of P n with a coefficient of variation independent upon n. This result shows that nearly optimal estimators can be obtained without approximating the conditional density.

The main issue of the method described above is to find dominating point. However, when the dimension of the set A increases, finding a dominating point can be very tricky or even impossible. A solution will be to divide the set under consideration into smaller subset and, for each one of this subset, find a dominating point. Doing so makes the implementation of an IS scheme harder and harder as the dimension increases.

Our proposal is somehow different since it is based on a sharp approximation result of the conditional density of long runs. The approximation holds for any point conditioning of the form \(\left (\mathbf{U}_{1,n} = nv\right ).\) Then sampling v in A according to the distribution of U 1, n conditioned upon \(\left (\mathbf{U}_{1,n} \in nA\right )\) produces the estimator. By its very definition this procedure does not make use of any dominating point, since it randomly explores the set A. Indeed, our proposal hints on two choices: first do not make use of the notion of dominating point and explore all the target set instead (no part of the set A is neglected); secondly, do not use i.i.d. replications, but merely sample long runs of variables under a proxy of the optimal sampling scheme.

We will propose an IS sampling density which approximates this conditional density very sharply on its first components y 1, …, y k where k = k n is very large, namely \(k/n \rightarrow 1.\) However, but in the Gaussian case, k should satisfy \(\left (n - k\right ) \rightarrow \infty \) by the very construction of the approximation. The IS density on \(\left (\mathbb{R}^{d}\right )^{n}\) is obtained multiplying this proxy by a product of a much simpler state-independent IS scheme following [13].

The paper is organized as follows. Section 11.2 is devoted to notations and hypothesis. In Sect. 11.3, we expose the approximation scheme for the conditional density of X 1 k under \(\left (\mathbf{U}_{1,n} = nv\right )\). Our IS scheme is introduced in Sect. 11.4. Simulated results are presented in Sect. 11.5 which enlighten the gain of the present approach over state-dependent Importance Sampling schemes.

We rely on [7] where the basic approximation (and proofs) used in the present paper can be found. The real case is studied in [4] and applications for IS estimators can be found in [3].

11.2 Notations and Hypotheses

We consider approximations of the density of the vector X 1 k on \(\left (\mathbb{R}^{d}\right )^{k}\), when the conditioning event writes (11.1) and k: = k n is such that

Therefore we may consider the asymptotic behavior of the density of the random walk on long runs.

Throughout the paper the value of a density p Z of some continuous random vector Z at point z may be written p Z (z) or \(p\left (\mathbf{Z} = z\right ),\) which may prove more convenient according to the context.

Let p nv (and distribution P nv ) denote the density of X 1 k under the local condition \(\left (\mathbf{U}_{1,n} = nv\right )\)

where Y 1 k belongs to \(\left (\mathbb{R}^{d}\right )^{k}\) and v belongs to A.

We will also consider the density p nA (and distribution P nA ) of X 1 k conditioned upon \(\left (\mathbf{U}_{1,n} \in nA\right )\)

The approximating density of p nv is denoted g nv ; the corresponding approximation of p nA is denoted g nA . Explicit formulas for those densities are presented in the next section.

11.3 Multivariate Random Walk Under a Local Conditioning Event

Let ɛ n be a positive sequence such as

It will be shown that \(\varepsilon _{n}\left (\log n\right )^{2}\) is the rate of accuracy of the approximating scheme.

We assume that \(\mathbf{U}:= u\left (\mathbf{X}\right )\) has a density p U (with p.m. P U ) absolutely continuous with respect to Lebesgue measure on \(\mathbb{R}^{s}.\) Furthermore, we assume that u is such that the characteristic function of U belongs to L r for some \(r\geqslant 1.\)

Denote \(\underline{0}\) is the vector of \(\mathbb{R}^{s}\) with all coordinates equal to 0 and \(V (\underline{0})\) a neighborhood of \(\underline{0}.\)

We assume that U satisfy the Cramer condition, meaning

and define

and

as the mean and the covariance matrix of the tilted density defined by

where t is the only solution of m(t) = α for α in the convex hull of P U . Conditions on Φ U (t) which ensure existence and uniqueness of t are referred to steepness properties (see [1], p153 ff, for all properties of moment generating function used in this paper).

We now state the general form of the approximating density. Let v ∈ A and denote

with an arbitrary y 0 and π u v defined in (11.7).

For \(1\leqslant i\leqslant k - 1\), we recursively define \(g(y_{i+1}\vert y_{1}^{i})\). Set \(t_{i} \in \mathbb{R}^{s}\) to be the unique solution to the equation

where \(u_{1,i} = u(y_{1}) + \cdots + u(y_{i}).\)

Denote

and

for j, l and m in {1, …, s}. In the sequel, \(\varkappa _{(i,n)}\) will denote the matrix with elements \(\left (\varkappa _{(i,n)}^{j,l}\right )_{1\leqslant j,l\leqslant s}.\)

Denote

where C i is a normalizing factor, \(\mathfrak{n}_{s}\left (u(y_{i+1});\beta \alpha +v,\beta \right )\) is the normal density at u(y i+1) with mean β α + v and covariance matrix β. α and β are defined by

and

and γ defined by

Then

Theorem 1.

Assume(E1), (E2), (K1) and (K2).

-

Let Y 1 k be a sample from density p nv . Then

$$\displaystyle\begin{array}{rcl} p\left (\mathbf{X}_{1}^{k} = Y _{ 1}^{k}\vert \mathbf{U}_{ 1,n} = nv\right ) = g_{nv}(Y _{1}^{k})(1 + o_{ P_{nv}}(1 +\varepsilon _{n}(\log n)^{2}))& & {}\end{array}$$(11.12) -

Let Y 1 k be a sample from density g nv . Then

$$\displaystyle\begin{array}{rcl} p\left (\mathbf{X}_{1}^{k} = Y _{ 1}^{k}\vert \mathbf{U}_{ 1,n} = nv\right ) = g_{nv}(Y _{1}^{k})(1 + o_{ G_{nv}}(1 +\varepsilon _{n}(\log n)^{2}))& & {}\end{array}$$(11.13)

Remark 11.1.

The approximation of the density of X 1 k is not performed on the sequence of entire spaces \(\left (\mathbb{R}^{d}\right )^{k}\) but merely on a sequence of subsets of \(\left (\mathbb{R}^{d}\right )^{k}\) which contains the trajectories of the conditioned random walk with probability going to 1 as n tends to infinity. The approximation is performed on typical paths. For the sake of applications in Importance Sampling, (11.13) is exactly what we need. Nevertheless, as proved in [7], the extension of our results from typical paths to the whole space \(\left (\mathbb{R}^{d}\right )^{k}\) holds: convergence of the relative error on large sets imply that the total variation distance between the conditioned measure and its approximation goes to 0 on the entire space.

Remark 11.2.

The rule which defines the value of k for a given accuracy of the approximation is stated in Sect. 5 of [7].

Remark 11.3.

When the X i ’s are i.i.d. multivariate Gaussian with diagonal covariance matrix and u(x) = x, the results of the approximation theorem are true for \(k = n - 1\) without the error term. Indeed, it holds \(p(\left.\mathbf{X}_{1}^{n-1} = x_{1}^{n-1}\right \vert \mathbf{U}_{1,n} = nv) = g_{nv}\left (x_{1}^{n-1}\right )\) for all x 1 n−1 in \(\left (\mathbb{R}^{d}\right )^{n-1}\).

As stated above the optimal choice for the sampling density is p nA . It holds

so that, in contrast with [2] or [6], we do not consider the dominating point approach but merely realize a sharp approximation of the integrand at any point of A and consider the dominating contribution of all those distributions in the evaluation of the conditional density p nA .

11.4 Adaptive IS Estimator for Rare Event Probability

The IS scheme produces samples \(Y:= \left (Y _{1},\ldots,Y _{k}\right )\) distributed under g nA , which is a continuous mixture of densities g nv as in (11.11) with \(p\left (\mathbf{U}_{1,n}/n = v\vert \mathbf{U}_{1,n} \in nA\right )\) .

Simulation of samples U 1, n ∕n under this density can be performed through Metropolis–Hastings algorithm, since

turns out to be independent upon \(P\left (\mathbf{U}_{1,n} \in nA\right ).\) The proposal distribution of the algorithm should be supported by A.

The density g nA is extended from \(\left (\mathbb{R}^{d}\right )^{k}\) onto \(\left (\mathbb{R}^{d}\right )^{n}\) completing the n − k remaining coordinates with i.i.d. copies of r.v’s Y k+1, …, Y n with common tilted density

with \(m_{k}:= m(t_{k}) = \frac{n} {n-k}\left (v -\frac{u_{1,k}} {n} \right )\) and

The last n − k r.v’s Y i ’s are therefore drawn according to the state independent i.i.d. scheme in phase with Sadowsky and Bucklew [13].

We now define our IS estimator of P n . Let Y 1 n(l): = Y 1(l), …, Y n (l) be generated under g nA . Let

and define

in accordance with (11.3).

Remark 11.4.

In the real case and for A = (a, ∞), the authors of [3] show that under certain regularity conditions the resulting relative error of the estimator is proportional to \(\sqrt{n - k_{n}}\) and drops by a factor \(\sqrt{n - k_{n}}/\sqrt{n}\) with respect to the state independent IS scheme. In [8], the authors propose a slight modification in the extension of g nA which allows to prove the strong efficiency of the estimator (11.17) using arguments from both [2] and [3].

11.5 When the Dimension Becomes Very High

This section compares the performance of the present approach with respect to the standard tilted one using i.i.d. replications under (11.7) on an extension of a well-known example developed in [9] and in [10]. Let \(B:= \left (\mathcal{E}_{100}\right )^{d}\) which is the d-Cartesian product of \(\mathcal{E}_{100}\) defined by

We want to estimate P 100 = P[B] and explore the gain in relative accuracy when the dimension of the measured set increases. Consider 100 r.v.’s X i ’s i.i.d. random vectors in \(\mathbb{R}^{d}\) with common i.i.d. N(0. 05, 1) distribution. Our interest is to show that in this simple asymmetric case our proposal provides a good estimate, while the standard IS scheme ignores a part of the event B. The standard i.i.d. IS scheme introduces the dominating point a = t(0. 28, …, 0. 28) and the family of i.i.d. tilted r.v’s with common N(a, 1) distribution. It can be seen that a large part of B is never visited through the procedure, inducing a bias in the estimation. Indeed, the rogue path curse (see [9]) produces an overwhelming loss in accuracy, imposing a very large increase in runtime to get reasonable results. Under the present proposal the distribution of the Importance Factor concentrates around P 100 avoiding rogue path.

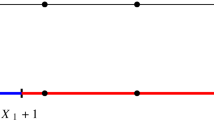

This example is not as artificial as it may seem; indeed, it leads to a 2d dominating points situation which is quite often met in real life. Exploring at random the set of interest avoids any search for dominating points. Drawing L i.i.d. points v 1, …, v L according to the distribution of U 1, 100∕100 conditionally upon B we evaluate P 100 with k = 99; note that in the Gaussian case Theorem 1 provides an exact description of the conditional density of X 1 k for all k between 1 and n. The following figure shows the gain in relative accuracy w.r.t. the state independent IS scheme according to the growth of d. The value of P 100 is 10−2d (Fig. 11.1).

Conclusion

In this paper, we explore a new way to estimate multi-constraints large deviation probability. In future work, the author will investigate the theoretical behavior of the relative error of our proposed estimator.

References

Barndorff-Nielsen, O.E.: Information and Exponential Families in Statistical Theory. Wiley, New York (1978)

Blanchet, J.H., Glynn, P.W., Leder, K.: Efficient simulation of light-tailed sums: an old-folk song sung to a faster new tune…. In: L’Ecuyer, P., Owen, A.B. (eds.) Monte Carlo and Quasi-Monte Carlo Methods, pp. 227–248. Springer, Berlin (2009)

Broniatowski, M., Caron, V.: Towards zero variance estimators for rare event probabilities. ACM TOMACS: Special Issue on Monte Carlo Methods in Statistics 23(1) (2013) [article 7]

Broniatowski, M., Caron, V.: Long runs under a conditional limit distribution. Ann. Appl. Probab (2014, to appear)

Bucklew, J.A.: Introduction to Rare Event Simulation. Springer Series in Statistics. Springer, New York (2004)

Bucklew, J.A., Ney, P., Sadowsky, J.S.: Monte Carlo simulation and large deviations theory for uniformly recurrent markov chains. J. Appl. Probab. 27(11), 49–61 (1990)

Caron, V.: Approximation of a multivariate conditional density (2013). arxiv:1401.3256

Caron, V., Guyader, A., Munoz, Z.M., Tuffin, B.: Some recent results in rare event estimation. In: ESAIM Proceedings (2013, to appear)

Dupuis, P., Wang, H.: Importance sampling, large deviations, and differential games. Stoch. Stoch. Rep. 76, 481–508 (2004)

Glasserman, P., Wang, Y.: Counterexamples in importance sampling for large deviations probabilities. Ann. Appl. Probab. 7(3), 731–746 (1997)

Ney, P.: Dominating points and the asymptotics of large deviations for random walk on \(\mathbb{R}^{d}\). Ann. Probab. 11(1), 158–167 (1983)

Sadowsky, J.S.: On Monte-Carlo estimation of large deviations probabilities. Ann. Appl. Probab. 9(2), 493–503 (1996)

Sadowsky, J.S., Bucklew, J.A.: On large deviations theory and asymptotically efficient Monte Carlo estimation. IEEE Trans. Inform. Theory 36(3), 579–588 (1990)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2014 Springer Science+Business Media New York

About this paper

Cite this paper

Caron, V. (2014). Importance Sampling for Multi-Constraints Rare Event Probability. In: Melas, V., Mignani, S., Monari, P., Salmaso, L. (eds) Topics in Statistical Simulation. Springer Proceedings in Mathematics & Statistics, vol 114. Springer, New York, NY. https://doi.org/10.1007/978-1-4939-2104-1_11

Download citation

DOI: https://doi.org/10.1007/978-1-4939-2104-1_11

Published:

Publisher Name: Springer, New York, NY

Print ISBN: 978-1-4939-2103-4

Online ISBN: 978-1-4939-2104-1

eBook Packages: Mathematics and StatisticsMathematics and Statistics (R0)