Abstract

The U.S. Supreme Court has not reexamined the test for admission of eyewitness identifications that are the product of suggestive procedures in over 35 years (Manson v. Brathwaite, 432 U.S. 98, 1977). Since then, there have been over 218 DNA-based exonerations of individuals who were mistakenly identified, and an extensive and rich scientific literature on eyewitness identification has emerged. This chapter reviews the original Manson ruling, using as an analytic framework the Court’s own justifications for implementing a Manson test for determining the admissibility of suggestively obtained identification evidence. The Court’s 1977 ruling was meant to be a safeguard against wrongful conviction, and we note how the DNA-based exonerations can only be a small fraction of the total cases of wrongful convictions based on mistaken identification. The flaws inherent in Manson, in light of the last 30 years of scientific research on eyewitness identification, are reviewed, and it is argued that Manson fails to provide an adequate safeguard against wrongful conviction based on mistaken identification. The two objectives of the Manson ruling, namely suppressing unreliable identifications and providing a disincentive for suggestive procedures, cannot be met with the basic approach inherent in Manson and paradoxically may incentivize police to use suggestive procedures.

The U.S. Supreme Court has not reexamined the test for admission of eyewitness identifications that are the product of suggestive procedures in over 35 years (Manson v. Brathwaite, 1977). Since then, there have been over 220 DNA-based exonerations of individuals who were wrongfully convicted on the basis of mistaken eyewitness identification (www.innocenceproject.org), and an extensive and rich scientific literature on eyewitness identification has emerged. We discuss the Court’s 1977 ruling, which was meant to be a safeguard against wrongful conviction, and we note how the DNA-based exonerations can only be a small fraction of the total cases of wrongful convictions based on mistaken identification. We then use the science of the last 30 years to show the ways in which the Manson ruling is flawed. We explain how the three objectives considered by the Court in the Manson ruling, namely presenting reliable evidence to the jury, ensuring the administration of justice, and deterring police use of suggestive procedures, cannot be met with the basic approach inherent in Manson. We then consider possible alternatives to Manson and describe two recent court cases that have rejected Manson in favor of other approaches to determining admissibility.

In my view, the Court's totality test will allow seriously unreliable and misleading [eyewitness identification] evidence to be put before juries. Equally important, it will allow dangerous criminals to remain on the streets while citizens assume that police action has given them protection (p. 128).

—Justice Marshall’s dissent in Manson v. Brathwaite (1977)

Justice Marshall’s 1977 written dissent represents a prescient analysis of the Manson ruling, which still stands today as the legal standard for determining the admissibility of suggestive eyewitness identification evidence. Although the Manson decision was marked by sharp disagreements between the majority and dissenting opinions, it has generally been applied uncritically by lower courts, both state and federal, in almost all jurisdictions throughout the country. Since Manson was decided, an extensive and rich scientific literature on eyewitness identification has emerged, and post-conviction DNA testing has uncovered 330 cases of wrongful conviction (as of August 20, 2015), the vast majority (over 70 %) of which involved mistaken identification (www.innocenceproject.org). Each of these cases represents a failure of the criminal justice system to protect innocent people from wrongful conviction based on faulty eyewitness-identification evidence. This chapter reviews the original Manson ruling, using as an analytic framework the Court’s own justifications for implementing a Manson test for determining the admissibility of suggestively obtained identification evidence. We describe the flaws inherent in Manson in light of scientific research on eyewitness identification and argue that Manson fails to provide an adequate safeguard against wrongful conviction based on mistaken identification and, paradoxically, may incentivize police to use suggestive procedures.

Throughout this chapter, we refer to eyewitness-identification procedures as being either suggestive or non-suggestive. In doing this, we do not mean to imply that suggestiveness is a binary, either/or concept. Instead, we recognize that suggestiveness is best construed as a continuous variable. For example, failing to instruct an eyewitness before a lineup that the actual culprit might not be present is considered a suggestive procedure. However, this procedure is still less suggestive than explicitly telling the witness that a suspect is in custody or that there is incriminating evidence against the suspect. Moreover, it could be argued that no identification procedure can be 100 % devoid of suggestion. After all, the mere presentation of a lineup suggests to an eyewitness that there is reason to suspect that someone in that lineup is the culprit. Accordingly, a low level of suggestion might be inherent and unavoidable in any identification procedure. For purposes of this chapter, we are not trying to quantify degrees of suggestion. Instead, we call a procedure suggestive if it reliably influences witnesses beyond the default level of suggestiveness that is inherent in even a properly conducted, unbiased lineup identification procedure.

Manson v. Brathwaite (1977) examined whether identification evidence that was obtained through the use of a suggestive single-photo display to the case’s sole witness—undercover agent Jimmy Glover—should have been suppressed. Officer Glover had participated in an undercover heroin purchase, during which he observed the seller through a 12- to 18-inch opening in an apartment door. After leaving the apartment, Glover provided a general description of the seller to fellow officer D’Onofrio, who—upon suspecting that the description matched that of Brathwaite—produced to Officer Glover a single photo of Brathwaite. Glover’s photo identification of Brathwaite became the primary evidence upon which Brathwaite was convicted.

The question at issue in Manson was not whether the single-photo identification procedure was impermissibly suggestive; both the Second Circuit and the Supreme Court concluded that it was. But whereas this type of suggestion would have warranted the automatic suppression of the identification under the per se exclusion rule that was in place prior to 1972, the Manson Court considered the application of a more lenient approach for assessing admissibility, which ultimately rested on the “totality of the circumstances.” This “totality” or “reliability” approach had its precedent in Neil v. Biggers (1972) and was based on the idea that an identification that was the product of suggestion could nevertheless be sufficiently reliable to warrant its admission into evidence.

The Manson Court upheld and reaffirmed this reliability approach, concluding that the admissibility of suggestively obtained identification evidence hinged ultimately on the reliability of the identification. Under this approach, once the defendant has established a threshold of impermissible suggestion, a balancing test is applied to weigh the corrupting influence of the suggestive procedures against various factors intended to assess reliability. These factors, first set forth in Biggers, include the witness’s opportunity to view the offender, the witness’s degree of attention during the crime, the accuracy of the witness’s description of the offender, the time elapsed between the crime and the pretrial identification, and the level of certainty demonstrated by the witness at the time of the identification.[Footnote 1] If, after weighing the procedure’s suggestion against the reliability factors, the Court determines that the suggestive procedure created a “very substantial likelihood of irreparable misidentification” (Simmons v. United States, 1968), the identification will be suppressed. In Brathwaite’s case, although the Court agreed that the single-photo procedure was impermissibly suggestive, it ruled on the basis of the five reliability factors that the identification was nevertheless reliable and hence admissible into evidence.

Relatively early in the scientific literature on eyewitness identification, the Biggers and Manson criteria for assessing reliability were called into question based on the science available at the time (Wells & Murray, 1983). As the science has progressed, the problems with the Manson approach have become even clearer. Recently, a number of works have reviewed the problems with Manson in light of empirical research on eyewitness identification (Smalarz & Wells, 2012; Wells & Quinlivan, 2009) and explored the reasons why motions to suppress suggestively obtained identification evidence virtually never succeed (Wells, Greathouse, & Smalarz, 2012). The present chapter draws on each of those works to examine the extent to which the Manson test has fulfilled the roles the Court originally intended it to serve. Specifically, the Manson Court’s justification for reaffirming a reliability approach over the per se exclusion rule centered on three main considerations: (1) the presentation of reliable and relevant evidence to the jury; (2) the administration of justice; and (3) the deterrence of police use of suggestive procedures. We argue in the current chapter that Manson permits the routine admission of flawed identification evidence, fails to administer justice, and acts as an incentive, not a deterrent, for police use of suggestion.

Manson Permits the Routine Admission of Flawed Identification Evidence

One of the primary concerns expressed by the Manson Court about the per se exclusion rule in place prior to Manson was that its automatic and preemptive application to identifications resulting from suggestive procedures would too often exclude relevant evidence from consideration and evaluation by the jury. Rather than requiring the automatic suppression of an identification when an unnecessarily suggestive procedure had been used, Manson opened the door to the consideration of “alleviating factors” for the evaluation of the evidence on the basis that reliability is the “linchpin” for determining admissibility.

There is nothing inherently wrong with the notion that, under some circumstances, an identification that was made in the presence of suggestive procedures might nevertheless be sufficiently reliable so as to warrant its admission into evidence. In particular, eyewitness scientists generally agree that if the eyewitness’s memory is strong enough, then suggestive procedures should not undermine reliability. Wells and Quinlivan (2009) gave an extreme example to support this idea. If a victim of an abduction had been held for 3 months during which the culprit’s face was viewed clearly and repeatedly, it is unlikely that the victim would identify someone simply because he was suggested by the police. In this extreme instance, a reliability approach would appropriately trump concerns about suggestiveness. However, these are not the types of situations in which Manson is routinely applied. Instead, eyewitnesses often observe crimes under suboptimal conditions—quickly, under duress, when it is dark, when the perpetrator is wearing a disguise, etc. Under these conditions—when memory strength is relatively weak—the potential effects of suggestion are of much greater concern and the logic of the Manson test begins to break down.

The Manson Court’s endorsement of the Biggers factors for evaluating reliability came at a time when relatively little was known about the psychological science of eyewitness memory and eyewitness identification. Today, we are much wiser. Decades of empirical eyewitness research have exposed severe flaws in the assumptions underlying the Court’s reasoning in Manson. First, the Court assumed that Manson would enable judges to identify and weed out unreliable identifications. Second, the Court assumed that juries would be capable of critically analyzing identification evidence, giving appropriate weight to factors such as suggestion when assessing the credibility of an eyewitness’s testimony. Eyewitness research now shows that Manson is virtually useless for weeding out unreliable identifications, especially when suggestion was present. Further, it shows that jurors are heavily persuaded by eyewitness testimony (whether accurate or mistaken) and that the typical safeguards in place at trial (e.g., cross-examination) do not help jurors differentiate between accurate and mistaken eyewitnesses.

Manson Fails to Weed Out Unreliable Identifications

The Manson admissibility test for identifying and weeding out unreliable identifications that are a product of suggestive procedures typically functions as a two-pronged inquiry.[Footnote 2] The first prong involves determining whether the identification evidence was obtained through the use of an unnecessarily suggestive procedure. If the procedure was not unnecessarily suggestive, then the inquiry ends and the identification evidence is admitted. If the procedure is found to have been suggestive, then the second prong of the inquiry is carried out, in which the corrupting influence of the suggestion is weighed against the five factors intended to assess reliability (view, attention, description, passage of time, and certainty).[Footnote 3] If on the basis of these five factors the judge believes that the identification was reliable despite the suggestion, then the identification will be admitted into evidence. Only in the event that the Court believes that the suggestive procedure produced a “very substantial likelihood of irreparable misidentification” (Simmons v. United States, 1968) will the identification be suppressed.

The First Prong of Manson: Was the Procedure Suggestive?

Manson’s two-pronged structure creates two sources of potential error that could lead to the admission of unreliable identification evidence. A “first-prong” error occurs when a judge rules that an identification procedure was not unnecessarily suggestive when in fact it was. There are a couple of reasons why this might occur. First, the judge (and, for that matter, the defense) might not be aware that the suggestion took place to begin with. Wells and Quinlivan (2009) cite a number of reasons why it is often very difficult or even impossible to establish that suggestion has occurred. Whereas some types of suggestion are readily discoverable because they inhere in the lineup procedure itself and are therefore documented (e.g., the use of poor lineup fillers or the absence of fillers in the case of a show-up), many of the suggestive procedures that research has shown to have a large impact on eyewitnesses are never recorded or documented. For example, verbal or nonverbal cues from the lineup administrator, failure to instruct the witness that the culprit might not be present, and selective reinforcement of a witness’s response to a specific lineup member are all powerful factors that can influence a witness, yet the discovery of these types of suggestion depends almost entirely on the detective or the witness reporting that they occurred. Even if the detective and the witness are properly motivated to report the presence of suggestion, they would need to have noticed the suggestion when it occurred, interpreted it as such, and remembered its occurrence at the time of questioning. But psychological research has indicated that people generally lack a strong awareness of the factors that influence their thoughts and behavior (e.g., Nisbett & Wilson, 1977) and tend to believe that their actions are largely self-directed (e.g., Wegner, 2002). And there is now abundant evidence that human attitudes and behavior are regularly influenced by factors that operate outside of conscious awareness (Greenwald & Banaji, 1995; Jacoby & Kelley, 1987), examples of which might include lineup bias and verbal or nonverbal cues from the lineup administrator. Because of the difficulties associated with uncovering the presence of suggestion, Wells and Quinlivan argued that the actual prevalence of suggestive procedures likely greatly exceeds our ability ever to prove that they occurred.

Even if the suggestion is discovered and brought to the attention of the trial judge, a “first-prong” error might still occur if the judge—despite being objectively aware of some suggestive aspect of the identification procedure—fails to recognize it as such or does not believe that the suggestion rises to an undue level. In fact, the tendency for judges to underestimate the impact of suggestive procedures has been cited as one of the primary reasons why motions to suppress suggestive identifications routinely fail (Wells, Greathouse, & Smalarz, 2012). Judges’ evaluations of whether or not a procedure was unnecessarily suggestive are tethered closely to their ideas about whether the suggestion likely influenced the witness. And to the extent that judges lack familiarity with the psychology of eyewitness identification, it is not surprising that they might fail to appreciate the power of suggestive procedures. Take, for example, the case of DNA-exoneree Thomas Doswell, who was convicted of rape and a series of other crimes on the basis of photo identifications made by the victim and a co-witness. Doswell’s photo was the only photo in the lineup that was marked with a letter “R”—an indication that he had been charged with rape. Doswell’s defense raised concerns about the suggestiveness of the lineup, but the judge ruled that the presence of the “R” did not constitute unnecessary suggestiveness:

I don’t believe that it was unduly suggestive. I am not saying it is the best practice in the world, but just to have the letter R on the plaque which also contains other numbers… there is no evidence that the victim would have had any background or any other knowledge that would give her an idea of what the R would stand for…

The judge admitted the identification evidence, Doswell was convicted, and then Doswell was later exonerated of the crime based on DNA testing. This anecdote provides just one illustration of a disconnect that exists between intuitive judicial reasoning and the findings of psychological science, which indicate that even the subtlest of cues can strongly influence people’s thoughts and behaviors, especially in situations characterized by uncertainty or ambiguity. But there are many more examples of cases like this one. In fact, judges’ failure to fully appreciate the impact of suggestion can be traced at least in part to the very fundamental human tendency to underestimate the impact of situational forces on behavior (Ross, 1977).

The tendency for judges to underestimate the power of suggestion also manifests itself in the judicial endorsement of the idea that identifications tainted by suggestive procedures can be corrected through the later application of pristine identification procedures. Even Justice Marshall, who demonstrated an impressive grasp of the psychology of eyewitness memory, suggested in his dissenting opinion in Manson that an identification rendered unreliable by suggestion can somehow be corrected in a later identification task: “when a prosecuting attorney learns that there has been a suggestive confrontation, he can easily arrange another lineup conducted under scrupulously fair conditions…” (pp. 126–127). It is likely due to this type of reasoning that multiple-procedure identifications are frequently admitted even when the initial identification is suppressed. In an effort to bring judicial reasoning more in line with scientific conceptions of memory, eyewitness scholars have promulgated the “eyewitness memory as trace evidence” analogy, first proposed by Wells in 1993. Like physical evidence, eyewitness evidence is subject to contamination during collection, storage, or testing. And once the memory of the eyewitness has been contaminated (through the use of suggestive procedures, for example), it cannot be restored to its earlier, uncontaminated state simply through the application of pristine testing procedures.

The DNA-exoneration files are replete with examples of cases involving multiple identification procedures. Take, for instance, the case of Larry Youngblood, who was wrongfully convicted of kidnapping, sexual assault, and child molestation on the basis of multiple identifications made by the 10-year-old victim. The victim’s first in-person identification of Youngblood was during a one-man show-up procedure at a pretrial hearing in which Youngblood was handcuffed and in prison garb with police officers at his side. Youngblood’s defense moved to suppress the show-up identification along with the subsequent in-court identification on the grounds that the show-up was impermissibly suggestive and that it would taint any later identification. The trial judge did, in this case, recognize the inherent suggestiveness in the show-up procedure, and therefore suppressed it: “I feel that the procedure was improper in that it’s improper to present one person in handcuffs with corrections officers at his side and then obtain an identification of that individual.” However, the judge also opined that “[the show-up] is not tainting the in-court identification of the defendant” and ruled on those grounds to admit the in-court identification. Although this type of reasoning occurs routinely in the legal evaluation of eyewitness cases, it flies in the face of psychological principles about the fragility of memory. As is suggested by the “trace evidence” analogy of eyewitness memory, psychological scientists generally agree that memory contamination is largely irreversible and that the effects of suggestion cannot somehow be neutralized by later procedures.

Because transcripts of pretrial suppression hearings are not easily obtained and opinions resulting from these hearings are generally unpublished, it is difficult to know how often first-prong inquiries result in a ruling that the identification procedures were unnecessarily suggestive. The number likely varies from jurisdiction to jurisdiction and even from one judge to another, but our impression is that such rulings are rare. However, in the event that the “first-prong” hurdles have been cleared (i.e., the suggestion was discovered or identified and ruled upon as such), the identification evidence is then subjected to the second-prong inquiry. During this inquiry, the influence of the suggestion is weighed against the five reliability factors. The second-prong inquiry presents a new set of problems, most of which reflect fundamental issues with the reliability factors themselves, which we review in turn.

The Second Prong of Manson: The Reliability Criteria

Perhaps the most obvious problem with the five reliability factors is that none of them bears a clear or linear relationship with identification accuracy. This means that none of the factors can be unconditionally relied upon to indicate whether the witness’s identification was accurate or mistaken. Take for example the description factor. A witness who gives a very detailed and very specific description of the culprit is not necessarily more likely to make an accurate identification of the culprit than is a witness whose description did not contain a great amount of detail. One reason for this dissociation is that the mental processes that lend themselves to accurately describing a face from memory are not the same as those that lend themselves to accurately recognizing a face (Wells & Hryciw, 1984). Thus, witnesses who study a face in order to accurately describe the face are the very same witnesses who might encounter difficulties when it comes to recognizing the face. Further, to the extent that the quality of a witness’s description can predict identification accuracy, it is often attributable to the fact that faces that are easier to describe are easier to identify, and not necessarily to the propensity of the witness to make an accurate identification (Wells, 1985). In general, research has found little to no reliable relationship between an eyewitness’s description of a perpetrator following a crime event and the eyewitness’s subsequent identification accuracy (Meissner, Sporer, & Susa, 2008; Pigott & Brigham, 1985).

Another reliability factor is the amount of time that passed between the witnessed event and the identification procedure. As a general rule, the more time that has passed between the witnessed event and the identification procedure, the greater the memory loss. However, there is no clear “cut-off” point that would serve as a useful indicator of whether the witness’s memory was reliable enough to make an accurate identification. We know that memory loss is a decelerating process, with the greatest loss occurring shortly after the event and the rate of further loss slowing over time (Ebbinghaus, 1885). But in a given case, the effects of memory decay are relative and depend on a variety of other factors. If the witness had a very strong memory to begin with, he or she might be capable of making an accurate identification weeks, months, or even years after the event. In other cases, a witness with a poor memory of the culprit from the outset might not be able to make an accurate identification even within minutes of the event. Eyewitness memory researchers have therefore not relied on the time factor per se as a useful indicator of the reliability of a witness’s identification. What they have tended to focus on instead are the types of events that can occur during the passage of time that have an impact on memory. A long line of work on the corrupting influences of post-event information has clearly demonstrated that eyewitnesses’ recollections are subject to contamination from a variety of post-event sources (Loftus, 1979). Reports of co-witnesses (Wright, Self, & Justice, 2000), the framing of questions by police (Loftus & Palmer, 1974), and false information implanted in leading questions (Loftus, 1974) have all been shown to contaminate and distort witnesses’ memories of events. Thus, by focusing narrowly on the absolute amount of time that has passed, without inquiring about post-event influences that might be operating, Manson bypasses the real issue of memory contamination and consequently fails to provide a useful gauge of reliability.

The remaining three Manson reliability factors—certainty, attention, and view—are characterized by the same problem as time and description in that none of them is unequivocally related to identification accuracy. The precarious relation between each of these factors and identification accuracy is described in detail by Wells and Quinlivan (2009). Our focus for the current purpose, however, is on the fact that these reliability factors are flawed in such a way that makes them not only useless but outright misleading indicators of accuracy in cases involving suggestion.

The first thing that should be noted about the factors of certainty, view, and attention is that they are self-reported by the witness. In other words, the witness’s standing on these factors is assessed simply by asking the witness questions such as: “How certain were you when you made the identification?”, “How long was the person’s face in view?”, and “How much attention were you paying to the face of the person?” Relying on witnesses’ self-reports is problematic for a number of reasons. First, self-reports all too often fail to map onto objective reality. For example, people often report that they were affected by variables that did not in fact affect them and that they were unaffected by variables that did in fact affect them (Nisbett & Wilson, 1977). Further, eyewitness researchers have documented a systematic bias in eyewitnesses’ self-reports whereby eyewitnesses tend to overestimate the duration of witnessed events, a tendency that is exacerbated by stress (Loftus, Schooler, Boone, & Kline, 1987). This tendency to overestimate exposure to witnessed events also suggests that witnesses’ reports of how much attention they paid and how good a view they had might likewise be distorted. Moreover, self-reports have long been known to be susceptible to bias arising from demand characteristics and social desirability concerns (Nederhof, 1985), such as when a witness inflates his or her reported level of certainty, quality of view, and degree of attention in an effort to be perceived as a good or helpful witness and to ensure the progression of the investigation. Self-reports are far from an objective reflection of reality; instead, they reflect the witness’s subjective, and sometimes inaccurate, experience. Thus, relying on self-reports to assess eyewitness reliability is a questionable practice, particularly given the persuasive nature of identification evidence at trial, which we return to in a later section on jurors’ perceptions of eyewitness testimony.

Obviously, in a Manson hearing witnesses are aware that their reliability is being assessed. From the standpoint of psychological science, it seems peculiar to rely on witnesses’ own self-reports to, in effect, assess their own reliability. But aside from the basic problems associated with self-reports, there is a much more serious problem with using witnesses’ recollections of their certainty, view, and attention to assess reliability: these reliability factors are not independent of the suggestion itself. Instead, the witness’s standing on each of these criteria can become inflated by the very presence of suggestive procedures. Wells and Quinlivan (2009) termed this phenomenon the “suggestiveness augmentation effect,” and they cited it as a principal reason why identifications obtained using even the most egregiously suggestive procedures are almost never suppressed.

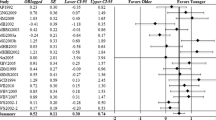

In one of the first studies demonstrating the suggestiveness augmentation effect, Wells and Bradfield (1998) examined the impact of suggestive post-identification feedback on a variety of testimony-relevant judgments, including witnesses’ self-reports of their certainty, view, and attention. In this study, mock-eyewitnesses viewed a video of a man planting a bomb in an air shaft and then attempted to identify the man from a photo lineup. Unbeknownst to the witnesses, the actual culprit was not in the lineup; hence, all the witnesses’ identifications were mistaken. Following their mistaken identifications, some witnesses were given confirming feedback by the lineup administrator: “Good, you identified the suspect in the case,” whereas other witnesses were told nothing. The witnesses were then asked questions about their certainty, view, attention, etc. The dramatic results of this study and many studies that have since followed it (see Steblay, Wells, & Douglass, 2014) indicated that witnesses who received the suggestive confirming feedback from the lineup administrator were much more likely to recall having been very certain, having had a good view, and having paid very close attention than were witnesses who were told nothing about their identifications.

Had these mistaken eyewitnesses been subjected to a Manson test, those who received the suggestive feedback would have scored higher on the reliability criteria than those who were told nothing. But this is not because their identifications were actually more reliable; after all, the feedback was not delivered until after the witnesses had viewed the video and made their (mistaken) identifications. Rather, the ostensibly greater “reliability” among witnesses who received post-identification feedback is attributable solely to the effects of the suggestion. In other words, suggestion itself increases the likelihood that a witness’s identification will be judged as reliable. Whereas the Manson Court intended for the reliability factors to provide a measure of reliability despite the suggestion, these factors are better conceived of as a product of the suggestive procedure itself; the witness’s standing on these factors cannot be separated from the effects of the suggestion.

This suggestiveness augmentation effect has been found to occur under other conditions of procedural suggestiveness. Biased lineup instructions that fail to inform the witness that the culprit might not be present produce identifications made with greater confidence than do unbiased instructions (Steblay, 1997). And identifications made from suggestive lineups containing highly dissimilar fillers are made with more confidence than are identifications made from unbiased, fairly composed lineups (Charman, Wells, & Joy, 2011). Thus, to the extent that suggestion is present in an identification procedure, it can be reasonably assumed that the witness is going to have an enhanced standing on the second prong of the Manson test, thereby increasing the chances that the testimony will be deemed reliable and admitted. The main idea here is that these reliability factors are coming into play under precisely the conditions in which they cannot be relied upon to indicate accuracy. Instead of ensuring that only reliable identifications are admitted into evidence, Manson operates in such a way that suggestive procedures virtually guarantee that the identifications will be deemed admissible.

Issues in the Application of the Manson Factors

Aside from the fundamental flaws in Manson’s two-pronged architecture, a number of other problems have been identified in the way trial courts apply Manson. The Manson ruling did not delineate the conditions under which a court could determine that a procedure created a “very substantial likelihood” of misidentification. Thus, there is a degree of arbitrariness in how the reliability factors are applied in a given case. Many legal scholars have observed that the vagueness of Manson permits judges to fall back on a “nevertheless” mentality, overlooking weaknesses on one or more of the reliability factors so long as the witness has a high standing on some other criterion. In general, it appears that the ultimate criterion is the witness’s level of certainty (see Footnote 4; Smalarz & Wells, 2013; Wells, Greathouse, & Smalarz, 2011; Wells & Quinlivan, 2009). The legal archives and DNA-exoneration case files contain many rulings in which identification testimony was admitted on the basis of the witness’s level of certainty, despite other obvious signs of unreliability. Wells and Quinlivan cite cases in which witnesses’ poor standing on the time, attention, and view factors was disregarded because the witnesses were certain (e.g., Neil v. Biggers, 1972; State v. Ledbetter, 2005). Hence, it seems that a witness can fall short on all of the other reliability factors, so long as he or she is certain. As described above, research on the distorting effects of suggestion on witnesses’ certainty shows how serious a problem this judicial overreliance on the certainty factor creates.

Eyewitness Testimony Is Persuasive to Jurors

Manson’s inability to help judges identify and weed out unreliable identifications at the pretrial level would be less of a concern if jurors themselves were capable of appropriately weighing the effects of suggestion in order to distinguish between accurate and inaccurate identification testimony. Indeed, the Manson Court placed a great deal of trust in juries to do so:

We are content to rely upon the good sense and judgment of American juries, for evidence with some element of untrustworthiness is customary grist for the jury mill. Juries are not so susceptible that they cannot measure intelligently the weight of identification testimony that has some questionable feature (p. 116).

Justice Marshall, however, warned in his dissenting opinion of juries’ general willingness to “credit inaccurate eyewitness testimony” (p. 126). Indeed, the large proportion of wrongful convictions resulting from mistaken identification provides at least some evidence that juries are persuaded by inaccurate eyewitnesses. But is this the exception rather than the rule?

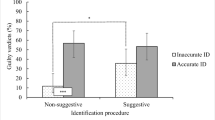

Eyewitness research has generally supported the claim that jurors are heavily persuaded by eyewitness testimony. In a pivotal experiment demonstrating the powerful influence of eyewitness-identification evidence on jurors’ verdicts, mock-jurors read a case about a grocery store robbery that resulted in the death of the owner and his granddaughter. They then indicated whether they believed the defendant was guilty or innocent. In the absence of eyewitness testimony, only 18 % of the jurors believed that the defendant was guilty. However, when the case contained testimony from a single eyewitness who had identified the defendant, a full 72 % of jurors voted to convict (Loftus, 1974). Researchers agree that eyewitness identification is a strong form of incriminating evidence that can greatly impact juror verdicts (e.g., Boyce, Beaudry, & Lindsay, 2006). More troubling, however, are findings from a number of research studies indicating that jurors are not differentially persuaded by the testimony of accurate and inaccurate eyewitnesses. In the first experiment to demonstrate this, Wells, Lindsay, and Ferguson (1979) created videotaped cross-examinations of witnesses who had attempted to make a photo identification of the perpetrator of a mock-theft. Tapes of accurate and inaccurate eyewitnesses were then shown to a new group of mock-jurors who tried to determine whether the witness had made an accurate or a mistaken identification. Results indicated that jurors believed the witnesses approximately 80 % of the time, regardless of whether the witness had accurately identified the thief or mistakenly identified an innocent person. This study, along with many others, indicates that jurors have a difficult time discriminating between accurate and inaccurate eyewitness testimony.

There are a number of reasons why jurors are not very good at discriminating between accurate and inaccurate eyewitnesses. First, jurors are somewhat insensitive to the factors that influence eyewitness memory. For example, Lindsay, Wells, and Rumpel (1981) examined the impact of varied viewing conditions on identification accuracy and on observers’ perceptions of the reliability of eyewitnesses. Witnesses in this study observed a staged crime under poor, moderate, or strong viewing conditions. They then attempted to identify the culprit from a photo lineup, and they indicated how confident they were in their identifications. Although the viewing-condition manipulation significantly affected identification accuracy (74 %, 50 %, and 33 % accuracy in strong, moderate, and poor viewing conditions, respectively), mock-jurors’ evaluations of the eyewitnesses were not similarly affected. The jurors did not appropriately account for the impact of viewing conditions on identification accuracy, as evidenced by their general overbelief of witnesses, particularly when viewing conditions were poor (77 % belief, 66 % belief, and 62 % belief for strong, moderate, and poor viewing conditions, respectively). Rather than scaling back their belief judgments in light of information about viewing conditions, the jurors’ evaluations were overwhelmingly influenced by the certainty expressed by the witnesses. Confirming this observation are other studies showing that the certainty expressed by an eyewitness is the primary indicator of whether the witness will be believed (Wells, Ferguson, & Lindsay, 1981). In fact, in a study designed to assess jurors’ appreciation of various factors known to influence identification accuracy, jurors disregarded virtually every other indicator of eyewitness accuracy, relying almost exclusively on the certainty of the witness (Cutler, Penrod, & Dexter, 1990; Cutler, Penrod, & Stuve, 1988).
And yet, eyewitness certainty is only moderately correlated with accuracy, and in many situations, it is not related to accuracy at all (Wells, Olson, & Charman, 2002).

In addition to jurors’ overreliance on witnesses’ expressions of certainty and their insensitivity to factors that affect eyewitness memory, there is yet another problem with jurors’ assessments of eyewitnesses, which is particularly relevant to the assumptions made by the Manson Court about jurors’ fact-finding abilities in eyewitness cases. Jurors, much like judges, tend to underestimate the impact of suggestive procedures on the accuracy and reliability of eyewitnesses’ identifications. In a study examining jurors’ sensitivity to suggestive procedures, Lindsay and Wells (1980) had mock-eyewitnesses make identifications from either biased (low-similarity fillers) or unbiased (high-similarity fillers) lineups. They then showed videotaped testimony of these witnesses to a new group of mock-jurors who evaluated whether they believed the witnesses were accurate and indicated how confident they were in their judgments. Although the similarity manipulation had a dramatic impact on the mistaken identification rate (31 % in the unbiased lineup versus 70 % in the biased lineup), the mock-jurors were no less likely to believe witnesses who made an identification from a biased rather than from an unbiased lineup, nor were they any less confident in their decision to believe such witnesses. In a similar experiment, Devenport, Stinson, Cutler, and Kravitz (2002) found that although jurors were somewhat aware of the suggestive nature of failing to instruct witnesses that the culprit might not be present, this awareness did not translate into their verdicts.

In a recent experiment, Smalarz and Wells (2014) examined the extent to which suggestive post-identification feedback influenced evaluators’ abilities to discriminate between accurate and inaccurate eyewitnesses. Witnesses in this study viewed a crime video and then made accurate or mistaken identifications from a photo lineup. Some witnesses were then given confirming feedback (“Good, you identified the suspect”), and others were given no feedback. All of the witnesses were videotaped providing testimony about what they witnessed and whom they identified, and their testimony was later shown to a new group of evaluators who indicated whether they believed that the witnesses had made accurate or mistaken identifications. For witnesses who received no feedback, testimony-evaluators were approximately twice as likely to believe accurate witnesses (70 %) as they were to believe inaccurate witnesses (36 %), indicating that they were capable of distinguishing between accurate and inaccurate witnesses. However, for witnesses who received confirming feedback, evaluators were equally likely to believe accurate and inaccurate witnesses (about 63 % belief rate). This research indicated that suggestive post-identification feedback eliminated evaluators’ abilities to discriminate between accurate and inaccurate identification testimony.

To make matters even worse, it has been noted that the primary safeguard in place at trial for dealing with eyewitness evidence, namely the cross-examination of the eyewitness, is generally ineffective in helping jurors distinguish between accurate and mistaken eyewitnesses (Wells et al., 1998). The purpose of cross-examination is to uncover inconsistencies or gaps in an eyewitness’s testimony. But when the evidence in question comes from a genuinely mistaken eyewitness, the adversarial tactics typically used by the defense are ill suited to uncovering unreliability. The problem stems from the fact that mistaken eyewitnesses are not lying; they are telling the truth as they believe it. Thus, this supposed “greatest legal engine ever invented for the discovery of the truth” (Wigmore, 1970) sputters in the face of an honest but mistaken eyewitness and does little to guard against wrongful convictions based on mistaken identifications.

In light of these research findings, it becomes clear that the Manson majority was overly optimistic in its presupposition that jurors would be able to make accurate determinations about the reliability of eyewitnesses at trial. Eyewitness science has revealed that jurors are heavily persuaded by eyewitness testimony, evaluate eyewitnesses on the basis of factors that are not good indicators of accuracy (e.g., the witness’s confidence), and are generally insensitive to the effects of suggestion; that suggestion can eliminate jurors’ already modest ability to discriminate between accurate and mistaken eyewitnesses; and that cross-examination is not a cure for these problems. Thus, it is critically important that judges’ decisions about whether to admit identification evidence derive from a framework that enables the suppression of unreliable identification evidence.

Manson Fails to Administer Justice

Perhaps the most important of the three issues considered by the Manson Court involves the relative impact of the reliability approach versus the per se exclusion approach on the administration of justice. The majority expressed concern that automatic suppression dictated by the per se rule was a “Draconian sanction” (p. 113) in cases where the identification is reliable despite the suggestion. They reasoned that a per se approach lends itself to judicial error, as it would frequently lead to the suppression of evidence and consequently result in acquittals of the guilty. A reliability approach, in contrast, would enable judges to act as gatekeepers, letting in contested evidence to the extent that it has indicia of reliability. Setting aside the many issues raised in the previous section that make it difficult for judges to fulfill this gatekeeping role, the Manson Court reasoned that a reliability approach would be less likely than the per se exclusion rule to result in the guilty going free. However, this narrow focus on lost convictions stood in contrast to Justice Marshall’s concerns about the risk that mistaken identifications lead to convictions of the innocent. Justice Marshall made the important point that every time an innocent person is wrongfully convicted, the guilty party inevitably goes free: “For if the police and the public erroneously conclude, on the basis of an unnecessarily suggestive confrontation, that the right man has been caught and convicted, the real outlaw must still remain at large” (p. 127). Eyewitness scholars have articulated a similar argument regarding the relative costs of an eyewitness misidentification versus an eyewitness’s failure to identify the culprit from an identification procedure (Steblay, Dysart, & Wells, 2011). Steblay and her colleagues presented two equations to illustrate that a mistaken identification is a greater error than a failure to identify the culprit.
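Rendered schematically from the description in the paragraph that follows, the two equations compare the number of errors each outcome produces. This notation is a reconstruction for illustration and may differ from the form Steblay et al. (2011) actually used:

$$
\text{Mistaken identification} = \underbrace{\text{innocent person inculpated}}_{\text{error 1}} + \underbrace{\text{culprit escapes detection}}_{\text{error 2}}
$$

$$
\text{Failure to identify the culprit} = \underbrace{\text{culprit escapes detection}}_{\text{error 1}}
$$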

Thus, whereas misidentification of an innocent person results in two errors—an innocent person is inculpated and the culprit escapes detection—a failure to identify the culprit results in only one error—the culprit escapes detection. This same argument extends beyond the level of identification to the trial level when a judge is considering whether or not to suppress a suggestively obtained identification. The Manson test—touted by the Court as the preferred method for minimizing acquittals of the guilty—casts a wide net over the types of suggestive procedures that will be tolerated at trial, thereby putting innocent people at risk of wrongful conviction. What the majority in Manson failed to recognize, however, is that an error of wrongful conviction is always accompanied by the very error about which they were most concerned: the guilty party going free.

How frequently has Manson led to the wrongful conviction of an innocent person? Although there is no way to know the true number, to date there have been more than 220 mistakenly identified individuals whose wrongful convictions were uncovered through DNA testing, and the list continues to grow (www.innocenceproject.org). All of these individuals had the benefit of Manson when they were tried, and in each and every case, unreliable identification evidence made its way into the courtroom to be heard by juries who, on every occasion, voted to convict an innocent person. Meanwhile, the real perpetrators responsible for these crimes went on to commit additional ones. In total, unindicted perpetrators committed an additional 57 rapes, 17 murders, and 18 violent crimes while innocent people served time for their offenses (Innocence Project Research Department as of December 18, 2012).

One might view the number of DNA-exonerations as a small problem relative to the much larger number of convictions based on eyewitness evidence each year. However, these exoneration cases substantially underestimate the extent of the problem. Wells and Quinlivan (2009) point out several reasons why the true number of wrongful convictions based on mistaken identification must be dramatically higher than 220.

First, the vast majority of the known wrongful convictions were from sexual assault crimes because those are the crimes that left behind DNA-evidence that could later be tested for claims of innocence. It has been estimated, however, that fewer than 5 % of lineups conducted are for cases of sexual assault or other crimes that would leave behind DNA-evidence (Wells et al., 2011). The other 95 %—crimes such as murders, robberies, drive-by shootings, and the like—typically do not leave behind biological evidence that could trump the account of a mistaken eyewitness. Furthermore, these exonerated individuals are the few lucky ones not only because there was biological evidence left behind at the scene of the crime, but also because that evidence was properly collected and was preserved and maintained over time. Indeed, many innocence claims (even for cases of sexual assault) can never be tested because the biological evidence was not collected properly or it has been lost, has deteriorated, or has been destroyed.

One final troubling characteristic of the DNA-exoneration cases is that the mistaken eyewitnesses in those cases—most of whom were sexual assault victims—probably constitute some of the best eyewitnesses because they typically get a closer and longer look at the perpetrator than do witnesses to other crimes. Witnesses to robberies and drive-by shootings, for example, would be expected to perform far more poorly on an identification task than would victims of sexual assault. But, we will never know about these cases because of the absence of DNA-evidence. For all of these reasons, Wells and Quinlivan, along with other scholars, have argued that the DNA-exoneration cases represent only a small fraction of the people who have been convicted based on mistaken eyewitness-identification evidence.

The Pleading Effect

The foregoing analysis supports the conclusion that far more individuals have been wrongfully convicted based on eyewitness evidence than can ever be definitively proven. But this conclusion might be of minimal practical use to judges who must decide in a given case whether to admit or suppress the identification evidence. For judges, the more relevant question might be: What is the likelihood that the case I am evaluating is a case of mistaken identification? All other things being equal, a judge might estimate that the chances of encountering a mistaken eyewitness at trial reflect the rate of mistaken identification at the lineup level. Importantly, however, all else is not equal; the cases that reach pretrial hearings are only those in which the defendants have refused to confess or plead guilty. Because innocent people are less likely to plead guilty than are guilty people, the proportion of guilty versus innocent people changes from the lineup level to the trial level. Specifically, the proportion of mistaken identifications that come before a judge at trial will likely be much higher than the proportion of mistaken identifications occurring at the lineup stage. Wells, Memon, and Penrod (2006) provide an illustrative example of this phenomenon, which Charman and Wells (2006) termed the pleading effect.

Recent analyses show that well over 90 % of all criminal convictions are resolved via plea rather than trial. For purposes of their analysis, Wells et al. (2006) used a very conservative figure of 80 % (although the argument is stronger at 90 %), assuming that 80 % of guilty suspects plead guilty. Now suppose that some proportion of mistakenly identified (innocent) suspects also plead guilty, say 10 %. Then 90 % of the innocent suspects and 20 % of the guilty suspects will go to trial. How do these numbers unfold if we assume a mistaken identification rate of 4 %? Of all identified suspects, 90 % of the 4 % who are innocent (3.6 % of all suspects) and 20 % of the 96 % who are guilty (19.2 % of all suspects) will go to trial. Hence, at the trial level, about 16 % of the defendants (3.6 % out of the 22.8 % of suspects who go to trial) will be cases of mistaken identification. The main idea here is that a judge would be wrong to assume that the chances of encountering a mistaken eyewitness in his or her courtroom are too low to be of concern. In reality, what might start out as a 96 % guilty rate (in terms of being charged) falls to roughly 84 % at trial, yielding a much higher base rate of innocent people whose eyewitness-identification cases might be heard by a judge. Moreover, failed suppression motions would likely only boost the plea rate among guilty suspects, thereby further increasing the chances that a jury or a trial judge would encounter an innocent defendant (who rejected the plea) at trial.
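The pleading-effect arithmetic of Wells et al. (2006) can be reproduced with a short Python sketch. The function `innocent_share_at_trial` and its parameter names are hypothetical, introduced here only for illustration, and the inputs are the illustrative figures from the paragraph above (a 4 % mistaken identification rate, 80 % of guilty suspects pleading, 10 % of innocent suspects pleading), not empirical estimates. The sketch also assumes that every identified suspect either pleads guilty or goes to trial.

```python
def innocent_share_at_trial(
    mistaken_id_rate: float,
    guilty_plea_rate: float,
    innocent_plea_rate: float,
) -> float:
    """Proportion of trial defendants who were mistakenly identified.

    Hypothetical helper: all rates are proportions in [0, 1], and every
    identified suspect is assumed to either plead guilty or go to trial.
    """
    # Share of all identified suspects who are innocent and go to trial.
    innocent_at_trial = mistaken_id_rate * (1 - innocent_plea_rate)
    # Share of all identified suspects who are guilty and go to trial.
    guilty_at_trial = (1 - mistaken_id_rate) * (1 - guilty_plea_rate)
    # Base rate of innocence among the defendants who actually reach trial.
    return innocent_at_trial / (innocent_at_trial + guilty_at_trial)


if __name__ == "__main__":
    # Illustrative figures from the text: 0.036 / 0.228.
    print(f"{innocent_share_at_trial(0.04, 0.80, 0.10):.1%}")
    # The text notes the argument is stronger with a 90 % guilty plea rate.
    print(f"{innocent_share_at_trial(0.04, 0.90, 0.10):.1%}")
```

With the 80 % figure the sketch prints 15.8%, which rounds to the 16 % given in the text; raising the guilty plea rate to 90 % raises the share of innocent defendants at trial to about 27 %. If both groups pleaded at the same rate, the share at trial would simply equal the 4 % lineup-level error rate, which is why the asymmetry in plea rates drives the effect.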

Given that the Manson framework does not fulfill the gatekeeping function for which it was intended, it is no surprise that Manson has been similarly inept at serving the administration of justice. The Manson admissibility test results in the routine admission of flawed identification evidence, even when it was obtained using egregiously suggestive procedures. In light of what we know about the persuasiveness of eyewitness testimony to juries, it is hardly surprising that scores of innocent people have been convicted on the basis of such evidence.

Manson Acts as an Incentive, Not a Deterrent, for Police Use of Suggestion

The third factor considered by the Court in Manson was the extent to which a reliability approach would serve as a deterrent for police use of suggestive procedures. Although neither the majority nor the dissent addressed the issue of deterrence at length, it was generally acknowledged by both sides that a per se approach that requires the automatic suppression of identifications obtained through suggestion would be the stronger deterrent of the two. Nevertheless, the majority argued that the reliability approach would have an influence on police behavior: “The police will guard against unnecessarily suggestive procedures under the totality rule, as well as the per se one, for fear that their actions will lead to the exclusion of identifications as unreliable” (p. 112).

In order for the Court’s deterrence argument to hold true, the presence of suggestive procedures would need to increase the chances that identifications would be excluded under Manson, thereby sending a message to police that suggestive procedures should be avoided. The research reviewed here shows, however, that several of the reliability factors considered under Manson’s second prong (witness certainty, attention, and view) are inflated by suggestion. As a result, witnesses exposed to suggestive procedures appear even more reliable to the court. Under Manson, then, there is almost no chance that suggestively obtained identifications will be excluded, and police have no disincentive to obtaining identifications through suggestive means.

Not only does Manson fail to act as a deterrent, but scholars have suggested that Manson, as it stands now, actually creates an incentive for police use of suggestive procedures (Wells & Quinlivan, 2009). Police arguably have the intuitive sense that witnesses appear more reliable when they express a high degree of certainty and report optimal viewing conditions. If identifications are rarely excluded at Manson hearings, then why wouldn’t police implement suggestive procedures that ensure that a witness appears as certain and credible as possible? We are not suggesting that police are implementing suggestive identification procedures with malicious intentions. Rather, in accordance with basic human tendencies, police are likely responding to prior Manson rulings that rewarded the use of suggestive procedures by admitting the identification evidence. For the most part, police genuinely believe that the suspects they are pursuing are guilty, and in the interest of justice, they want to ensure convictions. If police are rewarded with admitted identification evidence, even when suggestive procedures are used, then it makes sense that they would continue to employ those procedures that increase the chances of identifications and the later perceived reliability of witnesses. As it stands now, we see no reason why police would be motivated to cease employing suggestive identification procedures, and in fact, under Manson they may even be incentivized to continue using suggestive procedures.

What Is the Solution?

The idea that there is a simple solution to what is in fact a very complex problem is, of course, mythical. But to the extent that there is an ideal solution, it would be this: Jettison suggestive procedures from the eyewitness-identification process and ensure that courts and juries evaluating identification evidence have appropriate, scientifically supported tools to help them critically evaluate and weigh that evidence.

If suggestive procedures did not occur in the first place, we would not need all of this hand-wringing over how to evaluate eyewitness-identification evidence obtained from suggestive procedures. In fact, eliminating (or at least dramatically reducing) suggestiveness by developing scientific protocols for obtaining eyewitness-identification evidence is at the heart of the system variable approach in eyewitness science (Wells, 1978), and concrete protocols that are not difficult to implement have been developed (e.g., Wells et al., 1998). An increasing number of states (e.g., New Jersey, North Carolina, Connecticut, Texas) now require compliance with some or all of these protocols, national law enforcement organizations (e.g., Department of Justice; International Association of Chiefs of Police; Police Executive Research Forum) endorse some or all of them, and law enforcement agencies across the country are voluntarily conforming their practices to the scientific research. Part of any solution, then, must be that police-orchestrated identification procedures be conducted in a manner that scientific research has shown to minimize the risk of misidentification. This requirement, however, will not be sufficient if courts refuse to identify and sanction suggestive procedures (or breaches of protocol) when they occur. Likewise, any solution must involve a reframing of the legal test for evaluating identification evidence that resolves the problems intrinsic to the Manson test described above. Any new legal framework for evaluating identification evidence must:

1. Eliminate the balancing test and disaggregate suggestion from reliability in favor of a totality-of-the-circumstances evaluation that allows for consideration of the full range of factors that scientific research has shown bear on the accuracy and reliability of an identification.
2. Permit robust pretrial hearings, particularly in cases where the risk of misidentification is highest, at which courts consider all relevant information from all witnesses, including eyewitnesses and experts. The initial burden of proving reliability should be borne by the proponent of the evidence (usually the state), as it is the party with the best access to relevant information (i.e., the nature of the procedure; the witness’s experience and statements).
3. Eliminate the all-or-nothing (suppression/admission) approach and provide meaningful intermediate remedies that ensure that fact finders have sufficient context and guidance to evaluate and weigh the evidence.
4. Institute remedies that have a meaningful deterrent effect on suggestive police conduct.

A legal framework that does these things, coupled with law enforcement agencies that abide by mandatory, science-based protocols for conducting non-suggestive, unbiased identification procedures, will reduce the risk of misidentification and future wrongful convictions.

In the last few years, two state supreme courts have considered whether their states’ Manson-based tests meet the goal of ensuring the reliability of identification evidence admitted at trial. Both evaluated the scientific literature and concluded that a Manson test is no longer viable in light of the research. In State v. Henderson (2011), the New Jersey Supreme Court concluded that the Manson test “does not fully meet its goals. It does not offer an adequate measure for reliability or sufficiently deter inappropriate police conduct. It also overstates the jury’s inherent ability to evaluate evidence offered by eyewitnesses who honestly believe their testimony is accurate” (p. 878). In State v. Lawson (2012), the Oregon Supreme Court concluded that the Manson test “does not accomplish its goal of ensuring that only sufficiently reliable identifications are admitted into evidence. Not only are the reliability factors … both incomplete and, at times, inconsistent with modern scientific findings, but the … inquiry itself is somewhat at odds with its own goals and with current Oregon evidence law” (p. 688).

Whereas the two courts reached the same conclusion about Manson’s failings, they offered different solutions, grounded in divergent legal theories. The Henderson Court took the more traditional approach, grounding its inquiry in a defendant’s state constitutional due process right to a fair trial, i.e., the defendant’s right not to be convicted based on unreliable evidence that is the product of state action. The Lawson Court grounded its new approach in the state code of evidence, a development that holds special promise for courts and litigators throughout the country, as it does not require courts to find (as the New Jersey Supreme Court did) that their state’s due process protections are broader than those of the federal constitution.

Under the new framework set forth in Henderson, a defendant must show “some evidence” of suggestion to obtain a pretrial hearing, at which the court can consider any variable alleged to have affected the reliability of the identification. The court then determines whether the evidence is sufficiently reliable to be admitted; if it is admitted, the court has a range of intermediate remedies that can be used to ensure that the jury properly evaluates the evidence. Chief among these tools are expansive, scientifically based jury instructions that identify and explain the factors that may have affected the reliability of an identification. The New Jersey Supreme Court allowed that these instructions might be read to the jury both before the witness testifies and at the close of evidence, and that expert testimony might be warranted in some cases. Finally, Henderson imposed additional procedural requirements on law enforcement officers conducting an identification procedure (something the New Jersey Supreme Court has unique jurisdiction to do).

In contrast, the Lawson Court began by shifting the burden to the state to demonstrate, in every case, that the identification evidence is admissible. To make this showing, the state must establish by a preponderance of the evidence that the evidence is relevant (most identification evidence will be) and that it is otherwise admissible. The Oregon Supreme Court identified several provisions of the evidence code that the state must satisfy for the evidence to be admitted. For example, the state must show that the witness had sufficient personal knowledge to support the identification (i.e., that the witness had “an adequate opportunity to observe or otherwise personally perceive the facts to which the witness will testify, and did, in fact, observe or perceive them,” p. 692); that the identification was rationally based on the witness’s perceptions; and that the identification will be helpful to the trier of fact. Once the state has made these showings, the defendant has an opportunity to demonstrate that the probative value of the evidence is substantially outweighed by the danger of unfair prejudice (such as when a suggestive procedure was used) or by other evidentiary concerns. In rejecting the false dichotomy of suppression versus admission, the Oregon Supreme Court set forth a range of intermediate remedies, including partial exclusion of the witness’s testimony, expert testimony, and jury instructions.

Although no perfect solution exists, we consider both the New Jersey approach (in Henderson) and the Oregon approach (in Lawson) to be vast improvements over Manson. Both require trial judges to be conversant with the scientific literature on eyewitness identification; both articulate alternatives to the false dichotomy of admission versus suppression; both see a role for expert testimony under the right circumstances; both subject eyewitness-identification evidence to more rigorous scrutiny; and both acknowledge the scientific evidence showing that the Manson reliability criteria should not serve as trump cards for dismissing reliability concerns when a suggestive procedure was used. Moreover, both Henderson and Lawson create disincentives for using suggestive eyewitness-identification procedures. To the extent that other states replace their versions of Manson with something closer to Henderson or Lawson, we expect more law enforcement agencies to adopt better (less suggestive) eyewitness-identification protocols and more innocent defendants to receive fairer proceedings.

Notes

1. We refer to these factors as view, attention, description, passage of time, and certainty, and collectively as the Manson factors.

2. Even in jurisdictions that still follow the per se exclusionary rule (e.g., New York, Massachusetts), the Manson factors are generally borrowed for the “independent source” analysis of a subsequent in-court identification, effectively recreating the Manson balancing test at that stage.

3. Although the Manson Court specified that these factors were not exhaustive, lower courts generally apply them as if they were (see O’Toole & Shay, 2006).

4. Although Manson instructs trial courts to consider the witness’s certainty at the time of the identification, courts frequently settle for witnesses’ expressions of certainty during testimony. The suggestiveness augmentation effect, reviewed above, illustrates the dangers inherent in courts’ failure to distinguish between certainty at identification and certainty during testimony.

References

Boyce, M., Beaudry, J. L., & Lindsay, R. C. L. (2006). Belief of eyewitness identification evidence. In R. C. L. Lindsay, D. F. Ross, J. D. Read, & M. P. Toglia (Eds.), Handbook of eyewitness psychology: Memory for people (pp. 219–254). Mahwah, NJ: Erlbaum.

Charman, S. D., & Wells, G. L. (2006). Applied lineup theory. In R. C. L. Lindsay, D. F. Ross, J. D. Read, & M. P. Toglia (Eds.), Handbook of eyewitness psychology: Memory for people (pp. 219–254). Mahwah, NJ: Erlbaum.

Charman, S. D., Wells, G. L., & Joy, S. (2011). The dud effect: Highly dissimilar fillers increase confidence in lineup identifications. Law and Human Behavior, 35, 479–500. doi:10.1007/s10979-010-9261-1.

Cutler, B. L., Penrod, S. D., & Dexter, H. R. (1990). Juror sensitivity to eyewitness identification evidence. Law and Human Behavior, 14, 185–191. doi:10.1007/BF01062972.

Cutler, B. L., Penrod, S. D., & Stuve, T. E. (1988). Juror decision-making in eyewitness identification cases. Law and Human Behavior, 12, 41–55. doi:10.1007/BF01064273.

Devenport, J. L., Stinson, V., Cutler, B. L., & Kravitz, D. A. (2002). How effective are the cross-examination and expert testimony safeguards? Jurors’ perceptions of the suggestiveness and fairness of biased lineup procedures. Journal of Applied Psychology, 87, 1042–1054. doi:10.1037//0021-9010.87.6.1042.

Ebbinghaus, H. (1885). Memory: A contribution to experimental psychology (Reprinted 1964, New York: Dover).

Greenwald, A. G., & Banaji, M. R. (1995). Implicit social cognition: Attitudes, self-esteem, and stereotypes. Psychological Review, 102, 4–27. doi:10.1037/0033-295X.102.1.4.

Jacoby, L. L., & Kelley, C. M. (1987). Unconscious influences of memory for a prior event. Personality and Social Psychology Bulletin, 13, 314–336. doi:10.1177/0146167287133003.

Lindsay, R. C. L., & Wells, G. L. (1980). What price justice? Exploring the relationship of lineup fairness and identification accuracy. Law and Human Behavior, 4, 303–314. doi:10.1007/BF01040622.

Lindsay, R. C. L., Wells, G. L., & Rumpel, C. (1981). Can people detect eyewitness identification accuracy within and across situations? Journal of Applied Psychology, 66, 79–89. doi:10.1037//0021-9010.66.1.79.

Loftus, E. F. (1974). Reconstructing memory: The incredible eyewitness. Psychology Today, 8, 116–119.

Loftus, E. F. (1979). Eyewitness testimony. Cambridge, MA: Harvard University Press.

Loftus, E. F., & Palmer, J. C. (1974). Reconstruction of automobile destruction: An example of the interaction between language and memory. Journal of Verbal Learning and Verbal Behavior, 13, 585–589. doi:10.1016/S0022-5371(74)80011-3.

Loftus, E. F., Schooler, J. W., Boone, S. M., & Kline, D. (1987). Time went by so slowly: Overestimation of event duration by males and females. Applied Cognitive Psychology, 1, 3–13. doi:10.1002/acp.2350010103.

Manson v. Brathwaite. (1977). 432 U.S. 98.

Meissner, C. A., Sporer, S. L., & Susa, K. J. (2008). A theoretical and meta-analytic review of the relationship between verbal descriptions and identification accuracy in memory for faces. European Journal of Cognitive Psychology, 20(3), 414–455. doi:10.1080/09541440701728581.

Nederhof, A. J. (1985). Methods of coping with social desirability bias: A review. European Journal of Social Psychology, 15, 263–280. doi:10.1002/ejsp.2420150303.

Neil v. Biggers. (1972). 409 U.S. 188.

Nisbett, R. E., & Wilson, T. D. (1977). Telling more than we can know: Verbal reports on mental processes. Psychological Review, 84, 231–259. doi:10.1037//0033-295X.84.3.231.

O’Toole, T. P., & Shay, G. (2006). Manson v. Brathwaite revisited: Towards a new rule of decision for due process challenges to eyewitness identification procedures. Valparaiso University Law Review, 41, 109–148.

Pigott, M. A., & Brigham, J. C. (1985). Relationship between accuracy of prior description and facial recognition. Journal of Applied Psychology, 70, 547–555. doi:10.1037//0021-9010.70.3.547.

Ross, L. (1977). The intuitive psychologist and his shortcomings: Distortions in the attribution process. In L. Berkowitz (Ed.), Advances in experimental social psychology (Vol. 10). New York: Academic Press.

Simmons v. United States. (1968). 390 U.S. 377.

Smalarz, L., & Wells, G. L. (2012). Eyewitness identification evidence: Scientific advances and the new burden on trial judges. Court Review, 48, 14–21.

Smalarz, L., & Wells, G. L. (2013). Eyewitness certainty as a system variable. In B. L. Cutler (Ed.), Reform of eyewitness identification procedures. Washington, DC: American Psychological Association Press.

Smalarz, L., & Wells, G. L. (2014). Post-identification feedback to eyewitnesses impairs evaluators’ abilities to discriminate between accurate and mistaken testimony. Law and Human Behavior, 38, 194–202. doi:10.1037/lhb0000067.

State v. Henderson. (2011). 208 N.J. 208, 218.

State v. Lawson. (2012). 352 Or. 724.

State v. Ledbetter. (2005). 275 Conn. 534.

Steblay, N. M. (1997). Social influence in eyewitness recall: A meta-analytic review of lineup instruction effects. Law and Human Behavior, 21, 283–298. doi:10.1023/A:1024890732059.

Steblay, N. K., Dysart, J. E., & Wells, G. L. (2011). Seventy-two tests of the sequential lineup superiority effect: A meta-analysis and policy discussion. Psychology, Public Policy, and Law, 17, 99–139. doi:10.1037/a0021650.

Steblay, N. M., Wells, G. L., & Douglass, A. L. (2014). The eyewitness post-identification feedback effect 15 years later: Theoretical and policy implications. Psychology, Public Policy, and Law, 20, 1–18. doi:10.1037/law0000001.

Wegner, D. M. (2002). The illusion of conscious will. Cambridge, MA: MIT Press.

Wells, G. L. (1978). Applied eyewitness testimony research: System variables and estimator variables. Journal of Personality and Social Psychology, 36, 1546–1557. doi:10.1037/0022-3514.36.12.1546.

Wells, G. L. (1985). Verbal descriptions of faces from memory: Are they diagnostic of identification accuracy? Journal of Applied Psychology, 70, 619–626. doi:10.1037/0021-9010.70.4.619.

Wells, G. L., & Bradfield, A. L. (1998). “Good, you identified the suspect”: Feedback to eyewitnesses distorts their reports of the witnessing experience. Journal of Applied Psychology, 83, 360–376. doi:10.1037//0021-9010.83.3.360.

Wells, G. L., Ferguson, T. J., & Lindsay, R. C. L. (1981). The tractability of eyewitness certainty and its implication for triers of fact. Journal of Applied Psychology, 66, 688–696. doi:10.1037/0021-9010.66.6.688.

Wells, G. L., Greathouse, S. M., & Smalarz, L. (2011). Why do motions to suppress suggestive eyewitness identifications fail? In B. L. Cutler (Ed.), Conviction of the innocent: Lessons from psychological research. Washington, DC: American Psychological Association Press.

Wells, G. L., & Hryciw, B. (1984). Memory for faces: Encoding and retrieval operations. Memory & Cognition, 12, 338–344. doi:10.3758/BF03198293.

Wells, G. L., Lindsay, R. C. L., & Ferguson, T. J. (1979). Accuracy, confidence, and juror perceptions in eyewitness identification. Journal of Applied Psychology, 64, 440–448. doi:10.1037//0021-9010.64.4.440.

Wells, G. L., Memon, A., & Penrod, S. D. (2006). Eyewitness evidence: Improving its probative value. Psychological Science in the Public Interest, 7(2), 45–75. doi:10.1111/j.1529-1006.2006.00027.x.

Wells, G. L., & Murray, D. M. (1983). What can psychology say about the Neil vs. Biggers criteria for judging eyewitness identification accuracy? Journal of Applied Psychology, 68, 347–362. doi:10.1037/0021-9010.68.3.347.

Wells, G. L., Olson, E. A., & Charman, S. D. (2002). The confidence of eyewitnesses in their identifications from lineups. Current Directions in Psychological Science, 11, 151–154. doi:10.1111/1467-8721.00189.

Wells, G. L., & Quinlivan, D. S. (2009). Suggestive eyewitness identification procedures and the Supreme Court’s reliability test in light of eyewitness science: 30 Years later. Law and Human Behavior, 33, 1–24. doi:10.1007/s10979-008-9130-3.

Wells, G. L., Small, M., Penrod, S. D., Malpass, R. S., Fulero, S. M., & Brimacombe, C. A. E. (1998). Eyewitness identification procedures: Recommendations for lineups and photospreads. Law and Human Behavior, 22, 603–647. doi:10.1023/A:1025750605807.

Wigmore, J. H. (1970). Evidence in trials at common law (Chadbourn Revision). Boston: Little, Brown, and Company.

Wright, D. B., Self, G., & Justice, C. (2000). Memory conformity: Exploring misinformation effects when presented by another person. British Journal of Psychology, 91, 189–202. doi:10.1348/000712600161781.


Copyright information

© 2016 Springer Science+Business Media, New York

About this chapter

Cite this chapter

Smalarz, L., Greathouse, S. M., Wells, G. L., & Newirth, K. A. (2016). Psychological Science on Eyewitness Identification and the U.S. Supreme Court: Reconsiderations in Light of DNA-Exonerations and the Science of Eyewitness Identification. In: Willis-Esqueda, C., Bornstein, B. (eds) The Witness Stand and Lawrence S. Wrightsman, Jr. Springer, New York, NY. https://doi.org/10.1007/978-1-4939-2077-8_3


DOI: https://doi.org/10.1007/978-1-4939-2077-8_3

Publisher Name: Springer, New York, NY

Print ISBN: 978-1-4939-2076-1

Online ISBN: 978-1-4939-2077-8

eBook Packages: Behavioral Science and Psychology (R0)