Abstract

Each part of the central nervous system communicates with the others by means of action potentials sent through parallel pathways. Despite the progressively increasing spatial and temporal variation added to the pattern of action potentials at each level of sensory processing, the integrity of information is retained with high precision. What is the mechanism that enables the precise decoding of these action potentials? This chapter is devoted to explaining the transformations of sensory input when information is encoded and decoded in the cortical circuitries. To unravel the full complexity of the problem, we discuss the following questions: Which features of the action potential patterns encode information? What is the relationship between action potentials and oscillations in the brain? What is the segmentation principle of spike processes? How is the precise spatiotemporal pattern of sensory information retained after multiple convergent synaptic transmissions? Is compression involved in the neural information transfer? If so, how is that compressed information decoded in cortical columns? What is the role of gamma oscillations in information encoding and decoding? How are time and space encoded? We illustrate these problems through the example of visual information processing. We contend that phase coding not only answers all these questions, but also provides an efficient, flexible, and biologically plausible model for neural computation. We argue that it is timely to begin thinking of the fundamentals of neural coding in terms of the integration of action potentials and oscillations, which, respectively, constitute the discrete and continuous aspects of neural computation.

Keywords

- Neural code

- Phase

- Spike

- Local field potential

- LFP

- Subthreshold membrane potential oscillation

- Gamma

- Theta

- Synchrony

- Interference

- V1

Analog and Digital Components of the Neural Code

The Importance of Neural Coding

Information processing in the nervous system has evolved under the conflicting constraints of speed, reliability, metabolic cost, and size, which ultimately shaped the structure and wiring of the brain. To maximize speed, neurons developed myelin sheaths and nodes of Ranvier. For reliability, massive parallel axon bundles formed. To minimize metabolic cost, neurons migrated into layers that provide better access to blood vessels. To minimize size, cell bodies are arranged into heavily convoluted but essentially 2D sheet-like structures such as the cerebral mantle, with long myelinated axonal connections between them. To keep the bandwidth of connections proportional to the increasing area of the cerebral mantle, messages are transferred simultaneously between these structures via parallel fiber tracts. However, our understanding of the biological principles of encoding, transferring, and decoding parallel messages is incomplete. Keeping the robustness and accuracy constraints in mind, we propose a biologically plausible theory for information encoding and decoding.

Understanding the neural code is important for a number of reasons. First, a practical reason is to be able to read signals from the brain in order to control external devices or actuators that compensate for disabling conditions, as in neural prosthetics and other types of brain-machine interfaces. Second, it would allow us to treat neurological diseases such as epilepsy and Parkinson’s disease by restoring compromised information processing in the brain. Third, the nature of the code determines how we think about biological computation and may inspire artificial intelligence. Lastly, it may reveal the limitations of our cognition and clarify our place in the universe in general.

The Problem of Conductivity and Synaptic Divergence

Sensory information in the central nervous system is transmitted through parallel pathways in the form of action potentials (APs), which are referred to as “spikes” when recorded extracellularly. The rapid sequence of visually evoked APs forms dense packets of spikes at the level of retinal ganglion cells. These packets show a threefold increase in temporal variation by the time they reach the primary visual cortex V1 (Fano factor = 0.11, 0.20, and 0.33 in the retina, LGN, and V1, respectively [1]), and the spread of arrival times exceeds 50 ms (latencies ranging from 34 to 97 ms; 66 ± 10.7 ms) [2, 3]. When trying to tease apart the sources of this variation, we must consider two factors. One is the trial-by-trial variation of spike latencies at different stages. The second is the heterogeneity of conduction velocity in axonal transmission. The trial-by-trial variation is partially explained by decreasing firing rates and decreasing refractory periods along the visual pathway from the retina to the visual cortex [1]. In contrast, the single-trial desynchronization of spikes, which increases progressively along successive stages of the visual pathway, can be explained by the strikingly heterogeneous conduction velocities of axon fibers. For instance, the conduction velocities of M and P cell axons form bimodal, almost nonoverlapping distributions, and the M pathway responds as much as 20 ms earlier in layer 4B than does the P pathway [2].
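The Fano factor quoted above is the variance of the spike count divided by its mean across repeated presentations of the same stimulus. A Poisson process gives a value of 1, so values of 0.11–0.33 indicate sub-Poisson, relatively reproducible counts at every stage.

A minimal Python sketch of the computation follows. The function name and the spike counts are hypothetical and serve only as illustrations. The sketch uses the sample variance, although some studies use the population variance instead.

```python
import statistics

def fano_factor(spike_counts):
    """Variance of spike counts across repeated trials divided by their mean.

    Uses the sample variance (n - 1 denominator); conventions differ between studies.
    """
    mean_count = statistics.mean(spike_counts)
    if mean_count == 0:
        raise ValueError("the Fano factor is undefined when the mean count is zero")
    return statistics.variance(spike_counts) / mean_count

# Hypothetical spike counts in the same response window on six repeated trials
precise_cell = [10, 10, 11, 10, 9, 10]   # highly reproducible, far below Poisson
noisier_cell = [6, 14, 9, 12, 8, 11]     # more variable, approaching Poisson

print(f"precise cell: {fano_factor(precise_cell):.2f}")
print(f"noisier cell: {fano_factor(noisier_cell):.2f}")
```

The counting window is fixed and identical across trials, because the Fano factor depends on the window length.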

The first problem is that, given the known heterogeneity of the axonal composition of these pathways, one would expect an originally synchronized volley of spikes induced at one end (for instance, by simultaneous stimulation of a set of photoreceptors) to arrive at the cortical targets in layer 4B with as much as 50 ms of latency jitter. This progressive jitter poses a major computational challenge: how can a dispersed pattern of APs retain the integrity of the code and still effectively excite target neurons in the cortex with ~10 ms precision, i.e., within the integration time window of pyramidal cells [4–6]? We propose that despite the trial-by-trial variability of spikes and the conduction velocity differences between axons, the integrity of the spike code is preserved along the visual pathway by a common oscillatory drive. This drive resolves simultaneity at the level of precision afforded by the frequency of oscillation. In addition, the same oscillatory drive may map the spatial relationship between cells onto different phases of the common oscillation, a mechanism that we explain in detail below (see section “Sensory Encoding-Decoding Circuits”).

The second problem is the divergence of synaptic connectivity in the sensory pathways. Continuing the example of the visual pathway, retinal ganglion cells in mammals establish synaptic terminals on several LGN neurons [7], and an even larger number of LGN neurons converge on single granular layer neurons in V1. The geniculo-cortical divergence is greater still than the retino-geniculate divergence: about 40 V1 neurons are connected to each LGN neuron. As a result of this divergence and reconvergence, significant spatial blurring is imposed on the sensory input. We are then faced with another serious computational problem. How is the precise spatial mapping between the retina and the visual cortex retained despite such highly divergent retino-geniculo-cortical connections, given that our photoreceptor-level visual acuity relies on it? Sections “LGN-V1 Projection” and “Reconstruction of the Information from Phase Code” address this question.

Deterministic vs. Nondeterministic Brain

Despite the deterministic nature of Hodgkin-Huxley neurons [8], current models of neural coding are dominated by the assumption that spike processes are “stochastic,” i.e., nondeterministic. This assumption is based on observations of the large variance of spike trains following repeated presentation of a “frozen-noise” stimulus [9]. In contrast, we argue that spike processes are deterministic at a >5 ms scale [10–12] if we consider the precision of the neurons’ own temporal reference, i.e., the neuronal clocks.

In order to take the neuronal clock into account, we align the spike trains to the neurons’ intrinsic oscillations instead of aligning them to stimulus onset and measuring the absolute time of spike occurrence. These oscillations can be recorded intracellularly as subthreshold membrane potential oscillations (SMOs) and extracellularly as local field potentials (LFPs). The relationship between SMO and LFP is still unresolved. The high correlation between them, however, suggests that the LFP is a sum of local SMOs combined with postsynaptic potentials [13] (see section “Correlation Between Subthreshold Membrane Potential Oscillations and Local Field Potentials”). The alignment is achieved by first converting the oscillations to phase and then replacing each spike time with the phase of the oscillation at that moment (Fig. 13.1). Spike phase combined with firing rate has demonstrated superior performance in information transmission [7–9]. However, this approach requires knowing the frequency of the internal clock that provides optimal segmentation.
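The following is a minimal Python sketch of the phase-alignment step. It assumes that the LFP (or SMO) trace has already been band-pass filtered around the frequency of interest.

The sketch builds the analytic signal with the FFT, which is the same construction used by `scipy.signal.hilbert`. It then replaces each spike's sample index with the instantaneous phase at that sample. The function names, the 10 Hz test signal, and the convention that phase 0 marks the oscillation peak are all assumptions for illustration. For raw extracellular LFP, population depolarization corresponds to negative deflections, so the sign convention must be chosen accordingly.

```python
import numpy as np

def analytic_signal(x):
    """Analytic signal via the FFT: keep DC (and Nyquist), double positive, zero negative frequencies."""
    x = np.asarray(x, dtype=float)
    n = x.size
    weights = np.zeros(n)
    weights[0] = 1.0
    if n % 2 == 0:
        weights[n // 2] = 1.0
        weights[1:n // 2] = 2.0
    else:
        weights[1:(n + 1) // 2] = 2.0
    return np.fft.ifft(np.fft.fft(x) * weights)

def spike_phases(oscillation, spike_indices):
    """Instantaneous phase (radians, within [-pi, pi]) of the oscillation at each spike sample."""
    phase = np.angle(analytic_signal(oscillation))
    return phase[np.asarray(spike_indices, dtype=int)]

fs = 1000.0                                  # sampling rate in Hz (assumed)
t = np.arange(1000) / fs                     # 1 s of samples
oscillation = np.cos(2 * np.pi * 10.0 * t)   # idealized 10 Hz oscillation, peaks every 100 ms

# Spikes at three successive peaks: different absolute times, identical phase
print(spike_phases(oscillation, [100, 200, 300]))
```

The three spikes occur 100 ms apart in absolute time but receive the same phase. This is the sense in which phase, rather than time, serves as the common reference.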

Fig. 13.1 The relativity of spike timing uncertainty. The cartoon illustrates how the trial-by-trial reproducibility of an action potential sequence in response to sensory stimulation depends on the intrinsic frequency variation of membrane potential oscillations. (a) Under a “frozen-noise” stimulus condition, when the patterns of visual motion stimuli are identical in each trial, the experimentalist observes large intertrial variance in spike timing (Δt₁ ≠ Δt₂ ≠ Δt₃) and concludes that responses of neurons are stochastic. (b) In contrast, when local field potentials (LFPs) are recorded in conjunction with single units, the correlation between population depolarization (negative phases) and spike timing is evident. Note that the spike train in trial 2 is a stretched-out version of the spike train in trial 1. (c) After the spike times are warped to the simultaneous LFP by computing the phase of each spike relative to the LFP, spikes line up across trials (ΔΦ₁ = ΔΦ₂ = ΔΦ₃). The precise alignment is evident when comparing the traditional peristimulus time histogram (PSTH, bottom a) with the peristimulus phase histogram (PSPH, bottom c), because the latter is sharper and larger in amplitude than the former. The contribution of each trial's timing variance is shown by decomposing the traditional PSTH into single-trial PSTHs (bottom b)

Without knowing the frequency of segmentation, the information carried by spike trains is underestimated and the spike process appears nondeterministic. In the rest of the chapter, we argue that the key to unlocking the neural code is to understand the subtle biophysical relationship between APs and subthreshold membrane potential oscillations (SMOs). If the oscillation field of local SMOs is organized topographically, it will control the firing probability of large populations of neurons in a spatially ordered fashion, a principle that phase coding exploits.
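The scenario in Fig. 13.1 can be reproduced with a few lines of Python. The trial frequencies below are hypothetical, and the sketch assumes that the neuron always fires at the third oscillation peak after stimulus onset (t = 3/f).

Because the oscillation runs at a slightly different frequency on each trial, the absolute spike times scatter by several milliseconds, while the spike phases coincide. Phase agreement is measured with the mean resultant length, a standard circular statistic.

```python
import cmath
import math

def phase_at(t, freq_hz):
    """Phase (radians, in [0, 2*pi)) of a spike at time t relative to cos(2*pi*f*t); 0 = peak."""
    return (2 * math.pi * freq_hz * t) % (2 * math.pi)

def phase_concentration(phases):
    """Mean resultant length: 1.0 for identical phases, close to 0 for scattered phases."""
    return abs(sum(cmath.exp(1j * p) for p in phases)) / len(phases)

# Hypothetical trials: the oscillation frequency drifts from trial to trial,
# and the neuron fires at the third peak after onset on every trial.
trial_freqs_hz = [36.0, 40.0, 44.0]
spike_times_s = [3.0 / f for f in trial_freqs_hz]

time_spread_ms = 1000 * (max(spike_times_s) - min(spike_times_s))
phases = [phase_at(t, f) for t, f in zip(spike_times_s, trial_freqs_hz)]

print(f"spike-time spread across trials: {time_spread_ms:.1f} ms")
print(f"phase concentration R = {phase_concentration(phases):.3f}")
```

A PSTH built from these spikes would be smeared over about 15 ms, whereas a phase histogram would collapse to a single bin.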

Despite numerous qualifications, single-neuron biophysics maintains that neurons generate APs when the combination of excitatory postsynaptic potentials (EPSPs) elicits a wave of depolarization that, upon reaching the axon initial segment, exceeds the neuron’s threshold. In addition to the EPSPs, the membrane potential of neurons periodically fluctuates around a mean due to the intrinsic subthreshold membrane potential oscillation (SMO). The SMO periodically brings the membrane potential closer to threshold, so the neuron is most excitable near the depolarization peak of each cycle. During this depolarized phase, neurons are more likely to fire APs than during the hyperpolarized phase.
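The phase dependence of excitability can be illustrated with a toy calculation in Python. All voltages below are assumed, illustrative values rather than measurements.

The sketch makes two simplifications: the EPSP is evaluated only at its peak, and it sums linearly with the SMO. Under these assumptions, the same EPSP crosses threshold when it coincides with the SMO depolarization peak and fails at other phases.

```python
import math

# Illustrative parameters (assumed, not measured values)
V_MEAN_MV = -65.0      # mean membrane potential
SMO_AMP_MV = 4.0       # subthreshold oscillation amplitude
THRESHOLD_MV = -55.0   # firing threshold
EPSP_MV = 7.0          # EPSP peak amplitude

def reaches_threshold(smo_phase_rad):
    """True if an EPSP peaking at the given SMO phase (0 = depolarization peak) crosses threshold.

    Simplification: the EPSP peak is added linearly to the SMO at that instant.
    """
    v = V_MEAN_MV + SMO_AMP_MV * math.cos(smo_phase_rad) + EPSP_MV
    return v >= THRESHOLD_MV

for label, phase in [("in phase", 0.0), ("quadrature", math.pi / 2), ("anti-phase", math.pi)]:
    print(f"{label:>10}: fires = {reaches_threshold(phase)}")
```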

Two Information Transmission Logics in One Brain

The most precise messages between neurons are exchanged across chemical synapses as sequences of APs. APs are thus the smallest indivisible units of the code. Without them, there is no information processing, perception, coordinated movement, memory, or consciousness. However, they are not the only form of communication between neurons. Neurons also communicate through electrical synapses, such as gap junctions [14], and through ephaptic coupling [15], which enable a local population of neurons to share subthreshold membrane oscillations. What are the functions of these two signals, and why are there two kinds? While APs encode messages in discrete, binary values, SMOs change continuously. The analogy with the difference between digital and analog signal processing is easy to see. If APs are the signals that communicate the absence or presence of a sensory or neural event, then the SMO may provide the reference for reading that message. How? Before answering this question, we outline a model of a complete processing cycle from sensory input to cortical stimulus representation. First, we examine the correlation between APs and SMO. Then we review spike-field correlations, because usually only LFPs are accessible. Finally, we review the evidence for correlation between SMO and LFP.

Correlation Between the Phase of Membrane Oscillations and Action Potential

The mechanism by which neurons coordinate APs with SMO is inherent to the process of AP genesis. We distinguish four mechanisms.

(1) Neurons are endowed with resonant properties: the membrane potential fluctuates without any synaptic drive to the cell [16–18], even in isolated cells [19]. The spontaneous fluctuation is limited to a narrow frequency range, which varies between structures but is shared among neurons of the same structure. This is the characteristic resonant frequency of the neuron, the frequency at which a frequency-modulated input current drives the cell membrane with the smallest attenuation [20]. Since the SMO frequency is the characteristic resonant frequency of the neuron, AP-evoked synaptic input arriving in phase with the depolarization phase of the SMO is more likely to reach threshold and reproduce an AP than out-of-phase input. Consequently, if rhythmic APs impinge on the cell at its characteristic resonant frequency and synchronize with the SMO, each AP will be relayed reliably. In other words, the SMO selectively relays the synaptic input that arrives in phase with the membrane depolarization cycles.

(2) The SMO can be entrained by concerted synaptic input.

(3) Synchronization of SMOs can occur as a result of release from inhibition, for example, when spontaneous excitation is followed by mutual inhibition via inhibitory interneurons. Such a mechanism has been proposed for mitral cells interacting through granule cells in the olfactory bulb [21–23].

(4) The SMOs of nearby neurons influence each other through GABAergic synapses, glia, and electrical synapses, leading to fast SMO synchronization with a small phase gradient induced by propagating waves.

The concept that these four adaptive resonances, in concert, embed spikes in the fabric of membrane oscillations is critical to the argument we make for phase coding.
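The notion of a frequency with "the smallest attenuation" can be made concrete with a Python sketch of a generic driven, damped second-order resonator. This resonator is a stand-in assumption, not a conductance-based neuron model: real membrane resonance arises from specific combinations of passive properties and voltage-gated currents.

The 8 Hz natural frequency (f0) and the quality factor Q = 3 are illustrative values. The sketch scans input frequencies and reports the one with the largest gain. For finite Q, this peak sits slightly below f0.

```python
import math

def resonator_gain(f_hz, f0_hz=8.0, q=3.0):
    """Amplitude response of a generic driven, damped second-order resonator (gain 1 at DC)."""
    w, w0 = 2 * math.pi * f_hz, 2 * math.pi * f0_hz
    return w0 ** 2 / math.sqrt((w0 ** 2 - w ** 2) ** 2 + (w0 * w / q) ** 2)

freqs = [0.5 + 0.1 * i for i in range(400)]     # 0.5 Hz to about 40 Hz
best_f = max(freqs, key=resonator_gain)

print(f"least-attenuated input frequency: {best_f:.1f} Hz")
for f in (2.0, best_f, 30.0):
    print(f"gain at {f:4.1f} Hz: {resonator_gain(f):.2f}")
```

Inputs arriving near the resonant frequency are amplified relative to slower or faster inputs. This is the filtering property that mechanism (1) relies on.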

Correlation Between the Phase of Local Field Potentials and Action Potentials

The correlation between action potentials and LFP has been widely reported in a number of species under a variety of experimental conditions, mostly in awake animals [24–30]. Interestingly, what drew the most attention to this correlation was not the phase locking between spikes and LFP per se. It was rather the systematic drift in phase (the “phase precession” of spikes) relative to the theta oscillations recorded from hippocampal pyramidal cells and interneurons. Since those early reports [31, 32], phase precession has been confirmed in a number of other areas, including the entorhinal cortex [33] and the primate visual cortex [34]. The other line of evidence derives from studies of spike-field coherence. The coherence between local spiking activity and distant LFP can reveal the direction of causal influence between cortical areas [35].

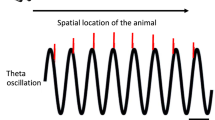

Phase precession was first described between single-unit activity and LFP recorded from the rat hippocampus. It appeared as a systematic shift in spike probability relative to the phases of successive theta oscillations while the animal was traversing a place field. Because place cells, by definition, are most likely to fire in restricted spatial locations, it is difficult to determine whether phase precession is independent of or dependent on spatial location. Before we can determine the function of phase precession, we need to understand the relationship between APs and intracellular SMO under the same behavioral conditions. Using an elaborate virtual reality paradigm in head-fixed mice, Harvey et al. obtained simultaneous intracellular and extracellular recordings from the same hippocampal pyramidal cells and determined the relationship among spikes, LFP, and SMO [36]. It turned out that APs were always aligned to the depolarized phase of the subthreshold theta cycle. Thus, no phase precession was observed between spikes and the SMO of the same neurons. At the same time, spike phase precession was maintained relative to the extracellular theta-band LFP. This discrepancy between intracellular (SMO) and extracellular (LFP) theta phase precession can only be explained by assuming that the phase of extracellular theta drifts relative to the intracellular theta SMO. How is that possible?

We propose that a systematic drift of the LFP phase relative to the SMO phase can reliably reproduce the observed phase precession of APs relative to the LFP, while also explaining the lack of precession relative to the SMO. Such systematic drift is consistent with the propagating theta oscillations in the hippocampus [37, 38].

To explain spike phase precession, consider an array of neurons. Each neuron intrinsically generates voltage oscillations at frequency θᵢ, synchronized across the array with a constant phase delay between neighbors. Also, consider a point in extracellular space where an electrode picks up the voltage fluctuation θₑ emanating from the surrounding neurons as LFP. Further assume that traveling theta waves sweep across the array of neurons and synchronize with the local θᵢ. The extracellular electrode integrates voltage from a spherical volume of ~150 μm diameter that includes thousands of neurons, some of which start their SMO earlier while others start it later. Thus, for the theta field to synchronize with both early and late SMOs, the θₑ frequency must be slightly lower than θᵢ, causing a discrepancy between intracellular and extracellular theta oscillations (Fig. 13.2).

When the spike phases of a given neuron are referenced to the extracellular θₑ, they show precession. Meanwhile, the same spikes referenced to the neuron’s own intracellular SMO (θᵢ) are precisely aligned at the depolarization peaks, nearest to threshold. The direction of precession, whether progressive phase advancement or phase lagging, depends on the direction of θₑ propagation relative to the direction of the θᵢ phase gradient. If the traveling theta wave moves in the direction of the SMO phase gradient, the spike process produces phase advancement; otherwise, it produces phase lagging (Fig. 13.2).
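The core of this argument, a frequency mismatch between the intracellular and extracellular theta, can be checked with a short Python sketch. This is a deliberate simplification in the spirit of the classic dual-oscillator interference account: it models only the frequency mismatch, not the spatial propagation of the traveling wave.

The frequencies (θᵢ = 8.5 Hz, θₑ = 8.0 Hz or 9.0 Hz) are assumed values. The neuron fires one spike at each peak of its own SMO.

```python
import math

def wrap(angle):
    """Wrap an angle to the interval (-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))

def spike_phases(f_intra_hz, f_extra_hz, n_cycles):
    """Phases of spikes fired at every intracellular SMO peak, referenced to the SMO and to the LFP."""
    spike_times = [k / f_intra_hz for k in range(n_cycles)]            # one spike per SMO peak
    smo = [wrap(2 * math.pi * f_intra_hz * t) for t in spike_times]    # own SMO as reference
    lfp = [wrap(2 * math.pi * f_extra_hz * t) for t in spike_times]    # extracellular theta as reference
    return smo, lfp

def per_cycle_shift(phases):
    """Wrapped phase change between consecutive spikes."""
    return [wrap(b - a) for a, b in zip(phases, phases[1:])]

smo, lfp = spike_phases(f_intra_hz=8.5, f_extra_hz=8.0, n_cycles=6)
print("shift vs SMO (deg):", [round(math.degrees(s), 1) for s in per_cycle_shift(smo)])
print("shift vs LFP (deg):", [round(math.degrees(s), 1) for s in per_cycle_shift(lfp)])
```

Relative to the SMO, the spikes show no shift. Relative to the slower LFP, each spike arrives about 21° earlier than the previous one (precession). Making θₑ faster than θᵢ reverses the sign, giving phase lagging.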

Fig. 13.2 A model of theta phase precession in the hippocampus. (a) The scheme depicts the field of theta oscillations in the hippocampus. The gray-shaded area represents the field of synchronized SMO waves with a phase gradient across a population of neurons. Each sine wave is a neuron. Three neurons on the left (n1, n2, n3) represent place cells, which fire at the peak of the SMO when the animal is crossing the place field. Consistent with electrophysiology, the spikes are aligned to the SMO peak of the same cell. At the same time, a traveling theta wave (cyan shading) sweeps through the cell population while maintaining synchrony with the cellular SMOs. The wave front (dashed line) propagates more slowly than the SMO. The extracellular electrode detects the LFP (black sine wave) as the integral of the voltage fluctuations deriving from local SMOs as well as from the traveling theta wave. Because the theta wave is traveling, θₑ < θᵢ. White crosses signify peaks of the traveling theta wave. When spikes (black ticks) of the red, blue, and green neurons are projected onto the LFP, the spike phases display a progressive advancement across successive oscillations (red, blue, and green dots). (b) The phase precession from (a) as typically represented on the time/position and phase axes. The black bar signifies the phase precession

Other examples of coordination between LFP and spike processes have been observed in primates. In a reaching task, spike-LFP correlations between the dorsal premotor area of the frontal cortex and the reach region of the parietal cortex increased when the monkey was making free choices instead of instructed choices [39]. In another experiment, monkeys were trained to hold two items in working memory. During memory encoding, the phases of spikes associated with those items in the prefrontal cortex were segregated relative to a 32 Hz oscillation according to the order of the memory items [40]. More generally, LFP has been found to correlate with spike synchrony [41].

Correlation Between Subthreshold Membrane Potential Oscillations and Local Field Potentials

Given the correlation of APs with both SMO phases (see section “Correlation Between the Phase of Membrane Oscillations and Action Potential”) and LFP phases (see section “Correlation Between the Phase of Local Field Potentials and Action Potentials”), a tight coupling between SMO and LFP would not be surprising. Such a relationship is, however, not logically necessary. Relatively few studies have addressed this question directly. Among those, significant correlation between local subthreshold oscillations and local field potentials was found in low (1–25 Hz) [13] and high (>25 Hz) frequency bands, with the expected polarity reversal between intra- and extracellular potentials [13, 21]. We know even less about the mechanism of transfer between SMO and LFP.

For SMOs to produce such a correlation, they must be synchronized across neurons, because the LFP integrates over the membrane oscillations of thousands of neurons. However, the mechanism of such synchronization is poorly understood. For instance, SMOs may synchronize at low frequencies through the glia surrounding neurons. Neuroglia are known to buffer and redistribute local extracellular K⁺ and are thus able to transfer slow-wave oscillations and spike-wave seizures [42]. Astrocytic calcium transients have also been shown to display oscillatory activity [43].
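Why synchrony matters for the LFP can be shown with a short Python sketch. For oscillations sharing one frequency, the amplitude of their sum equals |Σⱼ exp(iφⱼ)|. This value grows roughly as N when the phases are aligned but only as about √N when they are random.

The neuron count, the small phase gradient, and the random seed are assumptions for illustration. Unit amplitudes stand in for individual SMOs.

```python
import cmath
import math
import random

def summed_amplitude(phases):
    """Amplitude of the sum of unit-amplitude oscillations sharing one frequency.

    sum_j cos(w t + phi_j) = Re(exp(i w t) * sum_j exp(i phi_j)), so the amplitude is |sum_j exp(i phi_j)|.
    """
    return abs(sum(cmath.exp(1j * p) for p in phases))

n_neurons = 1000
rng = random.Random(0)  # seeded for reproducibility

synchronized = [0.001 * j for j in range(n_neurons)]                    # small phase gradient, ~1 rad in total
desynchronized = [rng.uniform(0, 2 * math.pi) for _ in range(n_neurons)]

print(f"synchronized SMOs:   summed amplitude ~ {summed_amplitude(synchronized):.0f}")
print(f"desynchronized SMOs: summed amplitude ~ {summed_amplitude(desynchronized):.0f}")
```

With 1000 neurons, the synchronized population produces a field roughly 30 times larger than the desynchronized one, even though every individual oscillation has the same amplitude.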

Subthreshold oscillations synchronize with LFP in the motor cortex [44], and they do so even across the central sulcus between somatosensory and motor cortical areas at 20–40 Hz but with a 180° phase reversal between superficial and deep layers [45]. Abundant correlation between LFP and SMO was observed in the barrel cortex of behaving mice during quiet wakefulness but not during whisking [46].

As mentioned before, neurons are also capable of synchronizing their SMOs through ephaptic coupling, which has proven effective at synchronizing APs in slices while synaptic transmission was pharmacologically blocked [15]. In contrast, the old model, based on current source-density analysis [47, 48], postulated an entirely synaptic origin of the LFP. Although the mechanism that links SMO to LFP is still hypothetical, the old model needs revision in light of new data showing a substantial contribution of synchronized SMOs to the LFP. Moreover, extraneuronal components such as glial cells, including astrocytes, may play a role in transferring and integrating SMOs across the extracellular space to create the LFP.

After discussing the critical components of phase coding and the coherence between SMO and APs, we now turn our attention to a model of phase coding in action.

Sensory Encoding-Decoding Circuits

Sensory organs are topographically organized. The retina, inner ear, olfactory neuroepithelium, and cutaneous receptors are all 2D surfaces. Except for the olfactory pathway, these 2D surfaces project through massive parallel pathways to a secondary interface, the thalamus. The rest of the chapter focuses on the mammalian visual pathway, but the same principles can be generalized to other sensory systems. Visual coding has three critical stages: retina, LGN, and V1. From V1 to higher cortical areas, the same principles apply. We first describe retinal encoding, then information compression in the LGN, and finally decoding in V1 by information reconstruction.

Retina-LGN Projection

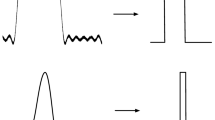

Sensory encoding in the mammalian visual system takes place in the retina, in the retinal ganglion cells. These cells are topographically organized: each retinal ganglion cell in the fovea represents a 0.5–2° receptive field of the eye-centered visual field. Light from this receptive field elicits a spike train in the retinal ganglion cells that is modulated by two processes: (1) the extrinsic luminance contrast and (2) the intrinsic retinal waves, i.e., local membrane oscillations, which we described earlier as SMO. In this spike train, information is multiplexed in short (~300 ms) packets, consistent with the notion of “retinal functional units” (RFUs) [49]. We further propose that the two components, the light-induced and the intrinsic oscillations, contribute to the code differently.

The light-induced component generates a burst of APs, which encodes luminance contrast by two distinct mechanisms (Fig. 13.3a). The first is frequency coding, where the firing rate is proportional to the stimulation of the receptive-field center (light increments for on-center cells, decrements for off-center cells) after subtraction of the stimulation of the surround [50, 51]. The second is latency coding, where the latency of the elicited burst is inversely proportional to the luminance [37, 39].

The retino-geniculate pathway also keeps the two types of code separated in two different channels. One channel operates at low frequency bands (<30 Hz) and transfers the average firing rate. The other channel operates at gamma frequency bands (40–80 Hz) and converts the spike latency to oscillation cycles [53]. As we proposed previously, the position of the retinal input, encoded by the phase, is also included in this channel [54].
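The two light-induced codes can be written as two small functions. This is a minimal Python sketch in which the linear gain, the rate ceiling, the latency constant `k`, and the arbitrary luminance units are all hypothetical choices.

The rate code rectifies the center-minus-surround drive, with the sign of the drive set by the cell type. The latency code is a strict inverse proportionality to luminance.

```python
def on_center_rate(center, surround, gain=50.0, max_rate=300.0):
    """Rate code (spikes/s) for an on-center cell: rectified center-minus-surround drive."""
    return min(max_rate, max(0.0, gain * (center - surround)))

def off_center_rate(center, surround, gain=50.0, max_rate=300.0):
    """Rate code for an off-center cell: driven when the center is darker than the surround."""
    return min(max_rate, max(0.0, gain * (surround - center)))

def burst_latency_ms(luminance, k=100.0):
    """Latency code: burst latency inversely proportional to luminance (k is an assumed constant)."""
    if luminance <= 0:
        raise ValueError("luminance must be positive")
    return k / luminance

print(on_center_rate(center=3.0, surround=1.0), off_center_rate(center=3.0, surround=1.0))
print(burst_latency_ms(2.0), burst_latency_ms(4.0))   # doubling luminance halves the latency
```

Keeping the two functions separate mirrors the two channels described above. The rate feeds the low-frequency channel, and the latency feeds the gamma channel.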

Fig. 13.3 Gamma alignment in the retina. (a) Visual encoding starts with a luminance-induced burst in the retinal ganglion cells, where the burst latency is inversely proportional to the luminance. Certain spikes of the burst are sampled through local gamma oscillations (SMO) that are topographically organized (gamma alignment). (b) Major information processing steps in the retina-LGN-cortex circuitry. The first two steps are the conversion of bursts to gamma-aligned spike patterns. Because of the constant phase gradient between adjacent ganglion cells, spike phases represent relative spatial position, while the gamma cycle represents stimulus intensity. The third step is code compression in the LGN through dispersion. The fourth step is reconstruction based on interference. The inset under latency code in (a) represents the electroretinogram (ERG) recorded from the back of the eye. The ERG captures the retinal functional unit (RFU, ~300 ms), a briefly evoked gamma-frequency oscillation that also carries the spike message packet

How is fine retinal position encoded? Because RFUs are evoked by visual transients such as saccadic eye movements and microsaccades, they trigger short traveling waves of gamma oscillations (wave packets) [49]. Intriguingly, retinal ganglion cells are able to resolve fine textural details at a resolution finer than about one-fifth of a receptor diameter, a capacity known as “hyperacuity” [55, 56]. On theoretical grounds, the eye would not be able to capture these high-resolution details unless it first converted them to the temporal domain. Whenever the eye (or the object relative to the eye) moves, the motion of the luminance transient generates a temporal offset between the SMOs of adjacent ganglion cells, captured as a phase difference between their oscillations. Hence, what really constrains spatial resolution is not the spatial receptor density but the temporal resolution of those cells. Fortunately, the smallest spatial and temporal windows have been determined to be 2–3 arcmin and 20–30 ms, respectively [55], and the temporal window closely matches the duration of a gamma cycle. It is reasonable to assume that one gamma cycle is needed to detect the smallest discernible spatial difference (such as a contour discontinuity or texture) with a moving eye, based on the phase difference between the activities of two neurons. In summary, we propose that an RFU is able to scan in a patch of high-acuity texture from the fovea during a microsaccade or post-saccadic fixation. It then converts this patch to a code that combines (a) firing rate, (b) firing latency, and (c) spike phase relative to the intrinsic gamma SMO. These packets are sent to the LGN (Fig. 13.3b).
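The conversion from a spatial offset to a phase difference is simple arithmetic. A moving luminance edge needs Δt = Δx / v to travel from one receptive field to the next. Against a shared gamma oscillation of frequency f_γ, that delay appears as a phase lag of 360° · f_γ · Δt.

The Python sketch below assumes a constant eye speed of about 50°/s (roughly 1° in ~20 ms, as for a microsaccade) and 50 Hz gamma. Both values are illustrative.

```python
def gamma_phase_offset_deg(spatial_offset_arcmin, eye_speed_deg_per_s, gamma_hz):
    """Phase lag (degrees of gamma) between two cells whose receptive fields are offset along the motion path.

    dt = dx / v is the travel time of the luminance edge; the phase lag is 360 * f_gamma * dt.
    Assumes constant speed across the offset.
    """
    dx_deg = spatial_offset_arcmin / 60.0
    dt_s = dx_deg / eye_speed_deg_per_s
    return 360.0 * gamma_hz * dt_s

for offset_arcmin in (0.5, 1.0, 2.0):
    lag = gamma_phase_offset_deg(offset_arcmin, eye_speed_deg_per_s=50.0, gamma_hz=50.0)
    print(f"{offset_arcmin} arcmin -> {lag:.1f} deg of gamma phase")
```

The phase produced by a given spatial offset scales inversely with eye speed. A tenfold slower sweep yields a tenfold larger phase difference for the same offset.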

At this point we are ready to define the construction of retino-geniculate AP packets. Luminance contrast induces latency-encoded AP bursts in a group of retinal ganglion cells. Next, single spikes from the bursts are aligned with the stimulus-induced gamma oscillations. This stage is called “gamma alignment” (Fig. 13.3, [41, 44]). When these responses are measured in absolute time, the AP latency is quantized in gamma cycles, and the textural information (e.g., hyperacuity) is encoded by the gamma phase. In summary, the sequence of retino-geniculate APs compresses three types of information: the luminance-proportional firing rate (<30 Hz), the gamma-aligned latency at 40–80 Hz resolution, and an AP phase code with ~1 ms precision, which reflects textural details at supra-photoreceptor resolution.
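Under one possible reading of gamma alignment, the spike is emitted in the first gamma cycle that starts at or after the burst latency. Within that cycle, it falls at the phase assigned to the cell's retinal position. This rule is a hypothetical sketch, not a mechanism specified in the text.

With this rule, an absolute spike time splits cleanly into a cycle index (quantized latency) and a phase (position tag). The Python sketch assumes 50 Hz gamma and a position phase in [0, 2π).

```python
import math

def gamma_align(latency_ms, position_phase_rad, gamma_hz=50.0):
    """Hypothetical encoding rule: spike in the first gamma cycle starting at or after the burst
    latency, placed at the phase that tags the cell's retinal position (phase in [0, 2*pi))."""
    period_ms = 1000.0 / gamma_hz
    cycle = math.ceil(latency_ms / period_ms)
    return cycle * period_ms + (position_phase_rad / (2 * math.pi)) * period_ms

def gamma_decode(spike_time_ms, gamma_hz=50.0):
    """Split an absolute spike time into (gamma cycle index, phase within that cycle)."""
    period_ms = 1000.0 / gamma_hz
    cycle = math.floor(spike_time_ms / period_ms)
    phase = 2 * math.pi * (spike_time_ms - cycle * period_ms) / period_ms
    return cycle, phase

dim = gamma_align(latency_ms=47.0, position_phase_rad=math.pi / 2)     # dim stimulus, long latency
bright = gamma_align(latency_ms=25.0, position_phase_rad=math.pi / 2)  # brighter stimulus, same position
print(dim, gamma_decode(dim))
print(bright, gamma_decode(bright))
```

The brighter stimulus lands one gamma cycle earlier, while both spikes carry the same phase because they come from the same retinal position.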

Gamma Alignment

While the frequency-encoded quality directly affects the likelihood that an LGN target neuron will relay the message to V1, the gamma-aligned code is a complex temporal code (see section “Deciphering the Phase Code”) that has to be decoded. The gamma SMO transfers only those AP components of the burst pattern that coincide with the depolarization phases of gamma. By thinning the bursts so that only APs in phase with the depolarization peaks of gamma SMOs are processed, gamma SMOs attach a spatial phase tag to the temporal code (Fig. 13.3a). This phase tag can be decoded as long as the retina and LGN share the same gamma clock, and transient gamma coupling between the retina and LGN is supported by a number of observations [26, 49, 53].
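The thinning of a burst by the gamma SMO can be sketched as a phase window. In this Python sketch, a spike passes only if it falls within ±45° of the receiving cell's gamma depolarization peak.

The burst times, the 40 Hz gamma frequency, and the window width are hypothetical. The parameter `preferred_phase_rad` stands in for the phase of the cell's own gamma SMO, which the sketch assumes is known.

```python
import math

def wrap(angle):
    """Wrap an angle to the interval (-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))

def gamma_gate(spike_times_ms, gamma_hz, preferred_phase_rad=0.0, half_window_rad=math.pi / 4):
    """Keep only spikes within +/- half_window of the cell's gamma depolarization peak."""
    period_ms = 1000.0 / gamma_hz
    kept = []
    for t in spike_times_ms:
        phase = 2 * math.pi * (t % period_ms) / period_ms
        if abs(wrap(phase - preferred_phase_rad)) <= half_window_rad:
            kept.append(t)
    return kept

burst = [0.0, 4.0, 8.0, 12.0, 24.0, 26.0]   # hypothetical retinal burst (ms)
print(gamma_gate(burst, gamma_hz=40.0))                                # SMO peaks at phase 0
print(gamma_gate(burst, gamma_hz=40.0, preferred_phase_rad=math.pi))   # SMO peaks half a cycle later
```

The same burst yields different surviving spikes depending on the phase of the receiving SMO. This is how a topographically organized gamma field could attach a position-dependent tag.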

Topography of Retinal Gamma

What is the biological function of gamma alignment? The answer derives from the spatial topography of gamma oscillations in the retina (Fig. 13.4). Gamma oscillations are topographically organized in the retina because every eye or head movement induces a whole-field visual transient, or because objects moving in space with inertia generate local transients. These transients activate the 2D matrix of photoreceptors simultaneously. The photoreceptors converge on the retinal ganglion cells and generate a packet of gamma oscillations in each ganglion cell. These packets organize themselves into a coherent wave sweeping through the 2D matrix of ganglion cells. Due to the constant phase lag between adjacent photoreceptors, the induced gamma has a phase gradient. Depending on the type of motion, this phase gradient has different geometries.
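For the simplest geometry, a planar wave produced by translational motion, the phase gradient and its use as a position code can be sketched in Python. Other motions (rotation, expansion) would produce other gradient geometries that this sketch does not model.

The per-cell lag of one tenth of a gamma cycle and the grid size are assumptions. Position can be read back from phase only while the total lag across the patch stays below one full cycle.

```python
import math

def planar_gamma_phases(nx, ny, dphi_x, dphi_y):
    """Gamma phase (radians) of each ganglion cell on an nx-by-ny grid for a planar wave
    with a constant phase lag per cell along x and along y."""
    return {
        (ix, iy): (ix * dphi_x + iy * dphi_y) % (2 * math.pi)
        for ix in range(nx)
        for iy in range(ny)
    }

def index_from_phase(phase, dphi):
    """Recover a cell's position along the wave direction from its phase
    (valid only while the total lag across the patch is below one gamma cycle)."""
    return round(phase / dphi)

dphi = 2 * math.pi / 10   # assumed lag: one tenth of a gamma cycle per cell
phases = planar_gamma_phases(nx=8, ny=3, dphi_x=dphi, dphi_y=0.0)
print([index_from_phase(phases[(ix, 0)], dphi) for ix in range(8)])
```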

Fig. 13.4 Mapping of retinal topography on V1. (a) When a motion stimulus is projected onto the back of the eye, the stimulus components activate a set of photoreceptors along a line in the retina. These components elicit successive spikes in neurons n1, n2, and n3. Because the spikes are aligned to the gamma SMO, their latency is nk × γ + n × ϕ, where n is the number of neurons, k is a velocity constant, and ϕ is the phase gradient (here 3γ + 2ϕ). (b) The sequential activation in the retina generates a similar pattern of activity and topography when reconstructed in the cortical columns of V1. What was radial in the retina is longitudinal in V1. The top panel shows the cortical projection of the motion trace, and the lower panel shows its neuronal reconstruction from phase-coded sensory input. An array of cortical neurons receives three spikes, which are projected onto all neurons in V1. However, the only neurons responding are the ones that share the exact phase of the input APs. The reconstruction is based on the same wave function as described above (nk × γ + n × ϕ)
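The selection rule in panel (b) can be sketched in Python as phase matching. Every cortical neuron receives all input spikes, but only those whose preferred gamma phase matches an input phase respond.

The column size, the evenly spaced preferred phases, and the tolerance are hypothetical choices. The sketch illustrates the matching step only and is not a model of cortical dynamics.

```python
import math

def wrap(angle):
    """Wrap an angle to the interval (-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))

def responding_neurons(input_phases, preferred_phases, tolerance_rad):
    """Indices of cortical neurons whose preferred gamma phase matches any input spike phase."""
    return [
        idx
        for idx, pref in enumerate(preferred_phases)
        if any(abs(wrap(p - pref)) <= tolerance_rad for p in input_phases)
    ]

# Hypothetical cortical array: 12 neurons whose preferred phases tile one gamma cycle
preferred = [2 * math.pi * j / 12 for j in range(12)]
# Three input spikes carrying the phases of retinal neurons n1, n2, n3
inputs = [2 * math.pi * j / 12 for j in (2, 3, 4)]
print(responding_neurons(inputs, preferred, tolerance_rad=math.pi / 24))
```

The three inputs are broadcast to all twelve neurons, yet only three adjacent neurons respond. The spatial order of the retinal inputs is therefore recovered from phase alone.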

The Origin of Retinal Gamma

The origin of gamma oscillations in the retina is still unresolved. Retinal waves were proposed on a theoretical basis [57] and later discovered in the developing retina [58], but their role in wiring the retino-geniculate projections is controversial [59]. Gamma oscillations remain prevalent in the retina after birth [26, 49, 52]. We propose that these oscillations are generated intrinsically by the retinal ganglion cells but triggered extrinsically by saccades or microsaccades, which, in turn, modulate the luminance field. Oscillations of the same frequency appear in the LGN, phase-coupled with the retina [26, 49, 52].

The retina-LGN gamma coupling can be accomplished in two ways. One is the direct projection from the retinal ganglion cells to the LGN, through the 40–80 Hz gamma-band spike channel [52]. The other is indirect, through eye movements. The retina does not receive feedback from the LGN; however, eye movements are under cortical and subcortical control (the frontal eye fields and superior colliculus, respectively), through which waves of activity are generated. The wave-triggering eye movements in question are microsaccades, with amplitudes of about 1° and durations of about 20 ms. Microsaccades, discovered by Robert Darwin (Charles Darwin’s father) in 1786 [59], displace the image on the retina by several dozen to several hundred photoreceptor widths. Their duration closely matches the period of gamma oscillations. During one microsaccade, the retina scans 2–120 arcmin of the visual field, and the lower bound of this range is the same as the minimal displacement for hyperacuity. Because a microsaccade causes a translational motion of all textures and edges, this transient generates gamma oscillations wrapped into an RFU as described above (see section “Retina-LGN Projection”). The contrast transients evoke a gamma SMO that travels across the retina at the speed of the microsaccade. The induced gamma SMO then frequency-couples with LGN neurons by pacing the LGN’s own gamma SMO.
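As a quick arithmetic check on the timing claim, the period of the 40–80 Hz gamma band mentioned above brackets the ~20 ms microsaccade duration. A single microsaccade therefore spans roughly one gamma cycle:

```latex
T_\gamma = \frac{1}{f_\gamma}:\qquad
T_\gamma(40\ \mathrm{Hz}) = 25\ \mathrm{ms},\quad
T_\gamma(50\ \mathrm{Hz}) = 20\ \mathrm{ms},\quad
T_\gamma(80\ \mathrm{Hz}) = 12.5\ \mathrm{ms}.
```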

Since luminance contrast is latency encoded, a larger contrast generates an earlier burst of APs, relative to the onset of the gamma wave triggered by the microsaccade, than a smaller contrast does. The burst duration/frequency is proportional to the intensity of light. Adjacent retinal ganglion cells engage the microsaccade-triggered gamma oscillation with a slight latency difference as contrasts deriving from the same object traverse them sequentially. Thus, microsaccade-triggered gamma oscillations traveling over a small distance in the retina have an intrinsic phase difference.

For the LGN to faithfully communicate the gamma-aligned information to cortical targets, it needs to preserve the AP-gamma phase relationship, because object-specific information is encoded relative to the gamma oscillations. Therefore, the retinal gamma oscillations, with their correct phases, have to be communicated to the LGN. This is established by retina-LGN gamma coherence: strong retina-LGN coherence in the 60–120 Hz range was found in multiunit data [61]. Information encoded in gamma-aligned spikes is then compressed before being transmitted to cortical targets, which is the subject of the next section (see section “LGN-V1 Projection”).

LGN-V1 Projection

When the gamma-aligned code arrives at the LGN, it is transferred through the relay cells to V1 (Fig. 13.5). The retinal input acts through monosynaptic excitatory connections, which contribute 5% of the total relay cell input [62]; the remaining 95% derives from cortico-LGN feedback connections and interneuronal input. There are at least three classes of LGN neurons in terms of the temporal characteristics of their responses to retinal input: one with sustained responses, another with transient responses, and a third, heterogeneous class [63]. Relay neuron responses are highly reproducible and temporally precise [1]. The same study also reported that the Fano factor of burst responses remained sub-Poisson when only the first spike of each burst was considered. This precision is critical because most of the cell-to-cell and trial-to-trial spike-time variance observed in V1 recordings derives from the latency encoding of the original burst responses in the retina rather than from the uncertainty of LGN responses. We therefore attribute the LGN response latency differences to the gamma alignment of the retino-geniculate signal.
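The Fano factor mentioned above is the variance of spike counts across trials divided by their mean. A Poisson process gives 1, and values below 1 are called sub-Poisson. The sketch below computes it on raw counts and again after keeping only the first spike of each burst. The trials are hypothetical toy data, not data from [1], and the 4 ms burst criterion and helper names are assumptions.

```python
from statistics import mean, pvariance

def burst_onsets(spike_times_ms, max_isi_ms=4.0):
    """Keep only the first spike of each burst. A spike opens a new burst when it
    follows the previous spike by more than max_isi_ms (an assumed criterion)."""
    onsets, prev = [], None
    for t in sorted(spike_times_ms):
        if prev is None or t - prev > max_isi_ms:
            onsets.append(t)
        prev = t
    return onsets

def fano_factor(counts):
    """Variance / mean of spike counts across trials (1 for a Poisson process)."""
    m = mean(counts)
    if m == 0:
        raise ValueError("Fano factor is undefined for a zero mean count")
    return pvariance(counts) / m

# Hypothetical trials: two bursts per trial, but bursts of variable size.
trials = [
    [0, 1.5, 3, 40, 41.5],
    [0.2, 40.1],
    [0.1, 1.6, 3.1, 4.6, 40.3, 41.8, 43.3],
    [0.3, 40.2, 41.7],
    [0, 1.5, 3, 4.5, 40, 41.5, 43, 44.5],
]
all_counts = [len(tr) for tr in trials]
first_counts = [len(burst_onsets(tr)) for tr in trials]
print(fano_factor(all_counts), fano_factor(first_counts))
```

In this toy example, raw counts vary with burst size and give a Fano factor near 1. The first-spike counts are perfectly stable and give 0.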

Information transfer through the retino-geniculo-cortical pathway. (a) The gamma-aligned action potential (AP) pattern from retinal ganglion cells is transferred to the LGN through the optic tract. (b) In the LGN, the AP patterns from different fibers are dispersed through divergent synaptic connections, which merges the AP patterns, potentially confusing the origin of APs and blurring them spatially. Nevertheless, the spatial information is retained by the precise phase of the APs. (c) As a result of merging APs from different neurons, a compressed code is broadcast to the visual cortex (V1), where neurons decode the spatial information from the phase.

The feedback projection that dominates the relay cell input is critical. This input usually targets distal dendrites and, as such, has a modulatory rather than direct influence on the responses of LGN neurons. We assume that the main contribution of this feedback is to synchronize relay cell activity with V1 gamma. Since the evoked retinal gamma imposes gamma-band coherence onto the LGN neurons through the retino-geniculate input, the corticothalamic feedback closes the loop between the retina and V1. We hypothesize that the entire trisynaptic feedforward retino-geniculo-cortical pathway intermittently engages in a coherent gamma phase lock, and that visual information is most efficiently transmitted during these phase locks. Because gamma is topographically organized over the retina, and the retina is topographically mapped on both the LGN and V1, the topography of the retinal gamma phase gradient must be preserved across both retinotopic maps. Note that this overarching gamma coherence does not mean zero-phase-lag synchrony among the retina, LGN, and V1. Conduction imposes a slight delay between the gamma cycles at successive processing levels, but this is inconsequential because all neuronal computations are performed relative to the local gamma.
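Constant-lag coupling of this kind is commonly quantified by the phase-locking value (PLV), the magnitude of the mean phase-difference vector. The sketch below applies that standard definition to synthetic phase series. It is not the analysis used in the cited studies, and the 50 Hz frequency, 0.6 rad lag, and sampling grid are arbitrary assumptions. A constant nonzero lag still yields PLV = 1, which is the sense in which coherence does not require zero-lag synchrony.

```python
import cmath
import math
import random

def phase_locking_value(phases_a, phases_b):
    """PLV = |mean(exp(i * (phi_a - phi_b)))|; 1 means a perfectly constant phase lag."""
    if len(phases_a) != len(phases_b) or not phases_a:
        raise ValueError("need two non-empty phase series of equal length")
    total = sum(cmath.exp(1j * (a - b)) for a, b in zip(phases_a, phases_b))
    return abs(total) / len(phases_a)

f_hz, lag_rad = 50.0, 0.6                      # assumed gamma frequency and inter-area lag
times_ms = [0.5 * k for k in range(400)]       # 200 ms sampled every 0.5 ms
retina = [2 * math.pi * f_hz * t / 1000.0 for t in times_ms]
lgn_locked = [p - lag_rad for p in retina]     # constant, nonzero lag
rng = random.Random(0)
lgn_jittered = [p - lag_rad + rng.uniform(-math.pi, math.pi) for p in retina]

print(round(phase_locking_value(retina, lgn_locked), 6))    # 1.0
print(round(phase_locking_value(retina, lgn_jittered), 3))  # close to 0
```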

Most importantly, the projection neurons in the LGN display synaptic dispersion through the collateral divergence of axons impinging on the relay cells (Fig. 13.5b, c). This divergence is critical because it distributes the gamma-aligned input over a group of relay cells, so the topographically organized spike packets become spatially blurred. The input of a given thalamic relay cell $R_{x,y}$ represents the output of the retinal ganglion cell $G_{x,y}$ mixed with the outputs of neighboring cells $G_{x \pm n,\, y \pm n}$, where x and y are the retinal coordinates and n is the number of neighboring retinal ganglion cells whose collaterals terminate on $R_{x,y}$. Does this convergence have a detrimental effect on the code conveyed to V1? That depends on whether the topography of retinal projections can be recovered in V1. Given that the numbers of input and output axons are approximately equal, any dispersion of axonal projections across neurons comes with convergence of axons onto the same neurons; hence, dispersion and combination of APs across neurons happen at the same time. Combining the AP packets derived from different ganglion cells intersperses the individual APs across neighbors, merging them like a zipper, so that the same packets are transferred through neighboring axons. This compresses the code and also greatly improves the reliability of transfer between the LGN and the cortex. Compression is achieved by collapsing messages sent in parallel independent channels into fewer independent channels; the total number of channels may remain the same, but they communicate redundant information. Reducing the number of independent channels improves reliability because the compressed code is cloned in multiple axons.
Error correction then becomes possible between noisy fibers at the terminals: because all axons convey the same temporal pattern of APs, if one axon transfers an incomplete AP sequence, the other axons can compensate postsynaptically through overlapping terminals. Another biological advantage is that the same information can be routed to multiple cortical areas, so different areas can compete for and parse the same code according to different aspects. This advantage may not be significant in the sensory pathway, because the divergence between the LGN and its cortical targets is limited, but it is significant for corticocortical transfer. Our next question is how the original spatial specificity of the retinotopic code is recovered; without such recovery, neural encoding and code compression would be of no use.
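The zipper-like merging, cloning across axons, and terminal-level error correction described above can be sketched with plain lists of spike times. This is a toy illustration under simplifying assumptions. Spike times are treated as exact, and a lost spike is restored by taking the union over overlapping terminals, which would not reject spurious extra spikes. All function names are hypothetical.

```python
def merge_trains(trains):
    """Zipper-merge spike trains from neighboring ganglion cells into one sorted train."""
    return sorted(set(t for train in trains for t in train))

def broadcast(merged, n_axons):
    """Clone the compressed train onto n output axons (redundant copies)."""
    return [list(merged) for _ in range(n_axons)]

def recover_at_terminal(axon_trains):
    """Toy error correction: the union over overlapping terminals restores a spike lost
    on one axon if any other axon carried it (spurious extra spikes are not rejected)."""
    return merge_trains(axon_trains)

ganglia = [[1.0, 21.0], [3.5], [6.0, 26.0]]    # hypothetical gamma-aligned spike times (ms)
merged = merge_trains(ganglia)                  # [1.0, 3.5, 6.0, 21.0, 26.0]
axons = broadcast(merged, 3)
axons[0].remove(6.0)                            # one axon drops a spike
print(recover_at_terminal(axons) == merged)     # True
```

A majority vote across axons would be a more robust alternative to the union if spurious spikes were also a concern.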

Reconstruction of the Information from Phase Code

V1 receives simultaneous spike trains through a massively parallel fiber bundle, the optic radiation, which emanates bilaterally from the small LGN nuclei like the horn of a phonograph. The fibers are retinotopically organized, which makes the projection onto the granule cells of V1 retinotopic as well: every retinal position $G_{x,y}$ corresponds to a V1 location $\mathrm{V1}_{x,y}$. However, since the retinal output is dispersed by divergent transmission through the LGN, the x,y positions in the LGN-V1 pathway are no longer fiber specific (Fig. 13.3b). The question is: how can the position code be recovered from the compressed, multiplexed information?

The circuitries of the cerebral cortex are organized into columns, small cylindrical volumes with an intricate internal wiring scheme [64]. Pyramidal neurons in the column generate SMOs in the 20–60 Hz (gamma/beta) frequency range. In our model, each column is an independent gamma generator that induces gamma oscillations spreading from the center to the periphery of the column. We further assume that different columns may couple by phase locking their gamma oscillations while remaining independent of other columns. Phase coupling does not imply phase synchrony: when phase coupling is established between two columns, their gamma oscillations display a constant phase lag. If electrodes are strategically placed over a small area of the cerebral cortex, these phase-locked and slightly asynchronous oscillations can be measured as wave propagation. These waves are generated by local SMOs displaying a phase gradient. The role of these SMO waves is to periodically depolarize a group of pyramidal cells in the column in radial order, from the center to the periphery. Thus, neurons undergoing phasic depolarization are more sensitive to input that arrives in phase during that interval; equivalently, neurons that receive input in phase with their SMO are more likely to generate APs than neurons for which the same input is out of phase with the local SMO. How exactly does the reconstruction work?
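The radial depolarization sweep within a column can be sketched as an SMO phase that depends on distance from the column center. The sketch assumes a 40 Hz SMO (within the 20–60 Hz range above), the 0.1 mm/ms propagation speed cited in section “Topography”, and a ±π/8 window around the depolarization peak. The function names are hypothetical.

```python
import math

def smo_phase(r_mm, t_ms, freq_hz=40.0, speed_mm_per_ms=0.1):
    """Phase (radians in [0, 2*pi)) of a column's gamma SMO at radius r_mm and time t_ms,
    for a wave spreading from the column center at speed_mm_per_ms."""
    delay_ms = r_mm / speed_mm_per_ms
    return (2 * math.pi * freq_hz * (t_ms - delay_ms) / 1000.0) % (2 * math.pi)

def depolarized(r_mm, t_ms, tol=math.pi / 8, **kw):
    """True if the neuron at radius r_mm is within tol of its SMO peak (phase 0)."""
    ph = smo_phase(r_mm, t_ms, **kw)
    return min(ph, 2 * math.pi - ph) <= tol

# The depolarization peak leaves the center at t = 0 and reaches 0.5 mm at t = 5 ms.
for t in (0.0, 5.0):
    print(t, [depolarized(r, t) for r in (0.0, 0.25, 0.5)])
```

Only neurons near the moving peak are primed to respond, so an input arriving at a given moment can fire only the ring of neurons whose SMO is in phase with it.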

Key Concepts of Information Reconstruction

Interference Principle

For the reconstruction of sensory information from spike phases to work, the cortical field of gamma oscillations must be phase locked with the gamma oscillations of the retina. This phase locking is provided by the retino-thalamo-cortical loop described above. Given the temporal coherence and spatial mapping between the gamma oscillation field in the retina and that in V1, the compressed code from the LGN meets a gamma field in V1 layer 4B. The AP sequence arrives at the granule cells as synchronous volleys through parallel axons, but only a small fraction of EPSPs coincide with the depolarized state of the granule cells' SMO and can propagate further to layer 2/3 pyramidal cells within the same column. EPSPs that do not coincide with the depolarization phase of the SMO fail to initiate APs, and these signals are not transmitted further within that column. As a consequence of the topological one-to-one mapping between the sensory neuromatrix and the cortical neuromatrix, these coincidences between SMO and EPSP occur in cells that are in topological register (Fig. 13.4). For instance, concentric circular waves in the retina induce linear traveling waves in V1, because the fovea projects to the posterior pole of the calcarine fissure while the periphery projects to the anterior boundary of V1. Linear waves in the retina induce waves in V1 either along the anterior-posterior axis, where the retinal periphery maps to anterior V1, or along the dorsal-ventral axis, where the upper visual hemifield projects to ventral V1 (Fig. 13.4). Despite the geometrical distortion, the topological mapping is conserved.

Deciphering the Phase Code

All sensory information can be described by four variables: the intensity I (here defined as luminance), the coordinates [r, θ] of the sensory receptor relative to other receptors within the sensory organ, and the activation time t. In the visual modality, $I_{r,\theta,t}$ represents the luminance I at radial coordinate r and angular position θ of the retinal ganglion cell at time t. (For the sake of simplicity, we neglect channels of other sensory qualities, such as the color channels, but they can easily be included.) These coordinates must project onto specific cortical columns of V1 at retinotopic coordinates $V_{x,y}$ without a one-to-one axonal projection. The problem the nervous system must solve is how to accomplish this one-to-one mapping without a prewired direct axonal projection from the retina to V1 (Fig. 13.4).

As discussed earlier, retinal ganglion cells convert luminance to latency, and these latencies map onto the nth gamma cycle, where n is inversely proportional to $I_{r,\theta}$. Then, by synaptic convergence, the parallel latency-encoded spike trains are merged into fewer channels than the number of retinal photoreceptors without changing their temporal relationship. These channels transfer the retinal signal to V1 through the LGN. In the compressed spike train, an AP's timing is controlled by two parameters: the integer number of gamma cycles (γ), encoding luminance, and the gamma phase (φ), encoding the r,θ polar coordinates. Here, the phase φ derives from the phase difference between γ oscillations in the center and the periphery of the retina ($\varphi = \gamma_{\mathrm{central}} - \gamma_{r,\theta}$) as a result of gamma oscillations spreading in the retina. The latency of the AP evoked by the transient $I_{r,\theta}$ on the retina and arriving in the LGN is $t_{AP} = t_0 + \Delta t_c + n \times \gamma + \varphi$. Here $t_0$ is the time of the stimulus, $\Delta t_c$ is a constant conduction delay, n is the latency in gamma cycles that $I_{r,\theta}$ induces, and φ is a cortical position-dependent phase difference within a γ cycle, with $\varphi < \lambda_\gamma$, where $\lambda_\gamma$ is the length of a gamma cycle. Thus, the code conveyed by each spike is quantized by the γ cycles and the φ phase. If we assume that $\Delta t_c$ is constant within a group of axons, the relative spike latency at a given neuron is, by construction, simply $n \times \gamma + \varphi$. This relative time is transferred in packets between the retina and the LGN, containing the complete topographical information about the intensity of light at every position on the retina. In these packets, spike phases label the position of retinal input, while relative spike latency encodes luminance. However, for this phase information to be readable by neurons at the decoding site, these neurons need access to the γ reference, either the original or a copy of it. The simplest means would be to transfer γ along with the packets.
However, an oscillation, being an analog quantity, cannot be transmitted by axons, since axons are restricted to the binary (digital) transmission of APs. Rather, since gamma is ubiquitous and intrinsically generated in multiple sensory and cortical structures, it only needs to be synchronized across these areas. Evidence for this cross-areal synchronization is described in sections “Retina-LGN Projection,” “Gamma Alignment,” “Topography of Retinal Gamma,” “The Origin of Retinal Gamma,” and “LGN-V1 Projection.”
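The latency equation can be exercised directly. The sketch encodes a luminance and a within-cycle position offset into a spike time, then recovers both from that time given the shared gamma reference. The text does not specify these parameters, so the following are assumptions: a 20 ms gamma cycle, a 3 ms conduction delay, a cap of 8 cycles, and n = ⌈1/I⌉ as one possible inverse mapping from luminance to cycle count. The function names are hypothetical, and the code uses the cycle length in milliseconds where the text writes γ and $\lambda_\gamma$.

```python
import math

GAMMA_MS = 20.0        # assumed gamma cycle length (50 Hz)
N_MAX = 8              # assumed ceiling on latency, in gamma cycles

def encode(luminance, phi_ms, t0_ms=0.0, conduction_ms=3.0):
    """Spike time t_AP = t0 + dt_c + n * gamma + phi.

    n (whole gamma cycles) falls as luminance rises (here n = ceil(1 / I), capped),
    so brighter input fires earlier; phi_ms (0 <= phi < gamma) tags position.
    """
    if luminance <= 0:
        raise ValueError("luminance must be positive")
    if not 0.0 <= phi_ms < GAMMA_MS:
        raise ValueError("phi must lie within one gamma cycle")
    n = min(N_MAX, math.ceil(1.0 / luminance))
    return t0_ms + conduction_ms + n * GAMMA_MS + phi_ms

def decode(t_ap_ms, t0_ms=0.0, conduction_ms=3.0):
    """Recover (n, phi) from a spike time, given the shared gamma reference."""
    rel = t_ap_ms - t0_ms - conduction_ms
    n = int(rel // GAMMA_MS)
    return n, rel - n * GAMMA_MS

t = encode(0.5, 7.5)
print(t, decode(t))    # 50.5 (2, 7.5)
```

Decoding works only because the decoder knows where gamma cycles begin. Without that reference, a spike time alone cannot be split into its cycle count and its phase.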

The cross-areal gamma coupling intermittently brings local gamma oscillations into coherence. Coherence is achieved when the SMOs of synaptically connected neurons in the retina, LGN, and V1 display successive gamma cycles with the same frequency and a constant delay. This is equivalent to stating that the phase gradient between cells A and B in the retina equals the phase gradient between the corresponding cells A′ and B′ in the LGN or V1. This requirement is fulfilled by definition, because corresponding cells are defined not by topographic register but by the phase gradient. Thus, topography is defined by the topology of cross-areal phase coherence, which nevertheless yields a relatively consistent one-to-one relationship.

When gamma coherence is established between the LGN and V1 and the compressed spike message is transferred, all V1 neurons within the LGN projection field receive exactly the same AP sequence simultaneously, as a pattern of synchronized volleys (Fig. 13.6). As the volley of APs reaches the layer 4B granule cells, it is relayed to layer 2/3 pyramidal cells and generates excitatory postsynaptic potentials (EPSPs) at every synapse on which it terminates. Meanwhile, the established LGN-V1 gamma coherence periodically depolarizes the layer 2/3 cells. Together, the spike input and the depolarization waves set the condition under which selected layer 2/3 pyramidal cells can fire: only those pyramidal cells that receive granule cell input while their membrane is depolarized by the peak phase of the gamma SMO fire APs. Other pyramidal cells do not transfer the input at that time, but they may fire later, when coincidences between their local SMO depolarization and the granule cell input occur. Because the topographic distribution of the gamma phase gradient over V1 reflects the topography of the retina, the coincidence of EPSP and SMO is established in specific pyramidal cells, exactly those that are in topographic register with the retina (Figs. 13.4 and 13.6). These coincidences reconstruct the original sensory input from the compressed code, similar to holography, where the 3D shape of an object is “drawn” in space as an interference pattern between the phase-modulated laser beam and the reference laser beam. Instead of lasers, however, the nervous system uses its own ubiquitous coherent oscillation, gamma, to reconstruct information encoded in phase. Because the phase coincidence between the gamma SMO and the sensory input typically happens not in a single neuron but in many neurons simultaneously, the reconstruction operates on a field.
This notion is captured by the interference principle described in section “Interference Principle” [53, 56].
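The field-level coincidence readout can be sketched as follows. Every model neuron receives the whole volley, but each fires only for spikes whose within-cycle timing matches its own SMO peak offset, that is, its position in the phase gradient. The 20 ms cycle, the 1 ms tolerance, and the example offsets are assumptions, and `decode_field` is a hypothetical name.

```python
GAMMA_MS = 20.0   # assumed gamma cycle length

def decode_field(volley_ms, peak_offsets_ms, tol_ms=1.0):
    """Each neuron k sees every spike of the shared volley but fires only when a spike
    lands within tol_ms of one of its SMO peaks, which occur at
    peak_offsets_ms[k] + m * GAMMA_MS. Returns {neuron index: [firing times]}."""
    fired = {}
    for k, offset in enumerate(peak_offsets_ms):
        for t in volley_ms:
            d = (t - offset) % GAMMA_MS          # time since this neuron's last peak
            d = min(d, GAMMA_MS - d)             # circular distance to the nearest peak
            if d <= tol_ms:
                fired.setdefault(k, []).append(t)
    return fired

# Four neurons along a row with a 5 ms phase gradient; one shared volley of three spikes.
print(decode_field([5.0, 30.0, 55.0], [0.0, 5.0, 10.0, 15.0]))
# {1: [5.0], 2: [30.0], 3: [55.0]}
```

The same spike train, delivered identically to every neuron, activates neurons 1, 2, and 3 in turn. The spatial sequence is recovered from phase alone.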

Information reconstruction in the visual cortex. (a) The cartoon illustrates the parallel information flow from the retina through the LGN to V1. The reconstructed AP pattern maps onto the columnar architecture of V1. Each column generates its own gamma SMO, which spreads radially from the column center. The column whose SMO was at its peak when the input from the LGN arrived responds first to the input volley of APs ($t_1$). As the SMO wave unfolds in space and time (expanding red shading in columns), subsequent volleys coincide with the SMO peak in different neighboring columns and generate a spatial pattern that reproduces the topography of the visual input on the retina. The output of V1 is compressed again before it is projected to V2. (b) The corresponding sequence of APs in time from (a). The AP pattern evoked by visual input in the retinal ganglion cells is broadcast as a gamma-aligned code to the LGN, where it is compressed and transferred as parallel spike volleys to V1. The columns in V1 decode it by phase coincidences of spikes with the SMO, and the original spike sequences are recovered.

Note that the reconstruction is highly sensitive to the topography of oscillations and the oscillation gradient over the cortical area of projection. In addition, it is sensitive to noise, which is a subject of other studies.

Corticocortical Information Exchange

One can assume the same mechanism to be in place for corticocortical information transfer. First, the APs of cortical pyramidal neurons in layers 5–6 have to be converted to a latency code of the form γ + φ in each cortical column before they are transferred to another column. This is achieved through converging/diverging synaptic connections, similar to those in the sensory nuclei of the thalamus (see section “LGN-V1 Projection”). The spikes generated in different neurons, each aligned to the local gamma phase, merge onto output-layer neurons, maintaining the γ + φ phase on all output neurons. These neurons fire APs in synchrony and relay the same spike times in parallel channels through a bundle of axons terminating in multiple distant cortical areas, reaching as far as the opposite hemisphere through the callosal interhemispheric connections. Because the originally labeled-line code is now compressed into the same code in every output neuron of the given column, it is critical that the originally labeled information can be decoded in different areas without addressing each axon by its origin. The challenge of corticocortical transfer, and its solution, are similar to those of sensory transfer: topographically organized information is transferred via channels with topographically uniform content.

Topography

Phase coding generates topographic patterns in the brain that are familiar to us. We demonstrated by simulations that a layer of uniformly distributed neurons that collectively support sinusoidal plane-wave propagation of SMOs generates representations typical of the grid cells observed in the rodent brain [53]. If gamma serves as the reference wave for neural information processing and provides the temporal unit for reconstructing information from phase, uniformly distributed gamma generators in the neocortex self-organize into columns in which the local gamma propagates radially. It is then conceivable that a neighborhood of columns synchronizes its information processing cycles in the form of a continuous spatial wave function, as long as the SMOs of the constituent columns are coherent. In contrast, columns with incoherent SMOs could maintain segregated information processing. Two adjacent gamma generators remain independent within the radius of a complete gamma cycle in space, whereas coherence can be maintained over multiple spatial gamma cycles. The radii of gamma generators depend on the propagation speed. We demonstrated by simulations that the phase gradient that provides maximal precision of reconstruction from gamma phases, i.e., the propagation speed of gamma waves in the cortex, must be close to 0.1 mm/ms [53]. Intriguingly, this is very close to the empirically described values across species and preparations [65–68]. Given this propagation speed and the gamma oscillation frequency, the cortical columns must be about 1 mm in diameter, which closely matches the 500 μm–2 mm range of cortical column sizes. Decreasing the frequency of the SMO increases the estimated column diameter, which is the case in the motor and premotor cortical areas.
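The scaling the column-size argument relies on is the spatial wavelength of a traveling wave, λ = v · T = v / f. The sketch evaluates it at the 0.1 mm/ms speed cited above for a few assumed frequencies. The helper name is hypothetical.

```python
def spatial_wavelength_mm(freq_hz, speed_mm_per_ms=0.1):
    """Distance a wave covers in one cycle: lambda = v * T, with T = 1000 / f ms."""
    return speed_mm_per_ms * 1000.0 / freq_hz

for f_hz in (20, 40, 60, 100):
    print(f"{f_hz:>3} Hz -> {spatial_wavelength_mm(f_hz):.2f} mm")
```

At 0.1 mm/ms, a 40 Hz wave has a 2.5 mm wavelength and a 100 Hz wave a 1 mm wavelength. Halving the frequency doubles the spatial scale, consistent with larger estimated columns at lower SMO frequencies. The text does not specify how a column's diameter relates to this wavelength (a full cycle or a fraction of one), so the sketch stops at the wavelength.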

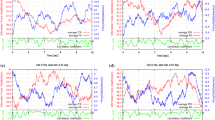

We discussed earlier that propagating theta oscillations cause AP phase precession when measured from extracellular electrodes, while the same APs maintain their phase lock to the intrinsic SMO (see sections “Correlation Between the Phase of Membrane Oscillations and Action Potential” and “Correlation Between the Phase of Local Field Potentials and Action Potentials”). This is also supported by empirical findings [36–38]. Here we illustrate how phase coding generates a biologically plausible topography before selective wiring takes place: we modeled the self-organization of orientation maps in the primary visual cortex without assigning orientation selectivity. First, we constructed a retinal array of 36 × 36 neurons consisting of 81 neuron groups, each collecting the input of 16 ganglion cells. These ganglion cells encoded the visual input by gamma-aligned spikes as described in section “Gamma Alignment.” The visual input was modeled by a set of oriented sinusoidal gratings with a single-cycle spatial frequency (Fig. 13.7). The 1,296 model ganglion cells converged on an array of 81 LGN neurons, each transferring the compressed code from 16 ganglion cells to a cortical array of 36 × 36 neurons matching the size of the retinal array. These cortical neurons were also assigned gamma SMOs with the same spatial phase gradient as the ganglion cells. In our simulations, the model cortical layer reconstructed the phase-encoded stimulus with a characteristic spatial transformation. Each orientation was mapped onto a slightly phase-shifted area of the cortical cell array, because the gamma phase difference in the time domain naturally mapped onto a spatial phase difference between locally generated gamma SMOs. Because, by construction, the gamma SMO displayed a similar spatial phase gradient in the retina and in the cortex, this spatial phase gradient enforced the mapping of different stimulus orientations to different spatial phases.
When the reconstruction from phase takes place in the cortex, the originally phase-encoded APs coincide with the cortical SMO of those neurons that are in the same topological position. Because of the retinotopy of cortical cells, the topography of APs in the cortex roughly reproduces the retinal input [53]. When we superimposed neuronal responses to different orientations, we observed a typical pinwheel pattern resembling the pinwheels observed empirically in columnarly organized visual cortex [69]. We emphasize that neither was a particular projection imposed on the architecture nor was orientation selectivity assigned to the cells in the model. Nevertheless, a pinwheel structure emerged from mapping the original temporal phase offsets into space. This illustrates how orientation selectivity may emerge from phase coding, and how these emergent maps could be stabilized over time by spike-timing-dependent plasticity that strengthens the synaptic connections.
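The bookkeeping of this simulation can be checked with a small sketch. A 36 × 36 array gives 1,296 ganglion cells, and grouping them 16 per LGN channel gives 81 channels. Two choices here are our assumptions, not stated in the text: that each group is a contiguous 4 × 4 block, and the grating parameterization. The helpers are hypothetical.

```python
import math

SIZE, BLOCK = 36, 4          # 36 x 36 ganglion cells; assumed 4 x 4 block per LGN channel
N_SIDE = SIZE // BLOCK       # 9 channels per side -> 81 LGN channels

def lgn_channel(i, j):
    """Index of the LGN channel that ganglion cell (i, j) converges on."""
    return (i // BLOCK) * N_SIDE + (j // BLOCK)

def grating(theta_rad, size=SIZE):
    """Single-cycle sinusoidal grating at orientation theta_rad, luminance in [0, 1]."""
    return [[0.5 + 0.5 * math.cos(2 * math.pi * (i * math.cos(theta_rad) + j * math.sin(theta_rad)) / size)
             for j in range(size)]
            for i in range(size)]

members = {}
for i in range(SIZE):
    for j in range(SIZE):
        members.setdefault(lgn_channel(i, j), []).append((i, j))

print(SIZE * SIZE, len(members), {len(cells) for cells in members.values()})  # 1296 81 {16}
```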

Computational model of the self-organization of visual orientation maps. (a) A set of oriented grating stimuli was applied to drive the retinal ganglion cells, one orientation at a time. The square-shaped stimulus covered the entire area of the retina. (b) The cortical activity induced by the corresponding stimulus set in (a). The hues represent the activity of neurons at each point of the 36 × 36 neuron space. (c) The luminance values were processed through latency-encoding gamma alignment, compressed into 81 channels in the LGN, and finally reconstructed in V1. (d) The combined orientation responses in V1, with the colors in (b) superimposed. (e) Empirical results of pinwheel structure [69]. Note that although orientation selectivity was not wired into the system, phase coding generated an orientation-selective pinwheel structure.

Time in the Brain

Tracking the real time of events in our environment is critical for survival. All physical and social interactions are time dependent. Often there is a very narrow time window of interaction with the environment, which, if missed, can have dramatic consequences. For a monkey grabbing a branch while jumping from tree to tree, or for a human steering a car away from a collision at an intersection, timing is vital. To achieve this temporal precision, the brain needs to integrate across a number of parallel sensory channels and across different modalities, such as sound and visual motion. The inherent problem of sensory processing is delay: the time it takes for the brain to access the true time of events. It takes time to compute time. There are three problems. The first is the combined effect of conduction delay and cumulative synaptic delay, which sets the boundary for perceptual delay and reaction time. The second is the variance of these delays across axons, fiber bundles, and sensory pathways (see section “The Problem of Conductivity and Synaptic Divergence”). The third is the discrete nature of sensory processing.

Conduction delay itself would not be a problem as long as the brain can compensate for it; Libet’s subjective referral is one example of how that can be accomplished [70]. However, the heterogeneity of axonal conduction velocities is difficult to explain away [71, 72]. The variation of thalamocortical delays in the visual pathway (>20–40 ms) exceeds the time window of spike-timing-dependent plasticity (±10 ms [73, 74]). Hence, it is critical for the brain to achieve the level of precision that cortical neurons, as coincidence detectors [75], need in order to detect true coincidences in the physical world. How can the brain combine color with motion when the latency difference between the two pathways is larger than 20 ms?

The solution we propose is plausible on the basis of physiological and anatomical facts. According to phase coding, the neural fingerprints of sensory events carry their own time tags. The spike-train packets encode the complete spatiotemporal pattern of a sensory event. The delay between two events is captured by the number of gamma cycles between them, and finer discrimination is achieved in the spatiotemporal domain as phase differences. Instead of registering events in absolute time, the phase captures the relative time of sensory events, such as a texture moving across a small patch of retina.

Because space is converted to time, time in the neural code is inextricably connected to space. Most importantly, the embedded time code makes the timing of sensory events immune to distortions due to the variance in conduction delays.
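The claim that the embedded time code is immune to conduction-delay variance can be illustrated with two pathways of different delay. The sketch assumes, following the coherence argument above, that each target's local gamma reference lags the source gamma by the same delay as the spikes it decodes. The 30 ms and 55 ms delays, the 20 ms cycle, and the function names are hypothetical.

```python
GAMMA_MS = 20.0   # assumed gamma cycle length

def relative_code(spike_ms, local_gamma_onset_ms):
    """Decode a spike against the local gamma reference as (cycles, phase_ms)."""
    rel = spike_ms - local_gamma_onset_ms
    n = int(rel // GAMMA_MS)
    return n, rel - n * GAMMA_MS

def interval_ms(code_a, code_b):
    """Time between two events reconstructed from their (cycles, phase) codes."""
    (na, pa), (nb, pb) = code_a, code_b
    return (nb - na) * GAMMA_MS + (pb - pa)

# Two events 42 ms apart at the source (5 ms and 47 ms after a common gamma onset).
# Pathway A adds 30 ms of delay, pathway B 55 ms; each target's local gamma is
# assumed to lag the source gamma by the same amount as the spikes it receives.
code_a = relative_code(5.0 + 30.0, 0.0 + 30.0)
code_b = relative_code(47.0 + 55.0, 0.0 + 55.0)
print(102.0 - 35.0, interval_ms(code_a, code_b))   # 67.0 42.0
```

The raw arrival times differ by 67 ms, but the cycle-and-phase codes recover the true 42 ms interval, because each spike is read against a reference that shares its delay.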

The notion that the brain references the times and locations of events by the cycle and phase of ongoing oscillations has been corroborated by recent magnetoencephalographic evidence: perceptual adaptation to audiovisual asynchrony correlated with a change in the phase of entrained oscillations in the auditory cortex relative to the visual stimuli, and it predicted the individual’s perceived simultaneity of audiovisual events [76]. In support of discrete sensory sampling, gamma-frequency oscillations have been observed in the insect nervous system (specifically in the mushroom body), in fish, and in the mammalian nervous system [77, 78] (see section “The Origin of Retinal Gamma” above). Moreover, one gamma cycle is 20–25 ms long, which matches the average critical flicker fusion frequency (15 Hz for rod-mediated vision and 60 Hz at very high illumination intensities) [79]. Motion perception in the mammalian brain relies on this discrete sampling of visual space. In summary, we propose that the temporal basis for transferring time-labeled spatial information across a broad range of species and sensory organs is gamma SMO based. This mechanism guarantees the separation of the temporal information in the spike packets from the transfer and processing delays caused by conduction in neuronal circuitries.

Benefits of Phase Coding

What are the biological advantages of the phase code? Why perform so much work only to encrypt sensory messages for such a short time before decoding them? This brings us back to the question of why coding is necessary at all. The answer applies to other biological systems that use coding, such as DNA with its complementary nucleotide sequences: the information in these messages has to (1) be maintained in time, (2) be transferred over a distance, (3) be read by diverse targets, (4) support selective partial readout, (5) be noise resistant, and (6) be reproducible. We argue that phase coding in the brain meets these requirements:

1. The phase code maintains information by explicitly encoding time and space. Because the time between events is encoded by the distance the gamma wave travels, and distance is encoded in phase, the sequence of APs inextricably combines time and space in the phase code. These sequences can be maintained and reproduced in synaptic circuitries through known plasticity rules and reverberation.

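2. The phase code can be broadcast over a distance as a binary code carried by APs. Conduction delays do not affect the code, because the code carries its own timing: the information resides in the phase relations among spikes rather than in their absolute arrival times.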

3. The code must be readable by multiple targets, because the same sensory information has to be processed by several structures (e.g., the retinal input is used by both the optic tectum and thalamic targets).

4. Different subsystems read different parts of the code, so the code has to be composed in a way that allows these parts to be parsed. The gamma SMO provides a biologically plausible reference for this parsing because it is locally generated and ubiquitous. The interference principle implements the target-selective readout (section “Interference Principle”).

5. Noise resistance. The code has to be transferred with minimal distortion, and code compression provides this. Merging spikes across parallel channels makes the code self-correcting: if one AP fails to be transmitted, divergent connections at the next level fill in the missing spike from neighboring axons.

6. Reproducibility. The code can be maintained in polysynaptic circuitries, as in (1), and remains accessible to other structures at later times. It can also be recompressed in cortical columns before being transferred to other columns. In this way, cortical columns naturally reproduce the sensory information and distribute it to vast numbers of other cortical columns.
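Item 2's claim that conduction delays leave the code intact can be illustrated with a toy calculation. The sketch assumes an idealized 40 Hz reference, a hypothetical four-spike packet, and a single conduction delay that is identical on every axon; all names and numbers are illustrative assumptions. It shows that a common delay changes each spike's phase relative to a fixed oscillation, but leaves the phase relations within the packet unchanged.

```python
import math

GAMMA_HZ = 40.0               # assumed gamma frequency (25 ms cycle)
PERIOD_S = 1.0 / GAMMA_HZ


def absolute_phase(t_s, period_s=PERIOD_S):
    """Phase (radians) of a spike relative to a reference whose cycles start at t = 0."""
    return 2 * math.pi * ((t_s / period_s) % 1.0)


def relative_phases(spike_times_s, period_s=PERIOD_S):
    """Phase of each spike relative to the first spike of the same packet (radians)."""
    first = min(spike_times_s)
    return [2 * math.pi * (t - first) / period_s for t in spike_times_s]


packet = [0.0012, 0.0047, 0.0105, 0.0180]   # hypothetical spike packet within one cycle (s)
delay_s = 0.0183                             # hypothetical delay, the same on every axon
arrived = [t + delay_s for t in packet]

# Absolute phases shift with the delay...
print([round(absolute_phase(t), 3) for t in packet])
print([round(absolute_phase(t), 3) for t in arrived])
# ...but the phase relations inside the packet are preserved.
print(all(math.isclose(a, b, abs_tol=1e-9)
          for a, b in zip(relative_phases(packet), relative_phases(arrived))))
```

This covers only a delay shared by all axons; if delays differ across axons (temporal dispersion), the relative phases shift as well, and the argument then depends on how that dispersion is handled downstream.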

In addition to these benefits, sensory systems can enhance the spatial resolution of the signal through intrinsically induced or movement-evoked traveling waves of membrane potential over the receptor surface. One example of enhanced spatial resolution is hyperacuity (discussed in section “Retina-LGN projection”), which illustrates how converting spatial information into the temporal domain can increase the spatial resolution of the transmitted sensory signal. Hence, at cortical targets, the information can be reconstructed at a resolution higher than that of the receptor array.
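How converting space into time can beat the receptor spacing can be sketched with a deliberately simple model. The sketch assumes a point edge that drifts across a one-dimensional receptor array at a constant speed known to the decoder, each receptor firing once when the edge crosses it, with Gaussian spike-timing jitter. All names and parameter values are hypothetical; this is a generic illustration of spatial-to-temporal conversion, not the retina–LGN model discussed earlier in the chapter.

```python
import random

SPACING = 1.0     # receptor spacing (arbitrary units): the purely spatial resolution limit
SPEED = 0.1       # assumed drift speed of the image, units per ms
JITTER_MS = 0.5   # assumed spike-timing jitter (standard deviation, ms)


def crossing_times(x0, receptors, speed=SPEED, jitter_ms=JITTER_MS, rng=None):
    """Spike time (ms) of each receptor to the right of an edge that starts at x0
    and drifts rightward; each receptor fires once, with Gaussian timing jitter."""
    rng = rng or random.Random(0)          # fixed seed for a reproducible example
    times = []
    for xk in receptors:
        if xk > x0:                        # only receptors the edge actually reaches
            t = (xk - x0) / speed + rng.gauss(0.0, jitter_ms)
            times.append((xk, t))
    return times


def decode_position(times, speed=SPEED):
    """Invert t_k = (x_k - x0) / v for every receptor and average the estimates."""
    estimates = [xk - speed * t for xk, t in times]
    return sum(estimates) / len(estimates)


receptors = [k * SPACING for k in range(10)]   # receptors at 0, 1, ..., 9
x0 = 2.37                                      # true edge position, between receptors 2 and 3
est = decode_position(crossing_times(x0, receptors))
nearest = min(receptors, key=lambda xk: abs(xk - x0))   # purely spatial readout

print(f"true {x0}, temporal estimate {est:.3f}, nearest receptor {nearest}")
```

In this toy model the position error is set by speed × timing jitter rather than by receptor spacing, which is the qualitative point behind hyperacuity; real eye movements and receptor responses are far less regular.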

Finally, phase coding is not simply an encoding and decoding principle that restores the original information with 100% accuracy. The cortical representation is not meant to be an identical reconstruction of the retinal image. Instead, because of the target diversity and partial readout principles (3 and 4), different structures extract different components of the sensory information. One manifestation of such distortion is the topographical mapping of angular space onto the surface of the visual cortex. Another is the mapping of visual orientations in retinal coordinates to cortical orientation space, which transforms the temporal interference pattern into a spatial phase map. This mapping reproduces the empirically observed pinwheel structure without predefined orientation selectivity (section “Topography”).

Transformations of the code and selective readout at different levels of information processing are constrained by the topology of the oscillation fields at those levels. The relationship between the retinal oscillations and the oscillation fields in V1 determines which information is read out, as well as the nature of the distortion of the code. However, instead of compromising the code, this distortion enhances its usefulness: the orientation mapping, for example, facilitates the abstraction of orientation features from the retinal image. Hence, subsequent processing stages can extract different features from the same input simply by changing the structure of the oscillation field. Because phase coding redefines the goal of neuronal computation by focusing on the transformation of oscillation fields between interfacing stages of sensory and cortical processing, it calls for a new computational toolset for investigating the interaction between discrete APs and the continuous field of membrane oscillations at multiple scales. This approach will ultimately deepen our understanding of how local cellular mechanisms of information processing make up the fabric of large-scale computations in the brain.

References

Kara P, Reinagel P, Reid RC. Low response variability in simultaneously recorded retinal, thalamic, and cortical neurons. Neuron [Internet]. 2000 Sep 1 [cited 2013 Mar 27];27(3):635–46. Available from: http://www.cell.com/neuron/fulltext/S0896-6273(00)00072-6

Schmolesky MT, Wang Y, Hanes DP, Thompson KG, Leutgeb S, Schall JD, et al. Signal timing across the macaque visual system. J Neurophysiol [Internet]. 1998 Jun 1 [cited 2014 Jun 22];79(6):3272–8. Available from: http://jn.physiology.org/content/79/6/3272

Carrasco A, Lomber SG. Neuronal activation times to simple, complex, and natural sounds in cat primary and nonprimary auditory cortex. J Neurophysiol [Internet]. 2011 Sep 1 [cited 2014 Jun 22];106(3):1166–78. Available from: http://jn.physiology.org/content/106/3/1166

Spruston N, Johnston D. Perforated patch-clamp analysis of the passive membrane properties of three classes of hippocampal neurons. J Neurophysiol [Internet]. 1992 Mar 1 [cited 2014 Jun 22];67(3):508–29. Available from: http://jn.physiology.org/content/67/3/508

Branco T, Häusser M. Synaptic integration gradients in single cortical pyramidal cell dendrites. Neuron [Internet]. 2011 Mar 10 [cited 2014 May 24];69(5):885–92. Available from: http://www.cell.com/article/S0896627311001036/fulltext

Pouille F, Scanziani M. Enforcement of temporal fidelity in pyramidal cells by somatic feed-forward inhibition. Science [Internet]. 2001 Aug 10 [cited 2014 May 27];293(5532):1159–63. Available from: http://www.sciencemag.org/content/293/5532/1159.abstract

Reid RC. Divergence and reconvergence: multielectrode analysis of feedforward connections in the visual system. Prog Brain Res [Internet]. 2001 Jan [cited 2013 Mar 27];130:141–54. Available from: http://www.ncbi.nlm.nih.gov/pubmed/11480272

Hodgkin AL, Huxley AF. A quantitative description of membrane current and its application to conduction and excitation in nerve. J Physiol [Internet]. 1952 Aug [cited 2014 May 26];117(4):500–44. Available from: http://www.pubmedcentral.nih.gov/articlerender.fcgi?artid=1392413&tool=pmcentrez&rendertype=abstract

Shadlen MN, Newsome WT. The variable discharge of cortical neurons: implications for connectivity, computation, and information coding. J Neurosci [Internet]. 1998;18(10):3870–96. Available from: http://www.ncbi.nlm.nih.gov/entrez/query.fcgi?cmd=Retrieve&db=PubMed&dopt=Citation&list_uids=9570816

Shmiel T, Drori R, Shmiel O, Ben-Shaul Y, Nadasdy Z, Shemesh M, et al. Neurons of the cerebral cortex exhibit precise interspike timing in correspondence to behavior. Proc Natl Acad Sci U S A [Internet]. 2005;102(51):18655–7. Available from: http://www.ncbi.nlm.nih.gov/entrez/query.fcgi?cmd=Retrieve&db=PubMed&dopt=Citation&list_uids=16339894

Nadasdy Z, Hirase H, Czurko A, Csicsvari J, Buzsaki G. Replay and time compression of recurring spike sequences in the hippocampus. J Neurosci [Internet]. 1999;19(21):9497–507. Available from: http://www.ncbi.nlm.nih.gov/entrez/query.fcgi?cmd=Retrieve&db=PubMed&dopt=Citation&list_uids=10531452

Hatsopoulos N, Geman S, Amarasingham A, Bienenstock E. At what time scale does the nervous system operate? Neurocomputing. 2003;52–54:25–9.

Okun M, Naim A, Lampl I. The subthreshold relation between cortical local field potential and neuronal firing unveiled by intracellular recordings in awake rats. J Neurosci [Internet]. 2010 Mar 24 [cited 2013 Mar 4];30(12):4440–8. Available from: http://www.jneurosci.org/content/30/12/4440.abstract

Bennett MV, Zukin RS. Electrical coupling and neuronal synchronization in the mammalian brain. Neuron [Internet]. 2004 Feb [cited 2014 May 27];41(4):495–511. Available from: http://www.sciencedirect.com/science/article/pii/S0896627304000431

Anastassiou CA, Perin R, Markram H, Koch C. Ephaptic coupling of cortical neurons. Nat Neurosci [Internet]. 2011 Feb [cited 2013 May 23];14(2):217–23. Available from: http://dx.doi.org/10.1038/nn.2727

Alonso A, Llinas RR. Subthreshold Na+-dependent theta-like rhythmicity in stellate cells of entorhinal cortex layer II. Nature [Internet]. 1989;342(6246):175–7. Available from: http://www.ncbi.nlm.nih.gov/entrez/query.fcgi?cmd=Retrieve&db=PubMed&dopt=Citation&list_uids=2812013

Llinas R, Yarom Y. Properties and distribution of ionic conductances generating electroresponsiveness of mammalian inferior olivary neurones in vitro. J Physiol [Internet]. 1981;315:569–84. Available from: http://www.ncbi.nlm.nih.gov/entrez/query.fcgi?cmd=Retrieve&db=PubMed&dopt=Citation&list_uids=7310722

Llinás R, Yarom Y. Oscillatory properties of guinea-pig inferior olivary neurones and their pharmacological modulation: an in vitro study. J Physiol [Internet]. 1986 Jul [cited 2013 Jun 2];376:163–82. Available from: http://www.pubmedcentral.nih.gov/articlerender.fcgi?artid=1182792&tool=pmcentrez&rendertype=abstract

White JA, Klink R, Alonso A, Kay AR. Noise from voltage-gated ion channels may influence neuronal dynamics in the entorhinal cortex. J Neurophysiol [Internet]. 1998;80(1):262–9. Available from: http://www.ncbi.nlm.nih.gov/entrez/query.fcgi?cmd=Retrieve&db=PubMed&dopt=Citation&list_uids=9658048

Hutcheon B, Yarom Y. Resonance, oscillation and the intrinsic frequency preferences of neurons. Trends Neurosci [Internet]. 2000;23(5):216–22. Available from: http://www.sciencedirect.com/science/article/B6T0V-405SNMS-9/2/1ce5d50ef414d6a3cc174e6e9598ae0b

Lagier S, Carleton A, Lledo P-M. Interplay between local GABAergic interneurons and relay neurons generates gamma oscillations in the rat olfactory bulb. J Neurosci [Internet]. 2004 May 5 [cited 2013 May 22];24(18):4382–92. Available from: http://www.jneurosci.org/content/24/18/4382.short

Desmaisons D, Vincent J-D, Lledo P-M. Control of action potential timing by intrinsic subthreshold oscillations in olfactory bulb output neurons. J Neurosci [Internet]. 1999 Dec 15 [cited 2013 Jun 2];19(24):10727–37. Available from: http://www.jneurosci.org/content/19/24/10727.short

Balu R, Larimer P, Strowbridge BW. Phasic stimuli evoke precisely timed spikes in intermittently discharging mitral cells. J Neurophysiol [Internet]. 2004 Aug 1 [cited 2013 May 29];92(2):743–53. Available from: http://jn.physiology.org/content/92/2/743.full

Gray CM, Singer W. Stimulus-specific neuronal oscillations in orientation columns of cat visual cortex. Proc Natl Acad Sci U S A. 1989;86(5):1698–702.

Vinck M, Lima B, Womelsdorf T, Oostenveld R, Singer W, Neuenschwander S, et al. Gamma-phase shifting in awake monkey visual cortex. J Neurosci [Internet]. 2010 Jan 27 [cited 2013 May 24];30(4):1250–7. Available from: http://www.jneurosci.org/content/30/4/1250.long

Castelo-Branco M, Neuenschwander S, Singer W. Synchronization of visual responses between the cortex, lateral geniculate nucleus, and retina in the anesthetized cat. J Neurosci [Internet]. 1998;18(16):6395–410. Available from: http://www.ncbi.nlm.nih.gov/entrez/query.fcgi?cmd=Retrieve&db=PubMed&dopt=Citation&list_uids=9698331

Montemurro MA, Rasch MJ, Murayama Y, Logothetis NK, Panzeri S. Phase-of-firing coding of natural visual stimuli in primary visual cortex. Curr Biol [Internet]. 2008;18(5):375–80. Available from: http://www.ncbi.nlm.nih.gov/entrez/query.fcgi?cmd=Retrieve&db=PubMed&dopt=Citation&list_uids=18328702

Kayser C, Montemurro MA, Logothetis NK, Panzeri S. Spike-phase coding boosts and stabilizes information carried by spatial and temporal spike patterns. Neuron [Internet]. 2009;61(4):597–608. Available from: http://www.ncbi.nlm.nih.gov/entrez/query.fcgi?cmd=Retrieve&db=PubMed&dopt=Citation&list_uids=19249279

Luczak A, Bartho P, Harris KD. Gating of sensory input by spontaneous cortical activity. J Neurosci [Internet]. 2013 Jan 23 [cited 2014 May 26];33(4):1684–95. Available from: http://www.jneurosci.org/content/33/4/1684

Laurent G. Dynamical representation of odors by oscillating and evolving neural assemblies. Trends Neurosci [Internet]. 1996;19(11):489–96. Available from: http://www.ncbi.nlm.nih.gov/entrez/query.fcgi?cmd=Retrieve&db=PubMed&dopt=Citation&list_uids=8931275

O’Keefe J, Recce ML. Phase relationship between hippocampal place units and the EEG theta rhythm. Hippocampus. 1993;3(3):317–30.

Skaggs WE, McNaughton BL, Wilson MA, Barnes CA. Theta phase precession in hippocampal neuronal populations and the compression of temporal sequences. Hippocampus. 1996;6(2):149–72.

Hafting T, Fyhn M, Moser M-B, Moser EI. Phase precession and phase locking in entorhinal grid cells, 2006 Neuroscience Meeting. Atlanta, GA: Society for Neuroscience; 2006.

Vinck M, Lima B, Womelsdorf T, Oostenveld R, Singer W, Neuenschwander S, et al. Gamma-phase shifting in awake monkey visual cortex. J Neurosci [Internet]. 2010;30(4):1250–7. Available from: http://www.ncbi.nlm.nih.gov/entrez/query.fcgi?cmd=Retrieve&db=PubMed&dopt=Citation&list_uids=20107053

Pesaran B, Pezaris JS, Sahani M, Mitra PP, Andersen RA. Temporal structure in neuronal activity during working memory in macaque parietal cortex. Nat Neurosci. 2002;5(8):805–11.

Harvey CD, Collman F, Dombeck DA, Tank DW. Intracellular dynamics of hippocampal place cells during virtual navigation. Nature [Internet]. 2009;461(7266):941–6. Available from: http://www.ncbi.nlm.nih.gov/entrez/query.fcgi?cmd=Retrieve&db=PubMed&dopt=Citation&list_uids=19829374

Lubenov EV, Siapas AG. Hippocampal theta oscillations are travelling waves. Nature [Internet]. 2009;459(7246):534–9. Available from: http://www.ncbi.nlm.nih.gov/entrez/query.fcgi?cmd=Retrieve&db=PubMed&dopt=Citation&list_uids=19448612