Abstract

Energy minimization methods are a very popular tool in image and signal processing. This chapter deals with images defined on a discrete finite set. The energies under consideration can be differentiable or not or convex or not. Analytical results on the minimizers of different energies are provided that reveal salient features of the images recovered in this way, as a function of the shape of the energy itself. An intrinsic mutual relationship between energy minimization and modeling via the choice of the energy is thus established. Examples and illustrations corroborate the presented results. Applications that take benefit from these results are presented as well.

Access provided by Autonomous University of Puebla. Download reference work entry PDF

Similar content being viewed by others

Keywords

- Energy Minimization Methods

- Multiplicative Noise Removal

- Compressed Sensing

- Data Fidelity Term

- Nonsmooth Data-fidelity

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

1 Introduction

In numerous applications, an unknown image or a signal \(u_{o} \in \mathbb{R}^{p}\) is represented by data \(v \in \mathbb{R}^{q}\) according to an observation model, called also forward model

where \(A: \mathbb{R}^{p} \rightarrow \mathbb{R}^{q}\) is a (linear or nonlinear) transform. When u is an m × n image, its pixels are arranged columnwise into a p-length real vector, where p = mn and the original u[i, j] is identified with \(u[(i - 1)m + j]\). Some typical applications are, for instance, denoising, deblurring, segmentation, zooming and super-resolution, reconstruction in inverse problems, coding and compression, feature selection, and compressive sensing. In all these cases, recovering a good estimate \({\hat{u}}\) for u o needs to combine the observation along with a prior and desiderata on the unknown u o . A common way to define such an estimate is

where \(\mathcal{F}: \mathbb{R}^{p} \times \mathbb{R}^{q} \rightarrow \mathbb{R}\) is called an energy (or an objective), \(U \subset \mathbb{R}^{p}\) is a set of constraints, \(\Psi \) is a data-fidelity term, \(\Phi \) brings prior information on u o , and β > 0 is a parameter which controls the trade-off between \(\Psi \) and \(\Phi \).

The term \(\Psi \) ensures that \(\hat{u}\) satisfies (1) quite faithfully according to an appropriate measure. The noise n is random and a natural way to derive \(\Psi \) from (1) is to use probabilities; see, e.g., [7, 32, 37, 56]. More precisely, if \(\pi (v\vert u)\) is the likelihood of data v, the usual choice is

For instance, if A is a linear operator and \(v = Au + n\) where n is additive independent and identically distributed (i.i.d.) zero-mean Gaussian noise, one finds that

This remains quite a common choice partly because it simplifies calculations.

The role of \(\Phi \) in (3) is to push the solution to exhibit some a priori known or desired features. It is called prior or regularization or penalty term. In many image processing applications, \(\Phi \) is of the form

where for any i ∈ { 1, …, r}, \({\mathrm{D}_{i}}: \mathbb{R}^{p} \rightarrow \mathbb{R}^{s}\), for s an integer \(s\geqslant 1\), are linear operators and \(\|\cdot \|\) is usually the ℓ 1 or the ℓ 2 norm. For instance, the family \(\{{\mathrm{D}_{i}}\} \equiv \left \{{\mathrm{D}_{i}}:\in \{ 1,\ldots,r\}\right \}\) can represent the discrete approximation of the gradient or the Laplacian operator on u or the finite differences of various orders or the combination of any of these with the synthesis operator of a frame transform or the vectors of the canonical basis of \(\mathbb{R}^{r}\). Note that s = 1 if {D i } are finite differences or a discrete Laplacian; then

And if {D i } are the basis vectors of \(\mathbb{R}^{r}\), one has ϕ( | D i u | ) = ϕ( | u[i] | ). In (6), \(\phi: \mathbb{R}_{+}\mapsto \mathbb{R}\) is quite a “general” function, often called a potential function (PF). A very standard assumption is that

H1

\(\phi: \mathbb{R}_{+} \rightarrow \mathbb{R}\) is proper, lower semicontinuous (l.s.c.) and increasing on \(\mathbb{R}_{+}\), with ϕ(t) > ϕ(0) for any t > 0.

Some typical examples for ϕ are given in Table 1 and their plots in Fig. 1.

Plots of the PFs given in Table 1. PFs with \(\phi '(0^{+}) = 0\) (- - -), PFs with ϕ′(0+) > 0 (—)

Remark 1.

If ϕ′(0+) > 0 the function t → ϕ( | t | ) is nonsmooth at zero in which case \(\Phi \) is nonsmooth on \(\cup _{i=1}^{r}[w \in \mathbb{R}^{p}:\mathrm{ D}_{i}w = 0]\). Conversely, \(\phi '(0^{+}) = 0\) leads to a smooth at zero t → ϕ( | t | ). With the PF (f13), \(\Phi \) leads to the counting function, commonly called the ℓ 0-norm.

For the human vision, an important requirement is that the prior \(\Phi \) promotes smoothing inside homogeneous regions but preserves sharp edges. According to a fine analysis conducted in the 1990s and summarized in [7], ϕ preserves edges if H1 holds as if H2, stated below, holds true as well:

H2

This assumption is satisfied by all PFs in Table 1 except for (f1) in case if α = 2. Note that there are numerous other heuristics for edge preservation.

1.1 Background

Energy minimization methods, as described here, are at the crossroad of several well-established methodologies that are briefly sketched below.

-

Bayesian maximum a posteriori (MAP) estimation using Markov random field (MRF) priors. Such an estimation is based on the maximization of the posterior distribution \(\pi (u\vert v) =\pi (v\vert u)\pi (u)/Z,\) where π(u) is the prior model for u o and Z = π(v) can be seen as a constant. Equivalently, \(\hat{u}\) minimizes with respect to u the energy

$$\displaystyle{\mathcal{F}(u,v) = -\ln \pi (v\vert u) -\ln \pi (u).}$$Identifying these first term above with \(\Psi (\cdot,v)\) and the second one with \(\Phi \) shows the basis of the equivalence. Classical papers on MAP energies using MRF priors are [14–16, 20, 51, 56]. Since the pioneering work of Geman and Geman [56], various nonconvex PFs ϕ were explored in order to produce images involving neat edges; see, e.g., [54, 55, 65]. MAP energies involving MRF priors are also considered in many books, such as [32, 53, 64]. For a pedagogical account, see [96].

-

Regularization for ill-posed inverse problems was initiated in the book of Tikhonov and Arsenin [93] in 1977. The main idea can be stated in terms of the stabilization of this kind of problems. Useful textbooks in this direction are, e.g., [61, 69, 94] and especially the recent [91]. This methodology and its most recent achievements are nicely discussed from quite a general point of view in Chapter Regularization Methods for Ill-Posed Problems in this handbook.

-

Variational methods are related to PDE restoration methods and are naturally developed for signals and images defined on a continuous subset \(\Omega \subset \mathbb{R}^{d}\), d = 1, 2, …; for images d = 2. Originally, the data-fidelity term is of the form (5) for A = Id and \(\Phi (u) =\int _{\Omega }\phi (\|Du\|_{2})dx\), where ϕ is a convex function as those given in Table 1 (top). Since the beginning of the 1990s, a remarkable effort was done to find heuristics on ϕ that enable to recover edges and breakpoints in restored images and signals while smoothing the regions between them; see, e.g., [7, 13, 26, 31, 59, 64, 73, 85, 87]. One of the most successful is the Total Variation (TV) regularization corresponding to ϕ(t) = t, which was proposed by Rudin, Osher, and Fatemi in [87]. Variational methods were rapidly applied along with data-fidelity terms \(\Psi \). The use of differential operators D k of various orders \(k\geqslant 2\) in the prior \(\Phi \) has been recently investigated; see, e.g., [22, 23]. More details on variational methods for image processing can be found in several textbooks like [3, 7, 91].

For numerical implementation, the variational functional is discretized and \(\Phi \) takes the form of (6). Different discretization approaches are considered; see, e.g., [2, 27, 95]

The equivalence between these approaches has been considered in several seminal papers; see, e.g., [37, 63]. The state of the art and the relationship among all these methodologies are nicely outlined in the recent book of Scherzer et al. [91]. This book gives a brief historical overview of these methodologies and attaches a great importance to the functional analysis of the presented results.

1.2 The Main Features of the Minimizers as a Function of the Energy

Pushing curiosity ahead leads to various additional questions. One observes that frequently data fidelity and priors are modeled separately. It is hence necessary to check if the minimizer \(\hat{u}\) of \(\mathcal{F}(\cdot,v)\) obeys all information contained in the data model \(\Psi \) as well as in the prior \(\Phi \). Hence the question: how the prior \(\Phi \) and the data-fidelity \(\Psi \) are effectively involved in \(\hat{u}\) – a minimizer of \(\mathcal{F}(\cdot,v)\). This leads to formulate the following inverse modeling problem:

This problem was posed in a systematic way and studied since [74, 75]. The point of view provided by (7) is actually adopted by many authors. Problem (7) is totally general and involves crucial stakes:

-

It yields rigorous and strong results on the minimizers \(\hat{u}\).

-

Such a knowledge enables a real control on the solution – the reconstructed image or signal \(\hat{u}\).

-

Conversely, it opens new perspectives for modeling.

-

It enables the conception of specialized energies \(\mathcal{F}\) that fulfill the requirements in applications.

-

This kind of results can help to derive numerical schemes using knowledge on the solutions.

Problem (7) remains open. The results presented here concern images, signals, and data living on finite grids. In this practical framework, the results in this chapter are quite general since they hold for energies \(\mathcal{F}\) which can be convex or nonconvex or smooth or nonsmooth, and results address local and global minimizers.

1.3 Organization of the Chapter

Some preliminary notions and results that help the reading of the chapter are sketched in Sect. 2. Section 3 is devoted to the regularity of the (local) minimizers of \(\mathcal{F}(\cdot,v)\) with a special focus on nonconvex regularization. Section 4 shows how edges are enhanced using nonconvex regularization. In Sect. 5 it is shown that nonsmooth regularization leads typically to minimizers that are sparse in the space spanned by {D i }. Conversely, Sect. 6 exhibits that the minimizers relevant to nonsmooth data fidelity achieve an exact fit for numerous data samples. Section 7 considers results when both \(\Psi \) and \(\Phi \) are nonsmooth. Illustrations and applications are presented.

2 Preliminaries

In this section we set the notations and recall some classical definitions and results on minimization problems.

2.1 Notation

We systematically denote by \(\hat{u}\) a (local) minimizer of \(\mathcal{F}(\cdot,v)\). It is explicitly specified when \(\hat{u}\) is a global minimizer.

-

D j n – The differential operator of order n with respect to the jth component of a function.

-

v[i] – The ith entry of vector v.

-

# J – The cardinality of the set J.

-

J c = I∖J – The complement of J ⊂ I in I where I is a set.

-

\(K^{\perp }\) – The orthogonal complement of a sub-vector space \(K \subset \mathbb{R}^{n}\).

-

A ∗ – The transpose of a matrix (or a vector) where A is real valued.

-

\(A \succ 0\) (\(A\succeq 0\)) – The matrix A is positive definite (positive semi-definite)

-

\(\mathbb{1}_{n} \in \mathbb{R}^{n}\) –The n-length vector composed of ones, i.e., \(\mathbb{1}_{n}[i] = 1\), \(1\leqslant i\leqslant n\).

-

\(\mathbb{L}^{n}\) – The Lebesgue measure on \(\mathbb{R}^{n}\).

-

\(\mathrm{Id}\) – The identity operator.

-

\(\|.\|_{\rho }\) – A vector or a matrix ρ-norm.

-

\(\mathbb{R}_{+}\stackrel{\mathrm{def}}{=}\{t \in \mathbb{R}\:\ t\geqslant 0\}\) and \(\mathbb{R}_{+}^{{\ast}}\stackrel{\mathrm{def}}{=}\{t \in \mathbb{R}\:\ t > 0\}\).

-

TV – Total Variation.

-

{e 1, …, e n } – The canonical basis of \(\mathbb{R}^{n}\), i.e., e i [i] = 1 and e i [j] = 0 if i ≠ j.

2.2 Reminders and Definitions

Definition 1.

A function \(\mathcal{F}: \mathbb{R}^{p} \rightarrow \mathbb{R}\) is coercive if \(\lim\limits_{\|u\|\rightarrow \infty }\mathcal{F}(u) = +\infty \).

A special attention being dedicated to nonsmooth functions, we recall some basic facts.

Definition 2.

Given \(v \in \mathbb{R}^{q}\), the function \(\mathcal{F}(\cdot,v): \mathbb{R}^{p} \rightarrow \mathbb{R}\) admits at \(\hat{u} \in \mathbb{R}^{p}\) a one-sided derivative in a direction \(w \in \mathbb{R}^{p}\), denoted \(\delta _{1}\mathcal{F}(\hat{u},v)(w)\), if the following limit exists:

where the index 1 in δ 1 means that derivatives with respect to the first variable of \(\mathcal{F}\) are addressed.

Here \(\delta _{1}\mathcal{F}(\hat{u},v)(w)\) is a right-side derivative; the left-side derivative is \(-\delta _{1}\mathcal{F}(\hat{u},v)(-w)\). If \(\mathcal{F}(\cdot,v)\) is differentiable at \(\hat{u}\), then \(\delta _{1}\mathcal{F}(\hat{u},v)(w) = D_{1}\mathcal{F}(\hat{u},v)w\) where D 1 stands for differential with respect to the first variable (see paragraph “Notation”). For \(\phi: \mathbb{R}_{+} \rightarrow \mathbb{R}\), we denote by ϕ′(t −) and ϕ′(t +) its left-side and right-side derivatives, respectively.

The classical necessary condition for a local minimum of a (nonsmooth) function is recalled [60, 86]:

Theorem 1.

If \(\mathcal{F}(\cdot,v)\) has a local minimum at \(\hat{u} \in \mathbb{R}^{p}\), then \(\delta _{1}\mathcal{F}(\hat{u},v)(w)\geqslant 0\), for every \(w \in \mathbb{R}^{p}\).

If \(\mathcal{F}(\cdot,v)\) is Fréchet differentiable at \(\hat{u}\), one finds \(D_{1}\mathcal{F}(\hat{u},v) = 0\).

Rademacher’s theorem states that if \(\mathcal{F}\) is proper and Lipschitz continuous on \(\mathbb{R}^{p}\), then the set of points in \(\mathbb{R}^{p}\) at which \(\mathcal{F}\) is not Fréchet differentiable form a set of Lebesgue measure zero [60, 86]. Hence \(\mathcal{F}(\cdot,v)\) is differentiable at almost every u. However, when \(\mathcal{F}(\cdot,v)\) is nondifferentiable, its minimizers are typically located at points where \(\mathcal{F}(\cdot,v)\) is nondifferentiable; see, e.g., Example 1 below.

Example 1.

Consider \(\mathcal{F}(u,v) = \frac{1} {2}\|u - v\|^{2} +\beta \vert u\vert \) for β > 0 and \(u,v \in \mathbb{R}\). The minimizer \(\hat{u}\) of \(\mathcal{F}(\cdot,v)\) reads as

Clearly, \(\mathcal{F}(\cdot,v)\) is not Fréchet differentiable only at zero. For any \(\vert v\vert \leqslant \beta\), the minimizer of \(\mathcal{F}(\cdot,v)\) is located precisely at zero.

The next corollary shows what can happen if the necessary condition in Theorem 1 fails.

Corollary 1.

Let \(\mathcal{F}\) be differentiable on \((\mathbb{R}^{p} \times \mathbb{R}^{q})\setminus \Theta _{0}\) where

Given \(v \in \mathbb{R}^{q}\), if \(\hat{u}\) is a (local) minimizer of \(\mathcal{F}(\cdot,v)\) then

Proof.

If \(\hat{u}\) is a local minimizer, then by Theorem 1, \(\delta _{1}\mathcal{F}(\hat{u},v)(-w)\geqslant 0\), hence

If \((\hat{u},v) \in \Theta _{0}\), the necessary condition (9) cannot hold. □

Example 2.

Suppose that \(\Psi \) in (3) is a differentiable function for any \(v \in \mathbb{R}^{q}\). For a finite set of positive numbers, say θ 1, …, θ k , suppose that the PF ϕ is differentiable on \(\mathbb{R}_{+}\setminus \cup _{j=1}^{k}\theta _{j}\) and that

Given a (local) minimizer \(\hat{u}\), denote

Define \(F(\hat{u},v) = \Psi (\hat{u},v) +\beta \sum _{i\in I\setminus I_{\hat{u}}}\phi (\|{\mathrm{D}_{i}}\hat{u}\|_{2})\), which is differentiable at \(\hat{u}\). Clearly, \(\mathcal{F}(\hat{u},v) = F(\hat{u},v) + \beta \sum _{i\in I_{\hat{u}}}\phi (\|{\mathrm{D}_{i}}\hat{u}\|_{2})\). Applying the necessary condition (9) for \(w =\hat{ u}\) yields

In particular, one has \(\sum _{i\in I_{\hat{u}}}\phi '\left (\|{\mathrm{D}_{i}}\hat{u}\|_{2}^{-}\right )\leqslant \sum _{i\in I_{\hat{u}}}\phi '\left (\|{\mathrm{D}_{i}}\hat{u}\|_{2}^{+}\right )\), which contradicts the assumption on ϕ′ in (10). It follows that if \(\hat{u}\) is a (local) minimizer of \(\mathcal{F}(\cdot,v)\), then \(I_{\hat{u}} = \varnothing \) and

A typical case is the PF (f6) in Table 1, namely, ϕ(t) = min{α t 2, 1}. Then k = 1 and \(\theta _{1} = \frac{1} {\sqrt{\alpha }}\).

The following existence theorem can be found, e.g., in the textbook [35].

Theorem 2.

For \(v \in \mathbb{R}^{q}\), let \(U \subset \mathbb{R}^{p}\) be a nonempty and closed subset and \(\mathcal{F}(\cdot,v): U \rightarrow \mathbb{R}\) a lower semicontinuous (l.s.c.) proper function. If U is unbounded (with possibly \(U = \mathbb{R}^{p}\) ), suppose that \(\mathcal{F}(\cdot,v)\) is coercive. Then there exists \(\hat{u} \in U\) such that \(\mathcal{F}(\hat{u},v) =\inf\limits_{u\in U}\mathcal{F}(u,v)\).

This theorem gives only sufficient conditions for the existence of a minimizer. They are not necessary, as seen in the example below.

Example 3.

Let \(\mathcal{F}: \mathbb{R}^{2} \times \mathbb{R}^{2} \rightarrow \mathbb{R}\) involve (f6) in Table 1 and read

For any v, \(\mathcal{F}(\cdot,v)\) is not coercive since it is bounded by β in the direction spanned by \(\left \{(0,u[2])\right \}\). However, its global minimum is strict and is reached for \(\hat{u}[1] =\hat{ u}[2] = v[1]\) with \(\mathcal{F}(\hat{u},v) = 0\).

To prove the existence of optimal solutions for more general energies, we refer to the textbook [9].

Most of the results summarized in this chapter exhibit the behavior of the minimizer points \(\hat{u}\) of \(\mathcal{F}(\cdot,v)\) under variations of v. In words, they deal with local minimizer functions.

Definition 3.

Let \(\mathcal{F}: \mathbb{R}^{p} \times \mathbb{R}^{q} \rightarrow \mathbb{R}\) and \(O \subseteq \mathbb{R}^{q}\). We say that \(\mathcal{U}: O \rightarrow \mathbb{R}^{p}\) is a local minimizer function for the family of functions \(\mathcal{F}(\cdot,O) = \left \{\mathcal{F}(\cdot,v): v \in O\right \}\) if for any v ∈ O, the function \(\mathcal{F}(\cdot,v)\) reaches a strict local minimum at \(\mathcal{U}(v)\).

When \(\mathcal{F}(\cdot,v)\) is proper, l.s.c., and convex, the standard results below can be evoked; see [35, 49].

Theorem 3.

Let \(\mathcal{F}(\cdot,v): \mathbb{R}^{p} \rightarrow \mathbb{R}\) be proper, convex, l.s.c., and coercive for every \(v \in \mathbb{R}^{q}\).

-

(i)

Then \(\mathcal{F}(\cdot,v)\) has a unique (global) minimum which is reached for a closed convex set of minimizers \(\left \{\hat{\mathcal{U}}(v)\right \}\stackrel{\mathrm{def}}{=}\left \{\hat{u} \in \mathbb{R}^{p}: \mathcal{F}(\hat{u},v) =\inf \limits _{u\in U}\mathcal{F}(u,v)\right \}\).

-

(ii)

If in addition \(\mathcal{F}(\cdot,v)\) is strictly convex, then there is a unique minimizer \(\hat{u} = \mathcal{U}(v)\) (which is also global). So \(\mathcal{F}(\mathbb{R}^{p},v)\) has a unique minimizer function \(v\mapsto \mathcal{U}(v)\).

The next lemma, which can be found, e.g., in [52], addresses the regularity of the local minimizer functions when \(\mathcal{F}\) is smooth. It can be seen as a variant of the implicit functions theorem.

Lemma 1.

Let \(\mathcal{F}\) be \(\mathcal{C}^{m}\), \(m\geqslant 2\), on a neighborhood of \((\hat{u},v) \in \mathbb{R}^{p} \times \mathbb{R}^{q}\). Suppose that \(\mathcal{F}(\cdot,v)\) reaches at \(\hat{u}\) a local minimum such that \(D_{1}^{2}\mathcal{F}(\hat{u},v) \succ 0\). Then there are a neighborhood \(O \subset \mathbb{R}^{q}\) containing v and a unique \(\mathcal{C}^{m-1}\) local minimizer function \(\mathcal{U}: O \rightarrow \mathbb{R}^{p}\), such that \(D_{1}^{2}\mathcal{F}(\mathcal{U}(\nu ),\nu ) \succ 0\) for every ν ∈ O and \(\mathcal{U}(v) =\hat{ u}\).

This lemma is extended in several directions in this chapter.

Definition 4.

Let \(\phi: [0,+\infty ) \rightarrow \mathbb{R}\) and \(m\geqslant 0\) an integer. We say that ϕ is \(\mathcal{C}^{m}\) on \(\mathbb{R}_{+}\), or equivalently that \(\phi \in \mathcal{C}^{m}(\mathbb{R}_{+})\), if and only if \(t\mapsto \phi (\vert t\vert )\) is \(\mathcal{C}^{m}\) on \(\mathbb{R}\).

By this definition, ϕ′(0) = 0. In Table 1, left, \(\phi \in \mathcal{C}^{1}(\mathbb{R}_{+})\) for (f1) if α < 2, \(\phi \in \mathcal{C}^{2}(\mathbb{R}_{+})\) for (f4), while for (f2), (f3), and (f7)–(f9) we find \(\phi \in \mathcal{C}^{\infty }(\mathbb{R}_{+})\).

3 Regularity Results

Here, we focus on the regularity of the minimizers of \(\mathcal{F}: \mathbb{R}^{p} \times \mathbb{R}^{q} \rightarrow \mathbb{R}\) of the form

where \(A \in \mathbb{R}^{q\times p}\) and for any i ∈ I we have \({\mathrm{D}_{i}} \in \mathbb{R}^{s\times p}\) for \(s\geqslant 1\). Let us denote by D the following rs × p matrix:

When A in (11) is not injective, a standard assumption in order to have regularization is

H3

\(\ker (A) \cap \ker (\mathrm{D}) =\{ 0\}\).

H3 is trivial if \(\mathrm{rank}\,A = p\) or rank D = p. Often, \(\ker (\mathrm{D}) =\mathrm{ span}(\mathbb{1}_{p})\) and \(A\mathbb{1}_{p}\neq 0\), so H3 holds.

3.1 Some General Results

We first verify the conditions on \(\mathcal{F}(\cdot,v)\) in (11) that enable Theorems 2 and 3 to be applied. Since H1 holds, \(\mathcal{F}(\cdot,v)\) in ( 11 ) is l.s.c. and proper.

-

1.

\(\mathcal{F}(\cdot,v)\) in (11) is coercive for any \(v \in \mathbb{R}^{q}\) at least in one of the following cases:

-

Rank(A) = p and \(\phi: \mathbb{R}_{+}\mapsto \mathbb{R}_{+}\) is nondecreasing.

-

H1 and H3 hold and \(\lim\limits_{t\nearrow \infty }\phi (t) = \infty \) (e.g., (f1)–(f5),(f8), (f10), and (f12) in Table 1).

By Theorem 2, \(\mathcal{F}(\cdot,v)\) has minimizers.

-

-

2.

For any \(v \in \mathbb{R}^{q}\), the energy \(\mathcal{F}(\cdot,v)\) in (11) is convex and coercive if H1 and H3 hold for a convex ϕ. Then the claim in Theorem 3(3) holds true.

-

3.

Further, \(\mathcal{F}(\cdot,v)\) in (11) is strictly convex and coercive for any \(v \in \mathbb{R}^{q}\) if \(\phi\) satisfies H1 and if one of the following assumptions holds:

-

Rank(A) = p and ϕ is convex.

-

H3 holds and ϕ is strictly convex.

Then the claim in Theorem 3(3) holds. Further, if \(\mathcal{F}\) is \(\mathcal{C}^{m}\) for \(m\geqslant 2\), then the minimizer function \(\mathcal{U}: \mathbb{R}^{q} \rightarrow \mathbb{R}^{p}\) (see Definition 3) is \(\mathcal{C}^{m-1}\) by Lemma 1.

-

However, the PFs involved in (11) used for signal and image processing are often nonconvex, bounded, or nondifferentiable. One extension of the standard results is given in the next section.

3.2 Stability of the Minimizers of Energies with Possibly Nonconvex Priors

Related questions have been considered in critical point theory, sometimes in semi-definite programming; the well-posedness of some classes of smooth optimization problems was addressed in [42]. Other results have been established on the stability of the local minimizers of general smooth energies [52]. Typically, these results are quite abstract to be applied directly to energies of the form (11).

Here the assumptions stated below are considered.

H4

The operator A in (11) satisfies rank A = p, i.e., A ∗ A is invertible.

H5

The PF ϕ in (11) is \(\mathcal{C}^{0}(\mathbb{R}_{+})\) and \(\mathcal{C}^{m}\), \(m\geqslant 2\), on \(\mathbb{R}_{+}^{{\ast}}\) with \(0\leqslant \phi '(0^{+}) < \infty \).

Under H1, H2, H4, and H5, the prior \(\Phi \) (and hence \(\mathcal{F}(\cdot,v)\)) in (11) can be nonconvex and in addition nonsmooth. By H1 and H4, \(\mathcal{F}(\cdot,v)\) in (11) admits a global minimum \(v \in \mathbb{R}^{q}\) – see Item 1 in section “Some General Results.” However, \(\mathcal{F}(\cdot,v)\) can present numerous local minima.

-

Energies \(\mathcal{F}\) with nonconvex and possibly nondifferentiable PFs ϕ are frequently used in engineering problems since they were observed to give rise to high-quality solutions \(\hat{u}\). It is hence important to have good knowledge on the stability of the obtained solutions.

The results summarized in this section provide the state of the art for energies of the form (11).

3.2.1 Local Minimizers

The stability of local minimizers is an important matter in its own right for several reasons. Often, a nonconvex energy is minimized only locally, in the vicinity of some initial guess. Second, the minimization schemes that guarantee the finding of the global minimum of a nonconvex objective function are exceptional. The practically obtained solutions are usually only local minimizers.

The statements below are a simplified version of the results established in [44].

Theorem 4.

Let \(\mathcal{F}(\cdot,v)\) in (11) satisfy H1, H2, H 4, and H 5. Then there exists a closed subset \(\Theta \subset \mathbb{R}^{q}\) whose Lebesgue measure is \(\mathbb{L}^{q}(\Theta ) = 0\) such that for any \(v \in \mathbb{R}^{q}\setminus \Theta \), there exists an open subset \(O \subset \mathbb{R}^{q}\) with v ∈ O and a local minimizer function (see Definition 3 ) \(\mathcal{U}: O \rightarrow \mathbb{R}^{p}\) which is \(\mathcal{C}^{m-1}\) on O and fulfills \(\hat{u} = \mathcal{U}(v)\).

Since \(\Theta \) is closed in \(\mathbb{R}^{q}\) and \(\mathbb{L}^{q}(\Theta) = 0\), the stated properties are generic.

3.2.2 Commentary on the Assumptions

All assumptions H1, H2, and H5 bearing on the PF ϕ are nonrestrictive; they address all PFs in Table 1 except for (f13) which is discontinuous at zero. The assumption H4 cannot be avoided, as seen in Example 4.

Example 4.

Consider \(\mathcal{F}: \mathbb{R}^{2} \times \mathbb{R} \rightarrow \mathbb{R}\) given by

where \(v \equiv v[1]\). The minimum is obtained after a simple computation.

In this case, assumption H4 fails and there is a local minimizer function only for \(v \in \left [-\frac{1} {2}, \frac{1} {2}\right ]\).

3.2.3 Other Results

The derivations in [44] reveal several other practical results.

-

1.

If \(\phi \in \mathcal{C}^{2}(\mathbb{R}_{+})\), see Definition 4, then \(\forall v \in \mathbb{R}^{q}\setminus \Theta \), every local minimizer \(\hat{u}\) of \(\mathcal{F}(u,v)\) is strict and \(D_{1}^{2}\mathcal{F}(\hat{u},v) \succ 0\). Consequently, Lemma 1 is extended since the statement holds true \(\forall v \in \mathbb{R}^{q}\setminus \Theta \).

-

For real data v – a random sample of \(\mathbb{R}^{q}\) – whenever \(\mathcal{F}(\cdot,v)\) is differentiable and satisfies the assumptions of Theorem 4 , it is a generic property that local minimizers \(\hat{u}\) are strict and their Hessians \(D_{1}^{2}\mathcal{F}(\hat{u},v)\) are positive definite.

-

-

2.

Using Corollary 1, the statement of Theorem 4 holds true if \(\phi '(0^{+}) = 0\) and if there is τ > 0 such that \(\phi '(\tau ^{-}) >\phi '(\tau ^{+})\). This is the case of the PF (f6) in Table 1.

-

3.

If ϕ′(0+) > 0, define

$$\displaystyle{ \hat{J}\stackrel{\mathrm{def}}{=}\left \{i \in I\:\ {\mathrm{D}_{i}}\hat{u} = 0\right \}\ \ \mbox{ and}\quad K_{\hat{J}}\stackrel{\mathrm{def}}{=}\left \{w \in \mathbb{R}^{p}\:\ {{\mathrm{D}_{i}}}w = 0,\ \forall i \in \hat{ J}\right \}. }$$(12)Then \(\forall v \in \mathbb{R}^{q}\setminus \Theta \), every local minimizer \(\hat{u}\) of \(\mathcal{F}(u,v)\) is strict and

-

(a)

\(D_{1}\mathcal{F}\vert _{K_{\hat{J}}}(\hat{u},v) = 0\ \ \mbox{ and}\ \ D_{1}^{2}\mathcal{F}\vert _{K_{\hat{J}}}(\hat{u},v) \succ 0\) – a sufficient condition for a strict minimum on \(K_{\hat{J}}\).

-

(b)

\(\delta _{1}\mathcal{F}(\hat{u},v)(w) > 0,\ \ \forall w \in K_{\hat{J}}^{\perp }\setminus \{0\}\) – a sufficient condition for a strict minimum on \(K_{\hat{J}}^{\perp }\).

-

Here (a) and (b) provide a sufficient condition for a strict (local) minimum of \(\mathcal{F}(\cdot,v)\) at \(\hat{u}\) (a direct consequence of [80, Theorem 1]). These conditions are satisfied at the (local) minimizers \(\hat{u}\) of \(\mathcal{F}(\cdot,v)\) for every \(v \in \mathbb{R}^{q}\) , except for a negligible subset of \(\mathbb{R}^{q}\) , in which case Lemma 1 can be applied.

One can interpret these results as follows:

-

(a)

3.2.4 Global Minimizers of Energies with for Possibly Nonconvex Priors

The results on the global minimizers of (11) presented next are extracted from [45].

Theorem 5.

Assume that \(\mathcal{F}(\cdot,v)\) in (11) satisfy H1, H2, H 4, and H 5. Then there exists a subset \(\hat{\Theta } \subset \mathbb{R}^{q}\) such that \(\mathbb{L}^{q}(\hat{\Theta }) = 0\) and the interior of \(\mathbb{R}^{q}\setminus \hat{\Theta }\) is dense in \(\mathbb{R}^{q}\), and for any \(v \in \mathbb{R}^{q}\setminus \hat{\Theta }\) the energy \(\mathcal{F}(\cdot,v)\) has a unique global minimizer. Furthermore, the global minimizer function \(\hat{\mathcal{U}}: \mathbb{R}^{q}\setminus \hat{\Theta } \rightarrow \mathbb{R}^{p}\) is \(\mathcal{C}^{m-1}\) on an open subset of \(\mathbb{R}^{q}\setminus \hat{\Theta }\) which is dense in \(\mathbb{R}^{q}\).

-

Otherwise said, in a real-world problem there is no chance of getting data v such that the energy \(\mathcal{F}(\cdot,v)\) (11) has more than one global minimizer.

Nonetheless, \(\hat{\Theta }\) plays a crucial role for the recovery of edges; this issue is developed in Sect. 4.

3.3 Nonasymptotic Bounds on Minimizers

The aim here is to give nonasymptotic analytical bounds on the local and the global minimizers \(\hat{u}\) of \(\mathcal{F}(\cdot,v)\) in (11) that hold for all PFs ϕ in Table 1. Related questions have mainly been considered in particular cases or asymptotically; see, e.g., [4, 71, 92]. In [51] the mean and the variance of the minimizers \(\hat{u}\) for strictly convex and differentiable functions ϕ have been explored.

The bounds provided below are of practical interest for the initialization and the convergence analysis of numerical schemes. The statements given below are extracted from [82].

Bounds on the restored data.

One compares the “restored” data \(A\hat{u}\) with the given data v.

H6

Consider the alternative assumptions:

-

\(\phi '(0^{+}) = 0\) and \(\phi \in \mathcal{C}^{1}(\mathbb{R}_{+}\setminus \Theta _{0})\) where the set \(\Theta _{0} = \left \{t > 0:\phi '(t^{-}) >\phi '(t^{+})\right \}\) is at most finite.

-

ϕ′(0 + ) > 0 and ϕ is \(\mathcal{C}^{1}\) on \(\mathbb{R}_{+}^{{\ast}}\).

The set \(\Theta _{0}\) allows us to address the PF given in (f6). Let us emphasize that under H1 and H6, the PF ϕ can be convex or nonconvex.

Theorem 6.

Consider \(\mathcal{F}(\cdot,v)\) of the form (11) where H1, H 3, and H 6 hold. For every \(v \in \mathbb{R}^{q}\), if \(\mathcal{F}(\cdot,v)\) has a (local) minimum at \(\hat{u}\), then

Comments on the results.

This bound holds for every (local) minimizer of \(\mathcal{F}(\cdot,v)\). If A is a uniform tight frame (i.e., A ∗ A = Id), one has

The mean of restored data.

In many applications, the noise corrupting the data can be supposed to have a mean equal to zero. When A = Id, it is well known that mean\((\hat{u}) =\) mean(v); see, e.g., [7]. However, for a general A one has

The requirement \(A\mathbb{1}_{p} \propto\mathbb{1}_{q}\) is quite restrictive. In the simple case when ϕ(t) = t 2, \(\ker (\mathrm{D}) =\mathbb{1}_{rs}\) and A is square and invertible, it is easy to see that this is also a sufficient condition. Finally, if \(A\neq \mathrm{Id}\), then generally mean \((\hat{u})\neq\) mean \((v)\).

The residuals for edge-preserving regularization.

A bound on the data-fidelity term at a (local) minimizer \(\hat{u}\) of \(\mathcal{F}(\cdot,v)\) shall be given. The edge-preserving H2 (see Sect. 1) is replaced by a stronger edge-preserving assumption:

H7

\(\|\phi '\|_{\infty }\stackrel{\mathrm{def}}{=}\max \left \{\sup _{t\geqslant 0}\vert \phi '(t^{+})\vert,\,\sup _{ t>0}\vert \phi '(t^{-})\vert \right \} < \infty \).

Except for (f1) and (f13), all other PFs in Table 1 satisfy H7. Note that when ϕ′(0+) > 0 and H7 hold, one usually has \(\|\phi '\|_{\infty } =\phi '(0^{+})\).

Theorem 7.

Let \(\mathcal{F}(\cdot,v)\) be of the form (11) where \(\mathrm{rank}\,(A) = q\leqslant p\), and H1, H 3, H 6, and H 7 hold. For every \(v \in \mathbb{R}^{q}\), if \(\mathcal{F}(\cdot,v)\) has a (local) minimum at \(\hat{u}\), then

Let us emphasize that the bound in (14) is independent of data v and that it is satisfied for any local or global minimizer \(\hat{u}\) of \(\mathcal{F}(\cdot,v)\). (Recall that for a real matrix C with entries C[i, j], one has \(\|C\|_{1} =\max _{j}\sum _{i}\vert C[i,j]\vert \) and \(\|C\|_{\infty } =\max _{i}\sum _{j}\vert C[i,j]\vert \); see, e.g., [35].)

If \(\mathrm{D}\) corresponds to a discrete gradient operator for a two-dimensional image, \(\|\mathrm{D}\|_{1} = 4\). If in addition A = Id, (14) yields

The result of this theorem may seem surprising. In a statistical setting, the quadratic data-fidelity term \(\|Au - v\|_{2}^{2}\) in (11) corresponds to white Gaussian noise on the data, which is unbounded. However, if ϕ is edge preserving according to H7, any (local) minimizer \(\hat{u}\) of \(\mathcal{F}(\cdot,v)\) gives rise to a noise estimate \((v - A\hat{u})[i]\), \(1\leqslant i\leqslant q\) that is tightly bounded as stated in (14).

-

Hence the model for Gaussian noise on the data v is distorted by the solution \(\hat{u}\).

-

When \(\mathcal{F}(\cdot,v)\) is convex and coercive, (14) shows that a good initialization for a minimization algorithm should be a point u 0 such that Au 0 = v , e.g., the minimum norm solution of \(\|v -\hat{ u}\|_{2}\) given by \(u_{0} = A^{{\ast}}(AA^{{\ast}})^{-1}v\).

4 Nonconvex Regularization

4.1 Motivation

A permanent requirement is that the energy \(\mathcal{F}\) favors the recovery of neat edges. Since the pioneering work of Geman and Geman [56], various nonconvex \(\Phi \) in (3) have been proposed [15, 54, 55, 64, 68, 72, 85]. Indeed, the relevant minimizers exhibit neat edges between homogeneous regions. However, these nonconvex energies are tiresome to control and to minimize (only few algorithms are proved to find the global minimizer in particular cases). In order to avoid these numerical intricacies, since the 1990s, an important effort was done to derive convex edge-preserving PFs; see, e.g., [20, 31, 57, 64, 87] and [7] for an overview. The most popular convex edge-preserving PF was derived by Rudin, Osher, and Fatemi [87]: it amounts to ϕ = t, for {D i } yielding the discrete gradient operator, the ℓ 2-norm in (6) (see Sect. 1), and the relevant \(\Phi \) is called the Total Variation (TV) regularization.

In Fig. 2 one sees that the height of the edges is better recovered when ϕ is nonconvex, compared to the convex TV regularization. The same effect can also be observed, e.g., in Figs. 7, 8, and 10.

A considerable progress in nonconvex minimization has been realized. For energies of the form (2)–(3) we refer to [5, 19, 88, 89].

-

This section is devoted to explain why edges are nicely recovered using a nonconvex ϕ.

4.2 Assumptions on Potential Functions ϕ

Consider \(\mathcal{F}(\cdot,v)\) of the form (11) where \({\mathrm{D}_{i}}: \mathbb{R}^{p} \rightarrow \mathbb{R}^{1}\), i ∈ I = { 1, …, r}, i.e.,

and \(\phi: \mathbb{R}_{+} \rightarrow \mathbb{R}_{+}\) satisfies H1 (see Sect. 1), H6 section “Nonasymptotic Bounds on Minimizers,” and H8 given below

H8

\(\phi\) is \(\mathcal{C}^{2}\) and \(\phi '(t)\geqslant 0\) on \(\mathbb{R}_{+}^{{\ast}}\), and \(\inf _{t\in \mathbb{R}_{+}^{{\ast}}}\phi ''(t) < 0\) with \(\lim\limits_{t\rightarrow \infty }\phi ''(t) = 0\);

as well as one of the following assumptions:

H9

\(\phi '(0^{+}) = 0\), and there are two numbers τ > 0 and \(\mathcal{T} \in (\tau,\infty )\) such that \(\phi ''(t)\geqslant 0\) on [0,τ], ϕ″(t) < 0 on (τ,∞), ϕ″(t) decreases on \((\tau,\mathcal{T} )\) and increases on \((\mathcal{T},\infty )\).

H10

ϕ′(0 + ) > 0, and limt→0 ϕ″(t) < 0 is well defined and ϕ″(t) < 0 strictly increases on (0,∞).

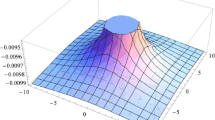

These assumptions are illustrated in Fig. 3. They hold for all nonconvex PFs in Table 1, except for (f6) and (f13) which are presented separately. Further, these assumptions are easy to relax.

Illustration of the assumptions in two typical cases – (f7) and (f11) – in Table 1

The results presented below come from [81].

4.3 How It Works on \({\boldsymbol{\mathbb{R}}}\)

-

This example illustrates the main facts that explain why edges are enhanced when ϕ is nonconvex, satisfying H1 , and H 8 along with either H 9 or H 10.

Let \(\mathcal{F}: \mathbb{R} \times \mathbb{R} \rightarrow \mathbb{R}\) be given by

The (local) minimality conditions for \(\hat{u}\) of \(\mathcal{F}(\cdot,v)\) read as

-

If \(\phi '(0^{+}) = 0\) or \(\left [\phi '(0^{+}) > 0\ \mathrm{and}\ \hat{u}\neq 0\right ]\) : \(\hat{u} +\beta \phi '(\hat{u}) = v\) and \(1 +\beta \phi ''(\hat{u})\geqslant 0\).

-

If ϕ′(0+) > 0 and \(\hat{u} = 0\) : \(\vert v\vert \leqslant \beta \phi '(0^{+})\).

To simplify, we assume that \(v\geqslant 0\). Define

One has θ 0 = 0 if ϕ′(0+) > 0 and \(0 <\theta _{0} < \mathcal{T} <\theta _{1}\) if \(\phi '(0^{+}) = 0\). A few calculations yield

-

1.

For every \(v \in \mathbb{R}_{+}\) no minimizer lives in (θ 0, θ 1) (cf. Fig. 4).

-

2.

One computes 0 < ξ 0 < ξ 1 such that (cf. Fig. 4)

-

a.

If \(0\leqslant v\leqslant \xi _{1}\), \(\mathcal{F}(\cdot,v)\) has a (local) minimizer \({\hat{u}_{0}} \in [0,\theta _{0}]\), hence \({\hat{u}_{0}}\) is subject to a strong smoothing.

-

b.

If \(v\geqslant \xi _{0}\), \(\mathcal{F}(\cdot,v)\) has a (local) minimizer \({\hat{u}_{1}}\geqslant \theta _{1}\), hence \({\hat{u}_{1}}\) is subject to a weak smoothing.

-

c.

If v ∈ [ξ 0, ξ 1] then \(\mathcal{F}(\cdot,v)\) has two local minimizers, \({\hat{u}_{0}}\) and \({\hat{u}_{1}}\).

-

a.

-

3.

There is ξ ∈ (ξ 0, ξ 1) such that \(\mathcal{F}(\cdot,\xi )\) has two global minimizers, \(\mathcal{F}{(\hat{u}_{0}},\xi ) = \mathcal{F}{(\hat{u}_{1}},\xi )\), as seen in Fig. 5;

-

a.

If 0 < v < ξ, the unique global minimizer is \(\hat{u} =\hat{ u}_{0}\).

-

b.

If v > ξ, the unique global minimizer is \(\hat{u} =\hat{ u}_{1}\).

-

a.

-

4.

The global minimizer function \(v\mapsto \mathcal{U}(v)\) is discontinuous at ξ and \(\mathcal{C}^{1}\)-smooth on \(\mathbb{R}_{+}\setminus \{\xi \}\).

Item 1 is the key for the recovery of either homogeneous regions or high edges. The minimizer \({\hat{u}_{0}}\) (see Items 2a and 3a) corresponds to the restoration of homogeneous regions, while \({\hat{u}_{1}}\) (see Items 2b and 3b) corresponds to edges. Item 3 corresponds to a decision for the presence of an edge at the global minimizer. Since {ξ} is closed and \(\mathbb{L}^{1}\{\xi \} = 0\), Item 4 confirms the results of section “Global Minimizers of Energies with for Possibly Nonconvex Priors.”

4.4 Either Smoothing or Edge Enhancement

(A) Case Φ ′ (0 + )=0.

Below the case depicted in Figs. 4, left, and 5, left, is extended to \(\mathbb{R}^{p}\).

Theorem 8.

Let \(\mathcal{F}(\cdot,v)\) be of the form (15) where H1, H 3, H 8, and H 9 hold, and {Di : i ∈ I} are linearly independent. Set \(\mu \stackrel{\mathrm{def}}{=}\max _{1\leqslant i\leqslant r}\|\mathrm{D}^{{\ast}}(\mathrm{DD}^{{\ast}})^{-1}e_{i}\|_{2}\). For \(\beta > \frac{2\mu ^{2}\;\|A^{{\ast}}A\|_{ 2}} {\vert \phi ''(\mathcal{T} )\vert }\), there are \(\theta _{0} \in (\tau,\mathcal{T} )\) and \(\theta _{1} \in (\mathcal{T},\infty )\) such that \(\forall v \in \mathbb{R}^{q}\), if \(\hat{u}\) is a (local) minimizer of \(\mathcal{F}(\cdot,v)\), then

In many imaging problems, {D i } are not linearly independents. If {D i } are linearly dependent, the result (16) holds true for all (local) minimizers \(\hat{u}\) that are locally homogeneous on regions that are connected with respect to {D i }. Otherwise, one recovers both high edges and smooth transitions, as seen in Fig. 8a. When ϕ is convex, all edges are smoothed, as one can observe in Fig. 7a.

The PF ϕ(t) = min{α t 2, 1} (f6) in Table 1 does not satisfy assumptions H8 and H9. From Corollary 1 and Example 2 (section “Reminders and Definitions”), any (local) minimizer \(\hat{u}\) of \(\mathcal{F}(\cdot,v)\) obeys

Proposition 1 below addresses only the global minimizers of \(\mathcal{F}(\cdot,v)\).

Proposition 1.

Let \(\mathcal{F}(\cdot,v)\) be given by (15) where \(\phi (t) =\min \{\alpha t^{2},1\}\), {D : i ∈ I} are linearly independent and \(\mathrm{rank}\,(A)\geqslant p - r\geqslant 1\). If \(\mathcal{F}(\cdot,v)\) has a global minimizer at \(\hat{u}\), then

where B is a matrix depending only on A and D.

If D = Id, then B = A. If u one-dimensional signal and \({\mathrm{D}_{i}}u = u[i] - u[i + 1]\), \(1\leqslant i\leqslant p - 1\), one has \(B = (\mathrm{Id} -\frac{1} {p}\mathbb{1}\mathbb{1}^{T})AH\) where \(H \in \mathbb{R}^{p\times p}\) is upper triangular composed of ones.

In Proposition 1, set \(\theta _{0} = \frac{\gamma } {\sqrt{\alpha }}\quad \mathrm{and}\quad \theta _{1} = \frac{1} {\sqrt{\alpha }\gamma }\) for \(\gamma \stackrel{\mathrm{def}}{=}\max _{i\in I}\Gamma _{i} < 1\).

Let us define the following subsets:

One can interpret the results of Theorem 8 and Proposition 1 as follows:

-

The pixels in \({\hat{J}_{0}}\) form homogeneous regions with respect to {D i }, whereas the pixels in \({\hat{J}_{1}}\) are break points.

In particular, if {D i } correspond to first-order differences, \({\hat{J}_{0}}\) addresses smoothly varying regions where \(\vert {\mathrm{D}_{i}}\hat{u}\vert \leqslant \theta _{0}\) , while \({\hat{J}_{1}}\) corresponds to edges higher than θ 1 −θ 0.

(B) Case Φ ′ (0 + ) > 0.

Here the results are stronger without assumptions on {D i }. This case corresponds to Figs. 4, right, and 5, right.

Theorem 9.

Consider \(\mathcal{F}(\cdot,v)\) of the form (15) where H 3 holds and ϕ satisfies H1, H 8, and H 10. Let \(\beta > \frac{2\mu ^{2}\;\|A^{{\ast}}A\|_{ 2}} {\vert \lim\limits_{t\searrow 0}\phi ''(t)\vert }\), where μ > 0 is a constant depending only on {Di }. Then \(\exists \theta _{1} > 0\) such that \(\forall v \in \mathbb{R}^{q}\), every (local) minimizer \(\hat{u}\) of \(\mathcal{F}(\cdot,v)\) satisfies

The results of Theorem 9 were extended to energies involving box constraints in [33].

The “0-1” PF (f13) in Table 1 does not satisfy H8 and H10 since it is discontinuous at 0.

Proposition 2.

Let \(\mathcal{F}(\cdot,v)\) in (15) be defined for \(\phi (0) = 0,\ \phi (t) = 1\ \mbox{ if}\ t > 0\), i.e., (f13), {Di } be linearly independent and \(\mathrm{rank}\,A\geqslant p - r\geqslant 1\). If \(\mathcal{F}(\cdot,v)\) has a global minimum at \(\hat{u}\), then

where the matrix B depends only on D and on A.

In (20), B is the same as in Proposition 1. For \(\theta _{1}\stackrel{\mathrm{def}}{=}\min _{i\in I} \frac{\sqrt{\beta }} {\|Be_{i}\|}\), it is clear that (20) holds.

Let

Using this notation, the results of Theorem 9 and Proposition 2 show that:

-

The indexes in \({\hat{J}_{0}}\) address regions in \(\hat{u}\) that can be called strongly homogeneous (since \(\vert {\mathrm{D}_{i}}\hat{u}\vert = 0\)), while \({\hat{J}_{1}}\) addresses breakpoints where \(\vert {\mathrm{D}_{i}}\hat{u}\vert \geqslant \theta _{1}\).

If {D i } are first-order differences, \(\hat{u}\) is neatly segmented : \({\hat{J}_{0}}\) corresponds to constant regions, while \({\hat{J}_{1}}\) describes all edges and they are higher than θ 1.

Direct segmentation of an image from data transformed via a general (nondiagonal) operator A remains a difficult task using standard methods. The result in (19), Theorem 9, tells us that such a segmentation is naturally involved in the minimizers \(\hat{u}\) of \(\mathcal{F}(\cdot,v)\), for any operator A. This effect can be observed, e.g., on Figs. 8b, d and 11d.

(C) Illustration: Deblurring of an image from noisy data.

The original image u o in Fig. 6a presents smoothly varying regions, constant regions, and sharp edges. Data in Fig. 6b correspond to \(v = a {\ast} u_{o} + n\), where a is a blur with entries \(a_{i,j} =\exp \left (-(i^{2} + j^{2})/12.5\right )\) for \(-4\leqslant i,j\leqslant 4\), and n is white Gaussian noise yielding 20 dB of SNR. The amplitudes of the original image are in the range of [0, 1. 32] and those of the data in [−5, 50]. In all restored images, {D i } correspond to the first-order differences of each pixel with its 8 nearest neighbors. In all figures, the obtained minimizers are displayed on the top. Just below, the sections corresponding to rows 54 and 90 of the restored images are compared with the same rows of the original image.

The restorations in Fig. 7 are obtained using convex PFs ϕ while those in Fig. 8 using nonconvex PFs ϕ. Edges are sharp and high in Fig. 8 where ϕ is nonconvex, which corroborates the results in paragraphs (A) and (B). In Fig. 8b, d ϕ is nonconvex and ϕ′(0 + ) > 0 in addition. As stated in Theorem 9, in spite of the fact that A is nondiagonal (and ill-conditioned), the restored images are fully segmented and the edges between constant pieces are high.

5 Nonsmooth Regularization

-

Observe that the minimizers corresponding to ϕ′(0+) > 0 (nonsmooth regularization) in Figs. 2 b, c, 7 b, 8 b, d, 10 a–c, and 11 d are constant on numerous regions. This section is aimed to explain and to generalize this observation.

Consider

where \(\Psi: \mathbb{R}^{p} \times \mathbb{R}^{q} \rightarrow \mathbb{R}\) is any explicit or implicit \(\mathcal{C}^{m}\)-smooth function for \(m\geqslant 2\) and \({\mathrm{D}_{i}}: \mathbb{R}^{p}\mapsto \mathbb{R}^{s}\), ∀i ∈ I = { 1, …, r}, are general linear operators for any integer \(s\geqslant 1\). It is assumed that ϕ satisfies H1 along with

H11

ϕ is \(\mathcal{C}^{2}\) -smooth on \(\mathbb{R}_{+}^{{\ast}}\) and ϕ′(0 + ) > 0.

Note that \(\Psi \) and ϕ can be convex or nonconvex. Let us define the set-valued function \(\mathcal{J}\) on \(\mathbb{R}^{p}\) by

Given \(u \in \mathbb{R}^{p}\), \(\mathcal{J} (u)\) indicates all regions where \({\mathrm{D}_{i}}u = 0\). Such regions are called strongly homogeneous with respect to {D i }. (The adverb “strongly” is used to emphasize the difference with just “homogeneous regions” where \(\|{\mathrm{D}_{i}}u\|_{2} \approx 0\).) In particular, if {D i } correspond to first-order differences between neighboring samples of u or to discrete gradients, \(\mathcal{J} (u)\) indicates all constant regions in u.

5.1 Main Theoretical Result

The results presented below are extracted from [80].

Theorem 10.

Given \(v \in \mathbb{R}^{q}\), assume that \(\mathcal{F}(\cdot,v)\) in (21)–(22) is such that \(\Psi \) is \(\mathcal{C}^{m}\), \(m\geqslant 2\) on \(\mathbb{R}^{p} \times \mathbb{R}^{q}\), and that ϕ satisfies H1 and H 11. Let \(\hat{u} \in \mathbb{R}^{p}\) be a (local) minimizer of \(\mathcal{F}(\cdot,v)\). For \(\hat{J}\stackrel{\mathrm{def}}{=}\mathcal{J} (\hat{u})\), let \(K_{\hat{J}}\) be the vector subspace

Suppose also that

-

(a)

\(\delta _{1}\mathcal{F}(\hat{u},v)(w) > 0\), for every \(w \in K_{\hat{J}}^{\perp }\setminus \{0\}\).

-

(b)

There is an open subset \(O_{\hat{J}}' \subset \mathbb{R}^{q}\) such that \(\mathcal{F}\vert _{K_{\hat{J}}}\left (.,O_{\hat{J}}'\right )\) has a local minimizer function \(\mathcal{U}_{\hat{J}}: O_{\hat{J}}' \rightarrow K_{\hat{J}}\) which is \(\mathcal{C}^{m-1}\) continuous and \(\hat{u} = \mathcal{U}_{\hat{J}}(v)\).

Then there is an open neighborhood \(O_{\hat{J}} \subset O_{\hat{J}}'\) of v such that \(\mathcal{F}(\cdot,O_{\hat{J}})\) admits a \(\mathcal{C}^{m-1}\) local minimizer function \(\mathcal{U}: O_{\hat{J}} \rightarrow \mathbb{R}^{p}\) which satisfies \(\mathcal{U}(v) =\hat{ u}\), \(\mathcal{U}\vert _{K_{\hat{J}}} = \mathcal{U}_{\hat{J}}\) and

Note that \(\hat{J}\) and \(K_{\hat{J}}\) are the same as those introduced in (12) section “Local Minimizers.”

Commentary on the assumptions.

\(\mathcal{F}(\cdot,v)\) has a local minimum at \(\hat{u}\), by Theorem 1 one has \(\delta _{1}\mathcal{F}(\hat{u},v)(w)\geqslant 0\), for all \(w \in K_{\hat{J}}^{\perp }\setminus \{0\}\), and if for some w the inequality becomes inequality, then the inequality is strict for − w. So (a) is not a strong requirement. Condition (b) amounts to Lemma 1 (section “Reminders and Definitions”) applied to \(\mathcal{F}\vert _{K_{\hat{J}}}\) which is \(\mathcal{C}^{m}\) on a neighborhood of \((\hat{u},v)\) belonging to \(K_{\hat{J}} \times \mathbb{R}^{q}\).

If \(\mathcal{F}(\cdot,v)\) (possibly nonconvex) is of the form (11) and assumption H4 (section “Stability of the Minimizers of Energies with Possibly Nonconvex Priors”) holds, Theorem 4 and the other results given next show that (a) and (b) are satisfied for any \(v \in \mathbb{R}^{q}\setminus \Theta \) where \(\Theta \) is closed and \(\mathbb{L}^{q}(\Theta ) = 0\).

Significance of the results.

Using the definition of \(\mathcal{J}\) in (23), the conclusion of the theorem can be reformulated as

Minimizers involving large subsets \(\hat{J}\) are observed in Figs. 2b, c, 7b, 8b, d, 10a–c, and 11d. It was seen in Examples 1 and 4, as well as in section “How It Works on \({\boldsymbol{\mathbb{R}}}\)” (case ϕ′(0+) > 0), that \(\hat{J}\) is nonempty for data v living in an open \(O_{\hat{J}}\). Note also that there is an open subset \(\tilde{O}_{\hat{J}} \subset O_{\hat{J}}\) such that \(\mathcal{J}\left (\mathcal{U}(v)\right ) = \hat{J}\) for all \(v \in \tilde{ O}_{\hat{J}}\). These sets \(\tilde{O}_{\hat{J}}\) are described in Example 5.

Observe that (26) is a severe restriction since \(K_{\hat{J}}\) is a closed and negligible subset of \(\mathbb{R}^{p}\), whereas data v vary on open subsets \(O_{\hat{J}}\) of \(\mathbb{R}^{q}\).

Focus on a (local) minimizer function \(\mathcal{U}: O \rightarrow \mathbb{R}^{p}\) for \(\mathcal{F}(\cdot,\,O)\) and put \(\hat{J} = \mathcal{J} (\mathcal{U}(v))\) for some v ∈ O. By Theorem 10, the sets \(O_{\hat{J}}\) and \(\tilde{O}_{\hat{J}}\) are of positive measure in \(\mathbb{R}^{q}\). When data ν range over O, the set-valued function \((\mathcal{J} \circ \mathcal{U})\) generally takes several distinct values, say {J j }. Thus, with a (local) minimizer function \(\mathcal{U}\), defined on an open set O, there is associated a family of subsets \(\{\tilde{O}_{J_{j}}\}\) which form a covering of O. When \(\nu \in \tilde{ O}_{J_{j}}\), we find a minimizer \(\hat{u} = \mathcal{U}(\nu )\) satisfying \(\mathcal{J} (\hat{u}) = J_{j}\).

-

Energies with nonsmooth regularization terms, as those considered here, exhibit local minimizers which generically satisfy constraints of the form \(\mathcal{J} (\hat{u})\neq \varnothing \).

In particular, if \(\{{\mathrm{D}_{i}}\}\) are discrete gradients or first-order difference operators, minimizers \(\hat{u}\) are typically constant on many regions. For example, if ϕ(t) = t , we have \(\Phi (u) =\mathrm{ TV}(u)\) , and this explains the stair-casing effect observed in TV methods on discrete images and signals [30, 39].

5.2 Examples and Discussion

The subsection begins with an illustration of Theorem 10 and its meaning.

Restoration of a noisy signal.

Figure 9 shows a piecewise constant signal u o corrupted with two different noises.

Figure 10 depicts the restoration from these two noisy data samples by minimizing an energy of the form \(\mathcal{F}(u,v) =\| u - v\|^{2} +\beta \sum _{ i=1}^{p-1}\phi (\vert u[i] - u[i + 1]\vert )\). The minimizers shown in Fig. 10a–c correspond to functions ϕ such that ϕ′(0+) > 0 and they are constant on large segments. The reader is invited to compare the subsets where these minimizers are constant. The function ϕ in Fig. 10d satisfies \(\phi '(0^{+}) = 0\) and the resultant minimizers are nowhere constant.

Restoration using different functions ϕ. Original u o (-.-.), minimizer \(\hat{u}\) (—). Each figure from (a) to (d) shows the two minimizers \(\hat{u}\) corresponding to the two data sets in Fig. 9 (left and right), while the shape of ϕ is plotted in the middle

Example 5 below gives a rich geometric interpretation of Theorem 25.

Example 5 (1D TV Regularization).

Let \(\mathcal{F}: \mathbb{R}^{p} \times \mathbb{R}^{p} \rightarrow \mathbb{R}\) be given by

where \(A \in \mathbb{R}^{p\times p}\) is invertible. Clearly, there is a unique minimizer function \(\mathcal{U}\) for \(\mathcal{F}(\cdot, \mathbb{R}^{p})\). Two striking phenomena concerning the sets \(\tilde{O}_{J}\) are described next:

-

1.

For every point \(\hat{u} \in \mathbb{R}^{p}\), there is a polyhedron \(Q_{\hat{u}} \subset \mathbb{R}^{p}\) of dimension \(\#\mathcal{J} (\hat{u})\), such that for every \(v \in Q_{\hat{u}}\), the same point \(\mathcal{U}(v) =\hat{ u}\) is the unique minimizer of \(\mathcal{F}(\cdot,v)\).

-

2.

For every \(J \subset \{ 1,\ldots,p - 1\}\), there is a subset \(\tilde{O}_{J} \subset \mathbb{R}^{p}\), composed of \(2^{p-\#J-1}\) unbounded polyhedra (of dimension p) of \(\mathbb{R}^{p}\) such that for every \(v \in \tilde{ O}_{J}\), the minimizer \(\hat{u}\) of \(\mathcal{F}(\cdot,v)\) satisfies \({\hat{u}_{i}} =\hat{ u}_{i+1}\) for all i ∈ J and \({\hat{u}_{i}}\neq {\hat{u}_{i+1}}\) for all i ∈ J c. Their closure forms a covering of \(\mathbb{R}^{p}\).

The next Remark 2 deserves to be combined with the conclusions of section “Nonasymptotic Bounds on Minimizers.”

Remark 2.

The energy in (27) has a straightforward Bayesian interpretation in terms of maximum a posteriori (MAP) estimation (see section “Background,” first item). The quadratic data-fidelity term corresponds to an observation model of the form \(v = Au_{o} + n\) where n is independent identically distributed (i.i.d.) Gaussian noise with mean zero and variance denoted by σ 2. The likelihood reads \(\pi (v\vert u) =\exp \left (-\frac{1} {2\sigma ^{2}}\|Au - v\|_{2}^{2}\right )\). The regularization term corresponds to an i.i.d. Laplacian prior on each difference \(u[i] - u[i + 1]\), \(1\leqslant i\leqslant p - 1\), that is, exp(−λ | t for \(\lambda = \frac{\beta } {2\sigma ^{2}}\). Since this density is continuous on \(\mathbb{R}\), the probability to get a null sample, \(t = u[i] - u[i + 1] = 0\), is equal to zero. However, the results presented above show that for the minimizer \(\hat{u}\) of \(\mathcal{F}(\cdot,v)\), the probability to have \(\hat{u}[i] -\hat{ u}[i + 1] = 0\) for a certain amount of indexes i is strictly positive. This means that the Laplacian prior on the differences \(u[i] - u[i + 1]\) is far from being incorporated in the MAP solution \(\hat{u}\).

5.3 Applications

The use of nondifferentiable (and also nonconvex) regularization in compressive sensing is actually extremely abundant; readers can check, e.g., the textbook [50].

Image reconstruction is computed tomography.

The concentration of an isotope in a part of the body provides an image characterizing metabolic functions and local blood flow [21, 62]. In emission computed tomography (ECT), a radioactive drug is introduced in a region of the body and the emitted photons are recorded around it. Data are formed by the number of photons \(v[i]\geqslant 0\) reaching each detector, i = 1, …, q. The observed photon counts v have a Poissonian distribution [21, 90]. Their mean is determined using projection operators {a i , i = 1, 2, …, q} and a constant ρ > 0. The data-fidelity \(\Psi \) derived from the log-likelihood function is nonstrictly convex and reads:

Figure 11 presents image reconstruction from simulated ECT data by minimizing and energy of the forms (21) and (22) where \(\Psi \) is given by (28) and {D i } yield the first-order differences between each pixel and its eight nearest neighbors. One observes, yet again, that a PF ϕ which is nonconvex with ϕ′(0+) > 0 leads to a nicely segmented piecewise constant reconstruction.

6 Nonsmooth Data Fidelity

-

Figure 12 shows that there is a striking distinction in the behavior of the minimizers relevant to nonsmooth data-fidelity terms (b) with respect to nonsmooth regularization (a). More precisely, many data samples are fitted exactly when the data-fidelity term is nonsmooth. This particular behavior is explained and generalized in the present section.

D is a first-order difference operator, i.e., \({\mathrm{D}_{i}}u = u[i] - u[i + 1]\), \(1\leqslant i\leqslant p - 1\). Data (- - -), restored signal (—). Constant pieces in (a) are emphasized using “∗,” while data samples that are equal to the relevant samples of the minimizer in (b) are emphasized using “∘”

Consider

where \(a_{i} \in \mathbb{R}^{p}\) for all i ∈ { 1, …, q} and \(\psi: \mathbb{R}_{+} \rightarrow \mathbb{R}_{+}\) is a function satisfying

H12

ψ is \(\mathcal{C}^{m}\), \(m\geqslant 2\) on \(\mathbb{R}_{+}^{{\ast}}\) and ψ′(0 + ) > 0 is finite.

By this condition, t ↦ ψ( | t | ) is continuous on \(\mathbb{R}\). Let \(A \in \mathbb{R}^{q\times p}\) denote the matrix such that for any i = 1, …, q, its ith row reads a i ∗.

Nonsmooth data-fidelity terms \(\Psi \) in energies of the form (29) and (30) were introduced in image processing in 2001 [77].

6.1 General Results

Here we present some results on the minimizers \(\hat{u}\) of \(\mathcal{F}\) as given in (29) and (30), where \(\Psi \) is nondifferentiable, obtained in [78, 79]. An additional assumption is that

H13

The regularization term \(\Phi: \mathbb{R}^{p} \rightarrow \mathbb{R}\) in ( 29 ) is \(\mathcal{C}^{m}\), \(m\geqslant 2\).

Note that \(\Phi \) in (29) can be convex or nonconvex. To analyze the observation in Fig. 12b, the following set-valued function \(\mathcal{J}\) will be useful:

Given v and a (local) minimizer \(\hat{u}\) of \(\mathcal{F}(\cdot,v)\), the set of all data entries v[i] that are fitted exactly by \((A\hat{u})[i]\) reads \(\hat{J} = \mathcal{J} (\hat{u},v)\). Its complement is \(\hat{J}^{c} =\{ 1,\ldots,q\}\setminus \hat{J}\).

Theorem 11.

Let \(\mathcal{F}\) be of the form (29)–(30) where assumptions H 12 and H 13 hold. Given \(v \in \mathbb{R}^{q}\), let \(\hat{u} \in \mathbb{R}^{p}\) be a (local) minimizer of \(\mathcal{F}(\cdot,v)\). For \(\hat{J} = \mathcal{J} (\hat{u},y)\), where \(\mathcal{J}\) is defined according to (31), let

and let \(K_{\hat{J}}\) be its tangent. Suppose the following:

-

1.

The set \(\left \{a_{i}: i \in \hat{ J}\right \}\) is linearly independent.

-

2.

\(\forall w \in K_{\hat{J}}\setminus \{0\}\) we have \(D_{1}(\mathcal{F}\vert _{\overline{\mathcal{K}_{\hat{ J}}(v)}})(\hat{u},v)w = 0\) and \(D_{1}^{2}(\mathcal{F}\vert _{ \overline{\mathcal{K}_{\hat{J}}(v)}})(\hat{u},v)(w,w) > 0\).

-

3.

\(\forall w \in K_{\hat{J}}^{\perp }\setminus \{0\}\) we have \(\delta _{1}\mathcal{F}(\hat{u},v)(w) > 0\).

Then there are a neighborhood \(O_{\hat{J}} \subset \mathbb{R}^{q}\) containing v and a \(\mathcal{C}^{m-1}\) local minimizer function \(\mathcal{U}: O_{\hat{J}} \rightarrow \mathbb{R}^{p}\) relevant to \(\mathcal{F}(\cdot,O_{\hat{J}})\), yielding in particular \(\hat{u} = \mathcal{U}(v)\), and

The result in (32) means that \(\mathcal{J} (\mathcal{U}(\nu ),\nu ) =\hat{ J}\) is constant on \(O_{\hat{J}}\).

Note that for every v and \(J\neq \varnothing \), the set \(\mathcal{K}_{J}(v)\) is a finite union of connected components, whereas its closure \(\overline{\mathcal{K}_{J}(v)}\) is an affine subspace. Its tangent \(K_{\hat{J}}\) reads

A comparison with \(K_{\hat{J}}\) in (24) may be instructive. Compare also (b) and (c) in Theorem 11 with (a) and (b) in Theorem 10. By the way, conditions (b) and (c) in Theorem 11 ensure that \(\mathcal{F}(\cdot,v)\) reaches a strict minimum at \(\hat{u}\) [78, Proposition 1]. Observe that this sufficient condition for strict minimum involves the behavior of \(\mathcal{F}(\cdot,v)\) on two orthogonal subspaces separately. This occurs because of the nonsmoothness of t ↦ ψ( | t | ) at zero. It can be useful to note that at a minimizer \(\hat{u}\),

Commentary on the assumptions.

Assumption (a) does not require the independence of the whole set {a i : i ∈ { 1, …, q}}. It is easy to check that this assumption fails to hold only for some v is included in a subspace of dimension strictly smaller than q. Hence, assumption (a) is satisfied for almost all \(v \in \mathbb{R}^{q}\) and the theorem addresses any matrix A, whether it be singular or invertible.

Assumption (b) is the classical sufficient condition for a strict local minimum of a smooth function over an affine subspace; see Lemma 1 (section “Reminders and Definitions”). If an arbitrary function \(\mathcal{F}(\cdot,v): \mathbb{R}^{p} \rightarrow \mathbb{R}\) has a minimum at \(\hat{u}\), then necessarily \(\delta _{1}\mathcal{F}(\hat{u},v)(w)\geqslant 0\) for all \(w \in K_{\hat{J}}^{\perp }\); see Theorem 1. In comparison, (c) requires only that the latter inequality be strict.

It will be interesting to characterize the sets of data v for which (b) and (c) may fail at some (local) minimizers. Some ideas from section “Local Minimizers” can provide a starting point.

Corollary 2.

Let \(\mathcal{F}\) be of the form (29)–(30) where p = q, and H 12 and H 13 hold true. Given \(v \in \mathbb{R}^{q}\), let \(\hat{u} \in \mathbb{R}^{p}\) be a (local) minimizer of \(\mathcal{F}(\cdot,v)\). Suppose the following:

-

(a)

The set \(\{a_{i}: 1\leqslant i\leqslant q\}\) is linearly independent.

-

(b)

\(\forall w \in \mathbb{R}^{q}\) satisfying \(\|w\|_{2} = 1\) we have \(\beta \left \vert D\Phi (\hat{u})w\right \vert <\psi '(0^{+})\sum _{ i=1}^{q}\vert \langle a_{ i},w\rangle \vert \).

Then

and there are a neighborhood \(O_{\hat{J}} \subset \mathbb{R}^{q}\) containing v and a \(\mathcal{C}^{m-1}\) local minimizer function \(\mathcal{U}: O_{\hat{J}} \rightarrow \mathbb{R}^{p}\) relevant to \(\mathcal{F}(\cdot,O_{\hat{J}})\), yielding in particular \(\hat{u} = \mathcal{U}(v)\), and

More precisely, \(\mathcal{U}(\nu ) = A^{-1}\nu\) for any \(\nu \in O_{\hat{J}}\).

In the context of Corollary 2, A is invertible. Combining this with (33) and (b) shows that

Then

The subset on the left contains an open subset of \(\mathbb{R}^{q}\) by the continuity of \(v\mapsto D\Phi (A^{-1}v)\) combined with (b).

Significance of the results.

Consider that \(\#J\geqslant 1\). The result in (32) means that the set-valued function \(v \rightarrow \mathcal{J} (\mathcal{U}(v),v)\) is constant on \(O_{\hat{J}}\), i.e., that \(\mathcal{J}\) is constant under small perturbations of v. Equivalently, all residuals \(\langle a_{i},\mathcal{U}(v)\rangle - v[i]\) for \(i \in \hat{ J}\) are null on \(O_{\hat{J}}\).

Theorem 11 shows that \(\mathbb{R}^{q}\) contains volumes of positive measure composed of data that lead to local minimizers which fit exactly the data entries belonging to the same set. In general, there are volumes corresponding to various \(\hat{J}\) so that noisy data come across them. That is why nonsmooth data-fidelity terms generically yield minimizers fitting exactly a certain number of the data entries. The resultant numerical effect is observed in Fig. 12b as well as in Figs. 14 and 15.

Remark 3 (Stability of Minimizers).

The fact that there is a \(\mathcal{C}^{m-1}\) local minimizer function shows that, in spite of the nonsmoothness of \(\mathcal{F}\), for any v, all local minimizers of \(\mathcal{F}(\cdot,v)\) which satisfy the conditions of the theorem are stable under weak perturbations of data v. This result extends Lemma 1.

Example 6.

Let \(\mathcal{F}\) read

It is easy to see that there is a unique local minimizer function \(\mathcal{U}\) which is given by

Condition (c) in Theorem 11 fails to hold only for \(\left \{v \in \mathbb{R}^{q}: \left \vert v[i]\right \vert = \frac{1} {\beta },\ \forall i \in \hat{ J}\right \}\). This set is of Lebesgue measure zero in \(\mathbb{R}^{q}\). For any J ∈ { 1, …, q} put

Obviously, every v ∈ O J gives rise to a minimizer \(\hat{u}\) satisfying

Each set O J has a positive Lebesgue measure in \(\mathbb{R}^{q}\). Moreover, the union of all O J when J ranges on all subsets \(J \subset \{ 1,\ldots,q\}\) (including the empty set) forms a partition of \(\mathbb{R}^{q}\).

Numerical experiment.

The original image u o is shown in Fig. 13a. Data v in Fig. 13b are obtained by replacing some pixels of u o by aberrant impulsions, called outliers.

In all Figs. 14–17, {D i } correspond to the first-order differences between each pixel and its four nearest neighbors. Figure 14a corresponds to an \(\ell_{1}\) data-fidelity term for β = 0. 14. The outliers are well visible although their amplitudes are clearly reduced. The image of the residuals \(v -\hat{ u}\), shown in Fig. 14b, is null everywhere except at the positions of the outliers in v. The pixels corresponding to nonzero residuals (i.e., the elements of \(\hat{J}^{c}\)) provide a good estimate of the locations of the outliers in v. Figure 15a shows a minimizer \(\hat{u}\) of the same \(\mathcal{F}(\cdot,v)\) obtained for β = 0. 25. This minimizer does not contain visible outliers and is very close to the original image u o . The image of the residuals \(v -\hat{ u}\) in Fig. 15b is null only on restricted areas but has a very small magnitude everywhere beyond the outliers.

The minimizers of two different cost-functions \(\mathcal{F}\) involving a smooth data-fidelity term \(\Psi \), shown in Figs. 16 and 17, do not fit any data entry. In particular, the restoration in Fig. 17 corresponds to a nonsmooth regularization and it is constant over large regions; this effect was explained in Sect. 5.

6.2 Applications

The possibility to keep some data samples unchanged by using nonsmooth data fidelity is a precious property in various application fields. Nonsmooth data fidelities are good to detect and smooth outliers. This property was exploited for deblurring under impulse noise contamination; see, e.g., [10–12].

Denoising of frame coefficients.

Consider the recovery of an original (unknown) \(u_{o} \in \mathbb{R}^{p}\) – a signal or an image containing smooth zones and edges – from noisy data

where n represents a perturbation. As discussed in Sect. 4, a systematic default of the images and signals restored using convex edge-preserving PFs ϕ is that the amplitude of edges is underestimated.

Shrinkage estimators operate on a decomposition of data v into a frame of ℓ 2, say {w i : i ∈ J} where J is a set of indexes. Let W be the corresponding frame operator, i.e., \((Wv)[i] =\langle v,w_{i}\rangle\), \(\forall i \in J\), and \(\tilde{W}\) be a left inverse of W, giving rise to the dual frame \(\{\tilde{w}_{i}: i \in J\}\). The frame coefficients of v read \(y = Wv\) and are contaminated with noise Wn. The inaugural work of Donoho and Johnstone [40] considers two different shrinkage estimators: given T > 0, hard thresholding corresponds to

while in soft thresholding one takes \(y_{T}[i] = y[i] - T\mathrm{sign}(y[i])\) if i ∈ J 1 and y T [i] = 0 if i ∈ J 0. Both soft and hard thresholding are asymptotically optimal in the minimax sense if n is white Gaussian noise of standard deviation σ and

This threshold is difficult to use in practice because it increases with the size of u. Numerous improvements were realized; see, e.g., [4, 13, 24, 34, 38, 66, 70]. In all cases, the main problem is that smoothing large coefficients oversmooths edges, while thresholding small coefficients can generate Gibbs-like oscillations near edges; see Fig. 18c, d. If shrinkage is weak, noisy coefficients (outliers) remain almost unchanged and produce artifacts having the shape of \(\{\tilde{w}_{i}\}\); see Fig. 18c–e.

Methods to restore the noisy signal in (a). Restored signal (—), original signal (- -). (a) Original and data corrupted with white Gaussian noise. (b) TV regularization. (c) Sure-shrink. (d) T = 35 optimal, \({\hat{u}_{T}} =\sum _{i}y_{T}[i]\tilde{w}_{i}\). (e) y T , T = 23, \({\hat{u}_{T}} =\sum _{i}y_{T}[i]\tilde{w}_{i}\). (f) The proposed method

In order to alleviate these difficulties, several authors proposed hybrid methods where the information contained in important coefficients y[i] is combined with priors in the domain of the sought-after signal or image [18, 25, 36, 43, 67]. A critical analysis was presented in [46].

A specialized hybrid method involving ℓ 1 data fidelity on frame coefficients is proposed in [46]. Data are initially hard thresholded – see (35) – using a suboptimal threshold T in order to keep as much as possible information. (The use of another shrinkage estimator would alter all coefficients, which is not desired.) Then

-

1.

J 1 is composed of:

-

Large coefficients bearing the main features of u o that one wishes to preserve intact

-

Aberrant coefficients (outliers) that must be restored using the regularization term

-

-

2.

J 0 is composed of:

-

Noise coefficients that must be kept null.

-

Coefficients y[i] corresponding to edges and other details in u o – these need to be restored in accordance with the prior incorporated in the regularization term.

-

In order to reach the goals formulated in 1 and 2 above, denoised coefficients \(\hat{x}\) are defined as a minimizer of the hybrid energy F(., y) given below:

where ϕ is convex and edge preserving. Then the sought-after denoised image or signal is

Several properties relevant to the minimizers of F in (37), the parameters λ i , i ∈ { 0, 1}, and the solution \(\hat{u}\) are outlined in [46].

Noisy data v are shown along with the original u o in Fig. 18a. The restoration in Fig. 18b minimizes \(\mathcal{F}(u) =\| Au - v\|_{2}^{2} +\beta \mathrm{ TV}\) – homogeneous regions remain noisy, edges are smoothed, and spikes are eroded. Figure 18c is obtained using the sure-shrink method [41] from the toolbox WaveLab. The other restorations use thresholded Daubechies wavelet coefficients with eight vanishing moments. The optimal value for the hard thresholding obtained using (36) is T = 35. The relevant restoration – Fig. 18d – exhibits important Gibbs-like oscillations as well as wavelet-shaped artifacts. For T = 23 the coefficients have a richer information content, but \(\tilde{W}y_{T}\), shown in Fig. 18e, manifests Gibbs artifacts and many wavelet-shaped artifacts. Introducing the thresholded coefficients of Fig. 18e in the specialized energy F in (37) leads to Fig. 18f: edges are clean and piecewise polynomial parts are well recovered.

7 Nonsmooth Data Fidelity and Regularization

7.1 The \({\mathit{L}_{1}}\)-TV Case

For discrete signals of finite length, energies of the form \(\mathcal{F}(u,v) =\| u - v\|_{1} +\beta \sum _{ i=1}^{p-1}\vert u[i + 1] - u[i]\vert \) were considered by Alliney in 1992 [1].

Following [1, 78, 79], S. Esedoglu and T. Chan explored in [28] the minimizers of the L 1-TV functional given below

where the sought-after minimizer \(\hat{u}\) belongs to the space of bounded variation functions on \(\mathbb{R}^{d}\). The main focus is on images, i.e., d = 2. The analysis in [28] is based on a representation of \(\mathcal{F}\) in (38) in terms of the level sets of u and v. Most of the results are established for data v given by the characteristic function \(\chi _{\Sigma }\) of a bounded domain \(\Sigma \subset \mathbb{R}^{d}\). Theorem 5.2 in [28] says that if \(v =\chi _{\Sigma }\), where \(\Sigma \subset \mathbb{R}^{d}\) is bounded, then \(\mathcal{F}(\cdot,v)\) admits a minimizer of the form \(\hat{u} =\chi _{\hat{\Sigma }}\) (with possibly \(\hat{\Sigma }\neq \Sigma \)). Furthermore, Corollary 5.3. in [28] states that if in addition \(\Sigma \) is convex, then for almost every \(\beta \geqslant 0\), \(\mathcal{F}(\cdot,v)\) admits a unique minimizer and \(\hat{u} =\chi _{\hat{\Sigma }}\) with \(\hat{\Sigma } \subseteq \Sigma \). Moreover, it is shown that small features in the image maintain their contrast intact up to some value of β, while for a larger β they suddenly disappear.

7.1.1 Denoising of Binary Images and Convex Relaxation

Many problems such as text denoising and document processing, two-phase image segmentation, shape restoration, and fairing of surfaces in computer graphics are naturally stated as the minimization of an energy over the set of the binary images. These energies are obviously nonconvex since the constraint set is finite. Their global minimizer was shown in [29] to be also the minimizer of the convex L 1-TV functional which is convex. This result yielded much simpler algorithms for binary image restoration. An illustration is given on Fig. 19.

Since then, L 1-TV relaxations have became a common tool for convex relaxations; see, e.g., among many others [84] and the references therein.

Also, L 1-TV energies were revealed very successful in image decomposition; see, e.g., [8, 48].

7.1.2 Multiplicative Noise Removal

In various active imaging systems, such as synthetic aperture radar (SAR), laser, or ultrasound imaging, the data representing the underlying (unknown image) S 0 are corrupted with multiplicative noise. Such a noise is a severe degradation; see Fig. 20. When possible, a few independent measurements for the same scene are realized, \(\Sigma _{k} = S_{0}\eta _{k}\) for k ∈ { 1, …, K}, where the noise η k is typically modeled by the one-sided exponential distribution. The data \(\Sigma \) used for denoising is the average of the set of all K measurements:

The combined multiplicative noise follows a Gamma distribution. Adaptive filtering works only if the noise is weak. For strong noise, variational methods often use TV regularization. In [6] the log-likelihood of the raw data (39) is regularized using TV. Instead, the properties of L 1-TV are used to design an energy in [47]. First, the log-data \(v =\log \Sigma \) is decomposed into a curvelet transform yielding noisy coefficients y = Wv. A suboptimal hard thresholding is applied for T adapted to the expectation of the log noise. Let \(I_{0} =\{ i\:\ \vert y[i]\leqslant T\}\) and I 1 = { i : | y[i] > T}. Since the threshold is low, I 1 contains outliers. Coefficients \(\hat{x}\) are restored by minimizing

The restored image \(\hat{S}\), shown in Fig. 20, is obtained as \(\hat{S} =\exp (\tilde{W}(\hat{x}))\,B\) where \(\tilde{W}\) is a left inverse of W and B is a bias correction.

7.2 \({\boldsymbol{\ell_{1}}}\) Data Fidelity with Regularization Concave on \(\mathbb{R}_{+}\)

One could expect that ℓ 1 data fidelity regularized with a PF concave on \(\mathbb{R}_{+}\) should somehow reinforce the properties of ℓ 1 − TV. The question was recently examined in [83]. Consider the energy

where \(\phi: \mathbb{R}_{+} \rightarrow \mathbb{R}_{+}\) is continuous and concave on \(\mathbb{R}_{+}\) (e.g., (f10), (f11), and (f12) in Table 1).

7.2.1 Motivation

Figures 21 and 22 depict (the global) minimizers of \(\mathcal{F}(u,v)\) in (40) for a one-dimensional signal where A = Id, {D i } are first-order differences, and ϕ is smooth and concave on \(\mathbb{R}_{+}\).

Minimizers of \(\mathcal{F}(\cdot,v)\) as given in (40) for \(\phi (t) =\ln (\alpha t + 1)\), α = 2 and different values of β. Data samples ( ), minimizer samples \(\hat{u}[i]\) (\({\boldsymbol{+ + +}}\))

), minimizer samples \(\hat{u}[i]\) (\({\boldsymbol{+ + +}}\))

Minimizers of \(\mathcal{F}(\cdot,v)\) as given in (40) for different PFs ϕ. Data are corrupted with Gaussian noise. Data samples v[i] are marked with ( ), samples \(\hat{u}[i]\) of the minimizer – with (\({\boldsymbol{+ + +}}\)). The original signal is reminded in (

), samples \(\hat{u}[i]\) of the minimizer – with (\({\boldsymbol{+ + +}}\)). The original signal is reminded in ( ). (a) \(\phi (t) = \frac{\alpha \;t} {\alpha \;t+1}\), α = 4, β = 3. (b) ϕ(t) = t, β = 0. 8

). (a) \(\phi (t) = \frac{\alpha \;t} {\alpha \;t+1}\), α = 4, β = 3. (b) ϕ(t) = t, β = 0. 8

The tests in Figs. 21 and 22 show that a PF concave on \(\mathbb{R}_{+}\) considerably reinforces the properties of \(\ell_{1} -\mathrm{ TV}\). One observes that the minimizer satisfies exactly part of the data term and part of the prior term (corresponding to constant pieces). In Fig. 22b, the previous ℓ 1 − TV model is considered. Figure 21 shows also that the minimizer remains unchanged for some range of values of β and that after a threshold value, it is simplified.

Example 7 below furnishes a first intuition on the reasons underlying the phenomena observed in Figs. 21 and 22.

Example 7.

Given \(v \in \mathbb{R}\), consider the function \(\mathcal{F}(\cdot,v): \mathbb{R} \rightarrow \mathbb{R}\) given below

The necessary conditions for \(\mathcal{F}(\cdot,v)\) to have a (local) minimum at \(\hat{u}\neq 0\) and \(\hat{u}\neq v\) – that its first differential meets \(D_{1}\mathcal{F}(\hat{u},v) = 0\) and that its second differential obeys \(D_{1}^{2}\mathcal{F}(\hat{u},v)\geqslant 0\) – do not hold:

where the last inequality comes from the strict concavity of ϕ on \(\mathbb{R}_{+}^{{\ast}}\). Hence, \(\mathcal{F}(\cdot,v)\) cannot have a minimizer such that \(\hat{u}\neq 0\) and \(\hat{u}\neq v\), for any \(v \in \mathbb{R}\). Being coercive, \(\mathcal{F}(\cdot,v)\) does have minimizers. Consequently, any (local) minimizer of \(\mathcal{F}\) in (41) satisfies

7.2.2 Main Theoretical Results

The PFs considered here are concave on \(\mathbb{R}_{+}\) and smooth on \(\mathbb{R}_{+}^{{\ast}}\). More precisely, they satisfy H1 (Sect. 1), H8, and H10 (section “Assumptions on Potential Functions \(\phi\)”). One can see Fig. 3, right, for an illustration of the assumptions.

Proposition 3.