Abstract

For most individuals, the field of applied behavioral analysis (ABA) evokes thoughts related to the use of assessment procedures to evaluate the causes of aberrant behavior, the use of reinforcement to produce modifications in social behavior, and/or teaching of basic skills to individuals with disabilities. The principles of ABA, however, also apply to assessing the academic needs of students, selecting appropriate academic interventions, and evaluating individual students’ response to intervention (RTI). In this chapter, the authors describe ABA as a foundation of RTI models. The chapter begins with a description of differences between traditional assessment and behavioral assessment and how those differences necessitate a change in the manner by which schools determine why a student is struggling behaviorally and/or academically. The authors then discuss the fundamental principles of ABA and how those principles can guide schools in better selecting both behavioral and academic targets for change, selecting and developing appropriate interventions, and finally measuring intervention effectiveness.

Keywords

- Problem Behavior

- Applied Behavioral Analysis

- Teacher Attention

- Measure Intervention Effect

- Single Subject Design

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

Implementation of a response to intervention (RTI) model requires schools to make both conceptual and procedural modifications that are essential to its success and will lead to improvements in the education and behavior of all students within a building. Schools must modify their identification process from one which identifies children based upon discrepancies in the constructs of intelligence and achievement to one that identifies students who are at risk of later disability identification. RTI is a prevention-based model that requires students to receive early intervention based upon their instructional and behavioral needs. Waiting for students to fail before beginning to provide them with intervention results in too many students being identified as needing intervention when the possibility of remediation is slim (President’s Commission on Excellence in Special Education 2002). A second change required by schools in order to ensure the success of RTI is a change in assessment procedures. Schools must not rely so heavily on evaluating behaviors believed to represent internal states/constructs and instead directly evaluate the behaviors and skills students must possess to be socially and academically successful. Measuring behaviors that students need to succeed, as opposed to measuring constructs, will provide schools with data that can directly inform instruction. Finally, implementation of an RTI model requires that schools do not blame children for their behavior or poor academic performance, and instead determine what aspects of the instructional environment must be manipulated to maximize student achievement. In essence, the implementation of RTI within schools requires a shift from providing services to students based upon a nomothetic traditional assessment framework to assessing student needs based upon an idiographic applied behavioral analysis (ABA) framework.

Traditional Assessment

The goal of traditional assessment is to determine the precise problem and measure inferred states from indirect observations (Galassi and Perot 1992; Hayes et al. 1986; Tkachuk et al. 2003). These inferred states are assumed to be stable, intraorganismic variables (Hayes et al. 1986; Nelson and Hayes 1979a, 1979b). That is, the variables do not change based on the situation; rather, they are a reflection of the person’s cognitive constructs, mental disorders, and/or personality, with an emphasis on personology constructs (Hayes et al. 1986; Nelson and Hayes 1979a; Tkachuk et al. 2003). Hence, behavior is seen to be a sign or sample of these constructs (Hayes et al. 1986; Nelson and Hayes 1979b). Behavior is not viewed as having controlling variables outside of the individual; therefore, these variables are largely ignored (Hayes et al. 1986). Since traditional assessment is based on stable constructs, the focus of assessment is to classify and diagnose individuals, which leads to an emphasis on group experimental designs (Tkachuk et al. 2003) and the comparison of individual performance to normative data (Hayes et al. 1986).

The IQ-achievement discrepancy model, a traditional assessment approach, has served as the approach for determining students’ special education eligibility since the 1970s (Fletcher et al. 2004). Under the discrepancy model, students are identified as needing special education if a discrepancy exists between the constructs of intelligence (believed to represent learning potential) and academic achievement (Sparks and Lovett 2009). Other constructs (e.g., inattention, depression, anxiety) are also measured to evaluate whether the discrepancy between a student’s intelligence and achievement is due to a learning disability or the manifestation of other internal states. To measure these constructs, caregivers (e.g., teachers and parents), as well as the students themselves, might be asked to complete behavioral rating scales on the frequency with which a student engages in various behaviors (e.g., daydreaming, interrupting others, fidgeting, somatic complaints). Students with attention problems might have higher ratings on questions pertaining to concentration, perceptions of being off-task, and boredom in school, whereas students with anxiety would have higher ratings on questions about fearing people or situations, being self-conscious, and being nervous (Achenbach 1991; Reynolds and Kamphaus 2006).

Basing students’ educational needs primarily on their internal states, as determined by samples of their behavior believed to represent constructs, ignores the impact of the learning environment on behavior. Ignoring the impact of the environment results in students being blamed for their failure and, ultimately, labeling some students with a disability who were, in fact, disabled by their learning environment. Choosing to focus on problems within the child also limits the availability of information used for developing effective interventions (President’s Commission on Excellence in Special Education 2002).

Applied Behavior Analysis

The discipline of ABA provides a foundation from which the principles and procedures for RTI were developed. ABA is the science of solving socially important problems by evaluating how the environment impacts behavior (Gresham 2004). Emphases of ABA include (a) measuring individuals’ behavior as opposed to their mental states, (b) continuity between behaviors that are observable and events that are private to the individual, (c) predicting and controlling individuals’ behavior as opposed to the behavior or mental states of groups, and (d) an understanding of the environmental causes of behavior (Fisher et al. 2011). The primary focus of ABA is on observable behaviors (e.g., talking, academic engagement, biting, crying, fidgeting) and measuring the ways in which the environment (e.g., quality of instruction and teacher attention) influences the dimensions of those behaviors (Cooper et al. 2007). In ABA, the amount (frequency, intensity, or duration) a person engages in a target behavior is compared to his/her history of engaging in that behavior under the same or different environmental conditions. Environmental variables are measured and manipulated to evaluate how changes in the environment might alter the amount a person engages in the behavior.
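The within-individual comparison described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `rate_by_condition`, the condition labels, and the observation data are all hypothetical and do not come from the chapter.

```python
from collections import defaultdict


def rate_by_condition(sessions):
    """Return responses per minute for each environmental condition.

    `sessions` holds (condition, count, minutes) tuples for ONE individual,
    so the comparison is against that person's own history, not a norm group.
    """
    totals = defaultdict(lambda: [0, 0.0])  # condition -> [count, minutes]
    for condition, count, minutes in sessions:
        totals[condition][0] += count
        totals[condition][1] += minutes
    return {c: count / minutes for c, (count, minutes) in totals.items()}


# Hypothetical observations: call-outs during independent seatwork.
sessions = [
    ("low teacher attention", 12, 20),
    ("low teacher attention", 9, 15),
    ("high teacher attention", 3, 20),
    ("high teacher attention", 2, 20),
]
rates = rate_by_condition(sessions)
# rates["low teacher attention"] is 21 / 35 responses per minute;
# rates["high teacher attention"] is 5 / 40 responses per minute.
```

Pooling counts and minutes within a condition is one design choice among several; averaging per-session rates would weight short sessions more heavily.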

With ABA as a foundation, RTI models focus on whether the general education environment (tier 1) can be expected to produce adequate learning and on the environmental changes necessary for producing significant gains for the target student. Assessing the skills and behavior of all students within a school through multiple universal screenings across the academic year provides RTI teams with information regarding the general effectiveness of tier 1 instruction. It allows the academic performance and classroom behavior of individual students to be compared to other students receiving similar tier 1 instruction and behavior management. Manipulation of the existing environment through the implementation of increasingly intensive interventions during tier 2 instruction provides information to RTI teams regarding the level of modifications necessary to produce adequate learning and behavior. Students who require environmental manipulations that extend beyond that which a general education classroom can provide are in need of special education services (tier 3) (Fletcher et al. 2005; Marston 2005; Shinn 2007). Given ABA’s focus on behavior, the environment, and measuring the impact of changes to the environment on the behavior of individuals, it provides a framework that RTI teams can reference when (a) identifying target behaviors, (b) selecting and developing interventions, and (c) measuring intervention effects (Martens and Ardoin 2010) (see Table 1).

Selecting Target Behaviors

An essential feature of ABA as an applied science is the targeting of behaviors for change that are immediately important to the individual and society (Cooper 1982). At face value, selecting target behaviors for change within a school setting that are socially significant and will result in immediate changes in student behavior may sound simple. The task, however, requires knowledge of appropriate replacement behaviors, an understanding of the hierarchy of skills necessary to perform complex tasks (e.g., reading with comprehension), and knowing how to assess the behaviors targeted by intervention (Noell et al. 2011).

Classroom Behavior

When developing interventions for students who are engaging in unacceptable levels of inappropriate classroom behavior, research within ABA has demonstrated the importance of identifying appropriate alternatives that could potentially replace the inappropriate behavior (Carr and Durand 1985; Cooper et al. 2007). Although punishing inappropriate behavior will likely result in an immediate decrease in punished behaviors, failure to teach an appropriate replacement behavior may result in the student simply replacing the inappropriate behavior with an equally problematic alternative. For this reason, education professionals implementing an RTI framework must determine why the student is engaging in inappropriate behavior (i.e., what is reinforcing the inappropriate behavior), what appropriate behavior(s) might enable the student to access the same reinforcer(s), and what appropriate replacement behavior(s) the student is capable of engaging in. RTI teams should refer to the large base of ABA research using functional assessment procedures to identify the factors potentially reinforcing inappropriate behavior, and the literature on differential reinforcement for guidance on selecting and increasing appropriate alternatives (Geiger et al. 2010; LeGray et al. 2010; Miller and Lee 2013).

Academic Performance

Selecting a target behavior for students identified as needing tier 2 intervention due to poor academic performance might seem obvious, as the goal is to get the student to accurately perform the academic tasks related to his/her area of weakness. In fact, it may be tempting to simply target the behavior(s) measured through universal screening procedures which were used to identify the student as needing intervention. However, by definition the students identified through a universal screening as needing tier 2 intervention are not performing at the level of their peers, and are likely in need of remediation of prerequisite skills to adequately perform those behaviors at which their peers are succeeding. Thus, academic behaviors within the child’s grade-level curriculum, although tempting to select, are unlikely to be appropriate targets for intervention (Brown-Chidsey and Steege 2005; Daly et al. 2007). For instance, many schools employ curriculum-based measurement procedures in reading (CBM-R) to identify which of their students might need supplemental reading instruction. CBM-R measures students’ oral reading rate with accuracy (Ardoin et al. 2013; Mellard et al. 2009). Second grade is recognized as a grade in which fluency greatly increases across the academic year. It would, therefore, seem socially appropriate to provide second-grade students struggling in reading with an intervention addressing reading fluency. A lower-performing second-grade student may not, however, have the prerequisite skills (e.g., letter recognition, knowledge of letter sounds, phonological awareness) necessary to benefit from an oral reading fluency-based intervention. The intervention therefore would not likely result in the student making adequate progress, not because the student needs a more intensive intervention, but because the student needs an intervention targeting his/her skill needs. Thus, although oral reading fluency might seem to be the most socially significant behavior to target, such a decision would be unlikely to elevate the student’s skills to a level comparable to his/her peers.

Selecting the proper academic skills to target for intervention requires knowledge of the prerequisite skills necessary to perform the complex tasks that make up grade level curriculum goals. Research in ABA highlights the importance of creating task analyses for complex, multistep activities, and then determining which of the skills the student has and has not mastered (Noell et al. 2011). For instance, Parker and Kamps (2011) used task analyses, in combination with self-monitoring and social scripts, to teach functional life skills and increase verbal interactions with peers in two children with autism. Three activities were selected for the intervention (playing board games, cooking, and dining in a restaurant) and a task analysis was developed for each. The task analyses were written and displayed on a laminated sheet of paper, listing each step necessary for completing the activity in the order it needed to be completed. The number of steps for each activity ranged from 8 to 22, and, in addition to performing the more functional components of the task, the steps included prompts for initiating conversation with peers. Participants were taught how to use the task analysis, using a combination of verbal and physical prompts, in the settings in which the intervention sessions would take place. Data were collected on each student’s task completion, activity engagement, and peer directed verbalizations. Once students’ proficiency in task completion improved, prompts were faded in order to decrease reliance on the prompts and increase the probability of the student generalizing the behaviors to other settings. Similar procedures can be employed for teaching students how to complete complex math problems or write well-constructed narratives (Alter et al. 2011; Hudson et al. 2013; Noell et al. 2011).
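A task analysis of the kind described above can be represented as an ordered list of steps, each marked as mastered or not. The sketch below is a minimal illustration in Python; the `Step` class, the `first_unmastered` helper, and the two-digit addition steps are hypothetical and are not taken from any of the cited studies.

```python
from dataclasses import dataclass


@dataclass
class Step:
    description: str
    mastered: bool


def first_unmastered(steps):
    """Return (position, description) of the earliest step not yet mastered.

    Steps are listed in the order they must be performed, so the earliest
    unmastered step is a natural candidate target for instruction.
    Returns None when every step has been mastered.
    """
    for position, step in enumerate(steps, start=1):
        if not step.mastered:
            return position, step.description
    return None


# Hypothetical task analysis: two-digit addition with regrouping.
steps = [
    Step("Align the digits by place value", True),
    Step("Add the ones column", True),
    Step("Write the ones digit and carry the ten", False),
    Step("Add the tens column including the carried ten", False),
    Step("Write the tens digit of the sum", True),
]
target = first_unmastered(steps)
share_mastered = sum(s.mastered for s in steps) / len(steps)
```

Here `target` points to the third step, and `share_mastered` gives a rough summary of overall progress on the task.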

Selecting and Developing Interventions

A primary objective of ABA is to identify the motivation behind behavior, and use that knowledge to create individualized interventions that work to reduce problem behavior and increase positive replacement behaviors (Gresham 2004). This is consistent with the goal (within an RTI framework) of determining whether a student’s problem behavior or poor academic performance is a function of the environment in which he/she is being instructed, or the result of a disability. Universal screening data that indicate other students are responding to tier 1 instruction provide evidence that general education instruction is adequate. If interventions are properly selected, students’ response to increasingly intensive levels of intervention through tier 2 instruction should provide RTI teams with information regarding (a) whether a student failed to learn essential prerequisite skills that, if learned, would allow the student to make adequate academic progress and (b) the level of modifications to tier 1 instruction necessary for the student to make adequate rates of academic progress (McDougal et al. 2010). The likelihood that tier 2 will provide RTI teams with accurate information regarding a student’s instructional needs is largely based upon whether proper analyses are conducted to determine the function of students’ poor classroom behavior or academic performance.

Classroom Behavior

Research in ABA suggests that development of an intervention based upon the cause of the student’s problem behavior is likely to lead to greater improvements in behavior with a less intense intervention (Daly et al. 1997; Iwata et al. 1994; Vollmer and Iwata 1992). Determining the cause of problem behavior is often accomplished through a functional behavior assessment (FBA), a process of analyzing environmental conditions and collecting data on patterns of behavior to establish a hypothesis of the function (Cooper et al. 2007; Solnick and Ardoin 2010). The term FBA is familiar to many educators, especially those involved in special education. Amendments to the Individuals with Disabilities Education Act (IDEA) in 1997 mandated that schools conduct an FBA, and implement a behavioral intervention plan based on the behavioral function, if a student’s aberrant behavior is determined to be a result of his/her disability (Individuals with Disabilities Education Act Amendments of 1997 [IDEA] 1997).

FBAs involve a process of gathering information on the function of behavior through indirect assessments (e.g., structured interviews, rating scales), descriptive assessments (e.g., direct observations in the child’s typical environment), and functional analyses. The data collected from these procedures are used to form and test hypotheses about the motivation behind a child’s behavior (Cooper et al. 2007). Once the function of problem behavior is identified, targeted interventions can be put in place to change the reinforcement contingencies maintaining the problem behavior. Most behavioral interventions developed through the widely used school-wide behavioral management system known as positive behavioral intervention and support (Positive Behavioral Interventions and Supports (PBIS) 2013; Sugai et al. 2000) begin with an FBA (Carr and Sidener 2002), and use the information collected to create interventions teaching the student more appropriate, effective, and efficient ways of accessing reinforcement than engaging in the inappropriate behavior (Carr and Sidener 2002; Sugai and Horner 2002). The basis for these assessments is derived from the ABA research literature (Iwata et al. 1994; Vollmer and Iwata 1992).

IDEA requirements for FBAs in schools were largely based upon research conducted with students engaging in high rates of severe behavior, and studies in which a component of functional assessment, functional analysis, was implemented (Drasgow and Yell 2001; Individuals with Disabilities Education Act Amendments of 1997 [IDEA] 1997, 2004). Functional analysis is the only component of an FBA that involves the direct testing of hypotheses on behavioral function by performing systematic environmental manipulations and examining which of the test conditions elicits the highest rate of problem behavior. In a school setting, the conditions typically assessed involve attention (positive reinforcement), escape from demands (negative reinforcement), and play (automatic or sensory reinforcement; Solnick and Ardoin 2010). For example, in the typical functional analysis escape condition, students are issued a demand and allowed to momentarily escape from the task demands when they engage in problem behavior. If the function of student behavior is escape, then the student is more likely to engage in problem behavior in this condition than in the others. Thus, the consequence delivered in the condition that results in the highest rate of inappropriate behavior is hypothesized to be the function of that behavior. Although it might be considered problematic to intentionally induce problem behavior in this manner, with severe problem behavior (e.g., head banging), the benefits of creating an effective intervention may outweigh the risk of the student engaging in inappropriate behavior during assessment sessions (Iwata et al. 1994).
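The decision rule described above (the condition with the highest rate of problem behavior suggests the function) can be sketched in Python. This is a simplified numeric stand-in: functional analysis data are normally interpreted by inspecting graphed sessions, and the function name `hypothesized_function`, the use of the play condition as the comparison, and the session rates are all assumptions made for illustration.

```python
from statistics import mean


def hypothesized_function(rates_by_condition, control="play"):
    """Return the test condition with the highest mean rate of problem behavior.

    `rates_by_condition` maps a condition name to a list of per-session rates
    (responses per minute). The result is None when no test condition exceeds
    the comparison condition, meaning the data do not suggest a clear function.
    """
    means = {c: mean(r) for c, r in rates_by_condition.items()}
    test_means = {c: m for c, m in means.items() if c != control}
    top = max(test_means, key=test_means.get)
    return top if test_means[top] > means[control] else None


# Hypothetical session data (problem behaviors per minute).
data = {
    "attention": [0.5, 0.4, 0.6],
    "escape": [2.0, 2.4, 2.2],
    "play": [0.2, 0.1, 0.3],
}
suggested = hypothesized_function(data)
```

With these made-up rates, `suggested` is `"escape"`, which would point toward an intervention that changes how the student can obtain a break from demands.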

Functional analyses are difficult to implement in a school setting due to the challenges of controlling the classroom environment (e.g., peer attention) and the fact that functional analysis conditions are meant to elicit problem behavior, which may endanger the student, peers, and teachers (Bloom et al. 2011; Solnick and Ardoin 2010). Several studies have, however, demonstrated some success in employing modified functional analysis procedures in the child’s natural environment. Bloom et al. (2011) substantially reduced the length of sessions to only 2 min per test condition and implemented the test conditions within the context of naturally occurring classroom activities. For example, attention and tangible conditions were conducted during free play, and the demand conditions occurred during instructional time, when it was appropriate for the teacher to be issuing demands. The authors reported that results from the trial-based functional analyses matched those of standard functional analyses conducted for comparison purposes in 60% of the cases. In another modification to traditional functional analysis procedures, researchers have examined participants’ latency to problem behavior under different levels of aversive demands and teacher attention (Call et al. 2009; Hine and Ardoin 2011). Such procedures allow for analyses to be conducted with fewer occasions of inappropriate behavior and allow for inappropriate behavior to be appropriately dealt with when it occurs.

Functional analysis procedures have also been successfully implemented by classroom teachers with typically developing children at risk for reading failure who displayed high levels of off-task and disruptive behavior (Shumate and Wills 2010). All functional analysis sessions in this study were conducted during regularly scheduled classroom activities, with escape and attention conditions occurring during small group reading instruction and the control condition taking place in a separate play area. Three sessions were presented each day (one of each condition), until the data showed a clear pattern of responding. As teacher attention was determined to be maintaining the aberrant behavior of all participants, an intervention was designed to address this specific function. Teacher attention was withheld for all instances of problem behavior, with immediate attention and praise delivered contingent on desirable alternative behaviors (e.g., hand raising). The results from Shumate and Wills (2010) indicate that the function-based intervention was successful in both decreasing the participants’ off-task and disruptive behaviors and increasing appropriate alternatives, further validating the use of function-based assessments in identifying the variables maintaining problem behavior.

Academic Performance

The FBA literature might not initially seem to generalize to students’ difficulties with academic skills. However, the benefits of systematically altering the stimuli to which students are exposed in order to evaluate the causes of their academic difficulties remain. Whereas in the FBA literature problem behavior is believed to be a function of attention, escape, tangibles (e.g., access to toys or food), or automatic reinforcement (Cooper et al. 2007), Daly et al. (1997) argued that a student’s failure to perform academically is a function of his/her (a) lack of motivation, (b) insufficient opportunities to practice the skill, (c) not previously having sufficient assistance/instruction/modeling in how to perform the task, (d) not having been asked to perform the task in that manner previously, and/or (e) simply not having the prerequisite skills necessary to perform the task. In line with this theory, researchers have explored brief experimental analysis (BEA) procedures, in which each test condition implements an intervention matched to one of the aforementioned functions of poor academic performance. Daly et al. (2002) conducted a BEA of reading during which elementary students were exposed to interventions of increasing complexity that were associated with the functions of poor academic performance identified by Daly et al. (1997). The intervention that produced the highest level of reading accuracy and fluency for each student was hypothesized to be associated with the function of that student’s poor reading fluency.
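The selection logic of a BEA can be illustrated with a short Python sketch: each test condition is compared with baseline, and the condition producing the largest gain is taken as the working hypothesis. The function name `best_condition`, the condition labels, and the words-correct-per-minute (WCPM) scores are hypothetical; they are not the conditions or data reported by Daly et al. (2002).

```python
def best_condition(wcpm, baseline="baseline"):
    """Return (condition, gain) for the test condition with the largest gain.

    `wcpm` maps each condition name to the student's words correct per minute
    in that condition. Gain is measured against the same student's baseline.
    """
    gains = {c: score - wcpm[baseline] for c, score in wcpm.items() if c != baseline}
    best = max(gains, key=gains.get)
    return best, gains[best]


# Hypothetical results for one student; each condition is loosely paired
# with one hypothesized reason for poor performance.
wcpm = {
    "baseline": 42,
    "reward for improvement": 45,     # motivation
    "repeated reading": 61,           # opportunities to practice
    "listening passage preview": 55,  # modeling
}
condition, gain = best_condition(wcpm)
```

With these made-up scores the sketch returns `("repeated reading", 19)`, which would suggest that insufficient practice, rather than motivation, is limiting this student's fluency.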

The interventions tested within BEA of academic performance are largely based upon Haring and Eaton’s (1978) instructional hierarchy, a systematic framework for providing students with prompts to promote correct academic responding, and consequences to encourage future correct responding. Haring and Eaton (1978) described how principles of ABA apply to academic learning. They outlined strategies to move a student from not having the skills or knowledge to respond accurately to stimuli, to responding accurately and fluently to those stimuli, and finally to generalizing and then adapting knowledge and skills to new instructional materials (Ardoin and Daly 2007). Haring and Eaton’s instructional hierarchy has been employed across multiple reading and math studies to assess and develop interventions for elementary students (e.g., Ardoin et al. 2007; Cates and Rhymer 2003; Eckert et al. 2002; Martens et al. 1999) and is central to many of the problem-solving models employed by RTI teams in schools (Daly et al. 2005; Goldstein and Martens 2000; Hosp and Ardoin 2008).

Haring and Eaton (1978), as well as supporting studies (e.g., Belfiore et al. 1995; Szadokierski and Burns 2008), suggest that multiple opportunities to respond to stimuli promote future accurate and fluent responding to the drilled stimuli. For oral reading, this would mean that repeated reading increases accurate and fluent responding to words and word sequences that were drilled. Essentially, multiple opportunities to practice help to develop strong stimulus control, allowing for accurate and fluent reading of drilled text. After stimulus control is developed to allow for accurate and fluent responding, generalization can be promoted by providing practice opportunities. Practice involves opportunities to respond to learned stimuli when the stimuli are presented across multiple contexts. For oral reading, this would mean providing opportunities to read the same words in different configurations or passages, which allows for further development of stimulus control at the word level and reinforces generalization (i.e., generalization due to multiple exemplars).

Generalization and Maintenance

Another important principle of ABA that must be incorporated into the implementation of interventions within an RTI model is programming for generalization and maintenance of improvements in behavior over time (Mesmer and Duhon 2011). Generalization and maintenance of intervention effects should not be expected as a positive side effect of intervention; rather, they must be programmed into the intervention (Stokes and Baer 1977). Interventions developed within an ABA framework are designed so that improvements in behavior are not only observed within intervention sessions but also generalized to other settings and maintained across time. Steps taken to increase the probability of generalization and maintenance include (a) training behaviors that will be naturally reinforced outside of the intervention setting, (b) conducting the intervention across multiple settings and using multiple exemplars of stimuli that signal to the individual to engage in the appropriate behavior, and (c) training target behaviors to high levels of proficiency, which minimizes the effort required by the student to engage in the behavior and thus increases the probability that the behavior will occur and be reinforced across settings (Ardoin et al. 2007; Mesmer and Duhon 2011). For instance, Ledford et al. (2008) evaluated the effectiveness of a teaching procedure on the acquisition of related and pictorial information by children with autism during sight word instruction conducted in pairs. The authors selected target words from lists provided by caregivers, ensuring the information learned would be relevant, and, thus, more likely to be reinforced in the children’s natural environments. In addition, generalization probes were conducted throughout the study in contexts where children could apply the information they were taught in the classroom. For example, a child might be asked to identify a sign reading “Keep Out” while walking past the janitor’s closet.

The same steps used within ABA for promoting generalization and maintenance of target behaviors have been, and should be, employed when developing tier 2 behavioral and academic interventions. For instance, many schools employ repeated reading procedures for improving students’ reading fluency and comprehension. To ensure the effects of intervention are generalized to the classroom, the materials on which intervention is being provided could be selected from the reading curriculum. Repeated readings could also be provided on directions that are frequently presented on standardized tests and classroom tests, as well as on content area classroom materials (e.g., science, history). If content area materials are too challenging for the student to read, listening passage preview procedures can be provided on the materials, which involve modeling accurate reading and, thereby, preventing students from practicing errors (Eckert et al. 2002). It is also important that interventions be implemented across settings in order to promote students’ engagement in appropriate behavior and/or use of newly learned skills across content areas. With preplanning, physical education teachers can easily incorporate basic math calculation and problem-solving instruction into their activities, and content area teachers can assist students in applying targeted reading comprehension and writing skills during daily instruction. It is, however, essential that all individuals providing instruction/intervention are taught how to implement the procedures, as inconsistent implementation can hamper intervention progress.

Measuring Intervention Effects

In addition to providing RTI teams with a foundation for selecting behaviors to target for intervention and developing interventions, ABA provides a model for evaluating intervention effects. Using direct, continuous measurement of individuals’ behavior to inform intervention decisions is an essential component of both ABA and RTI (Cooper 1982; Carr and Sidener 2002). Failure to continually evaluate the effects of intervention on classroom behavior and academic performance has the potential to result in the continued implementation of ineffective interventions that can worsen classroom behavior or further increase the discrepancy between the academic achievement of a student and his/her peers.

Selecting Behaviors to Monitor

Many RTI teams monitor the effects of intervention on students’ academic performance using the screening measures employed by their schools for conducting universal screenings (Mellard et al. 2009). Although useful for evaluating generalization effects and for comparing a student’s rate of growth to peers, these measures are typically not sufficiently sensitive to measure intervention effects within short periods of time (2–3 weeks). RTI teams should, therefore, monitor the behaviors that are specifically targeted by the intervention being provided to a student (Ardoin et al. 2008). For instance, if an intervention is implemented to improve a student’s on-task behavior, on-task behavior collected in the setting and time during which intervention is implemented would be the most appropriate behavior to measure. Although the student’s work completion and class grades might also be expected to improve, these two outcomes are not directly targeted by the intervention and are likely to be impacted by environmental variables other than those controlled by the intervention, and, thus, should not serve as primary outcome measures. Likewise, a student’s response to an intervention designed to improve vocabulary and comprehension skills should not be evaluated based upon CBM-R (oral reading fluency) progress monitoring data. Even though a student’s oral reading fluency would likely improve with gains in vocabulary and comprehension, CBM-R is a general outcome measure on which immediate intervention effects should not be expected (Ardoin et al. 2013). Although there is an emphasis on generalization within the field of ABA, data must be collected on the behaviors directly targeted through intervention as generalization does not occur immediately. Measurement of generalization alone may result in a premature decision to designate an intervention as not producing adequate student gains, when, in fact, the intervention is having positive effects (Ardoin et al. 2008, 2013). Measuring whether a student improves in those skills directly targeted through intervention can provide schools with data at an earlier phase of intervention implementation that will directly inform them of the probability that an intervention will produce inadequate response to instruction (Ardoin 2006).

Evaluating Intervention Effects

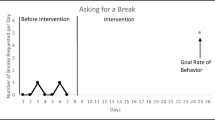

Neither ABA nor RTI teams typically use inferential statistics to evaluate the effects of intervention on groups of individuals. Instead, visual analyses of data plotted across baseline and intervention conditions are conducted. Despite having a common level of analysis (the individual), as well as common purposes for conducting assessments (i.e., identifying the cause of problem behavior) and implementing interventions (decreasing inappropriate behavior and increasing appropriate behavior, teaching skills), the questions asked within ABA and by RTI teams when evaluating intervention data generally differ. RTI teams are primarily interested in whether or not a student is making adequate progress. When examining classroom behavior, a student’s current level or rate of behavior is compared to a desired level of behavior, often taken from the average of other students in the classroom. Whether a student is making adequate academic gains is generally determined by comparing the student’s observed rate of growth to a target rate of growth that is based upon either national or district level normative growth rates (Ardoin et al. 2013).
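As a rough illustration of the growth-rate comparison just described, the following Python sketch estimates a student’s observed rate of growth as the ordinary least-squares slope of progress-monitoring scores across weeks and compares it with a target rate. The scores, the target rate of 1.5 units per week, and the function names are hypothetical; the chapter does not prescribe a particular slope estimator or decision rule, so this is one possible operationalization rather than a recommended procedure.

```python
from statistics import mean


def ols_slope(weeks, scores):
    """Ordinary least-squares slope of scores regressed on weeks."""
    if len(weeks) != len(scores) or len(weeks) < 2:
        raise ValueError("need at least two paired observations")
    w_bar, s_bar = mean(weeks), mean(scores)
    num = sum((w - w_bar) * (s - s_bar) for w, s in zip(weeks, scores))
    den = sum((w - w_bar) ** 2 for w in weeks)
    if den == 0:
        raise ValueError("weeks must not all be identical")
    return num / den


def is_progress_adequate(weeks, scores, target_rate):
    """True when the observed growth rate meets or exceeds the target rate."""
    return ols_slope(weeks, scores) >= target_rate


# Hypothetical weekly progress-monitoring scores (not data from the chapter).
weeks = [1, 2, 3, 4, 5, 6]
scores = [40, 42, 41, 45, 46, 48]
target_rate = 1.5  # assumed normative growth per week

observed = ols_slope(weeks, scores)
print(f"observed growth per week: {observed:.2f}")
print("adequate progress:", is_progress_adequate(weeks, scores, target_rate))
```

A comparison of this kind addresses only the RTI question of adequate progress; it says nothing about whether the intervention caused the growth.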

In ABA, the question is not simply whether a student is responding adequately, but whether changes in behavior are truly due to intervention implementation and whether the changes are sufficient to positively impact student functioning (Gresham 2004; Roane et al. 2011). In order to address these questions, a student’s behavior is not compared to prespecified normative levels or rates of gain. Rather, the level, trend, and variability of intervention data are compared to these same characteristics of baseline data. Baseline data collected as part of an ABA study are intended to provide an understanding of behavior under pre-intervention conditions. Only by understanding pre-intervention behavior can it be known whether behavior has changed with the implementation of the intervention. Improvements in behavior from baseline to intervention do not, however, guarantee that observed changes were due to the intervention (Roane et al. 2011).
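The comparison of level, trend, and variability across conditions can be sketched numerically. The Python example below is only an aid to, not a substitute for, visual analysis: it assumes level is summarized by the phase mean, trend by the least-squares slope across sessions, and variability by the sample standard deviation. These summary choices, the function name, and the on-task percentages are all hypothetical.

```python
from statistics import mean, stdev


def describe_phase(values):
    """Summarize one phase: level (mean), trend (OLS slope per session),
    and variability (sample standard deviation)."""
    n = len(values)
    if n < 2:
        raise ValueError("each phase needs at least two data points")
    sessions = list(range(n))  # 0, 1, 2, ... within the phase
    x_bar, y_bar = mean(sessions), mean(values)
    num = sum((x - x_bar) * (y - y_bar) for x, y in zip(sessions, values))
    den = sum((x - x_bar) ** 2 for x in sessions)
    return {"level": y_bar, "trend": num / den, "variability": stdev(values)}


# Hypothetical percent of intervals on task (not data from the chapter).
baseline = [30, 35, 32, 33, 30]
intervention = [55, 60, 65, 70, 75]

base = describe_phase(baseline)
treat = describe_phase(intervention)
for name in ("level", "trend", "variability"):
    print(f"{name:12s} baseline={base[name]:.2f} intervention={treat[name]:.2f}")
```

In this made-up data set, baseline is flat and low while intervention data are higher and rising, which is the pattern that would suggest a change in behavior. As the text notes, such a change alone does not establish that the intervention produced it.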

To demonstrate that the intervention alone is responsible for changes in behavior, single subject design methodology is employed to demonstrate the elements of prediction, verification, and replication. Prediction is demonstrated when behavior changes in the intended therapeutic direction as compared to baseline data. The element of prediction is not provided when (a) insufficient baseline data are collected, thus preventing a clear understanding of behavior prior to intervention, (b) substantial variability exists in both baseline and intervention data, resulting in substantial overlap in data between conditions, (c) baseline data trend in the direction they would be expected to trend with intervention implementation, or (d) behavior fails to change when the intervention is implemented. The second element of single subject designs is verification, which provides evidence that an intervention is the cause of changes in behavior. Verification can be demonstrated either by withdrawing the intervention and observing a return to baseline levels of behavior or by showing that behaviors not yet targeted through intervention (either engaged in by the same person or by others within the study) have not changed from their baseline levels. The final element used to demonstrate the effects of intervention is replication. Replication is demonstrated either by behavior changing in the intended direction with the reinstatement of the intervention or by intervention effects being replicated across other behaviors, individuals, or settings (Richards et al. 1999).
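For a withdrawal (A-B-A-B) arrangement, the three elements can be illustrated with a deliberately simplified Python sketch. It assumes that each element is judged only by the direction of change in phase means, which ignores the trend, variability, and overlap considerations listed above; the function name, the mean-based rule, and the data are hypothetical and are not a procedure taken from the chapter.

```python
from statistics import mean


def abab_elements(a1, b1, a2, b2, increase_is_improvement=True):
    """Check prediction, verification, and replication by comparing
    phase means across baseline (A) and intervention (B) phases."""
    sign = 1 if increase_is_improvement else -1

    def change(before, after):
        # Positive values mean behavior moved in the therapeutic direction.
        return sign * (mean(after) - mean(before))

    return {
        # Behavior improves when the intervention is first introduced.
        "prediction": change(a1, b1) > 0,
        # Behavior worsens (moves back toward baseline) on withdrawal.
        "verification": change(b1, a2) < 0,
        # Behavior improves again when the intervention is reinstated.
        "replication": change(a2, b2) > 0,
    }


# Hypothetical disruptions per session; lower is better.
a1, b1, a2, b2 = [8, 9, 7, 8], [4, 3, 3, 2], [7, 8, 8], [3, 2, 2]
result = abab_elements(a1, b1, a2, b2, increase_is_improvement=False)
print(result)

# If behavior does not return toward baseline on withdrawal,
# verification is not demonstrated.
no_reversal = abab_elements(a1, b1, [3, 2, 3], b2, increase_is_improvement=False)
print(no_reversal)
```

A mean-based check like this would miss cases in which baseline data are already trending in the therapeutic direction, so it should be read as a teaching aid rather than a decision rule.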

Although it is not reasonable to expect RTI teams to evaluate interventions in the same manner as tightly controlled ABA studies, there is much that can be learned from these analytic procedures. First, RTI teams would benefit from developing a greater understanding of the need to collect baseline data. Universal screening data can serve as a form of baseline data because they provide evidence that a student has failed to respond to tier 1 instruction and, thus, that the student’s level of academic performance falls below that of peers. Universal screening data alone do not, however, provide information regarding the variability and trend in student behavior prior to intervention implementation. Second, RTI teams would benefit from addressing the question of whether an intervention is effective instead of only addressing the question of whether a student is making adequate progress.

Answering the question of whether intervention is effective for a student would require teams to measure behaviors that are directly targeted by the selected intervention, thus allowing RTI teams to evaluate intervention effects within shorter periods of time. It would also allow RTI teams to use the data they collect to make informed decisions regarding whether a student has mastered the skill(s) being targeted by the intervention and, thus, when the target of intervention needs to be modified. Ultimately, answering the question of whether intervention is improving student behavior by collecting data on the skills being targeted through intervention will result in (a) implementation of ineffective interventions for shorter periods of time, (b) increases in the rate of gains made by students, as RTI teams can be more responsive to the changing instructional needs of students, and (c) RTI teams being better able to predict students’ responsiveness to instruction as they observe the rate at which students acquire the skills necessary to make improvements on generalization measures.

Conclusion

Assessment within an RTI framework is a relatively new endeavor for many schools. Compared with the IQ-achievement discrepancy model, much of the decision-making is more complex. The decision of whether a student should qualify for special education services is not based on a simple discrepancy between two constructs. Rather, it is based upon analyses of data and whether those data suggest the student is responding adequately to intervention. Implementation of RTI models is also complicated by the fact that poor classroom behavior and academic performance may be due to the instructional environment and decisions made by the RTI team. A student’s response to instruction is dependent upon the quality of tier 1 instruction, accurate identification and measurement of targeted behaviors, the selection of an appropriate evidence-based intervention, and implementation of the intervention with fidelity.

Although the empirical literature on RTI is still emerging, schools can reference the extensive research available on the principles of ABA, which serve as a foundation for RTI. The science of ABA, with its focus on measuring behavior and the impact of the environment on behavior, provides a scientific foundation from which schools can draw to ensure that their instructional environment will maximize achievement for students of all abilities. In 1986, when describing differences between traditional assessment and ABA, Hayes, Nelson, and Jarrett wrote, “Rather than seek the pure group first, perhaps we should let treatment responsiveness or other functional effects select our groups for us” (p. 499). It is this principle upon which RTI frameworks are built. We must not place students into categories based upon measures of mental constructs, but rather we must provide instruction to students based upon data that directly inform us of their instructional needs.

References

Achenbach, T. (1991). Manual for the child behavior checklist/4-18 and 1991 profile. Burlington: University of Vermont Department of Psychiatry.

Alter, P., Brown, E., & Pyle, J. (2011). A strategy-based intervention to improve math word problem solving skills of students with emotional and behavioral disorders. Education & Treatment of Children, 34(4), 535–550. doi:10.1353/etc.2011.0028.

Ardoin, S. P. (2006). The response in response to intervention: Evaluating the utility of assessing maintenance of intervention effects. Psychology in the Schools, 43(6), 713–725.

Ardoin, S. P., & Daly, E. J., III (2007). Introduction to the special series: Close encounters of the instructional kind-how the instructional hierarchy is shaping instructional research 30 years later. Journal of Behavioral Education, 16, 1–6.

Ardoin, S. P., McCall, M., & Klubnik, C. (2007). Promoting generalization of oral reading fluency: Providing drill versus practice opportunities. Journal of Behavioral Education, 16, 55–70.

Ardoin, S. P., Roof, C. M., Klubnik, C., & Carfolite, J. (2008). Evaluating curriculum-based measurement from a behavioral assessment perspective. The Behavior Analyst Today, 9, 36–48.

Ardoin, S. P., Christ, T. J., Morena, L. S., Cormier, D. C., & Klingbeil, D. A. (2013). A systematic review and summarization of the recommendations and research surrounding curriculum-based measurement of oral reading fluency (CBM-R) decision rules. Journal of School Psychology, 51(1), 1–18. doi:10.1016/j.jsp.2012.09.004.

Belfiore, P. J., Skinner, C. H., & Ferkis, M. A. (1995). Effects of response and trial repetition on sight-word training for students with learning disabilities. Journal of Applied Behavior Analysis, 28, 347–348.

Bloom, S. E., Iwata, B. A., Fritz, J. N., Roscoe, E. M., & Carreau, A. B. (2011). Classroom application of a trial-based functional analysis. Journal of Applied Behavior Analysis, 44(1), 19–31.

Brown-Chidsey, R., & Steege, M. W. (2005). Response to intervention: Principles and strategies for effective practice. New York: Guilford.

Call, N. A., Pabico, R. S., & Lomas, J. E. (2009). Use of latency to problem behavior to evaluate demands for inclusion in functional analyses. Journal of Applied Behavior Analysis, 42, 723–728.

Carr, E. G., & Durand, M. V. (1985). Reducing behavior problems through functional communication training. Journal of Applied Behavior Analysis, 18(2), 111–126.

Carr, J. E., & Sidener, T. M. (2002). On the relation between applied behavior analysis and positive behavioral support. The Behavior Analyst, 25(2), 245.

Cates, G. L., & Rhymer, K. N. (2003). Examining the relationship between mathematics anxiety and mathematics performance: An instructional hierarchy perspective. Journal of Behavioral Education, 12, 23–34.

Cooper, J. O. (1982). Applied behavior analysis in education. Theory into Practice, 21(2), 114–118.

Cooper, J. O., Heron, T. E., & Heward, W. L. (2007). Applied behavior analysis (2nd ed.). New Jersey: Pearson.

Daly, E. J., III, Martens, B. K., Witt, J. C., & Dool, E. J. (1997). A model for conducting a functional analysis of academic performance problems. School Psychology Review, 26, 554–574.

Daly, E. J., III, Murdoch, A., Lillenstein, L., Webber, L., & Lentz, F. E. (2002). An examination of methods for testing treatments: Conducting brief experimental analyses of the effects of instructional components on oral reading fluency. Education & Treatment of Children, 25, 288–316.

Daly, E. J., III, Chafouleas, S. M., & Skinner, C. H. (2005). Interventions for reading problems: Designing and evaluating effective strategies. New York: Guilford.

Daly, E. J., III, Martens, B. K., Barnett, D., Witt, J. C., & Olson, S. C. (2007). Varying intervention delivery in response to intervention: Confronting and resolving challenges with measurement, instruction, and intensity. School Psychology Review, 36(4), 562–581.

Drasgow, E., & Yell, M. L. (2001). Functional behavioral assessments: Legal requirements and challenges. School Psychology Review, 30, 239–251.

Eckert, T. L., Ardoin, S. P., Daly, E. J., III, & Martens, B. K. (2002). Improving oral reading fluency: A brief experimental analysis of combining an antecedent intervention with consequences. Journal of Applied Behavior Analysis, 35, 271–281.

Fisher, W. W., Groff, R. A., & Roane, H. S. (2011). Applied behavior analysis: History, philosophy, principles, and basic methods. In W. W. Fisher, C. C. Piazza, & H. S. Roane (Eds.), Handbook of applied behavior analysis (pp. 3–33). New York: Guilford.

Fletcher, J., Coulter, W. A., Reschly, D., & Vaughn, S. (2004). Alternative approaches to the definition and identification of learning disabilities: Some questions and answers. Annals of Dyslexia, 54(2), 304–331.

Fletcher, J. M., Denton, C., & Francis, D. J. (2005). Validity of alternative approaches for the identification of learning disabilities: Operationalizing unexpected underachievement. Journal of Learning Disabilities, 38, 545–552.

Galassi, J. P., & Perot, A. R. (1992). What you should know about behavioral assessment. Journal of Counseling and Development, 70(5), 624–631.

Geiger, K. B., Carr, J. E., & LeBlanc, L. A. (2010). Function-based treatments for escape-maintained problem behavior: A treatment-selection model for practicing behavior analysts. Behavior Analysis in Practice, 3, 22–32.

Goldstein, A. P., & Martens, B. K. (2000). Lasting change: Methods for enhancing generalization of gain. Champaign: Research Press.

Gresham, F. M. (2004). Current status and future directions of school-based behavioral interventions. School Psychology Review, 33(3), 326–343.

Haring, N. G., & Eaton, M. D. (1978). Systematic procedures: An instructional hierarchy. In N. G. Haring, T. C. Lovitt, M. D. Eaton, & C. L. Hansen (Eds.), The fourth R: Research in the classroom (pp. 23–40). Columbus: Charles E. Merrill.

Hayes, S. C., Nelson, R. O., & Jarrett, R. B. (1986). Evaluating the quality of behavioral assessment. Conceptual foundations of behavioral assessment (pp. 463–503). New York: Guilford.

Hine, J. F., & Ardoin, S. P. (2011, May). Using discrete trials to increase the feasibility of assessing student problem behavior. Paper presented at the 37th annual conference of the Association of Applied Behavior Analysis International. Denver, CO.

Hosp, J. L., & Ardoin, S. P. (2008). Assessment for instructional planning. Assessment for Effective Intervention, 33(2), 69–77.

Hudson, T., Hinkson-Lee, K., & Collins, B. (2013). Teaching paragraph composition to students with emotional/behavioral disorders using the simultaneous prompting procedure. Journal of Behavioral Education, 22(2), 139–156. doi:10.1007/s10864-012-9167-8.

Individuals with Disabilities Education Act Amendments of 1997 [IDEA]. (1997). http://thomas.loc.gov/home/thomas.php. Accessed 10 Dec 2013.

Individuals with Disabilities Education Improvement Act of 2004. (2004). Pub. L. No. 108–446.

Iwata, B. A., Dorsey, M. F., Slifer, K. J., Bauman, K. E., & Richman, G. S. (1994). Toward a functional analysis of self-injury. Journal of Applied Behavior Analysis, 27(2), 197–209.

Ledford, J. R., Gast, D. L., Luscre, D., & Ayres, K. M. (2008). Observational and incidental learning by children with autism during small group instruction. Journal of Autism and Developmental Disorders, 38(1), 86–103.

LeGray, M. W., Dufrene, B. A., Sterling-Turner, H., Joe Olmi, D. D., & Bellone, K. (2010). A comparison of function-based differential reinforcement interventions for children engaging in disruptive classroom behavior. Journal of Behavioral Education, 19(3), 185–204. doi:10.1007/s10864-010-9109-2.

Marston, D. (2005). Tiers of intervention in responsiveness to intervention: Prevention outcomes and learning disabilities identification patterns. Journal of Learning Disabilities, 38, 539–544.

Martens, B. K., & Ardoin, S. P. (2010). Assessing disruptive behavior within a problem-solving model. In G. G. Peacock, R. A. Ervin, E. J. Daly, & K. W. Merrell (Eds.), Practical handbook in school psychology: Effective practices for the 21st century (pp. 157–174). New York: Guilford.

Martens, B. K., Eckert, T. L., Bradley, T. A., & Ardoin, S. P. (1999). Identifying effective treatments from a brief experimental analysis: Using single case design elements to aid decision-making. School Psychology Quarterly, 14, 163–181.

McDougal, J. L., Graney, S. B., Wright, J. A., & Ardoin, S. P. (2010). RTI in practice: A practical guide to implementing effective evidence-based interventions in your school. Hoboken: Wiley.

Mellard, D. F., McKnight, M., & Woods, K. (2009). Response to Intervention screening and progress monitoring practices in 41 local schools. Learning Disabilities Research & Practice, 24, 186–195.

Mesmer, E. M., & Duhon, G. J. (2011). Response to intervention: Promoting and evaluating generalization. Journal of Evidence-Based Practices for Schools, 12(1), 75–104.

Miller, F. G., & Lee, D. L. (2013). Do functional behavioral assessments improve intervention effectiveness for students diagnosed with ADHD? A single-subject meta-analysis. Journal of Behavioral Education, 22(3), 253–282. doi:10.1007/s10864-013-9174-4.

Nelson, R. O., & Hayes, S. C. (1979a). Some current dimensions of behavioral assessment. Behavioral Assessment, 1, 1–16.

Nelson, R. O., & Hayes, S. C. (1979b). The nature of behavioral assessment: A commentary. Journal of Applied Behavior Analysis, 12(4), 491–500.

Noell, G. H., Call, N. A., & Ardoin, S. P. (2011). Building complex repertoires from discrete behaviors by establishing stimulus control, behavioral chains, and strategic behavior. In W. Fisher, C. Piazza, & H. Roane (Eds.), Handbook of applied behavior analysis (pp. 250–269). New York: Guilford.

Parker, D., & Kamps, D. (2011). Effects of task analysis and self-monitoring for children with autism in multiple social settings. Focus on Autism and Other Developmental Disabilities, 26(3), 131–142.

Positive Behavioral Interventions and Supports. (2013). Technical Assistance Center on Positive Behavioral Interventions and Supports. Office of Special Education Programs, 2013. Web. <http://www.pbis.org>. Accessed 10 Dec 2013.

President’s Commission on Excellence in Special Education. (2002). A new era: Revitalizing special education for children and their families. Jessup: ED Pubs.

Reynolds, C. R., & Kamphaus, R. W. (2006). BASC-2: Behavior assessment system for children (2nd ed.). Upper Saddle River: Pearson Education, Inc.

Richards, S. B., Taylor, R. L., Ramasamy, R., & Richards, R. Y. (1999). Single subject research: Applications in educational and clinical settings. San Diego: Singular Publishing Group, Inc.

Roane, H. S., Ringdahl, J. E., Kelley, M. E., & Glover, A. C. (2011). Single-case experimental design. In W. W. Fisher, C. C. Piazza, & H. S. Roane (Eds.), Handbook of applied behavior analysis (pp. 132–150). New York: Guilford.

Shinn, M. R. (2007). Identifying students at risk, monitoring performance, and determining eligibility within response to intervention: Research on educational need and benefit from academic intervention. School Psychology Review, 36, 601–617.

Shumate, E. D., & Wills, H. P. (2010). Classroom-based functional analysis and intervention for disruptive and off-task behaviors. Education and Treatment of Children, 33, 23–48.

Solnick, M., & Ardoin, S. P. (2010). A quantitative review of functional analysis procedures in public school settings. Education and Treatment of Children, 33, 153–175.

Sparks, R. L., & Lovett, B. J. (2009). Objective criteria for classification of postsecondary students as learning disabled. Journal of Learning Disabilities, 42(3), 230–239.

Stokes, T. F., & Baer, D. M. (1977). An implicit technology of generalization. Journal of Applied Behavior Analysis, 10, 349–367.

Sugai, G., & Horner, R. H. (2002). Introduction to the special series on positive behavior support in schools. Journal of Emotional & Behavioral Disorders, 10(3), 130.

Sugai, G., Horner, R. H., Dunlap, G., Hieneman, M., Lewis, T. J., Nelson, C. M., Scott, T., Liaupsin, C., Sailor, W., Turnbull, A. P., Turnbull, H. R., III, Wickham, D., Reuf, M., & Wilcox, B. (2000). Applying positive behavioral support and functional behavioral assessment in schools. Journal of Positive Behavioral Interventions, 2, 131–143.

Szadokierski, I., & Burns, M. K. (2008). Analogue evaluation of the effects of opportunities to respond and ratios of known items within drill rehearsal of Esperanto words. Journal of School Psychology, 46(5), 593–609.

Tkachuk, G., Leslie-Toogood, A., & Martin, G. L. (2003). Behavioral assessment in sport psychology. The Sport Psychologist, 17, 104–117.

Vollmer, T. R., & Iwata, B. A. (1992). Differential reinforcement as treatment for behavior disorders: Procedural and functional variations. Research in Developmental Disabilities, 13(4), 393–417.

Copyright information

© 2016 Springer Science+Business Media New York

About this chapter

Cite this chapter

Ardoin, S., Wagner, L., Bangs, K. (2016). Applied Behavior Analysis: A Foundation for Response to Intervention. In: Jimerson, S., Burns, M., VanDerHeyden, A. (eds) Handbook of Response to Intervention. Springer, Boston, MA. https://doi.org/10.1007/978-1-4899-7568-3_3

DOI: https://doi.org/10.1007/978-1-4899-7568-3_3

Publisher Name: Springer, Boston, MA

Print ISBN: 978-1-4899-7567-6

Online ISBN: 978-1-4899-7568-3

eBook Packages: Behavioral Science and Psychology (R0)