Portions of this chapter were adapted from Flanagan, Ortiz, and Alfonso (2013). Essentials of cross-battery assessment, third edition. Hoboken, NJ: Wiley.

Introduction

Increasing attention has been paid to the role of executive functions in school learning and achievement in recent years (e.g., Dawson, 2012; Maricle & Avirett, 2012; McCloskey, 2012; Meltzer, 2007, 2012; Miller, 2007, 2013). For example, within the emerging subdiscipline of school neuropsychology, attempts have been made to integrate psychometric and neuropsychological theories in an effort to better understand brain–behavior relationships (e.g., Flanagan, Alfonso, Ortiz, & Dynda, 2010; Miller, 2007). In addition, some intelligence test developers offer a cognitive processing model as a basis for interpreting test performance and provide clinical clusters, such as “executive processes,” “cognitive fluency,” and “broad attention” as part of their battery (e.g., WJ III NU; Woodcock, McGrew, & Mather, 2001, 2007). Other test authors developed tests that more directly purport to measure executive functions, including planning and attention. For example, the Kaufman Assessment Battery for Children, second edition (KABC-II; Kaufman & Kaufman, 2004), although based on the Cattell–Horn–Carroll (CHC) theory of the structure of cognitive abilities, maintains its roots in the Lurian model of cognitive processing and measures “Fluid Reasoning (Gf)/Planning,” for example. Likewise, the Cognitive Assessment System (CAS; Das & Naglieri, 1997) is based on a Lurian cognitive processing theory of intelligence and measures planning, attention, and simultaneous and successive processes, of which the former two are often conceived of as executive functions (Maricle & Avirett, 2012; Naglieri, 2012).

Despite some references and inferences made to executive functions, most developers of intelligence tests have not addressed executive functions directly and, other than the Delis-Kaplan Executive Function System (D-KEFS; Delis, Kaplan, & Kramer, 2001), there do not appear to be any other psychometric cognitive batteries that were designed expressly for the purpose of assessing executive functions (McCloskey, Perkins, & Van Divner, 2009). Moreover, tests that include measures of executive functions, such as the NEPSY-II (Korkman, Kirk, & Kemp, 2007), do not provide a rationale for the selection and inclusion of specific tasks based on an overarching model of executive capacities (McCloskey et al.). Since most intelligence batteries do not measure executive functions well and since most neuropsychological batteries do not measure a broad range of executive functions, a flexible battery approach is needed to test hypotheses about an individual’s executive functions.

There is general consensus in the research literature that executive functions consist of separate but related cognitive processes. Although researchers have not agreed on the components of executive functions, there is consensus that they consist of several domains, namely, initiating, planning, and completing complex tasks; working memory; attentional control; cognitive flexibility; and self-monitoring and regulation of cognition, emotion, and behavior (see Maricle & Avirett, 2012 for a discussion). For the purpose of this chapter, we focus on the major functions of the frontal-subcortical circuits of the brain, including planning, focusing and sustaining attention, maintaining or shifting sets (cognitive flexibility), verbal and design fluency, and use of feedback in task performance (i.e., functions of the dorsolateral prefrontal circuit), as well as working memory (i.e., functions of the inferior/temporal posterior parietal circuit; Miller, 2007). We chose to focus mainly on this subset of executive functions because our intelligence, cognitive, and neuropsychological batteries can provide information about them. However, it is important to remember that this selected set of executive functions, because they are derived from performance on intelligence, cognitive, and neuropsychological batteries, assists in understanding an individual’s executive function capacities when directing perception, cognition, and action in the symbol system arena only (McCloskey et al., 2009). Practitioners will need to supplement these instruments when concerns about executive function capacities extend into the intrapersonal, interpersonal, and environmental arenas. Nevertheless, focus on executive function capacities in the symbol system arena, via the use of standardized tests, is useful in school settings to assist in understanding a child’s learning and academic production (McCloskey et al.).

The purpose of this chapter is to describe how the cross-battery assessment (XBA) approach can be used to measure a selected set of executive functions, particularly those that are relevant to the cognition domain (e.g., reasoning with verbal and visual-spatial information). Although the XBA approach is based primarily on CHC theory—a theory that does not include a specific or general construct of executive functioning—recently, it was integrated with neuropsychological theories and applied to neuropsychological batteries (Flanagan et al., 2010; Flanagan, Ortiz, & Alfonso, 2013). More specifically, the current iteration of the XBA approach identifies specific components of executive functions and provides guidelines for measuring those components (Flanagan et al., 2013). This chapter will describe the XBA approach and provide a brief summary of CHC theory. Next, this chapter will describe how an integration of CHC and neuropsychological theory and research can be used to inform test selection as well as quantitative and qualitative interpretations of specific executive functions in the cognition domain. This chapter will also include brief examples of cross-battery assessments from which information about executive functions may be garnered.

The Cross-Battery Assessment Approach

As our understanding of cognitive abilities continues to unfold and as we begin to gain a greater understanding of how school neuropsychology will influence the practice of test interpretation, it seems clear that the breadth and depth of information we can obtain from our cognitive and neuropsychological instruments are ever increasing. In light of the recent expansion of CHC theory and the integration of this theory with neuropsychological theories, it remains unlikely that any single intelligence, cognitive ability, or neuropsychological battery will provide adequate coverage of the full range of abilities and processes that may be relevant to any given evaluation purpose or referral concern. The development of a battery that fully operationalizes CHC theory, for example, would likely be extremely labor intensive and prohibitively expensive for the average practitioner, school district, clinic, or university training program. Therefore, flexible battery approaches are likely to remain essential within the repertoire of practice for most professionals. By definition, flexible battery approaches offer an efficient and practical method by which practitioners may evaluate a broad range of cognitive abilities and processes, including executive functions. In this section, we summarize one such flexible battery approach, XBA, because it is grounded in a well-validated theory and is based on sound psychometric principles and procedures.

The XBA approach was introduced by Flanagan and her colleagues over a decade ago (Flanagan & McGrew, 1997; Flanagan, McGrew, & Ortiz, 2000; Flanagan & Ortiz, 2001; McGrew & Flanagan, 1998). It provides practitioners with the means to make systematic, reliable, and theory-based interpretations of cognitive, achievement, and neuropsychological instruments and to augment any instrument with subtests from other batteries to gain a more complete understanding of an individual’s strengths and weaknesses (Flanagan, Alfonso, & Ortiz, 2012; Flanagan, Ortiz, & Alfonso, 2007). Moving beyond the boundaries of a single cognitive, achievement, or neuropsychological battery by adopting the theoretically and psychometrically defensible XBA principles and procedures allows practitioners the flexibility necessary to measure the cognitive constructs and neurodevelopmental functions that are most germane to referral concerns (e.g., Carroll, 1998; Decker, 2008; Kaufman, 2000; Wilson, 1992).

According to Carroll (1997), the CHC taxonomy of human cognitive abilities “appears to prescribe that individuals should be assessed with respect to the total range of abilities the theory specifies” (p. 129). However, because Carroll recognized that “any such prescription would of course create enormous problems,” he indicated that “[r]esearch is needed to spell out how the assessor can select what abilities need to be tested in particular cases” (p. 129). Flanagan and colleagues’ XBA approach was developed specifically to “spell out” how practitioners can conduct assessments that approximate the total range of cognitive and academic abilities and neuropsychological processes more adequately than what is possible with any collection of co-normed tests. And, for the purpose of this chapter, the XBA approach will spell out how practitioners can measure specific CHC abilities from which information about a subset of executive functions within the cognition domain may be derived.

In a review of the XBA approach, Carroll (1998) stated that it “can be used to develop the most appropriate information about an individual in a given testing situation” (p. xi). More recently, Decker (2008) stated that the XBA approach “may improve…assessment practice and facilitate the integration of neuropsychological methodology in school-based assessments…[because it] shift[s] assessment practice from IQ composites to neurodevelopmental functions” (p. 804).

Noteworthy is the fact that assessment professionals “crossed” batteries well before Woodcock (1990) recognized the need to do so and before Flanagan and her colleagues introduced the XBA approach in the late 1990s following his suggestion. Neuropsychological assessment has long adopted the practice of crossing various standardized tests in an attempt to measure a broader range of brain functions than that offered by any single instrument (Lezak, 1976, 1995; Lezak, Howieson, & Loring, 2004; see Wilson, 1992 for a review). Nevertheless, several problems with crossing batteries plagued assessment-related fields for years. Many of these problems have been circumvented by Flanagan and colleagues’ XBA approach (see Flanagan & McGrew, 1997; Flanagan et al., 2007, 2013 for examples). But unlike the XBA approach, the various so-called “cross-battery” techniques applied within the field of neuropsychological assessment, for example, are not typically grounded in a systematic approach that is theoretically and psychometrically sound. Thus, as Wilson (1992) cogently pointed out, the field of neuropsychological assessment was in need of an approach that would guide practitioners through the selection of measures that would result in more specific and delineated patterns of function and dysfunction, an approach that provides more clinically useful information than one that is “wedded to the utilization of subscale scores and IQs” (p. 382). Indeed, all fields involved in the assessment of cognitive and neuropsychological functioning have some need for an approach that would aid practitioners in their attempt to “touch all of the major cognitive areas, with emphasis on those most suspect on the basis of history, observation, and on-going test findings” (Wilson, 1992, p. 382). The XBA approach has met this need. A brief definition of and rationale for the XBA approach follows.

Definition

The XBA approach is a method of assessing cognitive and academic abilities and neuropsychological processes that is grounded mainly in CHC theory and research. It allows practitioners to measure reliably, and in a psychometrically defensible manner, a wider range (or a more in-depth but selective range) of ability and processing constructs than that represented by any given stand-alone assessment battery.

The Foundation of the XBA Approach

The XBA approach is based on three foundational sources of information—namely, CHC and neuropsychological theories, broad ability classifications, and narrow ability classifications—that together provide the knowledge base necessary to organize theory-driven, comprehensive assessments of cognitive, achievement, and neuropsychological constructs. A brief summary of each foundational source of information follows.

The Cattell–Horn–Carroll theory. Psychometric intelligence theories converged in recent years on a more complete or “expanded” multiple intelligence taxonomy, reflecting syntheses of factor analytic research conducted over the past 60–70 years. The most recent representation of this taxonomy is the CHC structure of cognitive abilities. CHC theory is an integration of Cattell and Horn’s Fluid–Crystallized (Gf–Gc) theory (Horn, 1991) and Carroll’s (1993) three-stratum theory of the structure of cognitive abilities.

In the late 1990s, McGrew (1997) attempted to resolve some of the differences between the Cattell–Horn and Carroll models. On the basis of his research, McGrew proposed an “integrated” Gf–Gc theory, and he and his colleagues used this model as a framework for the XBA approach (e.g., Flanagan & McGrew, 1997; Flanagan et al., 2000; McGrew & Flanagan, 1998). This integrated theory became known as the CHC theory of cognitive abilities shortly thereafter (see McGrew, 2005), and the WJ III NU COG was the first cognitive battery to be based on this theory. Many other cognitive batteries followed suit, including the KABC-II; Differential Ability Scales, second edition (DAS-II; Elliott, 2007); and Stanford–Binet Intelligence Scales, fifth edition (SB5; Roid, 2003).

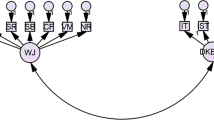

Recently, Schneider and McGrew (2012) reviewed CHC-related research and provided a summary of the CHC abilities (broad and narrow) that have the most evidence to support them. In their attempt to provide a CHC overarching framework that incorporates the well-supported cognitive abilities, they articulated a 16-factor model containing over 80 narrow abilities (see Fig. 22.1). The ovals in the figure represent broad abilities and the rectangles represent narrow abilities. Additionally, an overall “g” or general ability is omitted from this figure intentionally due to space limitations. Because of the large number of abilities represented in CHC theory, the broad abilities in Fig. 22.1 are grouped conceptually into six categories to enhance comprehensibility, in a manner similar to that suggested by Schneider and McGrew (i.e., Reasoning, Acquired Knowledge, Memory and Efficiency, Sensory, Motor, and Speed and Efficiency). Space limitations preclude a discussion of all the ways in which CHC theory has evolved and the reasons why recent refinements and changes have been made (see Flanagan et al., 2013, and Schneider and McGrew for a discussion). However, to assist the reader in understanding the components of the theory, the broad abilities are defined in Table 22.1. For the purpose of this chapter, only the narrow abilities that are relevant to understanding executive functions within the cognition domain will be defined. Definitions of all narrow CHC abilities are found in Flanagan and colleagues and Schneider and McGrew. Overall, CHC theory represents a culmination of about seven decades of factor analysis research within the psychometric tradition. However, in addition to structural evidence, there are other sources of validity evidence, some quite substantial, that support CHC theory (see Horn & Blankson, 2005, for a summary).

Fig. 22.1 Current and expanded Cattell–Horn–Carroll (CHC) theory of cognitive abilities. Note: This figure is based on information presented in Schneider and McGrew (2012). Ovals represent broad abilities and rectangles represent narrow abilities. Overall “g” or general ability is omitted from this figure intentionally due to space limitations. Conceptual groupings of abilities were suggested by Schneider and McGrew.

CHC broad (Stratum II) classifications of cognitive, academic, and neuropsychological tests. Based on the results of a series of cross-battery confirmatory factor analysis studies of the major intelligence batteries (see Keith & Reynolds, 2010, for a review) and the task analyses of many cognitive test experts, Flanagan and colleagues classified all the subtests of the major cognitive, achievement, and neuropsychological batteries according to the particular CHC broad abilities they measured (e.g., Flanagan et al., 2010, 2013; Flanagan, Ortiz, Alfonso, & Mascolo, 2006; McGrew, 1997; McGrew & Flanagan, 1998; Reynolds, Keith, Flanagan, & Alfonso, in press). To date, more than 100 batteries and 750 subtests have been classified according to the CHC broad and narrow abilities they measure, based in part on the results of these studies (Flanagan et al., 2013). The CHC classifications of cognitive, achievement, and neuropsychological batteries assist practitioners in identifying measures that assess the various broad and narrow abilities represented in CHC theory.

Classification of tests at the broad ability level is necessary to improve upon the validity of assessment and interpretation. Specifically, broad ability classifications ensure that the CHC constructs that underlie assessments are minimally affected by construct-irrelevant variance (Messick, 1989, 1995). In other words, knowing what tests measure what abilities enables clinicians to organize tests into construct-relevant composites—composites that contain only measures that are relevant to the construct, ability, or process of interest.

To clarify, construct-irrelevant variance is present when an “assessment is too broad, containing excess reliable variance associated with other distinct constructs … that affects responses in a manner irrelevant to the interpreted constructs” (Messick, 1995, p. 742). For example, the Wechsler Intelligence Scale for Children, fourth edition (WISC-IV; Wechsler, 2003), Perceptual Reasoning Index (PRI) contains construct-irrelevant variance because, in addition to its two indicators of Gf (i.e., Picture Concepts, Matrix Reasoning), it has an indicator of Gv (i.e., Block Design). Therefore, the PRI is a mixed measure of two relatively distinct, broad CHC abilities (Gf and Gv); it contains reliable variance (associated with Gv) that is irrelevant to the interpreted construct of Gf. Notwithstanding the Wechsler PRI, most current intelligence and cognitive batteries contain only construct-relevant CHC broad ability composites, a welcome improvement over previous editions of intelligence tests and an improvement that was based, in part, on the influence that the XBA approach had on test development, particularly in the late 1990s and early 2000s (see Alfonso, Flanagan, & Radwan, 2005).
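The PRI example above can be restated as a simple check on broad ability classifications. The following is a minimal sketch, not part of the XBA materials: the function names are hypothetical, and the only classifications used are the three stated in the text (Picture Concepts and Matrix Reasoning as Gf, Block Design as Gv).

```python
# Broad (stratum II) classifications of the WISC-IV PRI subtests,
# exactly as stated in the text above.
PRI_SUBTESTS = {
    "Picture Concepts": "Gf",
    "Matrix Reasoning": "Gf",
    "Block Design": "Gv",
}


def irrelevant_indicators(subtests, target_broad):
    """Return subtests whose broad ability differs from the interpreted construct.

    Hypothetical helper: a non-empty result means the composite would carry
    construct-irrelevant variance if interpreted as `target_broad`.
    """
    return sorted(
        name for name, broad in subtests.items() if broad != target_broad
    )


def is_construct_relevant(subtests, target_broad):
    """True only when every indicator measures the interpreted broad ability."""
    return not irrelevant_indicators(subtests, target_broad)


if __name__ == "__main__":
    print(irrelevant_indicators(PRI_SUBTESTS, "Gf"))
    print(is_construct_relevant(PRI_SUBTESTS, "Gf"))
```

Under these assumptions, the check flags Block Design as the indicator that is irrelevant to an interpretation of the PRI as Gf. It addresses only the broad level; it says nothing about whether the remaining Gf indicators represent the construct adequately.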

CHC narrow (Stratum I) classifications of cognitive, academic, and neuropsychological tests. Narrow ability classifications were originally reported in McGrew (1997) and then later reported in McGrew and Flanagan (1998) and Flanagan et al. (2000) following minor modifications. Flanagan and her colleagues continued to gather content validity data on ability subtests and expanded their analyses to include subtests from achievement batteries (Flanagan et al., 2006) and, more recently, neuropsychological batteries (Flanagan et al., 2013). Classifications of ability and processing subtests according to content, format, and task demand at the narrow (stratum I) ability level were necessary to improve further upon the validity of assessment and interpretation (see Messick, 1989). Specifically, these narrow ability classifications were necessary to ensure that the CHC constructs that underlie assessments are well represented (McGrew & Flanagan). According to Messick (1995), construct underrepresentation is present when an “assessment is too narrow and fails to include important dimensions or facets of the construct” (p. 742).

Interpreting the WJ III Concept Formation (CF) test as a measure of Fluid Intelligence (i.e., the broad Gf ability) is an example of construct underrepresentation. This is because CF measures only one narrow aspect of Gf (viz., Induction). At least one other Gf measure (i.e., subtest) that is qualitatively different from Induction is necessary to include in an assessment to ensure adequate representation of the Gf construct (e.g., a measure of General Sequential Reasoning [or Deduction]). Two or more qualitatively different indicators (i.e., measures of two or more narrow abilities subsumed by the broad ability) are needed for adequate construct representation (Comrey, 1988; Keith & Reynolds, 2012; Messick, 1989, 1995; Reynolds et al., in press). The aggregate of CF (a measure of Induction) and the WJ III Analysis–Synthesis test (a measure of Deduction), for example, would provide an adequate estimate of the broad Gf ability because these tests are strong measures of Gf and represent qualitatively different aspects of this broad ability.
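The two-indicator rule described above can be sketched as a count of distinct narrow abilities within a broad ability. This is an illustrative sketch only: the function name and data layout are hypothetical, and the classifications are limited to the two WJ III tests discussed in the text.

```python
# (broad ability, narrow ability) classifications stated in the text above.
WJ3_CLASSIFICATIONS = {
    "Concept Formation": ("Gf", "Induction"),
    "Analysis-Synthesis": ("Gf", "General Sequential Reasoning"),
}


def adequately_represented(subtest_names, classifications, target_broad,
                           min_narrow=2):
    """Check the 'two or more qualitatively different indicators' rule.

    Hypothetical helper: collects the distinct narrow abilities that the
    selected subtests measure within `target_broad` and compares the count
    with `min_narrow` (2, following the rule stated in the text).
    """
    narrow_abilities = {
        classifications[name][1]
        for name in subtest_names
        if classifications[name][0] == target_broad
    }
    return len(narrow_abilities) >= min_narrow


if __name__ == "__main__":
    # CF alone measures a single narrow ability (Induction).
    print(adequately_represented(["Concept Formation"],
                                 WJ3_CLASSIFICATIONS, "Gf"))
    # CF plus Analysis-Synthesis covers two different narrow abilities.
    print(adequately_represented(["Concept Formation", "Analysis-Synthesis"],
                                 WJ3_CLASSIFICATIONS, "Gf"))
```

Counting distinct narrow abilities, rather than subtests, is the point of the design: two subtests that both measure Induction would still underrepresent Gf under this rule.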

The classifications of tests at the broad and narrow ability levels of CHC theory guard against two ubiquitous sources of invalidity in assessment: construct-irrelevant variance and construct underrepresentation. In addition, these classifications augment the validity of test performance interpretation. Furthermore, to ensure that XBA procedures are theoretically and psychometrically sound, it is recommended that practitioners adhere to a set of guiding principles, which are enumerated in Table 22.2. Taken together, CHC theory, the CHC classifications of tests that underlie the XBA approach, and the accompanying guiding principles provide the necessary foundation from which to organize assessments and interpret assessment results in a manner that is comprehensive and supported by research (Flanagan et al., 2013).

CHC theory, as it is operationalized by current intelligence and cognitive batteries, emphasizes the sum of performances or outcome, rather than the process or steps that led to a particular outcome, which is why little, if any, emphasis is placed on understanding executive functions. Conversely, neuropsychological batteries place greater emphasis on process, allowing for practitioners to derive information about executive functions more readily. Because both outcome and process are important, each is addressed in this chapter. To ensure that both are addressed during test interpretation, an integration of CHC and neuropsychological theories is warranted.

Enhancing Interpretation of Test Performance: An Integration of CHC and Neuropsychological Theories

With the emergence of the field of school neuropsychology (e.g., Decker, 2008; Fletcher-Janzen & Reynolds, 2008; Hale & Fiorello, 2004; Miller, 2007, 2010, 2013) came the desire to link CHC theory and neuropsychological theories. Understanding how CHC theory and neuropsychological theories relate to one another expands the options available for interpreting test performance and improves the quality and clarity of test interpretation, as a much wider research base is available to inform practice.

Although scientific understanding of the manner in which the brain functions and how mental activity is expressed on psychometric tasks has increased dramatically in recent years, there is still much to be learned. All efforts to create a framework that guides test interpretation benefit from diverse points of view. For example, according to Fiorello, Hale, Snyder, Forrest, and Teodori (2008), “the compatibility of the neuropsychological and psychometric approaches [CHC] to cognitive functioning suggests converging lines of evidence from separate lines of inquiry, a validity dimension essential to the study of individual differences in how children think and learn” (p. 232; parenthetic information added). Their analysis of the links between the neuropsychological and psychometric approaches not only provides validity for both but also suggests that each approach may benefit from knowledge of the other. As such, a framework that incorporates the neuropsychological and psychometric approaches to cognitive functioning holds the promise of increasing knowledge about the etiology and nature of a variety of disorders (e.g., specific learning disability) and the manner in which such disorders are treated. This type of framework should not only connect the elements and components of both assessment approaches, but it should also allow for interpretation of data within the context of either model. In other words, the framework should serve as a “translation” of the concepts, nomenclature, and principles of one approach into their counterparts in the other. A brief discussion of one such framework, developed by Flanagan and her colleagues, is presented here (Flanagan et al., 2010, 2013). A variation of their framework is illustrated in Fig. 22.2 and represents an integration based on psychometric, neuropsychological, and Lurian perspectives.

The interpretive framework shown in Fig. 22.2 draws upon prior research and sources, most notably Dehn (2006), Fiorello et al. (2008), Fletcher-Janzen and Reynolds (2008), Miller (2007, 2010, 2013), and Strauss, Sherman, and Spreen (2006). In describing the manner in which Luria’s blocks, the neuropsychological domains, and CHC broad abilities may be linked to inform test interpretation and mutual understanding among assessment professionals, Flanagan and colleagues made four important observations. First, there is a hierarchical structure among the three theoretical conceptualizations. Second, the hierarchical structure parallels a continuum of interpretive complexity, spanning the broadest levels of cognitive functioning, where mental activities are “integrated,” to the narrowest level of cognitive functioning, where mental activity is reduced to more “discrete” abilities and processes (see far left side of Fig. 22.2). Third, all mental activity takes place within a given ecological and societal context and is heavily influenced by language as well as other factors external to the individual. As such, the large gray shaded area represents “language and ecological influences on learning and production,” which includes factors such as exposure to language, language status (English learner vs. English speaker), opportunity to learn, motivation and effort, and socioeconomic status. Fourth, administration of cognitive and neuropsychological tests is typically conducted in the schools (e.g., for students suspected of having a specific learning disability) when a student fails to respond as expected to quality instruction and intervention. Thus, the framework in Fig. 22.2 is a representation of cognitive constructs and neuropsychological processes that may be measured (when a student is referred because of learning difficulties) and the manner in which they relate to one another.

According to Flanagan et al. (2010), when a student has difficulty with classroom learning and fails to respond as expected to intervention, a school-based hypothesis-generation, testing, and interpretation process should be carried out. Conceptualization of any case may begin at the “integrated” level (i.e., top of Fig. 22.2).

Luria’s functional units are depicted at the top of Fig. 22.2 as overarching cognitive concepts (see Naglieri, 2012, for definitions of the Lurian blocks). The interaction between, and the interconnectedness among, the functional units are represented by the horizontal double-headed arrows in the figure. Because Luria’s functional units are primarily descriptive concepts designed to guide applied clinical evaluation practices, neuropsychologists have had considerable independence in the manner in which they align their assessments with these concepts (Flanagan et al.).

Although a few psychoeducational batteries have been developed to operationalize one or more of Luria’s functional units, for the most part, neuropsychologists have typically couched Luria’s blocks within clinical and neuropsychological domains. In doing so, the Lurian blocks have been transformed somewhat from overarching concepts to domains with more specificity (Flanagan et al., 2010). These domains are listed in the rectangles at the top of Fig. 22.2 with their corresponding Lurian block. For example, the neuropsychological domains of attention, sensory-motor, and speed (and efficiency) correspond to Block 1; visual–spatial, auditory–verbal, and memory (and learning) correspond to Block 2; and executive functioning, learning (and memory), and efficiency (and speed) correspond to Block 3. Noteworthy is the fact that the memory and learning domain spans Blocks 2 and 3, and its placement and use of parentheses are intended to convey that memory may be primarily associated with Block 2 (simultaneous/successive) whereas the learning component of this domain is probably more closely associated with Block 3 (planning/metacognition). Likewise, speed and efficiency span Blocks 1 and 3, and its placement and use of parentheses denote that speed may be more associated with Block 1 (i.e., attention) whereas efficiency seems to be more associated with Block 3 (Flanagan et al., 2010).
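The correspondence between neuropsychological domains and Lurian blocks described above can be written out as a small lookup structure. This is only a sketch of the mapping as stated in the text; the tuple layout (emphasized component first, parenthesized component second) and the function names are assumptions made for illustration, and Fig. 22.2 itself is not reproduced here.

```python
# Each domain is (emphasized component, parenthesized component or None),
# following the wording in the text, e.g. "speed (and efficiency)" in Block 1.
BLOCK_DOMAINS = {
    1: [("attention", None), ("sensory-motor", None), ("speed", "efficiency")],
    2: [("visual-spatial", None), ("auditory-verbal", None),
        ("memory", "learning")],
    3: [("executive functioning", None), ("learning", "memory"),
        ("efficiency", "speed")],
}


def primary_blocks(component):
    """Return the block(s) in which `component` is the emphasized term."""
    return [
        block
        for block, domains in BLOCK_DOMAINS.items()
        for emphasized, _ in domains
        if emphasized == component
    ]


def spanning_domains():
    """Return two-component domains that appear in more than one block."""
    blocks_by_domain = {}
    for block, domains in BLOCK_DOMAINS.items():
        for emphasized, secondary in domains:
            if secondary is not None:
                key = frozenset((emphasized, secondary))
                blocks_by_domain.setdefault(key, []).append(block)
    return {
        tuple(sorted(key)): blocks
        for key, blocks in blocks_by_domain.items()
        if len(blocks) > 1
    }


if __name__ == "__main__":
    print(primary_blocks("memory"))
    print(primary_blocks("efficiency"))
    print(spanning_domains())
```

Keeping the emphasized and parenthesized components separate preserves the distinction the text draws: memory is primarily associated with Block 2 and learning with Block 3, while speed is primarily associated with Block 1 and efficiency with Block 3.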

Perhaps the most critical aspect of Flanagan et al.’s (2010) integrative framework is the distinction between functioning at the neuropsychological domain level and functioning at the broad CHC level. As compared to the neuropsychological domains, CHC theory allows for greater specificity of cognitive constructs. Because of structural differences in the conceptualization of neuropsychological domains and CHC broad abilities vis-à-vis factorial complexity, it is not possible to provide a precise, one-to-one correspondence between these conceptual levels. This is neither a problem nor an obstacle, but simply the reality of differences in perspective between these two lines of inquiry.

As compared to the neuropsychological domains, CHC constructs within the psychometric tradition tend to be relatively distinct because the intent is to measure a theoretical construct as purely and independently as possible. This is not to say, however, that the psychometric tradition has completely ignored shared task characteristics in favor of a focus on precision in measuring a single theoretical construct. For example, Kaufman provided a “shared characteristic” (or “Demand Analysis;” discussed later in this chapter) approach to individual test performance for several intelligence tests including the KABC-II and the various Wechsler Scales (Kaufman, 1979; see also McCloskey, 2009; McGrew & Flanagan, 1998; Sattler, 1988). This practice has often provided insight into the underlying cause(s) of learning difficulties, and astute practitioners continue to make use of it. Despite the fact that standardized, norm-referenced tests of CHC abilities were designed primarily to provide information about relatively discrete theoretical constructs, performance on these tests can still be viewed within the context of the broader neuropsychological domains. That is, when evaluated within the context of an entire battery, characteristics that are shared among groups of tests on which a student performed either high or low, for example, often provide the type of information necessary to assist in further understanding the nature of an individual’s underlying cognitive function or dysfunction, conceptualized as neuropsychological domains, such as executive functioning (Flanagan et al., 2010).

The double-headed arrows between neuropsychological domains and CHC abilities in Fig. 22.2 demonstrate that the relationship between these constructs is bidirectional. That is, one can conceive of the neuropsychological domains as global entities that impact performance on various CHC ability measures, just as one can conceive of a particular measure of a specific CHC ability as involving aspects of more than one neuropsychological domain. For example, as will be discussed in the next section, the broad CHC abilities of Gf, Gsm, Glr, and Gs, while conceived of as relatively distinct in the CHC literature, together may reveal information about executive functioning. That is, to gain an understanding of neuropsychological domains, it is likely necessary to evaluate performance across different CHC domains.

Flanagan et al.’s (2010) conceptualization of the relations between the neuropsychological domains and the CHC broad abilities is presented next. For the purpose of parsimony, the neuropsychological domains are grouped according to their relationship with the Lurian blocks, and thus, these domains are discussed as clusters rather than separately.

Correspondence Between the Neuropsychological Domains and CHC Broad Abilities

According to Flanagan et al. (2010), measures of at least six CHC broad abilities involve processes associated with the Attention/Sensory-Motor/Speed (and Efficiency) neuropsychological cluster, including Psychomotor Abilities (Gp), Tactile Abilities (Gh), Kinesthetic Abilities (Gk), Decision/Reaction Time or Speed (Gt),[Footnote 1] Processing Speed (Gs), and Olfactory Abilities (Go).[Footnote 2] Gp involves the ability to perform body movements with precision, coordination, or strength. Gh involves the sensory receptors of the tactile (touch) system, such as the ability to detect and make fine discriminations of pressure on the surface of the skin. Gk includes abilities that depend on sensory receptors that detect bodily position, weight, or movement of the muscles, tendons, and joints. Because Gk includes sensitivity in the detection, awareness, or movement of the body or body parts and the ability to recognize a path the body previously explored without the aid of visual input (e.g., blindfolded), it may involve some visual–spatial process, but the input remains sensory-based and thus better aligned with the sensory-motor domain. Gt involves the ability to react and/or make decisions quickly in response to simple stimuli, typically measured by chronometric measures of reaction time or inspection time. Gs is the ability to automatically and fluently perform relatively easy or overlearned cognitive tasks, especially when high mental efficiency is required. As measured by current cognitive batteries (e.g., WISC-IV Coding, Symbol Search, and Cancellation), Gs seems to capture the essence of both speed and efficiency, which is why there are double-headed arrows from Gs to Block 1 (where Speed is emphasized) and Block 3 (where Efficiency is emphasized) in Fig. 22.2. Go involves abilities that depend on sensory receptors of the main olfactory system (nasal chambers). Many CHC abilities associated with the Attention/Sensory-Motor/Speed (and Efficiency) cluster are measured by neuropsychological tests (e.g., NEPSY-II, D-KEFS, Dean-Woodcock Neuropsychological Battery [DWNB; Dean & Woodcock, 2003]; Flanagan et al., 2010).

Prior research suggests that virtually all measures of broad CHC abilities are associated with the visual–spatial/auditory–verbal/memory (and learning) neuropsychological cluster. That is, the vast majority of tasks on cognitive and neuropsychological batteries require either visual–spatial or auditory–verbal input. Apart from tests that relate more to discrete sensory-motor functioning and that utilize sensory input along the kinesthetic, tactile, or olfactory systems, all other tests will necessarily rely on either visual–spatial or auditory–verbal stimuli. Certainly, visual (Gv) and auditory (Ga) processing are measured well on neuropsychological and cognitive instruments. Furthermore, tests of Short-Term Memory (Gsm) and Long-Term Storage and Retrieval (Glr) typically rely on visual (e.g., pictures) or verbal (digits or words) information for input. Tasks that involve reasoning (Gf), stores of acquired knowledge (e.g., Gc, Gkn), and even speed (Gs) also use visual–spatial and/or auditory–verbal channels for input. Furthermore, it is likely that such input will be processed in one of two possible ways, simultaneously or successively (Luria, 1973; Naglieri, 2005, 2012).

Finally, research suggests that the Executive Functioning/Learning (and Memory)/Efficiency (and Speed) neuropsychological cluster is associated with eight broad CHC abilities, including Fluid Reasoning (Gf; e.g., planning), Crystallized Intelligence (Gc; e.g., concept formation and generation), General (Domain-Specific) Knowledge Ability (Gkn), Quantitative Knowledge (Gq), Broad Reading and Writing Ability (Grw), Processing Speed (Gs; e.g., focus/selected attention), Short-Term Memory (Gsm; e.g., working memory), and Long-Term Storage and Retrieval (Glr; e.g., retrieval fluency). Gf generally involves the ability to solve novel problems using inductive, deductive, and/or quantitative reasoning and, therefore, is most closely associated with executive functioning. Gc represents one’s stores of acquired knowledge (e.g., vocabulary, general information) or “learned” information and is entirely dependent on language, the ability that Luria believed was necessary to mediate all aspects of learning. In addition, Domain-Specific Knowledge (Gkn), together with knowledge of Reading/Writing (Grw) and Math (Gq), reflects the learning component of “memory and learning.” Therefore, Flanagan and colleagues contended that Gc, Gkn, Grw, and Gq are related to this neuropsychological cluster. Gsm, especially working memory, and Glr, especially the retrieval fluency abilities, are often conceived of as executive functions and involve planning and metacognition.

As may be seen in Fig. 22.2, Flanagan et al. (2010) have placed the CHC narrow abilities at the discrete end of the integrated-discrete continuum. It is noteworthy that narrow ability deficits tend to be more amenable to remediation, accommodation, or compensatory strategy interventions as compared to broader and more overarching abilities. For example, poor memory span, a narrow ability subsumed by the broad ability, Gsm, can often be compensated for effectively via the use of strategies such as writing things down or recording them in some manner for later reference. Likewise, it is possible to train a phonetic coding deficit (a narrow Ga ability) to the point where it becomes a skill. In contrast, when test performance suggests more pervasive dysfunction, as may be indicated by deficits in one or more neuropsychological domains, for example, it is more likely that intervention will need to be broader, more intensive, and long term, perhaps focusing on the type of instruction being provided to the student and how the curriculum ought to be modified and delivered to improve the student’s learning (Fiorello et al., 2008; Flanagan, Alfonso, & Mascolo, 2011; Mascolo, Flanagan, & Alfonso, in press; see also Meltzer, 2012). Therefore, knowing the areas that are problematic for the individual should guide the planning, selection, and tailoring of interventions (Mascolo et al., in press).

Accurate interpretation of test performance is needed if corresponding educational strategies and interventions are to lead to positive outcomes for the individual. Figure 22.2 includes four types of interpretation. It is likely that practitioners will rely most on the types of interpretation that are grounded in the theories with which they are most familiar (e.g., CHC, Luria).

Type 1 interpretation: Neuropsychological processing interpretation. This level of interpretation was referred to above. Specifically, when subtests are organized according to those that reflect weaknesses or deficits and those that reflect average or better ability, the neuropsychological domains associated with the tests in each grouping may be analyzed to determine if any particular neuropsychological domain or Lurian block is associated with one group of subtests and not (to a substantial degree) the other. Analyzing a student’s performance at this more integrated level may help practitioners explain why some seemingly uncorrelated subtests are related in the context of the individual’s brain–behavior functioning.

For example, if an individual exhibits weaknesses on the WISC-IV Matrix Reasoning, Digit Span, and Cancellation subtests, yet average or above average performance on all other subtests, it may be hypothesized that the weaknesses occur because these tasks, which involve Executive Functioning (e.g., Matrix Reasoning), Learning and Memory (e.g., Digit Span), and Efficiency and Speed (e.g., Cancellation), all relate to the frontal-subcortical circuits in Luria’s Block 3. Therefore, test performance suggests that the individual has difficulty with planning, organizing, and carrying out tasks, an interpretation that should be supported by ecologically valid evidence (e.g., specific activities involving planning and organization are extremely difficult for the individual and often cannot be accomplished without support). In addition, possible frontal-subcortical dysfunction or, more specifically, damage or dysfunction in the dorsolateral prefrontal circuit may be inferred from the test findings (see Miller, 2007), but only within the context of case history and in the presence of converging data sources. However, if the individual demonstrated weaknesses in Digit Span and Cancellation only, and therefore the deficits were not entirely representative of Block 3, for example, interpretation according to specific neuropsychological domains involved in Digit Span and Cancellation task performance may be more informative (e.g., individual has difficulty with Attention).

Moving to the more discrete end of the integrated-discrete interpretation continuum in Fig. 22.2, information about more specific abilities and processes may be obtained when evaluating subtests that were grouped according to factor analysis results. For example, most cognitive assessment batteries group subtests into broad CHC ability domains or composites based on the results of CHC-driven confirmatory factor analysis (Keith & Reynolds, 2012).

Type 2 interpretation: Broad CHC ability interpretation. This type of interpretation emphasizes broad ability constructs (e.g., Gv) over narrow ability and processing constructs (e.g., Visualization [Vz], Visual Memory [MV]). Broad abilities are represented by at least two qualitatively different indicators (subtests) of the construct. For example, in Fig. 22.2, the broad ability of Gf is represented by two subtests from the same battery (i.e., WJ III NU COG), each of which measures a qualitatively different aspect of Gf. These subtests are Analysis–Synthesis (a measure of RG) and Concept Formation (a measure of I). Interpretation of Gf (referred to as “Type 2 Interpretation” in Fig. 22.2) may be made when two conditions are met: (a) two or more qualitatively different narrow ability or processing indicators (subtests) of Gf are used to represent the broad ability; and (b) the broad ability composite (Gf in this example) is considered cohesive, suggesting that it is a good summary of the theoretically related abilities that comprise it.

As may be seen in Fig. 22.2, the WJ III NU COG contains two qualitatively different indicators of Gf. When the difference between WJ III NU COG subtest standard scores is not statistically significant, then the WJ III NU COG Gf composite is considered cohesive and may be interpreted as a reliable and valid estimate of this broad ability (see “Type 2 interpretation” in Fig. 22.2). However, if the difference between these subtest standard scores is statistically significant and uncommon in the general population, then the Gf composite is considered noncohesive and, therefore, should not be interpreted as a good summary of the abilities that comprise it. At this point in the interpretation process, a judgment regarding whether or not follow-up assessment is necessary should be made. For example, if the lower of the two scores in the Gf composite was indicative of a weakness or deficit and the higher score was suggestive of at least average ability, then it would make sense to follow up on the lower score via a subtest that measures the same narrow ability as the one underlying the subtest with the lower score.

Suppose the lower score in a two-subtest composite was 105 (63rd percentile) and the composite was considered noncohesive. It seems unnecessary to follow up on the lower score in the composite, as this score does not suggest any type of weakness or dysfunction. Likewise, the higher score in the composite, in this example, represents a normative strength (e.g., standard score of 120; 91st percentile). Therefore, even though the composite is not a good summary of the theoretically related abilities that comprise it, performance ranges from average to well above average in the broad ability area, suggesting no need for follow-up. Alternatively, suppose the lower score in a two-subtest composite was 85 (16th percentile on the Concept Formation subtest) and the higher score was 100 (50th percentile on the Analysis–Synthesis subtest). In this example, regardless of whether or not the composite is cohesive, there is certainly a need to follow up on the lower score with another measure of Induction because the score of 85 is suggestive of a weakness in Induction. If another measure of Induction results in average or better performance, then Type 2 interpretation ensues, and a broad ability cross-battery composite is calculated using the scores from Analysis–Synthesis and the second test of Induction (Flanagan et al., 2013). If the second measure of Induction suggests below average performance, then Type 3 interpretation is necessary.
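The percentile ranks and the follow-up judgment in these two examples can be sketched in a few lines of Python. This is only an illustration, not the procedure used by any published scoring software: it assumes standard scores are normally distributed with a mean of 100 and a standard deviation of 15, so the percentiles are normal-curve approximations that may differ by a point or so from a battery's norm tables. The function names and the cutoff of 85 (treated here as "at or below") are assumptions made for the sketch.

```python
from statistics import NormalDist

# Assumption: standard scores follow a normal curve with mean 100, SD 15.
STANDARD_SCORE_METRIC = NormalDist(mu=100, sigma=15)


def percentile_rank(standard_score: float) -> int:
    """Approximate percentile rank of a standard score under the normal model."""
    return round(STANDARD_SCORE_METRIC.cdf(standard_score) * 100)


def needs_follow_up(lower_score: float, weakness_cutoff: float = 85) -> bool:
    """Hypothetical rule: follow up when the lower subtest score in a
    composite is at or below the cutoff for a possible normative weakness."""
    return lower_score <= weakness_cutoff


# The two scenarios from the text: (lower score, higher score).
for lower, higher in [(105, 120), (85, 100)]:
    print(
        f"lower={lower} (PR {percentile_rank(lower)}), "
        f"higher={higher} (PR {percentile_rank(higher)}), "
        f"follow up: {needs_follow_up(lower)}"
    )
```

In the first scenario the lower score is well within the average range, so the sketch reports no follow-up even if the composite is noncohesive. In the second, the lower score of 85 triggers follow-up regardless of cohesion, mirroring the reasoning above.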

Type 3 interpretation: Narrow CHC ability interpretation (XBA). This type of interpretation highlights a specific situation wherein XBA data are often considered. For example, suppose that the WJ III NU COG Gf composite (represented in Fig. 22.2) was noncohesive and the Analysis–Synthesis standard score was greater than 100 and significantly higher than the Concept Formation standard score, which was below 85 and suggestive of a normative weakness or deficit. Many practitioners would opt to follow up on the lower score and assess the narrow ability of Induction by administering another measure of Induction, following the cross-battery guiding principles (see Table 22.2). Because the WJ III NU COG does not contain another measure of Induction, the practitioner must select a subtest from another battery. In the example provided in Fig. 22.2, the practitioner administered the D-KEFS Free-Sorting subtest. Now the practitioner has three measures of Gf, two of which measure Induction and one that measures General Sequential Reasoning. These three subtest scores may be analyzed via XBA software[Footnote 3] to determine the best way to interpret them. When the two narrow ability indicators of Induction form a cohesive composite, then the inductive reasoning cross-battery narrow ability composite is calculated and interpreted. In this example, the narrow ability of Induction would be interpreted as a weakness or deficit since both scores fell below 85.

Note that when two tests of Induction differ significantly from one another (i.e., they do not form a cohesive composite), a qualitative analysis of task demands and task characteristics is necessary to generate hypotheses regarding the reason for this unexpected finding. This type of qualitative analysis is labeled “Type 4 interpretation” in Fig. 22.2 and is discussed later.
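The branching among Type 2, Type 3, and Type 4 interpretation after a follow-up measure of Induction has been administered can be summarized as a small decision function. The sketch below is an illustration under stated assumptions rather than the logic of the XBA software: the function name, the cutoff of 85 (treated as "at or below"), and the order in which the conditions are checked are all assumptions, and whether two scores form a cohesive composite is taken as an input because the statistical criteria for cohesion are not reproduced here.

```python
def next_interpretation(
    follow_up_induction_score: float,
    induction_scores_cohesive: bool,
    weakness_cutoff: float = 85,
) -> str:
    """Hypothetical routing after a low Induction score has been followed up.

    follow_up_induction_score: standard score on the second Induction measure.
    induction_scores_cohesive: True when the two Induction scores do not
        differ significantly (supplied by the practitioner or software).
    """
    if follow_up_induction_score > weakness_cutoff:
        # Second measure is average or better: broad Gf cross-battery composite.
        return "Type 2: broad CHC ability interpretation"
    if induction_scores_cohesive:
        # Both Induction scores are low and consistent: narrow ability composite.
        return "Type 3: narrow CHC ability interpretation"
    # Two measures of the same narrow ability disagree unexpectedly.
    return "Type 4: analysis of task demands and task characteristics"


print(next_interpretation(95, induction_scores_cohesive=False))
print(next_interpretation(80, induction_scores_cohesive=True))
print(next_interpretation(75, induction_scores_cohesive=False))
```

Making the cutoff and the cohesion result explicit parameters keeps the clinical judgments visible instead of burying them in the code.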

Quantitative (Type 2 and Type 3) Evaluation of Executive Functions via the XBA Approach

Prior to explaining Type 4 interpretation, it is important to realize that broad and narrow Type 2 and Type 3 interpretations, respectively, are relevant to understanding executive functions from a psychometric or quantitative perspective. According to Miller (2007), information about various executive functions may be derived from psychometric tests. For example, tests that measure working memory capacity; concept formation and generation; planning, reasoning, and problem solving; retrieval fluency; and attention reveal information about executive functions. These constructs correspond to broad and narrow CHC abilities (see Fig. 22.3). For example, working memory capacity is a narrow ability subsumed by the broad Gsm ability in CHC theory. There are many popular batteries that include subtests that measure working memory capacity, such as the Letter–Number Sequencing subtest of the Wechsler Adult Intelligence Scale, fourth edition (WAIS-IV; Wechsler, 2008) and the SB5 Block Span testlet (see Fig. 22.3). Concept formation and generation appears to correspond quite well to Gc-type tasks, particularly those that require an individual to reason (Gf) with verbal information. Many Gc tests involve the ability to reason, such as the D-KEFS Twenty Questions subtest. Therefore, these types of tests appear to require a Gc/Gf blend of abilities, as indicated in Fig. 22.3. Planning, reasoning, and problem solving corresponds to Gf; retrieval fluency corresponds to Glr; and Attention (particularly sustained attention) corresponds to Gs.

As may be seen in Fig. 22.3, there are three narrow abilities that are subsumed by Gf, namely, Induction (I), General Sequential Reasoning or Deduction (RG), and Quantitative Reasoning (RQ), the latter of which involves reasoning both inductively and deductively with numbers. Likewise, there are four and three narrow abilities subsumed by Glr and Gs in Fig. 22.3, respectively. Note that only the Glr and Gs narrow abilities that are most relevant to understanding specific executive functions are included in Fig. 22.3 (see Fig. 22.1 for the remaining narrow abilities that make up these domains). Table 22.3 provides definitions of all the terms that are included in Fig. 22.3.

Glr comprises narrow abilities that can be divided into two categories: learning efficiency and retrieval fluency (Schneider & McGrew, 2012). The latter category of retrieval fluency is considered an executive function (e.g., Miller, 2007) and can be measured by verbal tasks that require the rapid retrieval of information, such as naming as many animals as one can think of as quickly as possible or naming as many words that begin with the letter “r” as quickly as possible. The narrow Gs abilities included in Fig. 22.3 all involve sustained attention. Typical Gs tasks on cognitive batteries require the individual to do simple clerical-type tasks quickly for a prolonged period of time, usually 3 minutes. Table 22.4 includes the subtests of several cognitive and neuropsychological batteries that measure planning, reasoning, and problem solving (Gf); concept formation and generation (Gc/Gf); working memory capacity (Gsm); retrieval fluency (Glr); and attention (Gs), allowing for the derivation of information about executive functions in the cognition domain.

The bottom portion of Fig. 22.3 includes five horizontal arrows, each one representing an executive function (Miller, 2007). Unlike the executive functions that may be inferred from tests measuring the abilities listed in the top portion of this figure, the executive functions listed in the bottom portion do not correspond well to any particular CHC ability. For example, in order to derive information about how an individual is able to modify his or her performance based on feedback, one needs to observe the individual perform many tasks, not only in a one-to-one standardized testing situation but in multiple settings (e.g., the classroom, at home). Therefore, in order to obtain information about the executive functions listed in the bottom portion of Fig. 22.3, it is necessary to conduct a qualitative evaluation, which is discussed in the next section of this chapter (i.e., Type 4 Interpretation).

To conduct a comprehensive assessment of the executive functions via measurement of the CHC abilities listed in the top half of Fig. 22.3, it is necessary to cross batteries for the following reasons. First, as may be seen in Table 22.4, the only batteries that measure aspects of all the areas listed in the top portion of Fig. 22.3 are the WJ III NU COG and DAS-II. Therefore, when using any cognitive or neuropsychological battery (in Table 22.4) other than the WJ III NU COG and DAS-II, there is a need to supplement the battery with subtests from another battery to measure all five CHC abilities (in the top portion of Fig. 22.3). Second, when administering traditional intelligence batteries, such as the Wechsler Scales, the examiner often serves as the “executive control board” during testing because she/he tells the examinee what to do and how to do it via detailed standardized test directions (Feifer & Della Toffalo, 2007, p. 17). As such, intelligence batteries, including the WJ III NU COG, are often not sensitive to executive function difficulties and, therefore, will need to be supplemented with neuropsychological subtests in certain areas (e.g., reasoning), to more accurately understand an individual’s executive control capacities. Nevertheless, it is important to understand that no set of directions on intelligence tests can completely eliminate the need for the examinee to use executive functions, such as basic self-regulation cues to engage in, process, and respond to test items (McCloskey et al., 2009). Third, following the administration of any battery, unexpected results are often present and hypotheses are generated and tested to explain the reason for the initial pattern of results. Testing hypotheses almost always requires the examiner to administer subtests from other batteries, as single batteries do not contain all the necessary subtests for follow-up assessments (see Flanagan et al., 2013, for a discussion). In cases in which it is necessary to supplement a battery or test hypotheses about aberrant test performance, following the XBA guiding principles and procedures (and using the XBA DMIA v2.0 software) will ensure that the results are interpreted in a psychometrically and theoretically defensible manner.
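The first reason for crossing batteries amounts to a coverage check: compare the five areas in the top portion of Fig. 22.3 with the areas a given battery measures, and supplement whatever is missing. A minimal sketch of that check follows. The five areas are taken from the text, but the battery names and their coverage are purely hypothetical placeholders, not entries from Table 22.4.

```python
# The five quantitatively measurable areas named in the text.
REQUIRED_AREAS = {
    "Gf: planning, reasoning, and problem solving",
    "Gc/Gf: concept formation and generation",
    "Gsm: working memory capacity",
    "Glr: retrieval fluency",
    "Gs: attention",
}

# Hypothetical coverage for illustration only (NOT data from Table 22.4).
HYPOTHETICAL_COVERAGE = {
    "Battery A": {
        "Gf: planning, reasoning, and problem solving",
        "Gsm: working memory capacity",
        "Gs: attention",
    },
    "Battery B": set(REQUIRED_AREAS),
}


def areas_to_supplement(battery: str) -> list[str]:
    """Return the required areas that the named battery does not measure."""
    return sorted(REQUIRED_AREAS - HYPOTHETICAL_COVERAGE[battery])


for name in HYPOTHETICAL_COVERAGE:
    missing = areas_to_supplement(name)
    print(name, "->", missing if missing else "no supplementation needed")
```

In practice the coverage sets would be filled in from the subtest classifications for the batteries actually administered.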

Type 4 interpretation: Variation in task demands and task characteristics. Interpreting subtest scores representing narrow abilities often requires additional information from the practitioner to understand unexpected variation in performance. The XBA approach includes qualitative evaluations of cognitive and neuropsychological processes at the Type 4 level of interpretation to address how differences in task characteristics, such as input stimuli and output responses, and processing demands might affect an individual’s performance on a particular subtest.

The focus on qualitative aspects of evaluations has been a common practice in neuropsychological assessment and has recently been reemphasized in cognitive assessment methods. The emphasis on clinical observation and qualitative behaviors is fundamental to the processing approach in neuropsychological assessment, which uses a flexible battery approach to gather both quantitative and qualitative data (Kaplan, 1988; Miller, 2007; Semrud-Clikeman, Wilkinson, & Wellington, 2005). Current models of school neuropsychology assessment also have foundations in the process assessment approach and stress the importance of qualitative observations to ensure ecological validity and guide individualized interventions (Hale & Fiorello, 2004; Miller, 2007). Additionally, the integration of qualitative assessment methods in XBA proposed by Flanagan et al. (2010, 2013), and elaborated on here, illustrates the benefits of assessing both quantitative and qualitative information in cognitive assessment practice.

The inclusion of qualitative information in intellectual and cognitive assessment is also evident in the WISC-IV Integrated (Wechsler, 2004). The tasks of the WISC-IV Integrated were designed from a process-oriented approach to help practitioners utilize qualitative assessment methods (McCloskey, 2009). Specifically, McCloskey notes how the process approach has influenced three perspectives in the use and interpretation of the WISC-IV Integrated, “[1] WISC-IV subtests are complex tasks, with each one requiring the use of multiple cognitive capacities for successful performance; [2] variations in input, processing, and/or output demands can greatly affect performance on tasks involving identical or similar content; and [3] careful, systematic observation of task performance greatly enhances the understanding of task outcome” (2009, p. 310).

The emphasis on qualitative assessment originated from the belief that the processes or strategies that an examinee uses during a task are as clinically relevant as the quantitative score (outcome) (Miller, 2007; Semrud-Clikeman et al., 2005). A major tenet in the process approach is that although examinees may obtain the same score on a task, they may be utilizing different strategies and/or neuropsychological processes to perform the task (Kaplan, 1988; Semrud-Clikeman et al., 2005). The analysis of qualitative information derived from observing task performance can provide valuable insight into potential cognitive or neuropsychological strengths and deficits and provide useful information to guide individualized interventions (Hale & Fiorello, 2004). For example, qualitative observations of two examinees who performed poorly on the D-KEFS Tower task may indicate different problems in executive functioning. The first examinee took several minutes before initiating the task, was slow in moving the disks, and made several rule violations, while the other examinee rushed into the task and used a trial-and-error approach. Both examinees appear to have difficulty with planning and problem solving; however, the impulsive examinee might have difficulty due to poor response inhibition, whereas the slower examinee may have difficulty with decision making, rule learning, and establishing and maintaining an instructional set (Delis et al., 2001).

As shown in Fig. 22.3, the XBA approach to assessing executive functions highlights five aspects of executive functioning that can be inferred through qualitative evaluations of an examinee’s test performance: use of feedback, response inhibition, motor programming, cognitive set shifting, and different aspects of attention. Based on task characteristics and demands analysis, Table 22.5 illustrates qualitative aspects of executive functions on subtests of common cognitive and neuropsychological batteries. It should be noted that some neuropsychological batteries include quantitative measures of response inhibition (e.g., NEPSY-II Statue); however, since current intelligence and cognitive batteries do not directly assess response inhibition, it is included in the qualitative section, as it is an observable behavior. The qualitative assessment of these executive functions is not limited to the specific subtest classifications in Table 22.5 since examinees may be utilizing (or failing to utilize) these executive functions depending upon which strategy they implement during a task. For example, although Matrix Reasoning on the Wechsler Scales is not designed to assess response inhibition, if an examiner notices the individual is responding impulsively and making errors based on visual stimuli that are similar to the correct response, the practitioner may infer that the individual has difficulty inhibiting responses to distracting stimuli if this is consistent with other behavioral observations.

Additionally, the executive functions that define the qualitative portion of Fig. 22.3 do not comprise an exhaustive list, but rather include the executive functions most commonly assessed in neuropsychological evaluations (not necessarily in the assessment of intelligence, using traditional intelligence batteries) (Miller, 2007). Since there is a lack of consensus among disciplines regarding the classifications of the processes that comprise executive functions, different models of executive functions may include other aspects of self-regulation, goal-directed behavior, and organization, not mentioned in or inferred from measurement of the abilities listed in Fig. 22.3. When attempting to derive information about executive functions from psychometric tests following the XBA approach, it is recommended that practitioners use the model in Fig. 22.3 as a framework and add to it with additional measures of executive functions, depending on the reason for referral and presenting behaviors of the examinee.

The previous discussion of a Type 3 interpretation described a scenario where an examinee performed in the average range (SS = 100) on the WJ III NU Analysis–Synthesis (AS) subtest yet demonstrated a (normative) weakness (SS = 82) on WJ III NU Concept Formation (CF). To follow up on the low CF score, the examiner chose to administer the D-KEFS Free-Sorting task, an additional measure of induction (Gf: I). If the scores on these two measures of induction differ significantly from one another (an unexpected finding), then a Type 4 interpretation is warranted to explain the variation in performance on two measures of the same narrow ability. The following example illustrates Type 4 interpretation.

Sara, a fifth-grade student, was referred for an evaluation by her teacher because she has difficulty functioning independently in the classroom despite behavioral interventions. Sara’s teacher reports that she has difficulty following directions and often is the last student to begin an assigned task. Sara is also constantly asking her teacher for help or to check if an answer is correct. Although Sara’s previous teachers expressed similar concerns, Sara’s difficulties have become more problematic with the independent structure and demands of the fifth-grade classroom. Additionally, Sara’s teacher is concerned about her poor written responses on essay questions, which sometimes appear “off” and often “don’t make sense.”

After administering the WJ III NU COG Gf subtests and following up with the D-KEFS Free-Sorting task, it was clear that Sara’s Free-Sorting Description Score (Sc.S = 5) was significantly lower than her score on the CF task of induction. Because this finding was unexpected, the examiner conducted a demand analysis to gather additional information about the variations in task characteristics and cognitive and neuropsychological demands specific to all three measures of Gf. This information is presented in Table 22.6 and the similarities and differences among these tasks are discussed below within the context of Sara’s performance.

As discussed in the Type 3 interpretation, Sara’s average performance on the WJ III NU Analysis–Synthesis (AS) task and poor performance on the WJ III NU Concept Formation (CF) and D-KEFS Free-Sorting tasks suggest that her ability to reason logically, using known rules (Gf: RG), is better than her ability to observe underlying principles or rules of a problem (Gf: I). When Sara was solving problems on the AS task, she was constantly looking to the key presented at the top of the stimulus easel and using her fingers to help guide her decisions about which colored box fit the answer. Therefore, it appears as though Sara’s ability to reason and apply rules is stronger when she is presented with a visual key that can be used as a reference during a task. However, on the CF task, Sara had a difficult time following the first few sets of instructions and relied on examiner feedback during the sample teaching item to gain understanding of the task directions. Although all three tasks include lengthy oral directions, the instructions presented in the CF task are particularly complicated and place greater demands on receptive language. Furthermore, Sara gave several answers on the CF task that required querying but was often able to obtain the correct answer after the query. Finally, Sara had difficulty starting the D-KEFS Free-Sorting task and took a long time between sorts. Although Sara’s ability to correctly sort the cards into groups was more consistent (Sc.S = 7) with her performance on the CF task, she had a hard time articulating and explaining how she was able to sort the cards (Sc.S = 5). Additionally, Sara often turned to the examiner to ask if she was correct and appeared disappointed when the examiner explained that she could not provide feedback.
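Because Sara’s scores are reported on two different metrics, it can help to place them on a common scale before comparing them. The following is a minimal sketch, assuming the conventional metrics of a mean of 100 and standard deviation of 15 for standard scores (SS) and a mean of 10 and standard deviation of 3 for scaled scores (Sc.S). The function name and the dictionary of scores are hypothetical, introduced only for illustration. The linear conversion ignores differences between the batteries' norm samples and does not replace the publishers' tables or any test of statistical significance.

```python
# Assumed score metrics (conventional values, not taken from the chapter's tables).
SCALED_MEAN, SCALED_SD = 10.0, 3.0        # scaled scores (Sc.S)
STANDARD_MEAN, STANDARD_SD = 100.0, 15.0  # standard scores (SS)


def scaled_to_standard(scaled_score: float) -> float:
    """Linearly re-express a scaled score on the standard-score metric."""
    # Each scaled-score point corresponds to STANDARD_SD / SCALED_SD standard-score points.
    points_per_scaled_point = STANDARD_SD / SCALED_SD
    return STANDARD_MEAN + (scaled_score - SCALED_MEAN) * points_per_scaled_point


# Sara's scores as reported in the text (hypothetical container for illustration).
sara_scores = {
    "WJ III NU Analysis-Synthesis (SS)": 100.0,
    "WJ III NU Concept Formation (SS)": 82.0,
    "D-KEFS Free-Sorting, correct sorts (Sc.S 7)": scaled_to_standard(7),
    "D-KEFS Free-Sorting, description (Sc.S 5)": scaled_to_standard(5),
}

for measure, standard_score in sara_scores.items():
    print(f"{measure}: {standard_score:.0f}")
```

Under these assumptions, a scaled score of 7 corresponds to a standard score of 85, which is close to Sara’s CF score of 82, whereas a scaled score of 5 corresponds to 75. This is in line with the observation that her sorting accuracy was more consistent with her CF performance than her description score was.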

The behavioral observations noted during task performance and the analysis of the cognitive and neuropsychological demands of each task allowed the examiner to develop the following hypothesis regarding Sara’s inconsistent scores within the Gf domain. Sara appears to have greater difficulty on reasoning and problem-solving tasks that involve concept formation and generation, such as the CF and Free-Sorting tasks. Furthermore, Sara’s difficulty generating and explaining multiple sorts may also indicate problems with cognitive flexibility, divergent thinking, and ideation fluency (Miller, 2007; Miller & Maricle, 2012). Additionally, these tasks place greater demands on receptive and expressive language and tap into Gc abilities, which were identified as another weakness for Sara based on her low Gc performance on the WJ III NU COG.

Sara’s slow performance during the Free-Sorting task also implies difficulty initiating problem-solving tasks and planning (Delis et al., 2001). This, along with Sara’s receptive and expressive language difficulties, may explain why Sara has difficulty starting tasks and following directions. Furthermore, Sara’s reliance on examiner feedback and visual keys during the WJ III NU tasks may signify problems with self-monitoring and explain why Sara often seeks feedback from her teacher. Overall, it appears that Sara’s inconsistent performance in Gf tasks may stem from problems with Gc (language abilities) as well as executive functions, particularly verbal reasoning, problem-solving initiation, self-monitoring, and concept formation and generation. Sara’s dependence on her teacher in the classroom is likely a compensatory strategy she has learned to help guide her through complex tasks. Interventions, such as teaching self-regulated strategy development (SRSD) to improve self-monitoring and self-revision, will allow Sara to learn how to function more independently in the classroom (De La Paz, 2007).

The previous example of a Type 4 interpretation demonstrated that it is often necessary to go beyond a strict quantitative interpretation of task performance and analyze the task characteristics of subtests as well as the student’s approach to performing those tasks to gain a better understanding of cognitive strengths and weaknesses. Many evaluations of students with learning difficulties require the integration of quantitative and qualitative data to understand a student’s cognitive capacities fully, including executive function capacities. Following is an example of a cross-battery assessment of executive functions that uses the WISC-IV as the core battery and integrates mainly Type 2 (quantitative) and Type 4 (qualitative) interpretation.

Highlights of a Wechsler-Based Cross-Battery Assessment of Executive Functions

Ben began middle school (seventh grade) in the Fall of 2011. Ben has been having significant difficulties academically for the first time. His science teacher reported that he has a hard time initiating projects independently and seldom completes in-class assignments on time. Ben reportedly relies on a classmate to help him with science projects and as a result, his teacher moved his seat in an attempt to get him to function more independently in the classroom. Reports from Ben’s other teachers suggest that he is often the last student to “find his place” and he frequently “holds up the class,” seemingly intentionally. Ben leaves important books and assignments in his locker often and, therefore, does not consistently complete homework. Although Ben reported that he studies for exams, his grades are poor, often as the result of careless errors (e.g., lack of attention to detail in math word problems) and incomplete or underdeveloped responses to open-ended questions. His teachers all agree that Ben knows more information than he is able to demonstrate on tests and quizzes. Overall, there is consensus among Ben’s teachers that, while Ben appears to be very bright, he lacks motivation and appears to exhibit attention-seeking behaviors (e.g., he jokes with his classmates that he is last to complete his work). Ben’s parents believe that he is having a hard time adjusting to his new school, including an increase in homework assignments and projects, and they are worried about his recent negative attitude toward school. An evaluation was requested to explore whether Ben’s learning difficulties are the result of an underlying learning disability, behavioral difficulties, or both.

As part of Ben’s comprehensive evaluation, the evaluator administered the WISC-IV and WIAT-III. The results of Ben’s performance on these batteries are found in Table 22.7. A quantitative analysis of Ben’s WISC-IV/WIAT-III scores indicates that his performance ranged from Average to Well Above Average (Type 2 Interpretation; see Footnote 4). Despite Ben’s poor academic performance in the seventh grade and the observations offered by Ben’s teachers, many practitioners would conclude that the difficulties Ben is experiencing in school are not related to any underlying cognitive deficits or dysfunction and therefore, they must be the result of the behavioral problems reported (e.g., attention-seeking behavior, lack of motivation). Prior to drawing such a conclusion, it is necessary to determine if the evaluator noticed any unusual approaches to solving problems during the evaluation or any unusual patterns of errors in task performance, for example (Type 4 Interpretation).

A qualitative analysis of Ben’s performance on the WISC-IV and WIAT-III subtests revealed several important observations. For example, the evaluator believed that Ben’s VCI and PRI may have underestimated his capacity to reason with verbal and visual-spatial information, respectively. On the Similarities subtest, the evaluator did not observe Ben reasoning. Specifically, Ben’s responses were immediate, indicating that the information requested was readily available to Ben (i.e., quickly retrieved from existing stores of general knowledge and lexical knowledge). When items became more difficult, Ben was quick to respond, “I don’t know” and did not take the time to “think” about a response. This same response style was evident on the Comprehension subtest. In addition, when items asked for “some advantages,” Ben seemed content with his initial response and when queried he stated, “That’s all I can think of.” Despite Ben’s Average (Comprehension) and Above Average (Similarities) performance on these subtests, his response style suggests difficulty cueing and directing the use of reasoning abilities as well as difficulty shifting mindset.

On the WISC-IV Picture Concepts subtest and WIAT-III Math Problem Solving subtest, Ben demonstrated inconsistencies in performance, with incorrect responses interspersed across test items, which is unusual given that items are arranged in order of increasing difficulty. This pattern of performance may suggest difficulty cueing the appropriate consideration of the cognitive demands of the task and the amount of mental effort required to effectively perform the task. Ben’s pattern of performance on Picture Concepts and Math Problem Solving may also suggest difficulty with monitoring performance and correcting errors. Likewise, on the Block Design subtest, Ben did not pay close attention to detail, especially on items that did not include the black lines on the stimulus card. Ben also appeared to give up easily on items and often said, “I can’t figure out that one.”

An examination of Ben’s pattern of errors on the Picture Concepts, Math Problem Solving, Coding, and Symbol Search subtests demonstrates that his errors were careless and not reflective of a lack of ability or knowledge, which is consistent with teacher reports. It appears that Ben may have difficulty monitoring his attention over a sustained period of time. His performance on the processing speed subtests, in particular, and perhaps also Block Design, suggests that Ben has difficulty cueing and directing the focusing of attention to visual details and task demands. Based on the evaluator’s qualitative analysis of Ben’s approach to tasks coupled with unusual patterns of errors on certain subtests, it was hypothesized that Ben has weaknesses in executive functions related to modulating and monitoring his performance. To test hypotheses specific to these executive functions, it was necessary to cross batteries.

The evaluator chose to test certain hypotheses about Ben’s executive functions using the WISC-IV Integrated, which is statistically linked to the WISC-IV (following guiding principle #5 of the XBA approach). The evaluator administered Similarities Multiple Choice (SIMC) and Comprehension Multiple Choice (COMC). The evaluator hypothesized that by altering the cueing and directing of open-ended inductive reasoning (Similarities and Comprehension) to the cueing and directing of the recognition of the effective application of inductive reasoning (SIMC, COMC), performance would improve. Ben’s performance on both SIMC and COMC was significantly higher than his performance on Similarities and Comprehension, respectively. These results suggest that when the demands of open-ended inductive reasoning are reduced to recognition of the effective application of inductive reasoning, Ben’s capacity for reasoning inductively improves significantly. Ben’s capacity for reasoning inductively is greater than that which he can demonstrate with an open-ended format, which is the format typically used for tests and quizzes in school. Furthermore, Ben is able to perform in the average range on structured tasks for which explicit instructions are given and that are administered in a one-to-one testing situation. However, when he is required to perform academic tasks involving reasoning in a more unstructured setting (e.g., middle school, homework environment, school exams), his performance is well below average compared to his same-grade peers. Therefore, Ben would benefit from the following interventions.

Ben’s teachers should provide verbal prompts and cues to assist him in the reasoning process when tasks require open-ended inductive reasoning. Ben’s teachers should use direct instruction in acquisition lessons (e.g., How do I use inductive reasoning to reach a conclusion?) with modeling and think-alouds to explicitly teach Ben how to use the skills. Ben’s teachers should gradually offer guided practice (e.g., guided questions list) to promote internalization of the cueing and directing of reasoning skills. Teachers may consider using graphic organizers to guide Ben in using inductive reasoning skills. Steps to reasoning inductively should be made accessible for Ben’s use until he has internalized the steps. Finally, Ben should be given multiple opportunities to extend his thinking about content (Marzano & Pickering, 1992).

To follow up on other hypotheses the evaluator had regarding Ben’s difficulties with executive functions, it was necessary to administer subtests from a battery that is more sensitive to identifying such difficulties. In addition, it was necessary to measure retrieval fluency—an executive function listed in the top portion of Fig. 22.3 that is not measured by the WISC-IV/WIAT-III/WISC-IV Integrated batteries. The evaluator reasoned that he could test his remaining hypotheses about the nature of Ben’s difficulties and assess retrieval fluency using subtests from only one additional battery (following XBA guiding principle #6)—the D-KEFS.

Based on Ben’s performance on the WISC-IV Picture Concepts subtest, it was hypothesized that Ben had difficulty cueing the appropriate consideration of the cognitive demands of a task and the amount of mental effort required to effectively perform the task as well as difficulty cueing and directing the monitoring of work and the correcting of errors. Ben’s performance on certain D-KEFS tasks supports this hypothesis. For example, Ben received a scaled score of 8 on the Free-Sorting task and a scaled score of 12 on the Sort Recognition Description Score Card Set 2. The difference between these scores is statistically significant. This result suggests that Ben has difficulty transferring knowledge into action in less structured situations in the face of intact concept formation skills, which may explain the difficulty he has completing projects in science class, as such projects are unstructured. It was also observed that Ben paid less attention to the perceptual aspects of the cards as compared to the verbal aspects on the Free-Sorting task, which is supportive of the hypothesis that Ben has difficulty cueing and directing the focusing of attention to visual details (as observed on the Block Design and processing speed subtests of the WISC-IV).