Abstract

Wireless mobile network optimization is a complex task that consists in achieving different design objectives such as spectral efficiency, energy efficiency, fairness, and quality of service. Radio resource allocation is responsible for managing the available resources in the radio access interface and, therefore, is an important tool for optimizing networks and achieving the designed objectives mentioned previously. However, in general all these network design objectives cannot be achieved at the same time by resource allocation strategies. In fact, different resource allocation strategies can be designed to maximize one objective in detriment of the other as well as to balance the objectives. In this chapter we deal with important trade-offs between contradicting objectives in modern wireless mobile networks: capacity versus fairness and capacity versus satisfaction. We present resource allocation strategies that can achieve static and adaptive performances when the previously mentioned trade-offs are considered. In order to design the resource allocation strategies we consider heuristics and utility-based solutions. System-level simulations show that the proposed techniques are powerful tools to the network operator, since they can decide in which trade-off point of the capacity-fairness or capacity-satisfaction planes they want to operate the system.

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

Keywords

- Spectral Efficiency

- Proportional Fair

- Transmission Time Interval

- Radio Resource Allocation

- Resource Reallocation

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

1 Introduction

The design of wireless mobile networks is driven by a multitude of objectives. As an example, among the requirements for 4th Generation(4G) given by International Mobile Telecommunications (IMT)-Advanced we can highlight maximum average cell spectral efficiency and cell border spectral efficiency in bits/s/Hz, maximum packet latency and minimum number of supported Voice over IP (VoIP) users [15]. In this case we can identify as design objectives spectral efficiency (average cell spectral efficiency), cell coverage (cell border spectral efficiency), Quality of Service (QoS) (packet latency), and user satisfaction or user capacity (number of supported VoIP users). Other objectives could be present in network design such as energy efficiency and fairness.

One of the most important tools for optimizing wireless mobile networks is Radio Resource Allocation (RRA). RRA is responsible for managing the available resources in the radio access interface such as frequency chunks, transmit power, and time slots. When the system bottleneck is in the radio access instead of the core network, efficient RRA can dictate the performance of the overall system.

However, in general all these network design objectives cannot be achieved at the same time by RRA strategies. A well-known case that illustrates this issue is the RRA strategies that aim at maximizing spectral efficiency. In order to maximize spectral efficiency, the system resources should be assigned to the users that can use them in the most efficient way in terms of b/s/Hz. These users are the ones that have better channel quality states. However, this RRA solution in general leads to reduced fairness and poor QoS provision to the other users that do not have the best channel quality states. Clearly, spectral efficiency is a contradicting objective with regard to both fairness and QoS provision.

Different RRA strategies can be designed to maximize one objective in detriment of another as well as to balance them. In this context, RRA strategies can be static or adaptive. By adaptive RRA strategies we mean solutions that can be configured to achieve different points in the trade-off between opposing objectives, whereas static strategies are able to achieve only a fixed point in the trade-off between the system objectives.

In order to conceive RRA solutions for the existing design objectives, many strategies can be followed. We highlight in this chapter the heuristic and utility-based approaches. As will be shown in the following sections, the heuristic design provides simple and quick solutions to the RRA problems, while the utility-based approach is a flexible and general tool for RRA design.

In this chapter we study important trade-offs in the downlink of modern wireless mobile networks and show adaptive RRA solutions based on the heuristic and utility-based approaches. The focus in this chapter is on Non-Real Time (NRT) services that have as main QoS metric throughput or average data rate . The remainder of this chapter is organized as follows. In Sect. 4.2 we review important objectives and trade-offs in wireless networks, whereas in Sects. 4.3 and 4.4 we present the heuristic and utility-based frameworks for conceiving RRA solutions, respectively. Then, in Sects. 4.5 and 4.6 we propose adaptive RRA strategies for the capacity versus fairness and capacity versus QoS trade-offs, respectively. Finally, in Sect. 4.7 we summarize this chapter with the main conclusions of the presented study.

2 Trade-Offs in Wireless Networks

Resource allocation for wireless mobile communications systems can have different objectives, such as the maximization of system capacity, cell coverage, user QoS (user satisfaction), fairness in the resource distribution, etc. Unfortunately, in general all these objectives cannot be achieved at the same time. Below we list some fundamental compromises that appear in wireless cellular networks:

-

Coverage Versus QoS: Due to propagation losses, the QoS of the users located in the cell edge is usually worse than the one perceived by the users that are close to the base station. A procedure used in the planning and dimensioning of cellular systems is to determine the cell radius depending on the required percentage of the users that should use the minimum allowed Modulation and Coding Scheme (MCS). The trade-off is also evident in this dimensioning procedure, because the higher the minimum QoS requirement, the smaller the cell coverage will be.

-

Capacity Versus Coverage: Excessive capacity can have a negative impact on the coverage of interference-limited systems. This is the case of Third Generation (3G) systems based on Code Division Multiple Access (CDMA), where the cells shrink when they become heavily loaded (cell breathing phenomenon) [10]. Another aspect is that base stations with high power provide good coverage, but also generate excessive interference to the neighbor cells, which can decrease the overall system capacity.

-

Fairness Versus Coverage: The random user location in the coverage area and the wireless channel variability cause differences in the channel quality perceived by the users. This quality variability is directly proportional to the cell coverage: the larger the cell size, the higher the variability. Normally, resource allocation algorithms take into account the Channel State Information(CSI) of the users. So, the higher the variability of the users’ CSI, the lower the fairness of the corresponding resource allocation.

-

Fairness Versus QoS: Since the wireless resources are limited, the QoS of the users cannot be improved indefinitely. If the QoS of few users is maximized, the others will feel the lack of resources. This imbalance is translated into a fairness decrease. On the other hand, if a high fairness is assured and consequently the users have more or less the same QoS, the maximum achievable QoS in this situation is lower.

-

Capacity Versus Fairness: This compromise is also known as the efficiency versus fairness trade-off. In order to maximize system capacity, the wireless resources must be allocated in the most efficient way possible. This is accomplished by using opportunistic resource allocation algorithms, which assign the resources to the users who have the best channel conditions with respect to these resources. As commented before, mobile cellular systems present a high variability on the channel quality experienced by the users. The use of opportunistic RRA in order to maximize capacity will inevitably concentrate the resources among the users in good propagation conditions, while the ones in worse channel conditions would starve. This situation is characterized by low fairness. On the other hand, if a high fairness is required, the system is forced to cope with the bad channel conditions of the worst users and allocate resources to them. Since this allocation is not efficient in the resources’ point-of-view, the overall system capacity will be degraded.

-

Capacity Versus QoS: This is also known as the capacity versus satisfaction trade-off. A clear compromise between system capacity and user QoS is the fact that the existence of more users in the system decreases the QoS per capita, because less resources would be available for each of the users. Furthermore, the use of opportunistic resource allocation in order to maximize capacity can degrade the QoS of the worst users, which decreases the total percentage of satisfied users in the system. On the other hand, system capacity is decreased if the users with bad channel conditions are contemplated in order to increase total user satisfaction.

Notice that the compromises described above are fundamental trade-offs found in mobile cellular systems and most of them are technology-independent. System design, the deployment of specific technologies and the use of suitable RRA techniques can help the network operators to decrease the gap between these opposing factors. If these compromises cannot be solved in a “win-win” approach, adaptive RRA strategies are still very useful at finding an appropriate trade-off between these objectives.

In this chapter, we are interested in studying and evaluating two of the aforementioned trade-offs: capacity versus fairness and capacity versus QoS.

3 Heuristic-Based Resource Allocation Framework

In general, the RRA problems that address the capacity versus fairness and capacity versus QoS trade-offs can be represented in a mathematical form as optimization problems. Basically, optimization problems are composed of an objective, constraints and variables to be optimized. The variable to be optimized in the RRA problems are the resources in mobile networks such as frequency chunks and transmit power. The objective of an optimization problem consists in the aspect of mobile networks that should be improved. Common objectives in RRA are the maximization of transmit data rate and minimization of transmit power. In this chapter we focus on the former objective. Finally, the constraints in optimization problems are restrictions imposed by mobile systems and users. Constraints are able to limit the search space of all possible solutions, i.e., a given RRA solution that leads to an improved objective is infeasible when it does not comply with the problem constraints. We call optimal solution the solution that best improves the objective of the optimization problem and obeys the problem constraints.

The RRA problems studied in this chapter assume that the variable to be optimized is the frequency resource assignment. As the frequency resources are discrete, the optimization problems to be solved belong to the class of combinatorial or integer optimization problems. Furthermore, the mathematical expressions that appear in the objective and constraints of the RRA optimization problems studied here are nonlinear functions of the optimization variable. The combination of combinatorial problems with nonlinear objective and constraints in general turns the task of finding the optimal solution or best RRA solution impractical. Often, the optimal solution can be found only by exhaustive search that enumerates all possible solutions and tests the attained objective in order to find the best one, or other techniques that are able to discard part of the search space but still have exponential-order worst case complexity [29].

In order to find good-enough RRA solutions with reduced computational effort we can use heuristic solutions. Heuristic solutions are simple solutions found by methods, techniques, or algorithms that are conceived based on experience and common sense. In general, the outputs of heuristic methods are suboptimal but acceptable solutions for practical deployments. These solutions are especially suitable for cases where the optimal solution is hard or impossible to obtain. In these cases, heuristic methods accelerate the problem-solving process and provide us accessible and simple solutions.

The problems to be solved in both capacity versus fairness and capacity versus QoS trade-offs have a common structure and are illustrated in Fig. 4.1. Each possible resource assignment or solution to the problem is represented by circles in this figure. Also, the objective to be pursued is to maximize the total data rate or spectral efficiency that is shown on the left-hand side of Fig. 4.1. Note that each possible solution has a different value for the spectral efficiency. Regarding the problem constraints, we have network specific constraints such as the multiple access constraints, and the QoS or fairness constraints that are directly related to the data service provided to the users by the network. In this figure we illustrate the space of all solutions and two inner spaces that represent the network specific and fairness or QoS constraints. Note that we are interested in the solutions that obey both set of constraints located in the intersection region (feasible region). Therefore, although “solution 1” is able to achieve higher spectral efficiency than “solution 2” in Fig. 4.1, we are interested in the latter solution since it is in accordance with both sets of constraints.

The heuristic framework to solve the presented problem consists in two parts: Unconstrained Maximization and Resource Reallocation. In the Unconstrained Maximization part we relax the fairness or QoS constraints and solve the problem in order to find the solution that obeys the network-specific constraints that leads to the maximum spectral efficiency. In general, due to the propagation properties of the wireless medium, only few users that are next to the transmit antennas get most of the system resources. Therefore, we expect that the fairness or QoS constraints are not met in this initial solution. This initial solution is illustrated by “Solution 1” in Fig. 4.2. In the Resource Reallocation part of the proposed framework, we have an iterative phase where the system resources assigned in the Unconstrained Maximization part are exchanged between the users in order to meet either fairness or QoS constraints. In Fig. 4.2, we assume that the final solution, “Solution 4”, is found after three iterations or resource reallocations. The reallocations in each iteration are represented by the dashed-line arrows where the intermediate solutions “Solution 2” and “Solution 3” are obtained after the first and second iterations. Note that the main idea in the reallocation procedure is that the loss in spectral efficiency after each iteration should be kept as minimum as possible. At the end of the proposed framework, we expect that the solution found by the proposed heuristic method achieves a spectral efficiency as close as possible to the optimal solution represented in Fig. 4.2 by “Optimal solution”.

4 Utility-Based Resource Allocation Framework

In communication networks, the benefit of the usage of certain resources, e.g., bandwidth and/or power, can be quantified by using utility theory. This theoretical tool can also be used to evaluate the degree to which a network satisfies service requirements of users’ applications, e.g., in terms of throughput and delay.

The general utility-based optimization problem considered in this work is formulated as:

where \(J\) is the total number of users in a cell, \(\fancyscript{K}\) is the set of all resources in the system, \(\fancyscript{K}_j\) is the subset of resources assigned to user \(j\), \(K\) is the total number of resources in the system (subcarriers, codes, etc) to be assigned to the users, and \(U\left( T_{j}\left[ n\right] \right) \) is a monotonically increasing utility function based on the current throughput \(T_{j}\left[ n\right] \) of the user \(j\) in Transmission Time Interval (TTI) \(n\). Constraints (4.1b) and (4.1c) state that the union of all subsets of resources assigned to different users must be contained in the total set of resources available in the system, and that the same resource cannot be shared by two or more users in the same TTI, i.e., these subsets must be disjoint.

The power allocated to the resources could be considered as another optimization variable in the optimization problem (4.1a)–(4.1c). However, this joint optimization problem is very difficult to be solved optimally [8]. Revising the literature, we can find out that most of the sub-optimum solutions split the problem into two stages: first, dynamic resource assignment with fixed power allocation, and next, adaptive power allocation with fixed resource assignment. Furthermore, it has been shown for Orthogonal Frequency-Division Multiple Access (OFDMA)-based systems that adaptive power allocation provides limited gains in comparison with equal power allocation with much more complexity [8]. Therefore, we consider the simplified optimization problem (4.1a)–(4.1c), which can be solved by a suitable dynamic resource assignment with equal power allocation.

Many RRA policies can be proposed if different utility functions are used. In this study, we are interested in formulating general RRA techniques suitable for controlling the capacity versus fairness and capacity versus QoS trade-offs in a scenario with NRT services.

It is demonstrated in the Appendix that we are able to derive a simplified optimization problem that is equivalent to our original problem. According to the Appendix, the objective function of our simplified problem is linear in terms of the instantaneous user’s data rate and given by

where \(R_j\left[ n\right] \) is the instantaneous data rate of user \(j\) and \(U^{'}\left( T_{j}\left[ n-1\right] \right) = \left. \dfrac{\partial U}{\partial T_j}\right| _{T_j = T_{j}\left[ n-1\right] }\) is the marginal utility of user \(j\) with respect to its throughput in the previous TTI. The objective function (4.2) characterizes a weighted sum rate maximization problem [11], whose weights are adaptively controlled by the marginal utilities.

The weighted sum rate maximization problem given by (4.2) has a linear objective function with respect to \(R_j\left[ n\right] \), whose solution is simple to obtain. Particularly, the Dynamic Resource Assignment (DRA) problem in OFDMA systems, which is the optimization problem (4.1) with subcarriers or physical resource blocks (PRB) as the resources and considering equal power allocation, has a closed form solution when the objective function is given by (4.2) [12, 41]. The user with index \(j^{\star }\) is chosen to transmit on resource \(k\) in TTI \(n\) if it satisfies the condition given by

where \(r_{j,k}\left[ n\right] \) denotes the instantaneous achievable transmission rate of resource \(k\) with respect to user \(j\). Notice that this utility-based resource allocation performs a balance between the QoS-dependent factor \(U^{'}\left( T_{j}\left[ n-1\right] \right) \) and the efficiency-dependent factor \(r_{j,k}\left[ n\right] \).

The chosen utility function must be parameterized, for example by a parameter \(\delta \), i.e. \(U\left( \cdot \right) = U\left( T_j[n], \delta \right) \), in order to allow the control of trade-offs between two objectives. The parameter \(\delta \) is limited by \(\delta ^{\text {min}} \le \delta \le \delta ^{\text {max}}\). On the one hand, \(\delta ^{\text {min}}\) is associated to the maximization of one objective, for example system capacity. On the other hand, \(\delta ^{\text {max}}\) is associated to the maximization of the other objective, for example, system fairness or user satisfaction. The adaptation of \(\delta \) according to a suitable metric and a desired target allows the control of trade-offs.

5 Capacity Versus Fairness Trade-Off

In this section, we study the trade-off between capacity and fairness. First, a general definition of the trade-off is presented in Sect. 4.5.1. Next, two RRA techniques are proposed, namely Fairness-based Sum Rate Maximization (FSRM) and Adaptive Throughput-based Efficiency-Fairness Trade-off (ATEF), which are described and evaluated in Sects. 4.5.2 and 4.5.3, respectively. The former is based on the heuristic-based RRA framework described in Sect. 4.3, while the latter is based on the utility-based RRA framework presented in Sect. 4.4. Finally, the conclusions about the study of the capacity versus fairness trade-off are shown in Sect. 4.5.4.

5.1 General Definition

It is well known that the scarcity of radio resources is one of the most important characteristics of wireless communications, which demands a very efficient usage of the available resources. Different criteria can be used for resource allocation; for instance, the users that present the best channel quality can be chosen to use the resources. In this case, the efficiency indicator of the resources is the channel quality. The efficiency in the resource usage can be maximized if opportunistic RRA algorithms are used [26]. The opportunism comes from the fact that the resources are dynamically allocated to the users that present the highest efficiency indicator with regard to the radio resources. When the resources have different efficiency indicators to different users (multi-user diversity), the trade-off between efficiency (capacity) and fairness appears. The use of opportunistic resource allocation to exploit these diversities causes unfair situations in the resource distribution.

From a network operator perspective, it is very important to use the channel efficiently because the available radio resources are scarce and the revenue must be maximized. From the users’ point of view, it is more important to have a fair resource allocation in a way that they are not on a starvation/outage situation and their QoS requirements are guaranteed.Footnote 1 Then the question is: how can the network operator manage this trade-off? In this section we try to answer this question and highlight important clues toward this goal.

In order to better understand the aforementioned trade-off, it is indispensable to define what fairness means. There are two main fairness definitions: resource- or QoS-based [31]. In the former, fairness is related to the equality of opportunity to use network resources, for example, the number of frequency resources a user is allowed to use or the amount of time during which a user is permitted to transmit. In the latter, fairness is associated with the equality of utility derived from the network, e.g., flow throughput. Resource and QoS-based fairness are related to the notion of how equal is the number of resources allocated or how similar is the service quality experienced by the users, respectively. If all users in a given instant approximately have the same number of allocated resources, or perceive more or less the same QoS level, we can say that the system provides a high fairness. On the contrary, if the resources are concentrated among few users, or few of them experience a very good QoS while the others are unsatisfied, the resource allocation can be considered unfair.

Focusing on QoS-based fairness, it is well known that the characteristics and transmission requirements of NRT traffic differ from those of Real Time (RT) data traffics. NRT services, such as World Wide Web (WWW) and File Transfer Protocol (FTP), are not delay-sensitive but require an overall high throughput. Therefore, rate or throughput can be used as fairness indicators in a scenario with NRT services.

Let us consider a simplified scenario of two users in a wireless system. Figure 4.3 depicts a conceptual view of the trade-off between system capacity and QoS-based user fairness in such a scenario. This conceptual analysis is also valid for the case of resource-based fairness.

The QoS experienced by the two users after the resource allocation is represented by the axes on the figure. One can notice that there are two main lines on the figure: efficiency and fairness. Since the radio resources in the wireless system are limited, the efficiency line delimits a capacity region. The fairness line indicates that the QoS of the users are the same in any point along this line, i.e., the fairness is maximum. The crossing between these lines is the optimal network operation point, which characterizes a resource allocation with maximum efficiency and fairness. In the figure, one can see regions of low and high efficiency and fairness. Wired networks can effectively work near the optimal point due to the implementation of congestion control techniques, such as Transmission Control Protocol (TCP) [6]. However, the frequency and time-varying wireless channel poses significant challenges to the solution of this problem, and the optimal RRA technique that always provides maximum efficiency and fairness in wireless networks is still an open problem.

Referring again to Fig. 4.3, let us assume that user 1 has better channel conditions than user 2. If an opportunistic RRA policy that gives importance only to the efficiency in the resource usage were used, we would have the region marked as “A”. In this case, the majority of the resources were allocated to user 1, which would cause an unfair situation. On the other hand, the region marked as “B” characterizes an RRA policy that provides absolute fairness but causes a significant loss in efficiency since it has to deal with the bad channel conditions of user 2. Therefore, one can observe that in most of the times the optimal point of maximum efficiency and fairness may be unfeasible due to the channel quality of the users.

5.2 Fairness-Based Sum Rate Maximization

The Fairness-based Sum Rate Maximization (FSRM) technique is based on the heuristic-based RRA framework described in Sect. 4.3 and tries to solve the problem of controlling the trade-off between capacity and fairness. It was first proposed in [33, 35].

This section is organized as follows. Section 4.5.2.1 revises the state of the art about the topic, while the RRA problem to be solved by FSRM is formulated in Sect. 4.5.2.2. The details of the FSRM technique are presented in Sect. 4.5.2.3, and finally, simulation results in Sect. 4.5.2.4 show the comparison between FSRM and other classical RRA techniques.

5.2.1 Background

In general, heuristic-based RRA strategies are derived from combinatorial optimization formulations. The optimization-based RRA strategies for OFDMA systems found in the literature typically follow two approaches: margin adaptive and rate adaptive. The former formulates the dynamic resource allocation with the goal of minimizing the transmitted power with a rate constraint for each user [22, 44]. The latter aims at maximizing the instantaneous data rate with a power constraint [18, 32, 38]. Since the capacity versus fairness trade-off is an explicit consequence of the use of opportunistic rate adaptive RRA algorithms, this latter approach is the one studied in this section.

There are three main classical approaches to cope with the rate adaptive optimization problem: Max–Min Rate (MMR) [21, 32], Sum Rate Maximization (SRM) [18], and Sum Rate Maximization with Proportional Rate Constraints (SRM-P) [38, 45].

The rate adaptive approach was first proposed in [32], where the objective was to maximize the minimum rate of the users. A sub-optimum heuristic solution comprising subcarrier assignment and equal power allocation was proposed. After the resource allocation the users have almost the same rate, which results in the fairest policy in terms of data rate distribution. The MMR optimization problem was reformulated in [21] in order to be solved by Integer Programming techniques. Notice that such a policy is able to maximize fairness at the expense of degraded system capacity (see region “B” in Fig. 4.3).

Reference [18] presented the solution of the SRM problem, which is the classical opportunistic rate adaptive policy. SRM maximizes the system capacity regardless of the QoS of the individual users. The subcarriers are assigned to the users who have the highest channel quality, and next the power is allocated among the subcarriers following the waterfilling procedure [30]. This resource allocation ignores the users with bad channel conditions, who may not receive any resources, and benefits the users close to the base station. According to Fig. 4.3, this policy would be located in region “A”.

The SRM-P optimization problem attempts to be a trade-off solution between system capacity and user fairness [38]. The same objective function of the problem described in [18] was considered and a new optimization constraint of rate proportionality for each user was added. This constraint aims to rule the rate distribution in the system. This new optimization problem is suitable for a scenario where there are different service classes with different proportional rate requirements. The solution was divided into two steps: a sub-optimum subcarrier assignment based on [32] and an optimal power allocation. The SRM-P problem was further addressed by [45], which linearized the power allocation problem avoiding the solution of a set of nonlinear equations that was required by the solution proposed in [38].

In this section, a new proposed fairness/rate adaptive policy called FSRM is described. It is a generalization of a classical rate adaptive policy SRM found in the literature [18].

5.2.2 Problem Formulation

The generalization of the classical SRM policy takes into account a new way to control the trade-off between system capacity and fairness. This control is applied on a cell fairness index and is formulated as a new constraint in the optimization problem.

The considered RRA optimization problem is formulated as follows:

where \(J\) and \(K\) are the total number of active users and available frequency resources, respectively; \(\fancyscript{J}\) and \(\fancyscript{K}\) are the sets of users and resources, respectively; \(\mathbf {X}\) is a \(J \times K\) assignment matrix whose elements \(x_{j,k}\) assume the value 1 if the resource \(k\) is assigned to the user \(j\) and 0 otherwise; \(\varPhi ^{\mathrm {cell}}\) is the instantaneous Cell Fairness Index (CFI); and \(\varPhi ^{\mathrm {target}}\) is the Cell Fairness Target (CFT), i.e., the desired target value of the CFI.

Constraints (4.5) and (4.6) say that each frequency resource must be assigned to only one user at any instant of time. A new fairness control mechanism is explicitly introduced into the optimization problem of the fairness/rate adaptive policy by means of the fairness constraint (4.7). A short-term (instantaneous) fairness control can be achieved, because this constraint requires that the instantaneous CFI \(\varPhi ^{\mathrm {cell}}\) must be equal to the CFT \(\varPhi ^{\mathrm {target}}\) at each TTI.

The fairness/rate adaptive optimization (4.4)–(4.7) is a nonlinear combinatorial optimization problem, because it involves an integer variable \(x_{j,k}\) and a nonlinear constraint (4.7), as will be explained in the following. This problem is not convex because the integer constraint (4.5) makes the feasible set nonconvex.

Constraint (4.7) is the main novelty in comparison with the classical SRM rate adaptive policy. It has a deep impact on the design of the RRA technique used to solve the optimization problem (4.4)–(4.7), as will be shown in Sect. 4.5.2.3. In order to better comprehend the importance of this constraint, let us further elaborate on the concept of the fairness index.

It is assumed that each user has a rate requirement \(R_j^{\text {req}}\) that will indicate whether this user is satisfied or not. In order to evaluate how close the user’s transmission rate is from its rate requirement, the UFI is defined as

where \(R_j\) is the instantaneous transmission rate of user \(j\).

In order to measure the fairness in the rate distribution among all users in the cell, the CFI is calculated by

where \(J\) is the number of users in the cell and \(\phi _j\) is the UFI of user \(j\) given by (4.8). This proposed CFI is a particularization of the well-known Jain’s fairness index proposed by Jain et al. in [16]. Notice that \(1/J \le \varPhi ^{\mathrm {cell}} \le 1\). On one hand, the worst allocation occurs when \(\varPhi ^{\mathrm {cell}}=1/J\), which means that all resources were allocated to only one user. On the other hand, a perfect fair allocation is achieved when \(\varPhi ^{\mathrm {cell}}=1\), which means that the instantaneous transmission rates allocated to all users are equally proportional to their requirements \(R_j^{\text {req}}\) (all UFIs are equal).

The objective function (4.4) is the same of the classical rate adaptive SRM policy [18]. The constraint (4.7) does not exist in the original SRM problem. Therefore, SRM is a pure channel-based opportunistic policy, where the resources are allocated to the users with better channel conditions, which maximizes the cell throughput. However, such a solution is extremely unfair because the other users with worse channel conditions are neglected.

Although the objective function of the proposed FSRM policy seeks the maximization of capacity, the fairness constraint (4.7) acts as a counterpoint, provoking the explicit appearance of a trade-off. FSRM tries to answer the following question: How can a given fairness level be achieved while keeping the system capacity as high as possible? Guided by this criterion, the FSRM policy can achieve different fairness levels and draw a complete capacity-fairness curve. We will answer in the next section this design question.

5.2.3 Algorithm Description

The underlying concept behind the FSRM policy is that resource allocation can be based on two possible approaches:

-

Resource-centric/efficiency-oriented: the RRA policy allows the resource to “choose” who is the best user to use it;

-

User-centric/fairness-oriented: the RRA policy allows the user to choose which is the most adequate resource to him/her.

Whether the RRA policy uses the former, the latter, or both approaches, will determine its ability to control the intrinsic trade-off between resource efficiency and user fairness found in wireless networks.

Three “actors” play an important role in the proposed technique: the “richest” user (the one with the maximum proportional rate), the “poorest” user (the one with the minimum proportional rate), and the resource.

The FSRM policy is able to increase the fairness in the system. This process is illustrated in Fig. 4.4. In this hypothetical example, we have the distribution of the QoS among 20 users. The user IDs are ordered in such a way that the users with best QoS are given IDs around 10, and the users with worst service quality are given the extreme IDs (close to 1 or 20). A QoS distribution depicted by the dashed curve shows an unfair resource usage. If fairness is to be increased from that point, the resources, and consequently the QoS, should be divided more equally among the users. This is accomplished removing resources from the rich and giving them to the poor. The solid curve is an example of a fair QoS distribution.

The fairness/rate adaptive problem formulated in (4.4)–(4.7) is a nonconvex optimization problem, which makes it very difficult to find the optimum solution. This work proposes an RRA technique able to solve the proposed fairness/rate adaptive problem in a sub-optimum way. Based on the heuristic-based framework described in Sect. 4.3, the FSRM policy is implemented by a sequence of two RRA algorithms, as explained in the following.

-

1.

Unconstrained Maximization: An initial fairness level (CFI) is achieved after the execution of the DRA algorithm of the classical SRM policy. Therefore, an initial positioning on the capacity-fairness plane is determined.

-

2.

Resource Reallocation: The initial CFI is, in general, low because SRM is an unfair policy. Thus, in order to meet the desired CFT, fairness must be increased by means of resource reallocations among users. Fairness variation is only possible if resources are moved between different users. The first step is to decide from which user a resource will be removed. Next, a small amount of resource (resource with worst channel quality) is removed from this user. Finally, this resource is given to the user that can take the most benefit of it, or in other words, this resource is given to the user that can use it in the most efficient way. This means to assign the removed resource to the user that has the highest channel gain on it. Hopefully, after this procedure an accurate approximation of the CFT is achieved.

After the Unconstrained Maximization and Resource Reallocation parts, we perform Equal Power Allocation (EPA), i.e., the power is divided equally among the resources.

In the Unconstrained Maximization part, a resource should be assigned to only one user who has the best channel gain for that resource, as indicated in Algorithm 7.

The detailed pseudo-code of the Resource Reallocation part of FSRM is presented in Algorithm 8 while its flowchart is depicted in Fig. 4.5.

Algorithm 8 is an iterative heuristic algorithm that adapts the CFI by means of a resource reallocation procedure. Initially, the CFI according to (4.9) is calculated. As previously mentioned, the initial DRA procedure is performed by the classical SRM technique, which in general provides low levels of CFI. Therefore, it is most likely that the initial CFI provided by SRM is lower than the desired CFT value \(\varPhi ^{\mathrm {target}}\). Based on that, the fairness-based DRA algorithm of the FSRM technique must increase the fairness until a value close to \(\varPhi ^{\mathrm {target}}\). This is accomplished by an iterative procedure that stops when the CFT is achieved. Details are given below.

-

1.

Select a user \(j^{*}\) from the set of available users in such a way that fairness can be increased if a resource is removed from this user. This can be accomplished by taking resources from the user with maximum proportional rate (richest user) and give them to other users.

-

2.

From the subset of resources assigned to user \(j^{*}\), select the one with the minimum Signal-to-Noise Ratio (SNR) with respect to this user (resource \(k^{*}\)).

-

3.

Find the user \(j^{**}\) (different of user \(j^{*}\)) who can be most benefited from the resource reallocation. This is the user with maximum SNR on resource \(k^{*}\).

-

4.

Remove resource \(k^{*}\) from user \(j^{*}\) and give it to user \(j^{**}\) (resource reallocation). The rates and subsets of assigned resources of users \(j^{*}\) and \(j^{**}\) must be updated.

-

5.

Re-calculate the new value of CFI and repeat the process until the CFT is achieved.

During the fairness increase procedure, the resources have more freedom to move between the users. In order to avoid ping-pong effects, the resource \(k^{*}\) cannot return to its original owner (user \(j^{*}\)) in subsequent iterations of the algorithm. Due to this restriction, after some iterations, the resource \(k^{*}\) may not have any user eligible to receive it. In this case, this resource is removed from the set of available resources.

As can be noticed, the way the resources are reallocated in the FSRM policy guarantees that a desired CFT is met while maximum capacity is achieved.

5.2.4 Simulation Results

In this section, we compare the performance of the proposed FSRM technique with three classical rate adaptive techniques, namely SRM [18], SRM-P [45] and MMR [32]. Table 4.1 shows the parameters considered in the system-level simulations, where the main characteristics of a single-cellFootnote 2 Long Term Evolution (LTE)-based system were modeled.

Figure 4.6 depicts the mean CFI averaged over all snapshots as a function of the number of users for all classical rate adaptive algorithms and the fairness/rate adaptive technique proposed in this work. It can be observed that the SRM technique, which uses a pure opportunistic policy that allocates the resources only to the best users, is the one that presents the highest rates. However, this benefit comes at the expense of a very unfair distribution of the QoS among the users, since many of them do not have the opportunity to transmit due to the lack of resources. Notice that the higher the number of users, the lower the fairness provided by SRM. This is due to the multi-user diversity which is fully exploited by the opportunistic resource allocation of the SRM technique. At the other extreme we have the SRM-P and MMR techniques, where the transmission rates of the users are more equalized, and therefore the fairness in the system is higher. However, the transmission rates of the users are also lower, which characterizes a capacity loss. Notice that the users’ rates provided by SRM-P are slight higher than the ones achieved with MMR, because the former takes extra actions that allow a better utilization of the resources [45].

Figure 4.6 also shows that the FSRM technique is successful at guaranteeing the fairness targets, which were \(\left[ 1/J, 0.2, 0.4, 0.6, 0.8, 1.0\right] \), where \(J\) is the total number of NRT users in the cell. It can be observed that for lower system loads that FSRM is not able to exactly meet very low CFTs (see for instance 6 or 8 users and CFT=\(1/J\) in Fig. 4.6). This happens due to two interrelated factors: (1) the performance of the FSRM technique is lower-bounded by the classic SRM policy; and (2) the multi-user diversity is not sufficient with a low number of users. As explained in Sect. 4.5.2.3, the initial resource assignment performed by the classic SRM is the first step of the FSRM technique. If the CFT is larger than the initial CFI, fairness should be increased, and resource reallocations are done in the reallocation part of the heuristic-based framework. This explains the lower bound given by SRM. On the other hand, the performance of the FSRM strategy converges to the performance of the classic MMR for extremely high values of CFT, since the latter presents the highest values of CFI.

Figure 4.7 compares the performance of the proposed FSRM strategy with the classical rate adaptive techniques in terms of total cell throughput, which is the efficiency indicator that we use in this analysis. As a consequence of the trade-off, we have that the total cell throughput is inversely proportional to the CFT. As can be seen in Fig. 4.7, the higher the CFT, the lower the total cell throughput. Regarding the classical strategies, SRM provides much better results in terms of system capacity than SRM-P and MMR. SRM-P also shows slightly better results than MMR due to its resource assignment algorithm that seeks the maximization of the capacity whenever possible. One can see the inverse proportion between capacity and CFT by the performance of the FSRM technique.

The best way to evaluate the trade-off between resource efficiency and user fairness is plotting the 2D capacity-fairness plane. The chosen efficiency and fairness indicators are the total cell data rate (capacity) and cell fairness index, respectively. Figure 4.8 summarizes the most relevant aspects discussed so far. It compares the performance of the classical rate adaptive techniques (SRM, SRM-P and MMR), which are indicated as single markers, and the generalized fairness/rate adaptive strategy (FSRM), which is indicated as solid line. In order to plot the capacity-fairness plane, the number of users must be fixed, which in this case is 16.

The classical rate adaptive techniques are represented as single points in the capacity-fairness plane because they represent static policies, i.e., each policy provides only one trade-off operation point. SRM provides maximum capacity at the expense of very poor fairness among users, while SRM-P and MMR are very fair in the rate distribution (CFI close to one) but as a consequence they achieve much lower system capacity.

On the other hand, the FSRM technique is able to achieve a desired cell fairness target thanks to a new fairness constraint in the optimization problem. It is able to cover the whole path between extreme points in the capacity-fairness plane (classical rate adaptive points), drawing a complete curve. One can observe that the performance of the proposed fairness/rate adaptive strategy converges to the results of the classical rate adaptive techniques in both extremes of the CFI, which are \(1/J\) and 1.

5.3 Adaptive Throughput-Based Efficiency-Fairness Trade-Off

The Adaptive Throughput-based Efficiency-Fairness Trade-off (ATEF) technique is based on the utility-based RRA framework described in Sect. 4.4 and tries to solve the problem of controlling the trade-off between capacity and fairness. It was first proposed in the seminal works [33, 34].

This section is organized as follows. Section 4.5.3.1 presents some works related to the topic, while the RRA problem to be solved is formulated in Sect. 4.5.3.2. The proposed technique is described in Sect. 4.5.3.3, while Sect. 4.5.3.4 shows the performance evaluation of ATEF and other classical RRA techniques.

5.3.1 Background

Most of the works that proposed packet scheduling (PS) algorithms to effect a compromise between efficiency and fairness among NRT flows [4, 5, 13, 46] are based on the Proportional Fair (PF) PS algorithm proposed in [43] for High Data Rate (HDR) CDMA systems. However, there are some works [7, 23] that used different approaches. The former introduced a PS algorithm with a fairness controlling parameter that accounts for any intermediate policy between the instantaneous fairness and the opportunistic policies, while the latter evaluated a scheduling algorithm whose priority function is a linear combination between instantaneous channel capacity and the average throughput. As a generalization of the PF criterion, we can highlight the weighted \(\alpha \)-proportional fairness PS algorithm, which is also known as the alpha-rule and was initially proposed by [28] and later used in [20]. The idea behind this algorithm is to embody a number of fairness concepts, such as rate maximization, proportional fairness and max–min fairness, by varying the values of the parameter \(\alpha \) and the weight parameter.

A more general class of RRA algorithms is based on utility fairness. Utility fairness is defined with a utility function that composes the optimization problem, where the objective is to find a feasible resource allocation that maximizes the utility function specific to the fairness concept used. Some examples of utility functions can be found in [9, 19, 39]. There is a general family of utility functions that were presented and/or evaluated in [36, 37, 42] that includes the weighted \(\alpha \)-proportional fairness algorithm as a special case. Some works followed a similar approach, but using different utility functions, e.g., [3, 40, 41].

The utility fairness concept is used in this section to propose the utility-based alpha-rule, which is a generalized parametric RRA framework suitable for NRT services that can balance efficiency and fairness in wireless systems according to the network operator’s interest. This framework is composed of dynamic resource assignment algorithm and can be designed to work as any of well-known classical RRA policies by adjusting only one parameter in their corresponding parametric structures.

5.3.2 Problem Formulation

We consider a family of utility functions based on throughput of the form presented in (4.10) below [37].

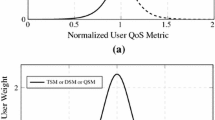

where \(\alpha \in \left[ 0, \infty \right) \) is a nonnegative parameter that determines the degree of fairness.

Figure 4.9 depicts, for different values of \(\alpha \), the utility and marginal utility functions. A family of concave and increasing utility functions is shown, which represents that the satisfaction of the users increases when their throughput increases. The marginal utilities play an important role in the DRA algorithm, as explained in Sect. 4.4. Let us consider a utility-based weight of user \(j\) as its marginal utility, i.e., \(w_j = U^{'}\left( T_{j}\left[ n-1\right] \right) \). The higher the weight, the higher the priority of the user to get a resource. The marginal utility functions also show that users experiencing poor QoS (low throughput) will have higher priority in the resource allocation process. And such priority is higher when \(\alpha \) increases. Therefore, one can conclude that when \(\alpha \) increases, the users with poorest QoS are benefited, and so the fairness in the system becomes stricter.

Taking into account (4.10), the expression of the weight \(w_j\) becomes

The corresponding DRA algorithm, which is given by (4.3), must use the particular expression of \(w_j\) presented in (4.11).

Depending on the value of the fairness controlling parameter \(\alpha \), the alpha-rule framework presented above can be designed to work as different RRA policies, achieving different performances in terms of resource efficiency and throughput-based fairness. The main characteristics of the alpha-rule framework and the four particular RRA policies contemplated by this framework are presented in Table 4.2. The first three RRA policies are well-known classical policies, namely Rate Maximization (RM) [18] (also known as SRM), Max–Min Fairness (MMF) [37] and Proportional Fair (PF) [19]. The novel adaptive policy ATEF is described in detail in the following.

5.3.3 Algorithm Description

The ATEF policy is an adaptive version of the utility-based alpha-rule. It aims to achieve an efficient trade-off between resource efficiency and throughput-based fairness planned by the network operator in a scenario with NRT services. This is done by means of the adaptation of the fairness controlling parameter \(\alpha \) in the utility function presented in (4.10). The user priority in the resource allocation is very sensitive to the value of \(\alpha \), as can be seen in Fig. 4.9. So small values are sufficient to provide the desired fairness degrees on the ATEF DRA algorithm.

The ATEF policy is based on the definition of a user fairness index (UFI) \(\phi _j\), which is based on throughput and calculated for each user in the cell. The instantaneous UFI is defined as

where \(T_{j}^{\mathrm {req}}\) is the throughput requirement of user \(j\).

Next, we define a cell fairness index considering all users connected to it as follows:

where \(J\) is the number of users in the cell. This proposed CFI is based on the well-known Jain’s fairness index [16]. This fairness index was also used by the heuristic-based FSRM technique, whose formulation is presented in Sect. 4.5.2.2.

The objective of the ATEF policy is to assure that the instantaneous CFI \(\varPhi ^{\mathrm {cell}}\left[ n\right] \) is kept around a planned value \(\varPhi ^{\mathrm {target}}\), i.e., a strict throughput-based fairness distribution among the users is achieved. Therefore, the ATEF policy adapts the parameter \(\alpha \) in the utility-based alpha-rule framework in order to achieve the desired operation point. Therefore, the new value of the parameter \(\alpha \) is calculated using a feedback control loop of the form:

where the parameter \(\eta \) is a step size that controls the adaptation speed of the parameter \(\alpha \); \(\varPhi ^{\mathrm {filt}}\left[ n\right] \) is a filtered version of the CFI \(\varPhi ^{\mathrm {cell}}\left[ n\right] \) using an exponential smoothing filtering, which is used to smooth time series with slowly varying trends and suppress short-run fluctuations; and \(\varPhi ^{\mathrm {target}}\) is the CFT, i.e. the desired value for the CFI.

The ATEF technique is an iterative and sequential process. At each TTI, the steps indicated in Fig. 4.10 are executed. This process is executed indefinitely. After some iterations (TTIs), the ATEF technique reaches a stable convergence of the fairness pattern defined by the target CFI. The simplicity of the ATEF policy makes it a robust and reliable way to control the trade-off between capacity and fairness. By keeping the cell fairness around a planned target value, the network operator can have a stricter control of the network QoS and also have a good prediction about the performance in terms of system capacity.

5.3.4 Simulation Results

In this section, the performance of the utility-based alpha-rule is evaluated by means of system-level simulations. The performance of the ATEF policy is compared to the three classic RRA policies (MMF, PF and RM). In this simulation scenario, several CFTs were considered for the ATEF policy, namely \(\varPhi ^{\mathrm {target}} = \left[ 1/J, 0.2, 0.4, 0.6, 0.8, 1.0\right] \). The simulations took into account the main characteristics of an LTE-based cellular system. The general simulation parameters are the same as used for the evaluation of the FSRM technique in Sect. 4.5.2.4 (see Table 4.1). Table 4.3 shows the specific simulation parameters used in the performance evaluation of the utility-based alpha-rule framework.

The throughput-based CFI calculated by (4.13) averaged over all simulation snapshots is depicted in Fig. 4.11 for various system loads. It can be observed that ATEF is successful at achieving its main objective, which is to guarantee a strict fairness distribution among the users. This is achieved due to the feedback control loop that dynamically adapts the parameter \(\alpha \) of the alpha-rule framework.

Notice that the structure of the utility-based alpha-rule framework bounds the performance of the ATEF policy between the performances of the RM and MMF policies. According to Table 4.2, the extreme values of the parameter \(\alpha \) are \(0\) and \(\infty \) (in practice a very large number), which correspond to RM and MMF policies, respectively. We considered in the simulations a range of values from 0 to 10 for the adaptation of the parameter \(\alpha \) by the ATEF policy. Notice that this upper limit of \(\alpha =10\) was sufficient for the ATEF policy configured with \(\varPhi _{\mathrm {target}}=1.0\) to be very close to the performance of the MMF policy. On the other extreme, it is clear that RM works as a lower bound for ATEF configured with \(\varPhi _{\mathrm {target}}=1/J\).

Regarding the classic RRA policies, as expected, MMF provided the highest fairness, very close to the maximum value of 1, while RM was the unfairest strategy with a high variance on the fairness distribution for high cell loads. PF presented a good intermediate fairness distribution. From this fairness analysis, it can be concluded that the advantage of the ATEF policy compared with the classic RRA strategies is that the former can be designed to provide any required fairness distribution, while the latter are static and do not have the freedom to adapt themselves and guarantee a specific performance result.

We consider the total cell throughput (cell capacity) as the efficiency indicator, which is presented in Fig. 4.12 as a function of the number of users.

As expected, RM was able to maximize the system capacity, while MMF presented the lowest cell throughput, since it is not able to exploit efficiently the available resources. PF is a trade-off between RM and MMF, so its performance is laid between them. The ATEF policy is able to achieve several cell throughput performances depending on the value of the chosen CFT. In this way, we realize that ATEF is able to work as a hybrid policy between any classic RRA strategy contemplated in the framework.

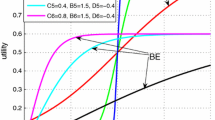

Looking at Figs. 4.11 and 4.12, one can clearly see the conflicting objectives of capacity and fairness maximization, and how RM and MMF are able to achieve one objective in detriment of the other. PF and ATEF were able to achieve a static and a dynamic trade-off, respectively. A didactic way to explicitly evaluate the trade-off between resource efficiency and user fairness is to combine Figs. 4.11 and 4.12 and plot a 2D plane between total cell throughput (capacity) and the cell fairness index. Figure 4.13 presents the plane built from the simulations of all studied RRA policies on a scenario with 16 active NRT flows.

In Fig. 4.13, the classic RRA policies are indicated as single markers, and the adaptive policy ATEF is indicated as a solid line. The classic policies show a static behavior on the capacity-fairness plane. RM is the most efficient on the resource usage but provides an unfair throughput distribution among users, while MMF is able to provide maximum throughput-based fairness at the expense of low system capacity. The PF policy appears as a fixed trade-off between MMF and RM, with intermediate system capacity and throughput-based fairness.

In order to achieve a desired cell fairness target, the ATEF policy controls the parameter \(\alpha \) adaptively according to (4.14). In this way, it is able to cover the whole path between the classic policies in the capacity-fairness plane. Notice in the ATEF curve that the fairness targets set in the simulations (0.2, 0.4, 0.6, 0.8, and 1.0) are successfully met. As expected, the performance of the ATEF policy for very low fairness range converges to the performance of the RM policy. Therefore, it can be concluded that the ATEF policy can adaptively adjust the utility-based alpha-rule framework presented in Table 4.2 in order to provide a dynamic trade-off between resource efficiency and throughput-based fairness.

5.4 Conclusions

Two adaptive RRA techniques for the control of the capacity versus fairness trade-off are proposed: FSRM and ATEF. We propose to manage this trade-off by means of fairness control. FSRM and ATEF use two different ways to control the fairness in the system: instantaneousor average fairness control, respectively.

FSRM is able to cover the whole path between the extreme points in the capacity-fairness plane, drawing a complete capacity-fairness curve. One can observe that the performance of FSRM converges to the results of the classical rate adaptive strategies in both extremes of the cell fairness index, which are \(1/J\) and 1. These classical techniques are SRM and MMR, respectively. The performance of FSRM is constrained by SRM and MMR because FSRM plays with the competition of two paradigms: efficiency-oriented (resource-centric) and fairness-oriented (user-centric). SRM is the maximum exponent of the former paradigm, while MMR is the best representative of the latter.

The fairness control performed by ATEF is bounded by the structure of the alpha-rule framework, i.e., the minimum and maximum fairness performance depends on the allowed range of values for the parameter \(\alpha \). In the alpha-rule framework, minimum and maximum \(\alpha \) correspond to the classical RM and MMF policies, respectively. The ATEF technique dynamically adapts the fairness-controlling parameter \(\alpha \) of the alpha-rule framework using a feedback control loop, in order to achieve a desired fairness distribution in terms of throughput (average data rate).

ATEF is able to provide equal or better cell capacity than the respective classical policies for the same cell fairness indexes. Furthermore, it is also able to provide dynamic trade-offs covering the capacity-fairness plane. This is a remarkable strategic advantage to the network operators, because they can now control the aforementioned trade-off and decide in which point on the plane they want to operate.

6 Capacity Versus QoS Trade-Off

In this section, we study the trade-off between capacity and QoS. First, a general definition of the trade-off is presented in Sect. 4.6.1. Next, two RRA techniques are proposed: Constrained Rate Maximization (CRM) and Adaptive Throughput-based Efficiency-Satisfaction Trade-off (ATES). The former is based on the heuristic-based RRA framework described in Sect. 4.3, while the latter is based on the utility-based RRA framework presented in Sect. 4.4. The CRM and ATES techniques are described and evaluated in Sects. 4.6.2 and 4.6.3, respectively. Finally, the conclusions about the study of the capacity versus QoS trade-off are shown in Sect. 4.6.4.

6.1 General Definition

Capacity and QoS are two contradicting objectives in wireless networks. Without loss of generality, let us consider the case of opportunistic RRA that take into account the channel quality of the users. As it was previously mentioned, the objective of such opportunistic RRA is to allocate more resources to the users with better channel conditions, which leads to a higher resource utilization and system capacity. However, this strategy benefits the users closer to the Base Station (BS), i.e., the ones with highest SNR, and can cause starvation to the users with worse channel conditions. This can severely degrade some users’ experience as a result of unfair resource allocation and increased variability in the scheduled rate and delay. Moreover, long delays in the scheduling of packets coming from bad channels can cause severe degradation in the overall performance of the system for higher layer protocols, such as TCP.

On the other hand, schemes that aim to maximize the overall satisfaction have to fulfill QoS requirements and guarantee specific targets of throughput, packet delays, among others. Sometimes, system resources should be assigned to users independently of channel quality state in order to take into account users with degraded QoS, which penalizes users with better channel conditions and reduces system efficiency. Therefore, in general maximizing the system capacity leads to poor QoS provision and vice versa.

The compromise between efficiency and fairness has been widely studied in the literature, as explained in Sect. 4.5. However, to the best of our knowledge, the explicit evaluation of the capacity versus QoS trade-off has not been covered in the literature.

6.2 Constrained Rate Maximization

The capacity versus QoS tradeoff will be characterized, in Sect. 4.6.2.1, by the optimization problem of maximizing the system capacity under minimum satisfaction constraints. Then we present the optimal and the heuristic solutions to this problem in Sects. 4.6.2.2 and 4.6.2.3, respectively. Finally, simulation results for performance evaluation are presented in Sect. 4.6.2.4. The contributions presented in this section were first shown in the seminal works [24, 25].

6.2.1 Problem Formulation

We consider that in a given TTI, \(J\) active users compete for \(K\) available resources. We define \(\fancyscript{J}\) and \(\fancyscript{K}\) as the set of active users and available resources, respectively. As we are dealing with a multiservice scenario we assume that the number of services provided by the system operator is \(S\) and that \(\fancyscript{S}\) is the set of all services. We consider that the set of users from service \(s \in \fancyscript{S}\) is \(\fancyscript{J}_{s}\) and that \(|\fancyscript{J}_s| = J_s\), where \(|\cdot |\) denotes the cardinality of a set. Note that \(\bigcup \limits _{s \in \fancyscript{S}} \fancyscript{J}_s = \fancyscript{J}\) and \(\sum \limits _{s \in \fancyscript{S}} J_s = J\). We define \(\mathbf {X}\) as a \(J \times K\) assignment matrix with elements \(x_{j,k}\) that assume the value 1 if the resource \(k \in \fancyscript{K}\) is assigned to the user \(j \in \fancyscript{J}\) and 0 otherwise. According to the link adaptation functionality, the BS can transmit at different data rates according to the channel state, allocated power, and perceived noise/interference. We consider that user \(j \in \fancyscript{J}\) can transmit using resource \(k \in \fancyscript{K}\) with the data rate \(r_{j,k}\). The transmit power is uniformly distributed among the available resources. A user \(j\) is satisfied if its transmit data rate is higher than or equal to its data rate requirement \(R_j^{\text {req}}\) after resource allocation. Furthermore, the system operator requires that \(\kappa _s\) users of service \(s\) should be satisfied after resource allocation.

The problem of maximizing capacity under minimum satisfaction constraints is formulated as

where \(u(x,b)\) is a step function that assumes the value 1 if \(x \ge b\) and 0 otherwise, where \(b\) is a constant. The first part of this optimization problem is the objective function in (4.15a). The objective of this problem is to maximize the total downlink data rate transmitted by the BS to the connected users. When the problem constraints are concerned, we can see that constraints (4.15b) and (4.15c) assure that the each resource \(k\) should be allocated exclusively to a given user, i.e., a given resource cannot be shared by multiple users. Another consequence of these constraints is that within a cell covered by a given BS there is no intra-cell interference. The last constraint (4.15d) addresses QoS and user satisfaction issues. In this constraint, for each provided service \(s\) in the system, a minimum number of users should be satisfied (\(\kappa _s\)). This is equivalent to satisfy a certain percentage of the connected users for each service in the system.

6.2.2 Method for Obtaining the Optimal Solution

Note that problem (4.15) has a binary optimization variable \(x_{j,k}\). Therefore, this problem belongs to the class of combinatorial optimization problems. Moreover, constraint (4.15d) is a nonlinear function of the optimization variable \(x_{j,k}\). Therefore, problem (4.15) is a nonlinear combinatorial problem that is hard to solve optimally depending on the problem dimensions [47].

A well-known method to solve problem (4.15) consists in the brute force method that consists in numerating all possible solutions, testing whether they obey the constraints (4.15b)–(4.15d), and evaluating the achieved total data rate. The optimal solution is the one that presents the highest total data rate. The total number of possible solutions that can be enumerated is \(J^K\). Therefore, this method only works for small \(J\) and \(K\), which is not the case in cellular networks.

Fortunately, problem (4.15) can be simplified by modifying constraint (4.15d). Consider a binary selection variable \(\rho _j\) that assumes the value 1 if user \(j\) is selected to be satisfied and 0 otherwise. According to this, the problem (4.15) can be restated as

As can be seen, the constraint (4.15d) of problem (4.15) was replaced by constraints (4.16d), (4.16e) and (4.16f) in problem (4.16). Now, the optimization variables are \(x_{j,k}\) and \(\rho _j\), and all problem constraints and objective function are linear. Therefore, we managed to convert problem (4.15) to an Integer Linear Problem (ILP). This special class of optimization problems can be solved by standard numerical solvers based on the Branch and Bound (BB) algorithm. The main idea of the BB algorithm is to decrease the search space by solving a relaxed version of the original optimization problem [29].

Although, the optimal solution of problem (4.16) can be obtained with much less processing time with BB-based solvers compared to the brute force method, the worst-case complexity of the BB-based solvers is exponential with the number of variables and problem constraints [47]. In problem (4.16), we have \(J \times K + J\) variables and \(J+K+S\) constraints. Consequently, obtaining the optimal solution to the studied problem is not feasible for the short time basis of cellular networks even for moderated number of users, resources and services.

6.2.3 Algorithm Description

In this section we present an algorithm to solve the problem presented in Sect. 4.6.2.1 following the heuristic framework presented in Sect. 4.3. As it was shown previously, the first part of the solution consists in solving the studied problem without the minimum satisfaction constraints. In other words, we are interested in finding the solution that maximizes the spectral efficiency. The implementation of this first part, called Unconstrained Maximization, is presented in Fig. 4.14.

In step (1) of the Unconstrained Maximization part we define two temporary user sets: auxiliary user set represented by \(\fancyscript{B}\) and the available user set denoted by \(\fancyscript{A}\). The auxiliary user set contains the users that can be disregarded without violating the minimum satisfaction constraints per service. The available user set contains the users that were not disregarded along the Unconstrained Maximization part and will get resources in the Reallocation part. Both user sets are initialized with the set of all users \(\fancyscript{J}\).

In step (2) we solve the relaxed version of problem (4.16), i.e., without the minimum satisfaction constraints, with the users of the available user set. The optimal solution to the relaxed problem is simple; basically the resources should be assigned to the users with best channel quality on them [18]. According to the RRA performed in step (2), some of the users would get an allocated data rate higher than or equal to the required data rate, \(R_j^{\text {req}}\), whereas other users would get an allocated data rate lower than the data rate requirement. Therefore, in step (3) we define the former users as the satisfied users while the latter are the unsatisfied users.

In step (4) we evaluate if the minimum number of users that should be satisfied per service, \(\kappa _s\), is fulfilled with the RRA performed in step (2). Basically, in step (4) we evaluate if the set of constraints (4.16d), (4.16e) and (4.16f) of problem (4.16) are fulfilled. If so, the RRA solution in step (2) is the optimal solution of the studied problem as presented in step (5). Note that this is an uncommon situation because of the wireless propagation characteristics where few users present the best channel qualities in most of the resources. In this way, only few users would get satisfied with the solution in step (2).

In step (6), a user is taken out of the RRA process. The main idea here is to take out of the RRA process the user that demands more resources to be satisfied. According to this, the selected user is chosen according to the following equation:

As it can be seen in (4.17), the denominator of the fraction in the argument of the \(\arg \max \left( \cdot \right) \) function consists in the estimated average transmit data rate of user \(j\) per resource, whereas the numerator is the required data rate of user \(j\). Therefore, the ratio between these two quantities consists in the estimated number of resources that user \(j\) needs to be satisfied. The objective is to disregard the user that needs more resources. As it will be shown later, there is a limit in the number of users that can be disregarded that depends on the minimum satisfaction constraints of the studied problem.

Note that if there are initially \(J_s\) users from service \(s\) and a minimum of \(\kappa _s\) users should be satisfied, the maximum number of users that can be disregarded is \(J_s-\kappa _s\) in order to be still possible guaranteeing the minimum satisfaction constraint for service \(s\). In step (7), we check whether the service of the user selected in step (6) (represented here by \(s^{*}\)) can have another user disregarded without violating the minimum satisfaction constraints. If so, the algorithm returns to step (2) where the relaxed version of problem (4.16) is solved with the users of the available user set. Otherwise, all users from service \(s^{*}\) will be taken out of the auxiliary user set in step (8), i.e., these users could not be disregarded in the Unconstrained Maximization part.

In step (9) we check if the auxiliary user set is empty which means that we could not disregard any user without violating the minimum satisfaction constraints. If so, we check in step (10) if at least one user is satisfied. If the output of step (10) is positive, we define from the available user set and the resource set three new sets: the donor (\(\fancyscript{D}\)) and receiver (\(\fancyscript{R}\)) user sets, and the available resource set (\(\fancyscript{K}\)). The donor user set \(\fancyscript{D}\) is composed of the satisfied users in the available user set \(\fancyscript{A}\) and can donate resources to unsatisfied users. The receiver user set \(\fancyscript{R}\) is composed of the unsatisfied users from the available user set \(\fancyscript{A}\) that need to receive resources from the donors to have their data rate requirements fulfilled. Finally, the available resource set \(\fancyscript{K}\) is composed of all the resources from the users in the donor user set, i.e., the resources that can be donated to the unsatisfied users (receiver users).

Note that if the auxiliary user set is not empty in step (9), step (2) is executed again with the users of the available user set. Also, if there is no satisfied user in step (10) the algorithm is not able to find a feasible solution. A satisfied user or donor user is necessary in the second part of the proposed algorithm in order to donate resources to the unsatisfied users or receiver users.

In Fig. 4.15 we present the flowchart of the second part of the proposed solution named as Resource Reallocation. In step (1) of the Reallocation part of the proposed solution, the user from the receiver user set with the worst channel condition is chosen to receive resources. The main motivation for choosing the user with worst channel condition is to increase the probability that this user will get resources in good channel conditions, and therefore, need few resources to become satisfied. Then, in step (2) a resource previously assigned to a donor user is reassigned to the receiver user selected in step (1). The criterion to select the resource \(k^{*}\) is presented in the following:

where \(j^{*}\) is the selected receiver user in step (1) and \(j^{+}\) is the user from the donor user set \(\fancyscript{D}\) that has got assigned the resource \(k\) in the first part of the proposed solution (Unconstrained Maximization). The numerator of the fraction in the argument of the \(\arg \max \left( \cdot \right) \) function represents the transmit data rate of the selected user \(j^{*}\) on resource \(k\) whereas the denominator comprises the transmit data rate of user \(j^{+}\) (donor user) on resource \(k\). Therefore, the chosen resource \(k^{*}\) is the one belonging to user \(j^{*}\) that presents the lowest loss in transmit data rate compared to the previous allocation.

The selected resource in step (2) is reassigned to the receiver user only if the donor user does not become unsatisfied with the resource reallocation. This test is performed in step (3). If the donor cannot donate resources without becoming unsatisfied, the selected resource in step (2) is taken out of the available resource set. Otherwise, the resource is reallocated in step (4) and the data rates of the receiver and donor users are updated in step (5). Another test that should be performed is to check if the selected receiver is satisfied in step (6). If so, the selected receiver user is taken out of the receiver user set in step (7). According to step (8), if the receiver user set becomes empty after step (7), the algorithm is able to find a feasible solution as shown in step (9). Note that if the output of step (6) is negative, the algorithm goes to step (10) where the chosen resource is taken out of the reallocation process. Finally, in step (11) we check if there are available resources to be reassigned. If so, the algorithm goes to step (1). Otherwise, the algorithm is not able to find a feasible solution as it is shown in step (12).

As it can be seen in the proposed solution, depending on the system load, channel state, and data rate requirements, the algorithm is not able to find a feasible solution. In these cases, an alternative is to softly decrease the minimum satisfaction constraints and/or the data rate requirements and re-run the proposed solution again.

6.2.4 Simulation Results

In this section we present some simulation results to illustrate the performance of the CRM technique. We consider the downlink of a hexagonal sector belonging to a tri-sectorized cell of a cellular system. In order to get valid results in a statistical sense we perform several independent snapshots. In each snapshot, the terminals are uniformly distributed within each sector, whose BS is placed at its corner. The minimum allocable resource consists in a time-frequency grid composed of a group of 12 adjacent subcarriers in the frequency dimension and 14 consecutive Orthogonal Frequency-Division Multiplexing (OFDM) symbols in the time dimension. We assume that there are 20 resources in the system.

The propagation model includes a distance-dependent path loss model, a log-normal shadowing component, and a Rayleigh-distributed fast fading component. Specifically, we consider that the fast-fading component of the channel gain of a given terminal is independent among resources. We assume that the link adaptation is performed based on the report of 15 discrete Channel Quality Indicators (CQI) used by the LTE system [2]. The SNRs thresholds for MCS switching were obtained by link level simulations from [27]. The main simulation parameters are summarized in Table 4.4.

We assume that there are four different services with three users each. We consider three different cases when the minimum number of satisfied users is concerned (\(\kappa _s\)). The cases are summarized in Table 4.5. As it can be seen in this table we vary the minimum number of users that should be satisfied for services 3 and 4. Case 3 requires that all users from all services should be satisfied while in case 1 two users should be satisfied for services 3 and 4.

In order to assess the relative performance of the proposed solution we simulate also two other RRA solutions. The first solution is the optimal solution of problem (4.16) obtained by ILP solver.Footnote 3 We call this solution as SatisOpt. The second is the optimal solution of the relaxed version of problem (4.16), i.e., without constraints from (4.16d) to (4.16f). As explained previously, this solution corresponds to the solution that maximizes the total data rate. We call this solution as MaxRateOpt.