Abstract

In the independent setup with multivariate responses, the data become incomplete when only partial responses, that is, responses on some but not all variables, are available from some individuals. The main challenge is to obtain valid inferences, such as unbiased and consistent estimates of the mean parameters of all response variables, by using the available responses. Typically, unbalanced correlation matrices are formed, and moment or likelihood analyses based on the available responses are employed for such inferences. Various imputation techniques have also been used. In the longitudinal setup, when a univariate response is repeatedly collected from an individual, the repeated responses are correlated and jointly follow a multivariate distribution. In this setup, a portion of the responses may not be available from some individuals under study. These non-responses may be monotonic or intermittent. The responses may also be missing under a mechanism such as missing completely at random (MCAR), missing at random (MAR), or non-ignorable missingness. In a longitudinal regression setup, the covariates may also be missing, but typically they are known for all time periods. Obtaining unbiased and consistent regression estimates, especially when longitudinal responses are missing under the MAR or ignorable mechanism, is a challenge, because one must accommodate both the longitudinal correlations and the missing mechanism to develop a proper inference tool. Over the last three decades some progress has been made in this direction, mainly by taking partial care of the missing mechanism in developing estimation techniques. Overall, however, these techniques fall short and may still produce biased and hence inconsistent estimates. The purpose of this paper is to outline these perspectives in a comprehensive manner, so that the real progress and the remaining challenges are understood and proper inference techniques can be developed.

Keywords

- Response Indicator

- Multivariate Response

- Missing Mechanism

- Longitudinal Correlation

- Longitudinal Response

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

1 Introduction

Missing data analysis in the independent setup with multivariate responses has a long history. For an early work, we refer to Lord (1955), who considered a set of incomplete trivariate normal responses collected from K independent individuals, where not all components of the three variables were available from all K individuals. To estimate the mean parameters consistently, instead of dropping the individuals with incomplete information, Lord (1955) utilized the available information and constructed unbalanced (bivariate and trivariate) probability functions for individuals in order to write a likelihood function for the desired inference. This technique for consistent estimation of the parameters, and for other similar inferences from incomplete data, has been used by many researchers over the last six decades. See, for example, Mehta and Gurland (1973), Morrison (1973), Naik (1975), Little (1988), and Krishnamoorthy and Pannala (1999), among others.

In the independent setup, techniques of imputation and multiple imputation (Rubin 1976; Rubin and Schenker 1986; Meng 1994) have also been widely used. Some authors, such as Paik (1997), used this imputation technique in the repeated measure (longitudinal) setup. The imputation at a given time point is done mainly by averaging over the responses of other individuals at that time who have the same covariate history as the individual concerned. Once the missing values are estimated, they are used as data, with necessary adjustments, to construct complete data based estimating equations for the desired parameters.

In a univariate longitudinal response setup, when T repeated measures are taken they become correlated and hence jointly follow a T-dimensional multivariate distribution. However, unlike in the Gaussian setup for linear data, the multivariate distributions for repeated binary and count data become complex or impractical. Moreover, if a portion of individuals do not provide responses for all T time points, then a likelihood approach that blends the missing mechanism with the correlation structure of the repeated data becomes extremely complicated or impossible. As a remedy, imputation and estimating equation approaches became popular; these, however, work well when the missing data occur under the simplest MCAR mechanism. When the missing data occur under the MAR mechanism, writing a proper estimating equation that accommodates both the longitudinal correlations and the missing mechanism becomes difficult. Robins et al. (1995) proposed an inverse probability weights based generalized estimating equations (WGEE) approach as an extension of the GEE approach of Liang and Zeger (1986) to the incomplete setup. Note that, as demonstrated by Sutradhar and Das (1999) and Sutradhar (2010), for example, the GEE approach can produce less efficient regression estimates than the well-known simpler moment or quasi-likelihood (QL) estimates in the complete data setup. Thus there is no reason to expect the WGEE approach to be more efficient than the simpler moment and QL estimates in the incomplete longitudinal setup. In fact, in the incomplete longitudinal setup, the WGEE approach constructed from working correlations, as opposed to the MAR based correlation matrix, may yield biased and hence inconsistent regression estimates (Sutradhar and Mallick 2010). This inconsistency issue was, however, not adequately addressed in the literature, including the studies by Robins et al. (1995), Paik (1997), Rotnitzky et al. (1998), and Birmingham et al. (2003). One of the main reasons is that none of these studies used any stochastic correlation structure in conjunction with the missing mechanism to model the longitudinal count and binary data in the incomplete longitudinal setup. Details on this inconsistency problem are given in Sect. 3, whereas in Sect. 2 we provide a detailed discussion on missing data analysis in the independent setup.

Without recognizing the aforementioned inconsistency problems that can be caused by the use of working correlations in the estimating equations under the MAR based longitudinal setup, some authors, such as Wang (1999) and Rotnitzky et al. (1998), used a similar estimating equations approach in the incomplete longitudinal setup with a non-ignorable missing mechanism. Other authors, such as Troxel et al. (1998) (see also Troxel et al. 1997) and Ibrahim et al. (2001) (see also Ibrahim et al. 1999), have used random effects based generalized linear mixed models to accommodate the longitudinal correlations, and certain binary logistic models to generate the non-ignorable mechanism based response indicator variables. In general, expectation-maximization (EM) techniques are used to estimate the likelihood based parameters. These approaches appear to encounter difficulties similar to those of the existing MAR based approaches in generating first the response indicators and then the responses so that the underlying longitudinal correlation structure is satisfied. Thus the inferential validity of these approaches is not yet established. The problem becomes more complicated when the longitudinal correlations are not generated through random effects and writing a likelihood, such as for repeated count data, becomes impossible. For clarity, in this paper we discuss in detail the successes and challenges of inference for MAR based incomplete longitudinal models only. Longitudinal analysis based on non-ignorable missing data is therefore beyond the scope of the paper.

2 Missing Data Analysis in Independent Setup

Missing data analysis in the independent setup with multivariate responses has a long history. For an early work, we refer to Lord (1955), who considered a set of incomplete trivariate normal responses collected from K independent individuals. To be specific, suppose that \(y = (y_{1},y_{2},y_{3})^{\prime} \) represents a trivariate response, but not all components of y were available from the K individuals. Suppose that \(y_{3}\) was recorded from all K individuals, and that either \(y_{1}\) or \(y_{2}\), but not both, was recorded for each individual. For j = 1, …, 3, let \(K_{j}\) denote the number of individuals having the response \(y_{j}\). It then follows that

\[K_{1} + K_{2} = K_{3} = K.\]

Further suppose that the \(K_{1}\) individuals for whom \(y_{1}\) is recorded are denoted collectively as group 1 (\(G_{1}\)), and the \(K_{2}\) individuals with \(y_{2}\) are denoted as group 2 (\(G_{2}\)). Now because \(y_{1}\) and \(y_{2}\) are correlated, it is clear that the data for \(G_{2}\) contain some information relevant for estimating the parameters of variable \(y_{1}\), and that the data for \(G_{1}\) contain some information relevant for estimating the parameters of \(y_{2}\). The problem is to use the available data as efficiently as possible for estimating the parameters concerned. Denote the distribution of \(y = [y_{1},\;y_{2},\;y_{3}]^{\prime} \) as

with \(\mu = [\mu _{1},\mu _{2},\mu _{3}]^{\prime} \) and

Note that in this setup, there are no data available to estimate \(\rho _{12}\). For the likelihood estimation of all the other parameters, define

The maximum likelihood estimators for the means are then given by

where

These estimators in (2) are unbiased and consistent for \(\mu _{1},\;\mu _{2},\;\mbox{ and}\;\mu _{3}\), respectively. The remaining parameters may also be estimated similarly (Lord 1955).

Note that the aforementioned technique for consistent estimation of the parameters, and for other similar inferences from incomplete data, has subsequently been used by many researchers over the last six decades. See, for example, Mehta and Gurland (1973), Morrison (1973), Naik (1975), Little (1988), and Krishnamoorthy and Pannala (1999), among others. The idea of making inferences about the underlying model parameters in such a way that the missing data (assuming a small proportion of missing values) do not have a major negative influence on the inferences has also been extended to the analysis of incomplete repeated measure data. For example, one may refer to Little (1995), Robins et al. (1995), and Paik (1997) as some of the early studies. This inference procedure for incomplete longitudinal data is discussed in detail in the next section.

In the independent setup, techniques of imputation and multiple imputation (Rubin 1976; Rubin and Schenker 1986; Meng 1994) have also been widely used. Later on, some authors also used this imputation technique in the repeated measure (longitudinal) setup. For example, here we illustrate an imputation formula from Paik (1997) in the repeated measure setup. The imputation at a given time point is done mainly by averaging over the responses of other individuals at that time who have the same covariate history as the individual concerned. Once the missing values are estimated, they are used as data, with necessary adjustments, to construct complete data based estimating equations for the desired parameters.

In a univariate longitudinal response setup, when T repeated measures are taken they become correlated and hence jointly follow a T-dimensional multivariate distribution. Now suppose that \(T_{i}\) responses are observed for the ith (i = 1, …, K) individual. One then needs to impute \(T - T_{i}\) missing values, which may be done following Paik (1997), for example. Interestingly, a unified recursive relation can be developed as follows to obtain the imputed value \(\tilde{y}_{i,T_{i}+k_{i}}\) at time point \(T_{i} + k_{i}\) for all \(k_{i} = 1,\ldots,T - T_{i}\). For this, first define

for the jth individual where j ≠ i, j = 1, …, K. Also, let \(D_{iT_{i}}\) denote the covariate history up to time point \(T_{i}\) for the ith individual, and

is the covariate information for the ith individual from time \(T_{i} + 1\) up to \(T_{i} + k_{i}\) for \(k_{i} = 1,\ldots,T - T_{i}\). Further, let \(r_{jw}\), taking the value 1 or 0, indicate the response status of the jth individual at the wth time. One may then obtain \(\tilde{y}_{i,T_{i}+k_{i}}\) by computing \(\tilde{y}_{i,T_{i}+k_{i}}^{(k_{i})}\), that is, \(\tilde{y}_{i,T_{i}+k_{i}} \equiv \tilde{ y}_{i,T_{i}+k_{i}}^{(k_{i})}\), where

Note that \(\tilde{y}_{i,T_{i}+k_{i}} \equiv \tilde{ y}_{i,T_{i}+k_{i}}^{(k_{i})}\) is an unbiased estimate of \(\mu _{i,T_{i}+k_{i}}\), because the individuals used to impute the missing value of the ith subject have the same covariate history up to time point \(T_{i} + k_{i}\), rather than only up to time point \(T_{i}\) (Paik 1997).
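The averaging idea behind this imputation can be illustrated with a minimal sketch. It is not the recursive formula of Paik (1997); it only averages, at a single time point, the observed responses of other individuals whose covariate history matches that of individual i. The function name `impute_mean_same_history`, the K × T array layout of `y`, `r`, and `x`, and the toy data are assumptions made for illustration.

```python
import numpy as np

def impute_mean_same_history(y, r, x, i, t):
    """Impute y[i, t] by averaging observed responses at time t over donors.

    y : (K, T) responses, NaN where missing
    r : (K, T) response indicators (1 = observed, 0 = missing)
    x : (K, T) discrete covariate values (hypothetical single covariate)
    """
    # Donors must share individual i's covariate history through time t.
    same_history = np.all(x[:, : t + 1] == x[i, : t + 1], axis=1)
    donors = same_history & (r[:, t] == 1)
    donors[i] = False  # individual i cannot donate to itself
    if not donors.any():
        return np.nan  # no comparable individual responded at time t
    return y[donors, t].mean()

# Toy data: individual 0 is missing at the last time point (index 2).
x = np.array([[0, 0, 1], [0, 0, 1], [0, 0, 1], [0, 1, 1]])
y = np.array([[1.0, 2.0, np.nan],
              [1.5, 2.5, 3.0],
              [0.5, 1.5, 5.0],
              [2.0, 2.0, 9.0]])
r = np.array([[1, 1, 0], [1, 1, 1], [1, 1, 1], [1, 1, 1]])
y_tilde = impute_mean_same_history(y, r, x, i=0, t=2)
```

Individual 3 has a different covariate path and is therefore excluded from the donor pool, which is the feature of the procedure that the unbiasedness argument relies on.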

3 Missing Data Models in Longitudinal Setup

Let \(Y_{it}\) be the potential response from the ith (i = 1, …, K) individual at time point t, which may or may not be observed, and let \(x_{it} = (x_{it1},\ldots,x_{itp})^{\prime} \) be the corresponding p-dimensional covariate vector, which is assumed to be available for all times t = 1, …, T. In this setup, K is large (K → ∞) and T is small, such as 3 or 4. Suppose that \(\beta = (\beta _{1},\ldots,\beta _{p})^{\prime} \) denotes the effect of \(x_{it}\) on \(y_{it}\). Irrespective of whether \(Y_{it}\) is observed or not, it is appropriate in the longitudinal setup to assume that the repeated responses follow a correlation model with known functional forms for the mean and the variance, although the correlation structure may be unknown. Recall that in the independent setup, Lord (1955) considered multivariate responses having a correlation structure, and incompleteness arose because of missing information on some response variables. In the present longitudinal setup, by contrast, repeated responses from an individual form a multivariate response with suitable mean, variance, and correlation structures, but an individual may not provide responses for the whole duration of the study. As indicated in the last section, suppose that for the ith (i = 1, …, K) individual \(T_{i}\) responses (\(1 < T_{i} \leq T\)) are collected. Also suppose that the remaining \(T - T_{i}\) potential responses are missing and that the non-missing responses occur in a monotonic pattern.

As far as the mean, variance, and correlation structure of the potential responses are concerned, it is convenient to define them for the complete data. Let \(y_{i}^{c} = (y_{i1},\cdots,y_{it},\cdots,y_{iT})^{\prime}\) and \(X_{i}^{c} = (x_{i1},\cdots,x_{it},\cdots,x_{iT})^{\prime}\) denote the \(T \times 1\) complete outcome vector and the \(T \times p\) covariate matrix, respectively, for the ith (i = 1, ⋯ , K) individual over T successive points in time. Also, let

where \(\mu _{it}(\beta ) = {h}^{-1}(\eta _{it})\;\;\mbox{ with}\;\;\eta _{it} = x_{it}^{^{\prime} }\beta\), h being a suitable link function. For example, for linear models, a linear link function is used so that \(\mu _{it}(\beta ) = x^{\prime} _{it}\beta;\) whereas for the binary data a logistic link function is commonly used so that \(\mu _{it}(\beta ) =\exp (\eta _{it})/[1 +\exp (\eta _{it})]\), and for count data a log linear link function is used so that \(\mu _{it}(\beta ) =\exp (\eta _{it})\). Further let

be the true covariance matrix of \(y_{i}^{c}\), where \(A_{i}^{c}(\beta ) = \mbox{ diag}[\sigma _{i11}(\beta ),\cdots \,,\sigma _{itt}(\beta ),\cdots \,,\sigma _{iTT}(\beta )]\) with \(\sigma _{itt}(\beta ) = var(Y _{it})\), and \(\tilde{C}_{i}(\rho,x_{i}^{c})\) is the correlation matrix for the ith individual with ρ as a suitable vector of correlation parameters, for example, \(\rho \equiv (\rho _{1},\ldots,\rho _{\ell},\ldots,\rho _{T-1})^{\prime} \), where \(\rho _{\ell}\) denotes the lag ℓ auto-correlation. Note that when covariates are time dependent, the true correlation matrix is free of the time-dependent covariates in the linear longitudinal setup, but it depends on them through \(X_{i}^{c}\) in the discrete longitudinal setup (Sutradhar 2010). In the stationary case, that is, when covariates are time independent, we will denote the correlation matrix by \(\tilde{C}(\rho )\) in the complete longitudinal setup, and similar to Sutradhar (2010, 2011), this matrix satisfies the auto-correlation structure given by

where for ℓ = 1, …, T − 1, \(\rho _{\ell}\) denotes the ℓth lag auto-correlation. Note that when this correlation structure (7) is used in the incomplete longitudinal setup, it will be denoted by \(\tilde{C_{i}}(\rho )\), as it will be constructed for the \(T_{i}\) available responses.
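The mean, variance, and stationary correlation ingredients described above can be put together in a short sketch. It assumes that the complete-data covariance has the form \(A^{1/2}\tilde{C}(\rho )A^{1/2}\) and that the stationary auto-correlation matrix has \(\rho _{\vert u-t\vert }\) in position (u, t); the function names, the unit variance in the linear case, and the toy covariates are illustrative assumptions. The covariate-dependent correlation matrices of the discrete non-stationary case are not covered.

```python
import numpy as np

def mean_and_variance(X, beta, family):
    """Marginal means and variances under the three link functions."""
    eta = X @ beta
    if family == "linear":            # identity link
        mu = eta
        var = np.ones_like(eta)       # assumption: unit error variance
    elif family == "binary":          # logistic link
        mu = np.exp(eta) / (1.0 + np.exp(eta))
        var = mu * (1.0 - mu)
    elif family == "count":           # log linear link, Poisson variance
        mu = np.exp(eta)
        var = mu
    else:
        raise ValueError(f"unknown family: {family}")
    return mu, var

def autocorr_matrix(rho, T):
    """T x T matrix with 1 on the diagonal and rho[l-1] at lag l."""
    full = np.concatenate(([1.0], np.asarray(rho, dtype=float)))
    lags = np.abs(np.subtract.outer(np.arange(T), np.arange(T)))
    return full[lags]

def complete_covariance(X, beta, rho, family):
    """Return (mu, Sigma) with Sigma = A^{1/2} C(rho) A^{1/2}."""
    mu, var = mean_and_variance(X, beta, family)
    A_half = np.diag(np.sqrt(var))
    return mu, A_half @ autocorr_matrix(rho, len(mu)) @ A_half

# T = 3 time points, p = 2 covariates, beta = 0 so every binary mean is 0.5.
X = np.array([[1.0, 0.0], [1.0, 1.0], [1.0, 2.0]])
mu, Sigma = complete_covariance(X, np.zeros(2), rho=[0.5, 0.25], family="binary")
```

For an individual with \(T_{i} < T\) responses under a monotone pattern, the leading \(T_{i} \times T_{i}\) block of `Sigma` would be the matrix of interest.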

As far as the missing mechanism is concerned, it is customary to assume that a longitudinal response may be missing completely at random (MCAR), missing at random (MAR), or missing non-ignorably. Under the MCAR mechanism, the missingness does not depend on any present, past, or future responses. Under the MAR mechanism, the missingness depends only on the past responses but not on the present or future responses, whereas under the non-ignorable mechanism the missingness depends on the past, present, and future possible responses. In notation, let \(R_{it}\) be a response indicator variable at time t (t = 1, ⋯ , T) for the ith (i = 1, ⋯ , K) individual, so that

\[R_{it} = \begin{cases} 1 & \mbox{if } Y_{it} \mbox{ is observed} \\ 0 & \mbox{otherwise.} \end{cases} \tag{8}\]

Note that all individuals provide the responses at the first time point t = 1. Thus, we set \(R_{i1} = 1\) with \(P(R_{i1} = 1) = 1.0\) for all i = 1, ⋯ , K. Further we assume that the response indicators satisfy the monotonic relationship

\[R_{i1} \geq R_{i2} \geq \cdots \geq R_{it} \geq \cdots \geq R_{iT}. \tag{9}\]

Next suppose that \(r_{it}\) denotes the observed value of \(R_{it}\). For \(t = 2,\ldots,T,\) one may then describe the aforementioned three missing mechanisms as

(Little and Rubin 1987; Laird 1988; Fitzmaurice et al. 1996). Furthermore, it follows under the monotonic missing pattern (9) that \(Pr(R_{it} = 1\vert y_{i}^{c},x_{i},r_{i,t-1} = 0) = 0\) irrespective of the missing mechanism. Note that the inferences based on the non-ignorable missing mechanism may be quite complicated, and we do not include this complicated mechanism in the current paper.
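A small sketch shows how the monotone pattern can be checked on an observed indicator matrix and how the number of available responses \(T_{i}\) follows from it. The function names and the toy indicator matrices are assumptions for illustration.

```python
import numpy as np

def is_monotone(r):
    """True if every row starts at 1 and never returns to 1 after a 0."""
    r = np.asarray(r)
    starts_observed = np.all(r[:, 0] == 1)
    never_increases = np.all(np.diff(r, axis=1) <= 0)
    return bool(starts_observed and never_increases)

def observed_lengths(r):
    """T_i = number of responses available from each individual."""
    return np.asarray(r).sum(axis=1)

# Rows are individuals, columns are time points t = 1, ..., 4.
r_monotone = np.array([[1, 1, 1, 0],
                       [1, 1, 0, 0],
                       [1, 1, 1, 1]])
r_intermittent = np.array([[1, 0, 1, 1]])

T_i = observed_lengths(r_monotone)
```

Under a monotone pattern the row sum identifies the dropout time exactly; with intermittent missingness it would not, which is one reason the monotone case is simpler to handle.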

3.1 Inferences When Longitudinal Responses Are Subject to MCAR

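When the longitudinal responses are MCAR, \(R_{it}\) does not depend on the past, present, or future responses. In such a situation, \(R_{it}\) and \(Y_{it}\) are independent, implying that

\[E[R_{it}\{Y _{it} -\mu _{it}(\beta )\}] = E[R_{it}]\,E[Y _{it} -\mu _{it}(\beta )] = 0\]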

because \(E[Y _{it} -\mu _{it}(\beta )] = 0\). It is then clear that the inference for β involved in \(\mu _{it}(\beta )\) is not affected by the MCAR mechanism. Thus, one may estimate the regression effects β consistently and efficiently by solving the GQL estimating equation

where, for the \(T_{i}\)-dimensional observed response vector \(y_{i} = (y_{i1},\ldots,y_{iT_{i}})^{\prime} \),

with \(A_{i}(\beta ) = \mbox{ diag}(\sigma _{i,11}(\beta ),\cdots \,,\sigma _{i,tt}(\beta ),\cdots \,,\sigma _{i,T_{i}T_{i}}(\beta ))\), where \(\sigma _{i,tt}(\beta ) = \mbox{ var}[Y _{it}]\). Note that the incomplete data based estimating equation (11) can be written in terms of pretended complete data. To be specific, by using the available responses \(y_{i} = (y_{i1},\ldots,y_{iT_{i}})^{\prime} \) corresponding to the known response indicators

one may write the GQL estimating equation (11) under the MCAR mechanism as

where \(y_{i}^{c} = (y_{i}^{\prime},y_{im}^{\prime})^{\prime}\), with \(y_{im}\) representing the \(T - T_{i}\) dimensional vector of missing responses. These responses are unobserved, but for computational purposes in the present approach one can set \(y_{im}\) to a zero vector without any loss of generality. Let \(\hat{\beta }_{GQL,MCAR}\) denote the solution of (11) or (12). This estimator is asymptotically unbiased and hence consistent for β.
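For the linear link, an estimating equation of this kind has a closed-form solution, which the following sketch illustrates. It assumes that (11) takes the usual GQL form \(\sum _{i}(\partial \mu _{i}^{\prime}/\partial \beta )\Sigma _{i}^{-1}(y_{i} -\mu _{i}) = 0\), that the correlation is stationary with known lag correlations, and that the error variance is 1; the names `gql_linear_mcar` and `autocorr_matrix` and the simulated inputs are hypothetical.

```python
import numpy as np

def autocorr_matrix(rho, T):
    """T x T stationary auto-correlation matrix with rho[l-1] at lag l."""
    full = np.concatenate(([1.0], np.asarray(rho, dtype=float)))
    lags = np.abs(np.subtract.outer(np.arange(T), np.arange(T)))
    return full[lags]

def gql_linear_mcar(X_list, y_list, rho, sigma2=1.0):
    """Solve sum_i X_i' Sigma_i^{-1} (y_i - X_i beta) = 0 for beta.

    X_list[i] is the T_i x p covariate matrix and y_list[i] the T_i
    observed responses of individual i (monotone pattern assumed, so
    the observed responses are the first T_i time points).
    """
    p = X_list[0].shape[1]
    C = autocorr_matrix(rho, len(rho) + 1)   # larger T x T matrix
    lhs = np.zeros((p, p))
    rhs = np.zeros(p)
    for X, y in zip(X_list, y_list):
        T_i = len(y)
        # Use only the leading T_i x T_i block for this individual.
        Sigma_inv = np.linalg.inv(sigma2 * C[:T_i, :T_i])
        lhs += X.T @ Sigma_inv @ X
        rhs += X.T @ Sigma_inv @ y
    return np.linalg.solve(lhs, rhs)

# Illustrative use with simulated covariates and unequal T_i.
rng = np.random.default_rng(0)
beta_true = np.array([1.0, -0.5])
X_list = [rng.normal(size=(T_i, 2)) for T_i in (2, 3, 4, 4, 3)]
y_list = [X @ beta_true + 0.1 * rng.normal(size=len(X)) for X in X_list]
beta_hat = gql_linear_mcar(X_list, y_list, rho=[0.5, 0.25, 0.125])
```

For binary or count responses the mean and variance depend on β, so the same equation would have to be solved iteratively rather than in one step.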

Note that the computation of the \(\tilde{C}_{i}(\hat{\rho },x_{i})\) matrix in (11) in general, i.e., when covariates are time dependent, depends on the specific correlation structure (Sutradhar 2010). In stationary cases as well as in the linear longitudinal model setup, one may, however, compute the stationary correlation matrix \(\tilde{C}_{i}(\hat{\rho })\) by first computing a larger \(\tilde{C}(\hat{\rho })\) matrix for \(\ell= 1,\ldots,T - 1\), and then using the desired part of this large matrix for \(t = 1,\ldots,T_{i}\). Turning back to the computation of the larger matrix with dimension \(T = \mbox{ max}_{1\leq i\leq K}T_{i}\) for \(T_{i} \geq 2\), we exploit the observed response indicator \(r_{it}\) given by

for all t = 1, …, T. For known β and \(\sigma _{itt}\), the ℓth lag correlation estimate \(\hat{\rho }_{\ell}\) for the larger \(\tilde{C}(\hat{\rho })\) matrix may be computed as

(cf. Sneddon and Sutradhar 2004, eqn. (16)) for \(\ell= 1,\ldots,T - 1\). Note that as this estimator contains \(\hat{\beta }_{GQL,MCAR}\), both (11) and (13) have to be computed iteratively until convergence.
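A moment estimator of this type can be sketched as follows. The exact expression used in the code, namely the average lag ℓ cross-product of standardized residuals over jointly observed pairs divided by the average squared standardized residual over observed responses, is an assumption about the form of (13) and is not taken from Sneddon and Sutradhar (2004); the function name and the toy data are likewise illustrative.

```python
import numpy as np

def lag_correlation(y, mu, sigma, r, lag):
    """Moment estimate of the lag-`lag` auto-correlation.

    y, mu, sigma, r : (K, T) arrays of responses, means, variances, and
    response indicators; entries of y with r == 0 are ignored.
    """
    # Standardized residuals, set to zero where the response is missing.
    z = np.where(r == 1, (y - mu) / np.sqrt(sigma), 0.0)
    pair = r[:, :-lag] * r[:, lag:]          # both time points observed
    cross = (pair * z[:, :-lag] * z[:, lag:]).sum() / pair.sum()
    scale = (r * z ** 2).sum() / r.sum()
    return cross / scale

# Toy usage: three individuals, T = 3, the last one drops out after t = 2.
y = np.array([[0.2, 0.5, 0.1], [-1.0, -0.4, -0.6], [0.8, 0.3, 0.0]])
r = np.array([[1, 1, 1], [1, 1, 1], [1, 1, 0]])
mu = np.zeros_like(y)
sigma = np.ones_like(y)
rho1_hat = lag_correlation(y, mu, sigma, r, lag=1)
```

In practice `mu` and `sigma` would be evaluated at the current value of the regression estimate, and the correlation and regression steps would be alternated until convergence, as described above.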

Further note that in the existing GEE approach, instead of (11), one solves the estimating equation

(Liang and Zeger 1986), where \(V _{i}(\beta,\hat{\alpha }) = A_{i}^{1/2}(\beta )Q_{i}(\alpha )A_{i}^{1/2}(\beta )\), with \(Q_{i}(\alpha )\) as the \(T_{i} \times T_{i}\) “working” correlation matrix of \(y_{i}\). It is, however, known that this GEE approach may sometimes encounter consistency breakdown (Crowder 1995) because of the difficulty in estimating the “working” correlation or covariance structure, leading to failure of the estimation of β or non-convergence of the β estimator to β. Furthermore, even when the GEE estimate of β is consistent, it may be less efficient than the simpler independence assumption based moment or quasi-likelihood (QL) estimate (Sutradhar and Das 1999; Sutradhar 2011). It should therefore be clear that the GEE approach, even if corrected for the missing mechanism, may encounter similar consistency and efficiency problems in estimating the regression parameters.

We also remark that even though the non-response probability is not affected by the past history under the MCAR mechanism, the respective efficiency of the GQL and GEE estimators will decrease if \(T_{i}\) is very small compared to the attempted complete duration T, that is, if \(T - T_{i}\) is large. As far as the value of \(T_{i}\) is concerned, it depends on the probability \(P[R_{it} = 1]\), which in general decreases in t due to the monotonic condition (9). This is because under the monotonic property (9) and the MCAR mechanism, one writes

which gets smaller as t gets larger, implying that \(T_{i}\) can be small compared to T if \(P[R_{ij} = 1]\) is not close to 1, for example, \(P[R_{ij} = 1] = 0.90\).
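The decay can be made concrete with a few lines of arithmetic. The sketch assumes that 0.90 is the probability of responding at each occasion given a response at the previous occasion, and that this probability is the same at every occasion; under the monotone pattern the chain rule then gives \(P[R_{it} = 1] = 0.90^{t-1}\). The function name is hypothetical.

```python
import numpy as np

def response_probabilities(p_stay, T):
    """P[R_it = 1] for t = 1, ..., T under monotone MCAR dropout.

    Assumes P[R_i1 = 1] = 1 and a constant conditional probability
    p_stay of responding at time t given a response at time t - 1.
    """
    return p_stay ** np.arange(T)

probs = response_probabilities(0.90, T=4)   # 1, 0.9, 0.81, 0.729
expected_T_i = probs.sum()                   # E[T_i] = sum_t P[R_it = 1]
```

With T = 4 only about 73% of individuals are expected to respond at the last occasion, and the share shrinks geometrically for longer studies.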

3.2 Inferences When Longitudinal Responses Are Subject to MAR

Unlike in the MCAR case, \(R_{it}\) and \(y_{it}\) are not independent under the MAR mechanism. That is

This is because

as \(R_{it}\) does not depend on \(Y_{it}\) by the definition of MAR.

Next due to the monotonic property (9) of the response indicators

and

where \(\lambda _{it}(H_{i,t-1}(y),\beta,\rho )\) is the conditional mean of \(Y_{it}\). In (18), one may, for example, use \(g_{ij}(\gamma )\) as

Now because both \(w_{it}\{H_{i,t-1}(y);\gamma \}\) and \(\lambda _{it}(H_{i,t-1}(y),\beta,\rho )\) are functions of the past history of responses \(H_{i,t-1}(y)\), and because

it then follows from (17), by (18) and (19), that

unless \(w_{it}\{H_{i,t-1}(y);\gamma \}\) is a constant free of \(H_{i,t-1}(y)\), which is, however, impossible under the MAR mechanism, as opposed to the MCAR mechanism. Thus, \(E[R_{it}\{Y _{it} -\mu _{it}(\beta )\}]\neq 0\).
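The contrast between the two mechanisms can be checked by a small Monte Carlo sketch for T = 2. It assumes standard normal responses with zero means and lag 1 correlation `rho`, a constant response probability of 0.7 under MCAR, and a logistic response probability depending on \(y_{i1}\) under MAR; all names and numerical settings are illustrative assumptions rather than the design used in this paper.

```python
import numpy as np

def expit(a):
    return 1.0 / (1.0 + np.exp(-a))

def mean_indicator_times_residual(mechanism, K=200_000, rho=0.5,
                                  gamma=1.0, seed=0):
    """Monte Carlo estimate of E[R_i2 (Y_i2 - mu_i2)] with mu_i2 = 0."""
    rng = np.random.default_rng(seed)
    y1 = rng.normal(size=K)
    y2 = rho * y1 + np.sqrt(1.0 - rho ** 2) * rng.normal(size=K)
    if mechanism == "MCAR":
        p_respond = np.full(K, 0.7)        # free of the response history
    elif mechanism == "MAR":
        p_respond = expit(gamma * y1)      # depends on the past response
    else:
        raise ValueError(f"unknown mechanism: {mechanism}")
    r2 = rng.random(K) < p_respond
    return np.mean(r2 * y2)

bias_mcar = mean_indicator_times_residual("MCAR")
bias_mar = mean_indicator_times_residual("MAR")
```

Under these assumptions `bias_mcar` stays near zero, whereas `bias_mar` is clearly positive because individuals with large \(y_{i1}\) both respond more often and tend to have large \(y_{i2}\).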

3.2.1 Existing Partially Standardized GEE Estimation for Longitudinal Data Subject to MAR

Note, however, that

Now suppose that

implying that \(E[\Delta _{i}\vert H_{i}(y)] = I_{T_{i}}\), where \(H_{i}(y)\) is used to denote the appropriate past history, showing that the response indicators are generated based on observed responses only.

By observing the unconditional expectation property from (23), in the spirit of GEE (Liang and Zeger 1986), Robins et al. (1995, eqn. (10), p. 109) proposed a conditional inverse weights based PSGEE for the estimation of β, which has the form

(see also Paik 1997, eqn. (1), p. 1321). Note that we refer to the GEE in (24) as a partially standardized GEE (PSGEE) because \(V _{i}(\alpha ) =\hat{ \mbox{ cov}}(Y _{i})\) used in this GEE is a partial weight matrix which ignores the missing mechanism, whereas \(\mbox{ cov}[\Delta _{i}(y_{i} -\mu _{i}(\beta ))]\) would be a full weight matrix.
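The inverse probability weights entering \(\Delta _{i}\) can be sketched as follows. The code assumes that \(w_{i1} = 1\), that \(w_{it}\) is the product of \(g_{ij}(\gamma )\) over j = 2, …, t, that \(g_{ij}\) is a logistic function of the previous response with a hypothetical intercept parameter, and that \(\Delta _{i}\) is diagonal with entries \(r_{it}/w_{it}\); these forms and the function names are assumptions for illustration.

```python
import numpy as np

def expit(a):
    return 1.0 / (1.0 + np.exp(-a))

def dropout_weights(y_obs, gamma, intercept=1.0):
    """w_i1, ..., w_iT_i for one individual with observed responses y_obs.

    g_ij = expit(intercept + gamma * y_{i,j-1}) is an assumed logistic
    model for responding at time j given a response at time j - 1.
    """
    y_obs = np.asarray(y_obs, dtype=float)
    g = expit(intercept + gamma * y_obs[:-1])       # g_i2, ..., g_iT_i
    return np.concatenate(([1.0], np.cumprod(g)))   # w_i1 = 1

def delta_matrix(y_obs, gamma, intercept=1.0):
    """Diagonal matrix of r_it / w_it; r_it = 1 for all observed t."""
    return np.diag(1.0 / dropout_weights(y_obs, gamma, intercept))

# With gamma = 0 and intercept = 0 every g_ij equals 0.5.
w = dropout_weights([0.0, 0.0, 0.0], gamma=0.0, intercept=0.0)
Delta = delta_matrix([0.0, 0.0, 0.0], gamma=0.0, intercept=0.0)
```

Responses observed late in the study receive larger weights because they stand in for individuals with a similar history who dropped out earlier.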

Note that over the last decade many researchers have used this PSGEE approach for studying various aspects of longitudinal data subject to non-response. See, for example, the studies by Rotnitzky et al. (1998), Preisser et al. (2002), and Birmingham et al. (2003), among others. However, even if the MAR mechanism is accommodated to develop an estimating function \(\Delta _{i}(y_{i} -\mu _{i}(\beta ))\) that is unbiased for 0 in order to construct the fully standardized GEE (FSGEE), the consistency of the estimator of β may break down (see Crowder 1995 for complete longitudinal models) because of the use of the “working” covariance matrix \(V_{i}(\alpha )\), whereas the true covariance matrix for \(y_{i}\) is given by \(\mbox{ cov}[Y _{i}] = \Sigma _{i}(\rho )\). This can happen in those cases where α is not estimable. To be clear, \(V_{i}(\alpha )\) is simply a “working” covariance matrix of \(y_{i}\), whereas a proper estimating equation must use the correct covariance matrix (or its consistent estimate) of \(\{\Delta _{i}(y_{i} -\mu _{i}(\beta ))\}\).

To understand the roles of both the missing mechanism and the longitudinal correlation structure in constructing a proper estimating equation, we now provide the following three estimating equations for β. The difficulties and/or advantages encountered by these equations are also indicated.

3.2.2 Partially Standardized GQL (PSGQL) Estimation for Longitudinal Data Subject to MAR

When the \(V_{i}(\alpha )\) matrix in (24) is replaced with the true \(T_{i} \times T_{i}\) covariance matrix of the available responses, that is, \(\Sigma _{i}(\rho ) = \mbox{ cov}[Y _{i}]\), one obtains the PSGQL estimating equation given by

which may also produce biased and hence inconsistent estimates. This is because \(\Sigma _{i}(\rho )\) may still be very different from the covariance matrix of the actual variable \(\{\Delta _{i}(y_{i} -\mu _{i}(\beta ))\}\). Thus, if the proportion of missing values is large, one may not get a convergent solution to the estimating equation (25), and the consistency for β would break down (Crowder 1995). The convergence problems encountered by (24) would naturally be more severe, as even in the complete data case \(V_{i}(\alpha )\) may not be estimable.

3.2.3 Partially Standardized Conditional GQL (PSCGQL) Estimation for Longitudinal Data Subject to MAR

Suppose that one uses conditional (on history) variance

to construct the estimating equation. Then following (25), one may write the PSCGQL estimating equation given by

It is, however, seen that

But,

even though

Thus, the PSCGQL estimating equation (27) is not an unbiased equation for 0, and may produce biased estimates.

3.2.3 Computational formula for \(\Sigma _{ich}^{{\ast}}(\beta,\rho,\gamma )\)

For convenience, we first write

It then follows that

Now to compute the covariance matrix in the middle term in the right-hand side of (30), we first re-express \(R_{i}(y_{i} -\mu _{i}(\beta ))\) as

and compute the variances for its components as

because \(R_{i1} = 1\) always and \(y_{i1}\) is random. In the Poisson case \(\sigma _{i,11} =\mu _{i1}\) and in the binary case \(\sigma _{i,11} =\mu _{i1}(1 -\mu _{i1})\), with the appropriate formula for \(\mu _{i1}\) in a given case. Next, for \(t = 2,\ldots,T_{i}\),

where, given the history, \(\lambda _{it}\) and \(\sigma _{ic,tt}\) are the conditional mean and variance of \(y_{it}\), respectively.

Furthermore, all pairwise covariances conditional on the history \(H_{i,t-1}(y)\) may be computed as follows. For u < t,

3.2.4 A Fully Standardized GQL (FSGQL) Approach

All three estimating equations, namely PSGEE (24), PSGQL (25), and PSCGQL (27), may produce biased estimates, PSGEE being the worst. The reasons for the poor performance of PSGEE are twofold: it completely ignores the missing mechanism, and it uses a working correlation matrix to accommodate the longitudinal nature of the available data. As opposed to the PSGEE approach, the PSGQL approach uses the true correlation structure under a class of auto-correlations, but similar to the PSGEE approach it also ignores the missing mechanism. The PSCGQL approach, in turn, uses a correct conditional covariance matrix which accommodates both the missing mechanism and the correlation structure. However, the resulting estimating equation may not be unbiased for zero, because the history of the responses involved in the covariance matrix produces a weighted distance function which is not unbiased.

To remedy the aforementioned problems, it is therefore important to use the correct covariance matrix or its consistent estimate to construct the weight matrix by accommodating both missing mechanism and longitudinal correlations of the repeated data. For this to happen, because the distance function is unconditionally unbiased for zero, i.e.,

one must use the unconditional covariance matrix of \(\left \{\Delta _{i}(Y _{i} -\mu _{i}(\beta ))\right \}\) to compute the incomplete longitudinal weight matrix, for the construction of a desired unbiased estimating equation. Let \(\Sigma _{i}^{{\ast}}(\beta,\rho,\gamma )\) denote this unconditional covariance matrix which is computed by using the formula

In the spirit of Sutradhar (2003), we propose the FSGQL estimating equation for β given by

where \(\Sigma _{i}^{{\ast}}(\beta,\rho,\gamma )\) is yet to be computed. This estimating equation is solved iteratively by using

3.2.4 Computation of \(\Sigma _{i}^{{\ast}}(\beta,\rho,\gamma ) = \mbox{ cov}[\Delta _{i}(y_{i} -\mu _{i})]\)

Rewrite (34) as

where \(\Sigma _{ich}^{{\ast}}(\beta,\rho )\) is constructed by (30) by using the formulas from (31) to (33), and \(E_{ich}(\beta,\rho )\) has the form \(E_{ich}(\beta,\rho ) = [(y_{i1} -\mu _{i1}),(\lambda _{i2} -\mu _{i2}),\ldots,(\lambda _{iT_{i}} -\mu _{iT_{i}})]^{\prime} \).

It then follows that the components of the \(T_{i} \times T_{i}\) unconditional covariance matrix \(\Sigma _{i}^{{\ast}}(\beta,\rho,\gamma )\) are given by

3.2.4.1 (a). Example of \(\Sigma _{i}^{{\ast}}(\beta,\rho,\gamma )\) under linear longitudinal models with T = 2

Note that \(R_{i1} = r_{i1} = 1\) always. But \(R_{i2}\) can be 1 or 0, and under MAR its probability depends on \(y_{i1}\). Consider

by (20), yielding

(see also (18)). With regard to the longitudinal model for the potential responses \(y_{i1},y_{i2}\), along with their non-stationary (time-dependent) covariates, consider the model as:

Assuming normal distribution, one may write

When the response \(y_{it}\) depends on its immediate history, the conditional mean has the formula

implying that the unconditional mean is given by \(\mu _{it} = E[Y _{it}] = x^{\prime} _{it}\beta\), which is the same as the mean in (40), as expected.

Now following (38), we provide the elements of the \(2 \times 2\) matrix \(\Sigma _{i}^{{\ast}}(\beta,\rho,\gamma )\) as

where

\(g_{N}(y_{i1})\) being the normal (say) density of y i1. Thus, \(\Sigma _{i}^{{\ast}}(\beta,\rho,\gamma )\) has the formula

Note that in the complete longitudinal case \(w_{i2}\) would be 1 and \(\sigma _{i22}^{{\ast}}\) would reduce to \(\frac{{\sigma }^{2}} {1{-\rho }^{2}}\), leading to

which is free from β in this linear model case, and the PSGEE (24) uses a “working” version of (44), namely

whereas the FSGQL estimating equation (35) would use \(\Sigma _{i}^{{\ast}}(\beta,\rho,\gamma )\) from (43). This shows the effect of missing mechanism in the construction of the weight matrix for the estimating equation.

3.2.4.2 (b). Example of \(\Sigma _{i}^{{\ast}}(\beta,\rho,\gamma )\) under binary longitudinal AR(1) model with T = 2

Consider a binary AR(1) model with

where \(\mu _{it} = \frac{\exp (x^{\prime} _{it}\beta )} {1+\exp (x^{\prime} _{it}\beta )}\), for all \(t = 1,\ldots,T\).

Now considering \(y_{i1}\) as fixed, by using (31)–(33) we first compute the history-dependent conditional covariance matrix \(\Sigma _{ich}(\beta,\rho ) = \mbox{ cov}[\{\Delta _{i}(y_{i} -\mu _{i})\}\vert H_{i}(y)]\) as:

yielding

Next because

one obtains

By combining (48) and (49), it follows from (38) that the 2 × 2 unconditional covariance matrix \(\Sigma _{i}^{{\ast}}(\beta,\rho,\gamma )\) has the form

where

3.2.4.2.1 General formula for \(\Sigma _{i}^{{\ast}}(\beta,\rho,\gamma )\) under the binary AR(1) model

In general, it follows from (38) that the elements of the \(T_{i} \times T_{i}\) unconditional covariance matrix \(\Sigma _{i}^{{\ast}}(\beta,\rho,\gamma )\) under the binary AR(1) model are given by

3.3 An Empirical Illustration

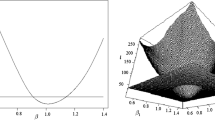

First, to illustrate the performance of the existing PSGEE (24) approach, we refer to some of the simulation results reported by Sutradhar and Mallick (2010). They showed that this approach may produce highly biased and hence inconsistent regression estimates. In fact, these authors also demonstrated that the independence-assumption-based PSGEE(I) approach produces less biased estimates than PSGEE approaches based on any “working” correlation structure. As an example, we consider here their simulation design, chosen as

3.3 Simulation Design

K = 100, T = 4, p = 2, q = 1, γ = 4, ρ = 0.4, 0.8, \(\beta _{1} =\beta _{2} = 0\) along with two time-dependent covariates:

and

For details on the MAR-based generation of incomplete binary data, see Sutradhar and Mallick (2010, Sect. 2.1). Based on 1,000 simulations, the PSGEE estimates obtained from (24), and the PSGEE(I) estimates obtained from (24) by using zero correlation, are displayed in Table 1.

These results show that the PSGEE estimates for \(\beta _{1} = 0\) and \(\beta _{2} = 0\) are highly biased. For example, when ρ = 0.8, the estimates of \(\beta _{1}\) and \(\beta _{2}\) are −0.213 and −0.553, respectively. These estimates are inconsistent and unacceptable. Note that these biases are caused by the wrong correlation matrix used to construct the PSGEE (24), whereas this PSGEE provides almost unbiased estimates when the data are treated as independent, even though they truly are not. However, the standard errors of the PSGEE(I) estimates appear to be large, and hence this approach may provide inefficient estimates. In fact, when the proportion of missing values is large, the PSGEE(I) approach will also encounter estimation breakdown or produce biased estimates. This is verified by a simulation study reported by Mallick et al. (2013). The reason for the inconsistency encountered by PSGEE and PSGEE(I) is the failure to accommodate the MAR mechanism in the covariance matrix used as the longitudinal weights.

As a remedy to this inconsistency, we have developed an FSGQL (35) estimating equation by accommodating both the MAR mechanism and the longitudinal correlation structure in constructing the weight matrix \(\Sigma _{i}^{{\ast}}(\beta,\rho,\gamma )\). This FSGQL equation would provide consistent and efficient regression estimates. For simplicity, Mallick et al. (2013) have demonstrated through a simulation study that the FSGQL(I) approach, which uses ρ = 0 in \(\Sigma _{i}^{{\ast}}(\beta,\rho = 0,\gamma )\), produces almost unbiased estimates with small variances. This suggests that ignoring the missing mechanism in constructing the weight matrix is detrimental, whereas ignoring the longitudinal correlations does not appear to cause any significant loss.
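To make the data-generating scheme behind such a simulation concrete, the sketch below produces incomplete binary AR(1) data with K = 100, T = 4, γ = 4 and \(\beta _{1} =\beta _{2} = 0\). It is an illustration under assumptions rather than the generator used in the cited studies: the linear conditional probability form of the binary AR(1) model, the logistic response-indicator model \(\exp(1+\gamma y_{i,t-1})/\{1+\exp(1+\gamma y_{i,t-1})\}\) with monotone dropout, and both covariates are hypothetical placeholders.

```python
import math
import random


def expit(a):
    return 1.0 / (1.0 + math.exp(-a))


def simulate_mar_binary(K=100, T=4, beta=(0.0, 0.0), rho=0.4, gamma=4.0, seed=2013):
    """Generate binary AR(1) responses with monotone MAR dropout (sketch)."""
    rng = random.Random(seed)
    data = []
    for i in range(K):
        # Hypothetical covariates: a group indicator and a scaled time index.
        x = [(1.0 if i < K // 2 else 0.0, (t + 1) / T) for t in range(T)]
        mu = [expit(beta[0] * x1 + beta[1] * x2) for x1, x2 in x]
        y, r = [], []
        for t in range(T):
            # Assumed AR(1) conditional probability of y_it = 1.
            lam = mu[0] if t == 0 else mu[t] + rho * (y[t - 1] - mu[t - 1])
            if not 0.0 <= lam <= 1.0:
                raise ValueError("rho is outside the range allowed by the means")
            y.append(1 if rng.random() < lam else 0)
            if t == 0:
                r.append(1)                       # R_i1 = 1 always
            elif r[t - 1] == 0:
                r.append(0)                       # monotone: once missing, stays missing
            else:
                # MAR: response probability depends on the observed y_{i,t-1}.
                g = expit(1.0 + gamma * y[t - 1])
                r.append(1 if rng.random() < g else 0)
        y_obs = [yt if rt == 1 else None for yt, rt in zip(y, r)]
        data.append({"x": x, "r": r, "y_obs": y_obs})
    return data


if __name__ == "__main__":
    sample = simulate_mar_binary(rho=0.8)
    n_missing = sum(rt == 0 for d in sample for rt in d["r"])
    print("missing responses:", n_missing, "of", len(sample) * 4)
```

Each record keeps the response indicators `r` alongside the observed responses, so that estimating equations weighted by the inverse response probabilities can be formed from the same output.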

References

Birmingham, J., Rotnitzky, A., Fitzmaurice, G.M.: Pattern-mixture and selection models for analysing longitudinal data with monotone missing patterns. J. R. Stat. Soc. Ser. B 65, 275–297 (2003)

Crowder, M.: On the use of a working correlation matrix in using generalized linear models for repeated measures. Biometrika 82, 407–410 (1995)

Fitzmaurice, G.M., Laird, N.M., Zahner, G.E.P.: Multivariate logistic models for incomplete binary responses. J. Am. Stat. Assoc. 91, 99–108 (1996)

Ibrahim, J.G., Lipsitz, S.R., Chen, M.H.: Missing covariates in generalized linear models when the missing data mechanism is non-ignorable. J. R. Stat. Soc. Ser. B 61, 173–190 (1999)

Ibrahim, J.G., Chen, M.H., Lipsitz, S.R.: Missing responses in generalized linear mixed models when the missing data mechanism is non-ignorable. Biometrika 88, 551–564 (2001)

Krishnamoorthy, K., Pannala, M.K.: Confidence estimation of a normal mean vector with incomplete data. Can. J. Stat. 27, 395–407 (1999)

Laird, N.M.: Missing data in longitudinal studies. Stat. Med. 7, 305–315 (1988)

Liang, K.-Y., Zeger, S.L.: Longitudinal data analysis using generalized linear models. Biometrika 73, 13–22 (1986)

Little, R.J.A.: A test of missing completely at random for multivariate data with missing values. J. Am. Stat. Assoc. 83, 1198–1202 (1988)

Little, R.J.A.: Modeling the drop-out mechanism in repeated-measures studies. J. Am. Stat. Assoc. 90, 1112–1121 (1995)

Little, R.J.A., Rubin, D.B.: Statistical Analysis with Missing Data. Wiley, New York (1987)

Lord, F.M.: Estimation of parameters from incomplete data. J. Am. Stat. Assoc. 50, 870–876 (1955)

Mallick, T., Farrell, P.J., Sutradhar, B.C.: Consistent estimation in incomplete longitudinal binary models. In: Sutradhar, B.C. (ed.) ISS-2012 Proceedings Volume On Longitudinal Data Analysis Subject to Measurement Errors, Missing Values, and/or Outliers. Springer Lecture Notes Series, pp. 125–146. Springer, New York (2013)

Mehta, J.S., Gurland, J.: A test of equality of means in the presence of correlation and missing values. Biometrika 60, 211–213 (1973)

Meng, X.L.: Multiple-imputation inferences with uncongenial sources of input. Stat. Sci. 9, 538–573 (1994)

Morrison, D.F.: A test for equality of means of correlated variates with missing data on one response. Biometrika 60, 101–105 (1973)

Naik, U.D.: On testing equality of means of correlated variables with incomplete data. Biometrika 62, 615–622 (1975)

Paik, M.C.: The generalized estimating equation approach when data are not missing completely at random. J. Am. Stat. Assoc. 92, 1320–1329 (1997)

Preisser, J.S., Lohman, K.K., Rathouz, P.J.: Performance of weighted estimating equations for longitudinal binary data with drop-outs missing at random. Stat. Med. 21, 3035–3054 (2002)

Robins, J.M., Rotnitzky, A., Zhao, L.P.: Analysis of semiparametric regression models for repeated outcomes in the presence of missing data. J. Am. Stat. Assoc. 90, 106–121 (1995)

Rotnitzky, A., Robins, J.M., Scharfstein, D.O.: Semi-parametric regression for repeated outcomes with nonignorable nonresponse. J. Am. Stat. Assoc. 93, 1321–1339 (1998)

Rubin, D.B.: Inference and missing data (with discussion). Biometrika 63, 581–592 (1976)

Rubin, D.B., Schenker, N.: Multiple imputation for interval estimation from simple random sample with ignorable nonresponses. J. Am. Stat. Assoc. 81, 366–374 (1986)

Sneddon, G., Sutradhar, B.C.: On semi-parametric familial longitudinal models. Stat. Probab. Lett. 69, 369–379 (2004)

Sutradhar, B.C.: An overview on regression models for discrete longitudinal responses. Stat. Sci. 18, 377–393 (2003)

Sutradhar, B.C.: Inferences in generalized linear longitudinal mixed models. Can. J. Stat. 38, 174–196 (2010), Special issue

Sutradhar, B.C.: Dynamic Mixed Models for Familial Longitudinal Data. Springer, New York (2011)

Sutradhar, B.C., Das, K.: On the efficiency of regression estimators in generalized linear models for longitudinal data. Biometrika 86, 459–465 (1999)

Sutradhar, B.C., Mallick, T.S.: Modified weights based generalized quasilikelihood inferences in incomplete longitudinal binary models. Can. J. Stat. 38, 217–231 (2010), Special issue

Troxel, A.B., Lipsitz, S.R., Harrington, D.P.: Marginal models for the analysis of longitudinal measurements subject to non-ignorable and non-monotonic missing data. Biometrika 85, 661–672 (1998)

Troxel, A.B., Lipsitz, S.R., Brennan, T.A.: Weighted estimating equations with nonignorably missing response data. Biometrics 53, 857–869 (1997)

Wang, Y.-G.: Estimating equations with nonignorably missing response data. Biometrics 55, 984–989 (1999)

Acknowledgment

The author fondly acknowledges the stimulating discussion by the audience of the symposium and thanks them for their comments and suggestions.

Copyright information

© 2013 Springer Science+Business Media New York

About this paper

Cite this paper

Sutradhar, B.C. (2013). Inference Progress in Missing Data Analysis from Independent to Longitudinal Setup. In: Sutradhar, B. (eds) ISS-2012 Proceedings Volume On Longitudinal Data Analysis Subject to Measurement Errors, Missing Values, and/or Outliers. Lecture Notes in Statistics(), vol 211. Springer, New York, NY. https://doi.org/10.1007/978-1-4614-6871-4_5

DOI: https://doi.org/10.1007/978-1-4614-6871-4_5

Publisher Name: Springer, New York, NY

Print ISBN: 978-1-4614-6870-7

Online ISBN: 978-1-4614-6871-4

eBook Packages: Mathematics and Statistics (R0)