Abstract

Let (X n ) n ≥ 0 be an irreducible, aperiodic, and homogeneous binary Markov chain and let LI n be the length of the longest (weakly) increasing subsequence of (X k )1 ≤ k ≤ n . Using combinatorial constructions and weak invariance principles, we present elementary arguments leading to a new proof that (after proper centering and scaling) the limiting law of LI n is the maximal eigenvalue of a 2 ×2 Gaussian random matrix. In fact, the limiting shape of the RSK Young diagrams associated with the binary Markov random word is the spectrum of this random matrix.

Received 9/29/2011; Accepted7/1/2012; Final 7/16/2012

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

- Longest increasing subsequence

- Markov chains

- Functional central limit theorem

- Random matrices

- Young diagrams

1 Introduction

The identification of the limiting distribution of the length of the longest increasing subsequence of a random permutation or of a random word has attracted a lot of interest in the past decade, in particular in light of its connections with random matrices (see [1–4, 6, 8, 12–15, 17, 18]). For random words, both the iid uniform and nonuniform settings are understood, leading respectively to the maximal eigenvalue of a traceless (or generalized traceless) element of the Gaussian unitary ensemble (GUE) as limiting laws of LI n . In a dependent framework, Kuperberg [16] conjectured that if the word is generated by an irreducible, doubly stochastic, cyclic, Markov chain with state space an ordered m-letter alphabet, then the limiting distribution of the length LI n is still that of the maximal eigenvalue of a traceless m ×m element of the GUE. More generally, the conjecture asserts that the shape of the Robinson–Schensted–Knuth (RSK) Young diagrams associated with the Markovian random word is that of the joint distribution of the eigenvalues of a traceless m ×m element of the GUE. For m = 2, Chistyakov and Götze [7] positively answered this conjecture, and in the present paper this result is rederived in an elementary way.

The precise class of homogeneous Markov chains with which Kuperberg’s conjecture is concerned is more specific than the ones we shall study. The irreducibility of the chain is a basic property we certainly must demand: each letter has to occur at some point following the occurrence of any given letter. The cyclic (also called circulant) criterion, i.e., the Markov transition matrix P has entries satisfying \(p_{i,j} = p_{i+1,j+1}\), for 1 ≤ i, j ≤ m (where \(m + 1 = 1\)), ensures a uniform stationary distribution.

Let us also note that Kuperberg implicitly assumes the Markov chain to also be aperiodic. Indeed, the simple 2-state Markov chain for the letters α1 and α2 described by \(\mathbb{P}(X_{n+1} = \alpha _{i}\vert X_{n} = \alpha _{j}) = 1\), for i≠j, produces a sequence of alternating letters, so that LI n is always either n ∕ 2 or \(n/2 + 1\), for n even, and \((n + 1)/2\), for n odd, and so has a degenerate limiting distribution. Even though this Markov chain is irreducible and cyclic, it is periodic.

By the end of this introduction, the reader might certainly have wondered how the binary results do get modified for ordered alphabets of arbitrary fixed size m. As shown in [10], for m = 3, Kuperberg’s conjecture is indeed true. However, for m ≥ 4, this is no longer the case; and some, but not all, cyclic Markov chains lead to a limiting law as in the iid uniform case.

2 Combinatorics

As in [9], one can express LI n in a combinatorial manner. For convenience, this short section recapitulates that development.

Let (X n ) n ≥ 1 consist of a sequence of values taken from an m-letter ordered alphabet, \(\mathcal{A}_{m} =\{ \alpha _{1} < \alpha _{2} < \cdots < \alpha _{m}\}\). Let a k r be the number of occurrences of α r among (X i )1 ≤ i ≤ k . Each increasing subsequence of (X i )1 ≤ i ≤ k consists simply of consecutive identical values, with these values forming an increasing subsequence of α r . Moreover, the number of occurrences of \(\alpha _{r} \in \{ \alpha _{1},\ldots,\alpha _{m}\}\) among (X i ) k + 1 ≤ i ≤ ℓ , where 1 ≤ k < ℓ ≤ n, is simply a ℓ r − a k r. The length of the longest increasing subsequence of \(X_{1},X_{2},\ldots,X_{n}\) is thus given by

i.e.,

where a 0 r = 0.

For \(i = 1,\ldots,n\) and \(r = 1,\ldots,m - 1\), let

and let \(S_{k}^{r} =\sum _{ i=1}^{k}Z_{i}^{r}\), \(k = 1,\ldots,n\), with also S 0 r = 0. Then clearly \(S_{k}^{r} = a_{k}^{r} - a_{k}^{r+1}\). Hence,

By the telescoping nature of the sum \(\sum _{k=r}^{m-1}S_{n}^{k} =\sum _{ k=r}^{m-1}(a_{n}^{k} - a_{n}^{k+1})\), we find that, for each 1 ≤ r ≤ m − 1, \(a_{n}^{r} = a_{n}^{m} +\sum _{ k=r}^{m-1}S_{n}^{k}\). Since \(a_{k}^{1},\ldots,a_{k}^{m}\) must evidently sum up to k, we have

Solving for a n m gives us

Substituting into Eq. (23.4), we finally obtain

As emphasized in [9], Eq. (23.5) is of a purely combinatorial nature or, in more probabilistic terms, is of a pathwise nature. We now proceed to analyze Eq. (23.5) for a binary Markovian sequence.

3 Binary Markovian Alphabet

In the context of binary Markovian alphabets, (X n ) n ≥ 0 is described by the following transition probabilities between the two states (which we identify with the two letters α1 and α2): \(\mathbb{P}(X_{n+1} = \alpha _{2}\vert X_{n} = \alpha _{1}) = a\) and \(\mathbb{P}(X_{n+1} = \alpha _{1}\vert X_{n} = \alpha _{2}) = b\), where 0 < a + b < 2. We later examine the degenerate cases \(a = b = 0\) and \(a = b = 1\). In keeping with the common usage within the Markov chain literature, we begin our sequence at n = 0, although our focus will be on n ≥ 1. Denoting by (p n 1, p n 2) the vector describing the probability distribution on {α1, α2} at time n, we have

The eigenvalues of the matrix in Eq. (23.6) are λ1 = 1 and \(-1 < \lambda _{2} = 1 - a - b < 1\), with respective left eigenvectors \((\pi _{1},\pi _{2}) = (b/(a + b),a/(a + b))\) and (1, − 1). Moreover, (π1, π2) is also the stationary distribution. Given any initial distribution (p 0 1, p 0 2), we find that

as n → ∞, since | λ2 | < 1.

Our goal is now to use these probabilistic expressions to describe the random variables Z k 1 and S k 1 defined in the previous section. (We retain the redundant superscript “1” in Z k 1 and S k 1 in the interest of uniformity.)

Setting \(\beta = ap_{0}^{1} - bp_{0}^{2}\), we easily find that

for each 1 ≤ k ≤ n. Thus,

and so \(\mathbb{E}S_{k}^{1}/k \rightarrow (b - a)/(a + b)\), as k → ∞.

Turning to the second moments of Z k 1 and S k 1, first note that \(\mathbb{E}{(Z_{k}^{1})}^{2} = 1\), since (Z k 1)2 = 1 a.s. Next, we consider \(\mathbb{E}Z_{k}^{1}Z_{\ell}^{1}\), for k < ℓ. Using the Markovian structure of (X n ) n ≥ 0, it quickly follows that

For simplicity, we will henceforth assume that our initial distribution is the stationary one, i.e., (p 0 1, p 0 2) = (π1, π2). (This assumption can be dropped as explained in the Concluding Remarks of [10].) Under this assumption, β = 0, \(\mathbb{E}S_{k}^{1} = k\mu \), where \(\mu = \mathbb{E}Z_{k}^{1} = (b - a)/(a + b)\), and Eq. (23.10) simplifies to

We can now compute \(\mathbb{E}Z_{k}^{1}Z_{\ell}^{1}\):

Hence, recalling that β = 0,

for all k ≥ 1 and, for k < ℓ, the covariance of Z k 1 and Z ℓ 1 is

Proceeding to the covariance structure of S k 1, we first find that

Next, for k < ℓ, and using Eqs. (23.14) and (23.15), the covariance of S k 1 and S ℓ 1 is given by

From Eqs. (23.15) and (23.16) we see that, as k → ∞,

and, moreover, as k ∧ ℓ → ∞,

When a = b, \(\mathbb{E}S_{k}^{1} = 0\), and in Eq. (23.17) the asymptotic variance becomes

For a small, we have a “lazy” Markov chain, i.e., a Markov chain which tends to remain in a given state for long periods of time. In this regime, the random variable S k 1 has long periods of increase followed by long periods of decrease. In this way, linear asymptotics of the variance with large constants occur. If, on the other hand, a is close to 1, the Markov chain rapidly shifts back and forth between α1 and α2, and so the constant associated with the linearly increasing variance of S k 1 is small.

As in [9], Brownian functionals play a central rôle in describing the limiting distribution of LI n . To move towards a Brownian functional expression for the limiting law of LI n , define the polygonal function

for 0 ≤ t ≤ 1. In our finite-state, irreducible, aperiodic, stationary Markov chain setting, we may conclude that \(\hat{B}_{n} \Rightarrow B\), as desired. (See, e.g., the more general settings for Gordin’s martingale approach to dependent invariance principles and the stationary ergodic invariance principle found in Theorem 19.1 of [5].)

Turning now to LI n , we see that for the present 2-letter situation, Eq. (23.5) simply becomes

To find the limiting distribution of LI n from this expression, recall that \(\pi _{1} = b/(a + b)\), \(\pi _{2} = a/(a + b)\), \(\mu = \pi _{1} - \pi _{2} = (b - a)/(a + b)\), \({\sigma }^{2} = 4ab/{(a + b)}^{2}\), and that \(\lambda _{2} = 1 - a - b\). Define πmax = max{π1, π2} and \(\tilde{{\sigma }}^{2} = {\sigma }^{2}(1 + \lambda _{2})/(1 - \lambda _{2})\). Rewriting Eq. (23.19) as

LI n becomes

This immediately gives

Let us examine Eq. (23.21) on a case-by-case basis. First, if \(\pi _{\mathrm{max}} = \pi _{1} = \pi _{2} = 1/2\), i.e., if a = b, then σ = 1 and \(\tilde{\sigma } = (1 - a)/a\), and so Eq. (23.21) becomes

Then, by the invariance principle and the continuous mapping theorem,

Next, if πmax = π2 > π1, Eq. (23.21) becomes

On the other hand, if πmax = π1 > π2, Eq. (23.21) becomes

In both Eqs. (23.24) and (23.25) we have a term in our maximal functional which is linear in t or 1 − t, with a negative slope. We now show, in an elementary fashion, that in both cases, as n → ∞, the maximal functional goes to zero in probability.

Consider first Eq. (23.24). Let \(c_{n} = \sqrt{n}(\pi _{\mathrm{max}} - \pi _{1})/\tilde{\sigma } > 0,\) and for any c > 0, let \(M_{c} =\max\limits_{0\leq t\leq 1}(B(t) - ct)\), where B(t) is a standard Brownian motion. Now for n large enough,

a.s., for all 0 ≤ t ≤ 1. Then for any z > 0 and n large enough,

and so by the Invariance Principle and the Continuous Mapping Theorem,

Now, as is well known, \(\mathbb{P}(M_{c} > z) \rightarrow 0\) as c → ∞. One can confirm this intuitive fact with the following simple argument. For z > 0, c > 0, and 0 < ε < 1, we have that

But, as c and ε are arbitrary, we can first take the limsup of Eq. (23.28) as c → ∞, and then let ε → 0, proving the claim.

We have thus shown that

and since the functional clearly is equal to zero when t = 0, we have

as n → ∞. Thus, by the continuous mapping theorem and the converging together lemma, we obtain the weak convergence result

Lastly, consider Eq. (23.25). Here we need simply note the following equality in law, which follows from the stationary and Markovian nature of the underlying sequence (X n ) n ≥ 0:

for \(t = 0,1/n,\ldots,(n - 1)/n,1\). With a change of variables \((u = 1 - t)\) and noting that B(t) and − B(t) are equal in law, our previous convergence result Eq. (23.29) implies that

as n → ∞. Our limiting functional is thus of the form

Since B(1) is simply a standard normal random variable, the different signs in Eqs. (23.30) and (23.33) are inconsequential.

Finally, consider the degenerate cases. If either a = 0 or b = 0, then the sequence (X n ) n ≥ 0 will be a.s. constant, regardless of the starting state, and so LI n ∼ n. On the other hand, if \(a = b = 1\), then the sequence oscillates back and forth between α1 and α2, so that LI n ∼ n ∕ 2. Combining these trivial cases with the previous development gives

Theorem 3.1.

Let (X n)n≥0 be a 2-state Markov chain, with \(\mathbb{P}(X_{n+1}\,=\,\alpha _{2}\vert X_{n} = \alpha _{1})\) = a and \(\mathbb{P}(X_{n+1} = \alpha _{1}\vert X_{n} = \alpha _{2}) = b\) . Let the law of X 0 be the invariant distribution \((\pi _{1},\pi _{2}) = (b/(a + b),a/(a + b))\) , for 0 < a + b ≤ 2, and be (π 1 ,π 2 ) = (1,0), for \(a = b = 0\) . Then, for a = b > 0,

where (B(t)) 0≤t≤1 is a standard Brownian motion. For a≠b or \(a = b = 0,\)

with π max = max {π 1 ,π 2 } and where \(N(0,\tilde{{\sigma }}^{2}/4)\) is a centered normal random variable with variance \(\tilde{{\sigma }}^{2}/4 = ab(2 - a - b)/{(a + b)}^{3}\) , for a≠b, and \(\tilde{{\sigma }}^{2} = 0\) , for \(a = b = 0\) . (If \(a = b = 1\) or \(\tilde{{\sigma }}^{2} = 0\) , then the distributions in Eqs. (23.34) and (23.35), respectively, are understood to be degenerate at the origin.)

To extend this result to the entire RSK Young diagrams, let us introduce the following notation. By

we shall indicate the weak convergence of the joint law of the k-vector \((Y _{n}^{(1)},Y _{n}^{(2)},\ldots,Y _{n}^{(k)})\) to that of \((Y _{\infty }^{(1)},Y _{\infty }^{(2)},\ldots,Y _{\infty }^{(k)})\), as n → ∞. Since LI n is the length of the top row of the associated Young diagrams, the length of the second row is simply n − LI n . Denoting the length of the ith row by R n i, Eq. (23.36), together with an application of the Cramér–Wold theorem, recovers the result of Chistyakov and Götze [7] as part of the following easy corollary, which is in fact equivalent to Theorem 3.1:

Corollary 3.1.

For the sequence in Theorem 3.1, if a = b > 0, then

where the law of Y ∞ is supported on the second main diagonal of \({\mathbb{R}}^{2}\) and with

If a≠b or \(a = b = 0\) , then setting π min = min {π 1 ,π 2 }, we have

where \(\tilde{\Sigma }\) is the covariance matrix

where \(\tilde{{\sigma }}^{2} = 4ab(2 - a - b)/{(a + b)}^{3}\) , for a≠b, and \(\tilde{{\sigma }}^{2} = 0\) , for \(a = b = 0\) .

Remark 3.1.

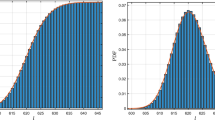

The joint distributions in Eqs. (23.37) and (23.38) are of course degenerate, in that the sum of the two components is a.s. identically zero in each case. In Eq. (23.37), the density of the first component of R ∞ is easy to find and is given by (e.g., see [11])

As in Chistyakov and Götze [7], Eq. (23.37) can then be stated as follows: for any bounded, continuous function \(g : {\mathbb{R}}^{2} \rightarrow \mathbb{R}\),

where ϕGUE, 2 is the density of the eigenvalues of the 2 ×2 GUE and is given by

To see the GUE connection more explicitly, consider the 2 ×2 traceless GUE matrix

where X 1, X 2, Y, and Z are centered, normal random variables. Since \(\mbox{ Corr }(X_{1},X_{2}) = -1\), the largest eigenvalue of M 0 is

almost surely, so that λ1, 0 2 ∼ χ3 2 if \(\mbox{ Var }X_{1} = \mbox{ Var }Y = \mbox{ Var }Z = 1\). Hence, up to a scaling factor, the density of λ1, 0 is given by Eq. (23.39). Next, let us perturb M 0 to

where α and β are constants, G is a standard normal random variable independent of M 0, and I is the identity matrix. The covariance of the diagonal elements of M is then computed to be \(\rho := {\alpha }^{2} - {\beta }^{2}\). Hence, to obtain a desired value of ρ, we may take \(\alpha = \sqrt{(1 + \rho )/2}\) and \(\beta = \sqrt{(1 - \rho )/2}.\) Clearly, the largest eigenvalue of M can then be expressed as

At one extreme, \(\rho = -1\), we recover λ1 = λ1, 0. At the other extreme, ρ = 1, we obtain λ1 = Z. Midway between these two extremes, at ρ = 0, we have a standard GUE matrix, so that

References

Baik, J., Deift, P., Johansson, K.: On the distribution of the length of the longest increasing subsequence of random permutations. J. Am. Math. Soc. 12(4), 1119–1178 (1999)

Baik, J. Deift, P., Johansson, K.: On the distribution of the length of the second row of a Young diagram under Plancherel measure. Geom. Funct. Anal. 10(4), 702–731 (2000)

Baik, J. Deift, P., Johansson, K.: Addendum to: on the distribution of the length of the second row of a Young diagram under Plancherel measure. Geom. Funct. Anal. 10(6), 1606–1607 (2000)

Baryshnikov, Y.: GUEs and queues. Probab. Theory Related Fields 119(2), 256–274 (2001)

Billingsley, P.: Convergence of probability measures. In: Wiley Series in Probability and Statistics: Probability and Statistics, 2nd edn. Wiley, New York (1999) (A Wiley-Interscience Publication)

Borodin, A., Okounkov, A., Olshanski, G.: Asymptotics of Plancherel measures for symmetric groups. J. Am. Math. Soc. 13(3), 481–515 (electronic) (2000)

Chistyakov, G.P., Götze, F.: Distribution of the shape of Markovian random words. Probab. Theory Related Fields 129(1), 18–36 (2004)

Gravner, J., Tracy, C., Widom, H.: Limit theorems for height fluctuations in a class of discrete space and time growth models. J. Statist. Phys. 102(5–6), 1085–1132 (2001)

Houdré, C., Litherland, T.: On the longest increasing subsequence for finite and countable alphabets. In: High Dimensional Probability V: The Luminy Volume, pp. 185–212. Institute of Mathematical Statistics, Beachwood (2009)

Houdré C. and Litherland, T., On the limiting shape of Young diagrams associated with Markov random words. ArXiv #math.Pr/1110.4570 (2011)

Houdré C., Lember, J., Matzinger, H.: On the longest common increasing binary subsequence. C. R. Acad. Sci. Paris 343(9), 589–594 (2006)

Houdré C., Xu, H.: On the limiting shape of Young diagrams associated with inhomogeneous random words, HDP VI: The Banff Volume (Progress in Probability), Birkhaüser (2013)

Its, A.R., Tracy, C.A., Widom, H.: Random words, Toeplitz determinants, and integrable systems. I. In: Random Matrix Models and their Applications, vol. 40 of Mathematical Sciences Research Institute Publications, pp. 245–258. Cambridge University Press, Cambridge (2001)

Its, A.R., Tracy, C.A., Widom, H.: Random words, Toeplitz determinants, and integrable systems. II. Advances in Nonlinear Mathematics and Science, Phys. D 152/153, 199–224 (2001)

Johansson, K.: Discrete orthogonal polynomial ensembles and the Plancherel measure. Ann. Math. 153(2), 259–296 (2001)

Kuperberg, G.: Random words, quantum statistics, central limits, random matrices. Methods. Appl. Anal. 9(1), 99–118 (2002)

Okounkov, A.: Random matrices and random permutations. Int. Math. Res. Notices 2000(20), 1043–1095 (2000)

Tracy, C.A., Widom, H.: On the distributions of the lengths of the longest monotone subsequences in random words. Probab. Theory Related Fields 119(3), 350–380 (2001)

Acknowledgements

Research supported in part by the NSA Grant H98230-09-1-0017.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2013 Springer Science+Business Media New York

About this paper

Cite this paper

Houdré, C., Litherland, T.J. (2013). Asymptotics for the Length of the Longest Increasing Subsequence of a Binary Markov Random Word. In: Viens, F., Feng, J., Hu, Y., Nualart , E. (eds) Malliavin Calculus and Stochastic Analysis. Springer Proceedings in Mathematics & Statistics, vol 34. Springer, Boston, MA. https://doi.org/10.1007/978-1-4614-5906-4_23

Download citation

DOI: https://doi.org/10.1007/978-1-4614-5906-4_23

Published:

Publisher Name: Springer, Boston, MA

Print ISBN: 978-1-4614-5905-7

Online ISBN: 978-1-4614-5906-4

eBook Packages: Mathematics and StatisticsMathematics and Statistics (R0)