Abstract

The survey presents an overview of approximation algorithms for the classical bin packing problem and reviews the more important results on performance guarantees. Both on-line and off-line algorithms are analyzed. The investigation is extended to variants of the problem through an extensive review of dual versions, variations on bin sizes and item packing, as well as those produced by additional constraints. The bin packing papers are classified according to a novel scheme that allows one to create a compact synthesis of the topic, the main results, and the corresponding algorithms.


1 Introduction

In the classical version of the bin packing problem, one is given an infinite supply of bins with capacity C and a list L of n items with sizes no larger than C: the problem is to pack the items into a minimum number of bins so that the sum of the sizes in each bin is no greater than C. In simpler terms, a set of numbers is to be partitioned into a minimum number of blocks subject to a sum constraint common to each block. Bin packing rather than partitioning terminology will be used, as it eases considerably the problem of describing and analyzing algorithms.

The mathematical foundations of bin packing were first studied at Bell Laboratories by M.R. Garey and R.L. Graham in the early 1970s. They were soon joined by J.D. Ullman, then at Princeton, and these three published the first [103] of many papers to appear in the conferences of the computer science theory community over the next 40 years. The second such paper appeared within months; in that paper, D.S. Johnson [125] extended the results in [103] and studied general classes of approximation algorithms. In collaboration with A. Demers at Princeton, researchers Johnson, Garey, Graham, and Ullman published the first definitive analysis of bin packing approximation algorithms [129]. In parallel with the research producing this landmark paper, Johnson completed his 1973 Ph.D. thesis at MIT, which gave a more comprehensive treatment and more detailed versions of the abbreviated proofs in [129].

The pioneering work in [129] opened an extremely rich research area; it soon turned out that this simple model could be used for a wide variety of different practical problems, ranging from a large number of cutting stock applications to packing trucks with a given weight limit, assigning commercials to station breaks in television programming, or allocating memory in computers. The problem is well-known to be \(\mathcal{N}\mathcal{P}\)-hard (see, e.g., Garey and Johnson [100]); hence, it is unlikely that efficient (i.e., polynomial-time) optimization algorithms can be found for its solution. Researchers have thus turned to the study of approximation algorithms, which do not guarantee an optimal solution for every instance, but attempt to find a near-optimal solution within polynomial time. Together with closely related partitioning problems, bin packing has played an important role in applications of complexity theory and in both the combinatorial and average-case analysis of approximation algorithms (see, e.g., the volume edited by Hochbaum [115]).

Starting with the seminal papers mentioned above, most of the early research focused on combinatorial analysis of algorithms leading to bounds on worst-case behavior, also known as performance guarantees. In particular, letting \(A(L)\) be the number of bins used by an algorithm A and letting \({\it \text{OPT}}(L)\) be the minimum number of bins needed to pack the items of L, one tries to find a least upper bound on \(A(L)/{\it \text{OPT}}(L)\) over all lists L (for a more formal definition, see Sect. 2). Much of the research can be divided along the boundary between on-line and off-line algorithms. In the case of on-line algorithms, items are packed in the order they are encountered in a scan of L; the bin in which an item is packed is chosen without knowledge of items not yet encountered in L. These algorithms are the only ones that can be used in certain situations. For example, the items to be packed may arrive in a sequence according to some physical process and have to be assigned to a bin as soon as they arrive. Off-line algorithms have complete information about the entire list throughout the packing process.

Since the early 1980s, progressively more attention has been devoted to the probabilistic analysis of packing algorithms. A book by Coffman and Lueker [46] covers the methodology in some detail (see also the book by Hofri [119, Chap. 10]). Nevertheless, combinatorial analysis remains a central research area, and from time to time, numerous new results need to be collected into survey papers. The first comprehensive surveys of bin packing algorithms were by Garey and Johnson [101] in 1981 and by Coffman et al. [50] in 1984. The next such survey was written some 10 years later by Galambos and Woeginger [96] who gave an overview restricted to on-line algorithms. New surveys appeared at the end of the 1990s. Csirik and Woeginger [62] concentrated on on-line algorithms, while Coffman et al. [52] extended the coverage to include off-line algorithms, and Coffman et al. [53] considered both classes of algorithms in an earlier version of the present survey. Worst-case and average-case analysis are surveyed in [62] and [52]. More recently, classical bin packing and its variants were covered in Coffman and Csirik [43] and in Coffman et al. [54, 55].

This survey gives a broad summary of results in the one-dimensional bin packing arena, concentrating on combinatorial analysis, but in contrast to other surveys, greater space is devoted to variants of the classical problem. Important new variants continue to arise in many different settings and help account for the thriving interest in bin packing research. The survey is not self-contained: it does not present all algorithms in detail, nor does it give formal proofs, but it refers to certain proof techniques that have been used frequently to establish the most important results. Also, in the coverage of variants, the restriction to partitioning problems in one dimension is maintained. Packing in higher dimensions, for example, strip packing and two-dimensional bin packing, is itself a big subject, one deserving its own survey. The interested reader is referred to the recent survey by Epstein and van Stee [79].

Another important feature of this survey is that it adopts the novel classification scheme for bin packing papers recently introduced by Coffman and Csirik [44]. This scheme allows one to create a compact synthesis of the topic, the main results, and the corresponding algorithms.

Section 2 covers the main definitions as well as a full description of the classification scheme. For the classical bin packing problem, on-line (and semi-on-line) algorithms are discussed in Sect. 3 and off-line algorithms in Sect. 4. (Section 4.4 is a slight departure from this organization: it deals with anomalous behavior of both on-line and off-line algorithms.)

The second part of this survey concentrates on special cases and variants of the classical problem. Variations on bin size are considered in Sect. 5, dual versions in Sect. 6, variations on item packing in Sect. 7, and additional conditions in Sect. 8. Finally, Sect. 9 gives a series of examples to familiarize the reader with the classification scheme. The classification of the papers considered in the survey is also given, when appropriate, in the Bibliography.

2 Definitions and Classification

This section introduces more formally all of the relevant notation required to define, analyze, and classify bin packing problems. Starting with the classical problem, the notation is then extended to more general versions, and finally, the new classification scheme is described. Additional definitions needed by specific variants are introduced in the appropriate sections.

2.1 Basic Definitions for Classical Bin Packing

The classical bin packing problem is defined by an infinite supply of bins with capacity C and a list \(L = (a_{1},\ldots,a_{n})\) of items (or elements). A value \(s_{i} \equiv s(a_{i})\) gives the size of item \(a_{i}\) and satisfies \(0 < s_{i} \leq C,\ 1 \leq i \leq n.\) The problem is to pack the items into a minimum number of bins under the constraint that the sum of the sizes of the items in each bin is no greater than C.

In the classical problem, the bin capacity C is just a scale factor, so without loss of generality, one can adopt the normalization \(C = 1.\) Unless stated otherwise, this convention is in force throughout. One of the exceptions will be in the sections treating variants of the classical problem in which bins \(B_{j}\) have varying sizes. In those sections, bin sizes will be denoted by \(s(B_{j}).\)

In the algorithmic context, nonempty bins will be classified as either open or closed. Open bins are available for packing additional items. Closed bins are unavailable and can never be reopened. It is convenient to regard a completely full bin as open under any given algorithm until it is specifically closed, even though such bins can receive no further items. Denote the bins by \(B_{1},B_{2},\ldots.\) When no confusion arises, \(B_{j}\) will also denote the set of items packed in the \(j\)-th bin, and \(\vert B_{j}\vert \) will denote the number of such items.

The items are always organized into a list L (or a list \(L_{j}\) in some indexed set of lists). The notation for list concatenation is as usual; \(L = L_{1}L_{2}\ldots L_{k}\) means that the items of list \(L_{i}\) are followed by the items of list \(L_{i+1}\) for each \(i = 1,2,\ldots,k - 1.\) When the number of items in a list is needed as part of the notation, an index in parentheses is used: \(L_{(n)}\) denotes a list of n items.

If \(B_{j}\) is nonempty, its current content or level is defined as

\[c(B_{j}) =\sum _{a_{i}\in B_{j}}s_{i}.\]

A bin (necessarily empty) is opened when it receives its first item. Of course, it may be closed immediately thereafter, depending on the algorithm and the item size. It is assumed, without loss of generality, that all algorithms open bins in order of increasing index. Within any collection of nonempty bins, the earliest opened, the leftmost, and the lowest indexed all refer to the same bin.

In general, in the considered lists, the item sizes are taken from the interval \((0,\alpha ]\), with \(\alpha \in (0,1].\) It is usually assumed that \(\alpha = \frac{1} {r}\), for some integer \(r \geq 1\), and many results apply only to \(r =\alpha = 1.\)

There are two principal measures of the worst-case behavior of an algorithm. Recall that \(A(L)\) denotes the number of bins used by algorithm A to pack the elements of L; \({\it \text{OPT}}\) denotes an optimal algorithm, one that uses a minimum number of bins. Define \(V _{\alpha }\) as the set of all lists L in which every item size is at most \(\alpha.\) For every \(k \geq 1\), let

\[R_{A}(k,\alpha ) =\sup _{L\in V _{\alpha }}\left \{\frac{A(L)} {k} : {\it \text{OPT}}(L) = k\right \}.\]

Then the asymptotic worst-case ratio (or asymptotic performance ratio, APR) as a function of \(\alpha\) is given by

\[R_{A}^{\infty }(\alpha ) =\limsup _{k\rightarrow \infty }R_{A}(k,\alpha ).\]

Clearly, \(R_{A}^{\infty }(\alpha ) \geq 1\), and this number measures the quality of the packings produced by algorithm A compared to optimal packings in the worst case. In an equivalent definition, \(R_{A}^{\infty }(\alpha )\) is the smallest number such that there exists a constant \(K \geq 0\) for which

\[A(L) \leq R_{A}^{\infty }(\alpha ) \cdot {\it \text{OPT}}(L) + K\]

for every list \(L \in V _{\alpha }.\) Hereafter, if \(\alpha\) is left unspecified, the APR of algorithm A refers to \(R_{A}^{\infty }\equiv R_{A}^{\infty }(1).\)

The second way to measure the worst-case behavior of an algorithm A is the absolute worst-case ratio (AR)

\[R_{A}(\alpha ) =\sup _{L\in V _{\alpha }}\frac{A(L)} {{\it \text{OPT}}(L)}.\]

The comparison of algorithms by asymptotic bounds can be strikingly different from that by absolute bounds. Generally speaking, the number of items n must be sufficiently large (how large will depend on the algorithm) for the asymptotic bounds to be the better measure for purposes of comparison. Note that the ratios are bounded below by 1; the better algorithms have the smaller ratios. When algorithm A is an on-line algorithm, the asymptotic ratio is also called the competitive ratio.
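A single list L only gives a lower bound on the absolute ratio \(R_{A}\), and the asymptotic ratio cannot be computed from finitely many lists. Still, evaluating \(A(L)/{\it \text{OPT}}(L)\) on small instances is a useful sanity check. The following Python sketch makes three assumptions. It uses exact rational sizes (`fractions.Fraction`) to avoid floating-point error at the capacity boundary. It computes \({\it \text{OPT}}(L)\) by exhaustive backtracking, which takes exponential time, so it is only practical for tiny lists. It takes A to be Next-Fit from Sect. 3. The names `opt_bins` and `next_fit_bins` are illustrative, not from the literature.

```python
from fractions import Fraction
from math import ceil


def opt_bins(sizes):
    """Exact OPT(L) for unit-capacity bins by backtracking (tiny lists only)."""
    items = sorted(sizes, reverse=True)    # large items first prunes the search
    if not items:
        return 0

    def fits_in(k):
        loads = [Fraction(0)] * k

        def place(i):
            if i == len(items):
                return True
            seen = set()
            for j in range(k):
                if loads[j] in seen:        # bins with equal load are interchangeable
                    continue
                seen.add(loads[j])
                if loads[j] + items[i] <= 1:
                    loads[j] += items[i]
                    if place(i + 1):
                        return True
                    loads[j] -= items[i]
            return False

        return place(0)

    k = ceil(sum(items))                    # total size is a lower bound on OPT(L)
    while not fits_in(k):
        k += 1
    return k


def next_fit_bins(sizes):
    """Number of bins used by Next-Fit (see Sect. 3)."""
    bins, level = 0, Fraction(0)
    for s in sizes:
        if bins == 0 or level + s > 1:
            bins, level = bins + 1, Fraction(0)
        level += s
    return bins


L = [Fraction(1, 2), Fraction(1, 4)] * 4
ratio = Fraction(next_fit_bins(L), opt_bins(L))
print(next_fit_bins(L), opt_bins(L), ratio)   # 4 3 4/3
```

On the list \((1/2, 1/4)\) repeated four times, NF uses 4 bins. Three bins suffice: two bins each hold two halves, and one holds the four quarters. The ratio on this instance is therefore 4/3.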

There are some further proposals to measure the quality of a packing, like differential approximation measure (see Demange et al. [66], [67]), random-order ratio (see Kenyon [136]), relative worst-order ratio (see Boyar and Favrholdt [27]), and accommodation function (see Boyar et al. [28]).

It is finally worth mentioning a special sequence \(t_{i}\) of integers, which was investigated by Sylvester [170] in connection with a number-theoretic problem and later generalized by Golomb [107]. (Such sequences will occasionally be used in describing the performance of various algorithms in later sections.) For an integer \(r \geq 1\), define

\[t_{1}(r) = r + 1,\quad t_{2}(r) = r + 2,\quad t_{i+1}(r) = t_{i}(r)\left (t_{i}(r) - 1\right ) + 1,\quad i \geq 2.\]

(The Sylvester sequence is the case \(r = 1\), that is, \(2, 3, 7, 43,\ldots\).) Golomb conjectured that, for \(r = 1\), this sequence gives the closest approximation to \(1\) from below among the approximations by sums of reciprocals of k integers. The basis of the conjecture is

\[\sum _{i=1}^{k} \frac{1} {t_{i}(1)} + \frac{1} {t_{k+1}(1) - 1} = 1.\]

Furthermore, it is easily proved that the following identity holds for the Golomb sequences:

\[\frac{r} {t_{1}(r)} +\sum _{i=2}^{k} \frac{1} {t_{i}(r)} + \frac{1} {t_{k+1}(r) - 1} = 1.\]

The following value appears in several results:

\[h_{\infty }(r) = 1 +\sum _{i=2}^{\infty } \frac{1} {t_{i}(r) - 1}.\]

The first few values of \(h_{\infty }(r)\) are the following: \(h_{\infty }(1) \approx 1.69103\), \(h_{\infty }(2) \approx 1.42312\), \(h_{\infty }(3) \approx 1.30238.\)
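The Golomb sequences and \(h_{\infty }(r)\) can be checked numerically. The sketch below assumes the definition \(t_{1}(r) = r + 1\), \(t_{2}(r) = r + 2\), \(t_{i+1}(r) = t_{i}(r)(t_{i}(r) - 1) + 1\) and \(h_{\infty }(r) = 1 +\sum _{i\geq 2}1/(t_{i}(r) - 1)\). The terms grow doubly exponentially, so a partial sum of a few terms already agrees with the quoted values of \(h_{\infty }(r)\) to within \(10^{-5}\). Exact `Fraction` arithmetic also confirms the identity \(r/t_{1}(r) +\sum _{i=2}^{k}1/t_{i}(r) + 1/(t_{k+1}(r) - 1) = 1\). The helper names are hypothetical.

```python
from fractions import Fraction


def golomb(r, k):
    """First k terms t_1(r), ..., t_k(r); r = 1 gives Sylvester's sequence."""
    t = [r + 1, r + 2]
    while len(t) < k:
        t.append(t[-1] * (t[-1] - 1) + 1)
    return t[:k]


def h_inf(r, terms=8):
    """Partial sum 1 + sum_{i=2}^{terms} 1/(t_i(r) - 1); the omitted tail is negligible."""
    t = golomb(r, terms)
    return 1 + sum(Fraction(1, ti - 1) for ti in t[1:])


def golomb_identity(r, k):
    """r/t_1 + sum_{i=2}^{k} 1/t_i + 1/(t_{k+1} - 1), which should equal exactly 1."""
    t = golomb(r, k + 1)
    return Fraction(r, t[0]) + sum(Fraction(1, ti) for ti in t[1:k]) + Fraction(1, t[k] - 1)


print(golomb(1, 5))                                   # [2, 3, 7, 43, 1807]
print([float(h_inf(r)) for r in (1, 2, 3)])
print(all(golomb_identity(r, k) == 1 for r in range(1, 5) for k in range(1, 6)))
```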

2.2 General Definitions

This survey covers results on several variants of the one-dimensional bin packing problem. In such variants, the meaning of a number of notations can be different, so more general definitions are necessary. These will be introduced in the present section, together with the basic ideas behind the adopted classification scheme.

The notion of packing items into a sequence of initially empty bins helps visualize algorithms for constructing partitions. It is also helpful in classifying algorithms according to the various constraints under which they must operate in practice. The items are normally given in the form of a sequence or list \(L = (a_{1},\ldots,a_{n}),\) although the ordering in many cases will not have any significance. To economize on notation, a harmless abuse is adopted whereby \(s_{i}\) denotes the name as well as the size of the \(i\)-th item. The generic symbol for packing is \(\mathsf{P}\), the number of items in \(\mathsf{P}\) is denoted by \(\vert \mathsf{P}\vert,\) the sum of the sizes of the items in \(\mathsf{P}\) is denoted by \(c(\mathsf{P})\), and the number of bins in \(\mathsf{P}\) is denoted by \(\#\mathsf{P}.\) In the classical bin packing optimization problem, the objective is to find a packing \(\mathsf{P}\) of all the items in L such that \(\#\mathsf{P}\) is minimized over all partitions of L satisfying the sum constraints. Recall that C is only a scale factor in this problem; it is usually convenient to replace the \(s_{i}\) by \(s_{i}/C\) and take \(C = 1.\)

Let \(\mathsf{P}_{A}(L)\) denote the packing of L produced by algorithm \(A.\) In the literature, one finds the notation \(A(L)\) representing metrics such as \(\#\mathsf{P}\). However, \(A(L)\) may denote different metrics for different problems (the same algorithm A may apply to problems with different objective functions), so the alternative notation will be necessary on occasion. The minimum of \(\#\mathsf{P}\) over all partitions \(\mathsf{P}\) of L satisfying the sum constraints is denoted by \({\it \text{OPT}}(L) :=\min _{\mathsf{P}\in \pi (L)}\#\mathsf{P}\), where \(\pi (L)\) denotes the set of all such partitions. The notation \({\it \text{OPT}}(L)\) suffers from the same ambiguity as before, that is, the objective function to which it applies is determined by context. Moreover, in contrast to other algorithm notation, \({\it \text{OPT}}\) does not denote a unique algorithm.

There are two variants of classical bin packing that arise immediately from the definition. In one, a general bin capacity \(C\) and a number m of bins are part of the problem instance, and the problem is to find a maximum cardinality subset of \(\{s_{i}\}\) that can be packed into m bins of capacity \(C.\) In the other, all \(n\) items must be packed into m bins, and the problem is to find a smallest bin capacity C such that there exists a packing of all the items in m bins of capacity \(C.\) The first problem suggests yet another in which the total size of the items in a subset \(\mathcal{S}\) is the quantity of interest rather than the number \(\vert \mathcal{S}\vert \) of items. Problems fixing the number of bins fall within scheduling theory whose origins in fact predate those of bin packing theory. In scheduling theory, which is very large in its own right, makespan scheduling is more likely to be described as scheduling a list of tasks or jobs (items) on m identical processors (bins) so as to minimize the schedule length or makespan (bin capacity). This incursion into scheduling problems will be limited to the most elementary variants and applications of bin packing problems, such as those above.

Bin covering problems are also included in bin packing theory for classification purposes; these change the sum constraints to \(c(B_{j}) \geq 1,\) where again, bin capacity is normalized to 1. The classical covering problem asks for a partition \(\mathsf{C}\), called a cover, which maximizes the number \(\#\mathsf{C}\) of bins satisfying the new constraint. Both the packing and covering combinatorial optimization problems are \(\mathcal{N}\mathcal{P}\)-hard. The same holds for nearly all problem variants in the classification scheme, the major exceptions being problems with restricted item sizes or a restricted number of items per bin. Note that there are immediate variants of bin covering, just as there were for bin packing. For example, one can take the number \(m\) of bins to be fixed and ask for a minimum cardinality subset of \(\{a_{i}\}\) from which a cover of m bins can be obtained. Or one can consider the problem of finding a largest capacity C such that L can be made to cover m bins of capacity \(C.\)

Order-of-magnitude estimates of time complexities of fundamental algorithms and their extensions are usually easy to derive. The analysis of parallel algorithms for computing packings is an example where deriving time complexities is not always so easy. However, the research in this area, in which results take the form of complexity measures, has been very limited.

Several results quantify the trade-off between the running time of algorithms and the quality of the packings. They produce polynomial-time (or fully polynomial-time) approximation schemes [100], denoted by PTAS (or FPTAS). In simplified terms, a typical form of such results is illustrated by “Algorithm A produces packings with \(O(\epsilon )\) wasted space and has a running time that is polynomial in \(1/\epsilon.\)”

The most common approach to the analysis of approximation algorithms has been worst-case analysis, in which the worst possible performance of an algorithm is compared with the performance of an optimization algorithm. (The precise worst-case ratios were defined in Sect. 2.1.) The term performance guarantee puts a more positive slant on results of this type. So does the term competitive analysis, which usually refers to a worst-case analysis comparing an on-line approximation algorithm with an optimal off-line algorithm. Probability models also enjoy wide use and have grown in popularity, as they bring out typical, average-case behavior rather than the normally rare worst-case behavior. In probabilistic analysis, algorithms have random inputs; the items are usually assumed to be independent, identically distributed random variables. For a given algorithm \(A\), \(A(L_{(n)})\) is a random variable whose distribution becomes the goal of the analysis. Probabilistic results, and the analysis that produces them, differ greatly from combinatorial ones, so they are not covered in this survey, which focuses exclusively on combinatorial analysis. For a general treatment of average-case analysis of packing and related partitioning problems, see the text by Coffman and Lueker [46].

2.3 Classification Scheme

The scheme for classifying problems and solutions is aimed at giving the reader a good idea of the results in bin packing theory to be found in any given paper on the subject. Although in many, if not most cases, it is impractical to describe every result contained in a paper, an indication of the main results should be useful to the reader.

The classification uses four fields, presented in the form

problem \(\vert \) algorithm class \(\vert \) results \(\vert \) parameters

For a brief preview, observe that the problem is normally to minimize or maximize a metric under sum constraints. The metric may be, for example, the number of bins of fixed capacity or the capacity of a fixed number of bins. The algorithm class refers to paradigms such as on-line, off-line, or bounded space. The results field specifies performance in terms of absolute or asymptotic worst-case ratios, problem complexity, etc. Lastly, the parameters field describes restrictions on problem parameters, such as a limit on item sizes or on the number of items per bin, or a restriction of all data to finite or countable sets. In many cases, there are no additional parameters to specify, in which case the parameters field will be omitted. The three remaining fields will normally be nonempty.

In the following, a detailed description of the classification scheme is introduced. Section 9 gives a number of annotated examples to familiarize the reader with the proposed classification. The special terms or abbreviations adopted for entries will be given in bold face. In the large majority of cases, the entry will have a mnemonic quality that makes the precise meaning of an entry clear in spite of its compact form.

2.3.1 Problem

The combinatorial optimization problems of interest can easily be expressed in the notation given so far, but for readability, abbreviations of the names by which the problems are commonly known will be used. Most, but not all, of the problems below were discussed earlier. Moreover, some of them are not treated in this survey and are listed for the sake of completeness.

1. pack refers to the problem of minimizing \(\#\mathsf{P}(L).\) The default sum constraints are \(c(B_{j}) \leq 1\). They become \(c(B_{j}) \leq s(B_{j})\) if variable bin capacities \(s(B_{j})\) are considered, and more simply \(c(B_{j}) \leq C\) if there is only a common bin capacity \(C.\)

2. maxpack problems invert the problem above, that is, \(\#\mathsf{P}\) is to be maximized. Clearly, such problems become trivial unless some constraint is placed on opening new bins. The tacit assumption will be that packings under approximation algorithms must obey a conservative, any-fit constraint: a new bin cannot be opened for an item \(s_{i}\) unless \(s_{i}\) fits into no currently open bin. Optimal packings by definition must be such that, for some permutation of the bins, no item in \(B_{i}\) fits into \(B_{j}\) for any \(j < i.\)

3. mincap refers to the problem of minimizing the common bin capacity needed to pack L into a given number m of bins. Bin-stretching analysis applies if the problem instances are restricted to those for which an optimal algorithm can pack \(L\) into m unit-capacity bins. Under this assumption, the analysis of an algorithm \(A\) asks how large the bin capacity must be (how much it must be stretched) for A to pack L into the same number of bins.

4. maxcard(subset) has the number m of bins and their common capacity C as part of the problem instance. The problem is to find a largest cardinality subset of L that can be packed into m bins of capacity \(C.\)

5. maxsize(subset) has the number m of bins and their common capacity C as part of the problem instance. The problem is to find a subset of L with maximum total item size that can be packed into m bins of capacity \(C.\)

6. cover refers to the problem of finding a partition of L into bins that maximizes \(\#\mathsf{P}(L)\) subject to the constraints \(c(B_{j}) \geq 1.\) The partition is called a cover.

7. capcover refers to the problem of finding, for a given number m of bins, the maximum capacity C such that a covering of m bins of capacity C can be obtained from \(L.\)

8. cardcover(subset) is the related problem of minimizing the cardinality of the subset of L needed to cover a given number \(m\) of bins with a given capacity \(C.\)

2.3.2 Algorithm Class

1. on-line algorithms sequentially assign items to bins, in the order encountered in \(L\), without knowledge of items not yet packed. Thus, the bin to which \(a_{i}\) is assigned is a function only of the sizes \(s_{1},\ldots,s_{i}.\)

2. off-line algorithms have no constraints beyond the intrinsic sum constraints; an off-line algorithm simply maps the entire list L into a packing \(\mathsf{P}(L).\) All items are known in advance, so the ordering of L plays no role.

3. bounded-space algorithms decide where an item is to be packed based only on the current contents of at most a finite number \(k\) of bins, where k is a parameter of the algorithm. A more precise definition and further discussion of these algorithms appear later.

4. linear-time algorithms have \(O(n)\) running time. More precisely, all such algorithms take constant time to pack each item.

The on-line, bounded-space, and linear-time characterizations above are, strictly speaking, orthogonal. However, the literature suggests a convention that allows a single term to be used in classifying algorithms most of the time: bounded space implies linear time, and linear time implies on-line. Exceptions will be noted explicitly; it will be seen below (under repack) how off-line algorithms can be linear time.

5. open-end refers to certain cover approximation algorithms. An open bin \(B_{j}\), that is, one for which \(c(B_{j})< s(B_{j})\) and further packing is allowed, must be closed as soon as \(c(B_{j}) \geq s(B_{j}).\) (The item causing the “overflow” is left in the bin.)

6. conservative algorithms are required to pack the current item into an open bin with sufficient space whenever such a bin exists; in particular, they cannot choose to open a new bin. Scheduling algorithms satisfying a similar constraint are sometimes called work conserving.

7. repack refers to packing problems that allow the repacking (possibly limited in some way) of items, that is, moving an item, say \(s_{i}\), from one bin to another.

8. dynamic packing introduces the time dimension; an instance L of this problem consists of a sequence of triples \((s_{i},b_{i},d_{i})\), with \(b_{i}\) and \(d_{i}\) denoting arrival and departure times, respectively. Under a packing algorithm \(A\), \(A(L,t)\) denotes the number of bins occupied at time \(t\), that is, the number of bins occupied by those items \(a_{i}\) for which \(b_{i} \leq t < d_{i}.\)
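The open-end rule can be illustrated with a next-fit-style covering sketch. Only one bin is open at a time, every item goes into it, and the bin is closed as soon as its content reaches the capacity, keeping the overflowing item. This particular covering algorithm and the name `open_end_next_fit_cover` are assumptions made for illustration, not an algorithm defined in this section. Sizes are exact fractions, and the capacity defaults to 1.

```python
from fractions import Fraction


def open_end_next_fit_cover(sizes, capacity=Fraction(1)):
    """Number of covered bins under a next-fit, open-end covering rule (illustrative)."""
    covered, level = 0, Fraction(0)
    for s in sizes:
        level += s                 # every item enters the single open bin
        if level >= capacity:      # covered: close now; the overflowing item stays
            covered += 1
            level = Fraction(0)    # the next item starts a fresh open bin
    return covered                 # a final, partially filled bin is not a cover


L = [Fraction(1, 2), Fraction(1, 3), Fraction(1, 3), Fraction(3, 4), Fraction(1, 2), Fraction(1, 2)]
print(open_end_next_fit_cover(L))   # 2
```

The first bin closes with content 7/6 and the second with 5/4. The last item of size 1/2 is left in an uncovered bin, which does not count.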

2.3.3 Results

Almost all results fall into the broad classes mentioned in Sect. 2.1.

1. \(\mathbf{R}_{\mathbf{A}}^{\infty }\) is the asymptotic worst-case ratio, with algorithm A specified when appropriate. When only a bound is proved, the word bound is appended.

2. \(\mathbf{R}_{\mathbf{A}}\) is the absolute worst-case ratio. When only a bound is proved, the word bound is appended.

3. Where possible, complexity of the problem will be given in the standard notation of problem complexity. Approximation schemes are classified as complexity results and have entries like PTAS and FPTAS as noted earlier.

4. Complexity of the algorithm may also be a result; it refers to running-time complexity and will be signaled by the entry running time.

A paper classified as a worst-case analysis may also have complexity results (but not conversely, unless both types of results figure prominently in the paper, in which case both classifications will be given).

2.3.4 Parameters

These typically correspond to generalizations that place limitations on the problem instance, or to further properties of the algorithm classification. In some cases, problems may be simplified by certain limitations, such as an upper limit of two on the number of items per bin.

1. \(\{\mathbf{B}_{\mathbf{i}}\}\) means that there can be more than one bin size and an unlimited supply of each size.

2. stretch refers to certain asymptotic bounds which compare the number of bins of capacity C needed to pack L by algorithm A to the number of unit-capacity bins needed by an optimal packing.

3. mutex stands for mutual exclusion and introduces constraints, where needed, in the form of a sequence of pairs \((s_{i},s_{j}),\ i\neq j,\) meaning that \(s_{i}\) and \(s_{j}\) cannot be put in the same bin.

4. \(\mathbf{card(B) \leq k}\) gives a bound on the number of items that can be packed in a bin.

5. \(\mathbf{s}_{\mathbf{i}} \leq \alpha\) or \(\mathbf{s}_{\mathbf{i}} \geq \alpha\) denotes bounds on item sizes, the former being far more common in the literature. In some cases, \(\alpha\) is specialized to a discrete parameter \(1/k\), k an integer. The problems with item-size restrictions are called parametric cases in the literature.

6. restricted \(\mathbf{s}_{\mathbf{i}}\) refers to simplified problems where the number of different item sizes is finite.

7. discrete calls for discrete sets of item sizes, in particular, item sizes that, for a given integer \(r\), are all multiples of \(1/r\) with \(C = 1.\) Equivalently, the bin size could be taken as r and item sizes restricted to the set \(\{1,\ldots,j\}\) for some \(j \in \{ 1,\ldots,r\}.\) While this may not be a significant practical constraint, it will affect the difficulty of the analysis and may create significant changes in the results.

8. controllable means that one has the possibility not to pack an item as is, but to first take some decision about it, such as rejecting it or splitting it into parts.

3 On-Line Algorithms

Recall that a bin packing algorithm is called on-line if it packs each element as soon as it is inspected, without any knowledge of the elements not yet encountered (either the number of them or their sizes). This review starts with results for some classical algorithms and then generalizes to the Any-Fit and the Almost Any-Fit classes of on-line algorithms. The subclass of bounded-space on-line algorithms will also be considered: an algorithm is bounded space if the number of open bins at any time in the packing process is bounded by a constant. (The practical significance of this condition is clear; e.g., one may have to load trucks at a depot where only a limited number of trucks can be at the loading dock). This section will be concluded by a detailed discussion of lower bounds on the APRs of certain classes of on-line algorithms, but before doing so, relevant variations of old algorithms and the current best-in-class algorithms will be presented. Anomalous behavior occurring in on-line algorithms is discussed in Sect. 4.4.

3.1 Classical Algorithms

In describing an on-line algorithm, it will occasionally be convenient, just before a decision point, to refer to the next item to be packed as the current item; right after A packs \(a_{i},\ i < n\), \(a_{i+1}\) becomes the current item. A simple approach is to pack the bins one at a time according to

Next-Fit (NF): After packing the first item, NF packs each successive item in the bin containing the last item to be packed, if it fits in that bin; if it does not fit, NF closes that bin and packs the current item in an empty bin.

The time complexity of NF is clearly \(O(n).\) Note that only one bin is ever open under NF, so it is bounded space. This advantage is offset, however, by a relatively poor APR.
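A minimal Python sketch of NF, assuming \(C = 1\) and item sizes given as exact rationals (`fractions.Fraction`, to avoid floating-point ties); the function name `next_fit` is our own. A single running `level` is the only state, which is why the algorithm is linear time and 1-bounded space.

```python
from fractions import Fraction
from typing import List, Sequence


def next_fit(sizes: Sequence[Fraction], capacity: Fraction = Fraction(1)) -> List[List[Fraction]]:
    """Next-Fit: only the bin holding the last packed item is open."""
    bins: List[List[Fraction]] = []
    level = Fraction(0)  # content of the single open bin
    for s in sizes:
        if not bins or level + s > capacity:
            bins.append([s])  # close the open bin (if any) and start an empty one
            level = s
        else:
            bins[-1].append(s)
            level += s
    return bins


items = [Fraction(1, 2), Fraction(3, 5), Fraction(2, 5), Fraction(1, 2)]
print(len(next_fit(items)))                  # 3 bins: {1/2}, {3/5, 2/5}, {1/2}
print(len(next_fit(list(reversed(items)))))  # 3 bins: {1/2, 2/5}, {3/5}, {1/2}
```

On this small list the list and its reverse need the same number of bins, in line with Fisher's result on NF.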

Theorem 1 (Johnson et al. [129])

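One has

\[
R_{\mathrm{NF}}^{\infty }(\alpha ) = \begin{cases} 2 & \text{if } \frac{1}{2} \leq \alpha \leq 1,\\ \frac{1}{1-\alpha } & \text{if } 0 < \alpha < \frac{1}{2}.\end{cases}
\]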

Fisher [86] discovered an interesting property that NF does not share with other classical approximation algorithms. He proved that NF packs any list and its reverse into the same number of bins. The conspicuous disadvantage of NF is that it closes bins that could be used for packing later items. An immediate improvement would seem to be never to close bins. But then the next question is: if an item can be put into more than one open bin, which bin should be selected? One possible rule drawn from scheduling theory (where it is known as the greedy or largest processing time rule) is the following

Worst-Fit (WF): If there is no open bin in which the current item fits, then WF packs the item in an empty bin. Otherwise, WF packs the current item into an open bin of smallest content in which it fits; if there is more than one such bin, WF chooses the lowest indexed one.

Although one might expect WF to behave better than NF, it does not.

Theorem 2 (Johnson [127])

For all \(\alpha \in (0,1]\),

\[
R_{\mathrm{WF}}^{\infty }(\alpha ) = R_{\mathrm{NF}}^{\infty }(\alpha ).
\]

To achieve smaller APRs, there are many better rules for choosing from among the open bins. One that quickly comes to mind is

First-Fit (FF): FF packs the current item in the lowest indexed nonempty bin in which it fits, assuming there is such a bin. If no such bin exists, FF packs the current item in an empty bin. FF is neither linear time nor bounded space.

A natural complement to WF packs each item into a bin that minimizes the space leftover.

Best-Fit (BF): If there is no open bin in which the current item fits, then BF packs the item in an empty bin. Otherwise, BF packs the current item into an open bin of largest content in which it fits; if there is more than one such bin, BF chooses the lowest indexed one.
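The three Any-Fit rules differ only in which feasible open bin they choose. The sketch below (hypothetical function `any_fit`, \(C = 1\), exact rational sizes) scans all open bins, so it is the simple quadratic-time version rather than the \(O(n\log n)\) implementations mentioned next.

```python
from fractions import Fraction
from typing import List, Sequence


def any_fit(sizes: Sequence[Fraction], rule: str, capacity: Fraction = Fraction(1)) -> List[Fraction]:
    """Pack on-line with rule 'FF', 'BF', or 'WF'; return the final bin contents (levels)."""
    levels: List[Fraction] = []
    for s in sizes:
        # indices of open bins in which the current item fits
        fits = [i for i, lev in enumerate(levels) if lev + s <= capacity]
        if not fits:
            levels.append(s)  # fits nowhere: use an empty bin
            continue
        if rule == "FF":
            j = fits[0]  # lowest indexed bin that fits
        elif rule == "BF":
            j = max(fits, key=lambda i: (levels[i], -i))  # largest content, ties -> lowest index
        elif rule == "WF":
            j = min(fits, key=lambda i: (levels[i], i))  # smallest content, ties -> lowest index
        else:
            raise ValueError(f"unknown rule {rule!r}")
        levels[j] += s
    return levels


items = [Fraction(1, 2), Fraction(3, 4), Fraction(1, 4), Fraction(1, 2)]
print({rule: len(any_fit(items, rule)) for rule in ("FF", "BF", "WF")})
# {'FF': 3, 'BF': 2, 'WF': 3}
```

Here the item \(\frac{1}{4}\) is the decision point: BF tops up the fuller bin to exactly 1, leaving room for the final \(\frac{1}{2}\), whereas FF and WF both put it in the first bin.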

By adopting appropriate data structures for representing packings, it is easy to verify that the time complexity of these algorithms is \(O(n\log n).\) The analysis of the worst-case behavior of the packings they produce is far more complicated. The basic idea of the upper bound proofs is the weighting function technique, which has played a fundamental role in bin packing theory. So, before proceeding with other algorithms, it is convenient to describe this technique, which was introduced in [103, 129] and subsequently applied in many other papers (see, e.g., [13, 92, 127, 143]).
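The survey does not name the data structure. One common choice, assumed here, is a max-segment tree over \(n\) bin slots that stores residual capacities: descending toward the leftmost leaf whose residual is at least the item size finds the FF bin in \(O(\log n)\) time, for \(O(n\log n)\) overall. The sketch covers FF only (BF would instead need a search tree keyed by bin content). The function name is hypothetical.

```python
from fractions import Fraction
from typing import List, Sequence


def first_fit_count(sizes: Sequence[Fraction], capacity: Fraction = Fraction(1)) -> int:
    """First-Fit in O(n log n): a max-segment tree over n bin slots stores residual capacities."""
    n = len(sizes)
    if n == 0:
        return 0
    leaves = 1
    while leaves < n:
        leaves *= 2
    # tree[v] = largest residual capacity among the bins below node v; all bins start empty.
    tree: List[Fraction] = [capacity] * (2 * leaves)
    used = 0
    for s in sizes:  # assumes 0 < s <= capacity, so an empty slot always exists
        v = 1
        while v < leaves:  # descend, preferring the left (lower indexed) child
            v = 2 * v if tree[2 * v] >= s else 2 * v + 1
        used = max(used, v - leaves + 1)  # FF opens bins left to right
        tree[v] -= s
        v //= 2
        while v >= 1:  # restore the max invariant up to the root
            tree[v] = max(tree[2 * v], tree[2 * v + 1])
            v //= 2
    return used


items = [Fraction(1, 2), Fraction(3, 4), Fraction(1, 4), Fraction(1, 2)]
print(first_fit_count(items))  # 3
```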

3.2 Weighting Functions

To bound the asymptotic worst-case behavior of an algorithm \(A\), one can try to find a function \(W_{A} : (0,1]\rightarrow \mathbb{R}\) with the following properties: (i) there exists a constant \(K \geq 0\) such that for any list \(L\)

\[
\sum_{a \in L} W_{A}(a) \geq A(L) - K, \tag{1}
\]

and (ii) there exists a constant \({K}^{{\ast}}\) such that for any set \(B\) of items summing to no more than 1

\[
\sum_{a \in B} W_{A}(a) \leq {K}^{{\ast}}. \tag{2}
\]

The value \(W_{A}(a)\) is the weight of item \(a\) under \(A\)’s weighting function \(W_{A}.\) Note that (1) requires that, for large packings (large \(A(L)\)), the average total weight of the items in a bin be at least close to 1, for all lists \(L.\) On the other hand, (2) says that the total weight in any bin of any packing is at most \({K}^{{\ast}}\), so for an optimal packing

\[
\sum_{a \in L} W_{A}(a) \leq {K}^{{\ast}}\,\mathit{OPT}(L). \tag{3}
\]

Together, (1) and (3) obviously imply the bound \(A(L) \leq {K}^{{\ast}}{\it \text{OPT}}(L) + K\), and hence \(R_{A}^{\infty }\leq {K}^{{\ast}}.\) Note that the technique above is only representative; it is easy to find weaker conditions on the weighting function which will produce the same upper bound for the APR.

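The proof of the NF upper bound is not appreciably simplified by a weighting function argument, but it does offer a simple example of such arguments. Consider the case \(\alpha = 1\) and define \(W_{\mathrm{NF}}(a) = 2s(a)\) for all \(a\), where \(s(a)\) denotes the size of item \(a.\) An observation about NF is needed when \(\mathrm{NF}(L) > 1\): since the first item of \(B_{j+1}\) did not fit in \(B_{j}\ (1 \leq j < \mathrm{NF}(L))\), the sum of the item sizes in \(B_{j} \cup B_{j+1}\) exceeds 1, and hence the sum of the weights of the items in \(B_{j} \cup B_{j+1}\) exceeds 2. Grouping the bins into the disjoint pairs \((B_{1},B_{2}), (B_{3},B_{4}),\ldots\) then gives

\[
\sum_{a \in L} W_{\mathrm{NF}}(a) \geq \sum_{i=1}^{\lfloor \mathrm{NF}(L)/2 \rfloor } \ \sum_{a \in B_{2i-1} \cup B_{2i}} W_{\mathrm{NF}}(a) \geq 2\left\lfloor \frac{\mathrm{NF}(L)}{2} \right\rfloor \geq \mathrm{NF}(L) - 1,
\]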

so (1) holds with \(K = 1.\) The inequality (2) with \({K}^{{\ast}} = 2\) is immediate from the definition of \(W_{\mathrm{NF}}\), so \(R_{\mathrm{NF}}^{\infty }\leq 2\), as desired.
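As a sanity check (not a proof), the sketch below samples random lists with sizes that are multiples of \(\frac{1}{100}\) (an arbitrary choice of ours) and confirms inequality (1) for \(W_{\mathrm{NF}}(a) = 2s(a)\) with \(K = 1\). The helper names are hypothetical.

```python
import random
from fractions import Fraction
from typing import Sequence


def nf_bin_count(sizes: Sequence[Fraction], capacity: Fraction = Fraction(1)) -> int:
    """Number of bins Next-Fit uses on the list `sizes`."""
    count, level = 0, Fraction(0)
    for s in sizes:
        if count == 0 or level + s > capacity:
            count, level = count + 1, s  # open a new bin
        else:
            level += s
    return count


def nf_weight(sizes: Sequence[Fraction]) -> Fraction:
    """Total weight under W_NF(a) = 2 s(a)."""
    return sum((2 * s for s in sizes), Fraction(0))


rng = random.Random(0)  # fixed seed so the check is reproducible
for _ in range(1000):
    L = [Fraction(rng.randint(1, 100), 100) for _ in range(rng.randint(1, 30))]
    assert nf_weight(L) >= nf_bin_count(L) - 1  # inequality (1) with K = 1
print("inequality (1) held on all sampled lists")
```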

There is no systematic way to find appropriate weighting functions, and the approach can be difficult to work out. For example, consider the proof of the following result.

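Theorem 3 (Johnson et al. [129])

\[
R_{\mathrm{FF}}^{\infty }(\alpha ) = R_{\mathrm{BF}}^{\infty }(\alpha ) = \begin{cases} \frac{17}{10} & \text{if } \frac{1}{2} < \alpha \leq 1,\\ 1 + {\lfloor \frac{1}{\alpha } \rfloor }^{-1} & \text{if } 0 < \alpha \leq \frac{1}{2}.\end{cases}
\]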

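The proof of the \(\frac{17} {10}\) upper bound (case \(\alpha = 1\)) consists of verifying that the following weighting function suffices:

\[
W_{\mathrm{FF}}(a) = \begin{cases} \frac{6}{5}s(a) & \text{if } 0 < s(a) \leq \frac{1}{6},\\ \frac{9}{5}s(a) - \frac{1}{10} & \text{if } \frac{1}{6} < s(a) \leq \frac{1}{3},\\ \frac{6}{5}s(a) + \frac{1}{10} & \text{if } \frac{1}{3} < s(a) \leq \frac{1}{2},\\ \frac{6}{5}s(a) + \frac{4}{10} & \text{if } \frac{1}{2} < s(a) \leq 1,\end{cases}
\]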

and it requires a substantial effort. (The argument encompasses several pages of case analysis.) Yet, despite a few hints that emerge in this effort, a clear understanding of how this function was obtained in the first place requires still more effort.
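The piecewise-linear function used in the \(\frac{17}{10}\) proof for FF (\(\alpha = 1\)), in the form in which it is commonly stated, can be evaluated exactly. This sketch assumes that form and writes the weight as a function of the item size (hypothetical name `w_ff`). It shows a full bin, with sizes \(\frac{1}{2}+\epsilon\), \(\frac{1}{3}+\epsilon\), and \(\frac{1}{6}-2\epsilon\), whose total weight is exactly \(\frac{17}{10}\), so \({K}^{{\ast}}\) in (2) cannot be taken smaller for this function.

```python
from fractions import Fraction as F


def w_ff(a: F) -> F:
    """Weighting function for FF with alpha = 1, as a function of the item size a."""
    if a <= F(1, 6):
        return F(6, 5) * a
    if a <= F(1, 3):
        return F(9, 5) * a - F(1, 10)
    if a <= F(1, 2):
        return F(6, 5) * a + F(1, 10)
    return F(6, 5) * a + F(4, 10)


eps = F(1, 1000)
bin_items = [F(1, 2) + eps, F(1, 3) + eps, F(1, 6) - 2 * eps]
print(sum(bin_items), sum(w_ff(a) for a in bin_items))  # 1 17/10
```

The \(\epsilon\) terms cancel: the two larger items each gain \(\frac{6}{5}\epsilon\) and the smallest loses \(\frac{12}{5}\epsilon\).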

Theorem 3 for \(\alpha \leq \frac{1} {2}\) can be proved without weighting functions (see [129]), but the reader may find it instructive to prove the \(1 + {\lfloor \frac{1} {\alpha } \rfloor }^{-1}\) upper bound with the weighting function

Sequences of specific examples establish lower bounds for \(R_{A}^{\infty }.\) In particular, one seeks a sequence of lists \(L_{(n_{1})},L_{(n_{2})},\ldots\) satisfying \(L_{(n_{k})} \in V _{\alpha }\) \((k = 1,2,\ldots )\), \(\lim _{k\rightarrow \infty }\mathit{OPT}(L_{(n_{k})}) = \infty \), and, for some constant \(K_{{\ast}}\),

\[
\lim _{k\rightarrow \infty } \frac{A(L_{(n_{k})})}{\mathit{OPT}(L_{(n_{k})})} = K_{{\ast}}.
\]

Then \(R_{A}^{\infty }\geq K_{{\ast}}\), and if \(K_{{\ast}}\) is equal to \({K}^{{\ast}}\) of the upper bound analysis, one has the APR for the algorithm considered. Finding worst-case examples can be anywhere from quite easy to quite hard. For example, the reader would have little trouble with NF but would probably find FF to be quite challenging.

3.3 Any-Fit and Almost Any-Fit Algorithms

The algorithms described so far belong to a much larger class of on-line heuristics satisfying similar worst-case properties. It is clear that FF, WF, and BF satisfy the following condition:

Any-Fit constraint: If \(B_{1},\ldots,B_{j}\) are the current nonempty bins, then the current item will not be packed into \(B_{j+1}\) unless it does not fit in any of the bins \(B_{1},\ldots,B_{j}.\)

The class of on-line heuristics satisfying the Any-Fit constraint will be denoted by \(\mathcal{A}\mathcal{F}.\) The following result shows that, in the APR sense, FF and WF are, respectively, the best and the worst algorithms in \(\mathcal{A}\mathcal{F}\).

Theorem 4 (Johnson [127])

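For every algorithm \(A \in \mathcal{A}\mathcal{F}\) and for every \(\alpha \in (0,1]\),

\[
R_{\mathrm{FF}}^{\infty }(\alpha ) \leq R_{A}^{\infty }(\alpha ) \leq R_{\mathrm{WF}}^{\infty }(\alpha ).
\]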

By a slight tightening of the Any-Fit constraint, one can eliminate the high-APR algorithms like WF and define a class of heuristics all having the same APR.

Almost Any-Fit constraint: If \(B_{1},\ldots,B_{j}\) are the current nonempty bins, and \(B_{k}\ (k \leq j)\) is the unique bin with the smallest content, then the current item will not be packed into \(B_{k}\) unless it does not fit in any of the bins to the left of \(B_{k}.\)

Clearly, WF does not satisfy this condition, but it is easy to verify that both FF and BF do. The class of on-line algorithms satisfying both constraints above will be denoted by \(\mathcal{A}\mathcal{A}\mathcal{F}.\)

Theorem 5 (Johnson [127])

\(R_{A}^{\infty }(\alpha ) = R_{\mathrm{FF}}^{\infty }(\alpha )\) for every A in \(\mathcal{A}\mathcal{A}\mathcal{F}.\)

Almost Worst-Fit (AWF) is a modification of WF whereby the current item is always placed in a bin having the second lowest content, if such a bin exists and the current item fits in it; the current item is packed in a bin with smallest content only if it fits nowhere else. Interestingly, though it seems to differ little from WF, AWF has a substantially better APR: since it is in \(\mathcal{A}\mathcal{A}\mathcal{F}\), Theorem 5 gives \(R_{\mathrm{AWF}}^{\infty }(\alpha ) = R_{\mathrm{FF}}^{\infty }(\alpha )\) for every \(\alpha \in (0,1]\), and in particular \(R_{\mathrm{AWF}}^{\infty } = \frac{17} {10}.\)
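A minimal sketch of AWF as described above (hypothetical name `almost_worst_fit`, \(C = 1\)). Ties in content are broken by bin index, which is our assumption. If the item does not fit the bin of second lowest content, it cannot fit any fuller bin, so the only remaining candidate is the bin of lowest content.

```python
from fractions import Fraction
from typing import List, Sequence


def almost_worst_fit(sizes: Sequence[Fraction], capacity: Fraction = Fraction(1)) -> List[Fraction]:
    """AWF: try the second-lowest-content bin; use the lowest only if nothing else fits."""
    levels: List[Fraction] = []
    for s in sizes:
        # open bins ordered by content, ties broken by index (an assumption)
        order = sorted(range(len(levels)), key=lambda i: (levels[i], i))
        if len(order) >= 2 and levels[order[1]] + s <= capacity:
            levels[order[1]] += s  # second lowest content
        elif order and levels[order[0]] + s <= capacity:
            levels[order[0]] += s  # lowest content: the item fits nowhere else
        else:
            levels.append(s)  # fits in no open bin: use an empty bin
    return levels


items = [Fraction(1, 2), Fraction(3, 4), Fraction(1, 4), Fraction(1, 2)]
print(len(almost_worst_fit(items)))  # 2
```

On the same four items, WF uses three bins because it sends \(\frac{1}{4}\) to the emptiest bin, whereas AWF tops up the fuller one.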

3.4 Bounded-Space Algorithms

An on-line bin packing algorithm uses \(k\)-bounded space if, for each item, the choice of where to pack it is restricted to a set of at most k open bins. One obtains bounded-space counterparts of the algorithms of the previous section by specifying a suitable policy for closing bins.

As previously observed, NF uses only 1-bounded space; the only algorithm to use less is the trivial algorithm that puts each item in a separate bin. To improve on the APR of NF, yet stay with bounded-space algorithms, Johnson [126] proposed an algorithm that packs items according to the First-Fit rule, but considers as candidates only the \(k\) most recently opened bins; when a new bin has to be opened and there are already k open bins, then the lowest indexed open bin is closed. It can be expected that the APR of the resulting algorithm, which is known as Next-k-Fit (NF\(_{k}\)), tends to \(\frac{17} {10}\) as \(k\) increases. Finding the exact bound was not an easy task, although Johnson did give a narrow range for the APR. Later, Csirik and Imreh [58] constructed the worst-case sequences, and then Mao was able to prove the exact bound:

Theorem 6 (Mao [153])

For any \(k \geq 2,\quad R_{\mathrm{NF}_{k}}^{\infty } = \frac{17} {10} + \frac{3} {10(k-1)}.\)

In general, a bounded-space algorithm is defined by specifying the packing and closing rules. An interesting class of such rules is based on FF and BF as follows:

- Packing rules: The elements are packed following either the First-Fit rule or the Best-Fit rule.
- Closing rules: The next bin to close is either the lowest indexed one or one of largest content.

The algorithm that uses packing rule X, closing rule Y, and \(k\)-bounded space is denoted by \(\mathrm{A}XY _{k}\), where \(X =\) F or B for FF or BF and \(Y =\) F or B for the lowest indexed (First) open bin or the largest-content (Best) open bin. With this terminology, NF\(_{k}\) can also be classified as AFF\(_{k}.\) Note that, independently of the chosen rules, if \(k = 1\), then one always gets NF.
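The \(\mathrm{A}XY_{k}\) family can be sketched with one function that is parameterized by the packing rule, the closing rule, and the space bound \(k\). The parameter names `pack` and `close` are hypothetical, and breaking ties toward the lowest index in both "B" rules is our assumption. With `k=1`, every choice reduces to NF.

```python
from fractions import Fraction
from typing import List, Sequence


def bounded_space(sizes: Sequence[Fraction], k: int, pack: str = "F", close: str = "F",
                  capacity: Fraction = Fraction(1)) -> int:
    """Sketch of A{pack}{close}_k; returns the number of bins used.

    pack  = "F": First-Fit among the open bins;  "B": Best-Fit among the open bins.
    close = "F": close the lowest indexed open bin; "B": close an open bin of largest content.
    """
    open_bins: List[list] = []  # entries [bin index, content], kept in opening order
    used = 0
    for s in sizes:
        fits = [b for b in open_bins if b[1] + s <= capacity]
        if fits:
            target = fits[0] if pack == "F" else max(fits, key=lambda b: (b[1], -b[0]))
            target[1] += s
            continue
        if len(open_bins) == k:  # space bound reached: close a bin before opening one
            victim = open_bins[0] if close == "F" else max(open_bins, key=lambda b: (b[1], -b[0]))
            open_bins.remove(victim)
        open_bins.append([used, s])
        used += 1
    return used


items = [Fraction(1, 2), Fraction(3, 4), Fraction(1, 4), Fraction(1, 2)]
print(bounded_space(items, k=1),                       # NF: 3
      bounded_space(items, k=2, pack="F", close="F"),  # AFF_2 = NF_2: 3
      bounded_space(items, k=2, pack="B", close="B"))  # ABB_2: 2
```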

Algorithm ABF\(_{k}\) was first analyzed by Mao, who called it Best-k-Fit. He proved that for any fixed \(k\), this algorithm is slightly better than NF\(_{k}.\)

Theorem 7 (Mao [152])

For any \(k \geq 2,\quad R_{\mathrm{ABF}_{k}}^{\infty } = \frac{17} {10} + \frac{3} {10k}.\)

The tight asymptotic bound for AFB\(_{k}\) was found by Zhang [184] (see also the paper version [187]).

Theorem 8 (Zhang [187])

For any \(k \geq 2,\quad R_{\mathrm{AFB}_{k}}^{\infty } = R_{\mathrm{NF}_{k}}^{\infty }.\)

Finally, consider the algorithm ABB\(_{k}\) whose asymptotic behavior is, rather surprisingly, independent of \(k \geq 2\) and equal to that of FF and BF.

Theorem 9 (Csirik and Johnson [60])

If \(k \geq 2\) then \(R_{\mathrm{ABB}_{k}}^{\infty } = \frac{17} {10}.\)

Since all of the above algorithms fulfill the Any-Fit constraint with respect to the open bins, the overall bound \(R_{A}^{\infty }\geq \frac{17} {10}\) is to be expected from Theorem 4. A better on-line algorithm can only be obtained without the Any-Fit constraint. In the remaining part of this section, it is shown how the fruitful idea of reservation techniques (introduced by Yao [180] for unbounded-space algorithms and discussed in the next section) led to on-line algorithms which are neither in class \(\mathcal{A}\mathcal{F}\) nor in \(\mathcal{A}\mathcal{A}\mathcal{F}.\)

Yao’s idea appeared in the work of Lee and Lee [143], who developed the Harmonic-Fit algorithm. It will be denoted by HF\(_{k}\) since, for the case \(\alpha = 1\), it uses at most \(k\) open bins. The algorithm divides the interval \((0,1]\) into subintervals \(I_{j} = ( \frac{1} {j+1}, \frac{1} {j}]\ (1 \leq j \leq k - 1)\) and \(I_{k} = (0, \frac{1} {k}].\) An element is called an \(I_{j}\)-element if its size belongs to interval \(I_{j}.\) Similarly, there are \(k\) different bin types: an \(I_{j}\)-bin is reserved for \(I_{j}\)-elements only. An \(I_{j}\)-element is always packed into an \(I_{j}\)-bin following the Next-Fit rule, so at most \(k\) bins are open at the same time. Galambos [92] extended the idea to general \(\alpha.\) Observe that, by selecting \(\alpha \in \left ( \frac{1} {r+1},{ 1 \over r} \right ]\), the number, say \(M\), of bin types exceeds the space bound \(k\) by \(r - 1\); this is because \(I_{j}\)-bins for \(j < r\) are never opened. Instead of the notation HF\(_{k}\) with \(k\) the space bound, the literature often uses HF\(_{M}\) with \(M = k + r - 1\) being the number of bin types.
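A minimal sketch of HF\(_{k}\) for \(\alpha = 1\) (hypothetical name `harmonic_fit`). The class index of an item of size \(s\) is \(\lfloor 1/s \rfloor\), capped at \(k\), because \(s \in (\frac{1}{j+1}, \frac{1}{j}]\) exactly when \(\lfloor 1/s \rfloor = j\). Each class then runs its own Next-Fit.

```python
from fractions import Fraction
from typing import Dict, Sequence


def harmonic_fit(sizes: Sequence[Fraction], k: int) -> int:
    """HF_k for alpha = 1; returns the number of bins used."""
    level: Dict[int, Fraction] = {}  # class j -> content of its currently open I_j-bin
    used = 0
    for s in sizes:
        j = min(k, int(1 / s))  # s in (1/(j+1), 1/j]  <=>  floor(1/s) == j
        if j not in level or level[j] + s > 1:
            used += 1  # Next-Fit within class j: close the I_j-bin and open a new one
            level[j] = s
        else:
            level[j] += s
    return used


items = [Fraction(3, 5), Fraction(2, 5), Fraction(2, 5), Fraction(1, 5), Fraction(1, 5)]
print(harmonic_fit(items, 3))  # 3, although {3/5, 2/5} and {2/5, 1/5, 1/5} would need only 2
```

The example shows the weakness that later refinements address: the \(I_{1}\)-element \(\frac{3}{5}\) sits alone in its bin even though an item of size \(\frac{2}{5}\) would fit beside it.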

The general APR can be formulated as follows. (See the end of Sect. 2 for the definitions of the quantities \(t_{s}(r)\) and \(h_{\infty }(r).\))

Theorem 10 (Lee and Lee [143], Galambos [92])

Suppose that \(L \in V _{\alpha }\) with \(\alpha \in ( \frac{1} {r+1}, \frac{1} {r}]\) for some positive integer r and choose any sequence \(k_{s},\ s \geq 1,\) such that \(t_{s}(r) < k_{s} + 1 \leq t_{s+1}(r)\). Then

The results in [92, 143] gave tight bounds only for the cases \(k = t_{j}(r) - r + 1\) and \(k = t_{j+1}(r) - r\) for integers \(j \geq 1.\) Also, considering only the \(\alpha = 1\) case, one can see that, to obtain an APR better than \(\frac{17} {10}\), at least seven open bins are needed. These observations raised two further questions:

- For the case \(\alpha = 1\), is there an on-line, bounded-space algorithm that uses fewer than 7 bins and has an APR better than \(\frac{17} {10}\)?
- What are tight bounds on HF\(_{k}\) for specific \(k\)?

An affirmative answer to the first question was given by Woeginger [175]. Using a more sophisticated interval structure based on the Golomb sequences, his Simplified Harmonic (SH\(_{k}\)) algorithm improves on the \(\frac{17} {10}\) bound with only six open bins; precisely, \(R_{\mathrm{SH}_{6}}^{\infty }\approx 1.69444.\) Moreover, Woeginger proved the following deeper, more general result.

Theorem 11 (Woeginger [175])

To achieve the worst-case performance ratio of heuristic HF \(_{k}\) with k open bins and \(\alpha = 1\), heuristic SH \(_{k}\) only needs \(O(\log \log k)\) open bins.

The second question was investigated by Csirik and Johnson [60] (see also [59]) and van Vliet [172, 173]. They gave tight bounds for the case \(\alpha = 1\) with \(k = 4\) and 5. Tight bounds for further k remain open problems.

Similarly, the general case has not been discussed exhaustively, and some questions raised by Woeginger [175] are still open:

- What is the smallest \(k\) such that there exists an on-line heuristic using \(k\)-bounded space and having an APR strictly less than \(\frac{17} {10}\)?
- What is the best possible APR for any on-line heuristic using 2-bounded space? (ABB\(_{2}\) achieves a worst-case ratio of \(\frac{17} {10}.\))
- By considering only algorithms that pack the items by the Next-Fit rule according to some fixed partition of \((0,1]\) into \(k\) subintervals, which partition gives the best APR? (It is known that for \(k \leq 2\), the best possible APR is \(2\) (see Csirik and Imreh [58]), but for \(k \geq 3\), no tight bound is known.)

Tables 1 and 2 show the best results known for bounded-space algorithms. Note that the worst-case ratios of all algorithms in Table 1 are never smaller than \(h_{\infty }(1) \approx 1.69103.\) As pointed out by Lee and Lee [143] for the \(\alpha = 1\) case, bounded-space algorithms cannot do better. As shown by Galambos [92], the result holds for general \(\alpha\) too:

Theorem 12 (Lee and Lee [143], Galambos [92])

Every bounded-space on-line bin packing algorithm \(A\) satisfies \(R_{A}^{\infty }(\alpha ) \geq h_{\infty }(r)\) for all \(\alpha,\ \frac{1} {r+1} <\alpha \leq \frac{1} {r}.\)

None of the known bounded-space algorithms achieves the lower bound using a finite number of open bins. It will be later shown (see Sect. 3.7) that the bound can be achieved with three open bins if repacking among the open bins is allowed, but without such a relaxation, the question remains open.
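For \(r = 1\), the constant \(h_{\infty }(1)\) can be computed from Sylvester's sequence \(t_{1} = 2\), \(t_{i+1} = t_{i}(t_{i} - 1) + 1\), which also supplies the item sizes \(\frac{1}{t_{i}} + \epsilon\) in the Brown–Liang lower-bound lists. The sketch below assumes the standard identity \(h_{\infty }(1) = \sum_{i \geq 1} \frac{1}{t_{i} - 1}\). The general \(t_{s}(r)\) and \(h_{\infty }(r)\) of Sect. 2 are not reproduced here. The series converges doubly exponentially, so six terms suffice.

```python
from fractions import Fraction
from typing import List


def sylvester(m: int) -> List[int]:
    """First m terms of t_1 = 2, t_{i+1} = t_i (t_i - 1) + 1."""
    t = [2]
    while len(t) < m:
        t.append(t[-1] * (t[-1] - 1) + 1)
    return t


terms = sylvester(6)
h = sum((Fraction(1, ti - 1) for ti in terms), Fraction(0))  # partial sum of h_inf(1)
print(terms)                 # [2, 3, 7, 43, 1807, 3263443]
print(round(float(h), 5))    # 1.69103
```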

3.5 Variations and the Best-in-Class

Chronologically, Yao [180] was the first to break through the \(h_{\infty }(1)\) barrier with his Refined First-Fit (RFF) algorithm. RFF classifies items into types 1, 2, 3, or 4 according to whether their sizes lie in \((0, \frac{1} {3}]\), \((\frac{1} {3}, \frac{2} {5}]\), \((\frac{2} {5}, \frac{1} {2}]\), or \((\frac{1} {2},1]\), respectively. RFF packs four sequences of bins, one for each type. With one exception, RFF packs type-\(i\) items into the sequence of type-\(i\) bins using First-Fit. The exception is that every sixth type-2 item (with a size in \((\frac{1} {3}, \frac{2} {5}]\)) is instead packed with the type-4 items, that is, by First-Fit into the sequence of type-4 bins. It is easily verified that RFF uses unbounded space and has time complexity \(O(n\log n).\) Yao proved that \(R_{\mathrm{RFF}}^{\infty } = \frac{5} {3} = 1.666\ldots.\) Yao did not use the usual weighting function technique but based his proof on an enumeration of the elements in each class. Also, the APR remains unchanged if the special treatment given every sixth type-2 item is instead given every \(m\)-th type-2 item, where \(m\) is one of 7, 8, or 9.
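A sketch of RFF that follows the description above (hypothetical name `refined_first_fit`, \(C = 1\)). Any finer details of Yao's paper are not reproduced. The parameter `m` is the period of the special treatment of type-2 items: 6 in RFF, and 7, 8, or 9 in the variants with the same APR.

```python
from fractions import Fraction
from typing import Dict, List, Sequence


def refined_first_fit(sizes: Sequence[Fraction], m: int = 6) -> int:
    """Four First-Fit bin sequences, one per type; every m-th type-2 item goes with type 4."""
    def item_type(s: Fraction) -> int:
        if s <= Fraction(1, 3):
            return 1
        if s <= Fraction(2, 5):
            return 2
        if s <= Fraction(1, 2):
            return 3
        return 4

    seqs: Dict[int, List[Fraction]] = {1: [], 2: [], 3: [], 4: []}  # bin contents per sequence
    type2_seen = 0
    for s in sizes:
        t = item_type(s)
        if t == 2:
            type2_seen += 1
            if type2_seen % m == 0:  # every m-th type-2 item joins the type-4 bins
                t = 4
        levels = seqs[t]
        for i, lev in enumerate(levels):  # First-Fit within the chosen sequence
            if lev + s <= 1:
                levels[i] = lev + s
                break
        else:
            levels.append(s)
    return sum(len(v) for v in seqs.values())


items = [Fraction(3, 5)] + [Fraction(2, 5)] * 6
print(refined_first_fit(items))  # 4: three type-2 bins, plus {3/5, 2/5} in the type-4 sequence
```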

It was immediately clear that this reservation technique was a promising approach, a fact supported by the Harmonic-Fit algorithm discussed in the previous section. The main disadvantage of the latter algorithm is that each \(I_{1}\)-element, even one with a size only slightly over \(\frac{1} {2}\), is packed alone into a bin. An immediate improvement is to try to add other items to these bins. In their algorithm Refined Harmonic-Fit (RHF), Lee and Lee [142, 143] modified HF\(_{20}\) by subdividing each of the intervals \(I_{1}\) and \(I_{2}\) into two subintervals, \(I_{1} = I_{1,s} \cup I_{1,b}\) and \(I_{2} = I_{2,s} \cup I_{2,b}\),

with \(I_{2,s} = (\frac{1} {3}, \frac{37} {96}]\), \(I_{2,b} = (\frac{37} {96}, \frac{1} {2}]\), \(I_{1,s} = (\frac{1} {2}, \frac{59} {96}]\), and \(I_{1,b} = (\frac{59} {96},1].\) This brought the number of bin types to \(M = 22.\) The packing strategy for \(I_{j}\)-elements (\(3 \leq j \leq M - 2 = 20\)), \(I_{1,b}\)-elements, and \(I_{2,b}\)-elements is the same as in Harmonic-Fit, but the \(I_{1,s}\)-elements and the \(I_{2,s}\)-elements are allowed to share the same bins in certain situations. The details are omitted. Note that RHF runs in \(O(n)\) time, although it is no longer bounded space. Its APR is bounded as follows.

Theorem 13 (Lee and Lee [143])

\(R_{\mathrm{RHF}}^{\infty }\leq \frac{373} {228} \approx 1.63596.\)

It is not known whether this bound is tight.

In 1989, several improved algorithms were presented by Ramanan et al. [159]. The first one, called Modified Harmonic-Fit (MHF), applies Yao’s idea in a more sophisticated way. Instead of fixing \(\frac{59} {96}\) as the point dividing \((\frac{1} {2},1]\), MHF treats this division point as a parameter. Let the number of bin types satisfy \(M \geq 5\), and consider the subdivision of \((0,1]\):

\[
(0,1] = I_{1,b} \cup I_{1,s} \cup I_{2,b} \cup I_{2,s} \cup \bigcup_{j=3}^{M-2} I_{j},
\]

where \(I_{j} = (\frac{1}{j+1}, \frac{1}{j}]\) for \(3 \leq j \leq M-3\) and \(I_{M-2} = (0, \frac{1}{M-2}]\), with \(I_{1} = I_{1,s} \cup I_{1,b}\) and \(I_{2} = I_{2,s} \cup I_{2,b}\) as earlier, but now

\[
I_{1,b} = (1-y,1],\quad I_{1,s} = (\tfrac{1}{2},1-y],\quad I_{2,b} = (y,\tfrac{1}{2}],\quad I_{2,s} = (\tfrac{1}{3},y]
\]

for some \(y\) satisfying \(\frac{1} {3} < y < \frac{1} {2}.\) Initially, the set of empty bins is divided into \(M\) infinite classes, each associated with a subinterval. All \(I_{1,b}\)-bins, \(I_{2,s}\)-bins, \(I_{2,b}\)-bins, and \(I_{j}\)-bins (\(3 \leq j \leq M - 2\)) are used only to pack elements from the associated interval (as in Harmonic-Fit). \(I_{1,s}\)-bins, on the other hand, can contain \(I_{1,s}\)-elements and some of the \(I_{2,s}\)-elements and \(I_{j}\)-elements (\(j \in \{ 3,6,7,\ldots,M - 2\}\)). The algorithm also includes more complicated rules for packing and for deciding when items with sizes in different intervals can share the same bin. For this algorithm with \(M = 40\), the following bounds hold.

Theorem 14 (Ramanan et al. [159])

The above algorithm was further generalized by Ramanan et al. [159] who introduced a sequence of classes of on-line linear-time algorithms, called \({C}^{(h)}\); an algorithm A belongs to \({C}^{(h)}\), for a given \(h \geq 1\), if it divides \((0,1]\) into disjoint subintervals including

where \(\frac{1} {3} = y_{0} < y_{1} <\ldots < y_{h} < y_{h+1} = \frac{1} {2}\), and \(I_{\lambda,2} = \varnothing \) if \(\lambda = \frac{1} {3}.\) Elements are classified, as usual, according to the intervals in which their sizes fall.

Note that algorithm MHF belongs to \({C}^{(1)}.\) Ramanan et al. [159] developed an algorithm (Modified Harmonic-2, MH2), which is in \({C}^{(2)}\), and proved that \(R_{\mathrm{MH2}}^{\infty }\leq 1.612\ldots.\) The algorithm is quite elaborate and beyond the scope of this survey. The authors discussed further improvements aimed at reducing the APR to \(1.59.\) They also proved the following lower bound.

Theorem 15 (Ramanan et al. [159])

There is no on-line algorithm A in \({C}^{(h)}\) such that \(R_{A}^{\infty } < \frac{3} {2} + \frac{1} {12} = 1.58333\ldots.\)

The HARMONIC+1 algorithm of Richey [160] subdivides the intervals \((\frac{1} {2},1]\) and \((\frac{1} {3}, \frac{1} {2}]\) very finely (using 76 classes of subintervals!) to allow precise pairing of items from these two intervals. It also allows items with sizes in \((\frac{1} {4}, \frac{1} {3}]\) to be combined with larger items of various sizes, not just with those of size at least \(\frac{1} {2}.\) Seiden [163] showed that this result was flawed and gave a new algorithm, HARMONIC++, whose asymptotic performance ratio is at most 1.58889; this is the current best APR for on-line bin packing.

3.6 APR Lower Bounds

In previous sections, some results concerning lower bounds on the APR were mentioned, but the fundamental problem was not considered yet: what is the best an on-line algorithm can do in the asymptotic worst case? In this section, lower bound results are discussed in chronological order.

Most of the existing lower bounds come from the same idea. To obtain a good packing, it seems advisable to first pack the large elements so that either a bin is “full enough” or its empty space can be reduced by subsequent small elements. Therefore, in order to force bad behavior on a heuristic algorithm \(A\), one should challenge it with a list in which small items come first. If A adopts a policy that packs these small items tightly, then it will not be able to find a good packing for the large items which may come later. If, instead, \(A\) leaves space for large items while packing the small ones, then the expected large items might not appear in the list. In both cases, the resulting packing will be poor.

To give a more precise description, consider a simple example involving two lists \(L_{1}\) and \(L_{2}\), each containing \(n\) identical items (\(n\) even). The size of each element in \(L_{1}\) is \(\frac{1} {2} - \epsilon \), and the size of each element in \(L_{2}\) is \(\frac{1} {2} + \epsilon.\) The asymptotic behavior of an arbitrary on-line algorithm \(A\) will be investigated on two lists: \(L_{1}\) alone and the concatenated list \(L_{1}L_{2}.\) It is easy to see that \(\mathit{OPT}(L_{1}) = \frac{n} {2}\) and \(\mathit{OPT}(L_{1}L_{2}) = n.\) Consider the behavior of \(A\) on \(L_{1}\): it will pack some elements alone into bins (say \(x\) of them) and pair up the remaining \(n - x\), so \(A(L_{1}) = \frac{n+x} {2}.\) Because \(A\) is on-line, it packs \(L_{1}\) in exactly the same way whether or not \(L_{2}\) follows. For the concatenated list \(L_{1}L_{2}\), when processing the elements of \(L_{2}\) (no two of which fit together), the best that \(A\) can do is to add one element of \(L_{2}\) to each of the \(x\) bins containing a single element of \(L_{1}\) and to pack the remaining \(n - x\) elements alone. Therefore, \(A(L_{1}L_{2}) = \frac{3n-x} {2}.\) Since \(n\) may be arbitrarily large,

\[
R_{A}^{\infty } \geq \min_{0 \leq x \leq n} \max \left\{ \frac{A(L_{1})}{\mathit{OPT}(L_{1})}, \frac{A(L_{1}L_{2})}{\mathit{OPT}(L_{1}L_{2})} \right\} = \min_{0 \leq x \leq n} \max \left\{ \frac{n+x}{n}, \frac{3n-x}{2n} \right\},
\]

for which the minimum is attained when \(x = \frac{n} {3}\) (both ratios then equal \(\frac{4}{3}\)), implying a lower bound of \(\frac{4} {3}\) for the APR of any on-line algorithm \(A.\)
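The minimax step can be checked mechanically. The sketch below (hypothetical name `worst_ratio`) evaluates, for every even choice of \(x\), the worse of the two ratios that the adversary can force using the formulas derived above, and finds the algorithm's best choice. Taking \(n = 300\) is arbitrary; any multiple of 6 makes \(x = \frac{n}{3}\) even.

```python
from fractions import Fraction


def worst_ratio(n: int, x: int) -> Fraction:
    """Larger of A(L1)/OPT(L1) and A(L1 L2)/OPT(L1 L2) when A leaves x items of L1 alone."""
    r1 = Fraction(n + x, 2) / Fraction(n, 2)  # A(L1) = (n + x)/2, OPT(L1) = n/2
    r2 = Fraction(3 * n - x, 2) / n  # A(L1 L2) = (3n - x)/2, OPT(L1 L2) = n
    return max(r1, r2)


n = 300  # even x keeps n - x even, so the remaining items pair up exactly
best_x = min(range(0, n + 1, 2), key=lambda x: worst_ratio(n, x))
print(best_x, worst_ratio(n, best_x))  # 100 4/3
```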

The above idea can easily be generalized by taking a carefully chosen series of lists \(L_{1},\ldots,L_{k}\) and evaluating the performance of a heuristic on the concatenated lists \(L_{1}\ldots L_{j}\) \((1 \leq j \leq k)\). The first step along these lines was made by Yao [180], who proved a lower bound of \(\frac{3} {2}\) based on three lists of equal-size elements, the sizes being \(\frac{1} {2} + \epsilon \), \(\frac{1} {3} + \epsilon \), and \(\frac{1} {6} - 2\epsilon.\) Using Sylvester’s sequence, Brown [32] and Liang [147] independently improved the bound to \(1.53634577\ldots\) (the item sizes in their lists were \(\frac{1} {2} + \epsilon \), \(\frac{1} {3} + \epsilon \), \(\frac{1} {7} + \epsilon \), \(\frac{1} {43} + \epsilon \), and \(\frac{1} {1807} + \epsilon\)). Galambos [92] used the Golomb sequences to extend the idea to general \(\alpha.\) The proof in [92] was considerably simplified by Galambos and Frenk [93]. Van Vliet gave an exhaustive analysis of the lower bound constructions, applying a linear programming technique for all \(\alpha\), and obtained the following lower bound:

Theorem 16 (van Vliet [171])

For any on-line algorithm \(A\), \(R_{A}^{\infty }\geq 1.54015\ldots\)

The current best-in-class, \(R_{A}^{\infty }\geq 1.54037\), was given by Balogh et al. [15]. Table 3 gives a comparison for several values of \(\alpha = \frac{1} {r}\) between the best lower bounds and the corresponding upper bounds for various algorithms. It is interesting to note that the gap between the lower and upper bounds becomes rather small for \(r \geq 2.\)

Faigle, Kern, and Turán [83] proved that if there are only two item sizes, then no on-line algorithm can be better than 4/3.

Chandra [38] has examined the effect on lower bounds when randomization is allowed in the construction of on-line algorithms, that is, when coin flips are allowed in determining where to pack items. The performance ratio for randomized algorithm A is now \(E[A(L)]/{\it \text{OPT}}(L)\), where \(E[A(L)]\) is the expected number of bins needed by A to pack the items in \(L.\) Chandra has shown that there are lists such that this ratio exceeds \(1.536\) for all randomized on-line algorithms, and so from this limited standpoint, results suggest that randomization is not a valuable tool in the design of on-line algorithms.

3.7 Semi-on-line Algorithms

The APR of on-line algorithms cannot break through the \(1.540\ldots\) barrier (see Sect. 3.6). For almost 20 years, only the pure on-line and off-line algorithms were analyzed, and no attention was paid to algorithms lying between these two classes. In a more general setting, one can consider giving the algorithm more information about the list and/or more freedom with respect to the pure on-line case. This section deals with semi-on-line (SOL) algorithms that can

- Repack elements
- Look ahead to later elements before assigning the current one
- Assume some preordering of the elements

First consider the case where repacking is allowed. SOL algorithms allowing only a restricted number of elements to be repacked at each step are called c-repacking SOL algorithms. It was seen in Sect. 3.4 that no known on-line bounded-space algorithm reaches the bound \(h_{\infty }(1)\) using finitely many open bins. It will be shown that this is possible with SOL algorithms.

In 1985, Galambos [91] made a first step in this direction. His Buffered Next-Fit (BNF) algorithm uses two open bins, say \(B_{1}\) and \(B_{2}.\) The arriving elements are initially packed into \(B_{1}\), until the first element arrives for which \(B_{1}\) does not have enough space. This element and those currently packed in \(B_{1}\) are then reordered by decreasing size and repacked in \(B_{1}\) and \(B_{2}\) following the Next-Fit rule. \(B_{1}\) is now closed, \(B_{2}\) is renamed \(B_{1}\), and a new bin \(B_{2}\) is opened. Using a weighting function approach, it was proved that \(h_{\infty }(1) \leq R_{\mathrm{BNF}}^{\infty }\leq \frac{18} {10}.\)
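A minimal sketch of BNF as just described (hypothetical name `buffered_next_fit`, \(C = 1\)). It recomputes bin sums for clarity rather than speed. The repacking always fits in two bins: either the new item lands in the first bin and the second receives only old items, or the first bin holds an old item at least as large as the new one.

```python
from fractions import Fraction
from typing import List, Sequence


def buffered_next_fit(sizes: Sequence[Fraction], capacity: Fraction = Fraction(1)) -> int:
    """BNF: fill B1; on overflow, repack B1 plus the item by decreasing size with Next-Fit."""
    closed = 0
    b1: List[Fraction] = []
    for s in sizes:
        if sum(b1) + s <= capacity:
            b1.append(s)
            continue
        first: List[Fraction] = []
        second: List[Fraction] = []
        for a in sorted(b1 + [s], reverse=True):  # decreasing size, Next-Fit into two bins
            if not second and sum(first) + a <= capacity:
                first.append(a)
            else:
                second.append(a)
        closed += 1  # B1 is closed ...
        b1 = second  # ... B2 is renamed B1, and a new empty B2 is opened implicitly
    return closed + (1 if b1 else 0)


items = [Fraction(1, 2), Fraction(1, 3), Fraction(1, 2), Fraction(2, 3)]
print(buffered_next_fit(items))  # 2: {1/2, 1/2} and {1/3, 2/3}
```

Plain NF needs three bins on this list. The repacking step lets the two halves share a bin.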

Galambos and Woeginger [95] generalized the above idea, adopting a better weighting function. Let \(w(B)\) be the sum of the weights associated with the items currently packed in bin \(B.\) Their Repacking algorithm (REP\(_{3}\)) uses three open bins. When a new item \(a_{i}\) arrives, the following steps are performed: (i) \(a_{i}\) is packed into an empty bin; (ii) all the elements in the three open bins, sorted by nonincreasing size, are repacked by the FF strategy, with the result that either one bin becomes empty or at least one bin B has \(w(B) \geq 1\); and (iii) all bins B with \(w(B) \geq 1\) are closed and replaced by new empty bins. In [95], it was proved that this repacking in fact helps, since \(R_{\mathrm{REP}_{3}}^{\infty } = h_{\infty }(1).\) It is not known whether the same result can be obtained with two bins.

Gambosi et al. [99] (see also [97] and [98]) were the first to beat the \(1.540\) on-line bound via repacking. They gave two algorithms. Both of them use an unusual step to repack the elements: the small elements are grouped into a “bundle” of \(O(n)\) elements, which can be repacked in a single step.

In the first algorithm, A\(_{1}\), the interval \((0,1]\) is divided into four subintervals, and the elements are packed into bins in a Harmonic-like way. As each new item is packed, groups of small elements can be repacked – in a bundle – so as to fill up gaps in bins that are not full enough. By using appropriate data structures, this repacking is performed in constant time. The algorithm has linear time and space complexity, and it has an APR, \(R_{\mathrm{A}_{1}}^{\infty }\leq \frac{3} {2}.\) In the second algorithm, A\(_{2}\), the unit interval is divided into six subintervals, and, as each new item is encountered, the elements are repacked more carefully, in \(O(\log n)\) time, by means of a pairing technique analogous to that introduced by Martel (see Sect. 4.2). The time complexity of A\(_{2}\) is \(O(n\log n)\), and its APR is \(R_{\mathrm{A}_{2}}^{\infty }\leq \frac{4} {3}.\)

Ivkovic and Lloyd [122] gave a further improvement: an SOL algorithm with a \(\frac{5} {4}\) worst-case ratio. Their algorithm is much more complicated than the previous ones, as it was designed for the dynamic packing case. The dynamic bin packing problem is considered in detail in Sect. 7.1; here it suffices to know that in dynamic packing, some elements may be deleted at each step, and \(A(L)\) is defined as the maximum number of occupied bins during the packing. Ivkovic and Lloyd proved a \(\frac{4} {3}\) lower bound for c-repacking SOL algorithms. This result was improved by Balogh et al. [14].

Theorem 17 (Balogh et al. [14])

For any c-repacking SOL algorithm A, the APR satisfies \(R_{A}^{\infty }\geq 1.3871\ldots.\)

Ivkovic and Lloyd [121] also presented approximation schemes for their dynamic, SOL model, applying the techniques of Fernandez de la Vega and Lueker and those of Karmarkar and Karp cited in Sect. 4.3.

Consider now the case in which the algorithm is allowed to look ahead, in the sense that, when an element arrives, it is not necessary to pack it immediately; one is allowed to collect further elements whose sizes can affect the packing decision. To avoid reducing the problem to the off-line case, consider bounded lookahead. Grove [111] proposed a \(k\)-bounded algorithm that had, in addition, a capacity (or warehouse) constraint \(W.\) The algorithm can delay the packing of item \(a_{i}\) until it has collected all subsequent elements \(a_{i+1},\ldots,a_{j}\) such that \(\sum _{r=i}^{j}a_{r} \leq W.\) For any fixed \(k\) and \(W\), the \(h_{\infty }(1)\) lower bound (see Theorem 12) remains valid, but Grove’s Revised Warehouse (RW) algorithm reaches the bound if \(W\) is sufficiently large. In his proof, Grove uses a weighting function argument.

Another subclass of the lookahead SOL problems arises if the input list is divided into a number of batches. If an algorithm works on a batched list, then it has to pack each batch as an off-line list (i.e., lookahead is only possible within the current batch). However, while packing elements of the current batch, the items of earlier batches cannot be moved. If the number of batches is bounded by \(m\), the problem is called m-batched bin packing (BBP) problem. Gutin et al. [112] were the first to study the BBP problem: they investigated in depth the 2-BBP problem and proved a lower bound of \(1.3871\ldots\) for this case.

Finally, consider the rarely studied class of algorithms that assume the input list is presorted. From the lower bound constructions, it is easy to see that the on-line lower bounds remain valid if the list is presorted by increasing item size. If the list is presorted by decreasing item size, Johnson's result gives \(\frac{11} {9} \leq R_{A}^{\infty }\leq \frac{5} {4}\) for any algorithm \(A \in \mathcal{A}\mathcal{F}\) (see Theorem 21). This raised the broader question of lower bounds: how good can an arbitrary algorithm be? A partial answer was given in the early 1980s by Csirik et al. [64]. They proved that if the list is presorted by decreasing item size, then \(R_{A}^{\infty }\geq \frac{8} {7}\) for all algorithms A. Although their construction was very simple (it used only two different list types), no progress was made until Balogh et al. [15] recently gave an improved lower bound:

Theorem 18 (Balogh et al. [15])

If the list is presorted by decreasing item size, then \(R_{A}^{\infty }\geq \frac{54} {47}\) for all algorithms A.

4 Off-line Algorithms

An off-line algorithm has all items available for preprocessing, reordering, grouping, etc. before packing. As seen earlier, most of the classical on-line algorithms attain their worst-case ratio when the items are packed in increasing order of size (see, e.g., FF and BF) or when small and large items are mixed (see, e.g., NF). One is thus led to expect better behavior if the items are first sorted in decreasing order of size. Note that the \(O(n\log n)\) sorting step means that the resulting algorithm no longer runs in linear time.

The review starts with approaches that sort the items before running one of the on-line algorithms. Linear-time heuristics are then considered. The section concludes with approximation schemes and a discussion of the anomalous behavior exhibited by many bin packing algorithms, both on-line and off-line.

4.1 Algorithms with Presorting

When the sorted list is packed according to the Next-Fit rule, one obtains the Next-Fit Decreasing (NFD) algorithm. This heuristic was investigated by Baker and Coffman, who proved by a weighting function argument that its APR is slightly better than that of FF and BF:

Theorem 19 (Baker and Coffman [13])

If \(\alpha \in ( \frac{1} {r+1}, \frac{1} {r}]\ (r \geq 1)\), then \(R_{\mathrm{NFD}}^{\infty }(\alpha ) = h_{\infty }(r).\)
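A minimal Python sketch of NFD follows. It assumes integer (or exact rational) item sizes no larger than a common capacity. The function name `next_fit_decreasing` is ours and does not come from the cited papers. After the single sort, only the most recently opened bin is ever examined, so the packing phase itself is linear.

```python
def next_fit_decreasing(sizes, capacity=1):
    """Sort in decreasing order, then apply Next-Fit: only the last bin stays open."""
    bins = []
    load = 0
    for s in sorted(sizes, reverse=True):
        if bins and load + s <= capacity:
            bins[-1].append(s)   # item fits in the current (last) bin
            load += s
        else:
            bins.append([s])     # close the current bin and open a new one
            load = s
    return bins


print(next_fit_decreasing([2, 5, 4, 7, 1, 3, 8], capacity=10))
# [[8], [7], [5, 4], [3, 2, 1]]
```

On this small list the sketch uses four bins. An optimal packing, {8, 2}, {7, 3}, {5, 4, 1}, needs only three.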

Packing the sorted list according to First-Fit or Best-Fit gives the algorithms First-Fit Decreasing (FFD) and Best-Fit Decreasing (BFD), with much better asymptotic worst-case performance.

Theorem 20 (Johnson et al. [129])

\(R_{\mathrm{FFD}}^{\infty } = R_{\mathrm{BFD}}^{\infty } = \frac{11} {9}.\)

The original proof was based on the weighting function technique, and later proofs became dramatically shorter. The giant case analysis made in 1973 by Johnson [126] was considerably shortened by Baker [12] in 1985, and in 1991, Yue [183] presented the shortest proof known so far. In parallel, the additive constant was also improved. Johnson had proved that \(\mathrm{FFD}(L) \leq \frac{11} {9} \mathit{OPT}(L) + 4\), but Baker reduced the constant to 3, and Yue reduced it to 1. Then, in 1997, Li and Yue [146] further reduced the constant to \(\frac{7} {9}\) and conjectured the tight value to be \(\frac{5} {9}.\) However, in 2007, Dósa [68] closed the issue by proving that the tight value is \(\frac{6} {9}.\)
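The FFD and BFD rules can be sketched as follows. This illustrative version uses quadratic-time scans and hypothetical function names. An \(O(n\log n)\) implementation would keep the bin loads in a search tree instead of scanning them. The usage lines pack a list of a type commonly used to show that the \(\frac{11} {9}\) ratio is attained. The list is scaled to integer sizes with capacity 100, where 51, 27, 26, and 23 play the roles of \(\frac{1}{2}+\epsilon\), \(\frac{1}{4}+2\epsilon\), \(\frac{1}{4}+\epsilon\), and \(\frac{1}{4}-2\epsilon\).

```python
def first_fit_decreasing(sizes, capacity=1):
    """Pack items in decreasing size order, each into the lowest-indexed bin with room."""
    bins, loads = [], []
    for s in sorted(sizes, reverse=True):
        for i in range(len(loads)):
            if loads[i] + s <= capacity:
                bins[i].append(s)
                loads[i] += s
                break
        else:  # no open bin has room: open a new one
            bins.append([s])
            loads.append(s)
    return bins


def best_fit_decreasing(sizes, capacity=1):
    """Pack items in decreasing size order, each into the fullest bin with room."""
    bins, loads = [], []
    for s in sorted(sizes, reverse=True):
        best = None
        for i in range(len(loads)):
            # strict '>' keeps the lowest index among equally full bins
            if loads[i] + s <= capacity and (best is None or loads[i] > loads[best]):
                best = i
        if best is None:
            bins.append([s])
            loads.append(s)
        else:
            bins[best].append(s)
            loads[best] += s
    return bins


# Capacity 100; an optimal packing uses 9 bins:
# six bins {51, 26, 23} and three bins {27, 27, 23, 23}.
tight = [51] * 6 + [27] * 6 + [26] * 6 + [23] * 12
print(len(first_fit_decreasing(tight, 100)))  # 11
print(len(best_fit_decreasing(tight, 100)))   # 11
```

Both rules use 11 bins here, while 9 suffice. FFD places each 27 next to a 51, and the remaining gaps of 22 then fit neither a 26 nor a 23. The result \(11 = \frac{11}{9}\cdot 9\) is consistent with the bound \(\mathrm{FFD}(L) \leq \frac{11}{9}\mathit{OPT}(L) + \frac{6}{9}\).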

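The behavior of BFD for general \(\alpha\) is not known, but that of FFD has been intensively investigated. Johnson et al. [129] analyzed several cases, showing that

\[R_{\mathrm{FFD}}^{\infty }(\alpha ) = \begin{cases} \frac{71} {60} & \text{if } \frac{8} {29} < \alpha \leq \frac{1} {2},\\ \frac{7} {6} & \text{if } \frac{1} {4} < \alpha \leq \frac{8} {29},\\ \frac{23} {20} & \text{if } \frac{1} {5} < \alpha \leq \frac{1} {4}, \end{cases}\]

and conjecturing that, for any integer \(m \geq 4\),

\[R_{\mathrm{FFD}}^{\infty }\left (\frac{1} {m}\right ) = F_{m} := 1 + \frac{1} {m + 2} - \frac{2} {m(m + 1)(m + 2)}.\]

Twenty years later, Csirik [57] proved that the above conjecture is valid only for m even, and that, for m odd,

\[R_{\mathrm{FFD}}^{\infty }\left (\frac{1} {m}\right ) = G_{m} := 1 + \frac{1} {m + 2} - \frac{1} {m(m + 1)(m + 2)}.\]

In the same year, the complete analysis of FFD for arbitrary values of \(\alpha \leq \frac{1} {4}\) was published by Xu [178] (see also [179]). Xu showed that if m is even, then \(F_{m}\) is the correct APR for any \(\alpha\) in \(( \frac{1} {m+1}, \frac{1} {m}]\), while for m odd, the interval has to be divided into two parts, with

\[R_{\mathrm{FFD}}^{\infty }(\alpha ) = \begin{cases} F_{m} & \text{if } \frac{1} {m+1} < \alpha \leq d_{m},\\ G_{m} & \text{if } d_{m} < \alpha \leq \frac{1} {m}, \end{cases}\]

where \(d_{m} := {(m + 1)}^{2}/({m}^{3} + 3{m}^{2} + m + 1).\)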
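The closed forms can be checked with exact rational arithmetic. The sketch below takes \(F_{m} = 1 + \frac{1}{m+2} - \frac{2}{m(m+1)(m+2)}\) and \(G_{m} = 1 + \frac{1}{m+2} - \frac{1}{m(m+1)(m+2)}\), with \(d_{m}\) as defined above, and encodes the case split for \(\alpha \leq \frac{1}{4}\) described in the text. The helper names are hypothetical. As a consistency check, \(m = 3\) reproduces the breakpoint \(\frac{8}{29}\) and the ratios \(\frac{7}{6}\) and \(\frac{71}{60}\) of Johnson et al., and \(F_{4} = \frac{23}{20}\).

```python
import math
from fractions import Fraction


def F(m):  # mirrors the notation F_m
    return 1 + Fraction(1, m + 2) - Fraction(2, m * (m + 1) * (m + 2))


def G(m):  # mirrors the notation G_m
    return 1 + Fraction(1, m + 2) - Fraction(1, m * (m + 1) * (m + 2))


def d(m):  # mirrors the notation d_m
    return Fraction((m + 1) ** 2, m ** 3 + 3 * m ** 2 + m + 1)


def ffd_apr(alpha):
    """APR of FFD when all item sizes are at most alpha, for 0 < alpha <= 1/4."""
    alpha = Fraction(alpha)
    m = math.floor(1 / alpha)          # alpha lies in (1/(m+1), 1/m]
    if m < 4:
        raise ValueError("this case split covers only alpha <= 1/4")
    if m % 2 == 0 or alpha <= d(m):
        return F(m)
    return G(m)


# m = 3 recovers the breakpoint and ratios for 1/4 < alpha <= 1/3
print(d(3), F(3), G(3))                                  # 8/29 7/6 71/60
print(ffd_apr(Fraction(1, 4)), ffd_apr(Fraction(1, 5)))  # 23/20 239/210
```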

Recall that, after a presorting stage, all of the above algorithms belong to the Any-Fit class (see Sect. 3.3). Johnson showed that, after a presort in increasing order, Any-Fit algorithms do not perform well in the worst case; for example, their APRs must be at least \(h_{\infty }(1)\) when \(\alpha = 1.\) But presorting in decreasing order is much more useful.

Theorem 21 (Johnson [126, 127])

Any algorithm \(A \in \mathcal{A}\mathcal{F}\) operating on a list presorted in decreasing-size order must have

\[\frac{11} {9} \leq R_{A}^{\infty }\leq \frac{5} {4}.\]

For a long time, the FFD bound was the smallest proved APR. Johnson [126] made an interesting attempt to obtain a better APR. His Most-k-Fit (MF\(_{k}\)) algorithm takes elements from both ends of the sorted list, packing bins one at a time. At any step, after trying to place the largest unpacked element into the current bin, the algorithm attempts to fill up the remaining space in the bin using the smallest k (or fewer) as yet unpacked items. As soon as the available space becomes smaller than the smallest unpacked element, the algorithm starts packing a new bin. The algorithm has time complexity \(O({n}^{k}\log n)\), so it is practical only for small \(k.\) Johnson conjectured that \(\lim _{k\rightarrow \infty }R_{\mathrm{MF}_{k}}^{\infty } = \frac{10} {9}\), but almost 20 years later, the conjecture was contradicted by Friesen and Langston [90], who gave examples for which \(R_{\mathrm{MF}_{k}}^{\infty }\geq \frac{5} {4}\), \(k \geq 2.\)

Yao [180] devised the first improvement to FFD. He presented a complicated \(O({n}^{10}\log n)\) algorithm, called Refined First-Fit Decreasing (RFFD), with worst-case ratio \(R_{\mathrm{RFFD}}^{\infty }\leq \frac{11} {9} - 1{0}^{-7}.\) Following this result, further efforts were made to develop better off-line algorithms. Garey and Johnson [102] proposed the Modified First-Fit Decreasing (MFFD) algorithm. The main idea is to supplement FFD with an attempt to improve that part of the packing containing bins with items of sizes larger than \(\frac{1} {2}\) by trying to pack in these bins pairs of items (to be called S items) with sizes in \((\frac{1} {6}, \frac{1} {3}].\) The non-FFD decisions of MFFD occur only during the packing of S items. At the time these items come up for packing, the bins currently containing a single item larger than \(\frac{1} {2}\) are packed first, where possible, in decreasing-gap order as follows. In packing the next such bin, MFFD first checks whether there are two still unpacked S items that can fit into the bin; if not, MFFD finishes out the remaining packing just like FFD. Otherwise, the smallest available S item is packed first in the bin; the largest remaining available S item that fits with it is packed second. The running time of MFFD is not appreciably larger than that for FFD, but Garey and Johnson proved that

Theorem 22 (Garey and Johnson [102])

\(R_{\mathrm{MFFD}}^{\infty } = \frac{71} {60} = 1.18333\ldots.\)
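The MFFD pairing step can be sketched directly from the description above. This is an illustrative reading, not Garey and Johnson's code. The function name, the use of integer sizes with capacity C, and the tie-breaking among bins with equal gaps are our assumptions. The First-Fit scans are kept quadratic for simplicity.

```python
def modified_ffd(sizes, capacity):
    """Sketch of MFFD as described in the text; integer sizes recommended."""
    bins, loads = [], []

    def ffd_place(s):
        # plain First-Fit: lowest-indexed bin with room, else a new bin
        for i in range(len(loads)):
            if loads[i] + s <= capacity:
                bins[i].append(s)
                loads[i] += s
                return
        bins.append([s])
        loads.append(s)

    items = sorted(sizes, reverse=True)
    # Items larger than C/3 are packed exactly as FFD would pack them
    for s in items:
        if 3 * s > capacity:
            ffd_place(s)
    # S items (sizes in (C/6, C/3]) in increasing order; the rest are small
    s_items = sorted(s for s in items if 6 * s > capacity >= 3 * s)
    small = [s for s in items if 6 * s <= capacity]
    # Bins holding a single item larger than C/2, largest gap first
    singles = [i for i, b in enumerate(bins) if len(b) == 1 and 2 * b[0] > capacity]
    singles.sort(key=lambda i: capacity - loads[i], reverse=True)
    for i in singles:
        gap = capacity - loads[i]
        # stop as soon as the two smallest S items no longer fit together
        if len(s_items) < 2 or s_items[0] + s_items[1] > gap:
            break
        first = s_items.pop(0)  # smallest S item
        # largest remaining S item that still fits next to `first`
        j = max(k for k, s in enumerate(s_items) if s <= gap - first)
        second = s_items.pop(j)
        bins[i] += [first, second]
        loads[i] += first + second
    # Finish with ordinary FFD on everything still unpacked
    for s in sorted(s_items + small, reverse=True):
        ffd_place(s)
    return bins


print(len(modified_ffd([31] * 3 + [20] * 3 + [15] * 3 + [14] * 3, 60)))  # 4
```

On this list with C = 60, the sketch fills each bin holding a 31 with a 14 and a 15 and then puts the three 20s together, using 4 bins. Plain FFD instead places a 20 next to each 31 and needs 5 bins.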

Another modification of FFD was presented by Friesen and Langston [90]. Their Best Two-Fit (B2F) algorithm starts by filling one bin at a time, greedily; when no further element fits into the current bin, and the bin contains more than one element, an attempt is made to replace the smallest one by two unpacked elements with sizes at least \(\frac{1} {6}.\) When all the unpacked elements have sizes smaller than \(\frac{1} {6}\), the standard FFD algorithm is applied. Friesen and Langston proved that \(R_{\mathrm{B2F}}^{\infty } = \frac{5} {4}\), which is worse than \(\frac{11} {9}.\) However, they further showed that a combined algorithm (CFB), which runs both B2F and FFD and takes the better packing, has an improved APR.

Theorem 23 (Friesen and Langston [90])

\(1.16410\ldots = \frac{227} {195} \leq R_{\mathrm{CFB}}^{\infty }\leq \frac{6} {5} = 1.2.\)

Concerning the absolute worst-case ratio, Johnson et al. [129] had already conjectured that, if the number of bins in the optimal solution is more than 20, then the absolute worst-case ratio of FF is no more than 1.7. The first results were given by Johnson et al. [129] and Simchi-Levi [169]. They showed that FF and BF have an absolute performance bound not greater than 1.75 and that FFD and BFD have an absolute performance ratio of 1.5. The latter is the best possible for the classical bin packing problem, unless \(\mathcal{P} = \mathcal{N}\mathcal{P}.\) Xie and Liu [177] improved the bound for FF to 1.737. Zhang et al. [188] provided a linear-time bounded-space off-line approximation algorithm with an absolute worst-case ratio of 1.5 and a linear-time bounded-space on-line algorithm with an absolute worst-case ratio of 1.75. Berghammer and Reuter [24] gave a different linear-time algorithm with an absolute worst-case ratio of 1.5.

4.2 Linear Time, Randomization, and Other Approaches

The off-line algorithms analyzed so far have time complexity at least \(O(n\log n).\) It is also interesting to see what can be accomplished in linear time – in particular, without sorting. The first such heuristic was constructed by Johnson [127]. His Group-X-Fit Grouped (GXFG) algorithm depends on the choice of a set of breakpoints \(X\), defined by a sequence of real numbers \(0 = x_{0} < x_{1} <\ldots < x_{p} = 1.\) For a given \(X\), the algorithm partitions the items, according to their size, into at most \(p\) classes, and renumbers them in such a way that items of the same class are consecutive and classes are ordered by decreasing maximum size. The bins are also collected into p groups according to their actual gap, defined as the maximum \(x_{j}\) such that the current empty space in the bin is at least \(x_{j}.\) The items are packed using the Best-Fit rule with respect to the actual gaps. The algorithm can be implemented so as to require linear time and has the following APR.

Theorem 24 (Johnson [127])

For all \(m \geq 1\), if X contains \(\frac{1} {m+2}\), \(\frac{1} {m+1}\), and \(\frac{1} {m}\), then \(R_{\mathrm{GXFG}}^{\infty }(\alpha ) = \frac{m+2} {m+1}\) for all \(\alpha \leq 1\) such that \(m = \left \lfloor \frac{1} {\alpha } \right \rfloor.\)
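The GXFG idea can be sketched with exact fractions and unit capacity. Items are bucketed by class rather than sorted, and each bin is tracked only through the group of its actual gap. For a fixed number p of breakpoints, each item therefore costs O(p) work. The text does not fix several details, so the following are assumptions: the function name, which bin is taken from a group (here the most recently added one), and the processing order within a class (here input order).

```python
from fractions import Fraction


def gxfg(sizes, breakpoints):
    """Group-X-Fit Grouped sketch; unit capacity, breakpoints 0 = x_0 < ... < x_p = 1."""
    x = list(breakpoints)
    p = len(x) - 1

    def item_class(s):
        # the class j with x_{j-1} < s <= x_j
        return next(j for j in range(1, p + 1) if s <= x[j])

    def gap_class(space):
        # actual gap: the largest j with space >= x_j
        return max(j for j in range(p + 1) if space >= x[j])

    # Bucket items by class: no comparison sort is needed
    buckets = [[] for _ in range(p + 1)]
    for s in sizes:
        buckets[item_class(s)].append(s)

    contents, loads = [], []
    groups = [[] for _ in range(p + 1)]  # groups[k]: bins whose actual gap is x_k
    for j in range(p, 0, -1):             # classes in decreasing order of maximum size
        for s in buckets[j]:
            target = None
            # Best Fit on actual gaps: smallest x_k >= x_j, which surely holds s
            for k in range(j, p + 1):
                if groups[k]:
                    target = groups[k].pop()
                    break
            if target is None:            # open a new bin
                target = len(loads)
                contents.append([])
                loads.append(Fraction(0))
            contents[target].append(s)
            loads[target] += s
            groups[gap_class(1 - loads[target])].append(target)
    return contents


X = [Fraction(0), Fraction(1, 4), Fraction(1, 3), Fraction(1, 2), Fraction(1)]
print(len(gxfg([Fraction(3, 5), Fraction(2, 5)], X)))  # 2
```

With these breakpoints, the list [3/5, 2/5] ends up in two bins. After 3/5 is packed, the empty space 2/5 is rounded down to the actual gap 1/3. The item 2/5, which lies in the class (1/3, 1/2], is therefore never offered that bin, even though it would fit. This rounding is the price paid for avoiding a sort.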

For \(\alpha = 1\), the above theorem gives \(R_{\mathrm{GXFG}}^{\infty } = \frac{3} {2}\), a bound subsequently improved by Martel. His algorithm, H\(_{4}\), uses a set \(X =\{ \frac{1} {4}, \frac{1} {3}, \frac{1} {2}, \frac{2} {3}\}\), but it does not reorder the items. It instead inserts them into heaps and uses a linear search for the median-size item. The packing strategy makes use of an elaborate pairing technique.

Theorem 25 (Martel [154])

\(R_{\mathrm{H}_{4}}^{\infty } = \frac{4} {3}.\)

Later, Békési et al. [23] applied Martel's idea to the set \(X =\{ \frac{1} {5}, \frac{1} {4}, \frac{1} {3}, \frac{3} {8}, \frac{1} {2}, \frac{2} {3}, \frac{4} {5}\}\) and improved the bound to \(\frac{5} {4}.\) In experimental comparisons, they further showed that this linear-time algorithm is faster than the simpler FFD rule even for small problem instances. They also noted that using more breakpoints can improve the result further. The resulting algorithms would still run in linear time, but their constant factors would be too large for practical use.

Table 4 summarizes the tightest worst-case ratios of off-line algorithms (H\(_{7}\) denotes the algorithm of Békési and Galambos [22]).

As a final note on fast off-line algorithms, the reader is referred to the work of Anderson et al. [4] on implementing approximation algorithms on parallel architectures. They show that, with \(n/\log n\) processors in the EREW PRAM model, a packing with the same asymptotic \(\frac{11} {9}\) bound as FFD can be obtained in \(O(\log n)\) time.

Caprara and Pferschy [34] proposed a simple (although non-polynomial) algorithm, discussed in Sect. 7.2, for which they proved an upper bound on the worst-case ratio of \(4/3 +\ln 4/3 = 1.62102\ldots.\)

4.3 Asymptotic Approximation Schemes

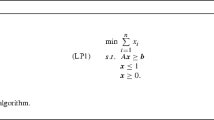

In 1980, Yao [180] raised an interesting question: does there exist an \(\epsilon> 0\) such that every \(O(n)\)-time algorithm \(A\) must satisfy the lower bound \(R_{A}^{\infty }\geq 1 + \epsilon \)? Fernandez de la Vega and Lueker [84] answered the question in the negative by constructing an asymptotic linear approximation scheme for bin packing. In their important paper, LP (linear programming) relaxations were first introduced as a technique for devising bin packing approximation algorithms. (See Sect. 8.1 for an integer programming formulation of the bin packing problem.) In this section, their result is first discussed, and then some improvements proposed by Johnson and by Karmarkar and Karp are introduced. Further discussion can be found in [52, 116].

The main idea in [84] is the following. Given an \(\epsilon,\ 0 < \epsilon < 1\), define \(\epsilon _{1}\) so that \(\frac{\epsilon } {2} < \epsilon _{1} \leq \frac{\epsilon } {\epsilon +1}.\) Instead of packing the given list \(L\), the algorithm packs a concatenation of three lists \(L_{1}L_{2}L_{3}\) determined as follows:

-

\(L_{1}\) contains all the elements of L with sizes smaller than \(\epsilon _{1}.\)

-

\(L_{2}\) is a list of \((m - 1)h\) dummy elements with “rounded” sizes, corresponding to the \((m - 1)h\) smallest elements of \(L \setminus L_{1}\), where \(m = \left \lceil \frac{4} {{\epsilon }^{2}} \right \rceil \) and \(h = \left \lfloor \frac{\vert L\setminus L_{1}\vert } {m} \right \rfloor.\) The elements of \(L_{2}\) have only \(m - 1\) different sizes, and, for each size \(s\), the list has h elements; the sizes of the corresponding h elements in \(L \setminus L_{1}\) are no greater than \(s.\)

-