Abstract

Much of the early research in aversive learning concerned motivation and reinforcement in avoidance conditioning and related paradigms. When the field transitioned toward the focus on Pavlovian threat conditioning in isolation, this paved the way for the clear understanding of the psychological principles and neural and molecular mechanisms responsible for this type of learning and memory that has unfolded over recent decades. Currently, avoidance conditioning is being revisited, and with what has been learned about associative aversive learning, rapid progress is being made. We review, below, the literature on the neural substrates critical for learning in instrumental active avoidance tasks and conditioned aversive motivation.

1 Introduction

Close to the finish line of the 2013 Boston marathon, there were two explosions that killed three people and injured over two hundred others. These explosions caused a chaotic burst of activity in the group of people gathered in the area for the event. Immediately upon detonation of the bombs, people in the crowd oriented toward the explosions and froze, and then, they began to flee the site. This sequence of reaction and action is repeatedly seen in emotionally evocative experiences in human and non-human animals (LeDoux 1996a; LeDoux and Gorman 2001). While reactions are inflexible responses automatically elicited by a stimulus, actions are responses or behaviors that are produced by an organism. Reactive behaviors are genetically embedded into an organism’s response repertoire as prepared consequences to significant environmental pressures (e.g., predators; Hirsch and Bolles 1980; Coss and Biardi 1997). On the other hand, actions are behaviors learned by their reinforcing consequences (for example, a child learning to cry in order to receive attention) performed in order to obtain some goal or reward.

Using animal models, research on the neural basis of emotion has uncovered a great deal on how threatening conditioned stimuli come to control defensive reactions (LeDoux 2014, 2015). Progress on the neural foundations of aversively motivated actions has been slower, though recent times have seen a resurgence of interest in deciphering the circuits and mechanisms underlying these behaviors. In this chapter, we will describe the relationship between reactions and actions insofar as they pertain to defensive motivation, and the neural substrates of these phenomena.

2 Taxonomy of Behavior

Among the forms of behaviors exhibited by humans, many can be seen as fitting into four categories: reflexes, reactions, actions and habits (see Balleine and Dickinson 1998; Cardinal et al. 2002; Lang and Davis 2006; Yin and Knowlton 2006).

Reflexes are genetically prepared, stimulus-evoked behaviors that involve simple mechanisms and limited muscle groups. For example, myotatic reflexes (e.g., monosynaptic stretch reflex) do not require control outside of a single spinal level. Examples of defensive reflexes include withdrawal reflexes seen when painful stimuli are applied to the skin, or eyelid closure when an air puff is delivered to the region (i.e., NMR preparation).

Reaction and reflex behaviors share the attribute that the response itself is unlearned. Both of these types of responses are innate and are typically elicited by stimuli that have, through evolutionary processes, come to be embedded in the genetic wiring of the nervous system. However, reactions are distinct from reflexes in that they involve the whole organism and require control from components of the central nervous system (CNS) outside of the processing level of the sensory stimulus. For example, freezing behavior, in which the organism crouches in a certain posture and non-respiratory-related movement ceases, occurs as an initial reaction to a threatening event in many organisms. This reaction requires sustained contraction of the skeletal musculature throughout the body to maintain the characteristic posture. Metabolic support for this energy-demanding activity is provided by changes in visceral organ physiology controlled by the autonomic nervous system under the direction of the CNS. Freezing reactions can be thought of as an example of a fixed action pattern (Lorenz and Tinbergen 1938; Tinbergen 1951), or more specifically, a species-typical defensive behavior (Blanchard and Blanchard 1972; Bolles 1970; Bolles and Fanselow 1980).

The stimuli that elicit reaction and reflex behaviors, called releasers by ethologists, are specific to the response they command: freezing, for example, is an innate reaction to predators (Lorenz and Tinbergen 1938; Tinbergen 1951; Thorpe 1963; Hinde 1966; Bolles 1970; Blanchard and Blanchard 1969b, 1972; Rosen 2004). For example, Hirsch and Bolles (1980) found that two strains of deer mice that were removed from their natural environment for two breeding generations still demonstrated responses specific to the particular predator present in that environment when encountered. These prepared and unlearned responses proved useful: subjects that were presented with their ancestral predator tended to fare better than those exposed to the historically unfamiliar one. Even more dramatic, Coss and Biardi (1997) reported that a group of squirrels living in the higher elevations of a mountain and separated from snakes at lower elevations by an earthquake millions of years ago still exhibited species-typical defense responses to snakes. While such responses require no prior personal experience with an innate releaser, they can come under the control of previously neutral stimuli paired with these events through Pavlovian associative learning processes (Blanchard and Blanchard 1969a, b, 1972; Bolles 1972; Fanselow 1980). When these responses are produced by acquired stimuli, they are referred to as conditioned or learned defensive responses. This is somewhat of a misnomer since the response itself is not learned or conditioned. Instead, the response comes to be conditional on the presence of the stimulus. Some therefore prefer the terminology conditional response over conditioned response (Pavlov 1927).

Actions are also complex behaviors, but they are not simply elicited by a stimulus. Rather, they are emitted in the combined presence of certain stimuli and internal factors such as motivation and arousal and performed in order to obtain a goal or reward (Skinner 1938; Estes and Skinner 1941; Estes 1948; Rescorla and LoLordo 1965; Rescorla 1968; Lovibond 1983; Balleine and Dickinson 1998; Holland and Gallagher 2003; Niv et al. 2006). These responses are understood associatively as response-outcome (R-O) learning and include hierarchical control by the surrounding stimuli. A further distinction of actions from reactions is that actions are flexible behaviors rather than fixed responses. Depending on the circumstances, the appropriate action one must take in order to reach a goal can be quite different (Fanselow and Lester 1988). Therefore, a certain degree of preparedness may allow for some responses to be acquired or performed more readily than others (see Cain and LeDoux 2007). Nevertheless, the response can take multiple physical forms. For example, in the description of the aftermath of the Boston bombings above, the general goal would be to escape the danger; thus, the appropriate response would be to run away from the area. However, if the explosion had taken place on a boat and survivors were in the water, the appropriate action would be to swim away. Furthermore, if one had friends or loved ones with them, the goal would be to find them before fleeing, regardless of the specific behavioral demands. Much of the research on action learning, especially in terms of brain mechanisms, has focused on appetitive motivation (i.e., food, sex, or drugs); the neural substrates for instrumental motivation based on aversive outcomes (e.g., shock omission, punishment) have received less attention. A major aim of this chapter is to summarize recent research on aversively motivated actions.

When an action has been performed so frequently that it has become automatic or inflexible, it is referred to as a habit (Thorndike 1898; Skinner 1938; Hull 1943; Killcross and Coutureau 2003). Initially, actions are guided by the outcome they obtain, which can be demonstrated using outcome revaluation manipulations (including devaluation and inflation). If a food reward is made less valuable through pairings with emetics or simply by free access, relevant instrumental actions are attenuated. However, if these manipulations are conducted after extended training, no changes in behavior are seen and subjects still respond despite the outcome value having been reduced. Thus, following extended training, the action becomes an inflexible habit and is not sensitive to outcome devaluation. At this point, the behavior is guided by the stimuli present around the response instead of the outcome and is referred to as a stimulus-response (S-R) habit. This makes habits similar to reactions in that they are elicited by trigger stimuli, but they are distinct in that the response is innate in the case of reactions and learned to varying degrees (e.g., shuttle responses vs. lever press) in the case of habits. The underlying associative nature of the learned action changes over time from the hierarchical stimulus[response-outcome] (S[R-O]) representation to an S-R habit. Behavioral automation can be beneficial as it frees cognitive resources to attend to other demands. For instance, perfecting a skill such as bike-riding can become second nature requiring less attention. Furthermore, turning off the light when one leaves a room can save money. However, habits have a well-known downside too, with consequences much worse than simply leaving a roommate in a dark room. Habits achieve pathological status when they become maladaptive (e.g., compulsive hand-washing or compulsive taking of addictive drugs), and are very resistant to extinction when contingencies change.

3 Historical Context of Studies on Aversive Learning

The background above defines reactions and actions among various classes of behavior. Below, we focus on reactions and actions. However, prior to discussing the current state of research on these behaviors, we will briefly review the history of this field.

In aversive conditioning a neutral conditioned stimulus (CS), such as a tone, is repeatedly paired with an aversive unconditioned stimulus (US), usually an electric shock. Following this episode, the CS alone elicits conditioned freezing responses (CRs; Blanchard and Blanchard 1969a; Bolles and Fanselow 1980). This learning process was originally studied by Pavlov (1927) who referred to it as ‘defensive conditioning.’ And while there were some notorious studies of the basic phenomenon in humans such as John Watson’s famous studies involving little Albert (Watson 1929), early psychologists were more interested in Thorndike’s (1898) ‘law of effect’ and instrumental conditioning. As a result, in the first half of the twentieth century, psychologists interested in aversive motivation were more likely to use aversive instrumental tasks, such as avoidance conditioning (Miller 1948, 1951; Mowrer 1947; Mowrer and Lamoreaux 1946) instead of Pavlovian conditioning.

In avoidance conditioning, animals learn to perform (active avoidance) or withhold (passive avoidance) an action in order to avoid negative or harmful results (typically electric shock). Below, we focus on active avoidance, which we will refer to as avoidance conditioning throughout. Responses that are measured in avoidance studies include shuttling (moving between rooms), wheel turning, or pressing a lever. Avoidance conditioning has traditionally been understood as a two-stage process. First, stimuli become associated with the shock via Pavlovian conditioning, and then, instrumental responses are performed that terminate or escape (or avoid) those threatening stimuli, thus providing negative reinforcement of the response (Brown and Jacobs 1949; Kalish 1954; Levis 1989; McAllister and McAllister 1971; Miller 1948, 1951; Mowrer 1947; Mowrer and Lamoreaux 1946; Overmier and Lawry 1979; Solomon and Wynne 1954). Because it helps cope with a threat, the avoidance response can, therefore, be used to study aversive motivation. Typically, Pavlovian conditioning processes were not directly measured or studied outside of avoidance procedures in the heyday of avoidance.

Starting in the 1950s, avoidance tasks were used to explore the brain mechanisms of aversive behavior, typically involving imprecise tools to understand neural function. Because avoidance conditioning intermixes Pavlovian (reaction) and instrumental (action) learning, studies using these tasks (Weiskrantz 1956; Goddard 1964; Gabriel et al. 1983; Isaacson 1982) were not able to provide a clear picture of the neural mechanisms underlying either the Pavlovian or instrumental learning processes (for review, see LeDoux et al. 2009; Sarter and Markowitsch 1985).

While psychologists struggled to understand aversive motivation with avoidance studies, research on the neural basis of learning and memory turned to Pavlovian conditioning, especially in invertebrates (Alkon 1983; Carew et al. 1983; Dudai et al. 1976; Kandel and Spencer 1968; Walters et al. 1979). Success in identifying neural mechanisms with simple tasks in invertebrates led vertebrate researchers to take a similar approach (Cohen 1974; Thompson 1976). In the 1980s and 1990s, considerable progress was made in identifying the neural systems underlying Pavlovian aversive conditioning (Davis 1986; Kapp et al. 1979; LeDoux et al. 1983, 1984, 1988; for review, see: Davis 1992; Kapp et al. 1992; LeDoux 1992). Because simpler behaviors depend on simpler mechanisms, progress was rapidly made in understanding the brain mechanisms of defensive learning, which we will review below.

4 Neural Basis of Reactions

Pavlov’s defense conditioning procedure came to be called fear conditioning. However, because this term implies that a subjective mental state of fear is being acquired, we have recently argued that this procedure should be called Pavlovian threat conditioning (PTC; LeDoux 2014, 2015). This term is preferable to defense conditioning since the latter term implies that the defensive responses are being learned, whereas PTC implies that the stimulus is acquiring a new meaning.

PTC is a widely used paradigm for studying the brain because of its simplicity, robustness, and repeatability (Fig. 1). In most studies, the CS of choice is an auditory tone and the US is a mild footshock. Learning can occur following the first CS–US pairing, as is evidenced by defensive responding (freezing) during the second presentation of the CS (before the shock US is presented; Blanchard and Blanchard 1969a, 1972; Bolles and Fanselow 1980). Moreover, these associations are long-lasting, as they can be observed hours, days, weeks, and even years following conditioning (Gale et al. 2004).

Fig. 1 Auditory Pavlovian threat conditioning: depiction of a standard Pavlovian threat conditioning (PTC) paradigm using an auditory cue as the CS (a) and typical results obtained (b). Prior to training, rats are habituated to the conditioning chamber in the absence of stimuli. The next day, animals are returned to the conditioning chamber and an unconditioned stimulus (US; a mild shock) is paired with the conditioned stimulus (CS; a tone), usually one to five times. After three hours for short-term memory (STM) or 24–48 h for long-term memory (LTM, depicted), animals are placed in a new context and exposed to multiple presentations of the CS alone. Innate defensive responses, i.e., ‘freezing’ behavior, are then scored as total time spent in this state over the course of the CS presentation. To test for non-associative effects, an ‘unpaired’ control group is often used in which CS and US do not co-occur. This unpaired control group shows very little CS-elicited freezing, indicating that associative learning processes are responsible for this defensive behavior.

In addition to behavioral responses to the CS, physiological changes such as autonomic and endocrine responses also occur (Kapp et al. 1979; LeDoux et al. 1983; Schneiderman et al. 1974), indicating that the CS, like the US, can elicit an integrated organismic response to perceived danger.

Research over the past several decades has clearly identified the amygdala as a key site where CS–US associations are formed as well as a necessary site for the later expression of defensive reactions elicited by the CS (Davis et al. 1997; Fanselow and LeDoux 1999; LeDoux 1996b, 2000; Maren 2001; Maren and Fanselow 1996; Fig. 2). In particular, the neural circuitry of auditory cued PTC has been well established.

The amygdala may be separated into several regions, nuclei and subnuclei based on their emergence in evolution and neuroanatomical or cytoarchitectural features (Fig. 2). According to one scheme, the amygdala can be separated into an evolutionarily primitive division extending from subcortical areas (cortical, medial, and central nuclei) and a newer division associated with the neocortex (lateral, basal, and accessory basal nuclei) (Johnston 1923). The basal nucleus can be separated into basolateral and basomedial nuclei and the central nucleus into lateral, capsular, and medial subdivisions (Pitkänen 2000; Pitkänen et al. 2000). Considering the heterogeneity of these subnuclei, in terms of neuroanatomy, functional circuitry, and molecular expression, it is important to study these various subnuclei in isolation. Indeed, classic brain manipulations, including lesion studies and intracranial drug infusions, did not always account for the functional differences in these subareas (as highlighted in Romanski et al. 1993; Amano et al. 2011). Recent advances in molecular genetics, such as optogenetics, are allowing researchers to identify, with spatial and temporal precision, the distinct functions for subnuclear partitions within the amygdala (Ehrlich et al. 2009; Johansen et al. 2012). Furthermore, advances in transgenic technology are allowing for manipulations of distinct cell subtypes (as defined by their molecular expression profile) within these subnuclei not only in mice, but also in rats and non-human primates (Gafford and Ressler 2015).

Fig. 2 Neural circuits underlying Pavlovian threat conditioning. a–c Anatomy of the amygdala: the rat amygdala may be separated into at least 12 distinct nuclei, as identified using a a Nissl cell body stain, b an acetylcholinesterase stain, and c a silver fiber stain. Abbreviations: Amygdala areas: AB accessory basal; B basal nucleus; Ce central nucleus; CO cortical nucleus; ic intercalated cells; La lateral nucleus; M medial nucleus. Non-amygdala areas: AST amygdalo-striatal transition area; CPu caudate putamen; CTX cortex. d Amygdala circuitry involved in the acquisition, consolidation, and expression of threat memories. The auditory CS (tone) and US (mild shock) converge in the lateral amygdala (LA) where synaptic plasticity and memory formation are thought to occur. The LA communicates with CeA directly and indirectly by way of connections in the basal, accessory basal, and intercalated cell masses (ICM). The CeA (CeM) sends projections to hypothalamic and brainstem areas to elicit the conditioned defensive response (freezing) as well as autonomic and hormonal responses. CeL lateral nucleus of Ce

Decades of research indicate that these amygdala structures work together to coordinate defensive responses to threatening stimuli. Importantly, learning and expression of threat memories require the lateral nucleus of the amygdala (LA), the major input nucleus of the amygdala. Without the LA, and its efferent communication with the basal nucleus (B) and central nucleus (CeA), the CS cannot gain control of innate defensive responses.

Auditory PTC is thought to involve a Hebbian mechanism within the LA, whereby relatively weak auditory inputs on LA neurons are potentiated by concurrent strong depolarization produced by somatosensory inputs processing the US. The potentiation of auditory inputs increases the probability that LA neurons will show conditioned increases in cell firing when an auditory CS is presented. Indeed, this model is supported by significant work both in vitro (McKernan and Shinnick-Gallagher 1997; Rumpel et al. 2005; Clem and Huganir 2010; Tsvetkov et al. 2002; Schroeder and Shinnick-Gallagher 2004, 2005) and in vivo (Rogan and LeDoux 1995; Quirk et al. 1995; Grace and Rosenkranz 2002). With the development of new molecular tools for manipulating neural circuits (i.e., optogenetics and chemogenetics), recent studies have provided more causal evidence for the importance of LA plasticity in PTC. For example, expanding on earlier studies showing that LA neurons can be selectively recruited to form memory by synthetically increasing their plasticity (i.e., infecting these neurons with the plasticity-related transcription factor CREB, cAMP response element-binding protein; Kida et al. 2002; Han et al. 2007), Josselyn and colleagues showed that activation of these specific neurons with DREADD (Designer Receptors Exclusively Activated by Designer Drugs) increases PTC learning (Yiu et al. 2014). Moreover, it was recently shown that replacing footshock with light activation of LA principal cells expressing the excitatory opsin, channelrhodopsin-2 (ChR2), is sufficient for threat conditioning to an auditory tone (Johansen et al. 2010, 2014), and another recent study used optogenetics to ‘engineer’ a synthetic memory by inducing LTP at auditory synapses in LA paired with footshocks (Nabavi et al. 2014). Together, these data highlight the LA as a critical mediator of associative, Hebbian plasticity and memory formation.
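The Hebbian account above can be illustrated with a toy calculation. The following is only a minimal sketch under stated assumptions: a single model LA neuron, a weak CS synapse and a strong fixed US synapse, and a thresholded, soft-bounded Hebbian rule. The function name `train_cs_weight` and all parameter values (initial weights, learning rate, threshold) are hypothetical choices for illustration and are not taken from any of the cited studies.

```python
def train_cs_weight(trials, w_cs=0.1, w_us=1.0, lr=0.2, theta=0.5, w_max=1.0):
    """Return the CS synaptic weight after a sequence of trials.

    trials: iterable of (cs, us) pairs, each 0 (absent) or 1 (present).
    All parameter values are illustrative assumptions, not measured quantities.
    """
    for cs, us in trials:
        # Postsynaptic depolarization: weak CS input plus strong US input.
        post = w_cs * cs + w_us * us
        # Thresholded Hebbian rule: the CS synapse is potentiated only when
        # presynaptic CS activity coincides with strong depolarization.
        if post > theta:
            w_cs += lr * cs * post * (w_max - w_cs)  # soft bound at w_max
    return w_cs


paired = [(1, 1)] * 5             # CS and US co-occur on every trial
unpaired = [(1, 0), (0, 1)] * 5   # CS and US never co-occur

w_paired = train_cs_weight(paired)
w_unpaired = train_cs_weight(unpaired)
print(f"paired: {w_paired:.2f}, unpaired: {w_unpaired:.2f}")
```

In this sketch, only paired training strengthens the CS synapse enough for the CS alone to drive the model neuron above threshold, which parallels the conditioned increase in CS-evoked firing described above and the weak responding of unpaired controls.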

The LA, in addition to receiving and integrating CS and US information for the formation of associative memories, also controls defense reactions via direct and indirect connections to CeA, a major output nucleus of the amygdala (Fig. 2). The indirect pathway is more prominent and involves synaptic connections to the basal nucleus (B) and the accessory basal nucleus (ABA), which make synaptic contacts on neurons in CeA. The CeA then coordinates the behavioral and autonomic responses to threat via long-range projections to the brainstem periaqueductal gray (PAG) and hypothalamus, respectively. Plasticity has also been shown to occur in CeA (Pascoe and Kapp 1985; Wilensky et al. 2006), and recent efforts have begun to dissect the microcircuitry within the CeA and the efferents that control defensive behaviors (see Ehrlich et al. 2009; Johansen et al. 2012 and Lüthi and Lüscher 2014 for a review of recent studies).

With the advent of modern molecular genetic techniques, recent work has corroborated and extended findings previously shown with more traditional techniques. The newfound ability to manipulate specific cell subtypes and circuits has allowed for detailed probing of circuitry to, within, and from the amygdala.

5 Diverse Functions of a Threat Stimulus: The Nature of Action and Reaction Learning in Aversive Motivation

The transition of the field toward the studies of purely Pavlovian conditioning led to considerable progress in understanding this fundamental phenomenon. However, studies of avoidance behavior are also valuable because they can provide additional insight into more complex forms of aversively motivated behavior. Knowledge obtained about the neural mechanisms involved in these processes could be very useful in understanding human disorders characterized by pathological avoidance. This is because in addition to eliciting anticipatory prepared responses, Pavlovian stimuli can do much more. As we have briefly pointed out earlier, Pavlovian stimuli play important roles in reinforcing instrumental responses in avoidance learning tasks. Avoidance itself can be an adaptive way to cope with threats and exert control in dangerous situations, above and beyond the anticipatory responses produced by Pavlovian cues. Pavlovian stimuli can also function as incentives that motivate previously learned instrumental responses. Thus, Pavlovian processes can control the expression of maladaptive avoidance behaviors, and understanding this process on a neural level can help treat such behavioral problems. Below, we will review studies that have explored the neural circuits involved in supporting these different roles of aversive Pavlovian conditioned stimuli in avoidance and related behaviors. But first, we will briefly consider some conceptual issues regarding the psychological underpinnings of avoidance behavior.

A general feature of avoidance learning is negative reinforcement (Rescorla 1968) where some expected aversive event is removed contingent upon the performance of some target action (e.g., lever press or shuttling). Behaviors performed that remove these aversive events are subsequently more likely to be performed. While this would appear to reflect instrumental learning processes similar to those that guide actions when the reinforcer is a primary and appetitive one, there is some debate as to the exact nature of the underlying psychological substrates of this form of learning. An argument can be made that Pavlovian learning processes can explain avoidance behaviors without the involvement of goal-directed processes. This view is supported by observations that behaviors that closely approximate prepared defensive reactions (e.g., fleeing, rearing) are more readily acquired as avoidance responses than artificial responses (e.g., lever press; Bolles 1970). Additionally, some of these prepared behaviors (e.g., freezing) cannot be eliminated by punishment with shock, and this has been taken as evidence that they are not sensitive to control by instrumental contingencies and are, therefore, not truly instrumental behaviors (Fanselow and Lester 1988; Fanselow 1997). However, this is a complicated discussion that we will not focus on here. There is evidence on both sides of this issue, including findings suggesting that there is some basis for control of these behaviors by instrumental contingencies (Matthews et al. 1974; Killcross et al. 1997). For example, in addition to any modulation by Pavlovian associations, CS-escape and US-avoidance contingencies contribute independently to avoidance learning. Studies by Kamin (1956) as well as Herrnstein and Hineline (1966) show that learning is impaired if either contingency is discontinued. Furthermore, studies in appetitive learning suggest that, similarly, some behaviors are more easily used as a means to food reinforcement (Shettleworth 1978). More germane to our current discussion, the neural control of these behaviors differs from basic Pavlovian learning processes, and differences also exist in neural control between different action-based tasks (i.e., different forms of avoidance or escape behavior). These differences will be considered below. Regardless of the exact nature of the representation developed through the learning of aversive actions, and specifically whether or not it is a true instance of instrumental learning, we believe unique processes underlie these actions and that further pursuit of their neural control is important. Through avoidance and related behaviors, organisms are able to reduce danger under a variety of circumstances, which attenuates defensive reactions and, therefore, has broad implications for understanding aversive motivation.

6 The Neural Basis of Aversively Motivated Actions

While a variety of preparations can be used to study avoidance and aversively motivated action learning, we focus on four main procedures here: signaled avoidance, unsignaled avoidance, escape from threat, and Pavlovian-to-instrumental transfer (PIT). We will discuss the underlying circuitry related to these tasks in the sections below.

6.1 Neural Control of Signaled and Unsignaled Avoidance Behavior

In a signaled avoidance procedure (SigA), a CS (e.g., tone) is first established as a threat via standard delay pairings of the CS with an aversive US (e.g., footshock). During later trials, presentation of the CS or the US can be terminated by performance of a target response. In many of our studies, subjects are trained to perform a shuttle response in a two-way chamber in order to control stimulus deliveries (e.g., see Fig. 3); we chose this seminatural response because of its similarity to prepared fleeing behaviors among those found to have been used in the available literature. If the subject performs the shuttle response during the CS, the CS is removed, and additionally, the expected US is omitted. While both events (CS-offset and US-omission) possess the power to reinforce shuttling (see Kamin 1956), the contribution of each is difficult to disentangle in a SigA procedure because trials without responses result in further US deliveries.
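The trial contingencies of a SigA procedure can be made concrete with a small sketch. This is a simplified illustration, not the protocol of any cited study: it assumes that the US begins when the CS period elapses without a response, that a shuttle during the US terminates it, and that the CS and US durations are arbitrary placeholder values. The names `TrialOutcome` and `run_siga_trial` are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TrialOutcome:
    kind: str        # "avoidance", "escape", or "failure"
    cs_on_s: float   # seconds the CS was presented
    us_on_s: float   # seconds the US was presented


def run_siga_trial(shuttle_latency_s: Optional[float],
                   cs_duration_s: float = 15.0,
                   us_max_s: float = 5.0) -> TrialOutcome:
    """Classify one signaled avoidance trial from the shuttle latency.

    shuttle_latency_s is measured from CS onset; None means no shuttle.
    Durations are illustrative assumptions only.
    """
    if shuttle_latency_s is not None and shuttle_latency_s < cs_duration_s:
        # Shuttle during the CS: CS is terminated and the US is omitted.
        return TrialOutcome("avoidance", shuttle_latency_s, 0.0)
    if shuttle_latency_s is not None and shuttle_latency_s < cs_duration_s + us_max_s:
        # Shuttle during the US: the US is terminated early.
        return TrialOutcome("escape", cs_duration_s, shuttle_latency_s - cs_duration_s)
    # No effective response: the full US is delivered.
    return TrialOutcome("failure", cs_duration_s, us_max_s)


for latency in (4.0, 17.0, None):
    print(latency, run_siga_trial(latency))
```

The sketch also makes the interpretive problem visible: on an avoidance trial, CS-offset and US-omission always occur together, so the two candidate reinforcers are confounded within the procedure.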

Despite the limitations, early studies (see Gabriel et al. 2003) found that amygdala was important for avoidance, but what exact function it served could not be determined from these efforts. Using small lesions of amygdala subnuclei placed following baseline SigA training in rats, Choi et al. (2010) found that damage restricted to lateral (LA) or basal (BA) amygdala impaired performance, but that central (CeA) amygdala damage had no impact on SigA learning. Based on decades of studies in pure Pavlovian conditioning, the role of LA in SigA can be inferred; the Pavlovian learning presumed to be required for reinforcing and motivating the action via the CS is stored in LA. Without this associative knowledge, SigA learning cannot proceed. Given this role for LA, these findings suggest that BA is important for using the LA-based CS–US association to reinforce responding downstream. This pathway out of the amygdala as it relates to SigA will be further considered below.

It should be noted that a similar circuit is implicated when avoidance responses are acquired in the absence of any auditory CS. In unsignaled Sidman active avoidance (USA; see Sidman 1953), subjects are similarly placed in the two-way chamber, but are never exposed to a tone CS. Instead, in this procedure, footshock USs are presented at a fixed interval and any shuttle response that occurs postpones the next US, thus negatively reinforcing the response. Lazaro-Munoz et al. (2010) found that LA and BA but not CeA lesions impaired acquisition of USA behavior compared to sham controls. While the tone CS is absent from USA training procedures, the role of the context in promoting the response becomes more important, as do feedback cues presented when the response is performed. Because the context cannot be removed following a response in USA as a CS can be in SigA, the presence of discrete feedback cues has been found to help reinforce and shape USA. The feedback cues are negatively correlated with shock and may become safety signals that reinforce avoidance responding by counteracting the threat (Dinsmoor 2001; Rescorla 1968). Whether signaled by a discrete CS (as in SigA) or a context (as in USA), the associative component of avoidance learning appears to depend on LA. After decades of Pavlovian conditioning studies this may not appear surprising, but with that starting point established, analysis of avoidance circuitry can now proceed more quickly than it could before this was common knowledge. In both SigA and USA, BA appears critical for producing actions via LA-based associative knowledge regarding threats.
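The scheduling rule of an unsignaled (Sidman) procedure can be sketched as a simple event loop. This is a minimal illustration under assumptions: shocks recur at a fixed shock-shock interval when no response occurs, and each shuttle resets the next shock to a fixed response-shock interval after the response. The function name `sidman_shock_times` and the interval values are hypothetical and are not taken from the cited studies.

```python
def sidman_shock_times(response_times, session_s,
                       ss_interval_s=5.0, rs_interval_s=30.0):
    """Return the times (s) at which shocks are delivered in one session.

    response_times: times of shuttle responses, in seconds from session start.
    ss_interval_s: shock-shock interval when no response occurs (assumed value).
    rs_interval_s: postponement produced by each response (assumed value).
    """
    responses = sorted(response_times)
    shocks = []
    i = 0
    next_shock = ss_interval_s
    while True:
        if i < len(responses) and responses[i] < next_shock and responses[i] < session_s:
            # A response before the scheduled shock postpones that shock.
            next_shock = responses[i] + rs_interval_s
            i += 1
        elif next_shock < session_s:
            # No postponing response in time: the shock is delivered.
            shocks.append(next_shock)
            next_shock += ss_interval_s
        else:
            break
    return shocks


print(sidman_shock_times([], 20.0))                       # no responding
print(sidman_shock_times([4.0], 40.0))                    # one early response
print(sidman_shock_times([4.0, 14.0, 24.0, 34.0], 40.0))  # steady responding
```

Under these assumptions, steady responding at intervals shorter than the response-shock interval prevents all shocks, which is the contingency that negatively reinforces shuttling in the absence of any discrete warning signal.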

Before actions can be effectively promoted, incompatible reactions normally evoked by the CS must be sufficiently opposed to allow for the expression of action. There is evidence that this regulation is accomplished in a parallel manner via infralimbic ventromedial prefrontal cortex (PFCIL) inhibition of CeA during signaled avoidance (Moscarello and LeDoux 2013; but see Bravo-Rivera et al. 2015). Preventing PFCIL from functioning normally (permanently or temporarily) impairs avoidance behavior. Subjects with lesions or muscimol inactivation of PFCIL show reduced avoidance responding and increased freezing relative to control subjects (Moscarello and LeDoux 2013). Further evidence of this antagonistic process comes from subjects that fail to acquire SigA and USA, so-called poor performers. These subjects exhibit more freezing and less avoidance relative to animals that acquire normally. If CeA is lesioned in these poorly performing subjects, normal responding emerges compared to sham-lesioned poor performers (Choi et al. 2010; Lazaro-Munoz et al. 2010). This suggests that excessive CeA activity interferes with acquisition and expression of avoidance responses to cause poor performance in both tasks. Thus, in normal subjects, PFCIL comes to oppose CeA-mediated defensive reactions, which allows for expression of SigA. Whether this same regulatory process applies to USA has not been systematically investigated, but the findings regarding basic circuitry and response competition via CeA-induced freezing suggest convergence. Furthermore, Martinez et al. (2013) found that good USA performers showed more c-Fos in PFCIL compared to poor performers (however, see Bravo-Rivera et al. 2014, 2015 for results implicating PFCPL).

While there has been a considerable amount of attention paid to the striatum in the context of appetitive instrumental conditioning and motivation (Cardinal et al. 2002; Setlow et al. 2002; Balleine and Killcross 2006; Shiflett and Balleine 2010), not much is known about how these structures contribute to aversive motivational processes. Cheer and colleagues (Oleson et al. 2012) have recently shown using fast-scan cyclic voltammetry that the amount of dopamine released during sub-second release events is increased in nucleus accumbens (NAcc) core following an avoidance response in a footshock SigA procedure. This result suggests that dopamine release in NAcc may play a critical role in reinforcement during SigA.

In agreement with these findings, Ramirez et al. (2015) found that expression of c-Fos in NAcc (but in the shell rather than the core) was significantly higher in subjects that were trained on SigA compared to yoked and box control subjects (see also Bravo-Rivera et al. 2014, 2015). Furthermore, this study also showed that muscimol inactivation of NAcc shell but not core impaired SigA performance. More powerful still, temporary disconnection of BA and NAcc shell with muscimol impaired SigA. Inactivating BA and NAcc shell unilaterally, but on contralateral (opposite) sides of the brain, compromises communication between these structures but not their overall function. Because each nucleus remains active in one hemisphere, it can mostly compensate for its inactivated counterpart. This manipulation impaired SigA compared to control subjects that received inactivation of these structures on the same side of the brain, thus leaving communication intact in the other hemisphere (Ramirez et al. 2015). Together, the Oleson et al. (2012) and Ramirez et al. (2015) findings suggest a role for BA and NAcc in mediating acquisition and expression of SigA. There are many procedural and design differences between these studies; therefore, it is difficult to make easy comparisons and address discrepant findings regarding subregions of the NAcc (i.e., core vs. shell). For example, lever-press avoidance requires much more training than shuttle-based avoidance, and therefore, this could be a potential reason for the functional neuroanatomical difference. The NAcc shell may be required early on in training and the core later, when the behavior has transitioned to habit. Nevertheless, these findings provide strong evidence that an LA-BA-NAcc circuit is important for SigA behavior and that prefrontal regulation of CeA facilitates this process by reducing reactive freezing CRs. Whether this is also true of USA is currently not known.
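The logic of the disconnection design described above can be made explicit with a short sketch. It assumes, as disconnection designs generally do, that the relevant BA-to-NAcc shell communication runs within a hemisphere; the function and structure names are hypothetical and chosen only for this illustration.

```python
HEMISPHERES = ("left", "right")


def intact_hemispheres(inactivated):
    """Return hemispheres in which every structure of the pathway is active.

    inactivated: dict mapping a structure name to the single hemisphere
    ('left' or 'right') in which that structure was inactivated.
    Assumption: communication between structures is within-hemisphere.
    """
    return [h for h in HEMISPHERES
            if all(side != h for side in inactivated.values())]


# Contralateral (disconnection) case: each structure still functions in one
# hemisphere, but no hemisphere contains both an active BA and NAcc shell.
contralateral = {"BA": "left", "NAcc_shell": "right"}
# Ipsilateral control: same number of inactivated sites, but the pathway
# remains intact in the untouched hemisphere.
ipsilateral = {"BA": "left", "NAcc_shell": "left"}

print(intact_hemispheres(contralateral))
print(intact_hemispheres(ipsilateral))
```

Under these assumptions the two conditions are matched for the amount of tissue inactivated and differ only in whether an intact BA-NAcc shell pathway survives, which is why an impairment in the contralateral group points to communication between the structures.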

A note on habitual avoidance behavior

As briefly discussed above, actions that are repeatedly performed or overtrained are referred to as habits. Studies in appetitive motivation using reinforcer revaluation have found that early in training, instrumental actions are guided by the associated outcome and changes in the value of this outcome directly influence responding (Adams 1982; Balleine and Dickinson 1991, 1998). After extended training, performance of the response is no longer sensitive to the outcome value and persists despite manipulations that render the outcome devalued (e.g., taste aversion or selective satiety). The transition from an S[R-O] ‘goal-directed’ action to an S-R ‘habit’ has been found to depend on how the action is coordinated by the ventromedial prefrontal cortex. Killcross and Coutureau (2003) found that while sham control subjects showed reductions in lever-press behavior following pre-feeding only when given limited training, subjects with prelimbic lesions continued to demonstrate this devaluation effect regardless of training amount. This suggests that the transition to outcome independence and habitual action involves prelimbic function coming online to guide the response in a way that is unbound from the outcome representation. While the development of habits has not been thoroughly studied in the context of aversive motivation, there is some evidence that a similar transition might apply. Poremba and Gabriel (1999) have shown that the passage of time renders avoidance behavior amygdala-independent. In a discriminative footshock avoidance procedure in rabbits, muscimol inactivation of the amygdala impaired wheel-turning responses very early in training but not after extended training or following a seven-day rest period. In agreement with this result, using shuttling in rats, Lazaro-Munoz et al. (2010) found that amygdala lesions placed after extended USA training had no effect, while these lesions in another group impaired initial acquisition. This is in contrast to overtrained Pavlovian learning, which has been shown not to undergo this transition and to remain amygdala-dependent (Zimmerman et al. 2007). Avoidance behavior can be an effective means of coping with threats; however, habitual avoidance can be maladaptive and disruptive. Whether the transition to amygdala-independent avoidance discussed above reflects outcome independence as well is not known.

6.2 Neural Control of Escape from Threat

Acquisition of SigA responses requires BA, though it is not clear whether BA is sensitive to CS-offset, US-omission, or both. From a behavioral perspective, SigA does not permit isolation of these processes. As noted above, there have been studies that demonstrate the importance of the CS-escape and US-avoidance contingencies to learning (Kamin 1956) and also those that emphasized the importance of feedback in avoidance learning (Sidman 1953; Rescorla 1968). However, because Pavlovian and instrumental learning occur together in SigA, it is unclear which neural processes might pertain to which psychological phenomenon. To study this issue at a psychological level, earlier work used tasks in which Pavlovian and instrumental conditioning occur in separate phases, as opposed to the intermixed nature of SigA training described above. These tasks were referred to as ‘escape from fear’ tasks. We will refer to them as ‘escape from threat’ or EFT (see LeDoux 2014). EFT demonstrates that once a CS has been established as a threat, the removal of this threat alone can support acquisition of an instrumental response, because no shocks are presented during instrumental training (see Fig. 4). A trial never ends with a shock US during EFT training, even when no response occurs. Thus, the Pavlovian contingency is under extinction during the instrumental session. That responding is acquired under these conditions at all suggests that threat removal is a powerful reinforcement process on its own.
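The difference between the SigA and EFT trial contingencies described above can be summarized in a few lines. This is a minimal sketch of the contingencies as stated in the text; the class and function names are hypothetical, and details such as CS duration are deliberately left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrialOutcome:
    cs_terminated_by_response: bool
    shock_delivered: bool


def trial_outcome(task, responded_during_cs):
    """Outcome of one instrumental trial under SigA or EFT contingencies."""
    if task not in ("SigA", "EFT"):
        raise ValueError("task must be 'SigA' or 'EFT'")
    if responded_during_cs:
        # In both tasks the response removes the CS; in SigA it also
        # cancels the scheduled US.
        return TrialOutcome(cs_terminated_by_response=True,
                            shock_delivered=False)
    # Without a response, only SigA trials end with a shock; EFT trials
    # never do, so the Pavlovian contingency is under extinction.
    return TrialOutcome(cs_terminated_by_response=False,
                        shock_delivered=(task == "SigA"))


for task in ("SigA", "EFT"):
    for responded in (True, False):
        print(task, responded, trial_outcome(task, responded))
```

Laid out this way, a response in SigA changes two things at once (CS offset and US omission), whereas in EFT it changes only CS offset, which is what allows threat removal to be studied in isolation.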

EFT has not been as thoroughly studied on a neural level as have SigA or USA due to complications with the task that have made it historically controversial. For example, the reliability and parametric uniformity of EFT learning have been sources of criticism for EFT as has a lack of testing procedures to demonstrate response retention (see Cain and LeDoux 2007). What research has been done suggests that the same amygdala circuitry is involved as in SigA. For example, using a one-way shuttle task, Amorapanth et al. (2000) found that LA and BA lesions impaired EFT, while CeA lesions did not. These findings suggest that a similar circuit may control both SigA and EFT forms of avoidance learning.

6.3 Incentive Motivation: Neural Circuits of Aversive Pavlovian-to-Instrumental Transfer

In addition to conditioned reinforcement, another function served by the CS in avoidance and related phenomena is conditioned motivation, which can be studied in isolation with Pavlovian-to-instrumental transfer (PIT). In PIT, an instrumental response is performed at some baseline rate, and presentation of the CS increases the rate at which this response is performed (Bolles and Popp 1964; Rescorla and LoLordo 1965; Rescorla 1968; Weisman and Litner 1969; Overmier and Payne 1971; Overmier and Brackbill 1977; Patterson and Overmier 1981; Campese et al. 2013; Nadler et al. 2011). The neural basis of PIT has been extensively studied in cases where the US is appetitive (e.g., food or drugs; for a review see Holmes et al. 2010; Laurent et al. 2015). While some early studies used similar procedures in aversive motivation, only recently has there been progress on identifying the neural foundations of aversive PIT. Using early studies as a guide, we have developed a simple aversive PIT task for rats using USA shuttling in a two-way chamber as the instrumental response. As described earlier, subjects learn to avoid footshocks in the USA procedure independently from any discrete Pavlovian stimuli with an excitatory relationship to shock (i.e., in the absence of the tone CS). When a previously trained CS is presented during USA, subjects show little freezing to the CS and, instead, display enhanced USA behavior. An example of this PIT effect is shown in Fig. 5 along with figures depicting the training procedure (see Campese et al. 2013).
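The PIT effect described above is a change in response rate during the CS relative to baseline. One simple way to quantify it is sketched below; the difference score, the equal-length pre-CS window, and the function names are assumptions for illustration and are not taken from the cited studies.

```python
def shuttle_rate(response_times, start, end):
    """Responses per minute in the window [start, end), times in seconds."""
    count = sum(1 for t in response_times if start <= t < end)
    return count / ((end - start) / 60.0)


def pit_score(response_times, cs_onset, cs_duration):
    """CS-period rate minus pre-CS rate (hypothetical difference score).

    The pre-CS baseline window is assumed to have the same length as the CS.
    A positive score means the CS elevated responding above baseline.
    """
    pre_rate = shuttle_rate(response_times, cs_onset - cs_duration, cs_onset)
    cs_rate = shuttle_rate(response_times, cs_onset, cs_onset + cs_duration)
    return cs_rate - pre_rate


# Made-up response times (s): one shuttle before the CS, three during it.
example_responses = [10, 70, 95, 110]
print(pit_score(example_responses, cs_onset=60, cs_duration=60))
```

A within-subject comparison of this kind controls for individual differences in baseline USA rate, which can vary considerably between subjects.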

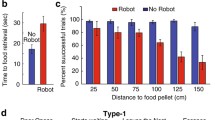

Neural circuits underlying signaled active avoidance (SigA): Left panel shows data collected using a two-way shuttle response. Following an inescapable CS–US pairing, shuttle responses can terminate presentations of the CS and prevent the US. On trials where no response occurs during the CS, shuttling can escape the subsequent shock. a Lateral, basal, and central amygdala lesions impair SigA, from Choi et al. (2010). b Disconnection of basal amygdala and nucleus accumbens shell with muscimol impairs SigA, from Ramirez et al. (2015). Muscimol-induced inactivation of the infralimbic region of the prefrontal cortex increases CS-evoked freezing (c) and reduces avoidance responses (d) from Moscarello and LeDoux (2013). Right panel shows results using the step platform procedure (Bravo-Rivera et al. 2014, 2015). US delivery can be avoided by stepping onto the platform into the safety zone. Prelimbic (a) and not infralimbic (b) inactivation impairs SigA, as does inactivation of the ventral striatum (c) and basolateral complex of the amygdala (d)

We have begun to use this procedure to examine the neural circuits important for aversive PIT and have found evidence that incentive motivation is processed by a pathway different from that seen for SigA, USA, and EFT acquisition. Using lesions, Campese et al. (2014) found that LA and CeA but not BA are required for aversive PIT. LA and CeA damage eliminated the facilitative effect of the CS, but BA lesions had no effect. Further studies (McCue et al. 2014) found that medial amygdala (MeA) is also required for PIT. One possibility suggested by these findings is that LA-CeA-MeA circuitry (see Fig. 6) is important for aversive PIT, departing from the LA-BA-NAcc circuit implicated in CS-based avoidance tasks discussed above. An important procedural difference between PIT and CS-based avoidance/escape (i.e., SigA) tasks is that avoidance/escape responses are learned in the repeated presence of the CS. This likely results in the CS becoming part of the associative structure during learning, where it comes to act as a discriminative or occasion-setting stimulus that signals when the instrumental (i.e., avoidance) contingency is in effect. This process engages extinction-like regulation of CeA defensive behavior (e.g., freezing) via the prefrontal cortex (Moscarello and LeDoux 2013; Bravo-Rivera et al. 2014, 2015), which allows for expression of active responding along the ‘informational’ LA-BA-NAcc pathway. This is in contrast to PIT, where the CS is not present during USA training and cannot come to influence the instrumental contingency. The CS is previously trained and is not presented again until transfer tests. Thus, the increased USA behavior observed in PIT tests can be considered a reaction because the CS has no control over the instrumental contingency. Therefore, during PIT, the CS modulates behavior through ‘emotional/affective’ routes (mediated by CeA/MeA) rather than ‘informational’ routes (mediated by BA). While studies in appetitive motivation have implicated NAcc (Shiflett and Balleine 2010; Laurent et al. 2015) in PIT, the contribution of this region to aversive PIT has not yet been explored (Figs. 5 and 6).

Neural circuits underlying escape from threat (EFT): experimental procedure developed by Cain and LeDoux (2007) (upper right corner). Following standard Pavlovian conditioning, rearing can terminate CS presentations, and retention of this response can be tested the following day. Over the course of EFT training, rearing frequency increases in subjects that received the R-O (i.e., CS-offset) contingency only; yoked and unpaired control groups did not show this pattern. During EFT training, freezing decreases for both groups that received CS–US pairings during Pavlovian conditioning (c). More rearing is seen during the test session in EFT-trained subjects (b), and freezing spontaneously recovers in the yoked control group only (d). Using a one-way shuttle response, Amorapanth et al. (2000) found that EFT was impaired by lateral and basal amygdala lesions, but not central amygdala lesions

Neural circuits underlying aversive Pavlovian-to-instrumental transfer (PIT): procedure used to study aversive PIT from Campese et al. (2013) (top). Following Pavlovian conditioning subjects undergo unsignaled Sidman active avoidance (USA) in the two-way shuttle chambers. During transfer testing, the CS is presented in this context and the effect on USA rate is measured. Subjects that received CS–US pairings during Pavlovian conditioning show an elevation of shuttle rate compared to the pre-CS period, while unpaired and naïve controls do not (a from Campese et al. 2013). Lateral, central but not basal amygdala lesions impair PIT (b from Campese et al. 2014). Medial amygdala lesions also impair PIT (c) but have no effect on CS-elicited freezing (d from McCue et al. 2014)

Schematic representation of the circuits underlying aversively motivated instrumental reactions and actions: CS–US processing occurs in LA and ‘informational’ actions (SigA, EFT, USA) require the LA–BA pathway, while ‘emotional’ reactions (CS-elicited freezing, facilitation or suppression) engage the LA–CeA circuitry

7 Summary

The systems described above depict a flexible and adaptive network capable of producing appropriate responses under a variety of circumstances motivated by threat or general aversive processes. These responses can be innate and reflexive, produced by evolutionarily selected stimuli or those given the power to convey threat through associative learning. The responses could alternatively be learned, artificial to some extent, but still prepared in other ways. It is clear that these dimensions of an aversively motivated response depend on unique circuitry more complex than that which accomplishes simple Pavlovian learning processes. There is evidence that microcircuits within the amygdala may contribute to conditioned motivation and conditioned reinforcement in different ways. These forms of aversively motivated learning have great relevance to clinical issues concerning coping strategies in treating anxiety, phobic disorders, and post-traumatic stress. Understanding the behavioral, neurobiological, and pharmacological basis of these phenomena can lead to profound progress in applications to these problems. While the fragility of extinction treatments is well known (e.g., spontaneous recovery and renewal), avoidance learning produces reductions in defensive responding (i.e., active coping responses) that survive the passage of time and shifts in physical context (Bouton 2004; Cain and LeDoux 2007; LeDoux 2015). Additionally, because maladaptive and inappropriate habitual avoidance behavior underlies emotional disorders in humans (e.g., agoraphobia, obsessive compulsive disorder), the importance of developing a rich understanding of these processes is twofold. Because of the great deal of knowledge obtained on PTC processes over decades of research, we are poised to learn more about these complex and elaborate circuits than was permitted in the past.

References

Adams C (1982) Variations in the sensitivity of instrumental responding to reinforcer devaluation. Q J Exp Psychol 34B:77–98

Alkon DL (1983) Learning in a marine snail. Sci Am 249:70–85

Amano T, Duvarci S, Popa D, Pare D (2011) The fear circuit revisited: contributions of the basal amygdala nuclei to conditioned fear. J Neurosci 31:15481–15489

Amorapanth P, LeDoux JE, Nader K (2000) Different lateral amygdala outputs mediate reactions and actions elicited by a fear-arousing stimulus. Nat Neurosci 3:74–79

Balleine BW, Dickinson A (1991) Instrumental performance following reinforcer devaluation depends upon incentive learning. Q J Exp Psychol 43B:279–296

Balleine BW, Dickinson A (1998) Goal-directed instrumental action: contingency and incentive learning and their cortical substrates. Neuropharmacology 37:407–419

Balleine BW, Killcross S (2006) Parallel incentive processing: an integrated view of amygdala function. Trends Neurosci 29:272–279

Blanchard RJ, Blanchard DC (1969a) Crouching as an index of fear. J Comp Physiol Psychol 67:370–375

Blanchard RJ, Blanchard DC (1969b) Passive and active reactions to fear-eliciting stimuli. J Comp Physiol Psychol 68:129–135

Blanchard RJ, Blanchard DC (1972) Effects of hippocampal lesions on the rat’s reaction to a cat. J Comp Physiol Psychol 78:77–82

Bolles RC (1970) Species-specific defense reactions and avoidance learning. Psychol Rev 77:32–48

Bolles RC (1972) Reinforcement, expectancy, and learning. Psychol Rev 79(5):394–409

Bolles RC, Popp RJ (1964) Parameters affecting the acquisition of Sidman avoidance. J Exp Anal Behav 7(4):315

Bolles RC, Fanselow MS (1980) A perceptual-defensive-recuperative model of fear and pain. Behav Brain Sci 3:291–323

Bouton ME (2004) Context and behavioral processes in extinction. Learn Mem 11:485–494

Bravo-Rivera C, Roman-Ortiz C, Brignoni-Perez E, Sotres-Bayon F, Quirk GJ (2014) Neural structures mediating expression and extinction of platform-mediated avoidance. J Neurosci 34:9736–9742. PMC4099548

Bravo-Rivera C, Roman-Ortiz C, Montesinos-Cartagena M, Quirk GJ (2015) Persistent active avoidance correlates with activity in prelimbic cortex and ventral striatum. Front Behav Neurosci 9:184. http://doi.org/10.3389/fnbeh.2015.00184

Brown JS, Jacobs A (1949) The role of fear in the motivation and acquisition of responses. J Exp Psychol 39:747–759

Cain CK, LeDoux JE (2007) Escape from fear: a detailed behavioral analysis of two atypical responses reinforced by CS termination. J Exp Psychol Anim Behav Process 33:451–463

Campese V, McCue M, Lazaro-Munoz G, LeDoux JE, Cain CK (2013) Development of an aversive Pavlovian-to-instrumental transfer task in rat. Front Behav Neurosci 7:176. PMC3840425

Campese VD, Kim J, Lazaro-Munoz G, Pena L, LeDoux JE, Cain CK (2014) Lesions of lateral or central amygdala abolish aversive Pavlovian-to-instrumental transfer in rats. Front Behav Neurosci 8:161. PMC4019882

Cardinal RN, Parkinson JA, Hall J, Everitt BJ (2002) Emotion and motivation: the role of the amygdala, ventral striatum, and prefrontal cortex. Neurosci Biobehav Rev 26:321–352

Carew TJ, Hawkins RD, Kandel ER (1983) Differential classical conditioning of a defensive withdrawal reflex in Aplysia californica. Science 219:397–400

Choi JS, Cain CK, LeDoux JE (2010) The role of amygdala nuclei in the expression of auditory signaled two-way active avoidance in rats. Learn Mem 17:139–147

Clem RL, Huganir RL (2010) Calcium-permeable AMPA receptor dynamics mediate fear memory erasure. Science 330:1108–1112

Cohen DH (1974) The neural pathways and informational flow mediating a conditioned autonomic response. In: Di Cara, LV (ed) Limbic and autonomic nervous system research. Plenum Press, New York, pp 223–275

Coss RG, Biardi JE (1997) Individual variation in the antisnake behavior of California ground squirrels (Spermophilus beecheyi). J Mammal 78(2):294–310

Davis M (1986) Pharmacological and anatomical analysis of fear conditioning using the fear-potentiated startle paradigm. Behav Neurosci 100:814–824

Davis M (1992) The role of the amygdala in conditioned fear. In: Aggleton JP (ed) The amygdala: neurobiological aspects of emotion, memory, and mental dysfunction. Wiley-Liss, Inc, NY, pp 255–306

Davis M, Walker DL, Lee Y (1997) Roles of the amygdala and bed nucleus of the stria terminalis in fear and anxiety measured with the acoustic startle reflex. Possible relevance to PTSD. Ann N Y Acad Sci 821:305–331

Dinsmoor JA (2001) Stimuli inevitably generated by behavior that avoids electric shock are inherently reinforcing. J Exp Anal Behav 75(3):311–333

Dudai Y, Jan YN, Byers D, Quinn WG, Benzer S (1976) Dunce, a mutant of Drosophila deficient in learning. Proc Natl Acad Sci USA 73:1684–1688. PMC430364

Ehrlich I, Humeau Y, Grenier F, Ciocchi S, Herry C, Luthi A (2009) Amygdala inhibitory circuits and the control of fear memory. Neuron 62:757–771

Estes WK, Skinner BF (1941) Some quantitative properties of anxiety. J Exp Psychol 29:390–400

Estes WK (1948) Discriminative conditioning; effects of a Pavlovian conditioned stimulus upon a subsequently established operant response. J Exp Psychol 38:173–177

Fanselow MS, LeDoux JE (1999) Why we think plasticity underlying Pavlovian fear conditioning occurs in the basolateral amygdala. Neuron 23:229–232

Fanselow MS, Lester LS (1988) A functional behavioristic approach to aversively motivated behavior: predatory imminence as a determinant of the topography of defensive behavior. In: Bolles RC, Beecher MD (eds) Evolution and learning. Erlbaum, Hillsdale, N.J., pp 185–211

Fanselow MS (1980) Conditioned and unconditional components of post-shock freezing. Pavlovian J Biol Sci 15:177–182

Fanselow MS (1997) Species-specific defense reactions: retrospect and prospect. In: Bouton ME, Fanselow MS (eds) Learning, motivation, and cognition. American Psychological Association, Washington, D. C., pp 321–341

Gabriel M, Burhans L, Kashef A (2003) Consideration of a unified model of amygdalar associative functions. Ann N Y Acad Sci 985:206–217

Gabriel M, Lambert RW, Foster K, Orona E, Sparenborg S, Maiorca RR (1983) Anterior thalamic lesions and neuronal activity in the cingulate and retrosplenial cortices during discriminative avoidance behavior in rabbits. Behav Neurosci 97:675–696

Gale GD, Anagnostaras SG, Godsil BP, Mitchell S, Nozawa T, Sage JR, Wiltgen B, Fanselow MS (2004) Role of the basolateral amygdala in the storage of fear memories across the adult lifetime of rats. J Neurosci 24:3810–3815

Goddard G (1964) Functions of the amygdala. Psychol Bull 62:89–109

Gafford GM, Ressler KJ (2015) Mouse models of fear-related disorders: cell-type-specific manipulations in amygdala. Neuroscience. doi:10.1016/j.neuroscience.2015.06.019

Grace AA, Rosenkranz JA (2002) Regulation of conditioned responses of basolateral amygdala neurons. Physiol Behav 77:489–493

Han JH, Kushner SA, Yiu AP, Cole CJ, Matynia A, Brown RA, Neve RL, Guzowski JF, Silva AJ, Josselyn SA (2007) Neuronal competition and selection during memory formation. Science 316:457–460

Hinde RA (1966) Animal behaviour. McGraw-Hill, New York

Herrnstein RJ, Hineline PN (1966) Negative reinforcement as shock-frequency reduction. J Exp Anal Behav 9(4):421–30

Hirsch SM, Bolles RC (1980) Zeitschrift für Tierpsychologie 54(1):71–84

Holland PC, Gallagher M (2003) Double dissociation of the effects of lesions of basolateral and central amygdala on conditioned stimulus-potentiated feeding and Pavlovian-instrumental transfer. Eur J Neurosci 17:1680–1694

Holmes NM, Marchand AR, Coutureau E (2010) Pavlovian to instrumental transfer: a neurobehavioural perspective. Neurosci Biobehav Rev 34:1277–1295

Hull CL (1943) Principles of behavior. Appleton-Century-Crofts, New York

Isaacson RL (1982) The limbic system. Plenum Press, New York

Johansen JP, Diaz-Mataix L, Hamanaka H, Ozawa T, Ycu E, Koivumaa J, Kumar A, Hou M, Deisseroth K, Boyden ES, LeDoux JE (2014) Hebbian and neuromodulatory mechanisms interact to trigger associative memory formation. Proc Natl Acad Sci USA 111:E5584–E5592. PMC4280619

Johansen JP, Hamanaka H, Monfils MH, Behnia R, Deisseroth K, Blair HT, LeDoux JE (2010) Optical activation of lateral amygdala pyramidal cells instructs associative fear learning. Proc Natl Acad Sci USA 107(28):12692–12697. http://doi.org/10.1073/pnas.1002418107

Johansen JP, Wolff SB, Luthi A, LeDoux JE (2012) Controlling the elements: an optogenetic approach to understanding the neural circuits of fear. Biol Psychiatry 71:1053–1060

Johnston JB (1923) Further contribution to the study of the evolution of the forebrain. J Comp Neurol 35:337–481

Kalish HI (1954) Strength of fear as a function of the number of acquisition and extinction trials. J Exp Psychol 47:1–9

Kamin LJ (1956) The effects of termination of the CS and avoidance of the US on avoidance learning. J Comp Physiol Psychol 49:420–424

Kandel ER, Spencer WA (1968) Cellular neurophysiological approaches to the study of learning. Physiol Rev 48:65–134

Kapp BS, Frysinger RC, Gallagher M, Haselton JR (1979) Amygdala central nucleus lesions: effect on heart rate conditioning in the rabbit. Physiol Behav 23:1109–1117

Kapp BS, Whalen PJ, Supple WF, Pascoe JP (1992) Amygdaloid contributions to conditioned arousal and sensory information processing. In: Aggleton JP (ed) The amygdala: neurobiological aspects of emotion, memory, and mental dysfunction. Wiley-Liss, New York, pp 229–254

Kida S, Josselyn SA, de Ortiz SP, Kogan JH, Chevere I, Masushige S, Silva AJ (2002) CREB required for the stability of new and reactivated fear memories. Nat Neurosci 5:348–355

Killcross S, Coutureau E (2003) Coordination of actions and habits in the medial prefrontal cortex of rats. Cereb Cortex 13(4):400–408

Killcross S, Robbins TW, Everitt BJ (1997) Different types of fear-conditioned behaviour mediated by separate nuclei within amygdala. Nature 388:377–380

Lang PJ, Davis M (2006) Emotion, motivation, and the brain: reflex foundations in animal and human research. Prog Brain Res 156:3–29

Laurent V, Morse AK, Balleine BW (2015) The role of opioid processes in reward and decision-making. Br J Pharmacol 172(2):449–459. doi:10.1111/bph.12818

Lazaro-Munoz G, LeDoux JE, Cain CK (2010) Sidman instrumental avoidance initially depends on lateral and basal amygdala and is constrained by central amygdala-mediated Pavlovian processes. Biol Psychiatry 67:1120–1127

LeDoux JE (1992) Emotion and the amygdala. In: Aggleton JP (ed) The amygdala: neurobiological aspects of emotion, memory, and mental dysfunction. Wiley-Liss, Inc, New York, pp 339–351

LeDoux JE (1996a) The emotional brain. Simon and Schuster, New York

LeDoux JE (1996b) Emotional networks and motor control: a fearful view. Prog Brain Res 107:437–446

LeDoux JE (2000) Emotion circuits in the brain. Annu Rev Neurosci 23:155–184

LeDoux JE (2014) Coming to terms with fear. Proc Natl Acad Sci USA 111:2871–2878

LeDoux JE (2015) Anxious. Viking, New York

LeDoux JE, Gorman JM (2001) A call to action: overcoming anxiety through active coping. Am J Psychiatry 158:1953–1955

LeDoux JE, Iwata J, Cicchetti P, Reis DJ (1988) Different projections of the central amygdaloid nucleus mediate autonomic and behavioral correlates of conditioned fear. J Neurosci 8(7):2517–2529

LeDoux JE, Sakaguchi A, Reis DJ (1983a) Strain difference in fear between spontaneously hypertensive and normotensive rats. Brain Res 227:137–143

LeDoux JE, Thompson ME, Iadecola C, Tucker LW, Reis DJ (1983b) Local cerebral blood flow increases during auditory and emotional processing in the conscious rat. Science 221:576–578

LeDoux JE, Sakaguchi A, Reis DJ (1984) Subcortical efferent projections of the medial geniculate nucleus mediate emotional responses conditioned to acoustic stimuli. J Neurosci 4:683–698

LeDoux JE, Schiller D, Cain C (2009) Emotional reaction and action: from threat processing to goal-directed behavior. In: Gazzaniga MS (ed) The cognitive neurosciences. MIT Press, Cambridge, pp 905–924

Levis DJ (1989) The case for a return to a two-factor theory of avoidance: the failure of non-fear interpretations. In: Klein SB, Mowrer RR (eds) Contemporary learning theories: Pavlovian conditioning and the status of traditional learning theory. Lawrence Erlbaum Assn, Hillsdale, pp 227–277

Lorenz KZ, Tinbergen N (1938) Taxis und instinktbegriffe in der Eirollbewegung der Graugans. Z Tierpsych 2:1–29

Lovibond PF (1983) Facilitation of instrumental behavior by a Pavlovian appetitive conditioned stimulus. J Exp Psychol Anim Behav Process 9:225–247

Lüthi A, Lüscher C (2014) Pathological circuit function underlying addiction and anxiety disorders. Nat Neurosci 17(12):1635

Maren S, Fanselow MS (1996) The amygdala and fear conditioning: has the nut been cracked? Neuron 16:237–240

Maren S (2001) Neurobiology of Pavlovian fear conditioning. Annu Rev Neurosci 24:897–931

Martinez RC, Gupta N, Lazaro-Munoz G, Sears RM, Kim S, Moscarello JM, LeDoux JE, Cain CK (2013) Active vs. reactive threat responding is associated with differential c-Fos expression in specific regions of amygdala and prefrontal cortex. Learn Mem 20:446–452. PMC3718200

Matthews TJ, McHugh TG, Carr LD (1974) Pavlovian and instrumental determinants of response suppression in the pigeon. J Comp Physiol Psychol 87(3):500–506

McAllister WR, McAllister DE (1971) Behavioral measurement of conditioned fear. In: Brush FR (ed) Aversive conditioning and learning. Academic Press, New York, pp 105–179

McCue MG, LeDoux JE, Cain CK (2014) Medial amygdala lesions selectively block aversive Pavlovian-instrumental transfer in rats. Front Behav Neurosci 8:329. PMC4166994

McKernan MG, Shinnick-Gallagher P (1997) Fear conditioning induces a lasting potentiation of synaptic currents in vitro. Nature 390:607–611

Miller NE (1948) Studies of fear as an acquirable drive: I. Fear as motivation and fear reduction as reinforcement in the learning of new responses. J Exp Psychol 38:89–101

Miller NE (1951) Learnable drives and rewards. In: Stevens SS (ed) Handbook of experimental psychology. Wiley, New York, pp 435–472

Moscarello JM, LeDoux JE (2013) Active avoidance learning requires prefrontal suppression of amygdala-mediated defensive reactions. J Neurosci 33:3815–3823

Mowrer OH, Lamoreaux RR (1946) Fear as an intervening variable in avoidance conditioning. J Comp Psychol 39:29–50

Mowrer OH (1947) On the dual nature of learning: a reinterpretation of “conditioning” and “problem solving”. Harvard Educ Rev 17:102–148

Nabavi S, Fox R, Proulx CD, Lin JY, Tsien RY, Malinow R (2014) Engineering a memory with LTD and LTP. Nature 511(7509):348–352. http://doi.org/10.1038/nature13294

Nadler N, Delgado MR, Delamater AR (2011) Pavlovian to instrumental transfer of control in a human learning task. Emotion 11:1112–1123. PMC3183152

Niv Y, Joel D, Dayan P (2006) A normative perspective on motivation. Trends Cogn Sci 10(8):375–381

Oleson EB, Gentry RN, Chioma VC, Cheer JF (2012) Subsecond dopamine release in the nucleus accumbens predicts conditioned punishment and its successful avoidance. J Neurosci 32(42):14804–14808. doi:10.1523/JNEUROSCI.3087-12.2012

Overmier JB, Brackbill RM (1977) On the independence of stimulus evocation of fear and fear evocation of responses. Behav Res Ther 15:51–56

Overmier JB, Lawry JA (1979) Pavlovian conditioning and the mediation of avoidance behavior. In: Bower G (ed) The psychology of learning and motivation, vol 13. Academic Press, New York, pp 1–55

Patterson J, Overmier JB (1981) A transfer of control test for contextual associations. Anim Learn Behav 9:316–321

Overmier JB, Payne RJ (1971) Facilitation of instrumental avoidance learning by prior appetitive Pavlovian conditioning to the cue. Acta Neurobiol Exp (Wars) 31:341–349

Pascoe JP, Kapp BS (1985) Electrophysiological characteristics of amygdaloid central nucleus neurons in the awake rabbit. Brain Res Bull 14(4):331–338

Pavlov IP (1927) Conditioned reflexes. Dover, New York

Pitkänen A (2000) Connectivity of the rat amygdaloid complex. In: Aggleton JP (ed) The amygdala: a functional analysis. Oxford University Press, Oxford, pp 31–115

Pitkänen A, Pikkarainen M, Nurminen N, Ylinen A (2000) Reciprocal connections between the amygdala and the hippocampal formation, perirhinal cortex, and postrhinal cortex in rat. A review. Ann N Y Acad Sci 911:369–391

Poremba A, Gabriel M (1999) Amygdala neurons mediate acquisition but not maintenance of instrumental avoidance behavior in rabbits. J Neurosci 19:9635–9641

Quirk GJ, Repa C, LeDoux JE (1995) Fear conditioning enhances short-latency auditory responses of lateral amygdala neurons: parallel recordings in the freely behaving rat. Neuron 15:1029–1039

Ramirez F, Moscarello JM, LeDoux JE, Sears RM (2015) Active avoidance requires a serial basal amygdala to nucleus accumbens shell circuit. J Neurosci 35:3470–3477

Rescorla RA, Lolordo VM (1965) Inhibition of avoidance behavior. J Comp Physiol Psychol 59:406–412

Rescorla RA (1968) Pavlovian conditioned fear in Sidman avoidance learning. J Comp Physiol Psychol 65(1):55–60

Rogan MT, LeDoux JE (1995) LTP is accompanied by commensurate enhancement of auditory-evoked responses in a fear conditioning circuit. Neuron 15:127–136

Romanski LM, Clugnet MC, Bordi F, LeDoux JE (1993) Somatosensory and auditory convergence in the lateral nucleus of the amygdala. Behav Neurosci 107(3):444–450

Rosen JB (2004) The neurobiology of conditioned and unconditioned fear: a neurobehavioral system analysis of the amygdala. Behav Cogn Neurosci Rev 3:23–41

Rumpel S, LeDoux J, Zador A, Malinow R (2005) Postsynaptic receptor trafficking underlying a form of associative learning. Science 308:83–88

Sarter MF, Markowitsch HJ (1985) Involvement of the amygdala in learning and memory: a critical review, with emphasis on anatomical relations. Behav Neurosci 99:342–380

Schneiderman N, Francis J, Sampson LD, Schwaber JS (1974) CNS integration of learned cardiovascular behavior. In: DiCara LV (ed) Limbic and autonomic nervous system research. Plenum, New York, pp 277–309

Schroeder BW, Shinnick-Gallagher P (2004) Fear memories induce a switch in stimulus response and signaling mechanisms for long-term potentiation in the lateral amygdala. Eur J Neurosci 20:549–556

Schroeder BW, Shinnick-Gallagher P (2005) Fear learning induces persistent facilitation of amygdala synaptic transmission. Eur J Neurosci 22(7):1775–1783

Setlow B, Holland PC, Gallagher M (2002) Disconnection of the basolateral amygdala complex and nucleus accumbens impairs appetitive Pavlovian second-order conditioned responses. Behav Neurosci 116:267–275

Sidman M (1953) Avoidance conditioning with brief shock and no exteroceptive warning signal. Science 118:157–158

Shettleworth SJ (1978) Reinforcement and the organization of behavior in golden hamsters: Pavlovian conditioning with food and shock USs. J Exp Psychol Anim Behav Process 4:152–169

Shiflett MW, Balleine BW (2010) At the limbic-motor interface: disconnection of basolateral amygdala from nucleus accumbens core and shell reveals dissociable components of incentive motivation. Eur J Neurosci 32(10):1735–1743. doi:10.1111/j.1460-9568.2010.07439.x

Skinner BF (1938) The behavior of organisms: an experimental analysis. Appleton-Century-Crofts, New York

Solomon RL, Wynne LC (1954) Traumatic avoidance learning: the principles of anxiety conservation and partial irreversibility. Psychol Rev 61:353

Thompson RF (1976) The search for the engram. Am Psychol 31:209–227

Thorndike EL (1898) Animal intelligence: an experimental study of the associative processes in animals. Psychol Monogr 2:109

Thorpe WH (1963) Learning and instinct in animals. Methuen, London

Tinbergen N (1951) The study of instinct. Oxford University Press, New York

Tsvetkov E, Carlezon WA, Benes FM, Kandel ER, Bolshakov VY (2002) Fear conditioning occludes LTP-induced presynaptic enhancement of synaptic transmission in the cortical pathway to the lateral amygdala. Neuron 34:289–300

Walters ET, Carew TJ, Kandel ER (1979) Classical conditioning in Aplysia californica. Proc Natl Acad Sci USA 76:6675–6679

Watson JB (1929) Behaviorism. W. W. Norton, New York

Weiskrantz L (1956) Behavioral changes associated with ablation of the amygdaloid complex in monkeys. J Comp Physiol Psychol 49:381–391

Weisman RG, Litner JS (1969) The course of Pavlovian excitation and inhibition of fear in rats. J Comp Physiol Psychol 69:667–672

Wilensky AE, Schafe GE, Kristensen MP, LeDoux JE (2006) Rethinking the fear circuit: the central nucleus of the amygdala is required for the acquisition, consolidation, and expression of Pavlovian fear conditioning. J Neurosci 26:12387–12396

Yin HH, Knowlton BJ (2006) The role of the basal ganglia in habit formation. Nat Rev Neurosci 7(6):464–476

Yiu AP, Mercaldo V, Yan C, Richards B, Rashid AJ, Hsiang HL, Pressey J, Mahadevan V, Tran MM, Kushner SA, Woodin MA, Frankland P, Josselyn SA (2014) Neurons are recruited to a memory trace based on relative neuronal excitability immediately before training. Neuron 83(3):722–735. doi:10.1016/j.neuron.2014.07.017

Zimmerman JM, Rabinak CA, McLachlan IG, Maren S (2007) The central nucleus of the amygdala is essential for acquiring and expressing conditional fear after overtraining. Learn Mem 14:634–644

Copyright information

© 2015 Springer International Publishing Switzerland

About this chapter

Cite this chapter

Campese, V.D., Sears, R.M., Moscarello, J.M., Diaz-Mataix, L., Cain, C.K., LeDoux, J.E. (2015). The Neural Foundations of Reaction and Action in Aversive Motivation. In: Simpson, E., Balsam, P. (eds) Behavioral Neuroscience of Motivation. Current Topics in Behavioral Neurosciences, vol 27. Springer, Cham. https://doi.org/10.1007/7854_2015_401

DOI: https://doi.org/10.1007/7854_2015_401

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-26933-7

Online ISBN: 978-3-319-26935-1

eBook Packages: Biomedical and Life Sciences (R0)