Abstract

In this chapter, we will review evidence about the role of multiple distinct systems in driving the motivation to perform actions in humans. Specifically, we will consider the contribution of goal-directed action selection mechanisms, habitual action selection mechanisms and the influence of Pavlovian predictors on instrumental action selection. We will further evaluate evidence for the contribution of multiple brain areas including ventral frontal and dorsal cortical areas and several distinct parts of the striatum in these processes. Furthermore, we will consider circumstances in which adverse interactions between these systems can result in the decoupling of motivation from incentive valuation and performance.

1 Introduction

In this chapter, I will review our current understanding of how the human brain makes it possible for an individual to be motivated to perform actions. I will define motivation as the vigor with which a particular action is implemented. The motivation to perform an action will often scale with the expected value or utility of the outcome that action produces: an individual will be more motivated to perform an action if it leads to a more valued outcome. In the first part of this chapter, I will detail what is known about how the human brain represents the value of potential goals at the time of decision-making, representations that could then be used to motivate actions to attain those goals. I will then review evidence about how actions and goal values might be bound together, so that when a particular action is being considered, the value of the goal associated with its successful performance can be evaluated. However, while the current value or utility of a goal state is often an important source of motivation, we will also consider that under a number of circumstances the motivation to perform an action can become decoupled from the expected value of its outcome. These situations involve the influence of stimulus-response habits and the effects of Pavlovian cues on action selection. Overall, we will show that there are multiple ways in which the brain can modulate the vigor of an action. Some of these depend on the current value of the goal, while others are less dependent on it. Understanding how these different mechanisms interact could yield novel insights into why people are sometimes prompted to take actions that may not be in their best interests.

2 The Neural Representation of Goal Values

Evidence from human neuroimaging studies suggests that the ventromedial prefrontal cortex (vmPFC), which includes the ventral aspects of the medial prefrontal cortex and the adjacent orbitofrontal cortex (OFC), is involved in representing the value of goal states at several stages of the action selection process (O’Doherty 2007, 2011). First, this region is involved in encoding the value of the goal outcome as it is experienced, once the action has been performed. Second, it is involved in encoding the value of prospective goals at the point of decision-making. Third, it is involved in encoding the value of the goal that is ultimately chosen.

3 Outcome Values

There is now an extensive literature implicating the vmPFC in responding to goal outcomes as they are attained following the performance of an action, particularly the receipt of monetary rewards (see, e.g., O’Doherty et al. 2001; see also Knutson et al. 2001; Smith et al. 2010). This region also responds to other types of reward outcomes, irrespective of whether an action was performed to attain them. For example, activity in regions of medial and central OFC correlates with the subjective value of food and odor outcomes as they are presented, while participants remain passive in the scanner (de Araujo et al. 2003b; Rolls et al. 2003). Other kinds of rewarding stimuli, such as attractive faces, also recruit the medial OFC and adjacent medial prefrontal cortex (O’Doherty et al. 2003). OFC responses to outcomes are also strongly influenced by changes in underlying motivational state. Activity in this region to food, odor or even water outcomes decreases as motivational state changes from hungry or thirsty to satiated, in a manner that parallels changes in the subjective pleasantness of the stimulus (O’Doherty et al. 2000; Small et al. 2001; de Araujo et al. 2003a; Kringelbach et al. 2003). Not only can such representations be modulated by changes in internal motivational state, but value-related activity in this region can also be influenced by cognitive factors such as price information or even semantic labels (de Araujo et al. 2005; Plassmann et al. 2008). Thus, the online computation of outcome value in the OFC is highly flexible and can be directly influenced by a variety of internal and external factors. Overall, these findings, which are highly reproducible and consistent across studies (Bartra et al. 2013), implicate the ventromedial prefrontal cortex as a whole in representing the value of experienced outcomes.

4 Prechoice Goal-Value Signals

In order to select an action in a goal-directed manner, an agent needs to be able to retrieve a representation of the goal associated with each available action at the time of decision-making. A number of experiments have examined the representation of goal values at this point. Plassmann et al. (2007) used a procedure from behavioral economics to assay goal-value representations. In this paradigm, hungry participants are scanned while viewing pictures of a variety of foods and indicating their “willingness-to-pay” (WTP) for each item, out of an initial endowment of four dollars available for each item. After the experiment, one trial is selected at random. If the reported WTP exceeds a random draw from a lottery, participants are provided with the good, paid for out of their endowment, and invited to consume it; otherwise, they keep the endowment and do not receive the good. This procedure is designed to ensure that participants report their true underlying valuation of each item. Activity in a region of vmPFC was found to correlate with trial-by-trial variations in WTP, suggesting a role for this region in encoding the goal value of the potential outcome. A follow-up experiment compared goal-value representations for appetitive foods that participants would pay to obtain with those for aversive foods that participants would pay to avoid (Plassmann et al. 2010). The same region of vmPFC correlated with goal value for both appetitive and aversive items: activity increased in proportion to the value of goods with positive goal values and decreased in proportion to the magnitude of negative goal values. These findings suggest that goal values are represented in vmPFC on a single scale ranging from negative to positive, indicating that positive and negative goals are encoded by a common coding mechanism.
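Why does this procedure elicit true valuations? A short Python sketch can make the incentive-compatibility argument concrete. It assumes the standard Becker–DeGroot–Marschak (BDM) rule, in which the lottery draw acts as a price: if the stated WTP is at least the drawn price, the participant buys the item at that price out of the $4 endowment. The uniform price distribution and the function names are illustrative assumptions, not details taken from Plassmann et al. (2007).

```python
import random
from typing import Optional, Tuple

ENDOWMENT = 4.0  # dollars available per item, as in the procedure described above


def bdm_trial(wtp: float, rng: Optional[random.Random] = None) -> Tuple[bool, float]:
    """Resolve one BDM trial; returns (receives_good, amount_paid).

    Assumption: the lottery draws a price uniformly from [0, ENDOWMENT].
    """
    if rng is None:
        rng = random.Random()
    price = rng.uniform(0.0, ENDOWMENT)
    if wtp >= price:
        return True, price  # buys at the drawn price, not at the stated WTP
    return False, 0.0       # keeps the endowment and does not receive the good


def expected_surplus(wtp: float, true_value: float) -> float:
    """Expected (true_value - price) when price ~ Uniform(0, ENDOWMENT).

    Integrating (v - p) / ENDOWMENT over p from 0 to b gives (v*b - b**2 / 2) / ENDOWMENT.
    """
    b = min(max(wtp, 0.0), ENDOWMENT)
    return (true_value * b - b * b / 2.0) / ENDOWMENT


# Search stated bids in 1-cent steps: expected surplus peaks where WTP equals the true value.
true_value = 2.5
bids = [i / 100 for i in range(401)]
best_bid = max(bids, key=lambda b: expected_surplus(b, true_value))
print(best_bid)  # 2.5
```

Under these assumptions, overbidding risks paying more than the item is worth, and underbidding forgoes purchases that would have been worthwhile. Reporting the true value is therefore the best strategy.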

5 Common and Distinct Goal Values for Different Goods

The finding that a region of ventromedial prefrontal cortex encodes goal values for food items invites the question of whether the same region also encodes the value of other categories of goods. Chib et al. (2009) used the same WTP paradigm to identify areas of brain activation correlating with the goal value of three distinct categories of goods: food items, non-food consumer items (such as DVDs and clothing) and monetary gambles. An overlapping region of vmPFC (just above the orbital surface) correlated with the value of all three classes of items, suggesting that goal-value codes for many different categories of goods may converge within the same region of vmPFC. Such a region would, therefore, be an excellent candidate for coding the utilities assigned to diverse types of goal stimuli (Fig. 1a). The finding of an overlapping representation for the goal value of different classes of goods raises the question of whether their values are coded in a common currency, in which the values of diverse goods are represented on a common scale. A common currency would facilitate decisions between very different classes of goods that otherwise would not be comparable. For example, it would enable a choice between going to the movies and going out for a steak dinner. Levy and Glimcher (2011) found evidence consistent with a common currency by giving people explicit choices between different types of goods, specifically money versus food. Activity in vmPFC scaled similarly with the value of food items and monetary items across participants, suggesting that these items were represented on the same scale: a food item and a monetary amount of comparable subjective value elicited similar levels of activation.

Fig. 1 Role of the ventromedial prefrontal cortex in encoding the value of a goal at the time of decision-making. a Region of ventromedial prefrontal cortex correlating with the value of three different categories of goal stimuli: monetary gambles, food items and non-consumable consumer items. From Chib et al. (2009). b Region of medial prefrontal cortex exhibiting category-independent coding of goal values (purple), together with a food-specific goal-value signal (blue) and a consumer-item-specific (non-food) value signal (red). From McNamee et al. (2013)

A further question for the common-currency hypothesis is whether the value of different goods elicits the same distributed representations within the overlapping vmPFC region. Even if the same brain region is activated by the value of different goods, these overlapping activations at the group level could mask entirely separate (or largely non-overlapping) representations of value at the voxel level. Alternatively, if the same voxels encode the value of different goods, this would provide further evidence that their value is encoded in a common currency. To address this question, McNamee et al. (2013) used a paradigm very similar to that of Chib et al., in which participants decided how much to pay to obtain food, monetary and non-food consumer items. The authors trained multivariate pattern classifiers (statistical tools that detect patterns in fMRI data and relate them to specific perceptual, cognitive or behavioral states) to detect distributed patterns of voxels corresponding to high versus low goal values, separately for each category of good. To find out whether these distributed value codes generalized across categories, they tested whether a classifier trained on one class of good (say, food items) could decode the value of another class (say, money or consumer items). In a very circumscribed region of vmPFC above the orbital surface, they found evidence for a general value code: a classifier trained on one category could successfully decode the value of goods from another category (Fig. 1b).

Taken together, these findings provide strong support for a common currency in this region, in which the value code for a given good scales with its subjective value irrespective of the category from which it is drawn. Intriguingly, in addition to a common value representation in vmPFC, McNamee et al. also found value codes along the medial orbital surface that are more selective for particular categories of good. A region in posterior medial OFC showed a distributed encoding of the value of food items but not of other categories, while a more anterior region of mOFC encoded the value of non-food consumer items but not of food or monetary goods (Fig. 1b). This apparent category specificity along the medial orbital surface could suggest that these regions represent a precursor stage, in which the value of each item category is encoded in a category-specific manner before being combined into a common currency in more dorsal parts of vmPFC. Another possibility (not incompatible with the first) is that these signals correspond to the point at which the value of individual items is first computed from their underlying sensory features. Interestingly, no region was found to uniquely encode the distributed value of monetary items; instead, the value of monetary goods was represented only within the vmPFC area that encoded general value signals. This might be because money is a generalized reinforcer that can be exchanged for many different types of goods.
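The logic of cross-category decoding can be illustrated on synthetic data. The Python sketch below is a toy, not the pipeline of McNamee et al. (2013). It makes several assumptions: simulated "voxel" patterns built as a category offset plus a high/low value signal plus Gaussian noise, per-category mean-centering, and a simple centroid-difference linear classifier. All names and parameters (`N_VOXELS`, `value_axis`, and so on) are hypothetical. When both categories share a value axis, a classifier trained on one category decodes the other. When the value codes occupy disjoint voxels, transfer falls to about chance.

```python
import random
from typing import List, Sequence, Tuple

Trial = Tuple[List[float], int]  # (voxel pattern, label: +1 high value, -1 low value)
N_VOXELS = 50
N_PER_CLASS = 40


def value_axis(rng: random.Random, voxels: range) -> List[float]:
    """Random +/-1 value-coding weights on `voxels`, zero on all other voxels."""
    return [rng.choice((-1.0, 1.0)) if i in voxels else 0.0 for i in range(N_VOXELS)]


def simulate(rng: random.Random, axis: Sequence[float], offset: Sequence[float]) -> List[Trial]:
    """Synthetic trials: category offset + label * value axis + unit Gaussian noise."""
    trials = []
    for label in (1, -1):
        for _ in range(N_PER_CLASS):
            x = [o + label * a + rng.gauss(0.0, 1.0) for o, a in zip(offset, axis)]
            trials.append((x, label))
    return trials


def center(trials: List[Trial]) -> List[Trial]:
    """Subtract the category's mean pattern, removing its overall offset."""
    mean = [sum(x[i] for x, _ in trials) / len(trials) for i in range(N_VOXELS)]
    return [([xi - m for xi, m in zip(x, mean)], y) for x, y in trials]


def train(trials: List[Trial]) -> List[float]:
    """Linear weights: mean high-value pattern minus mean low-value pattern."""
    hi = [x for x, y in trials if y == 1]
    lo = [x for x, y in trials if y == -1]
    return [sum(x[i] for x in hi) / len(hi) - sum(x[i] for x in lo) / len(lo)
            for i in range(N_VOXELS)]


def cross_decode(train_trials: List[Trial], test_trials: List[Trial]) -> float:
    """Train on one category; return high/low classification accuracy on another."""
    w = train(center(train_trials))
    hits = 0
    for x, y in center(test_trials):
        score = sum(wi * xi for wi, xi in zip(w, x))
        hits += (score > 0) == (y == 1)
    return hits / len(test_trials)


rng = random.Random(1)
food_offset = [rng.gauss(0.0, 2.0) for _ in range(N_VOXELS)]
money_offset = [rng.gauss(0.0, 2.0) for _ in range(N_VOXELS)]

# Shared code: both categories use the same value axis.
shared = value_axis(rng, range(N_VOXELS))
acc_shared = cross_decode(simulate(rng, shared, food_offset),
                          simulate(rng, shared, money_offset))

# Category-specific codes: value axes on disjoint voxel sets.
acc_specific = cross_decode(simulate(rng, value_axis(rng, range(25)), food_offset),
                            simulate(rng, value_axis(rng, range(25, N_VOXELS)), money_offset))
print(f"shared: {acc_shared:.2f}  category-specific: {acc_specific:.2f}")
```

Mean-centering within each category is a design choice here. Without it, the category offsets would dominate the classifier and could mask a shared value code.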

6 From Goal Values to Actions

Ultimately, to attain a goal, the goal-directed system must establish which actions can be taken to reach it. To do so, it must compute an action value for each action available in a given state, encoding the overall expected utility that would follow from taking that action. At the point of decision-making, a goal-directed agent can then compare the available action values and choose the action with the highest expected utility. How can an action value be computed? It is necessary to integrate the current incentive value of the goal state, as discussed above, with the probability of attaining the goal by performing a given action. The resulting value must then be discounted by the expected effort cost of performing that action.
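As a minimal sketch of this computation, the Python below assumes the simplest combination: action value = P(goal | action) × goal value − effort cost, followed by a greedy comparison. Whether costs are subtracted, divided or combined with benefits in some other way is an assumption of this example. The action names and numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Action:
    name: str
    p_goal: float       # probability that the action attains the goal
    effort_cost: float  # expected effort cost, in the same units as goal value


def action_value(action: Action, goal_value: float) -> float:
    """Expected benefit of the goal minus effort (a subtractive cost is assumed)."""
    return action.p_goal * goal_value - action.effort_cost


def choose(actions: List[Action], goal_value: float) -> str:
    """Decision comparator: pick the action with the highest action value."""
    values: Dict[str, float] = {a.name: action_value(a, goal_value) for a in actions}
    return max(values, key=values.get)


actions = [Action("press_lever", p_goal=0.8, effort_cost=1.0),
           Action("pull_chain", p_goal=0.5, effort_cost=0.2)]
print(choose(actions, goal_value=5.0))  # press_lever (3.0 vs 2.3)
print(choose(actions, goal_value=2.0))  # pull_chain (0.6 vs 0.8)
```

Lowering the goal value flips the choice toward the cheaper, less reliable action. This sensitivity to the current goal value is what distinguishes goal-directed control.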

7 A Role for Dorsal Cortical Areas in Encoding Action–Outcome Probabilities and Effort

There is now emerging evidence that information about action–outcome probability and action effort is not encoded in the vmPFC and adjacent OFC, which we described above as important for computing goal values and outcome values. Instead, these action-related variables appear to be represented in dorsal areas of the cortex, ranging from the posterior parietal cortex to dorsolateral and dorsomedial prefrontal cortex. With regard to action–outcome probabilities, Liljeholm et al. (2011) reported that a region of the inferior parietal lobule is involved in computing the action contingency. This contingency is defined as the probability of obtaining an outcome if an action is performed minus the probability of obtaining that outcome if the action is not performed. Liljeholm et al. (2013) extended this work by showing that the inferior parietal lobule appears, more generally, to encode the divergence between the probability distributions of outcomes over available actions. This signal is suggested to be a valence-independent representation (i.e. one not modulated by differences in expected value across outcomes) of the extent to which different actions lead to different distributions of outcomes.
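Both quantities in this paragraph are simple functions of outcome probabilities. The Python sketch below computes the contingency ΔP = P(O | A) − P(O | ¬A). It also computes one common divergence measure between the outcome distributions of two actions, the Jensen–Shannon divergence, which is assumed here for illustration. The probabilities are made up. Note that neither function takes outcome values as input, which is one way to read "valence independent."

```python
import math
from typing import Sequence


def contingency(p_outcome_given_action: float, p_outcome_given_no_action: float) -> float:
    """Delta-P: P(O | A) minus P(O | not A)."""
    return p_outcome_given_action - p_outcome_given_no_action


def kl(p: Sequence[float], q: Sequence[float]) -> float:
    """Kullback-Leibler divergence in bits; terms with p_i = 0 contribute nothing."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def js_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """Jensen-Shannon divergence in bits: 0 for identical distributions, at most 1."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


print(contingency(0.75, 0.25))                # 0.5
print(js_divergence([1.0, 0.0], [0.0, 1.0]))  # 1.0: the actions lead to different outcomes
print(js_divergence([0.5, 0.5], [0.5, 0.5]))  # 0.0: the actions are interchangeable
```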

There is also emerging evidence that the effort associated with performing an action is represented in parts of the dorsomedial prefrontal cortex, alongside other areas such as the insular cortex (Prévost et al. 2010). Taken together, these findings indicate that much of the key information required to compute an overall action value is represented in dorsal parts of the cortex.

8 Action Values

A number of studies have also examined the representation of prechoice action values that could be used at the point of decision-making. Single-neuron studies in rodents and monkeys have found candidate action-value signals in the dorsal striatum, as well as in dorsal cortical areas including parietal and supplementary motor cortices (Platt and Glimcher 1999; Samejima et al. 2005; Lau and Glimcher 2007; Sohn and Lee 2007). Consistent with these animal studies, human fMRI studies have found evidence for putative action-value signals in dorsal cortical areas, including the supplementary motor cortex, as well as lateral parietal and dorsolateral cortex (Wunderlich et al. 2009; Hare et al. 2011; Morris et al. 2014). However, few studies to date have examined the integration of all of the variables needed to compute an overall action value, incorporating effort costs, goal values and contingencies. In monkeys, Hosokawa et al. (2013) found that some neurons in the anterior cingulate cortex encode an integrated value signal that sums over the expected costs and benefits of an action. Hunt et al. (2014) also reported that a region of dorsomedial prefrontal cortex encodes integrated action values. A fairly consistent finding in both humans and animals is that the ventromedial prefrontal and adjacent orbitofrontal cortices do not appear to be involved in computing integrated action values or in encoding effort costs. Instead, as indicated earlier, this region is more concerned with representing the value of potential outcomes or goals than the value of the actions required to attain them. Thus, the tentative evidence to date suggests that goals are integrated with action probabilities and costs in the dorsal cortex and parts of the dorsal striatum, not in anterior ventral parts of the cortex. This supports the possibility that goal-directed valuation involves an interaction between multiple brain systems, in which goal-value representations in the vmPFC are ultimately integrated with action information in dorsal cortical regions to compute an overall action value (Fig. 2).

Fig. 2 Illustration of some of the key component processes involved in goal-directed decision-making. An associative map of stimuli and actions bound together by transition probabilities, together with a representation of the outcome stimuli, forms a representation of a world “model.” Within this model, stimuli or actions can, through learned associations, elicit a representation of the associated outcome, which in turn retrieves a representation of the incentive value of that outcome (goal). This is done over all known available outcomes, enabling a relative goal-value signal to be computed that takes into account the range of possible outcomes. Action contingencies are then calculated for a given outcome using the forward-model machinery; combined with the relative goal-value signal and effort cost, these constitute an action value. This signal is in turn fed into a decision comparator that selects a particular action. The selected action is then passed to the motor output system and also allows the chosen value to be elicited. The vmPFC is suggested to be involved in encoding a number of these signals (illustrated by the green area), particularly those pertaining to the value of goal outcomes. The process of computing action values and ultimately generating a choice, however, is suggested to occur outside this structure, in dorsal cortical areas, as discussed in the text

9 An Alternative Route to Action: Habitual Mechanisms

So far we have considered the role that regions of the cortex play in computing the value of goal-directed actions. When an individual is behaving in a goal-directed manner, the vigor with which an action is performed will be strongly influenced by the overall value of that action. However, it has long been known that another mechanism for controlling actions exists in the mammalian brain: habits. Whereas goal-directed actions are sensitive to the current value of the associated goal, habitual actions are not; instead, they are selected on the basis of the past history of reinforcement of that action. Evidence that habits are distinct from goal-directed actions was first uncovered in rodents using a devaluation manipulation. In this procedure, the (food) goal associated with a particular action is made undesirable to the animal, either by feeding it to satiety on that food or by pairing the food with illness (Dickinson et al. 1983; Balleine and Dickinson 1998). After modest experience with a particular action–outcome relationship, animals behave in a goal-directed manner: they flexibly reduce their response rates on the action associated with the now devalued outcome. After extensive experience with a particular action, however, animals become insensitive to the value of the associated goal, indicating that behavior has transitioned to habitual control. Similar experience-dependent effects have been found in humans (Tricomi et al. 2009). There is now converging evidence implicating a circuit involving the posterior putamen and premotor cortex in habitual actions in humans (Tricomi et al. 2009; Wunderlich et al. 2012; Lee et al. 2014; de Wit et al. 2012; McNamee et al. 2013). This circuit overlaps considerably with the circuits identified as being involved in habitual control in the rodent brain (Balleine and O’Doherty 2010). Associative learning theories propose that habits depend on the formation of stimulus-response associations (Dickinson 1985). In these theories, the delivery of a positive reinforcer stamps in, or strengthens, the stimulus-response association. Critically, and in contrast to goal-directed actions, the value of the reinforcer itself is not embedded in that association. Thus, when habits control behavior, an individual can vigorously perform an action even when its outcome is no longer valued. As a consequence, the invocation of the habitual control system can lead to apparently paradoxical behavior in which animals appear to compulsively pursue outcomes that are not currently valued.
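The devaluation signature can be reproduced with a toy Python model built from three hypothetical parts. The first is a goal-directed term that scales with the current outcome value. The second is a habit strength that each reinforced response stamps in but that stores no outcome value. The third is a weight that shifts control toward the habit as training accumulates. The learning rate `alpha`, the weighting rule n / (n + k) and the constant `k` are illustrative assumptions, not parameters from the studies cited.

```python
def habit_strength(n_reinforced: int, alpha: float = 0.1) -> float:
    """S-R strength after n reinforced responses: h += alpha * (1 - h), starting at 0."""
    h = 0.0
    for _ in range(n_reinforced):
        h += alpha * (1.0 - h)  # stamped in by reinforcement; outcome value never enters
    return h


def response_vigor(n_training: int, outcome_value: float, k: float = 50.0) -> float:
    """Mix of goal-directed and habitual control.

    Goal-directed term: proportional to the *current* outcome value.
    Habitual term: habit strength only.
    Habit weight n / (n + k) is a hypothetical arbitration rule.
    """
    w_habit = n_training / (n_training + k)
    goal_directed = outcome_value
    habitual = habit_strength(n_training)
    return (1.0 - w_habit) * goal_directed + w_habit * habitual


for n in (10, 500):
    valued = response_vigor(n, outcome_value=1.0)
    devalued = response_vigor(n, outcome_value=0.0)  # e.g. after feeding to satiety
    print(f"{n:>3} training trials: devaluation keeps {devalued / valued:.0%} of responding")
```

With these settings, devaluation leaves roughly 12% of responding after brief training and roughly 91% after extensive training. This mirrors the shift from goal-directed to habitual control described above.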

10 Pavlovian Effects on Motivation

In addition to the goal-directed and habitual action control systems, another important associative learning and behavioral control system in the brain is the Pavlovian system (Dayan et al. 2006; Balleine et al. 2008). Unlike its instrumental counterparts, the Pavlovian system does not learn to select actions in order to increase the probability of obtaining particular rewards. Instead, it exploits statistical regularities in the environment whereby particular stimuli provide information about the probability that particular appetitive or aversive outcomes will occur. The Pavlovian system then initiates reflexive actions such as approach or withdrawal. It also initiates preparatory and consummatory responses, both skeletomotor and physiological, that have been selected over evolutionary time as adaptive responses to particular classes of outcome. In associative learning terms, the Pavlovian system can be considered to learn associations between stimuli and outcomes based on the contingent relationship between those stimuli and the subsequent delivery of a particular outcome. There is now a large body of evidence in animals implicating specific neural circuits in Pavlovian learning and behavioral expression, including the amygdala, ventral striatum and parts of the orbitofrontal cortex (Parkinson et al. 1999; LeDoux 2003; Ostlund and Balleine 2007). Furthermore, in humans, similar brain systems have consistently been implicated in Pavlovian learning and control in both reward-related and aversive contexts (LaBar et al. 1998; Gottfried et al. 2002, 2003).

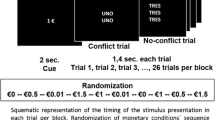

Of particular interest to the present discussion is that Pavlovian associations and their effects on behavior do not occur in isolation, but instead appear to interact with instrumental actions in important ways. Pavlovian to instrumental transfer (PIT) is the term typically given to these interactions in associative learning theory (Lovibond 1983). PIT effects typically manifest as a modulation of instrumental response rates in the presence of a stimulus that, through a learned Pavlovian association, has come to predict the delivery of a particular outcome. In the presence of a stimulus that has predicted the delivery of a reward, the vigor of responding on an instrumental action for a reward can be increased. Thus, in the appetitive domain, Pavlovian stimuli can exert energizing effects, appearing to increase the motivation to respond on an instrumental action. A distinction has been made between general and specific transfer. In general PIT, an appetitive Pavlovian stimulus can increase responding on an instrumental action irrespective of the identity of the outcome it signals, so that even a cue associated with an unrelated reward can elicit increased responding. In specific PIT, responding is selectively enhanced on the instrumental action that produces the same outcome as the one predicted by the Pavlovian stimulus.
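The difference between general and specific transfer can be written as a toy rate model. In the Python sketch below, any appetitive cue adds a general boost to every instrumental action. A cue also adds an extra, outcome-specific boost to actions that earn the same outcome it predicts. The additive form, the gain values and the action and outcome names are all hypothetical, chosen only to make the two effects visible.

```python
from typing import Dict, Optional

BASELINE = 1.0       # responses per second with no cue (hypothetical)
GENERAL_GAIN = 0.3   # boost to any action from any appetitive cue (hypothetical)
SPECIFIC_GAIN = 0.7  # extra boost when cue and action share an outcome (hypothetical)


def response_rates(action_outcomes: Dict[str, str],
                   cue_outcome: Optional[str]) -> Dict[str, float]:
    """Response rate per action, given the outcome the current cue predicts (None = no cue)."""
    rates = {}
    for action, outcome in action_outcomes.items():
        rate = BASELINE
        if cue_outcome is not None:
            rate += GENERAL_GAIN       # general PIT: outcome identity is irrelevant
            if outcome == cue_outcome:
                rate += SPECIFIC_GAIN  # specific PIT: cue and action share the outcome
        rates[action] = rate
    return rates


actions = {"left_lever": "pellet", "right_lever": "sucrose"}
print(response_rates(actions, None))      # no cue: both actions at baseline
print(response_rates(actions, "pellet"))  # pellet cue: left lever boosted most
print(response_rates(actions, "water"))   # cue for an unrelated reward: general boost only
```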

Lesion studies in animals have implicated specific regions of the amygdala and ventral striatum in general and specific transfer effects. The basolateral amygdala and the shell of the nucleus accumbens have been implicated in specific PIT, while the centromedial complex of the amygdala and the core of the accumbens have been implicated in general PIT (Hall et al. 2001; Corbit and Balleine 2005, 2011). Studies in humans have similarly found partly distinct circuits for these different types of PIT (Fig. 3). In humans, general PIT effects may depend on the nucleus accumbens proper as well as the centromedial amygdala, whereas specific PIT effects appear to involve the basolateral amygdala and ventrolateral parts of the putamen (Bray et al. 2008; Talmi et al. 2008; Prevost et al. 2012).

Fig. 3 Regions of the human striatum and amygdala contributing to Pavlovian to instrumental interactions. a Region of the ventrolateral putamen involved in specific PIT effects in humans (data from Bray et al. 2008). b Distinct regions of the human amygdala contributing to specific and general PIT effects: the basolateral amygdala is involved in specific PIT, while centromedial parts of the amygdala contribute to general PIT. The graphs at the bottom show, for each participant, the relationship between the strength of the specific and general PIT effects in behavior and the degree of activation (parameter estimates) in the basolateral and centromedial amygdala, respectively. Data from Prevost et al. (2012)

11 Pavlovian and Habitual Interactions and Paradoxical Motivational Effects for Non-valued Outcomes

In many cases, the presence of a Pavlovian cue will energize responding and may constructively facilitate the performance of instrumental actions to obtain rewards. Pavlovian effects on instrumental responding can also promote maladaptive behavior, however, in which responding persists even when the organism no longer values the goal of an instrumental action. This is because specific PIT effects appear to be immune to reinforcer devaluation (Holland 2004). That is, Pavlovian cues continue to exert an energizing effect on instrumental actions even when the outcome predicted by the Pavlovian cue has been devalued. This effect, initially shown in rodents, has recently also been demonstrated in humans (Watson et al. 2014; though see Allman et al. 2010), where not only specific but also general PIT effects were found to persist after outcome devaluation. One interpretation of these results is that PIT effects may selectively engage the habitual system, increasing the engagement of a devaluation-insensitive habitual action at the expense of its goal-directed counterpart. Thus, through a putative interaction with the habitual system, Pavlovian cues can promote the motivation to respond for an outcome that the organism does not consider valuable.

12 Other Approaches to Demonstrating the Role of the Ventral Striatum in Motivation Beyond PIT

A number of fMRI studies beyond the PIT paradigm described above have implicated the ventral striatum in mediating the effects of incentives on the motivation to perform an action. For instance, Pessiglione et al. (2007) used a paradigm in which the amount of physical effort exerted in performing an action correlated with the amount of incentive on offer. While activity in the motor cortex scaled with the magnitude of the force exerted, activity in the ventral striatum correlated with the magnitude of the potential incentive. Moreover, the strength of the correlation between activity in the ventral striatum and motor cortex was related to the translation from incentive to motor action: the greater the coupling between these two areas, the greater the effect of incentive amount on the effort exerted. In follow-up work, Schmidt et al. (2012) found that ventral striatal activity scaled with incentive motivation not only during a motor task but also during a cognitively demanding task. In addition, connectivity between the ventral striatum and motor or cognitive brain areas increased differentially depending on which task the participant was performing on a given trial. This suggests that the ventral striatum may be a common node for translating incentives into motivation for task performance, irrespective of the nature of the task.

Miller et al. (2014) used a speeded-response task in which trials varied both in the difficulty of responding successfully to obtain an outcome and in the magnitude of that outcome. An interesting property of this task is that the speed of responding (a proxy for the motivation to respond) was actually greater on more difficult trials, even though these trials were associated with a lower probability of reward. While activity in the nucleus accumbens scaled with expected value, activity in the putamen and caudate instead scaled with difficulty. This perhaps suggests a role for those regions in representing the level of motivation to respond (which increased with difficulty). Thus, the studies described above largely converge with the PIT paradigms discussed earlier in implicating parts of the striatum (particularly its ventral aspects) in motivational processes. These studies could be taken as evidence for a more general role of this structure in motivation beyond Pavlovian to instrumental interactions. Alternatively (as favored by the present author), their results could reflect a manifestation of Pavlovian to instrumental interactions, whereby the monetary incentive cues act as Pavlovian cues that in turn influence instrumental motor performance.

13 “Over-Arousal” and Choking Effects on Instrumental Responding

The classical view of the relationship between incentives, motivation and instrumental responding is that higher incentives increase the motivation to respond, which in turn results in more vigorous and ultimately more successful performance of an action. However, under certain circumstances, increased incentives can have the counter-intuitive effect of making instrumental performance less efficacious, an effect known in the cognitive psychology and behavioral economics literature as “choking under pressure.” In a stark demonstration of this effect, Ariely et al. (2009) offered participants in rural India the prospect of winning very large monetary amounts relative to their average monthly salaries, contingent on successful performance on a range of motor and cognitive tasks. Compared with a group offered smaller incentives, the high incentive group performed markedly worse, a paradoxical reduction in performance in a situation where the motivation to respond is likely to be very high.

Mobbs et al. (2009) examined the neural correlates of this effect in humans using a task in which participants could sometimes obtain a relatively large incentive (~$10) for successfully completing a reward-related pursuit task and on other occasions a smaller incentive (~$1) for completing the same task. Performance decreased in the high incentive condition, indicative of choking, and this decrement was associated with increased activity in the dopaminergic midbrain. Relatedly, Aarts et al. (2014) measured individual differences in striatal dopamine synthesis capacity using FMT PET and demonstrated that performance decrements in response to monetary incentives on a simple cognitive task were associated with the degree of dopamine synthesis capacity in the striatum. Chib et al. (2012) also explored the paradoxical relationship between incentives and performance and the role of dopaminoceptive striatal circuits. In their study, participants were offered incentives ranging from $5 to $100 for completing a complex motor skill (moving a ball attached to a virtual spring to a target location). Whereas small to moderate incentives improved performance, once incentives became too large, performance started to decrease, consistent with a choking effect. Activity in the ventral striatum was directly associated with the propensity for choking to manifest (Fig. 4). When the available incentive was signaled to the participant, before the motor response was triggered, ventral striatal activity correlated positively with the magnitude of the incentive. While the participant was executing the motor response, however, this relationship reversed: ventral striatal activity correlated negatively with the incentive amount, i.e. activity decreased in proportion to the incentive available. Notably, the slope of this decrease during motor task performance correlated across individuals with susceptibility to the behavioral choking effect, whereas no such correlation was found between ventral striatal activity at the time of initial cue presentation and choking. Given the role of the ventral striatum in Pavlovian learning and in the influence of Pavlovian cues on instrumental responding discussed earlier, one interpretation of these findings is that Pavlovian skeletomotor reflexes mediated by the striatum may interfere with the production of a skilled motor response. Further supporting this interpretation, individual differences in loss aversion (the extent to which an individual weighs a loss more heavily than a gain of similar size) also correlated both with ventral striatal activity and with behavioral manifestations of choking. This led Chib et al. to suggest that specifically aversive, Pavlovian avoidance-related responses could mediate behavioral choking. According to this perspective, adverse interactions between the Pavlovian system and instrumental actions are responsible for producing behavioral choking effects.

In a subsequent follow-up study, Chib et al. (2014) demonstrated that the behavioral choking effect depended strongly on whether the incentive structure was framed in terms of losses or gains, and that the relationship with behavioral loss aversion was more nuanced than previously suspected, as it interacted in a complex way with this extrinsic framing manipulation. Whereas individuals with high loss aversion showed increased choking in response to high incentives when those incentives were initially framed as a gain, as found in the original Chib et al. study, individuals with low loss aversion paradoxically showed increased susceptibility to choking when the incentives were initially framed as a loss. These puzzling behavioral results can potentially be explained by an account in which individuals shift their internal frame of reference when moving from the initial incentive phase to the phase in which they perform the motor task. If the incentive is initially framed as a gain, participants start focusing on the prospect of losing that potential gain when performing the task; if the incentive is initially framed as a potential loss, they instead focus on the prospect of avoiding that loss (and hence obtaining a relative gain) during the motor task. In the ventral striatum, however, a more straightforward relationship was observed between incentives and performance: irrespective of the extrinsic framing manipulation, ventral striatal activity during the motor task decreased as incentives increased, and this activity correlated directly with performance decrements for high incentives, whether these were potential gains or losses to be avoided. Importantly, connectivity between the ventral striatum and premotor cortex was significantly decreased on trials in which choking effects were manifested. Taken together, these results suggest that large incentives, whether prospective gains or losses, can increase choking effects. Thus, within a Pavlovian to instrumental interaction account of this phenomenon, it appears unlikely that the effect is mediated exclusively by aversive Pavlovian to instrumental interactions. Instead, it may result from increased arousal generated by either aversive or appetitive Pavlovian predictions, resonating with the “over-arousal” account advocated by Mobbs et al. and others (Broadhurst 1959; Mobbs et al. 2009).
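The reference-point-shift account and the over-arousal account can be combined into a small toy model, sketched below to make the logic of the framing by loss aversion interaction explicit. This is an illustration under stated assumptions, not the model fitted by Chib et al. (2014): the function names `task_phase_stake`, `performance` and `predicted_performance`, the Gaussian inverted-U curve and all parameter values are hypothetical. The key assumptions are that a gain-framed incentive is re-coded during the task as a potential loss (weighted by loss aversion), that a loss-framed incentive is re-coded as a gain, and that arousal tracks the size of this subjective stake regardless of its sign.

```python
import math

def task_phase_stake(incentive: float, frame: str, loss_aversion: float) -> float:
    # Hypothetical reference-point shift during task execution:
    # a gain-framed incentive is re-coded as a potential loss (scaled by
    # loss_aversion); a loss-framed incentive is re-coded as a gain to be
    # obtained by avoiding the loss (unit weight).
    if frame == "gain":
        return loss_aversion * incentive
    if frame == "loss":
        return incentive
    raise ValueError(f"unknown frame: {frame!r}")

def performance(arousal: float, optimum: float = 50.0, width: float = 40.0) -> float:
    # Illustrative inverted-U (Yerkes-Dodson-style) curve: performance peaks
    # at a moderate arousal level and falls off on either side.
    return math.exp(-((arousal - optimum) ** 2) / (2.0 * width ** 2))

def predicted_performance(incentive: float, frame: str, loss_aversion: float) -> float:
    # Over-arousal assumption: arousal tracks the magnitude of the subjective
    # stake, regardless of whether it is appetitive or aversive.
    return performance(abs(task_phase_stake(incentive, frame, loss_aversion)))

for lam in (2.0, 0.5):  # high vs low loss aversion (hypothetical values)
    for frame in ("gain", "loss"):
        row = [round(predicted_performance(x, frame, lam), 2) for x in (5, 25, 100)]
        print(f"loss aversion={lam}, {frame} frame: {row}")
```

With these arbitrary settings, performance first rises and then falls as incentives grow; high loss aversion produces the steepest decline for large gain-framed incentives, while low loss aversion produces more decline under the loss frame, qualitatively echoing the behavioral pattern described above.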

Fig. 4 Relationship between ventral striatum activity responses to incentives and susceptibility to behavioral choking effects for large incentives. a When performing a skilled motor task in a hard (60 % performance level) compared to an easy (80 % performance level) condition, large incentives ($100) resulted in an increased susceptibility to performance decrements. b Activity in the ventral striatum correlated positively with the incentive amount during the initial trial onset, when the incentive available for successfully performing the task was indicated, but during performance of the motor task itself activity instead flipped and correlated negatively with incentive amount. c The degree of the activity decrease as a function of incentive during the motor task was correlated with the decrement in behavioral performance across participants: those participants who showed greater performance decrements for large incentives showed a greater deactivation effect for large incentives in the ventral striatum during motor task performance but not during the time of the initial incentive presentation. Data from Chib et al. (2012)

14 How Is the Control of These Systems Over Behavior Regulated: The Role of Arbitration

Given that these different systems all appear to exert effects on instrumental motivation, a natural question is what factors determine which of them influences behavior at any one moment in time. With respect to goal-directed and habitual control, an influential hypothesis is that an arbitrator allocates control over behavior between these systems according to a number of criteria. The first of these is the relative precision or accuracy of each system's estimates about which action should be selected: all else being equal, behavior should be controlled by the system with the most accurate predictions (Daw et al. 2005). Using a computational account of goal-directed and habitual control described in detail elsewhere (Daw et al. 2005), Lee et al. (2014) found evidence for an arbitrator that allocates control between the goal-directed and habitual systems as a function of which system's estimates are predicted to be most reliable (a proxy for precision). Specifically, Lee et al. (2014) implicated the ventrolateral prefrontal cortex bilaterally, as well as a region of right frontopolar cortex, in this function. In addition to precision, other variables are also likely to be important for arbitration between these strategies, including the amount of cognitive effort that must be exerted. While goal-directed actions may require considerable cognitive effort to implement, habitual actions require much less, so there is likely a trade-off between the cognitive effort demanded by each system and the relative precision of its estimates (FitzGerald et al. 2014). Future work will need to establish precisely which variables are involved in the arbitration process between goal-directed and habitual action selection mechanisms.
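The reliability-based arbitration idea can be sketched in a few lines. The version below is a deliberately simplified illustration inspired by Daw et al. (2005) and Lee et al. (2014), not a reimplementation of either model: Lee et al. derived reliability from state and reward prediction errors using a more elaborate scheme. Here, as assumptions, reliability is a running average of one minus the absolute prediction error, the weight given to the goal-directed system is a logistic function of the reliability difference with a hypothetical slope `beta`, and a hypothetical `effort_cost` term stands in for the effort and precision trade-off discussed by FitzGerald et al. (2014). Mixing the two systems' action values as a weighted sum is one simple choice among several.

```python
import math

def update_reliability(reliability: float, prediction_error: float, rate: float = 0.1) -> float:
    # Running reliability estimate: moves toward 1 when absolute prediction
    # errors are small and toward 0 when they are large (errors clipped to [0, 1]).
    target = 1.0 - min(abs(prediction_error), 1.0)
    return reliability + rate * (target - reliability)

def goal_directed_weight(rel_goal: float, rel_habit: float,
                         effort_cost: float = 0.0, beta: float = 5.0) -> float:
    # Probability of deferring to the goal-directed system: a logistic function
    # of the reliability difference, penalized by a hypothetical effort cost.
    return 1.0 / (1.0 + math.exp(-(beta * (rel_goal - rel_habit) - effort_cost)))

def arbitrated_values(q_goal: list[float], q_habit: list[float], w: float) -> list[float]:
    # Weighted mixture of the two systems' action values.
    return [w * g + (1.0 - w) * h for g, h in zip(q_goal, q_habit)]

# Hypothetical scenario: the goal-directed system makes small prediction
# errors, the habitual system large ones (e.g. early in training).
rel_goal, rel_habit = 0.5, 0.5
for _ in range(50):
    rel_goal = update_reliability(rel_goal, prediction_error=0.1)
    rel_habit = update_reliability(rel_habit, prediction_error=0.6)

w = goal_directed_weight(rel_goal, rel_habit)
w_costly = goal_directed_weight(rel_goal, rel_habit, effort_cost=3.0)
q = arbitrated_values([1.0, 0.2], [0.4, 0.6], w)
print(f"w={w:.2f}, w with effort cost={w_costly:.2f}, mixed values={q}")
```

In this scenario the more reliable goal-directed system dominates, but a sufficiently large effort cost can shift control toward the habitual system even though its estimates are less reliable.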

In addition, much less is known about how arbitration determines the degree of influence that a Pavlovian predictor exerts on behavior. It is known that changes in cognitive strategy or appraisal implemented via the prefrontal cortex can influence the expression of both aversive and appetitive Pavlovian conditioned responses, an effect suggested to be mediated by down-regulation of the amygdala and ventral striatum (Delgado et al. 2008a, b; Staudinger et al. 2009). For example, Delgado et al. (2008a) had participants either attend to cues associated with a subsequent reward by focusing on the expected reward, or “regulate” their processing of such cues (in essence, by distracting themselves and thinking of something other than the imminent reward). When participants regulated, activity increased in the lateral prefrontal cortex, while reward-related activity in the ventral striatum was modulated as a function of the regulatory strategy. Thus, some type of “top-down” control over the expression of Pavlovian behaviors clearly is being implemented via the prefrontal cortex. However, the nature of the computations mediating this putative arbitration process is not well understood. Also unknown is how the Pavlovian system interacts with the habitual and goal-directed systems to influence instrumental motivation. As suggested earlier, the devaluation-insensitive nature of PIT effects supports a specific role for interactions with the habitual system in governing the effects of Pavlovian stimuli on motivation. However, direct neural evidence for this suggestion is currently lacking, and a mechanistic account of how this interaction occurs is not yet in place.

15 Translational Implications

The body of research reviewed in this chapter has the potential not only to shed light on the nature of human motivation, but also to improve understanding of the dysfunctions in motivation and behavioral control that can emerge in psychiatric, neurological and other disorders. For example, some theories of addiction have emphasized the possibility that drugs of abuse could hijack the habitual system in particular, resulting in the stamping in of very strong drug-taking habits (Robbins and Everitt 1999), although a complete understanding of the brain mechanisms of addiction will likely need to take into account the effects of drugs of abuse on goal-directed and Pavlovian systems too (see chapter in this volume by Robinson et al.). Relatedly, psychiatric disorders involving compulsive behavioral symptoms, such as obsessive compulsive disorder, may also be associated with dysregulation of habitual control, or of the arbitration between goals and habits (Gillan and Robbins 2014; Voon et al. 2014; Gruner et al. 2015). These examples are only the tip of the iceberg in terms of the applicability of the framework reviewed in this chapter to clinical questions. The basic research summarized here has influenced the emergence of a new movement in translational research known as “Computational Psychiatry,” in which formal computational models of brain processes are used to aid the classification and fundamental understanding of psychiatric disorders (see Montague et al. 2012; Maia and Frank 2011 for reviews).

16 Conclusions

Here, we have considered the role of multiple systems, implemented in at least partly distinct neural circuits, in governing the motivation to perform an instrumental action: the goal-directed action selection system, the habitual action selection system and the Pavlovian system. Under many, and perhaps most, circumstances, the vigor and effectiveness of an instrumental action will scale appropriately with the underlying incentive value of the action's goal. However, we have also seen that a number of “bugs” or quirks can emerge from the interactions between these systems, such that the overall motivation to perform an instrumental action, or the effectiveness of the action energized by such motivational processes, can sometimes end up inappropriately mismatched to the incentive value of the associated outcome.

Ultimately, to fully understand when and how human actions are selected effectively or ineffectively as a function of incentives, it will be necessary to gain a more refined understanding of how these distinct action control systems interact at the neural level, and in particular better insight into the nature of the neural computations underlying such interactions.

References

Aarts E, Wallace DL, Dang LC, Jagust WJ, Cools R, D’Esposito M (2014) Dopamine and the cognitive downside of a promised bonus. Psychol Sci. doi:10.1177/0956797613517240

Allman MJ, DeLeon IG, Cataldo MF, Holland PC, Johnson AW (2010) Learning processes affecting human decision making: an assessment of reinforcer-selective pavlovian-to-instrumental transfer following reinforcer devaluation. J Exp Psychol Anim Behav Process 36:402

Ariely D, Gneezy U, Loewenstein G, Mazar N (2009) Large stakes and big mistakes. Rev Econ Stud 76:451–469

Balleine BW, Dickinson A (1998) Goal-directed instrumental action: contingency and incentive learning and their cortical substrates. Neuropharmacology 37:407–419

Balleine BW, O’Doherty JP (2010) Human and rodent homologies in action control: corticostriatal determinants of goal-directed and habitual action. Neuropsychopharmacology 35:48–69

Balleine BW, Daw ND, O’Doherty JP (2008) Multiple forms of value learning and the function of dopamine. In: Glimcher PW, Camerer C, Fehr E, Poldrack RA (eds) Neuroeconomics: decision making and the brain. Elsevier, New York, pp 367–385

Bartra O, McGuire JT, Kable JW (2013) The valuation system: a coordinate-based meta-analysis of BOLD fMRI experiments examining neural correlates of subjective value. Neuroimage 76:412–427

Bray S, Rangel A, Shimojo S, Balleine B, O’Doherty JP (2008) The neural mechanisms underlying the influence of pavlovian cues on human decision making. J Neurosci 28:5861–5866

Broadhurst P (1959) The interaction of task difficulty and motivation: the Yerkes-Dodson Law revived. Acta Psychol 16:321–338

Chib VS, Rangel A, Shimojo S, O’Doherty JP (2009) Evidence for a common representation of decision values for dissimilar goods in human ventromedial prefrontal cortex. J Neurosci 29:12315–12320

Chib VS, De Martino B, Shimojo S, O’Doherty JP (2012) Neural mechanisms underlying paradoxical performance for monetary incentives are driven by loss aversion. Neuron 74:582–594

Chib VS, Shimojo S, O’Doherty JP (2014) The effects of incentive framing on performance decrements for large monetary outcomes: behavioral and neural mechanisms. J Neurosci 34:14833–14844

Corbit LH, Balleine BW (2005) Double dissociation of basolateral and central amygdala lesions on the general and outcome-specific forms of pavlovian-instrumental transfer. J Neurosci 25:962–970

Corbit LH, Balleine BW (2011) The general and outcome-specific forms of Pavlovian-instrumental transfer are differentially mediated by the nucleus accumbens core and shell. J Neurosci 31:11786–11794

Daw ND, Niv Y, Dayan P (2005) Uncertainty-based competition between prefrontal and dorsolateral striatal systems for behavioral control. Nat Neurosci 8:1704–1711

Dayan P, Niv Y, Seymour B, Daw ND (2006) The misbehavior of value and the discipline of the will. Neural Netw 19:1153–1160

de Araujo IE, Kringelbach ML, Rolls ET, McGlone F (2003a) Human cortical responses to water in the mouth, and the effects of thirst. J Neurophysiol 90:1865–1876

de Araujo IE, Rolls ET, Kringelbach ML, McGlone F, Phillips N (2003b) Taste-olfactory convergence, and the representation of the pleasantness of flavour, in the human brain. Eur J Neurosci 18:2059–2068

de Araujo IE, Rolls ET, Velazco MI, Margot C, Cayeux I (2005) Cognitive modulation of olfactory processing. Neuron 46:671–679

de Wit S, Watson P, Harsay HA, Cohen MX, van de Vijver I, Ridderinkhof KR (2012) Corticostriatal connectivity underlies individual differences in the balance between habitual and goal-directed action control. J Neurosci 32:12066–12075

Delgado MR, Gillis MM, Phelps EA (2008a) Regulating the expectation of reward via cognitive strategies. Nat Neurosci 11:880–881

Delgado MR, Nearing KI, LeDoux JE, Phelps EA (2008b) Neural circuitry underlying the regulation of conditioned fear and its relation to extinction. Neuron 59:829–838

Dickinson A (1985) Actions and habits: the development of a behavioural autonomy. Philos Trans R Soc Lond Ser B Biol Sci 308:67–78

Dickinson A, Nicholas D, Adams CD (1983) The effect of the instrumental training contingency on susceptibility to reinforcer devaluation. Q J Exp Psychol 35:35–51

FitzGerald TH, Dolan RJ, Friston KJ (2014) Model averaging, optimal inference, and habit formation. Front Hum Neurosci 8:457

Gillan CM, Robbins TW (2014) Goal-directed learning and obsessive–compulsive disorder. Philos Trans R Soc B Biol Sci 369:20130475

Gottfried JA, O’Doherty J, Dolan RJ (2002) Appetitive and aversive olfactory learning in humans studied using event-related functional magnetic resonance imaging. J Neurosci 22:10829–10837

Gottfried JA, O’Doherty J, Dolan RJ (2003) Encoding predictive reward value in human amygdala and orbitofrontal cortex. Science 301:1104–1107

Gruner P, Anticevic A, Lee D, Pittenger C (2015) Arbitration between action strategies in obsessive-compulsive disorder. Neuroscientist, 1073858414568317

Hall J, Parkinson JA, Connor TM, Dickinson A, Everitt BJ (2001) Involvement of the central nucleus of the amygdala and nucleus accumbens core in mediating Pavlovian influences on instrumental behaviour. Eur J Neurosci 13:1984–1992

Hare TA, Schultz W, Camerer CF, O’Doherty JP, Rangel A (2011) Transformation of stimulus value signals into motor commands during simple choice. Proc Natl Acad Sci 108:18120–18125

Holland PC (2004) Relations between Pavlovian-instrumental transfer and reinforcer devaluation. J Exp Psychol Anim Behav Process 30:104

Hosokawa T, Kennerley SW, Sloan J, Wallis JD (2013) Single-neuron mechanisms underlying cost-benefit analysis in frontal cortex. J Neurosci 33:17385–17397

Hunt LT, Dolan RJ, Behrens TE (2014) Hierarchical competitions subserving multi-attribute choice. Nat Neurosci 17:1613–1622

Knutson B, Fong GW, Adams CM, Varner JL, Hommer D (2001) Dissociation of reward anticipation and outcome with event-related fMRI. NeuroReport 12:3683–3687

Kringelbach ML, O’Doherty J, Rolls ET, Andrews C (2003) Activation of the human orbitofrontal cortex to a liquid food stimulus is correlated with its subjective pleasantness. Cereb Cortex 13:1064–1071

LaBar KS, Gatenby JC, Gore JC, LeDoux JE, Phelps EA (1998) Human amygdala activation during conditioned fear acquisition and extinction: a mixed-trial fMRI study. Neuron 20:937–945

Lau B, Glimcher PW (2007) Action and outcome encoding in the primate caudate nucleus. J Neurosci 27:14502–14514

LeDoux J (2003) The emotional brain, fear, and the amygdala. Cell Mol Neurobiol 23:727–738

Lee SW, Shimojo S, O’Doherty JP (2014) Neural computations underlying arbitration between model-based and model-free learning. Neuron 81:687–699

Levy DJ, Glimcher PW (2011) Comparing apples and oranges: using reward-specific and reward-general subjective value representation in the brain. J Neurosci 31:14693–14707

Liljeholm M, Tricomi E, O’Doherty JP, Balleine BW (2011) Neural correlates of instrumental contingency learning: differential effects of action-reward conjunction and disjunction. J Neurosci 31:2474–2480

Liljeholm M, Wang S, Zhang J, O’Doherty JP (2013) Neural correlates of the divergence of instrumental probability distributions. J Neurosci 33:12519–12527

Lovibond PF (1983) Facilitation of instrumental behavior by a Pavlovian appetitive conditioned stimulus. J Exp Psychol Anim Behav Process 9:225

Maia TV, Frank MJ (2011) From reinforcement learning models to psychiatric and neurological disorders. Nat Neurosci 14:154–162

McNamee D, Rangel A, O’Doherty JP (2013) Category-dependent and category-independent goal-value codes in human ventromedial prefrontal cortex. Nat Neurosci 16:479–485

Miller EM, Shankar MU, Knutson B, McClure SM (2014) Dissociating motivation from reward in human striatal activity. J Cogn Neurosci 26:1075–1084

Mobbs D, Hassabis D, Seymour B, Marchant JL, Weiskopf N, Dolan RJ, Frith CD (2009) Choking on the money: reward-based performance decrements are associated with midbrain activity. Psychol Sci 20:955–962

Montague PR, Dolan RJ, Friston KJ, Dayan P (2012) Computational psychiatry. Trends Cogn Sci 16:72–80

Morris RW, Dezfouli A, Griffiths KR, Balleine BW (2014) Action-value comparisons in the dorsolateral prefrontal cortex control choice between goal-directed actions. Nat Commun 5

O’Doherty JP (2007) Lights, camembert, action! The role of human orbitofrontal cortex in encoding stimuli, rewards, and choices. Ann N Y Acad Sci 1121:254–272

O’Doherty JP (2011) Contributions of the ventromedial prefrontal cortex to goal-directed action selection. Ann N Y Acad Sci 1239:118–129

O’Doherty J, Kringelbach ML, Rolls ET, Hornak J, Andrews C (2001) Abstract reward and punishment representations in the human orbitofrontal cortex. Nat Neurosci 4(1):95–102

O’Doherty J, Rolls ET, Francis S, Bowtell R, McGlone F, Kobal G, Renner B, Ahne G (2000) Sensory-specific satiety-related olfactory activation of the human orbitofrontal cortex. NeuroReport 11:893–897

O’Doherty J, Winston J, Critchley H, Perrett D, Burt DM, Dolan RJ (2003) Beauty in a smile: the role of medial orbitofrontal cortex in facial attractiveness. Neuropsychologia 41:147–155

Ostlund SB, Balleine BW (2007) Orbitofrontal cortex mediates outcome encoding in Pavlovian but not instrumental conditioning. J Neurosci 27:4819–4825

Parkinson JA, Olmstead MC, Burns LH, Robbins TW, Everitt BJ (1999) Dissociation in effects of lesions of the nucleus accumbens core and shell on appetitive pavlovian approach behavior and the potentiation of conditioned reinforcement and locomotor activity by d-amphetamine. J Neurosci 19:2401–2411

Pessiglione M, Schmidt L, Draganski B, Kalisch R, Lau H, Dolan RJ, Frith CD (2007) How the brain translates money into force: a neuroimaging study of subliminal motivation. Science 316:904–906

Plassmann H, O’Doherty J, Rangel A (2007) Orbitofrontal cortex encodes willingness to pay in everyday economic transactions. J Neurosci 27:9984–9988

Plassmann H, O’Doherty J, Shiv B, Rangel A (2008) Marketing actions can modulate neural representations of experienced pleasantness. Proc Natl Acad Sci U S A 105:1050–1054

Plassmann H, O’Doherty JP, Rangel A (2010) Appetitive and aversive goal values are encoded in the medial orbitofrontal cortex at the time of decision making. J Neurosci 30:10799–10808

Platt ML, Glimcher PW (1999) Neural correlates of decision variables in parietal cortex. Nature 400:233–238

Prevost C, Liljeholm M, Tyszka JM, O’Doherty JP (2012) Neural correlates of specific and general Pavlovian-to-Instrumental Transfer within human amygdalar subregions: a high-resolution fMRI study. J Neurosci 32:8383–8390

Prévost C, Pessiglione M, Météreau E, Cléry-Melin M-L, Dreher J-C (2010) Separate valuation subsystems for delay and effort decision costs. J Neurosci 30:14080–14090

Robbins TW, Everitt BJ (1999) Drug addiction: bad habits add up. Nature 398:567–570

Rolls ET, Kringelbach ML, De Araujo IE (2003) Different representations of pleasant and unpleasant odours in the human brain. Eur J Neurosci 18:695–703

Samejima K, Ueda Y, Doya K, Kimura M (2005) Representation of action-specific reward values in the striatum. Science 310:1337–1340

Schmidt L, Lebreton M, Cléry-Melin M-L, Daunizeau J, Pessiglione M (2012) Neural mechanisms underlying motivation of mental versus physical effort. PLoS Biol 10:e1001266

Small DM, Zatorre RJ, Dagher A, Evans AC, Jones-Gotman M (2001) Changes in brain activity related to eating chocolate: from pleasure to aversion. Brain 124:1720–1733

Smith DV, Hayden BY, Truong T-K, Song AW, Platt ML, Huettel SA (2010) Distinct value signals in anterior and posterior ventromedial prefrontal cortex. J Neurosci 30:2490–2495

Sohn JW, Lee D (2007) Order-dependent modulation of directional signals in the supplementary and presupplementary motor areas. J Neurosci 27:13655–13666

Staudinger MR, Erk S, Abler B, Walter H (2009) Cognitive reappraisal modulates expected value and prediction error encoding in the ventral striatum. Neuroimage 47:713–721

Talmi D, Seymour B, Dayan P, Dolan RJ (2008) Human Pavlovian-instrumental transfer. J Neurosci 28:360–368

Tricomi E, Balleine BW, O’Doherty JP (2009) A specific role for posterior dorsolateral striatum in human habit learning. Eur J Neurosci 29:2225–2232

Voon V, Derbyshire K, Rück C, Irvine M, Worbe Y, Enander J, Schreiber L, Gillan C, Fineberg N, Sahakian B (2014) Disorders of compulsivity: a common bias towards learning habits. Mol Psychiatry 20:345–352

Watson P, Wiers R, Hommel B, de Wit S (2014) Working for food you don’t desire. Cues interfere with goal-directed food-seeking. Appetite 79:139–148

Wunderlich K, Rangel A, O’Doherty JP (2009) Neural computations underlying action-based decision making in the human brain. Proc Natl Acad Sci U S A 106:17199–17204

Wunderlich K, Dayan P, Dolan RJ (2012) Mapping value based planning and extensively trained choice in the human brain. Nat Neurosci 15:786–791

Copyright information

© 2015 Springer International Publishing Switzerland

About this chapter

Cite this chapter

O’Doherty, J.P. (2015). Multiple Systems for the Motivational Control of Behavior and Associated Neural Substrates in Humans. In: Simpson, E., Balsam, P. (eds) Behavioral Neuroscience of Motivation. Current Topics in Behavioral Neurosciences, vol 27. Springer, Cham. https://doi.org/10.1007/7854_2015_386

DOI: https://doi.org/10.1007/7854_2015_386

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-26933-7

Online ISBN: 978-3-319-26935-1

eBook Packages: Biomedical and Life Sciences (R0)