Abstract

Process analytical technology (PAT), the regulatory initiative for incorporating quality in pharmaceutical manufacturing, is an area of intense research and interest. If PAT is effectively applied to bioprocesses, this can increase process understanding and control, and mitigate the risk from substandard drug products to both manufacturer and patient. To optimize the benefits of PAT, the entire PAT framework must be considered and each element of PAT must be carefully selected, including sensor and analytical technology, data analysis techniques, control strategies and algorithms, and process optimization routines. This chapter discusses the current state of PAT in the biopharmaceutical industry, including several case studies demonstrating the degree of maturity of various PAT tools.

Hierarchy of QbD components



1 Content and Introduction

The development and manufacturing of biological therapeutic products should be guided by two fundamental principles:

- Quality cannot be tested into a product; it must be built into it.
- Product and process variability should be understood and controlled.

This means that manufacturers of biologics must develop mechanisms and tools to measure the quality of their raw materials, intermediates, and products during processing, not just at the final stage of production of the drug substance or drug product. They must measure, characterize, and attempt to reduce variability in processes and materials (raw materials, intermediates, and final products).

Over the past decade, these two principles have led to the development of a series of Harmonized Tripartite Guidelines by the International Conference on Harmonization (ICH) of Technical Requirements for Registration of Pharmaceuticals for Human Use, on drug product development (ICH Q8), quality risk management (ICH Q9), quality systems (ICH Q10), and drug substance (active pharmaceutical ingredient (API)) development (ICH Q11).

These guidelines were also the foundation stone of the Quality by Design (QbD) initiative, which has significantly affected the development and manufacturing of biopharmaceuticals in the past decade. QbD is a system that encompasses new drug development and manufacture in order to better define the manufacturing processes, their control, and the analytical methodologies used to evaluate their performance.

As shown in Fig. 1, QbD is based on a hierarchy of components. The quality target product profile is the description of the quality characteristics of the product. It serves as the “goal” (i.e., begin with the end in mind). The critical quality attributes (CQAs) are the quality characteristics of the drug substance and drug product that affect the safety, efficacy, immunogenicity, and pharmacokinetic characteristics of the final drug. Specifications (including raw materials, drug substance, drug product, intermediates, and in-process controls) define analytical methodologies and their associated limits, which ensure that the CQAs are attained by the product. Critical process parameters (CPPs) are those process parameters that have an impact on product quality and must therefore be controlled within an acceptable range to ensure that product quality is met.

These QbD components define the control strategy, which is the “control system” for manufacturing.

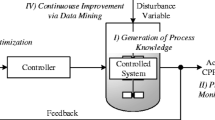

The corresponding guide, entitled “Process Analytical Technology (PAT)” was published by the U.S. Food and Drug Administration (FDA) in 2004 [1]. PAT is a methodology that is predominantly used for the in-line, on-line, and at-line measurement of process adherence and/or compliance with the process parameters and attributes as defined in the QbD. PAT can be seen as an enabling technology for QbD that enhances the probability that the manufacturing process of a drug substance and/or drug product produces material that predictably meets the quality requirements.

PAT can be defined as a system for designing, analyzing, and controlling manufacturing through timely measurements (i.e., during processing) of critical quality and performance attributes of raw and in-process materials and processes with the goal of ensuring final product quality (ICH Q8). It also encompasses any measurement of a process variable or CQA that is performed often enough and reliably enough to permit the process to be adjusted to ensure that there is a high probability that the output of the unit operation meets its CQAs.

The two guiding principles for bioprocessing (quality must be built in, and variability must be controlled) affect both development and manufacturing. Development must “design” and demonstrate these principles and manufacturing must implement them. PAT facilitates the implementation of these principles by manufacturing, providing “real-time” measurement of process and product parameters and attributes. The lack of PAT or the lack of the use of rudimentary concurrent analytical tools (such as pH, flow rate (gas and/or liquid), volume, and pressure control) usually means that control is ex post facto, relying on quality control (QC) results that are often only available days after the events have occurred.

The “real-time” analysis of the process and its performance facilitates control of the process by implementing feedback or feedforward algorithms or manual adjustments, for example, adding an acid or base, or modifying gas flow rate and/or constituent gases, to control pH. It facilitates quality by controlling process or product attributes (e.g., purity or impurities can be controlled by manipulating the process stream in downstream operations, or the concentration of raw materials, such as the carbon or nitrogen source, in upstream operations) in order to select or deselect specific attributes. Moreover, process/product variability can be measured and/or controlled, for example, variations in temperature, purity, impurities, and pH can be minimized by feedback/feedforward control systems, as previously indicated.
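The feedback idea described here can be illustrated with a minimal sketch of a deadband controller for pH. The setpoint, deadband width, and action names are hypothetical values chosen for illustration and are not taken from any cited process; a production controller would typically use a tuned PID loop and dosing limits.

```python
def ph_control_action(ph_measured, setpoint=7.0, deadband=0.05):
    """Return the corrective action for a single in-line pH reading.

    setpoint and deadband are illustrative assumptions, not process values.
    """
    error = ph_measured - setpoint
    if error > deadband:
        return "add_acid"  # pH too high: dose acid (or increase CO2 sparging)
    if error < -deadband:
        return "add_base"  # pH too low: dose base
    return "hold"          # reading is inside the deadband: no action


# Example: evaluate a short series of hypothetical readings
for reading in (7.02, 7.20, 6.80):
    print(reading, ph_control_action(reading))
```

The deadband avoids alternating acid and base additions when the reading fluctuates slightly around the setpoint.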

In addition to achieving QbD goals, such as consistently meeting CQAs, biotechnology/biologics processes should maximize operating efficiency (e.g., yield, throughput, cycle time reduction). Therefore, applications focusing on improving operating efficiency (e.g., yield) can also be included within the definition of PAT. In general, it is precisely these applications that generate a measurable return on investment (ROI), which can justify a company’s investment in PAT.

PAT has the potential to be a “disruptive” technology in biopharmaceutical manufacturing (i.e., a technology that changes manufacturing, effectively making current technology obsolete).

Finally, a current trend for biopharmaceutical processes is the more frequent use of continuous and/or completely automated operation [2, 3]. This is unattainable without appropriate PAT, as process decisions must be made in “real time” and PAT provides the sensors needed for such “real-time” control.

2 Current PAT Applications, Standards, and Strategies

For biologics processes, numerous examples of the application of PAT tools have been reported in the literature (e.g., [4]). Examples include:

-

Monitoring and controlling the testing of raw materials by spectroscopic methods such as near-infrared (NIR) and two-dimensional (2D) fluorescence spectroscopy, including multivariable data analysis

-

More rapid at-line or even in-line monitoring of fermentation processes:

-

Specific parameter monitoring (e.g., cell density measurement by dielectric spectroscopy)

-

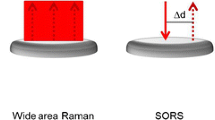

Process fingerprinting (e.g., mid-infrared (MIR), NIR, 2D fluorescence spectroscopy, Raman and other techniques, such as localized or enhanced surface response instruments)

-

Soft sensors

-

Monitoring media components such as amino acids

-

Status of off-gas analysis: techniques and applications

-

-

Closed-loop control of fermentation processes with in-line monitoring signals

-

Process supervision using process models (e.g., by multivariate statistical process control (MSPC), later integration of raw material, starting conditions, early process deviation detection (predictive/preventive mode))

-

Tools for data management and data analysis needed to deal with high data volumes and supported new data formats (e.g., spectra); ability to correlate large volumes of data from different sources in order to detect new patterns and solve problems faster

-

Monitoring cleaning-in-place (CIP) processes

Although all these examples demonstrate the applicability and value that PAT can have for biologics manufacturing, there are few examples of the implementation of PAT tools in testing for release that have developed into real-time release strategies. Likewise, no examples are known of a totally integrated “PAT” strategy or approach encompassing the entire development and manufacturing process of a biologic.

2.1 Raw Materials Screening

Raw materials used as media components in bacterial fermentations or in mammalian cell cultures have typically been a major source of process variability, and have even been reported where purely chemically defined media have been used [5]. NIR and Raman spectroscopy have been successfully implemented by many pharmaceutical companies to identify raw materials upon shipment, and handheld sensors are now used, as seen in Fig. 2.

Handheld NIR material analyzer (Thermo Fisher microPHAZIR RX, http://www.thermoscientific.com)

In principle, screening incoming raw materials using spectroscopic methods makes it possible to characterize raw materials quickly as “suitable for use” before they are used to prepare media. As the measurement is performed before the raw material enters the process, there is no risk that the sensor may breach sterility. In addition, because many of the raw materials are dry powders, NIR spectra can be obtained without the presence of a large water signal. Examples of the use of NIR spectroscopy for this purpose have been given by Kirdar et al. [6], Jose et al. [5], Lee et al. [7], Hakemeyer et al. [8], and Prajapati et al. [9]. A comparison between NIR, Raman, and other spectroscopy methods for a case study of raw material characterization can be found in Trunfio et al. [10].

2.2 PAT Tools in Bioprocesses

2.2.1 In-line Measurements

Electrochemical sensors, such as those used for pH and dissolved oxygen, have been commonly used for decades in bioprocesses. These are obviously also examples of in-line sensors and can be considered as PAT tools. As they are generally accepted and have been used for a long time, they are not discussed here.

In-line measurements in bioreactors can be especially challenging because bioreactors are unique in that they can be thought of as a conglomeration of tiny bioreactors. This means that the actual pharmaceutical product is produced within the individual cells that are suspended within the culture broth and is either retained within the cells until additional downstream processing takes place (cell lysis) or secreted into the media. This is obviously a different and considerably more complex scenario from that observed in small-molecule manufacturing, where the product is generally produced within a homogeneous solution in the chemical reactor.

In-line sensors for bioreactor monitoring may use NIR, MIR, Raman, 2D fluorescence, or dielectric spectroscopy.

2.2.2 NIR Measurements in Bioreactors

In-line optical sensors typically allow short analysis times, ranging from seconds to minutes per analysis, depending on the number of spectra and amount of data processing required. Although an analysis every 2 min is typically unnecessary for a single bioreactor, this short analytical time does make it possible to interface a single spectrometer to multiple bioreactors. In-line sensors offer the significant advantage of not having to draw a sample from the bioreactor in order to perform the analysis. This is a major advantage of these technologies as it reduces the risk of contamination and potential loss of sterility that is considered to accompany on-line measurements and their associated sampling devices. The disadvantage of these optical sensors is that culture media are generally a challenging matrix for NIR, as water absorbs NIR strongly. This necessitates the use of short path lengths for NIR, which then raises the risk that suspended solids may block the narrow optical gap of the probe. Furthermore, these probes are susceptible to the formation of superficial biofilms, which can also negatively impact the analysis.

The complex fermentation matrix gives rise to multiple overlapping absorptions in the NIR spectra, and this requires the use of chemometrics to build correlations between the reference method for analyte concentration and absorptions in the NIR spectrum. Developing these calibration models is not a trivial task, as noted by Calvalhal and Saucedo [11]. The accuracy and robustness of these models are highly dependent on spectral variation in anything present in the media, including the cell line. Robust quantitative models must include these spectral variations (e.g., changes in raw materials, cell lines, etc.) to compensate for the variation and to maintain accurate analyte predictions. However, even recognizing when this spectral variation is affecting the accuracy of the calibration model can be a challenge without running samples by a reference method. It is likely that this complexity and the amount of effort needed to construct and maintain robust quantitative models are among the major reasons that NIR has not seen broad and routine use across the biotech industry. There have been numerous articles from academic institutions describing the successful application of NIR for monitoring various analytes in fermenters [12, 13]. In spite of this, NIR does not seem to have been widely and routinely used by major biopharmaceutical companies in production bioreactors. The limited sensitivity of the technique (a relatively high limit of detection) and the high-level skills required to calibrate and maintain the models might be further reasons why NIR is not widely used in industry.

One possible reason for this apparent success of NIR in academia, but relatively poor acceptance in biopharma manufacturing, may be the lack of raw material variation seen in the limited number of fermentation runs performed by the academic institutions. This gives the impression that quantitative NIR measurement models in a fermenter are more robust and stable than is observed when these techniques are applied at an actual production facility, where variation in raw materials over an extended period of time includes spectral variability that may not have been present in the original calibration set. Calibration models must therefore be constantly evaluated and frequently updated to include this new spectral variability. Saucedo et al. [14] proposed a “hybrid” technique, whereby analytes are spiked into media not included in the original calibration set, so that an existing calibration can be extended to applications not included in the calibration set. In spite of the challenges of using NIR for bioreactor monitoring, there appear to have been several successes, including ammonia, glutamine, and glucose [15,16,17].

2.2.3 Raman

Although not as widespread as NIR, Raman probes for bioprocess monitoring are commercially available and reports of their application have been published recently [18,19,20,21,22], for example, for measuring product concentration in-line.

Berry et al. [21] describe the use of Raman for in-line glucose measurement and control, in order to keep the glucose concentration below a specific threshold and thus to reduce glycation of the target protein. This is an example of a true PAT application used to control the CQAs directly with a closed-loop system.

The challenge of Raman spectroscopy is similar to that of NIR probes. It is difficult to extract the required information consistently and reliably, and to deploy the technique in an on-line or at-line setting.

2.2.4 Dielectric Spectroscopy

Knowledge of the growth rate of viable cells is critical to understanding how a culture is proceeding: the cell density or growth rate indicates whether or not the bioreactor is performing normally. With a dependable viable cell count sensor, it should be possible to implement feedback control of nutrient feeds such as glucose and/or amino acids by combining metabolic models developed off-line with real-time data from the in-line viable cell count sensor to determine the rate at which metabolism is proceeding. Numerous technologies to sense cell density have been tried in recent decades, including NIR and fluorescence spectroscopy, which are described in other sections.
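A minimal sketch of how an in-line viable cell density reading could drive a nutrient feed is given below. It assumes a constant cell-specific glucose consumption rate obtained from off-line metabolic studies; the function name, parameter names, and numerical values are hypothetical and serve only to show the mass balance (demand = specific rate × number of viable cells; feed rate = demand / feed concentration).

```python
def glucose_feed_rate_l_per_h(vcd_cells_per_ml, volume_l,
                              qs_g_per_cell_h, feed_conc_g_per_l):
    """Feed rate (L/h) that replaces the glucose consumed by the culture.

    Assumes a constant specific consumption rate qs (g per cell per hour),
    which is a simplification; real metabolic models vary with culture phase.
    """
    if feed_conc_g_per_l <= 0:
        raise ValueError("feed concentration must be positive")
    total_cells = vcd_cells_per_ml * 1000.0 * volume_l  # cells in the vessel
    demand_g_per_h = qs_g_per_cell_h * total_cells      # glucose consumed per hour
    return demand_g_per_h / feed_conc_g_per_l


# Hypothetical example: 5e6 cells/mL in 100 L, qs = 1e-11 g/cell/h, 400 g/L feed
rate = glucose_feed_rate_l_per_h(5e6, 100.0, 1e-11, 400.0)
print(f"feed rate: {rate:.4f} L/h")
```

In practice the computed rate would be recalculated at each sensor reading and bounded by pump and volume limits.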

However, the most successful sensor to date for viable cell growth rate seems to be the dielectric spectroscopy or capacitance sensor. These sensors can differentiate viable from non-viable cells by exploiting the fact that viable cells have an intact, non-conductive cellular membrane surrounding the conductive cytoplasm. When an alternating current is applied, the viable cells act as tiny capacitors. The data obtained can be correlated with an off-line cell counting method, such as trypan blue staining and manual or semi-automated counting. A common issue with this sensor is that it correlates well with the off-line measurement in the early stages of cell growth, but with some cell lines the correlation begins to deteriorate considerably in the late growth phase [23].
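The correlation between the capacitance signal and the off-line count can be sketched as a simple least-squares line, together with a check of how far a later prediction deviates from the off-line reference. This is an illustrative assumption of a linear calibration; the function names and data are hypothetical, and it is not the area ratio algorithm discussed in the literature.

```python
def fit_line(x, y):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def relative_deviation(capacitance, offline_vcd, slope, intercept):
    """Relative difference between the predicted and off-line cell density."""
    predicted = slope * capacitance + intercept
    return abs(predicted - offline_vcd) / offline_vcd


# Hypothetical early-phase calibration pairs (capacitance, off-line count)
slope, intercept = fit_line([1.0, 2.0, 3.0, 4.0], [3.0, 5.0, 7.0, 9.0])
# A late-phase sample can then be checked against the calibration
print(relative_deviation(5.0, 10.0, slope, intercept))
```

A growing relative deviation in late-phase samples would indicate the deterioration of the correlation noted above.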

Publications by Bend Research [24] and Aber Instruments [23] describe a new approach to processing the data by using the concept of an area ratio algorithm. They claim that this predicts viable cell volume fraction better than current methods.

Several applications of dielectric spectroscopy have been described [25, 26] that include the use of the biomass probe to determine the transfer time point from inoculum and seed bioreactors to the next reactor stage. This made it possible to perform the transfer at a much more precise cell concentration than is possible with off-line analysis. Other examples included using the probe to monitor retention filters in perfusion bioreactors [27], with the signal from the dielectric sensor being used to detect filter breakthrough.

2.2.5 In-line Fluorescence Measurement

Many substances occurring in biochemical reactions are fluorescent species, such as NAD/NADH and flavins. These compounds produce strong fluorescence signals that can be used to monitor the progress of these metabolic reactions. Since the 1980s, in-line fluorescence probes monitoring NAD/NADH fluorescence for bioreactors have been used to determine rates of viable cell growth. More advanced rapid scanning 2D fluorescence instruments, coupled with chemometric data processing, have now opened the possibility of examining other biochemical reactions involving other fluorescent products such as FADH2 and FMNH2. There are hundreds of biochemical reactions with fluorescent substrates or products that can be used to monitor the progress of cellular metabolism. As such, 2D fluorescence spectroscopy would seem to be a useful tool to understand intracellular reactions that may impact CQAs. Hantelmann et al. [28] proposed 2D fluorescence as an alternative to MIR or NIR as a tool for controlling glucose feed. The fluorescence method could predict the change from oxidative to oxido-reductive metabolism. The data from the sensor were used as an input to a feedback control loop that kept the glucose feed rate in a fed-batch reactor below the value at which ethanol was produced. Although this is not specifically of interest in bioreactor monitoring, Schwab and Hesse [29] described the use of 2D fluorescence for the real-time measurement of product aggregation. This could be of importance in antibody and other biotech products if the processes are operated at high concentrations where product aggregation is favorable.

2.2.6 Model-Based Sensors

Soft sensors, model-based sensors, or what are sometimes referred to as “neural networks” are sensors that use multiple existing inputs to predict a process variable [30,31,32,33,34,35]. These systems are a sort of hybrid system in that they may use information from in-line, on-line, at-line, or off-line measurements to generate a value. These systems frequently use less than optimally calibrated sensors to generate some fairly accurate information about the state of a bioreactor. There are several good examples in which data from single sensors correlate poorly with the predicted value but correlate excellently when combined with other sensor inputs [36]. The cited study models data from many of the sensors described previously, including NIR, dielectric spectroscopy, 2D fluorescence spectroscopy, and proton transfer reaction mass spectrometry.

2.2.7 Trajectory Analysis

Most of the methods described above have the potential to allow fingerprinting of the culture by “trajectory analysis.” For example, the use of NIR for trajectory analysis has been proposed by various authors as a means of rapidly assessing whether a process is proceeding “normally,” for example, Hakemeyer et al. [16]. Sandor et al. [37] discuss the potential applications of NIR to bioreactor monitoring and include the use of NIR trajectory analysis. Trajectory analysis offers the advantage of not requiring a calibration model, which may fail or require frequent updating as raw materials vary. Information obtained this way could potentially be used to determine rapidly whether a fermentation is proceeding “normally.” If it is not, and the trajectory leaves a predefined range, there are two options. It is possible either to drill down to the actual problem and try to solve it, or to confirm that the process is too far outside the limits, cannot be saved, and should therefore be terminated. Such drill-down diagnoses rely, for example, on Q-residual contributions to identify the root cause of the problem. However, many questions about this approach remain open (e.g., confirmation of causality, signal resolution).
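The notion of a trajectory leaving a predefined range can be sketched with a deliberately simplified, univariate example: an envelope of mean ± n standard deviations is built per time point from historical batches, and a running batch is checked against it. This is an assumption made for illustration; the multivariate approaches mentioned in the text (e.g., PCA-based models with Q-residuals) are not implemented here, and all names and data are hypothetical.

```python
from statistics import mean, stdev


def build_envelope(reference_batches, n_sigma=3.0):
    """Per-time-point (low, high) limits from equal-length historical batches."""
    envelope = []
    for values in zip(*reference_batches):  # all batches at one time point
        m = mean(values)
        s = stdev(values)
        envelope.append((m - n_sigma * s, m + n_sigma * s))
    return envelope


def first_excursion(trajectory, envelope):
    """Index of the first point outside the envelope, or None if all inside."""
    for i, (value, (low, high)) in enumerate(zip(trajectory, envelope)):
        if not low <= value <= high:
            return i
    return None


# Hypothetical signal values at three time points for three reference batches
reference = [[1.0, 2.0, 3.0], [1.2, 2.2, 3.2], [0.8, 1.8, 2.8]]
limits = build_envelope(reference)
print(first_excursion([1.1, 2.1, 4.5], limits))
```

An excursion index would be the trigger for the drill-down or termination decision described above.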

A long-term objective could be that the process should be thoroughly understood and designed in such a way that all sources of variability are identified and well-controlled. This could eventually be an acceptable method of rapidly releasing product for forward processing (real-time release).

2.2.8 On-line Measurements

On-line sensors are those analysis technologies which lie outside the fermenter. They differ from at-line or off-line analyzers in that they are capable of automatically sampling and analyzing the bioreactor contents without manual intervention by the production personnel. The drawback of this type of system is that it requires a sampling interface to the fermenter that enables a sample to be drawn and delivered to the analyzer, while ensuring that the fermenter remains sterile.

2.2.9 Sampling Devices

Developing a suitable sterile sampling device has turned out to be a challenging task. Historically, the approach has been to utilize sample probe material with a pore size small enough to provide a sterile barrier. This approach was first described 30 years ago in a patent from Eli Lilly and Company for a sintered stainless steel sampling probe [38]. Several commercial systems are now available that utilize this approach of using filter material pore size to create a sterile barrier. Besides the obvious concerns about potential sterility breaches caused by these sampling systems, the use of small pore-size filters to provide a sterile barrier excludes the cells from the extracted sample. Therefore, it is normally not possible to measure the biomass or cell concentration with cell counters such as the Cedex HiRes® analyzer. Alternatively, a steam-in-place-type sampling device can be used that enables whole culture broth, including cells, to be extracted from the bioreactor for analysis. Withdrawing an intact sample enables off-line viable cell counting from the sample [39]. BaychroMAT® seems to be one of the best automated on-line sampling and analysis systems, although automated on-line sampling devices, in general, have not been widely accepted by the biopharmaceutical community.

2.2.10 On-line Liquid Chromatography

Despite the challenges described above, on-line sampling devices offer the advantage that they can be combined with technologies with superior sensitivity, resolution, and robustness compared to spectroscopic measurements, such as chromatography-based methods.

As the chromatographic column generally eliminates any matrix effect, simple, direct linear calibrations can be used that avoid the need for chemometric models to correlate an analyzer to a reference method. For example, interfacing on-line chromatography with electrochemical detection can provide a complete amino acid analysis, as well as carbohydrate analysis. Organic acids and even trace metals can also be analyzed by on-line chromatography. Furthermore, on-line HPLC analysis even has the potential to measure CQAs during production if the product is released into the media.

If the process is adequately understood, it may be possible to perform feedback control of various CPPs, such as controlled feeding of a particular amino acid or sugar to ensure that CQAs are achieved. The use of on-line chromatography in a pilot plant to study the uptake rate of key amino acids, coupled to feedback control of the amino acid feed rate in the bioreactors, was shown by Rapoport et al. [40]. On-line chromatography and high-performance liquid chromatography (HPLC) are typically not thought of as “real time,” but solid phases with smaller particle sizes are now enabling chromatographic analyses to be performed much more rapidly. For example, amino acid profiling via pre-column derivatization and HPLC analysis can be performed in as little as 10 min in some cases, with the longest time being approximately 30 min per profile, with direct injection into an HPLC with electrochemical detection. Although 30 min may not resemble “real time,” it is adequate for the time scale of a typical bioreactor and can even be fast enough for a single analyzer to be multiplexed to more than one bioreactor. A recent article described the use of a 2-dimensional HPLC assay to monitor charge variants in a monoclonal antibody (mAb) product [41]. Although the title describes the analysis system as at-line, it is actually an on-line system with automated sampling performed by a Groton Biosystems sampler. This is an excellent example of the capabilities of HPLC to monitor a CQA that can be adapted to on-line monitoring of the bioreactor as it produces the product.

2.2.11 Mass Spectroscopy

Mass spectroscopy has been utilized for several decades for off-gas analysis in fermentation [42]. Although this is technically an on-line measurement, as a sample (headspace vapor) is extracted from the bioreactor, there is no direct contact with the bioreactor media. This obviates the concerns about compatibility of materials of construction (MOC) often associated with in-line sensors, as well as issues about breaching sterile containment, as the sensor is placed outside the sterile environment. Historically, low resolution mass analyzers have been used to measure O2 and CO2 in the off-gas to calculate the oxygen uptake rate (OUR) and respiratory quotient (RQ). Within biopharmaceutical manufacturing, off-gas analysis is very well-established in microbial (Escherichia coli) fermentations, but is rarely used in mammalian cell culture. With high resolution mass spectrometers or proton transfer reaction mass spectrometry [43] it is also possible to measure other volatile metabolism products, such as organic acids, which may provide interesting information about the state of cellular metabolism. Although mass spectrometers are fairly expensive, their very short analysis time (typically of the order of tens of seconds) makes it very easy to use one multiplexed instrument to monitor 10–20 fermenters or more. Multi-port sample multiplexing valves or sample multiplexing panels of assembled components can be constructed that fit into a compact space.
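The calculation of OUR and RQ from off-gas composition can be sketched as follows. The sketch assumes dry-gas mole fractions and uses the inert gas (nitrogen) balance to correct for the difference between inlet and outlet flow; it neglects accumulation of dissolved gases. The function name, parameter names, and example values are hypothetical.

```python
def off_gas_rates(flow_in_mol_h, volume_l,
                  y_o2_in, y_co2_in, y_o2_out, y_co2_out):
    """Return (OUR, CER, RQ) from inlet/outlet mole fractions.

    OUR and CER are in mol per litre of culture per hour; RQ is dimensionless.
    Assumes inert gas is neither consumed nor produced (inert balance).
    """
    # Ratio of outlet to inlet molar flow, from the inert gas balance
    inert_ratio = (1.0 - y_o2_in - y_co2_in) / (1.0 - y_o2_out - y_co2_out)
    specific_flow = flow_in_mol_h / volume_l
    our = specific_flow * (y_o2_in - y_o2_out * inert_ratio)
    cer = specific_flow * (y_co2_out * inert_ratio - y_co2_in)
    rq = cer / our if our > 0 else None  # RQ undefined without O2 uptake
    return our, cer, rq


# Hypothetical example: 100 mol/h of gas through a 1000 L culture
our, cer, rq = off_gas_rates(100.0, 1000.0, 0.21, 0.0, 0.19, 0.02)
print(our, cer, rq)
```

Because the calculation needs only gas-phase measurements, it can be repeated for each vessel as the multiplexed analyzer cycles through its sample ports.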

2.3 Closed Loop Control of Fermentation Processes

Biopharmaceutical production processes that rely on living organisms as “producers” of an intended molecule are often chaotic in nature. Initial conditions cannot be exactly reproduced, and minor differences in starting conditions, such as make-up and/or inoculum strain history, can have a significant impact on the performance of the culture. Furthermore, once a process has been “set,” manufacturing is effectively “along for the ride,” with minimal ability to control or influence performance of the culture. There are many reasons for these “traits” (e.g., chaotic determinants may be working cell bank (WCB) vials and their storage conditions, batches of media components, etc., and minimal control capability, e.g., dO2, pH, temperature, pCO2, agitation rate, and nutrients by bolus or continuous feed). Some countermeasures have been developed (e.g., randomization of WCB vials in storage and utilization in cultures, large batches of media to feed several cultures, etc.). However, the development of the culture process and conditions, particularly utilizing QbD methodologies, should provide the knowledge to control these processes more effectively.

If appropriate PAT is implemented in the culture processes on the basis of the knowledge gained during development, it should provide the opportunity to address these issues by creating closed control loops that effectively stabilize the processes.

Development activities, particularly those utilizing QbD principles, should provide a thorough list of CQAs for the product. The CQAs for N- or C-terminal modifications of structure, amino acid sequence, and product-related impurities – including different post-translational modifications – are often directly impacted by culture conditions. Once these CQAs have been identified, development studies should determine the culture conditions that impact each of these CQAs. This knowledge provides information about the PAT tools that need to be identified and used to control the culture process.

Studies conducted with E. coli fermentations more than 20 years ago led to the development of fed-batch cultures, where cell number, RQ, and glucose concentrations (in the fermenter) were used to optimize the fermentation for titer [44]. This system, although rudimentary by today’s standards, was a breakthrough for fermentation at the time.

Another recent example from Zupke et al. [45] describes the use of predictive modeling control of the glycosylation pattern, based on the analysis of cell culture media components. The level of high mannose species in a therapeutic antibody could be influenced by raising or lowering the level of mannose in the cell culture media. Moreover, the authors generated a model of predictive control, which allowed the running process to be adapted to meet the desired product quality.

Various published case studies [46,47,48,49,50,51,52,53] demonstrate that PAT can be used for the real-time control of culture processes with closed loop controllers. Nevertheless, other factors seem to have inhibited the implementation of PAT in bioprocesses. These apparently include concern about the complexity of PAT in the manufacturing environment, concerns about development resources to appropriately define processes and analyses to support these processes, and a fundamental lack of understanding of the potential power in PAT systems and real-time process management.

2.4 PAT Applications in Purification Processes

PAT has been used, as previously indicated, in downstream processes to control purity, maximize yield, and reduce cycle time with significant unit cost benefits. Compared to applications in cell culture, implementation of PAT for use in downstream processes, particularly chromatography, has turned out to be challenging. This is largely because of the rather long time to result of applied analytical methods compared to the short time available for decisions impacting the process. In many cases, the time needed for analysis exceeds the time window for process decisions, so that it is difficult to implement PAT tools.

It is worth highlighting the fact that in-line UV and conductivity sensors, that is, true PAT tools, are routinely used in all chromatography and ultrafiltration/diafiltration operations today. They allow process monitoring, for example, control of chromatography gradients, endpoint analysis of concentration steps, and, most importantly, setting specific pooling criteria for defined product quality.

For over two decades, HPLC has been used as an additional application to assess process performance continuously. Particularly in cases where a process decision based on UV is not optimal, for example, pooling during a high-resolution separation, HPLC makes it possible to differentiate between the product of interest and other species. On-line monitoring of these product- and process-related impurities also accounts for differences in load material and thus can ensure consistent product pool quality.

On-line reversed-phase HPLC was used to measure aggregates in the purification of recombinant human insulin-like growth factor-I (IGF). The assay allowed real-time control of purified IGF collection [54]. In a similar set-up, antibody loading onto protein A affinity chromatography was reliably controlled through an on-line chromatographic assay. This allowed real-time measurement of antibody breakthrough during the load phase, thus ensuring constant yield despite differences in titer and protein A capacity [55].

Rathore et al. [56] developed an on-line size exclusion chromatography technique that allowed real-time decision-making on chromatographic pooling. The method allowed consistent product pool purity, despite large variations in the initial feed purity. Although initially performed only on a small scale, a similar setup has successfully been implemented on a large-scale pilot plant. Pooling criteria were defined by on-line HPLC in this large-scale setting. Pool purity levels achieved with the PAT setup were comparable to product quality achieved with pooling based on fractionation followed by off-line analysis. These results show that on-line HPLC analytics and implementation in a large-scale purification process is both feasible and beneficial in order to achieve consistent product quality.
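The pooling decision described above can be illustrated with a minimal sketch. It assumes that an on-line assay delivers a purity value for each successive portion of eluate and that pooling starts and stops at fixed purity thresholds. The function name `pooling_window`, the thresholds, and the readings are hypothetical and are not taken from the cited studies.

```python
def pooling_window(purities, start_threshold=0.95, stop_threshold=0.95):
    """Return (start, stop) indices of the readings to pool, or None.

    Pooling starts at the first reading at or above start_threshold and
    stops just before the first later reading below stop_threshold.
    The returned interval is half-open: readings start..stop-1 are pooled.
    """
    start = None
    for i, purity in enumerate(purities):
        if start is None:
            if purity >= start_threshold:
                start = i
        elif purity < stop_threshold:
            return start, i
    if start is None:
        return None
    return start, len(purities)


# Hypothetical on-line purity readings across an elution peak
readings = [0.62, 0.88, 0.96, 0.99, 0.98, 0.97, 0.91, 0.70]
window = pooling_window(readings)
print(window)
```

Because the decision is driven by measured purity rather than by a fixed volume, the same rule adapts to feed material of varying purity, which is the benefit reported for the on-line approach.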

Antibodies are the most common biological therapeutics. Non-antibody biologicals, for example, enzymes, often pose greater challenges to downstream processes, particularly when preparing these molecules for further analytical characterization. Brower et al. [57] recently proposed an on-line HPLC method based on single-step immunoaffinity purification that could respond to these problems in sample preparation and be used as a PAT tool in these processes.

A combination of HPLC, differential refractometry, and multi-angle laser light scattering (HISEC-RI-MALLS) was used to characterize a therapeutic human immunodeficiency virus envelope protein [58]. The combination of these methods allowed the investigators to obtain the molar mass of macromolecules, independent of their shape or hydrodynamic radius, and is thus an ideal tool to define oligomerization states of these molecules. The method proved to be comparable to orthogonal methods, such as native polyacrylamide gel electrophoresis and ultracentrifugation analysis. Although the latter are time-consuming and thus inadequate for on-line monitoring, the HISEC-RI-MALLS analysis is a suitable method for manufacturing control.

In addition to monitoring chromatography unit operations, HPLC can also be used to provide guidance for the development of filtration steps such as microfiltration. Microfiltration processes have more recently been employed as downstream purification steps in tandem with selective precipitation of either product or impurities. Large-scale implementation of these microfiltration purification processes is particularly challenging because of the deposition of insoluble material on the membrane surface or inside membrane pores. To circumvent the scale-up problems, Watson et al. [59] implemented an ultra performance liquid chromatography-based method to monitor impurities and combined these at-line results with a mass balance-based model of ideal filtration performance. The results were used to define large-scale operational changes and increased step yield by >20%. This example also shows how PAT tools can be used successfully during process development to guide transfer into large-scale manufacturing processes.

In addition to these on-line and at-line experimental tools, multivariate data analysis (MVDA) has been applied to analyze downstream processes, particularly to ensure consistent column packing. Principal component analysis (PCA) has been shown to be a suitable tool to assess process performance across a column lifecycle [60]. Signs of column underperformance, including backpressure buildup and inefficient deoxyribonucleic acid clearance, could be predicted using chemometric analysis.

Another approach to analyze column integrity is transition analysis of chromatographic profiles. In general, these studies use signals from step transitions between buffers of different conductivities to describe the same dispersion parameters as the traditional pulse injection method. Bork et al. [61] showed that it is feasible to monitor large-scale manufacturing processes by this method and to establish control limits based on historical data to prevent issues during manufacturing (see Sect. 3.3).

2.5 Monitoring CIP Processes

CIP processes may be only secondary processes in the biologics manufacturing industry, but they are especially well suited to the implementation of PAT tools.

There is a lower risk from a QA/QC perspective than for other potential PAT applications, as the biopharmaceutical product is not directly involved. In addition, the CIP solutions are monitored downstream of the actual production tank, so the contamination risk that arises when a sensor or sampling device is in contact with product during a production run, as is the case with the other examples listed above, does not apply.

CIP monitoring following a PAT approach involves using on-line or in-line analytical technology to determine when the end point of the CIP cycle has been reached by actual measurement of the removal of a potential process contaminant rather than by using a fixed time or volume, on the assumption that this represents removal of the process contaminant. The on-line or in-line technology could be used to monitor the rinse water for the presence of contaminants, as well as residual cleaning agents. When these contaminants are measured below the target level, the CIP cycle is stopped automatically and the tank can be returned to service. The key quantifiable benefits of these approaches are:

-

Reduction in the use of rinse water and the costs to generate that water

-

Reduction in overall CIP cycle time, allowing the tank to be returned to service sooner and thus increasing production throughput
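The endpoint logic described above can be sketched as follows. This is only an illustration under stated assumptions: the rinse is monitored by TOC and conductivity, the cycle is stopped once both signals have stayed below their targets for a number of consecutive readings, and the limits, units, and the function name `cip_endpoint` are hypothetical.

```python
def cip_endpoint(toc_ppb, conductivity_us_cm,
                 toc_limit=500.0, cond_limit=5.0, n_consecutive=3):
    """Return the index of the reading at which the rinse can be stopped.

    The endpoint is reached when TOC and conductivity have both been below
    their limits for n_consecutive readings in a row. Returns None if the
    endpoint is never reached. Limits are illustrative assumptions.
    """
    run = 0
    for i, (toc, cond) in enumerate(zip(toc_ppb, conductivity_us_cm)):
        if toc < toc_limit and cond < cond_limit:
            run += 1
            if run == n_consecutive:
                return i
        else:
            run = 0  # a single excursion restarts the count
    return None


# Hypothetical rinse-water readings
toc = [4000, 1800, 700, 450, 300, 520, 280, 200, 150]
cond = [120, 40, 9, 4, 3, 3, 2, 2, 1]
stop_index = cip_endpoint(toc, cond)
print(stop_index)
```

Requiring several consecutive readings below target is a design choice that guards against stopping on a single noisy value; a fixed-time rinse would instead run to the end regardless of the measured signal.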

CIP monitoring is a PAT project that has excited fairly widespread interest in the biopharmaceutical industry for the reasons mentioned above. The gold standard for determining that a piece of equipment has been thoroughly cleaned is the swab test, followed by off-line HPLC analysis. Although this is a very sensitive method to determine cleanliness, it is not suitable for rapidly and/or automatically determining the CIP cycle endpoint because of the delay in the off-line HPLC analysis. To automatically determine the CIP endpoint from contaminant analysis, one needs to use a measurement technology that can be applied on-line or in-line. On-line/in-line analysis of the CIP solution involves installation of an on-line or in-line sensor downstream of the process equipment that is being cleaned. Depending on whether the equipment is cleaned by a fixed or a portable CIP system, the sensor can be installed in the drain line of the process equipment or in the drain line of the portable CIP system. Technologies that have been successfully applied in this way include:

-

On-line total organic carbon (TOC) analysis [62]

-

In-line conductivity [62]

-

Light-induced fluorescence [63]

-

In-line UV spectroscopy [64]

2.6 Data Management: Availability for Monitoring, Control, and Analysis

Data management, that is, data acquisition, data analysis, data storage, data retrieval, and data correlation, is a significant issue, particularly in the highly regulated pharmaceutical industry, but also in process development.

Monitoring and controlling processes and the analysis of historical data require the provision of meaningful data from bioprocesses, in appropriate formats and with all necessary metadata. Data structures from fermentation processes are complex and often need harmonization prior to use. Non-contextualized and non-aligned numerical values are worthless. To this end, it is important to provide all metadata necessary to align the different data types (e.g., in-line and at-line data) and formats. It may be necessary to prioritize data if more than one analysis of the same type is available per sample. The interpolation of data to align in-line data with at-line or off-line data can employ various algorithms.
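As one possible illustration of such an alignment, the following sketch linearly interpolates an in-line signal at the time stamps of at-line samples. Linear interpolation is only one of the algorithms that could be used, and all variable names and values here are hypothetical.

```python
from bisect import bisect_right


def interpolate(t_query, t_known, y_known):
    """Linearly interpolate y at t_query; t_known must be sorted ascending.

    Queries outside the known range return the nearest end value.
    """
    if t_query <= t_known[0]:
        return y_known[0]
    if t_query >= t_known[-1]:
        return y_known[-1]
    j = bisect_right(t_known, t_query)
    t0, t1 = t_known[j - 1], t_known[j]
    y0, y1 = y_known[j - 1], y_known[j]
    return y0 + (y1 - y0) * (t_query - t0) / (t1 - t0)


# Hypothetical in-line pH trace (regular grid) and at-line glucose samples
inline_time_h = [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0]
inline_ph = [7.00, 6.98, 6.95, 6.93, 6.90, 6.88, 6.85]
atline_time_h = [0.25, 1.75, 2.9]
atline_glucose_g_l = [5.0, 4.2, 3.6]

# One aligned record per at-line sample: (time, interpolated pH, glucose)
aligned = [
    (t, interpolate(t, inline_time_h, inline_ph), g)
    for t, g in zip(atline_time_h, atline_glucose_g_l)
]
for record in aligned:
    print(record)
```

The time stamps are the metadata that make the alignment possible; without them the two series cannot be related to each other.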

Another challenge is the integration of additional process information and event logs, recorded within different components (e.g., in an electronic lab notebook by the supervisor, in the historian, sample management by an operator, or by the system itself) along the course of the process. This information is often needed to interpret data correctly.

The integration of data formats such as spectra, or information derived from them, with classical in-line data (e.g., pH, dO) and at-/off-line data (e.g., glucose concentration in a sample from the biosuspension) is still a challenge, especially in a regulated setting where all components have to be fully validated. This is particularly true if the data handling is to be automated in such a way that the data can also be used for automatic control of the bioprocess.

The performance and availability of the systems for data management have to be high, which in turn increases the operating cost.

Many commercial systems are available for data management (e.g., PI and Discoverant), but the industry seems to suffer from the DRIP issue (“data rich – information poor”). The sheer volume of data that can be collected can be daunting. One example that illustrates this is the on-line pH data from a bioreactor: With a sampling rate of fractions of seconds, and a culture period of weeks, this can result in millions of data points per batch. However, in many cases the vast majority of the data collected is not particularly useful; for example, in the pH example, approximately >200 points would provide useful information when the pH was shifted or the process went out of control because of equipment failure.
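The pH example can be made concrete with a simple deadband filter, which stores a new point only when the signal has moved by more than a set amount since the last stored point. This is a minimal sketch of one generic compression idea; it is not the algorithm of any of the commercial systems named above, and the deadband value and synthetic trace are assumptions.

```python
def deadband_compress(times, values, deadband=0.05):
    """Keep a point only if it differs from the last kept value by more
    than the deadband. The first and last points are always retained."""
    kept = [(times[0], values[0])]
    for t, v in zip(times[1:], values[1:]):
        if abs(v - kept[-1][1]) > deadband:
            kept.append((t, v))
    if kept[-1][0] != times[-1]:
        kept.append((times[-1], values[-1]))
    return kept


# Synthetic pH trace: constant at 7.00, then a shift to 6.80 at sample 600
times = list(range(1000))
ph = [7.00 if i < 600 else 6.80 for i in range(1000)]

compressed = deadband_compress(times, ph)
print(len(ph), "->", len(compressed), "points:", compressed)
```

In this synthetic case only the start, the pH shift, and the end are stored, which mirrors the observation that few points carry the useful information.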

Application of the above-mentioned PAT tools generates huge amounts of data. Data management and correlation require the services/expertise of trained statisticians and/or chemometricians. In the final analysis, the quality of process supervision depends on the quality of the data management.

Data management and analysis tools commonly used in the biotechnology industry include, but are not limited to:

-

Filtration

-

Compression

-

Statistical process control

-

Multivariate statistical process control

-

Time-sequence analysis

-

PCA

Data compression consolidates data based on a predetermined algorithm. Statistical process control (SPC) is a univariate analysis of process parameters and attributes. Process parameters and specifications should typically be monitored using SPC (this is becoming a regulatory expectation and industry standard practice). Multivariate statistical process control (MSPC) consolidates a number of parameters and/or attributes into a single control chart. The calculation of T² for means and T² for dispersions should be established by a statistician/chemometrician, in conjunction with process experts. PCA can be thought of as revealing the internal structure of the data in a way that best explains the variance in the data. A statistician/chemometrician should also be consulted when establishing PCA data monitoring.
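To indicate how several parameters can be condensed into one charted value, the following sketch computes a Hotelling-type T² statistic for the means of two variables. It is restricted to two variables so that the covariance matrix can be inverted by hand; the choice of pH and temperature, the reference data, and the function name are assumptions, and no control limit is derived here.

```python
def hotelling_t2(reference, x):
    """T² distance of observation x from the mean of the reference data.

    reference: list of (v1, v2) pairs from historical in-control operation.
    x: (v1, v2) pair to be assessed.
    Uses the sample covariance matrix S and returns d^T S^-1 d.
    """
    n = len(reference)
    m1 = sum(r[0] for r in reference) / n
    m2 = sum(r[1] for r in reference) / n
    s11 = sum((r[0] - m1) ** 2 for r in reference) / (n - 1)
    s22 = sum((r[1] - m2) ** 2 for r in reference) / (n - 1)
    s12 = sum((r[0] - m1) * (r[1] - m2) for r in reference) / (n - 1)
    det = s11 * s22 - s12 ** 2
    d1, d2 = x[0] - m1, x[1] - m2
    # Closed-form inverse of the 2x2 covariance matrix applied to d
    return (s22 * d1 * d1 - 2.0 * s12 * d1 * d2 + s11 * d2 * d2) / det


# Hypothetical reference data: (pH, temperature in deg C), positively correlated
reference = [(7.00, 37.00), (7.02, 37.10), (6.98, 36.90),
             (7.01, 36.95), (6.99, 37.05)]

t2_typical = hotelling_t2(reference, (7.02, 37.10))   # follows the correlation
t2_unusual = hotelling_t2(reference, (7.03, 36.85))   # breaks the correlation
print(t2_typical, t2_unusual)
```

The second observation is not extreme in either variable on its own, but it contradicts the historical correlation between them and therefore yields a much larger T². This is the kind of deviation that univariate SPC charts can miss.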

If mathematical models are employed in the scope of process control, operators with an appropriate qualification are required. The use of models within a regulated bioprocessing environment is still not at all common and one can expect much discussion, at least with QA, until approval of such a strategy.

3 Case Studies

3.1 On-line Estimation of Biomass Concentration

For state estimation, one usually employs static estimators [65]. Current classical engineering correlations are being replaced by advanced estimation techniques. Artificial neural networks (ANN) have primarily been proposed for this purpose [34]. When they are trained on the cumulative signals of the OUR and the carbon dioxide production rate (CPR) as well as on the total base consumption, they lead to excellent estimates of biomass. The reason for cumulating the original signals is to improve the signal-to-noise ratio and thus increase the information content about the process. In addition, as the biomass and its metabolic products accumulate during the cultivation, these masses are better correlated with the cumulative signals of OUR and CPR.
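The cumulative signals mentioned above can be obtained by numerical integration of the rate signals over time. The following minimal sketch uses the trapezoidal rule; the function name, units, and values are illustrative assumptions.

```python
def cumulative_integral(times_h, rates):
    """Cumulative trapezoidal integral of a rate signal over time.

    Returns a list of the same length as the input, starting at 0.0.
    """
    total = 0.0
    cumulative = [0.0]
    for i in range(1, len(times_h)):
        dt = times_h[i] - times_h[i - 1]
        total += 0.5 * (rates[i] + rates[i - 1]) * dt
        cumulative.append(total)
    return cumulative


# Hypothetical OUR readings in mmol/(L h) at hourly intervals
times_h = [0, 1, 2, 3]
our = [2, 4, 6, 8]
cumulative_our = cumulative_integral(times_h, our)  # mmol/L taken up so far
print(cumulative_our)
```

Integration averages out random fluctuations in the rate signal, which is why the cumulative values are less noisy inputs for an estimator than the raw rates.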

Examples from E. coli cultures showed that the data from all cultures in a given process can be described by a single ANN [32], with rather small estimation errors in biomass concentration of about 0.5 g/kg, or only 1%. An important advantage of these static estimators is that they can be set up immediately after a disturbance in the system if their input signals are still available. This property is not guaranteed with the dynamic estimators discussed later.

The application of ANNs for biomass estimation is not restricted to microbial systems. As shown in Fig. 3, they deliver reliable values in large-scale industrial cell cultures for a recombinant therapeutic antibody. Thus, such ANNs are not restricted to laboratory-scale experiments. Although the data depicted in Fig. 3 had to be normalized in time and packed cell volume (PCV) for proprietary reasons, it is becoming clear that performance can be estimated well with this technique.

ANN-based estimation of PCV signals in five independent batches of a recombinant mAb-cell culture production process based on on-line signals of the aeration rate of oxygen, total gas supply, base consumption, and culture volume [66]

Recent developments, particularly in the machine learning community, have provided some further estimator developments that compete with ANNs. The key expression is “kernel techniques.” These allow us to estimate biomass, for instance, by means of a linear combination of nonlinear functions, referred to as kernel functions [67]. An important example of such a kernel function is the radial basis function, that is, a Gaussian bell. These kernels are data centered, that is, centered at the available measurement points. A key advantage of these representations is their sparseness, meaning that well-performing mappings can be obtained with only a few basis functions. In this way, irrelevant terms of the linear combination are discarded. Two important variants of the kernel techniques are the “support vector regression” (SVR) [68] and the “relevance vector regression” (RVR) [69]. SVR determines the regression parameters in a way similar to conventional least-squares techniques but with different objective functions, whereas RVR uses Bayesian statistical approaches for that purpose [70]. The latter has the advantage that it automatically performs some model complexity control by removing all irrelevant terms in the sense of Ockham’s razor and thus avoids over-fitting, a problem appearing with most other estimation techniques [71]. The most significant benefit of both techniques is that they result in a globally optimal solution [72].
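The basic form of such an estimator, a linear combination of Gaussian kernels centered at the measurement points, can be sketched in a few lines. Note that the sketch below uses plain kernel ridge regression, not SVR or RVR: it keeps one kernel per data point and therefore does not show the sparseness discussed above. The kernel width, the ridge term, and the data are hypothetical.

```python
import math


def rbf(x, centre, width):
    """Gaussian bell centred at a measurement point."""
    return math.exp(-((x - centre) ** 2) / (2.0 * width ** 2))


def solve(a, b):
    """Solve a w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= factor * m[col][k]
    w = [0.0] * n
    for i in reversed(range(n)):
        s = sum(m[i][k] * w[k] for k in range(i + 1, n))
        w[i] = (m[i][n] - s) / m[i][i]
    return w


def fit_kernel_model(xs, ys, width=1.0, ridge=1e-6):
    """Weights of the kernel expansion: solve (K + ridge * I) w = y."""
    k = [[rbf(xi, xj, width) + (ridge if i == j else 0.0)
          for j, xj in enumerate(xs)]
         for i, xi in enumerate(xs)]
    return solve(k, ys)


def predict(x, xs, weights, width=1.0):
    """Estimate as a weighted sum of kernels centred at the training points."""
    return sum(w * rbf(x, c, width) for w, c in zip(weights, xs))


# Hypothetical training data: cumulative signal (input) vs. biomass (output)
xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
ys = [0.5, 0.9, 1.6, 2.8, 4.5, 6.9]
weights = fit_kernel_model(xs, ys)
print([round(predict(x, xs, weights), 3) for x in xs])
```

Because the weights follow from a linear system, repeating the calculation on the same data gives the same coefficients. SVR and RVR go further by driving most of the weights to zero.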

Figure 4 shows a comparison of the estimates derived from the three static estimators mentioned after training on the same data set. All three techniques give essentially the same estimates across the entire fermentation. This is what we expect, but the important point to note is that the kernel methods need a significantly smaller number of coefficients and, as is important to validation procedures, the same set of coefficients is yielded when the calculation is repeated. It should also be noted that all three estimators depict the same deviations from the data in the upper left corner for culture times above 9 h. This suggests systematic errors in the measurement data or in process behavior and not in the estimation techniques. These alternatives to ANNs should appear more often in biotechnology literature in the near future, as they significantly simplify model complexity control (or over-fitting) and model design issues [74].

3.2 Dielectric Spectroscopy as Basis for Feed Rate Control in Mammalian Cell Culture Processes

Viable cell density (VCD) is an important variable in mammalian cell fermentation that is routinely used for monitoring and manual control. Dielectric spectroscopy can be employed to predict VCD in mammalian cell fermentation. This can be implemented by two different strategies:

-

Permittivity difference at two distinct frequencies to be correlated with VCD

-

Integration of all spectral information (permittivity values over the entire frequency band) and prediction of VCD by using a multivariate calibration model (to be developed in advance)
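The first strategy amounts to a univariate calibration, which can be sketched as an ordinary least-squares line relating the permittivity difference to reference VCD values. The calibration data and units below are hypothetical; the second strategy would replace the single input by the full spectrum and the line by a multivariate calibration model, which is not shown here.

```python
def fit_line(x, y):
    """Ordinary least-squares fit y = slope * x + intercept."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical calibration set: permittivity difference between two
# frequencies (pF/cm) vs. reference VCD (1e6 cells/mL)
delta_permittivity = [1.0, 2.0, 3.0, 4.0]
reference_vcd = [2.1, 3.9, 6.1, 7.9]

slope, intercept = fit_line(delta_permittivity, reference_vcd)
predicted_vcd = slope * 2.5 + intercept  # prediction for a new reading
print(slope, intercept, predicted_vcd)
```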

Better results in terms of noise and prediction power in later process phases can be obtained by employing the second strategy. Processes that need tight monitoring and control of process variables (e.g., nutrient concentration) can benefit from dielectric spectroscopy in two ways:

-

Much higher resolution (down to 0.5/min)

-

Diverse options for process control based on the predicted VCD (can be integrated into classical control approaches)

One example of an application is the prediction of VCD by dielectric spectroscopy according to the second strategy used as input for an algorithm that calculates the feeding rate, in order to control the nutrient concentration level. This algorithm also integrates other in-line variables and the resulting signal is transferred to a controller driving a conventional bioreactor feed pump. This control concept assures that:

-

The nutrient concentration (in biosuspension) is very low so as to provide high product quality

-

The feeding rate is high enough to support optimal cell growth and productivity
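The algorithm used in this application is not given in detail. As a rough illustration of how a predicted VCD can be turned into a feed rate, the following sketch uses a textbook fed-batch substrate balance: at a constant nutrient concentration, the feed must supply what the cells consume, F = q_s · X · V / (S_feed − S_set). The specific consumption rate q_s and all numerical values are assumptions, and the other in-line variables mentioned above are not included.

```python
def feed_rate_l_per_h(vcd_cells_per_l, volume_l, q_s_g_per_cell_h,
                      feed_conc_g_l, setpoint_g_l):
    """Feed rate that holds the nutrient concentration at its setpoint.

    Derived from the substrate balance of a fed-batch culture with constant
    nutrient concentration: F = q_s * X * V / (S_feed - S_set).
    """
    if feed_conc_g_l <= setpoint_g_l:
        raise ValueError("feed concentration must exceed the setpoint")
    consumption_g_per_h = q_s_g_per_cell_h * vcd_cells_per_l * volume_l
    return consumption_g_per_h / (feed_conc_g_l - setpoint_g_l)


# Hypothetical values: 5e6 cells/mL in a 1-L bioreactor
rate = feed_rate_l_per_h(
    vcd_cells_per_l=5e9,        # predicted VCD from dielectric spectroscopy
    volume_l=1.0,
    q_s_g_per_cell_h=2e-11,     # assumed specific nutrient consumption rate
    feed_conc_g_l=400.0,
    setpoint_g_l=0.5,
)
print(rate)  # L/h, to be passed to the feed pump controller
```

Because the VCD prediction is available at a much higher frequency than at-line counts, the feed rate can follow cell growth closely, which is what keeps the nutrient level both low and sufficient.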

The benefits of such a control strategy are:

-

Lower process variability

-

Less sampling of biosuspension and less at-line analytics necessary (because no manual control is based on this)

-

Relieving the operators’ workload and thus increasing throughput (more processes per time interval)

In Fig. 5, the VCD during the process of mammalian cell fermentation for the production of a mAb in a 1-L bioreactor is compared to VCD analyzed by Cedex (Custom Biotech, Roche Diagnostics GmbH, Germany). The multivariate prediction model uses the entire spectral information of the dielectric spectroscope. The predictive power of this method is obvious. Even in later process phases, where prediction is sometimes not of high quality when based on only two frequencies, there is a good correlation with the off-line analyzer. However, an offset is observable within this process phase. After taking into account the measuring tolerances of an at-line method (and the manual steps within), the quality of the predicted VCD is still good enough to be used for process control.

VCD during the process of mammalian cell fermentation for the production of a mAb in a 1-L bioreactor. Symbols (VCD at-line): VCD analyzed by Cedex (Custom Biotech, Roche Diagnostics GmbH, Germany). Lines (VCD predicted): prediction of VCD based on dielectric spectroscopy (evo456XL, Hamilton, Switzerland)

Figure 6 shows the effect of this type of process control on the nutrient concentration to be controlled in comparison to a manual control setting, where the VCD of the at-line analytical device is used as input for the algorithm calculating the feed rate. It can clearly be seen that the automated control keeps the glucose concentration at a low level. It is important to mention that product quality was within the acceptance range for the mAb produced, in comparison to the manually controlled fermentation runs.

Nutrient concentration during the process of mammalian cell fermentation for the production of a mAb in a 1-L bioreactor. Open symbols (controlled): nutrient concentration employing feed rate control based on dielectric spectroscopy. Filled circles (non-controlled): nutrient concentration with manual control of feed rate based on at-line determination of VCD by Cedex (Custom Biotech, Roche Diagnostics GmbH, Germany)

Effectively, no operator activity is necessary for the controlled runs based on in-line dielectric spectroscopy. The samples have only been drawn and analyzed to show that the approach works as expected.

This is only one example of how to apply dielectric spectroscopy as input for fermentation control. As this technique can be integrated in several control concepts, there are more options for its use.

3.3 On-line Integrity Monitoring of Chromatography Columns

One factor that can have a significant effect on chromatographic performance is the quality of the column packing [75]. The current standard procedure for testing the quality of a packed bed liquid chromatography column is to use a non-absorbed tracer to perform a pulse-injection experiment. The injected tracer solution is assumed to be a Dirac pulse. The pulse exits the column as a peak because of axial dispersion. Plate number, N, describes the degree of the dispersion, which is influenced by the packing quality of the column bed.

A related term, “height equivalent to a theoretical plate (HETP),” provides a measure of peak broadening in relation to the distance the tracer has traveled in the chromatography column. The mathematical definitions of N and HETP are given by the following equations:

$$N = \frac{V_r^2}{\sigma^2}, \qquad \mathrm{HETP} = \frac{L}{N}$$

where $V_r$ is the retention volume, which is defined as the volume that has passed through the column, from the time when half the tracer is applied to the time when half the tracer has exited the column. In other words, $V_r$ is the mean exit volume of the injected tracer, $\sigma^2$ the variance of the exit volume distribution, and $L$ the column length. On the basis of the normal density function, the width of a curve at half peak height, $W_h$, is equal to $2\sigma\sqrt{2\ln 2}$. Because the peak generated by the tracer as it exits the column is assumed to follow a Gaussian distribution, $N$ is usually calculated with the simplified formula shown in Fig. 7.
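The simplified formula follows directly from the half-height width of a Gaussian peak. Assuming the usual definition of the plate number as the squared retention volume divided by the variance, the steps are:

$$W_h = 2\sigma\sqrt{2\ln 2} \;\;\Rightarrow\;\; \sigma^2 = \frac{W_h^2}{8\ln 2}$$

$$N = \frac{V_r^2}{\sigma^2} = 8\ln 2\left(\frac{V_r}{W_h}\right)^2 \approx 5.54\left(\frac{V_r}{W_h}\right)^2$$

This is the form commonly quoted for pulse-injection tests and is presumably the one shown in Fig. 7. It is only as accurate as the Gaussian assumption; for tailing or fronting peaks, the half-height width no longer determines the variance.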

Plate calculation via the traditional pulse injection method [61]

In recent years, efforts have been made to use process chromatography data directly to determine column efficiency in order to achieve real-time monitoring [61, 76, 77]. The common approach taken in these studies is to utilize information from step transitions between buffers of different conductivities to describe the same dispersion parameters as the traditional pulse injection method. One method is to transform a breakthrough curve or a washout curve into a peak by taking the first derivative [77]. The dispersion parameters are then derived from peak position and shape. To avoid the inaccuracy in the calculation caused by assuming a normal distribution, algebraic functions other than the normal probability density function were evaluated, and a function that can describe a large number of step transitions has been identified. However, arbitrarily assigning a predetermined function to represent unknown distributions has disadvantages.

Depending on the column packing quality and the running conditions of the chromatography, there are transitions that differ significantly from the chosen function and cannot be adequately represented by it. In these cases, forced fitting of the transition data to the function would cause loss of information. Another method is to treat the exit volume of the solution that is replacing the original solution in the column as a discrete random variable [76]. The incremental change in a response signal, such as conductivity, serves as the frequency of each exit volume. The transition starts when zero liters of the displacing buffer have run onto the column. The conductivity recorded at this point corresponds to that of the buffer on the column at that time ($C_{\min}$). After a sufficient amount of the displacing buffer has flowed through the column, the conductivity reaches a new equilibrium ($C_{\max}$), so that

$$C_{\max} = C_{\min} + \int_{C_{\min}}^{C_{\max}} dC$$

To simplify the calculations, $C$ may first be normalized using the following equation:

$$C_{\mathrm{norm}} = \frac{C - C_{\min}}{C_{\max} - C_{\min}}$$

As shown in Fig. 8, $V_r$ and $\sigma^2$ can be calculated from the transition curve using the rectangular approximations of their integral forms.
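A minimal sketch of this calculation is given below. It assumes that the normalized conductivity increments act as the weights of the exit volumes, so that the retention volume is the weighted mean of the exit volume and the variance is the weighted mean squared deviation from it, with each increment assigned to the volume at the end of its interval. The function name and the data are hypothetical.

```python
def transition_analysis(volumes_l, conductivities, column_length_cm):
    """Estimate V_r, variance, N and HETP from a step transition.

    The first conductivity reading is taken as C_min and the last as C_max.
    Each normalized increment is assigned to the volume at the end of its
    interval (a rectangular approximation).
    """
    c_min, c_max = conductivities[0], conductivities[-1]
    norm = [(c - c_min) / (c_max - c_min) for c in conductivities]
    increments = [norm[i] - norm[i - 1] for i in range(1, len(norm))]
    exit_volumes = volumes_l[1:]

    v_r = sum(v * dc for v, dc in zip(exit_volumes, increments))
    variance = sum((v - v_r) ** 2 * dc
                   for v, dc in zip(exit_volumes, increments))
    plates = v_r ** 2 / variance
    hetp_cm = column_length_cm / plates
    return v_r, variance, plates, hetp_cm


# Hypothetical transition from a low- to a high-conductivity buffer
volumes_l = [0, 1, 2, 3, 4, 5, 6]
conductivity_ms_cm = [5, 5, 6, 10, 14, 15, 15]

v_r, variance, plates, hetp_cm = transition_analysis(
    volumes_l, conductivity_ms_cm, column_length_cm=20.0)
print(v_r, variance, plates, hetp_cm)
```

No distribution function is fitted, so asymmetric transitions are handled without the loss of information discussed above. The price is sensitivity to baseline noise, because every increment enters the sums directly.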

Monitoring the integrity of large-scale packed bed liquid chromatography columns using transition analysis can provide useful information about the process. Figure 9 provides an example of how the HETP value derived from the transition analysis of a protein A chromatography column changed over multiple cycles of processing. The values increased with time after initial column packing (A). Increased measurement variability was also observed as integrity decreased. It was subsequently shown that this was caused by the formation of a headspace on the column. When the top flow adapter was lowered to eliminate the headspace, the HETP values were restored to their original values (B). However, subsequent repacking of the column once again resulted in rapid degradation of the column integrity because of insufficient consolidation of the resin during the packing procedure (C and E). The flow adapter was lowered again after the second packing (D) to improve column performance.

Control chart of protein A column HETP derived from transition analysis [75]. UCL upper control limit, LCL lower control limit
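Control limits of this kind can be derived from historical values. The following sketch assumes limits at the historical mean plus or minus three sample standard deviations, which is a common convention but not necessarily the one used for the chart above; the HETP values are hypothetical.

```python
from statistics import mean, stdev


def control_limits(history, k=3.0):
    """Lower and upper control limits as mean -/+ k sample standard deviations."""
    centre = mean(history)
    spread = stdev(history)
    return centre - k * spread, centre + k * spread


def out_of_control(values, lcl, ucl):
    """Indices of values outside the control limits."""
    return [i for i, v in enumerate(values) if not lcl <= v <= ucl]


# Hypothetical HETP values (cm) from cycles after a good column packing
history = [0.050, 0.052, 0.049, 0.051, 0.048, 0.050, 0.051, 0.049]
lcl, ucl = control_limits(history)

# Later cycles, with HETP drifting upward as column integrity decreases
new_cycles = [0.051, 0.053, 0.056, 0.060]
flagged = out_of_control(new_cycles, lcl, ucl)
print(lcl, ucl, flagged)
```

Flagging a cycle as soon as it leaves the limits allows corrective action, such as lowering the flow adapter, before product quality is affected.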

3.4 Alternative and Rapid Microbiological Methods

Bioburden testing plays a crucial role in ensuring product quality during pharmaceutical manufacturing. It spans the complete process, starting with testing raw materials and ending with the final drug product. Despite its role, the vast majority of tests are still performed using traditional methods originally developed well over 100 years ago [78, 79].

The major disadvantages of these methods are the long time-to-result and the lack of automation. In routine pharmaceutical bioprocessing, results from microbial testing, for example, pre-filtered cell-culture media or in-process controls for purification, are often obtained after use of the respective material. Thus it is not possible to react to a positive result in a timely fashion, which may result in the loss of an otherwise acceptable manufacturing batch. In addition, the large number of samples – particularly for water and environmental testing – necessitates automated routines that cannot be implemented with classical methods.

Several commercially available alternative and rapid microbial methods (ARMM) have addressed this issue (for a comprehensive overview see http://rapidmicromethods.com/files/matrix.php). Growth-based methods rely on traditional culture of samples but utilize sophisticated, highly sensitive methods for detection [80]. Non-growth-based methods, including, for example, spectroscopic methods or fluorescent labeling, allow even faster detection of microorganisms, even in real time [81, 82].

Parveen et al. [80] evaluated ARMM based on the detection of growth by adenosine triphosphate (ATP) bioluminescence (Rapid Milliflex® Detection System) and CO2 monitoring (BacT/Alert and the BACTEC systems). The former was shown to be acceptable as an alternative sterility method, taking 5 days rather than the 14 days of the compendial method, and even proved to be superior to compendial methods with respect to detection time and sensitivity. Technologies based on CO2 monitoring were not as effective as the bioluminescence methods, but provided a potential inoculation sterility method for products that do not contain preservatives or antimicrobial agents.

Additional studies with bioluminescence-based ARMM showed that this technology offers a rapid sterility method and yields results that are equivalent to or better than those obtained with the traditional method. With an incubation (enrichment) phase, the results were easier to interpret and to compare with the methods in the compendium, as both rely on the growth of the microorganisms under conditions similar to those described in the pharmacopoeia [81].

Solid-phase cytometry as used in the ScanRDI® system is based on fluorescent labeling of viable microorganisms collected on a filter membrane and their subsequent automated detection and enumeration by a laser-scanning instrument. The ScanRDI® system appeared to be as sensitive as the standard method.

Despite a number of regulatory guidance documents on the validation of ARMM [83, 84], these have been rarely implemented within the pharmaceutical industry.

Novartis and Sandoz have implemented rapid sterility testing based on bioluminescence in a routine setting [85, 86]. In addition to quantification, Grey et al. showed that it is possible to identify non-sterile findings even after using bioluminescence reagents that disrupt cell walls. Regrowth of the treated microbial cells and subsequent genotypic identification reproduced feasible and robust results and showed sufficient recovery.

As with other PAT tools, regulatory acceptance and return-on-investment remain the greatest hurdles. As vendors, regulators, and pharmaceutical companies continue to build and share experience in the use and implementation of ARMM, it should become increasingly easy to gain rapid approval and thus to support these procedures as routine PAT tools.

3.5 Residual Moisture by NIR

In addition to the applications in bioprocessing described above, NIR spectroscopy has been widely used to determine water content in both small molecules and lyophilized biological products [87]. Residual moisture is a CQA in these formulations because it can dramatically influence stability and thus the shelf-life of the product. As water shows two prominent and distinctive bands in the NIR spectra, at 5,155 and 6,895 cm⁻¹, NIRS is particularly well-suited for this application. Examples include determination of residual moisture in powders or granulates [88,89,90], tablets or capsules [91, 92], and in lyophilized vials or in solutions [93].

The advantage of this technique when compared to traditional methods, such as Karl Fischer titration or thermogravimetry, is that it offers rapid, non-invasive, and non-destructive measurements, especially when applied as an on-line tool, for example, in fill/finish manufacturing lines [94]. The improvement in time-to-result allows us to measure entire manufacturing batches for their residual moisture, unlike traditional procedures, which focus on representative samples only. Analysis is performed non-invasively, avoiding contamination through atmospheric moisture. In addition, hazardous or highly active compounds, for example, antibody–drug conjugates, can be handled with a much lower safety risk. Finally, the non-destructive nature of the application allows additional, orthogonal methods to be performed, for example, to confirm out-of-specification results or for use in stability testing.

For absolute quantification of residual moisture by NIRS, a chemometric model has to be developed that uses classical methods as reference, such as the Karl Fischer titration. Although small physical or chemical variations (e.g., surfactant or buffer content) can be accommodated by a standard NIR calibration, significant formulation changes may cause alterations in spectral features and thus invalidate the multivariate model [95]. Such calibration is specific for a fixed formulation and product configuration, and thus makes the method highly suitable for use in routine manufacturing, but not for development applications with a high product turnover.

4 Conclusion and Outlook

4.1 Status of PAT Implementation in the Biopharmaceutical Industry

In general, it is difficult to come to an accurate assessment of the implementation of PAT in the biopharmaceutical industry. There have been many publications and/or presentations describing the use of PAT in the biopharmaceutical industry following the release of the FDA’s PAT Guidance Document, but it is difficult to assess from these presentations/publications how broad the use of the described applications actually is. Many of the applications described in these presentations/publications imply that the techniques are in routine use, but internal discussion often suggests something entirely different. It would appear that, in many cases, the literature is describing applications that have only been explored for possible use or have only been utilized in a few isolated cases, but not implemented on a broad, routine basis. This is also true for research originating from academic institutions where PAT research is stated to be performed and/or supported through collaboration with a biopharmaceutical company, but the research appears to be only work supporting a student’s thesis and never routinely applied at the sponsoring company.

In spite of the support and pressure from regulatory agencies over the past 10 years to implement new approaches to the QC of biopharmaceutical manufacturing (ever since the FDA published the PAT guideline in 2004), the consensus opinion across the industry seems to be that progress on this initiative has been patchy at best. PAT has seen increasing use in the production of small molecules [96, 97], but little change has been observed for biologics manufacturing, especially in fermentation and cell culture operations. Various technologies for monitoring and controlling these operations (e.g., NIR, Raman, fluorescence, on-line HPLC, and model predictive control) have been developed at academic research centers and explored to some extent within biopharmaceutical companies, but these technologies seem not to have found broad, routine use across the industry.

The sensing technologies that are used routinely continue to be those that have been used for decades to monitor and control the environment in which the cells are grown (e.g., pH, dO, and temperature). The major reasons for the industry’s reluctance to implement new technologies seem to be:

-

The complex regulatory environment

-

The level of process understanding within the fermentation/cell culture field

-

The difficulties in implementing the available technology

-

A lack of understanding of the ROI that could be generated

The culture of the biopharmaceutical industry has historically been very conservative. From a regulatory perspective, it is a risk-averse culture. New, previously unapproved technologies are typically only used in manufacturing when they offer the only way to produce a product. Companies often intentionally avoid being at the leading edge of manufacturing technology because of fear that it may raise concerns from the regulatory agencies reviewing their license application and, as a result, delay approval of their product. This ultimately delays the product reaching the marketplace and generating a stream of revenue.

This “regulatory paranoia” has severely slowed the implementation of PAT in spite of the fact that documented use of process analytics by the chemical industries dates back as far as the 1940s. The common retort to this observation is that the pharmaceutical industry is “different” and the chemical industries are not regulated. Although this observation is accurate to some degree, the nutrition industry has been using process analytics for well over a decade in processes that are indeed regulated by the FDA. Around the time that the FDA released the PAT Guidance Document, Mark McClellan, then FDA Commissioner, told the Wall Street Journal that the science of drug manufacturing was behind “that of potato chip and soap making” [98]. Similar observations have been made when comparing the pharmaceutical industry to other industries. For example, specifications for the uniformity of suspensions in paint are more than an order of magnitude tighter than USP quality specifications for pharmaceuticals, and processes in the semiconductor industry approach precision of 5 sigma, whereas the average pharmaceutical process is only able to achieve 2.5 sigma.
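To give a feel for the gap between the two sigma levels quoted above, the following sketch converts a sigma level into an expected defect rate. It assumes normally distributed output and, as an assumption not stated in the text, the common Six Sigma convention of a one-sided limit with a 1.5-sigma long-term shift; other conventions give different absolute numbers but a similarly large ratio.

```python
import math

def upper_tail(z):
    """P(Z > z) for a standard normal variable."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))

def defects_per_million(sigma_level, shift=1.5):
    """Expected defects per million opportunities.

    Assumption: one-sided specification limit and a 1.5-sigma long-term
    shift (the usual Six Sigma convention, not taken from the source).
    """
    return 1e6 * upper_tail(sigma_level - shift)

for level in (2.5, 5.0):
    dpm = defects_per_million(level)
    print(f"{level} sigma: about {dpm:,.0f} defects per million")
```

Under these assumptions a 2.5 sigma process produces on the order of 10^5 defects per million opportunities, whereas a 5 sigma process produces a few hundred, a difference of nearly three orders of magnitude.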

In the USA, the FDA has recognized the industry’s reluctance to implement new technologies. In response to the industry’s concerns, the agency initially openly invited any company considering the use of QbD, and potentially PAT elements, to join the FDA pilot program on QbD and to schedule discussions early in the process planning stage to ensure there are no surprises that delay approval. However, reports on the success of this approach have been mixed. The initial strategy of using collaboration between regulator and manufacturer as a “carrot” to encourage manufacturers to use PAT is felt to be moving more toward a “stick”: the impression is that manufacturers risk delays in obtaining approval if the regulatory agencies are not convinced that they truly understand how their process impacts product quality. Even if individual health authorities such as the FDA are more open to new approaches, it has to be acknowledged that biopharmaceutical companies often operate globally and need consent on the manufacturing strategy not only from the FDA but also from more than 100 other health authorities, which might be much more conservative in their thinking.

The topic of demonstrating process understanding leads to another challenge that hinders the implementation of PAT in bioprocessing in general, and especially in fermentation and cell culture. In small molecule API production, the kinetics and organic chemistry mechanisms that impact production of the molecule of interest and generation of impurities are well understood and controlled; this same level of understanding and control has not been achieved in biologic processes. The higher level of process understanding and control for small molecule API manufacturing enables the identification of process parameters that maximize production of product and minimize impurities, and the control of those parameters to meet CQA specifications consistently. For biologic processes this level of understanding and control is much more difficult to achieve. As was stated earlier, the bioreactor is basically a vessel for producing cells, where the cells are the reactors that produce the product. Fermentation science has a fairly good understanding of what impacts the production of cells and has measured and controlled those factors (e.g., pH, temperature, dO, and nutrient sources) to maximize cell density for decades.

This same level of understanding and control has not been achieved for the biochemical reactions taking place within the individual cells. It is these reactions that actually impact the CQAs of large molecule APIs, such as glycosylation and sialylation. Achieving this level of understanding is no trivial task because of the complexity and adaptability within the cell’s biochemical pathways. Although there has been significant progress in academic institutions toward mapping and modeling these pathways in some specialized cases [99], the industry is far from achieving this goal for every large molecule product, least of all for large glycosylated proteins produced in mammalian cells. Given the numerous cellular pathways, the number of analytes involved in the pathways, and the complexity of the analytical instrumentation and methods required to make these measurements, it is likely that the best that can be achieved with respect to cellular PAT is the development of models describing these pathways and on-line measurement and control of the extracellular analytes that impact these pathways. These extracellular measurements can then be used as inputs to continuously update the on-line metabolic models used in a model predictive control loop.
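The idea of feeding extracellular measurements back into a model that drives a model predictive control loop can be sketched with a toy example. Everything here is an assumption made for illustration: a single lumped glucose uptake rate stands in for a metabolic model, biomass is held constant, dilution by the feed is ignored, the "plant" is simulated, and all names and numbers are hypothetical. The controller picks, from a grid of candidate feed rates, the one that minimizes the predicted squared deviation from a glucose setpoint over a short horizon, applies it for one interval, and then corrects its uptake estimate from the new glucose measurement.

```python
# Toy receding-horizon (model predictive) controller with on-line model update.
# Assumptions (illustrative only): constant biomass, no dilution, a single
# lumped specific uptake rate q (g glucose per g biomass per h).

def predict(glucose, feed, q_hat, biomass, dt, steps):
    """Predicted glucose (g/L) over `steps` intervals at a constant feed (g/L/h)."""
    trajectory = []
    for _ in range(steps):
        glucose = max(glucose + dt * (feed - q_hat * biomass), 0.0)
        trajectory.append(glucose)
    return trajectory

def choose_feed(glucose, q_hat, biomass, setpoint, dt, horizon, candidates):
    """Grid search for the feed rate with the lowest predicted tracking cost."""
    def cost(feed):
        path = predict(glucose, feed, q_hat, biomass, dt, horizon)
        return sum((g - setpoint) ** 2 for g in path)
    return min(candidates, key=cost)

def update_uptake(q_hat, g_prev, g_meas, feed, biomass, dt, gain=0.5):
    """Correct the uptake estimate from the latest extracellular measurement."""
    q_observed = (feed - (g_meas - g_prev) / dt) / biomass
    return q_hat + gain * (q_observed - q_hat)

def run_simulation(hours=24):
    dt, horizon, setpoint = 1.0, 3, 2.0        # h, intervals, g/L
    biomass = 5.0                              # g/L, assumed constant
    q_true, q_hat = 0.12, 0.08                 # plant vs initial model value
    glucose = 4.0                              # g/L at the start
    candidates = [i * 0.05 for i in range(41)] # 0 to 2 g/L/h
    history = []
    for _ in range(hours):
        feed = choose_feed(glucose, q_hat, biomass, setpoint, dt, horizon, candidates)
        measured = max(glucose + dt * (feed - q_true * biomass), 0.0)  # simulated plant
        q_hat = update_uptake(q_hat, glucose, measured, feed, biomass, dt)
        glucose = measured
        history.append((feed, glucose, q_hat))
    return history

history = run_simulation()
final_feed, final_glucose, final_q = history[-1]
print(f"final glucose {final_glucose:.2f} g/L, feed {final_feed:.2f} g/L/h, q_hat {final_q:.3f}")
```

In this sketch the model starts with a wrong uptake rate, and it is the repeated correction from measured glucose that lets the controller settle near the setpoint. A realistic implementation would replace the one-parameter balance with a metabolic model and the grid search with a constrained optimizer.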

The technology issues limiting the implementation of PAT in biopharmaceutical production were described earlier. In-line analytical measurements are primarily spectroscopic measurements such as mid-IR, near-IR, Raman, and fluorescence. These measurements will probably always be challenged when used to predict single analyte concentrations in bioreactors, because of the chemical complexity of the cell culture broth and the limited resolution and sensitivity of spectroscopy-based techniques. The lack of resolution requires the use of multivariate calibration techniques, with the result that their long-term application in bioreactors is likely to be difficult without constant “re-validation” of the model. This difficulty stems largely from the variability observed in raw materials used in fermentation-based reactions. As more and more cultures are switched to defined media, this may become less of an issue. That said, the importance of trace molecules that are below the detection limit of spectroscopic techniques and the co-linearity that very probably occurs within the multivariate system make it very difficult for spectroscopy to be used to predict the concentration of individual analytes other than those present in high concentrations (e.g., glucose). The most promising application of spectroscopy is to move away from using it as a substitute for a lab-based test of an individual analyte and toward using it as part of an MVDA scheme, where multiple principal components from a spectrum are correlated to an event or events in the bioreactor.
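As a minimal sketch of the MVDA idea, the example below extracts the first principal component from a small set of synthetic "spectra" and uses the resulting scores to locate a step change in the culture, without attempting to quantify any single analyte. The data, band shape, and threshold are invented for illustration, and power iteration is used here only to keep the example dependency-free; a real application would use an established chemometrics package and more than one component.

```python
# Illustrative only: synthetic spectra (rows = time points, columns = channels).
PROFILE = [0.2, 0.8, 1.0, 0.5, 0.1]    # hypothetical band shape
BASELINE = [1.0, 1.1, 1.2, 1.1, 1.0]
AMPLITUDE = [1.0] * 4 + [1.5] * 4      # step change ("event") after sample 3

def make_spectra():
    spectra = []
    for t, a in enumerate(AMPLITUDE):
        row = []
        for j, (b, p) in enumerate(zip(BASELINE, PROFILE)):
            wobble = 0.01 * ((3 * t + 7 * j) % 5 - 2)  # small deterministic disturbance
            row.append(b + a * p + wobble)
        spectra.append(row)
    return spectra

def mean_center(rows):
    """Subtract the column mean from every channel."""
    n = len(rows)
    means = [sum(col) / n for col in zip(*rows)]
    return [[v - m for v, m in zip(row, means)] for row in rows]

def project(rows, loading):
    """Scores = projection of each centered spectrum onto the loading vector."""
    return [sum(v * w for v, w in zip(row, loading)) for row in rows]

def first_loading(centered, iterations=100):
    """Leading principal-component loading via power iteration on X^T X."""
    n_vars = len(centered[0])
    w = [1.0] * n_vars
    for _ in range(iterations):
        scores = project(centered, w)
        w = [sum(s * row[j] for s, row in zip(scores, centered)) for j in range(n_vars)]
        norm = sum(v * v for v in w) ** 0.5
        w = [v / norm for v in w]
    return w

centered = mean_center(make_spectra())
loading = first_loading(centered)
scores = project(centered, loading)

# Samples with a positive PC1 score lie on the "after the event" side.
shifted_samples = [t for t, s in enumerate(scores) if s > 0]
print("PC1 scores:", [round(s, 3) for s in scores])
print("samples after the shift:", shifted_samples)
```

The point of the sketch is the change in use: the score trajectory is treated as a fingerprint of the process state and correlated with events, rather than being calibrated against a lab assay for one analyte.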

Measurement of specific analytes (particularly those that are present in low concentrations) can currently only be achieved with at-line technologies. In order to use these analytical methods on-line, an automated sampling system must be used to interface the analyzer to the bioreactor. As described earlier, on-line sampling methods must provide a sterile barrier between the bioreactor and the analyzer. This has historically been done either by sterilizing the sampling device before opening it to the bioreactor or by placing a sterile filtration medium between the bioreactor and the sampling device. The widespread use of on-line sampling interfaces is impeded primarily by the perceived increased risk of bioreactor contamination associated with these devices, as well as by the added effort and complexity required to set them up.

The last possible reason for the slow advance of PAT in biopharmaceutical manufacturing is that the industry fails to understand the potential financial gains this could bring. This may be because some promises of PAT seem to be very “abstract.”

From a process development perspective, utilizing PAT can:

-

Increase process understanding. Utilizing multivariate experimental designs allows the identification of the factors that impact the CQAs.

-

Accelerate time to market. Utilizing on-line/in-line measurements and automated experimental control allows unattended 24/7 experimentation to develop process understanding and define the design space more quickly and efficiently. Automated operations can obviously have a huge ROI from labor savings and increased utilization of R&D infrastructure.
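The use of multivariate experimental designs mentioned in the first point can be illustrated with a two-level full factorial design. The factor names, levels, and the simulated response below are hypothetical and chosen only to show how main effects on a CQA are estimated from such a design; they are not taken from any real process.

```python
from itertools import product

# Hypothetical factors with (low, high) settings; coded as -1 / +1 in the design.
factors = {
    "pH": (6.8, 7.2),
    "temperature_C": (35.0, 37.0),
    "feed_rate": (0.5, 1.0),
}

# Full factorial design: every combination of coded levels (2^3 = 8 runs).
design = list(product((-1, 1), repeat=len(factors)))

def simulated_cqa(run):
    """Synthetic CQA response (illustrative stand-in for a measured attribute)."""
    ph, temp, feed = run
    return 50.0 + 4.0 * ph - 1.0 * temp + 0.0 * feed + 1.5 * ph * temp

def main_effects(design, responses):
    """Main effect = mean response at the high level minus mean at the low level."""
    effects = []
    for j in range(len(design[0])):
        high = [r for run, r in zip(design, responses) if run[j] == 1]
        low = [r for run, r in zip(design, responses) if run[j] == -1]
        effects.append(sum(high) / len(high) - sum(low) / len(low))
    return effects

responses = [simulated_cqa(run) for run in design]
effects = dict(zip(factors, main_effects(design, responses)))

for name, effect in effects.items():
    print(f"{name}: main effect {effect:+.1f}")
```

Because the design is balanced, the interaction term in the simulated response averages out of the main effects, and the factor with no influence (here the feed rate) is identified as such from only eight runs.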

A challenge, though, is that it takes a leap of faith to believe that these advantages can in fact be realized by implementing PAT. Even if they are achieved, it remains unclear how large the measurable advantage is compared with the conventional approaches to process development that have been used historically. This question appears still not to have been answered.

On the other hand, common sense indicates that generating more data and utilizing MVDA techniques to mine that data to generate valuable process knowledge should speed up process development and avoid future problems in manufacturing. There has as yet been no published study that quantitatively compares the advantages of the PAT approach to the conventional approach of process development.

From a manufacturing perspective, utilizing PAT can:

-

Reduce process variability. An increased understanding of which CPPs impact CQAs, and how, enables the process to be operated in a flexible manner within the design space, leading to reduced variability in the process output. This can result in two things: reduced risk of product failure and more consistent process yield and quality. The reduced risk of failure can in turn lower the inventories of in-process material and final product needed to protect against in-process failures interrupting production flow. It also enables better production planning.

-

Increase operating efficiency. By utilizing process analytics, off-line QC assays that delay the forward processing of in-process materials can be eliminated. This should decrease overall cycle time and increase production throughput. This may be achieved by moving off-line assays on-line, where they can provide continuous product monitoring, or possibly just through a more robust/capable process that eliminates the need to perform off-line verification of product quality.

Once again, these gains are “abstract” and hard to quantify, so it is hard to compare them against the perceived risks.

Although there are now mature technologies that can be readily adapted to biopharmaceutical manufacturing as on-line sensors for the measurement and control of CQAs, the key regulatory message is to ensure that multivariate understanding is obtained from the use of these technologies supporting the whole QbD paradigm.